This topic describes the metrics of data transformation jobs and how to view the data transformation dashboard and configure job monitoring.

Metric data

To view metric data of data transformation jobs, you must first enable the service log feature and select job operational logs as the log type. For more information, see Use the service log feature.

Dashboard

After you create a data transformation job, Simple Log Service creates a dashboard for the job on the job details page. You can view metrics of the job on the dashboard.

Procedure

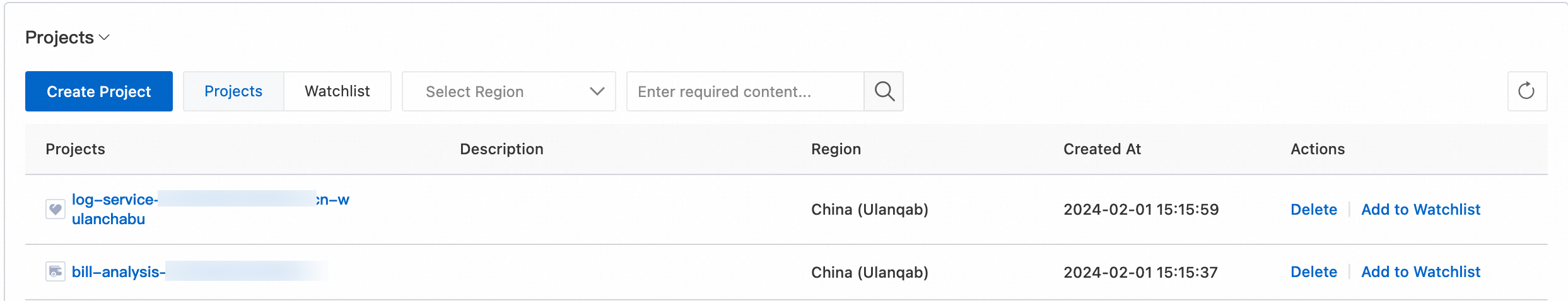

Log on to the Simple Log Service console.

In the Projects section, click the project that you want to manage.

In the left-side navigation pane, choose .

Click the data transformation job that you want to manage. Then, view the dashboard in the Execution Status section.

Overall metrics

The overall metrics are as follows:

Transforming Speed (events/s): the transformation rate in events per second. The default statistical window is one hour.

ingest: the number of data records that were read from each shard of the source Logstore.

deliver: the number of data records that were successfully written to the destination Logstore.

failed: the number of data records that were read but failed to be transformed from each shard of the source Logstore.

Total Events Read: the total number of data records that were read from each shard of the source Logstore. The default statistical window is one day.

Total Events Delivered: the total number of data records written to all destination Logstores. The default statistical window is one day.

Total Events Failed: the total number of data records that were read but failed to be transformed from each shard of the source. The default statistical window is one day.

Event Delivered Ratio: the ratio of the number of data records that were successfully delivered to the destination Logstore to the number of data records that were read from the source Logstore. The default statistical window is one day.

Shard details analysis

This section displays metrics of each shard in each minute when the transformation job reads data from the source Logstore.

Shard Consuming Latency (s): the difference between the time when the last data record written to each shard was received and the time when the data record being processed by the shard was received. Unit: seconds.

Shard Transforming Stats (events): the statistics about active shards. The default statistical window is one hour.

shard: the sequence number of the shard.

ingest: the number of raw data records that were read from the shard.

failed: the number of raw data records that were read from the shard but failed to be transformed.

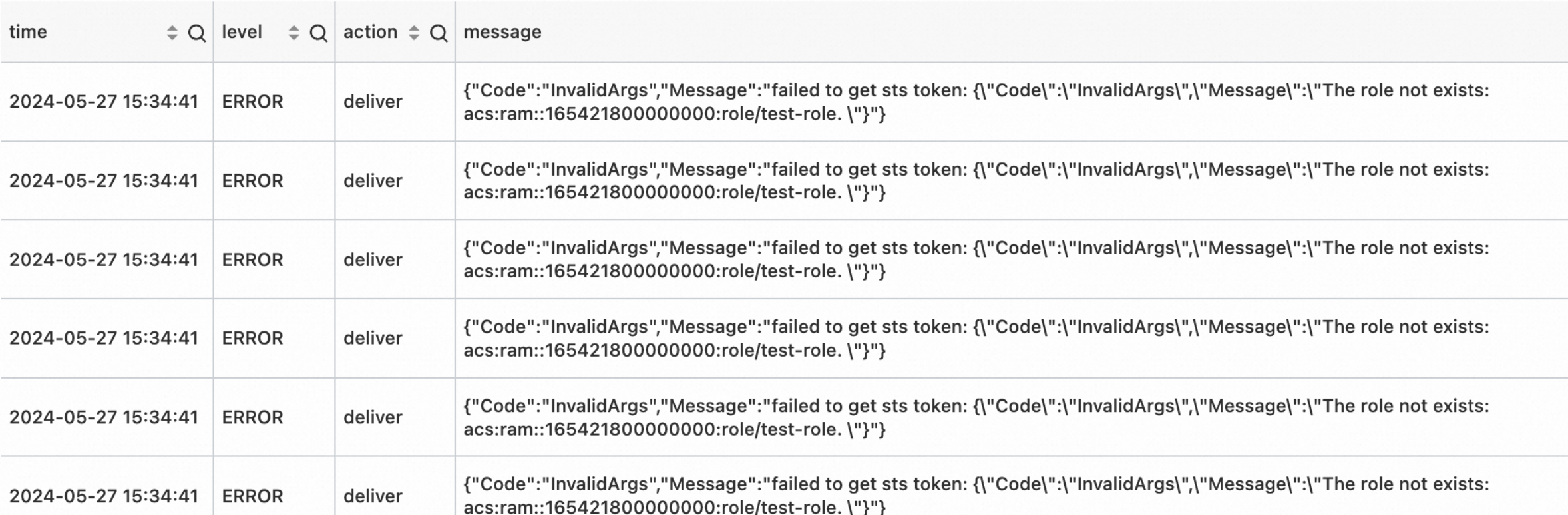

Transformation errors

You can view the details of an error based on the message field.

Alert monitoring rules

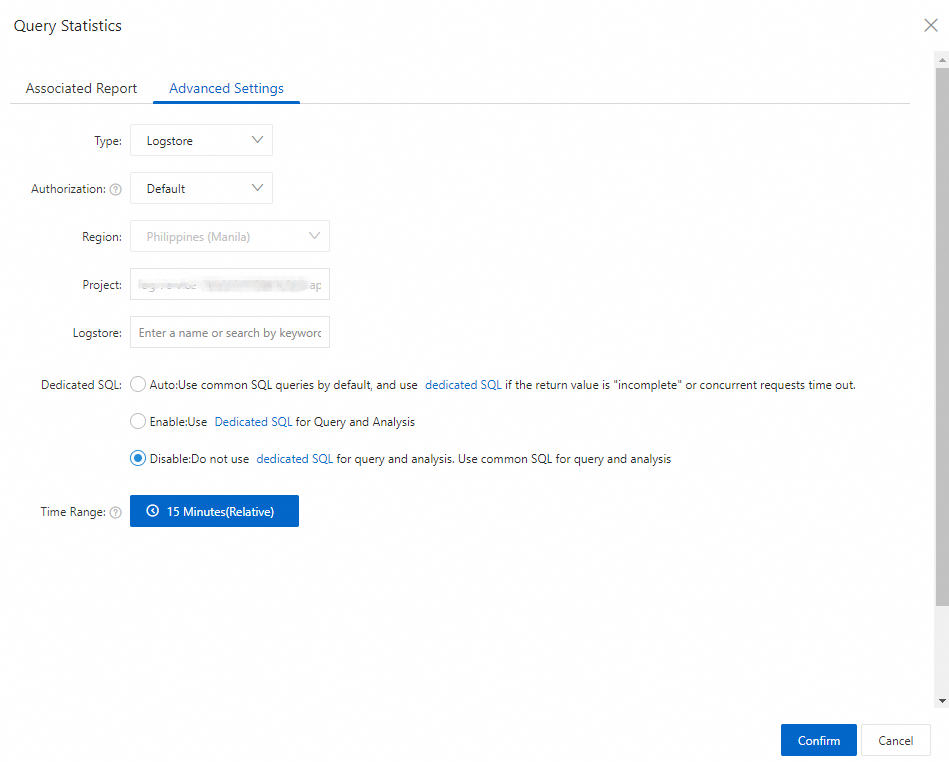

Data transformation (new version) jobs rely on task metrics to be monitored. For more information, see Metric data. You can use the alerting feature of Simple Log Service to monitor jobs. For more information, see Alerting. This section describes the following alert rules for data transformation (new version) jobs: shard consumption latencies, data transformation exceptions, transformation traffic (absolute values), and transformation traffic (same-period comparisons). For more information about how to create an alert rule, see Create an alert rule.

To create an alert rule, you must add the same project and Logstore for statistical purposes as those saved in the run logs of the project. For more information about how to save job operational logs, see Use the service log feature.

Shard consumption latencies

Item | Description |

Purpose | A rule of this type monitors the latency that occurs when data is consumed from shards in data transformation jobs. If the latency during data transformation exceeds the threshold in the rule, an alert is triggered. |

Associated dashboard | For more information, see Shard details analysis. |

Sample SQL | In the following template, replace |

Monitoring rule |

Note We recommend that you configure the rule based on these settings to prevent metrics from being updated and processed based on a one-minute window and to prevent false alerts caused by latencies attributed to data spikes. |

Handling method | You can clear triggered alerts based on the following rules:

|

Data transformation exceptions

Item | Description |

Purpose | A rule of this type monitors exceptions in data transformation jobs. If an exception occurs during data transformation, an alert is triggered. |

Associated dashboard | For more information, see Transformation errors. |

Sample SQL | In the following template, replace |

Monitoring rule |

|

Handling method | Fix exceptions based on the error message.

|

Events delivered ratios (same-period comparisons)

Item | Description |

Purpose | A rule of this type monitors the comparisons between the ratios of data records written to the destination Logstore with those of the same period the previous day or week. An alert is triggered if the value goes beyond the specified threshold for increase or drops below the specified threshold for decrease. |

Associated dashboard | Event Delivered Ratio: the ratio of the number of data records that were successfully delivered to the destination Logstore to the number of data records that were read from the source Logstore. The default statistical window is one day. |

Sample SQL | In the dialog box for creating the alert rule, enter the following SQL statement for queries: In the following template, replace |

Monitoring rule |

Note We recommend that you set a threshold of 20% or higher for daily or weekly comparisons or adjust the comparison cycle to match the raw traffic cycle. This helps prevent false alerts caused by periodic fluctuations in the raw data traffic. |

Handling method | You can clear triggered alerts based on the following rules:

|

Events read ratios (same-period comparisons)

Item | Description |

Purpose | A rule of this type monitors the comparisons between the ratios of data records read from the source Logstore with those of the same period the previous day or week. An alert is triggered if the value goes beyond the specified threshold for increase or drops below the specified threshold for decrease. |

Associated dashboard | Total Events Read: the total number of data records that were read from each shard of the source Logstore. The default statistical window is one day. |

Sample SQL | In the dialog box for creating the alert rule, enter the following SQL statement for queries: In the following template, replace |

Monitoring rule |

Note We recommend that you set a threshold of 20% or higher for daily or weekly comparisons or adjust the comparison cycle to match the raw traffic cycle. This helps prevent false alerts caused by periodic fluctuations in the raw data traffic. |

Handling method | You can clear triggered alerts based on the following rules:

|

Events delivered (same-period comparisons)

Item | Description |

Purpose | A rule of this type monitors the comparisons between the number of data records written to the destination Logstore with those of the same period the previous day or week. An alert is triggered if the value goes beyond the specified threshold for increase or drops below the specified threshold for decrease. |

Associated dashboard | Dashboard > Overall metrics > Total Events Delivered |

Sample SQL | In the dialog box for creating the alert rule, enter the following SQL statement for queries: In the following template, replace |

Monitoring rule |

Note We recommend that you set a threshold of 20% or higher for daily or weekly comparisons or adjust the comparison cycle to match the raw traffic cycle. This helps prevent false alerts caused by periodic fluctuations in the raw data traffic. |

Handling method | You can clear triggered alerts based on the following rules:

|