Kubernetes provides multiple metrics. You can collect the metrics from Kubernetes clusters to the Full-stack Observability application for visualization. Simple Log Service and Alibaba Cloud OpenAnolis have jointly developed the non-intrusive monitoring feature. You can use the feature to analyze network traffic flows and identify bottleneck issues for Kubernetes clusters in cloud-native scenarios.

Prerequisites

A Full-stack Observability instance is created. For more information, see Create an instance.

Limits

If you want to enable data plane monitoring on a host, the operating system of the host must be Linux x86_64, and the kernel version must be 4.19 or later. If the host runs a CentOS operating system whose version is between 7.6 and 7.9, the kernel version must be 3.1.0. You can run the uname -r command to view the kernel information of an operating system.

Step 1: Create a Logtail configuration

Log on to the Simple Log Service console.

In the Log Application section, click the Intelligent O&M tab. Then, click Full-stack Observability.

On the Simple Log Service Full-stack Observability page, click the instance that you want to manage.

In the left-side navigation pane, click Full-stack Monitoring.

If this is your first time to use Full-stack Monitoring in the instance, click Enable.

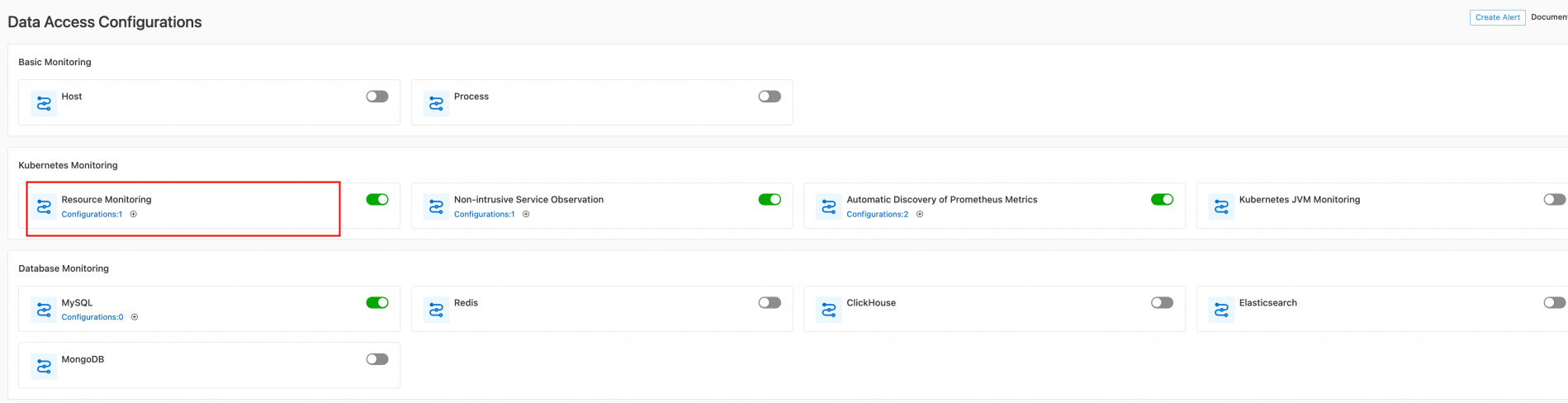

In the left-side navigation tree, click Data Import. On the Data Import Configurations page, find Resource Monitoring in the Kubernetes Monitoring section.

The first time you create a Logtail configuration for host monitoring data, turn on the switch to go to the configuration page. If you created a Logtail configuration, click the

icon to go to the configuration page.

icon to go to the configuration page. Create a machine group.

If a machine group is created, skip this step.

Create a machine group for a Container Service for Kubernetes (ACK) cluster. For more information, see Create an IP address-based machine group.

Create a machine group for a self-managed Kubernetes cluster. For more information, see Create a custom identifier-based machine group.

Download the custom resource definition (CRD) template tool.

Method

Description

Method

Description

Install the CRD template tool outside a cluster

If you want to install the CRD template tool outside a cluster, make sure that the

~/.kube/configconfiguration file exists in the logon account. The configuration file includes settings that allow you to perform management operations on the cluster. You can run the kubectl command to perform related operations.Install the CRD template tool in a cluster

If you want to install the CRD template tool in a container, the system creates CRDs based on the permissions of an installed component named

alibaba-log-controller. If the~/.kube/configconfiguration file does not exist or connection failures occur due to poor network conditions, you can use this method to install the CRD template tool.Install the CRD template tool outside a cluster

Log on to a cluster and download the CRD template tool.

China

curl https://logtail-release-cn-hangzhou.oss-cn-hangzhou.aliyuncs.com/kubernetes/crd-tool.tar.gz -o /tmp/crd-tool.tar.gzOutside China

curl https://logtail-release-ap-southeast-1.oss-ap-southeast-1.aliyuncs.com/kubernetes/crd-tool.tar.gz -o /tmp/crd-tool.tar.gz

Install the CRD template tool. After the tool is installed,

sls-crd-toolis generated in the folder in which the CRD template tool is installed.tar -xvf /tmp/crd-tool.tar.gz -C /tmp &&chmod 755 /tmp/crd-tool/install.sh && sh -x /tmp/crd-tool/install.shRun the

./sls-crd-tool listcommand to check whether the tool is installed. If a value is returned, the tool is installed.

Install the CRD template tool in a container

Log on to a cluster and access the

alibaba-log-controllercontainer.kubectl get pods -n kube-system -o wide |grep alibaba-log-controller | awk -F ' ' '{print $1}' kubectl exec -it {pod} -n kube-system bash cd ~Download the CRD template tool.

If you can download resources in the cluster over the Internet, run one of the following commands to download the CRD template tool.

China

curl https://logtail-release-cn-hangzhou.oss-cn-hangzhou.aliyuncs.com/kubernetes/crd-tool.tar.gz -o /tmp/crd-tool.tar.gzOutside China

curl https://logtail-release-ap-southeast-1.oss-ap-southeast-1.aliyuncs.com/kubernetes/crd-tool.tar.gz -o /tmp/crd-tool.tar.gz

If you cannot download resources in the cluster over the Internet, you can download the CRD template tool outside the cluster. Then, run the

kubectl cp <source> <destination>command or use the file upload feature of ACK to upload the CRD template tool to the container.

Install the CRD template tool. After the tool is installed,

sls-crd-toolis generated in the folder in which the CRD template tool is installed.tar -xvf /tmp/crd-tool.tar.gz -C /tmp &&chmod 755 /tmp/crd-tool/install.sh && sh -x /tmp/crd-tool/install.shRun the

./sls-crd-tool listcommand to check whether the tool is installed. If a value is returned, the tool is installed.

Use the CRD template tool to generate a Logtail configuration.

Run the following command to view the definition of the template:

./sls-crd-tool get k8sMonitorReplace the REQUIRED parameter with the current instance ID and run the following command to preview the value of the parameter:

./sls-crd-tool apply -f template-k8sMonitor.yaml --create=falseCheck whether the project parameter specifies the project to which the current instance belongs. If yes, run the following command to deploy the template file:

./sls-crd-tool apply -f template-k8sMonitor.yamlGo to the Data Import Configurations page. The following figure shows that the Logtail configuration is generated. The number next to Configurations in the Resource Monitoring section is incremented by one. If the Logtail configuration is not generated, the number remains unchanged.

Resources for the monitoring component

The Kubernetes resources that are used to collect Kubernetes monitoring data are all created in the sls-monitoring namespace. The resources include one Deployment, one StatefulSet, one DaemonSet, and seven AliyunLogConfig CRDs.

Resource | Resource name | Description |

Resource | Resource name | Description |

AliyunLogConfig | {instance-id}-k8s-metas | Used to collect the configuration data of Kubernetes clusters, including the name, namespace, label, image, and limit of each Deployment, pod, Ingress, and Service. By default, the collected data is stored in a Logstore named {instance}-metas. |

{instance-id}-k8s-metrics | Used to collect the metric data of Kubernetes clusters, including the CPU, memory, and network data of pods and containers. By default, the collected data is stored in a Metricstore named {instance}-k8s-metrics. | |

{instance-id}-k8s-metrics-kubelet | Used to collect the metric data of Kubernetes kubelet. By default, the collected data is stored in a Metricstore named {instance}-k8s-metrics. | |

{instance-id}-node-metas | Used to collect the configuration data of Kubernetes nodes, including CPU models and memory sizes. By default, the collected data is stored in a Logstore named {instance}-metas. | |

{instance-id}-node-metrics | Used to collect the metric data of Kubernetes nodes, including CPU utilization and memory usage. By default, the collected data is stored in a Metricstore named {instance}-node-metrics. |

What to do next

After you collect Kubernetes monitoring data to Full-stack Observability, Full-stack Observability automatically creates dedicated dashboards for the monitoring data. You can use the dashboards to analyze the monitoring data. For more information, see View dashboards.