Stable Diffusion is a high-quality image generation model. The open source Stable Diffusion WebUI provides a browser interface with various image generation tools. Platform for AI (PAI) Elastic Algorithm Service (EAS) offers scenario-based deployment for Stable Diffusion WebUI with minimal configuration. This topic describes how to deploy and call the service, and answers frequently asked questions.

Features and benefits

EAS deployment provides the following features and benefits:

Ease of use: Deploy ready-to-use model services and dynamically switch GPU resources as needed.

Enterprise-level features: Separates the frontend from the backend to support multi-GPU scheduling, user isolation, and bill splitting.

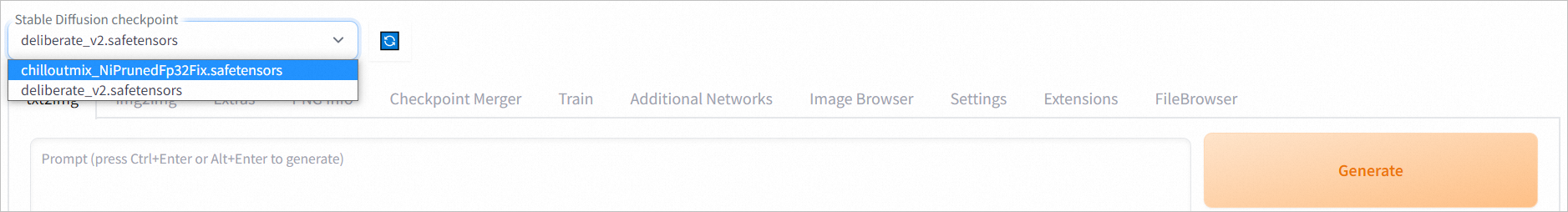

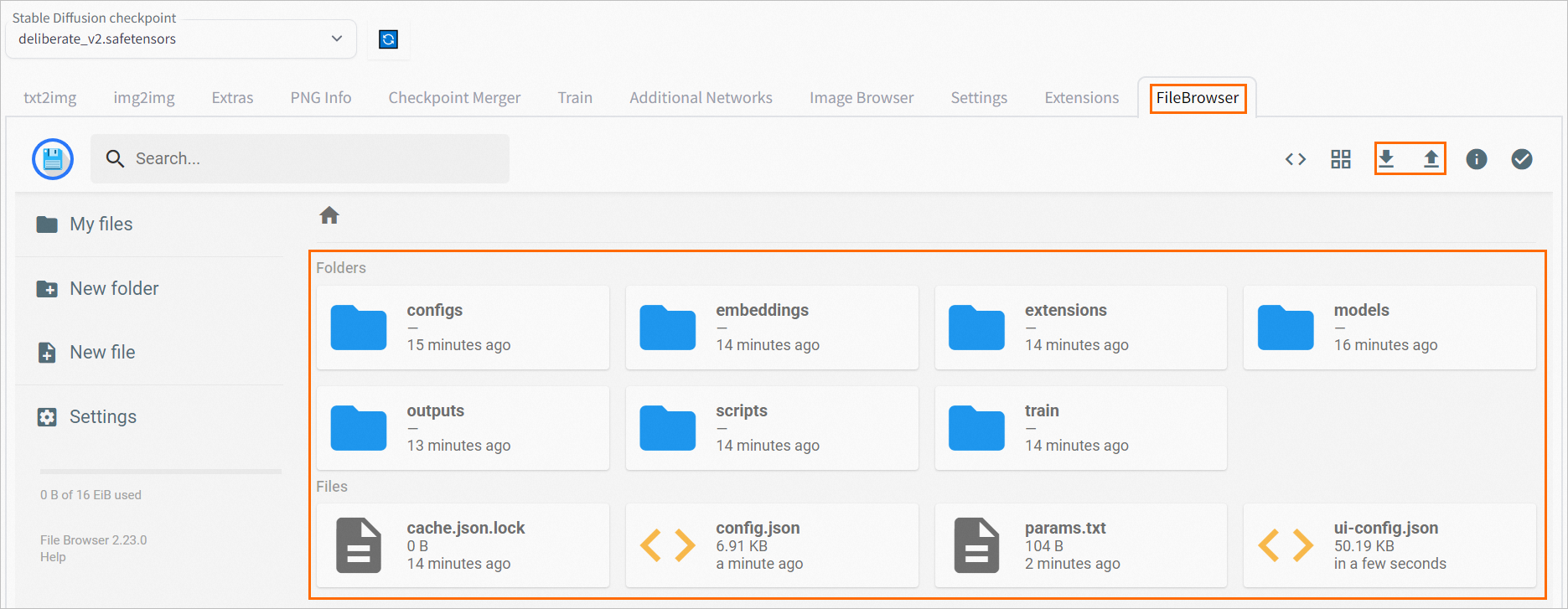

Extensions and optimization: PAI provides Stable Diffusion WebUI with PAI-Blade optimization, FileBrowser for uploading/downloading models and images, and ModelZoo for accelerated model downloads.

Deployment editions

Stable Diffusion WebUI is available in four editions: Standard Edition, API Edition, Cluster Edition WebUI, and Serverless Edition. The following table describes each edition.

| Edition | Scenarios | Supported calling methods | Billing |
| --- | --- | --- | --- |
| Standard Edition | Single-user testing. Supports only single-instance deployment. | Web UI; API calling (synchronous) | You are billed based on your deployment configurations. For more information, see Billing of Elastic Algorithm Service (EAS). |
| API Edition | Automatic asynchronous mode for high-concurrency scenarios. | API calling (synchronous and asynchronous) | You are billed based on your deployment configurations. |
| Cluster Edition WebUI | Multi-user WebUI access, such as design team collaboration. | Web UI | You are billed based on your deployment configurations. |
| Serverless Edition | Auto-scaling for fluctuating demand based on request volume. | Web UI | Free deployment; billed only for image generation duration. |

API Edition: Automatically deploys as an asynchronous service with queue instances, requiring additional CPU resources.

Cluster Edition WebUI: Independent backend environment per user, enabling GPU sharing and file management.

Serverless Edition: Available only in the China (Shanghai) and China (Hangzhou) regions.

For custom deployments with more features, use official images for Standard, Cluster, or API editions. For details, see Parameters for custom deployment in the console.

Prerequisites

A NAS file system or OSS bucket is created to store model files and generated images.

Create a General-purpose NAS file system. For more information, see Create a file system.

Create an OSS bucket. For more information, see Create a bucket.

To call services using API operations, configure environment variables. For details, see Configure access credentials.

Deploy a model service

The following deployment methods are supported:

Method 1: Scenario-based model deployment (recommended)

This method is applicable to Standard Edition, API Edition, Cluster Edition WebUI, and Serverless Edition. Perform the following steps:

Log on to the PAI console. Select a region on the top of the page. Then, select the desired workspace and click Elastic Algorithm Service (EAS).

On the Elastic Algorithm Service (EAS) page, click Deploy Service. In the Scenario-based Model Deployment section, click AI Painting - SD Web UI Deployment.

On the AI Painting - SD Web UI Deployment page, configure the following key parameters.

Parameter

Description

Basic Information

Edition

See Deployment editions.

Model Settings

Stores model files and generated images.

Important: Model configuration is mandatory for API Edition and Cluster Edition WebUI.

Click Add to configure the model. The following configuration types are supported:

Object Storage Service (OSS): Easier for data upload/download and can generate public URLs for images. Model switching and image saving are slower than NAS.

File Storage (NAS): Faster model switching and image saving. Supports Standard, API, and Cluster Edition WebUI.

NAS Mount Target: Select an existing NAS file system and mount target.

NAS Source Path: Set the value to /.

In this example, OSS is used.

Resource Configuration

Instance Type

Supported for Standard, API, and Cluster Edition WebUI.

Select the GPU type. For Instance Type Name, we recommend ml.gu7i.c16m60.1-gu30, which offers the best cost-performance ratio.

Inference Acceleration

The inference acceleration feature. Valid values:

PAI-Blade: PAI's inference optimization tool for optimal performance.

xFormers: An open source acceleration library for Transformer models that reduces image generation time.

Not Accelerated: Disables inference acceleration.

Network information

VPC

When Model Settings is set to File Storage (NAS), the system automatically matches the VPC where the NAS file system resides. No modifications needed.

vSwitch

Security Group

After you configure the parameters, click Deploy.

Method 2: Custom deployment

This method is applicable to the following editions: Standard Edition, API Edition, and Cluster Edition WebUI. Perform the following steps:

Log on to the PAI console. Select a region on the top of the page. Then, select the desired workspace and click Elastic Algorithm Service (EAS).

Click Deploy Service. In the Custom Model Deployment section, click Custom Deployment.

On the Custom Deployment page, configure the following key parameters.

Parameter

Description

Environment Information

Deployment Method

To deploy Standard Edition and Cluster Edition WebUI services, select Image-based Deployment, and select Enable Web App.

To deploy API Edition services, select Image-based Deployment, and select Asynchronous Queue.

Image Configuration

In the Alibaba Cloud Image list, select stable-diffusion-webui, and choose the highest version number, where:

x.x-standard: Standard Edition

x.x-api: API Edition

x.x-cluster-webui: Cluster Edition WebUI

Note: The image version is updated frequently. We recommend that you select the latest version.

If you want to allow multiple users to use a Stable Diffusion WebUI to generate images, select the x.x-cluster-webui version.

For more information about the scenarios for each version, see Deployment editions.

Mount storage

Stores model files and generated images.

Important: Model configuration is mandatory for API Edition and Cluster Edition WebUI.

The following configuration types are supported:

OSS

Uri: Set to the path of an existing OSS bucket.

Mount Path: Set to /code/stable-diffusion-webui/data.

Standard NAS, Extreme NAS

Select A File System: Select an existing NAS file system.

Mount Target: Select an existing mount target.

File System Path: Set to /.

Mount Path: Set to /code/stable-diffusion-webui/data.

PAI Model

PAI Model: Select a PAI model and a model version.

Mount Path: Set to /code/stable-diffusion-webui/data.

In this example, an OSS bucket is mounted.

Command To Run

After you complete the configuration, the system automatically generates the run command.

After you specify the model configuration, add --data-dir to the command to mount data. The path must match the mount path, for example, --data-dir /code/stable-diffusion-webui/data.

(Optional) Add --blade or --xformers to enable inference acceleration. For details, see Parameters supported at service startup.

Resource Information

Deployment Resources

Select the GPU type. For Resource Specification, we recommend ml.gu7i.c16m60.1-gu30, which offers the best cost-performance ratio.

Network information

VPC configuration

When a NAS file system is mounted, the system automatically matches the VPC where the NAS file system resides. No modifications are needed.

vSwitch

Security Group

After you configure the parameters, click Deploy.
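The run command described in the Command To Run row above can be assembled programmatically. The following is a minimal sketch, not the console's actual logic: `build_run_command` is a hypothetical helper, and the base command is copied from the Standard Edition JSON sample in this topic.

```python
import shlex

# Base command copied from the Standard Edition JSON sample in this topic
# (without the --data-dir option, which is appended below).
BASE_COMMAND = (
    "./webui.sh --listen --port 8000 --skip-version-check --no-hashing "
    "--no-download-sd-model --skip-prepare-environment --api --filebrowser"
)


def build_run_command(mount_path, acceleration=None):
    """Hypothetical helper: append --data-dir and an optional acceleration flag.

    mount_path must equal the mount path configured for the storage.
    acceleration is None, "blade", or "xformers".
    """
    if acceleration not in (None, "blade", "xformers"):
        raise ValueError("acceleration must be None, 'blade', or 'xformers'")
    parts = shlex.split(BASE_COMMAND)
    parts += ["--data-dir", mount_path]
    if acceleration:
        parts.append(f"--{acceleration}")
    return shlex.join(parts)


if __name__ == "__main__":
    print(build_run_command("/code/stable-diffusion-webui/data", "xformers"))
```

Keeping the mount path in one variable makes it harder for the --data-dir value and the storage mount path to drift apart.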

Method 3: JSON deployment

You can deploy a Stable Diffusion WebUI service using a JSON script. The following section describes how to use JSON to deploy Standard Edition and API Edition services.

Log on to the PAI console. Select a region on the top of the page. Then, select the desired workspace and click Elastic Algorithm Service (EAS).

On the Elastic Algorithm Service (EAS) page, click Deploy Service, and in the Custom Model Deployment section, click JSON Deployment.

On the JSON Deployment page, configure the following content in JSON format.

Deploy Standard Edition service

    {
      "metadata": {
        "instance": 1,
        "name": "sd_v32",
        "enable_webservice": true
      },
      "containers": [
        {
          "image": "eas-registry-vpc.<region>.cr.aliyuncs.com/pai-eas/stable-diffusion-webui:4.2",
          "script": "./webui.sh --listen --port 8000 --skip-version-check --no-hashing --no-download-sd-model --skip-prepare-environment --api --filebrowser --data-dir=/code/stable-diffusion-webui/data",
          "port": 8000
        }
      ],
      "cloud": {
        "computing": {
          "instance_type": "ml.gu7i.c16m60.1-gu30",
          "instances": null
        },
        "networking": {
          "vpc_id": "vpc-t4nmd6nebhlwwexk2****",
          "vswitch_id": "vsw-t4nfue2s10q2i0ae3****",
          "security_group_id": "sg-t4n85ksesuiq3wez****"
        }
      },
      "storage": [
        {
          "oss": {
            "path": "oss://examplebucket/data-oss",
            "readOnly": false
          },
          "properties": {
            "resource_type": "model"
          },
          "mount_path": "/code/stable-diffusion-webui/data"
        },
        {
          "nfs": {
            "path": "/",
            "server": "726434****-aws0.ap-southeast-1.nas.aliyuncs.com"
          },
          "properties": {
            "resource_type": "model"
          },
          "mount_path": "/code/stable-diffusion-webui/data"
        }
      ]
    }

Take note of the following parameters:

Parameter

Required

Description

metadata.name

Yes

The name of the custom model service, which is unique in the same region.

containers.image

Yes

Replace <region> with the ID of the current region. For example, replace the variable with cn-shanghai for China (Shanghai). For information about how to query region IDs, see Regions and zones.

storage

No

Choose one of the following two mounting methods. The sample lists both entries for illustration; keep only the entry that you use.

oss: Compared with NAS, OSS is more convenient for data upload and download and can generate a public URL for generated images. However, switching models and saving images is slower. Set the storage.oss.path parameter to the path of an existing OSS bucket.

nas: NAS supports faster model switching and image saving. In the JSON file, NAS is configured with the nfs key. Set the storage.nfs.server parameter to the mount target of an existing NAS file system.

In this example, OSS is used.

cloud.networking

No

If you set the storage parameter to nas, you must configure the VPC, including the ID of the VPC, vSwitch, and security group. The VPC must be the same as the VPC of the general-purpose NAS file system.
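The rules in the table above (one storage type per service, and networking required for NAS) can be captured in a small generator. This is a minimal sketch under stated assumptions: `build_standard_config` is a hypothetical helper, the field names and the image tag are copied from the sample JSON above, and the optional instances field is omitted.

```python
import json

MOUNT_PATH = "/code/stable-diffusion-webui/data"
SCRIPT = (
    "./webui.sh --listen --port 8000 --skip-version-check --no-hashing "
    "--no-download-sd-model --skip-prepare-environment --api --filebrowser "
    f"--data-dir={MOUNT_PATH}"
)


def build_standard_config(name, region, oss_path=None, nas_server=None,
                          networking=None,
                          instance_type="ml.gu7i.c16m60.1-gu30"):
    """Hypothetical helper: build a Standard Edition service description."""
    # Exactly one storage backend must be chosen.
    if (oss_path is None) == (nas_server is None):
        raise ValueError("specify exactly one of oss_path or nas_server")
    if oss_path is not None:
        storage = {"oss": {"path": oss_path, "readOnly": False}}
    else:
        # NAS requires the VPC, vSwitch, and security group of the file system.
        if not networking:
            raise ValueError("networking is required when NAS is mounted")
        storage = {"nfs": {"path": "/", "server": nas_server}}
    storage["properties"] = {"resource_type": "model"}
    storage["mount_path"] = MOUNT_PATH

    config = {
        "metadata": {"instance": 1, "name": name, "enable_webservice": True},
        "containers": [{
            "image": f"eas-registry-vpc.{region}.cr.aliyuncs.com"
                     "/pai-eas/stable-diffusion-webui:4.2",
            "script": SCRIPT,
            "port": 8000,
        }],
        "cloud": {"computing": {"instance_type": instance_type}},
        "storage": [storage],
    }
    if networking:
        config["cloud"]["networking"] = networking
    return config


if __name__ == "__main__":
    cfg = build_standard_config("sd_v32", "cn-shanghai",
                                oss_path="oss://examplebucket/data-oss")
    print(json.dumps(cfg, indent=2))
```

The printed JSON can be pasted into the JSON Deployment page after you review it against your own region and resources.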

Deploy API Edition service

    {
      "metadata": {
        "name": "sd_async",
        "instance": 1,
        "rpc.worker_threads": 1,
        "type": "Async"
      },
      "cloud": {
        "computing": {
          "instance_type": "ml.gu7i.c16m60.1-gu30",
          "instances": null
        },
        "networking": {
          "vpc_id": "vpc-bp1t2wukzskw9139n****",
          "vswitch_id": "vsw-bp12utkudylvp4c70****",
          "security_group_id": "sg-bp11nqxfd0iq6v5g****"
        }
      },
      "queue": {
        "cpu": 1,
        "max_delivery": 1,
        "memory": 4000,
        "resource": ""
      },
      "storage": [
        {
          "oss": {
            "path": "oss://examplebucket/aohai-singapore/",
            "readOnly": false
          },
          "properties": {
            "resource_type": "model"
          },
          "mount_path": "/code/stable-diffusion-webui/data"
        },
        {
          "nfs": {
            "path": "/",
            "server": "0c9624****-fgh60.cn-hangzhou.nas.aliyuncs.com"
          },
          "properties": {
            "resource_type": "model"
          },
          "mount_path": "/code/stable-diffusion-webui/data"
        }
      ],
      "containers": [
        {
          "image": "eas-registry-vpc.<region>.cr.aliyuncs.com/pai-eas/stable-diffusion-webui:4.2",
          "script": "./webui.sh --listen --port 8000 --skip-version-check --no-hashing --no-download-sd-model --skip-prepare-environment --api-log --time-log --nowebui --data-dir=/code/stable-diffusion-webui/data",
          "port": 8000
        }
      ]
    }

The following table describes only the parameters whose configuration is different from the Standard Edition service deployment.

Parameter

Description

Delete the following parameters:

metadata.enable_webservice

Delete this parameter to disable the web server.

containers.script

Delete the --filebrowser option that is specified in the containers.script parameter to accelerate the service startup.

Add the following parameters:

metadata.type

Set the value to Async to enable the asynchronous service.

metadata.rpc.worker_threads

Set the value to 1 to allow a single instance to process only one request at a time.

queue.max_delivery

Set the value to 1 to disable retry after an error occurs.

containers.script

The containers.script configuration adds --nowebui (to accelerate startup speed) and --time-log (to record interface response time).

For more information about parameter configuration, see Deploy a Standard Edition service.
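The differences listed in the table above can be applied mechanically to a Standard Edition description. The following sketch assumes the configuration is held as a Python dict; `to_api_edition` is a hypothetical helper that applies only the changes named in the table. The sample JSON above differs in a few other details (for example, it uses --api-log instead of --api and sets queue.cpu and queue.memory), which this sketch does not reproduce.

```python
import copy


def to_api_edition(standard_config):
    """Hypothetical helper: derive an API Edition description from a
    Standard Edition description without modifying the input."""
    config = copy.deepcopy(standard_config)

    metadata = config["metadata"]
    # Deleting enable_webservice disables the web server.
    metadata.pop("enable_webservice", None)
    # Async enables the asynchronous service; one worker thread means one
    # request at a time per instance.
    metadata["type"] = "Async"
    metadata["rpc.worker_threads"] = 1

    # max_delivery = 1 disables retries after an error.
    queue = dict(config.get("queue", {}))
    queue["max_delivery"] = 1
    config["queue"] = queue

    for container in config["containers"]:
        flags = [f for f in container["script"].split() if f != "--filebrowser"]
        for extra in ("--nowebui", "--time-log"):
            if extra not in flags:
                flags.append(extra)
        container["script"] = " ".join(flags)
    return config


if __name__ == "__main__":
    standard = {
        "metadata": {"instance": 1, "name": "sd_v32", "enable_webservice": True},
        "containers": [{"script": "./webui.sh --listen --port 8000 --api --filebrowser",
                        "port": 8000}],
    }
    print(to_api_edition(standard))
```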

Click Deploy.

Call the service

Call the service using the web UI

You can use the web UI to call Standard Edition, Cluster Edition WebUI, and Serverless Edition services. Perform the following steps:

After the service is deployed, click Web Application in the Overview tab of the target service.

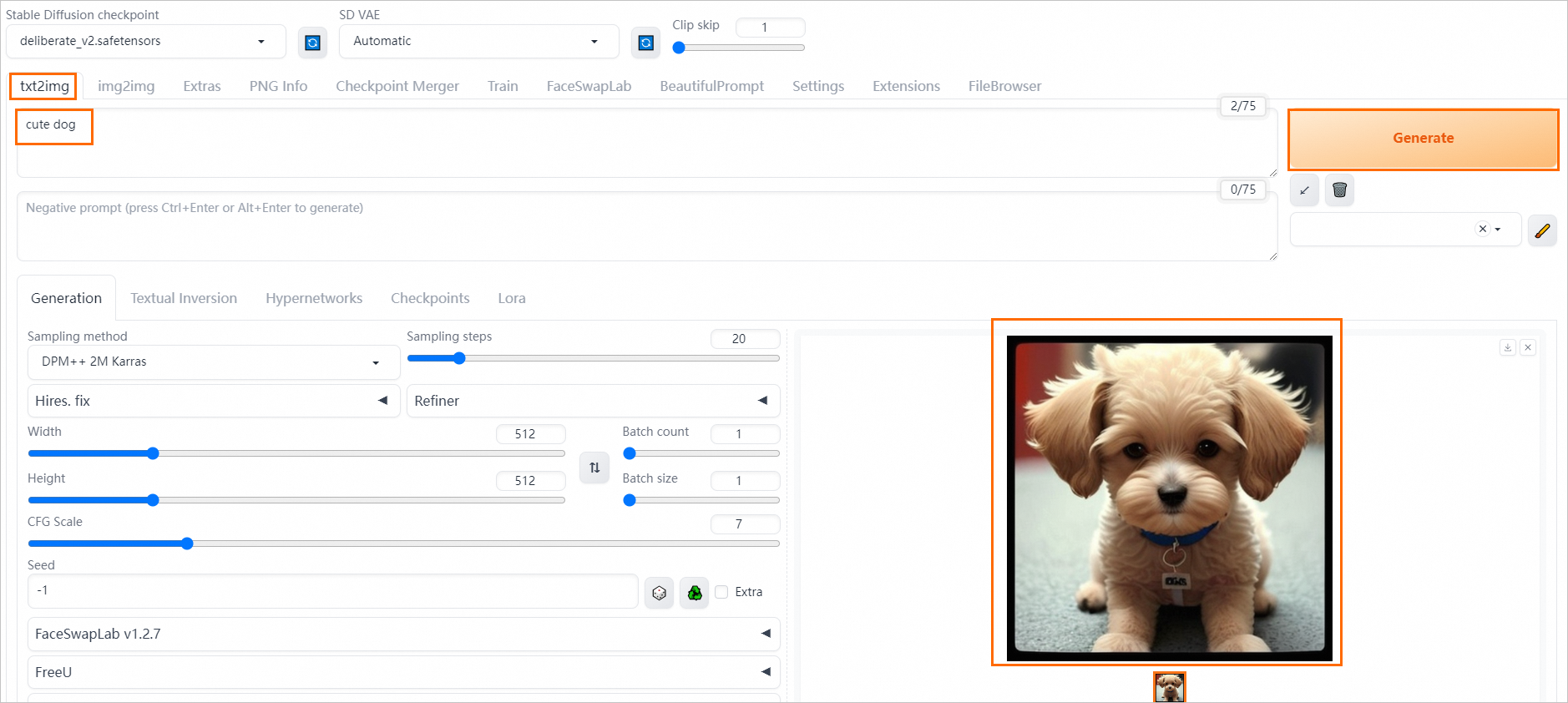

Perform model inference.

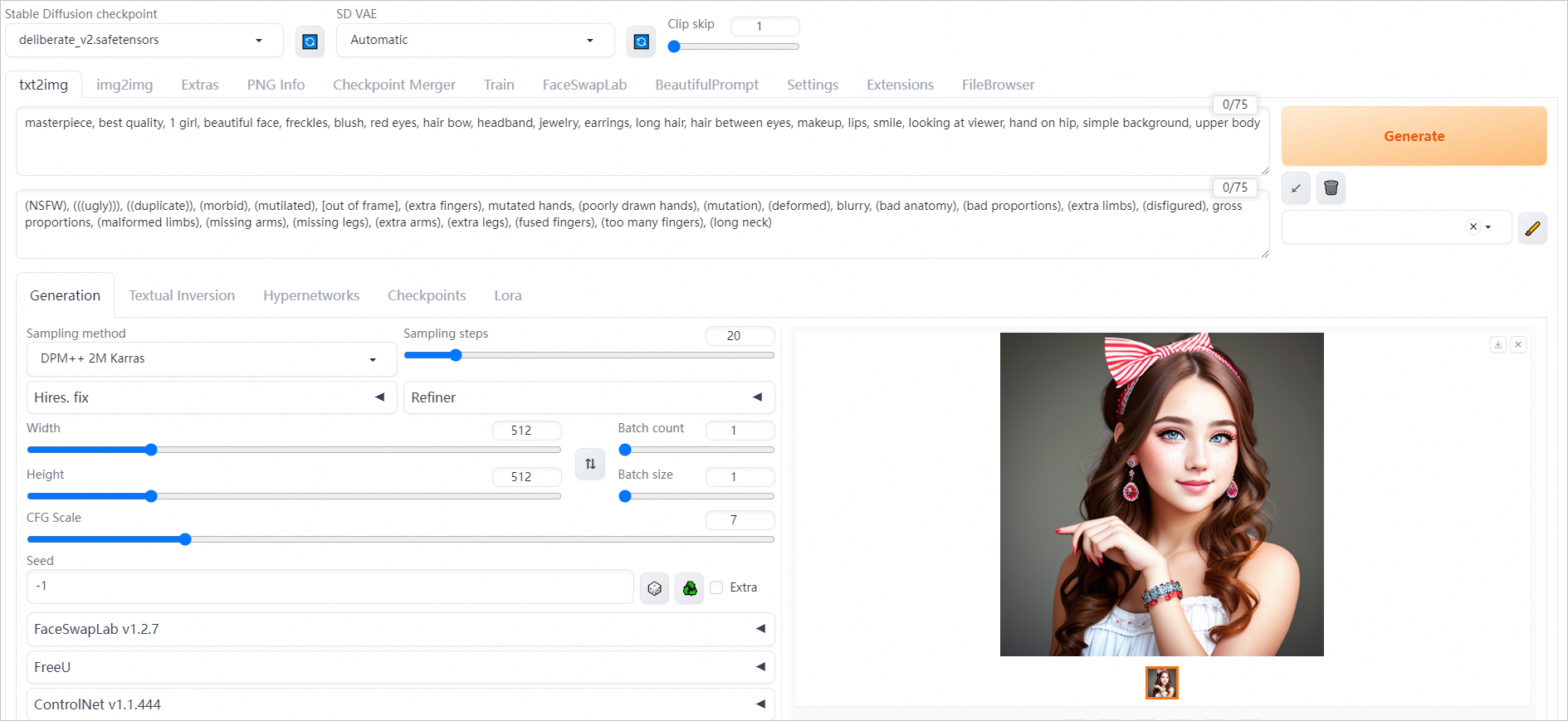

On the txt2img tab of the Stable Diffusion WebUI page, enter a positive prompt, such as cute dog, and then click Generate. The figure above shows the generated image.

Call the service using API operations

You can use API operations to call Standard Edition or API Edition services. Standard Edition services support only synchronous calls. API Edition services support both synchronous and asynchronous calls.

Synchronous call: Client waits for the result to return.

Asynchronous call: Client uses the EAS queue service to send requests and subscribes to results in the output queue.

Synchronous call

After deploying a Standard or API Edition service, send a synchronous request as follows.

Obtain the invocation information.

After the service is deployed, click the service name to go to the Overview page.

In the Basic Information section, click View Endpoint Information.

On the Invocation Information page, obtain the service endpoint and token.

If the service is the API Edition, you can obtain the service endpoint and token on the Synchronous Call tab.

For Standard Edition services, refer to the following figure to obtain the service endpoint and token.

Use one of the following methods to send a synchronous request.

Use a cURL command to send a service request

Sample code:

    curl --location --request POST '<service_url>/sdapi/v1/txt2img' \
    --header 'Authorization: <token>' \
    --header 'Content-Type: application/json' \
    --data-raw '{
      "prompt":"cute dog ",
      "steps":20
    }'

Where:

<service_url>: Replace with the service endpoint that you obtained in Step 1. Delete the trailing / from the endpoint.

<token>: Replace with the service token that you obtained in Step 1.

After you run the command, the system returns the Base64-encoded image.
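If you save the cURL response to a file or a string, the Base64 data can be turned back into image files with the standard library alone. This is a minimal sketch: `save_images` is a hypothetical helper, and it assumes the response JSON carries the encoded images in an images list, as the Python example later in this topic reads them.

```python
import base64
import json
from pathlib import Path


def save_images(response_text, out_dir="."):
    """Hypothetical helper: decode every entry of the "images" list in a
    txt2img response and write it to output-<index>.png in out_dir."""
    data = json.loads(response_text)
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    paths = []
    for idx, encoded in enumerate(data.get("images", [])):
        # Tolerate an optional "data:image/png;base64," prefix.
        payload = encoded.split(",", 1)[-1]
        path = out_dir / f"output-{idx}.png"
        path.write_bytes(base64.b64decode(payload))
        paths.append(path)
    return paths


if __name__ == "__main__":
    # Stand-in response; a real response contains PNG data.
    fake = json.dumps({"images": [base64.b64encode(b"not a real png").decode()]})
    print(save_images(fake, "decoded"))
```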

Use Python to send a service request

Refer to the API to send service requests.

Example 1 (recommended): We recommend that you mount an OSS bucket to the EAS service to save the generated images. In the following example, the OSS mount path is used in the request body to save the image to OSS, and the oss2 SDK is used to download the image from OSS to your local device.

    import requests
    import oss2

    from oss2.credentials import EnvironmentVariableCredentialsProvider

    # Step 1: Send a request. The generated image is saved to OSS.
    url = "<service_url>"

    # The AccessKey pair of an Alibaba Cloud account has permissions on all API operations. Using these credentials to perform operations in OSS is a high-risk operation. We recommend that you use a RAM user to call API operations or perform routine O&M. To create a RAM user, log on to the RAM console.
    auth = oss2.ProviderAuth(EnvironmentVariableCredentialsProvider())

    # Replace <endpoint> with the OSS endpoint of the region where your bucket resides.
    bucket = oss2.Bucket(auth, '<endpoint>', '<examplebucket>')

    payload = {
        "alwayson_scripts": {
            "sd_model_checkpoint": "deliberate_v2.safetensors",
            "save_dir": "/code/stable-diffusion-webui/data/outputs"
        },
        "steps": 30,
        "prompt": "girls",
        "batch_size": 1,
        "n_iter": 2,
        "width": 576,
        "height": 576,
        "negative_prompt": "ugly, out of frame"
    }

    session = requests.session()
    session.headers.update({"Authorization": "<token>"})

    response = session.post(url=f'{url}/sdapi/v1/txt2img', json=payload)
    if response.status_code != 200:
        raise Exception(response.content)

    data = response.json()

    # Step 2: Obtain images from OSS and download the images to your on-premises device.

    # The mount_path configuration for OSS that you specified when you deployed the service.
    mount_path = "/code/stable-diffusion-webui/data"

    # The OSS path that you specified when you deployed the service.
    oss_url = "oss://examplebucket/data-oss"

    for idx, img_path in enumerate(data['parameters']['image_url'].split(',')):
        # Obtain the actual URL of the generated image in OSS.
        img_oss_path = img_path.replace(mount_path, oss_url)
        # Print the index, the path in the container, and the matching OSS path.
        print(idx, img_path, img_oss_path)

        # Download the OSS object to an on-premises file system. Replace <examplebucket> with the name of the OSS bucket that you created.
        bucket.get_object_to_file(img_oss_path[len("oss://examplebucket/"):], f'output-{idx}.png')

The following table describes the key parameters:

Configuration

Description

url

Replace <service_url> with the service endpoint that you obtained in Step 1. Delete the trailing / from the endpoint.

bucket

Where:

<endpoint>: The OSS endpoint. For example, the endpoint of the China (Shanghai) region is http://oss-cn-shanghai.aliyuncs.com. If your service is deployed in another region, specify the endpoint of that region. For more information, see OSS regions and endpoints.

<examplebucket>: Replace with the name of the OSS bucket that you created.

<token>

Set this parameter to the token that you obtained in Step 1.

mount_path

The OSS mount path that you configured when you deployed the service.

oss_url

The OSS storage path that you configured when you deployed the service.

If the code runs successfully, the following results are returned. You can go to the OSS console and view the generated images in the outputs directory of the storage that you mounted when you deployed the service.

    0 /code/stable-diffusion-webui/data/outputs/txt2img-grids/2024-06-26/grid-093546-9ad3f23e-a5c8-499e-8f0b-6effa75bd04f.png oss://examplebucket/data-oss/outputs/txt2img-grids/2024-06-26/grid-093546-9ad3f23e-a5c8-499e-8f0b-6effa75bd04f.png
    1 /code/stable-diffusion-webui/data/outputs/txt2img-images/2024-06-26/093536-ab4c6ab8-880d-4de6-91d5-343f8d97ea3c-3257304074.png oss://examplebucket/data-oss/outputs/txt2img-images/2024-06-26/093536-ab4c6ab8-880d-4de6-91d5-343f8d97ea3c-3257304074.png
    2 /code/stable-diffusion-webui/data/outputs/txt2img-images/2024-06-26/093545-6e6370d7-d41e-4105-960a-b4739af30c0d-3257304075.png oss://examplebucket/data-oss/outputs/txt2img-images/2024-06-26/093545-6e6370d7-d41e-4105-960a-b4739af30c0d-3257304075.png

Example 2: Save an image to a local path. Run the following Python code to obtain the Base64-encoded image and save the image file to a local directory.

    import requests
    import io
    import base64

    from PIL import Image, PngImagePlugin

    url = "<service_url>"

    payload = {
        "prompt": "puppy dog",
        "steps": 20,
        "n_iter": 2
    }

    session = requests.session()
    session.headers.update({"Authorization": "<token>"})

    response = session.post(url=f'{url}/sdapi/v1/txt2img', json=payload)
    if response.status_code != 200:
        raise Exception(response.content)

    data = response.json()

    # You can obtain the Base64-encoded image from a synchronous request, but we recommend that you obtain an image URL instead.
    for idx, im in enumerate(data['images']):
        image = Image.open(io.BytesIO(base64.b64decode(im.split(",", 1)[0])))

        png_payload = {
            "image": "data:image/png;base64," + im
        }
        resp = session.post(url=f'{url}/sdapi/v1/png-info', json=png_payload)

        pnginfo = PngImagePlugin.PngInfo()
        pnginfo.add_text("parameters", resp.json().get("info"))
        image.save(f'output-{idx}.png', pnginfo=pnginfo)

Where:

<service_url>: Replace with the service endpoint that you obtained in Step 1. Delete the trailing / from the endpoint.

<token>: Replace with the token that you obtained in Step 1.

You can also use LoRA and ControlNet data in the request to enable specific functionality.

LoRA configurations

When you send a service request, you can add <lora:yaeMikoRealistic_Genshin:1> to the prompt in the request body to use a LoRA model. For more information, see LoRA.

Sample request body:

    {
      "prompt":"girls <lora:yaeMikoRealistic_Genshin:1>",
      "steps":20,
      "save_images":true
    }

ControlNet configurations

You can use the ControlNet data format in an API request to easily perform common transformations on the generated image, such as keeping the image horizontal or vertical. For more information about the configuration method, see Example: txt2img with ControlNet.
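For the LoRA configuration described earlier in this part, the prompt tag can be composed in code instead of being typed by hand. The following sketch is illustrative only: `with_lora` and `build_lora_request` are hypothetical helpers, and the body fields mirror the sample request body shown for LoRA.

```python
import json


def with_lora(prompt, lora_name, weight=1.0):
    """Hypothetical helper: append a <lora:name:weight> tag to a prompt."""
    if not lora_name or any(ch in lora_name for ch in "<>:"):
        raise ValueError("lora_name must be non-empty and free of '<', '>', ':'")
    # The :g format prints 1.0 as "1" and 0.8 as "0.8".
    return f"{prompt} <lora:{lora_name}:{weight:g}>"


def build_lora_request(prompt, lora_name, weight=1.0, steps=20):
    """Build a txt2img request body that mirrors the sample request body."""
    return {
        "prompt": with_lora(prompt, lora_name, weight),
        "steps": steps,
        "save_images": True,
    }


if __name__ == "__main__":
    print(json.dumps(build_lora_request("girls", "yaeMikoRealistic_Genshin")))
```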


Asynchronous call

After deploying an API Edition service, send an asynchronous request to subscribe to inference results in the output queue.

Obtain the invocation information.

Click View Endpoint Information in the Overview tab of the service. On the Asynchronous Call tab, view the service endpoint and token.

Send asynchronous requests. You can use the SDK for Python or SDK for Java.

Important: The queue service requires that the data of a single request in the input queue or a single result in the output queue does not exceed 8 KB. Take note of the following items:

If the request data contains an image, we recommend that you use a URL to pass the image information. SD WebUI automatically downloads and parses the image data.

To ensure that the response does not contain original image data, we recommend that you use the save_dir parameter to specify the path where the generated image is saved. For more information, see Additional configurable parameters for the API operations.

By default, EAS cannot connect to the Internet. If you use the image_link parameter to specify an image URL from the internet, you must configure public network access as described in EAS network settings so that the service can access the images.
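A client-side size check can catch oversized requests before they reach the queue. The sketch below is an assumption-laden illustration: `encode_queue_request` is a hypothetical helper, and it treats the 8 KB limit as 8 × 1024 bytes of the UTF-8 encoded JSON body.

```python
import json

# Assumption: 8 KB is interpreted as 8 * 1024 bytes of the encoded body.
MAX_QUEUE_MESSAGE_BYTES = 8 * 1024


def encode_queue_request(payload):
    """Hypothetical helper: serialize a request body and reject it if it
    would exceed the queue size limit."""
    body = json.dumps(payload, separators=(",", ":")).encode("utf-8")
    if len(body) > MAX_QUEUE_MESSAGE_BYTES:
        raise ValueError(
            f"request is {len(body)} bytes; pass images by URL (image_link) "
            "and save results with save_dir to stay within the limit"
        )
    return body


if __name__ == "__main__":
    request = {
        "prompt": "cute dog",
        "steps": 20,
        "alwayson_scripts": {
            "save_dir": "/code/stable-diffusion-webui/data-oss/outputs/txt2img"
        },
    }
    print(len(encode_queue_request(request)), "bytes")
```

A request that embeds a Base64 image typically fails this check, which is why the items above recommend passing an image URL instead.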

Method 1: Use SDK for Python

Sample code:

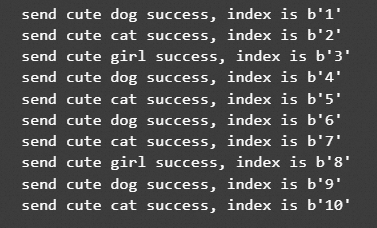

    import requests

    url = "<service_url>"
    session = requests.session()
    session.headers.update({"Authorization": "<token>"})

    prompts = ["cute dog", "cute cat", "cute girl"]

    for i in range(5):
        p = prompts[i % len(prompts)]
        payload = {
            "prompt": p,
            "steps": 20,
            "alwayson_scripts": {
                "save_dir": "/code/stable-diffusion-webui/data-oss/outputs/txt2img"
            },
        }
        response = session.post(url=f'{url}/sdapi/v1/txt2img?task_id=txt2img_{i}', json=payload)
        if response.status_code != 200:
            exit(f"send request error:{response.content}")
        else:
            print(f"send {p} success, index is {response.content}")

    for i in range(5):
        p = prompts[i % len(prompts)]
        payload = {
            "prompt": p,
            "steps": 20,
            "alwayson_scripts": {
                "save_dir": "/code/stable-diffusion-webui/data-oss/outputs/img2img",
                "image_link": "https://eas-cache-cn-hangzhou.oss-cn-hangzhou-internal.aliyuncs.com/stable-diffusion-cache/tests/boy.png",
            },
        }
        response = session.post(url=f'{url}/sdapi/v1/img2img?task_id=img2img_{i}', json=payload)
        if response.status_code != 200:
            exit(f"send request error:{response.content}")
        else:
            print(f"send {p} success, index is {response.content}")

Where:

<service_url>: Replace with the service endpoint that you obtained in Step 1. Delete the trailing / from the endpoint.

<token>: Replace with the token that you obtained in Step 1.

Note: All POST interfaces of the WebUI service are supported. You can send requests to the corresponding paths as needed.

To pass custom information to the service, specify a custom tag as a URL parameter. For example, append ?task_id=task_abc to the URL to specify a tag named task_id. The tag is returned in the tags field of the service output.

If the code runs successfully, the system outputs the following result. Your actual results may vary.

Method 2: Use SDK for Java

Maven is used to manage Java projects. Therefore, you must add the required client dependencies to the pom.xml file. For more information, see SDK for Java.

Sample code:

    import com.aliyun.openservices.eas.predict.http.HttpConfig;
    import com.aliyun.openservices.eas.predict.http.QueueClient;
    import com.aliyun.openservices.eas.predict.queue_client.QueueUser;
    import org.apache.commons.lang3.tuple.Pair;

    import java.util.HashMap;

    public class SDWebuiAsyncPutTest {
        public static void main(String[] args) throws Exception {
            // Create a client for the queue service.
            String queueEndpoint = "http://166233998075****.cn-hangzhou.pai-eas.aliyuncs.com";
            String queueToken = "xxxxx==";
            // The name of the input queue consists of the service name and the request path that you want to use.
            String inputQueueName = "<service_name>/sdapi/v1/txt2img";

            // Create the input queue. After you add request data to the input queue, the inference service automatically reads the request data from the input queue.
            QueueClient inputQueue =
                new QueueClient(queueEndpoint, inputQueueName, queueToken, new HttpConfig(), new QueueUser());
            // Clear queue data. Proceed with caution.
            // inputQueue.clear();

            // Add request data to the input queue.
            int count = 5;
            for (int i = 0; i < count; ++i) {
                // Create request data.
                String data = "{\n" +
                    " \"prompt\": \"cute dog\", \n" +
                    " \"steps\":20,\n" +
                    " \"alwayson_scripts\":{\n" +
                    " \"save_dir\":\"/code/stable-diffusion-webui/data-oss/outputs/txt2img\"\n" +
                    " }\n" +
                    " }";
                // Create a custom tag.
                HashMap<String, String> map = new HashMap<String, String>(1);
                map.put("task_id", "txt2img_" + i);
                Pair<Long, String> entry = inputQueue.put(data.getBytes(), map);

                System.out.println(String.format("send success, index is %d, request_id is %s", entry.getKey(), entry.getValue()));
                // The queue service supports multi-priority queues. You can use the put function to set the priority level of the request. The default value is 0. A value of 1 specifies a high priority.
                // inputQueue.put(data.getBytes(), 0L, null);
            }
            // Close the client.
            inputQueue.shutdown();

            inputQueueName = "<service_name>/sdapi/v1/img2img";
            inputQueue =
                new QueueClient(queueEndpoint, inputQueueName, queueToken, new HttpConfig(), new QueueUser());
            for (int i = 0; i < count; ++i) {
                // Create request data.
                String data = "{\n" +
                    " \"prompt\": \"cute dog\", \n" +
                    " \"steps\":20,\n" +
                    " \"alwayson_scripts\":{\n" +
                    " \"save_dir\":\"/code/stable-diffusion-webui/data-oss/outputs/img2img\",\n" +
                    " \"image_link\":\"https://eas-cache-cn-hangzhou.oss-cn-hangzhou-internal.aliyuncs.com/stable-diffusion-cache/tests/boy.png\"\n" +
                    " }\n" +
                    " }";
                HashMap<String, String> map = new HashMap<String, String>(1);
                map.put("task_id", "img2img_" + i);
                Pair<Long, String> entry = inputQueue.put(data.getBytes(), map);

                System.out.println(String.format("send success, index is %d, requestId is %s", entry.getKey(), entry.getValue()));
            }

            // Close the client.
            inputQueue.shutdown();
        }
    }

Where:

queueEndpoint: Set to the service endpoint that you obtained in Step 1, in the format shown in the sample code.

queueToken: Set to the service token that you obtained in Step 1.

<service_name>: Replace with the name of the deployed asynchronous service.

Note: To pass tags to the service, set the tag parameter in the put function, as the custom tag in the sample code shows. The tag information is returned in the tag field of the output result.

If the code runs successfully, the system outputs the following result. Your actual results may vary.

    send success, index is 21, request_id is 05ca7786-c24e-4645-8538-83d235e791fe
    send success, index is 22, request_id is 639b257a-7902-448d-afd5-f2641ab77025
    send success, index is 23, request_id is d6b2e127-eba3-4414-8e6c-c3690e0a487c
    send success, index is 24, request_id is 8becf191-962d-4177-8a11-7e4a450e36a7
    send success, index is 25, request_id is 862b2d8e-5499-4476-b3a5-943d18614fc5
    send success, index is 26, requestId is 9774a4ff-f4c8-40b7-ba43-0b1c1d3241b0
    send success, index is 27, requestId is fa536d7a-7799-43f1-947f-71973bf7b221
    send success, index is 28, requestId is e69bdd32-5c7b-4c8f-ba3e-e69d2054bf65
    send success, index is 29, requestId is c138bd8f-be45-4a47-a330-745fd1569534
    send success, index is 30, requestId is c583d4f8-8558-4c8d-95f7-9c3981494007

    Process finished with exit code 0

Subscribe to the results of the asynchronous requests.

After the server completes processing related requests, the server automatically pushes the results to the client for efficient asynchronous communication. You can use SDK for Python or SDK for Java to subscribe to the results.

Method 1: Use SDK for Python

Sample code:

    import json
    import oss2

    from oss2.credentials import EnvironmentVariableCredentialsProvider
    from eas_prediction import QueueClient

    sink_queue = QueueClient('139699392458****.cn-hangzhou.pai-eas.aliyuncs.com', 'sd_async/sink')
    sink_queue.set_token('<token>')
    sink_queue.init()

    mount_path = "/code/stable-diffusion-webui/data-oss"
    oss_url = "oss://<examplebucket>/aohai-singapore"
    # The AccessKey pair of an Alibaba Cloud account has permissions on all API operations. Using these credentials to perform operations in OSS is a high-risk operation. We recommend that you use a RAM user to call API operations or perform routine O&M. To create a RAM user, log on to the RAM console.
    auth = oss2.ProviderAuth(EnvironmentVariableCredentialsProvider())
    # In this example, the endpoint of the China (Hangzhou) region is used. Specify your actual endpoint.
    bucket = oss2.Bucket(auth, 'http://oss-cn-hangzhou.aliyuncs.com', '<examplebucket>')

    watcher = sink_queue.watch(0, 5, auto_commit=False)
    for x in watcher.run():
        if 'task_id' in x.tags:
            print('index {} task_id is {}'.format(x.index, x.tags['task_id']))
        print(f'index {x.index} data is {x.data}')
        sink_queue.commit(x.index)
        try:
            data = json.loads(x.data.decode('utf-8'))
            for idx, path in enumerate(data['parameters']['image_url'].split(',')):
                url = path.replace(mount_path, oss_url)
                # Download the OSS object to an on-premises file system.
                bucket.get_object_to_file(url[len("oss://<examplebucket>/"):], f'{x.index}-output-{idx}.png')
                print(f'save {url} to {x.index}-output-{idx}.png')
        except Exception as e:
            print(f'index {x.index} process data error {e}')

The following table describes the key parameters:

Configuration

Description

sink_queue

Replace 139699392458****.cn-hangzhou.pai-eas.aliyuncs.com with the endpoint from the service address that you obtained in the previous step, in the format shown in the sample code.

Replace sd_async with the name of the asynchronous service that you deployed, in the format shown in the sample code.

<token>

Set the value to the service token that you obtained in Step 1.

oss_url

Set this parameter to the OSS path that you specified when you deployed the service.

bucket

Where:

http://oss-cn-hangzhou.aliyuncs.com: The OSS endpoint. In this example, the endpoint of the China (Hangzhou) region is used. If your service is deployed in another region, specify your actual endpoint. For more information, see OSS regions and endpoints.

<examplebucket>: Replace with the name of the OSS bucket that you created.

Note: You can perform the commit operation manually, or set auto_commit=True to perform it automatically.

If the client of the queue service stops consuming data, we recommend that you close the client to release resources.

You can also use a cURL command or call a synchronous API to subscribe to the results. For more information, see Deploy an asynchronous inference service.
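Each result that the subscriber receives carries a JSON document whose parameters object holds the image paths inside the container. The following sketch shows one way to turn such a message into OSS bucket and object names. It is an illustration under assumptions: `extract_image_keys` is a hypothetical helper, the field names (parameters, image_url, error_msg) are taken from the sample results in this topic, and image_url is assumed to be a comma-separated list of paths under the mount path.

```python
import json


def extract_image_keys(raw_data, mount_path, oss_url):
    """Hypothetical helper: map the image paths in one sink-queue message
    to (bucket, object_key) pairs.

    raw_data   -- message payload as bytes (x.data in the Python SDK sample)
    mount_path -- mount path configured when the service was deployed
    oss_url    -- OSS path configured when the service was deployed
    """
    result = json.loads(raw_data.decode("utf-8"))
    params = result.get("parameters", {})
    if params.get("error_msg"):
        raise RuntimeError(params["error_msg"])

    mount = mount_path.rstrip("/")
    prefix = oss_url.rstrip("/")
    if not prefix.startswith("oss://"):
        raise ValueError("oss_url must start with oss://")

    keys = []
    for path in filter(None, params.get("image_url", "").split(",")):
        if not path.startswith(mount + "/"):
            raise ValueError(f"{path} is not under {mount}")
        # Swap the mount path for the OSS path, then split bucket and key.
        oss_path = prefix + path[len(mount):]
        bucket, _, key = oss_path[len("oss://"):].partition("/")
        keys.append((bucket, key))
    return keys


if __name__ == "__main__":
    message = (b'{"images":[],"parameters":{"status":0,"image_url":'
               b'"/code/stable-diffusion-webui/data/outputs/txt2img/a.png",'
               b'"error_msg":""}}')
    print(extract_image_keys(message, "/code/stable-diffusion-webui/data",
                             "oss://examplebucket/xx"))
```

The returned object key is the value that a download call such as bucket.get_object_to_file expects as its first argument.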

The client continuously listens for results from the server using the watcher.run() method. If the server returns no result, the client keeps waiting. If the server returns a result, the client prints the result. The following is a sample result. Your actual results may vary. You can go to the OSS console to view the generated images in the OSS storage path that you specified when you deployed the service.

    index 1 task_id is txt2img_0
    index 1 data is b'{"images":[],"parameters":{"id_task":null,"status":0,"image_url":"/code/stable-diffusion-webui/data/outputs/txt2img/txt2img-images/2024-07-01/075825-a2abd45f-3c33-43f2-96fb-****50329671-1214613912.png","seed":"1214613912","error_msg":"","image_mask_url":""},"info":"{\\"hostname\\": \\"***-8aff4771-5c86c8d656-hvdb8\\"}"}'
    save oss://examplebucket/xx/outputs/txt2img/txt2img-images/2024-07-01/075825-a2abd45f-3c33-43f2-96fb-****50329671-1214613912.png to 1-output-0.png
    index 2 task_id is txt2img_1
    index 2 data is b'{"images":[],"parameters":{"id_task":null,"status":0,"image_url":"/code/stable-diffusion-webui/data/outputs/txt2img/txt2img-images/2024-07-01/075827-c61af78c-25f2-47cc-9811-****aa51f5e4-1934284737.png","seed":"1934284737","error_msg":"","image_mask_url":""},"info":"{\\"hostname\\": \\"***-8aff4771-5c86c8d656-hvdb8\\"}"}'
    save oss://examplebucket/xx/outputs/txt2img/txt2img-images/2024-07-01/075827-c61af78c-25f2-47cc-9811-****aa51f5e4-1934284737.png to 2-output-0.png
    index 3 task_id is txt2img_2
    index 3 data is b'{"images":[],"parameters":{"id_task":null,"status":0,"image_url":"/code/stable-diffusion-webui/data/outputs/txt2img/txt2img-images/2024-07-01/075829-1add1f5c-5c61-4f43-9c2e-****9d987dfa-3332597009.png","seed":"3332597009","error_msg":"","image_mask_url":""},"info":"{\\"hostname\\": \\"***-8aff4771-5c86c8d656-hvdb8\\"}"}'
    save oss://examplebucket/xx/outputs/txt2img/txt2img-images/2024-07-01/075829-1add1f5c-5c61-4f43-9c2e-****9d987dfa-3332597009.png to 3-output-0.png
    index 4 task_id is txt2img_3
    index 4 data is b'{"images":[],"parameters":{"id_task":null,"status":0,"image_url":"/code/stable-diffusion-webui/data/outputs/txt2img/txt2img-images/2024-07-01/075832-2674c2d0-8a93-4cb5-9ff4-****46cec1aa-1250290207.png","seed":"1250290207","error_msg":"","image_mask_url":""},"info":"{\\"hostname\\": \\"***-8aff4771-5c86c8d656-hvdb8\\"}"}'
    save oss://examplebucket/xx/outputs/txt2img/txt2img-images/2024-07-01/075832-2674c2d0-8a93-4cb5-9ff4-****46cec1aa-1250290207.png to 4-output-0.png
    index 5 task_id is txt2img_4
    index 5 data is b'{"images":[],"parameters":{"id_task":null,"status":0,"image_url":"/code/stable-diffusion-webui/data/outputs/txt2img/txt2img-images/2024-07-01/075834-8bb15707-ff0d-4dd7-b2da-****27717028-1181680579.png","seed":"1181680579","error_msg":"","image_mask_url":""},"info":"{\\"hostname\\": \\"***-8aff4771-5c86c8d656-hvdb8\\"}"}'
    save oss://examplebucket/xx/outputs/txt2img/txt2img-images/2024-07-01/075834-8bb15707-ff0d-4dd7-b2da-****27717028-1181680579.png to 5-output-0.png

Method 2: Use SDK for Java

Sample code:

import com.aliyun.openservices.eas.predict.http.HttpConfig;
import com.aliyun.openservices.eas.predict.http.QueueClient;
import com.aliyun.openservices.eas.predict.queue_client.DataFrame;
import com.aliyun.openservices.eas.predict.queue_client.QueueUser;
import com.aliyun.openservices.eas.predict.queue_client.WebSocketWatcher;

public class SDWebuiAsyncWatchTest {
    public static void main(String[] args) throws Exception {
        // Create a client for the queue service.
        String queueEndpoint = "http://166233998075****.cn-hangzhou.pai-eas.aliyuncs.com";
        String queueToken = "xxxxx==";
        // The name of the output queue consists of the service name and "/sink".
        String sinkQueueName = "<service_name>/sink";

        // The output queue. The inference service processes the input data and writes the results to the output queue.
        QueueClient sinkQueue = new QueueClient(queueEndpoint, sinkQueueName, queueToken, new HttpConfig(), new QueueUser());

        // Clear queue data. Proceed with caution.
        // sinkQueue.clear();

        // Subscribe to the queue and obtain queue data.
        WebSocketWatcher watcher = sinkQueue.watch(0L, 5L, false, false, null);
        try {
            while (true) {
                DataFrame df = watcher.getDataFrame();
                if (df.getTags().containsKey("task_id")) {
                    System.out.println(String.format("task_id = %s", df.getTags().get("task_id")));
                }
                System.out.println(String.format("index = %d, data = %s, requestId = %s", df.getIndex(), new String(df.getData()), df.getTags().get("requestId")));
                sinkQueue.commit(df.getIndex());
            }
        } catch (Exception e) {
            System.out.println("watch error:" + e.getMessage());
            e.printStackTrace();
            watcher.close();
        }

        // Close the client.
        sinkQueue.shutdown();
    }
}

Where:

queueEndpoint: Configure as the service endpoint obtained in Step 1. Refer to the sample code to configure this parameter.

queueToken: Configure as the service token information obtained in Step 1.

<service_name>: Replace with the name of the deployed asynchronous service.

Note

You can manually commit the data, or set auto_commit=True to automatically commit the data.

If the client of the queue service stops consuming data, we recommend that you close the client to release resources.

You can also use a cURL command or call a synchronous API to subscribe to the results. For more information, see Deploy an asynchronous inference service.

The client uses the watcher.getDataFrame() method to continuously listen for results from the server. If the server returns no result, the client keeps waiting. If the server returns a result, the client prints the result. The following shows a sample result. Your actual results may vary. You can go to the OSS console and view the generated images in the corresponding path.

2023-08-04 16:17:31,497 INFO [com.aliyun.openservices.eas.predict.queue_client.WebSocketWatcher] - WebSocketClient Successfully Connects to Server: 1396993924585947.cn-hangzhou.pai-eas.aliyuncs.com/116.62.XX.XX:80
task_id = txt2img_0
index = 21, data = {"images":[],"parameters":{"id_task":null,"status":0,"image_url":"/code/stable-diffusion-webui/data-oss/outputs/txt2img/txt2img-images/2023-08-04/54363a9d-24a5-41b5-b038-2257d43b8e79-412510031.png","seed":"412510031","error_msg":"","total_time":2.5351321697235107},"info":""}, requestId = 05ca7786-c24e-4645-8538-83d235e791fe
task_id = txt2img_1
index = 22, data = {"images":[],"parameters":{"id_task":null,"status":0,"image_url":"/code/stable-diffusion-webui/data-oss/outputs/txt2img/txt2img-images/2023-08-04/0c646dda-4a53-43f4-97fd-1f507599f6ae-2287341785.png","seed":"2287341785","error_msg":"","total_time":2.6269655227661133},"info":""}, requestId = 639b257a-7902-448d-afd5-f2641ab77025
task_id = txt2img_2
index = 23, data = {"images":[],"parameters":{"id_task":null,"status":0,"image_url":"/code/stable-diffusion-webui/data-oss/outputs/txt2img/txt2img-images/2023-08-04/4d542f25-b9cc-4548-9db2-5addd0366d32-1158414078.png","seed":"1158414078","error_msg":"","total_time":2.6604185104370117},"info":""}, requestId = d6b2e127-eba3-4414-8e6c-c3690e0a487c
task_id = txt2img_3

After you send an asynchronous request and subscribe to its results, you can use the search() method to query the request status. For more information, see SDK for Python and SDK for Java.
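Each result record in the sample outputs above carries a JSON payload whose image_url and seed fields are comma-separated strings. The following is a minimal Python sketch of how a consumer might unpack such a payload. The helper name parse_result is hypothetical, and treating status 0 with an empty error_msg as success is an assumption based only on the samples shown here.

```python
import json


def parse_result(data: bytes) -> dict:
    """Decode one result payload into a small summary dictionary."""
    body = json.loads(data)
    params = body.get("parameters", {})
    # In the samples, image_url and seed are comma-separated strings.
    image_urls = [u for u in params.get("image_url", "").split(",") if u]
    seeds = [s for s in params.get("seed", "").split(",") if s]
    return {
        # Assumption: status 0 and an empty error_msg mean the task succeeded.
        "ok": params.get("status") == 0 and not params.get("error_msg"),
        "image_urls": image_urls,
        "seeds": seeds,
        "error_msg": params.get("error_msg", ""),
    }


# Shortened payload in the same shape as the sample results.
sample = (
    b'{"images":[],"parameters":{"id_task":null,"status":0,'
    b'"image_url":"/data/a.png,/data/b.png","seed":"1003,1004",'
    b'"error_msg":""},"info":""}'
)
summary = parse_result(sample)
print(summary["image_urls"])
```

In the Python watcher loop, the same function could be applied to the data bytes of each record before the record is committed.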

EAS supports new features on top of the native Stable Diffusion WebUI API. You can add optional parameters in the request data to enable more advanced features or meet custom requirements. For more information, see Additional configurable parameters for the API operations.

Install extensions for enhanced features

Configure extensions for Stable Diffusion WebUI to enable features. PAI provides preset extensions like BeautifulPrompt for expanding and polishing prompts. This section uses BeautifulPrompt as an example.

Install the extension

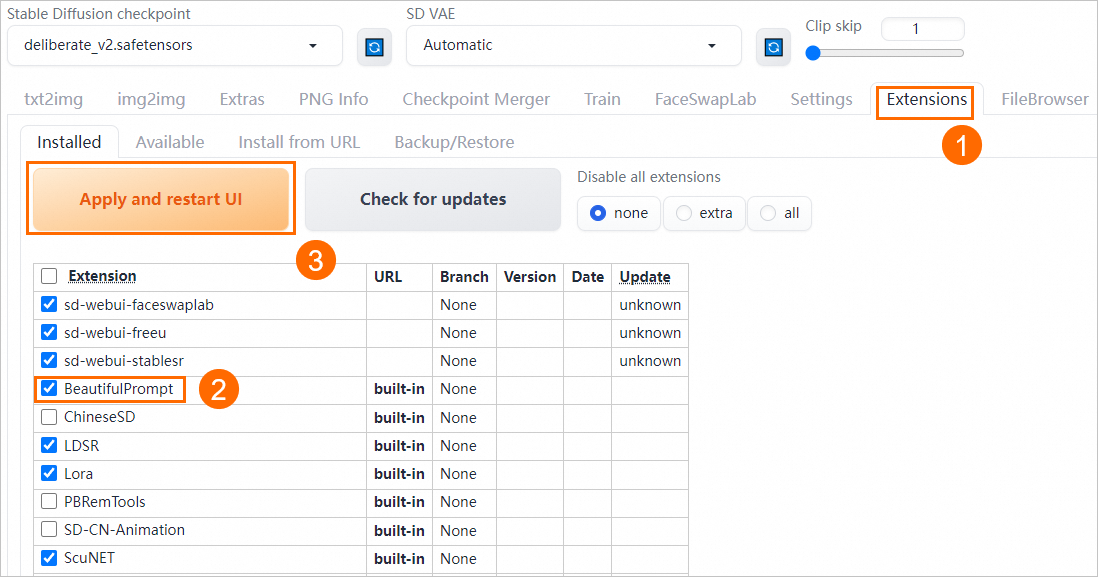

View and install extensions on the Extensions tab of the web UI.

Click Web Application in the Overview tab of the target service to start the web UI.

On the Extensions tab of the web UI page, check whether BeautifulPrompt is selected. If it is not selected, select the extension and click Apply and restart UI to reload the BeautifulPrompt extension.

When you install the extension, the WebUI page will automatically restart. After it is reloaded, you can perform inference verification.

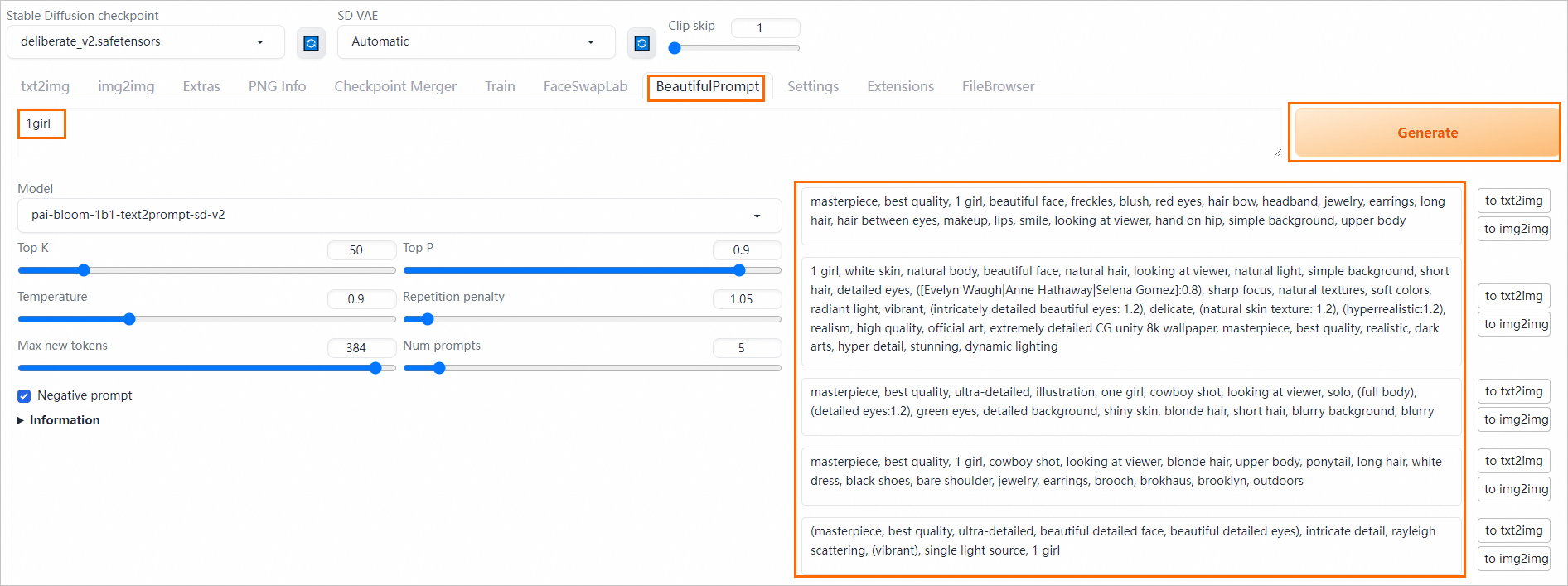

Use the extension for inference verification

On the BeautifulPrompt tab, enter a simple prompt in the text box and click Generate to generate a more detailed prompt.

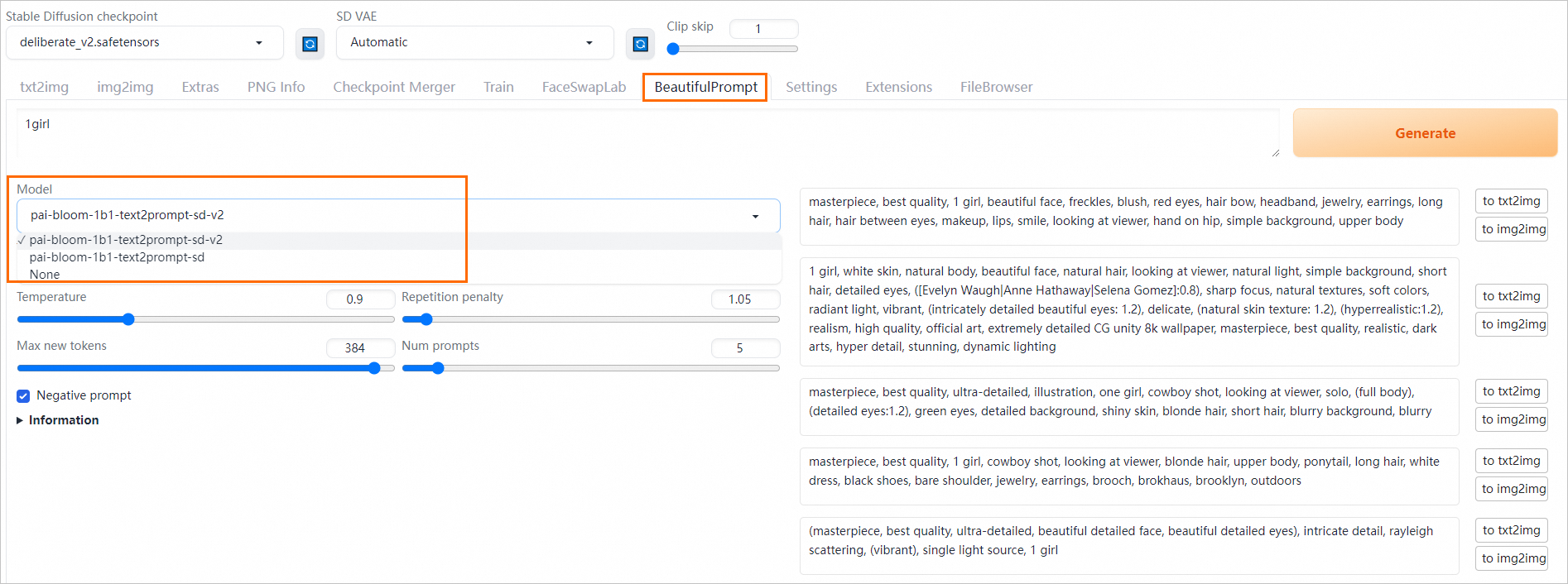

PAI provides multiple prompt generation models. The prompt generated by each model varies:

pai-bloom-1b1-text2prompt-sd-v2: Excels at generating prompts for complex scenarios.

pai-bloom-1b1-text2prompt-sd: Generates prompts that describe a single object.

You can select an appropriate model to generate prompts based on your scenario needs.

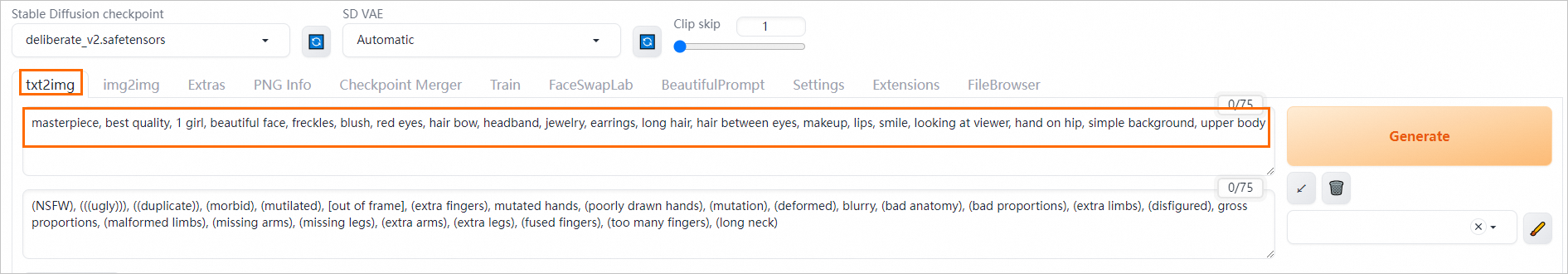

Select the Prompt that you want to use, and click to txt2img next to the Prompt.

The page automatically switches to the Text-to-image tab, and the Prompt area is automatically populated.

Click Generate to generate an image on the right side of the web UI page.

BeautifulPrompt improves image aesthetics and adds more details. Comparison before and after using BeautifulPrompt:

Input prompt | Effect without BeautifulPrompt | With BeautifulPrompt

a cat | |

a giant tiger | |

FAQ

How do I use my model and output directory?

What do I do if the service freezes for a long period of time?

How do I change the default language of the web application interface to English?

How do I manage my file system?

Error: No such file or directory: 'data-oss/data-********.png'

What can I do if I cannot access the WebUI page?

Appendix

Optional configuration parameters when creating a service

Common parameters

Common parameter | Description | Usage recommendations

--blade | Enables PAI-Blade to accelerate the image generation. | We recommend that you enable this feature.

--filebrowser | Allows you to upload and download models or images. | By default, this feature is enabled.

--data-dir /code/stable-diffusion-webui/data-oss | The path used to mount the persistent storage. | Used when mounting persistent storage. The default starting path is /code/stable-diffusion-webui/. You can also use a relative path.

--api | The API calling mode of the web UI. | By default, this feature is enabled.

--enable-nsfw-censor | By default, this feature is disabled. If you require security compliance, you can enable the pornography detection feature. | Enable the feature as needed.

--always-hide-tabs | Hide specific tabs. | Enable the feature as needed.

--min-ram-reserved 40 --sd-dynamic-cache | Cache the Stable Diffusion model to memory. | N/A

Cluster Edition parameters

Note

The ckpt and ControlNet models automatically load files in the public directory and the custom files.

Cluster Edition parameter | Description | Usage notes

--lora-dir | Specify the public LoRA model directory, for example: --lora-dir /code/stable-diffusion-webui/data-oss/models/Lora. | By default, this parameter is not configured. Each user's LoRA directory is isolated and only LoRA models in the user folder are loaded. If you specify a directory, all users load the LoRA models in the public directory and the LoRA models in the user folder.

--vae-dir | Specify the public VAE model directory, for example: --vae-dir /code/stable-diffusion-webui/data-oss/models/VAE. | By default, this parameter is not configured. Each user's VAE directory is isolated and only VAE models in the user folder are loaded. If you specify a directory, all users load the VAE models in the public directory.

--gfpgan-dir | Specify the public GFPGAN model directory, for example: --gfpgan-dir /code/stable-diffusion-webui/data-oss/models/GFPGAN. | By default, this parameter is not configured. Each user's GFPGAN directory is isolated and only GFPGAN models in the user folder are loaded. If you specify a directory, all users load only the GFPGAN models in the public directory.

--embeddings-dir | Specify the public embeddings model directory, for example: --embeddings-dir /code/stable-diffusion-webui/data-oss/embeddings. | By default, this parameter is not configured. Each user's embeddings directory is isolated and only embedding models in the user folder are loaded. If you specify a directory, all users load the embedding models in the public directory.

--hypernetwork-dir | Specify the public hypernetwork model directory, for example: --hypernetwork-dir /code/stable-diffusion-webui/data-oss/models/hypernetworks. | By default, this parameter is not configured. Each user's hypernetwork directory is isolated and only hypernetwork models in the user folder are loaded. If you specify a directory, all users load only the hypernetwork models in the public directory.

--root-extensions | Uses the extension directory as a public directory. If you configure this parameter, all users can see the same extensions. | If you want to install or manage extensions in a centralized manner, use this parameter.
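The parameters in the tables above are passed as command-line flags when the service is created. As a rough illustration, the following Python sketch assembles such a flag string from a few options. The helper build_launch_args and its keyword names are hypothetical; only the flags themselves come from the tables, and where the resulting string is entered in the deployment configuration is not covered here.

```python
import shlex

# Flags from the Cluster Edition table that take a public directory path.
PUBLIC_DIR_FLAGS = {
    "--lora-dir", "--vae-dir", "--gfpgan-dir",
    "--embeddings-dir", "--hypernetwork-dir",
}


def build_launch_args(blade=True, filebrowser=True, api=True,
                      data_dir=None, public_dirs=None, root_extensions=False):
    """Return a single command-line string built from the chosen options."""
    args = []
    if blade:
        args.append("--blade")
    if filebrowser:
        args.append("--filebrowser")
    if api:
        args.append("--api")
    if data_dir:
        args += ["--data-dir", data_dir]
    for flag, path in (public_dirs or {}).items():
        if flag not in PUBLIC_DIR_FLAGS:
            raise ValueError(f"unknown public directory flag: {flag}")
        args += [flag, path]
    if root_extensions:
        args.append("--root-extensions")
    # Quote each token so that paths with spaces stay intact.
    return " ".join(shlex.quote(a) for a in args)


launch_args = build_launch_args(
    data_dir="/code/stable-diffusion-webui/data-oss",
    public_dirs={"--lora-dir": "/code/stable-diffusion-webui/data-oss/models/Lora"},
)
print(launch_args)
```

Rejecting unknown flags keeps typos from silently reaching the service configuration.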

What parameters can I configure for an API operation?

EAS adds support for new features to the native Stable Diffusion WebUI API. In addition to the required parameters, you can add optional parameters to API requests to enable more functionalities and meet custom requirements:

Configure the SD model, the VAE model, and the path to save the generated images.

Use URL parameters to send requests, for which status codes are returned.

Access the generated images through URLs, including the images that are processed by ControlNet.

Sample code:

Txt2img request and response example

Sample request body:

{
  "alwayson_scripts": {
    "sd_model_checkpoint": "deliberate_v2.safetensors",
    "save_dir": "/code/stable-diffusion-webui/data-oss/outputs",
    "sd_vae": "Automatic"
  },
  "steps": 20,
  "prompt": "girls",
  "batch_size": 1,
  "n_iter": 2,
  "width": 576,
  "height": 576,
  "negative_prompt": "ugly, out of frame"
}

The key parameters are:

sd_model_checkpoint: Specifies the Stable Diffusion model (checkpoint) to use. The service automatically switches to the specified model.

sd_vae: Specifies the VAE model.

save_dir: Specifies the path to save the generated images.
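To show how the optional parameters sit alongside the native fields, the following Python sketch builds a txt2img request body as a dictionary. The helper build_txt2img_payload is hypothetical; the field names are taken from the sample request body in this section, and the sketch does not validate values against the service.

```python
import json


def build_txt2img_payload(prompt, checkpoint=None, vae=None, save_dir=None,
                          **generation):
    """Build a txt2img request body with the optional EAS parameters."""
    extras = {}
    if checkpoint:
        extras["sd_model_checkpoint"] = checkpoint
    if vae:
        extras["sd_vae"] = vae
    if save_dir:
        extras["save_dir"] = save_dir
    # Native WebUI fields (steps, width, height, ...) stay at the top level.
    payload = {"prompt": prompt, **generation}
    if extras:
        # The optional EAS parameters are nested under alwayson_scripts.
        payload["alwayson_scripts"] = extras
    return payload


payload = build_txt2img_payload(
    "girls",
    checkpoint="deliberate_v2.safetensors",
    vae="Automatic",
    save_dir="/code/stable-diffusion-webui/data-oss/outputs",
    steps=20, batch_size=1, n_iter=2, width=576, height=576,
    negative_prompt="ugly, out of frame",
)
print(json.dumps(payload, indent=2))
```

Omitting an optional argument leaves the corresponding key out of the body entirely rather than sending an empty value.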

Sample synchronous request:

# Call the synchronous API to test the model.
curl --location --request POST '<service_url>/sdapi/v1/txt2img' \
--header 'Authorization: <token>' \
--header 'Content-Type: application/json' \
--data-raw '{
  "alwayson_scripts": {
    "sd_model_checkpoint": "deliberate_v2.safetensors",
    "save_dir": "/code/stable-diffusion-webui/data-oss/outputs",
    "sd_vae": "Automatic"
  },
  "prompt": "girls",
  "batch_size": 1,
  "n_iter": 2,
  "width": 576,
  "height": 576,
  "negative_prompt": "ugly, out of frame"
}'
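The same synchronous call can be prepared from Python with only the standard library. The sketch below mirrors the URL path and headers of the cURL command; the helper make_txt2img_request and the placeholder host example.invalid are illustrative assumptions, and the request is only constructed here, not sent.

```python
import json
import urllib.request


def make_txt2img_request(service_url, token, payload):
    """Build a POST request equivalent to the cURL command above."""
    url = service_url.rstrip("/") + "/sdapi/v1/txt2img"
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": token,
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder endpoint and token; replace them with your service values.
req = make_txt2img_request("http://example.invalid", "<token>", {"prompt": "girls"})
print(req.get_method(), req.full_url)

# To send the request and read the JSON response:
# with urllib.request.urlopen(req, timeout=600) as resp:
#     result = json.loads(resp.read())
```

Image generation can take a while, so a generous timeout is a sensible choice when the request is actually sent.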

Sample response format:

{
  "images": [],
  "parameters": {
    "id_task": "14837",
    "status": 0,
    "image_url": "/code/stable-diffusion-webui/data-oss/outputs/txt2img-grids/2023-07-24/grid-29a67c1c-099a-4d00-8ff3-1ebe6e64931a.png,/code/stable-diffusion-webui/data-oss/outputs/txt2img-images/2023-07-24/74626268-6c81-45ff-90b7-faba579dc309-1146644551.png,/code/stable-diffusion-webui/data-oss/outputs/txt2img-images/2023-07-24/6a233060-e197-4169-86ab-1c18adf04e3f-1146644552.png",
    "seed": "1146644551,1146644552",
    "error_msg": "",
    "total_time": 32.22393465042114
  },
  "info": ""
}

Sample asynchronous request:

# Send the data to the asynchronous queue.
curl --location --request POST '<service_url>/sdapi/v1/txt2img' \
--header 'Authorization: <token>' \
--header 'Content-Type: application/json' \
--data-raw '{
  "alwayson_scripts": {
    "sd_model_checkpoint": "deliberate_v2.safetensors",
    "id_task": "14837",
    "uid": "123",
    "save_dir": "tmp/outputs"
  },
  "prompt": "girls",
  "batch_size": 1,
  "n_iter": 2,
  "width": 576,
  "height": 576,
  "negative_prompt": "ugly, out of frame"
}'

Img2img request data format example

Sample request body:

{
  "alwayson_scripts": {
    "image_link": "https://eas-cache-cn-hangzhou.oss-cn-hangzhou-internal.aliyuncs.com/stable-diffusion-cache/tests/boy.png",
    "sd_model_checkpoint": "deliberate_v2.safetensors",
    "sd_vae": "Automatic",
    "save_dir": "/code/stable-diffusion-webui/data-oss/outputs"
  },
  "prompt": "girl",
  "batch_size": 1,
  "n_iter": 2,
  "width": 576,
  "height": 576,
  "negative_prompt": "ugly, out of frame",
  "steps": 20, # Sampling steps
  "seed": 111,
  "subseed": 111, # Variation seed
  "subseed_strength": 0, # Variation strength
  "seed_resize_from_h": 0, # Resize seed from height
  "seed_resize_from_w": 0, # Resize seed from width
  "seed_enable_extras": false, # Extra
  "sampler_name": "DDIM", # Sampling method
  "cfg_scale": 7.5, # CFG Scale
  "restore_faces": true, # Restore faces
  "tiling": false, # Tiling
  "init_images": [], # Base64-encoded image strings, default None
  "mask_blur": 4, # Mask blur
  "resize_mode": 1, # 0 just resize, 1 crop and resize, 2 resize and fill, 3 just resize (latent upscale)
  "denoising_strength": 0.75, # Denoising strength
  "inpainting_mask_invert": 0, # Mask mode: index of ['Inpaint masked', 'Inpaint not masked']
  "inpainting_fill": 0, # Masked content: index of ['fill', 'original', 'latent noise', 'latent nothing']
  "inpaint_full_res": 0, # Inpaint area: index of ["Whole picture", "Only masked"]
  "inpaint_full_res_padding": 32, # Only masked padding, pixels: minimum=0, maximum=256, step=4, value=32
  #"image_cfg_scale": 1, # resized by scale
  #"script_name": "Outpainting mk2", # The name of the script. Skip this field if you do not use a script.
  #"script_args": ["Outpainting", 128, 8, ["left", "right", "up", "down"], 1, 0.05] # The parameters of the script in the following order: fixed field, pixels, mask_blur, direction, noise_q, and color_variation.
}

The # comments in the preceding sample are annotations only. JSON does not allow comments, so remove them before you send the request.

Sample response format:

{
  "images": [],
  "parameters": {
    "id_task": "14837",
    "status": 0,
    "image_url": "/data/api_test/img2img-grids/2023-06-05/grid-0000.png,/data/api_test/img2img-images/2023-06-05/00000-1003.png,/data/api_test/img2img-images/2023-06-05/00001-1004.png",
    "seed": "1003,1004",
    "error_msg": ""
  },
  "info": ""
}

Txt2img with ControlNet data format

Sample request format:

{
  "alwayson_scripts": {
    "sd_model_checkpoint": "deliberate_v2.safetensors", # The name of the model.
    "save_dir": "/code/stable-diffusion-webui/data-oss/outputs",
    "controlnet": {
      "args": [
        {
          "image_link": "https://pai-aigc-dataset.oss-cn-hangzhou.aliyuncs.com/pixabay_images/00008b87bf3ff6742b8cf81c358b9dbc.jpg",
          "enabled": true,
          "module": "canny",
          "model": "control_v11p_sd15_canny",
          "weight": 1,
          "resize_mode": "Crop and Resize",
          "low_vram": false,
          "processor_res": 512,
          "threshold_a": 100,
          "threshold_b": 200,
          "guidance_start": 0,
          "guidance_end": 1,
          "pixel_perfect": true,
          "control_mode": "Balanced",
          "input_mode": "simple",
          "batch_images": "",
          "output_dir": "",
          "loopback": false
        }
      ]
    }
  },
  # Key parameters.
  "prompt": "girls",
  "batch_size": 1,
  "n_iter": 2,
  "width": 576,
  "height": 576,
  "negative_prompt": "ugly, out of frame"
}

Sample response format:

{
  "images": [],
  "parameters": {
    "id_task": "14837",
    "status": 0,
    "image_url": "/data/api_test/txt2img-grids/2023-06-05/grid-0007.png,/data/api_test/txt2img-images/2023-06-05/00014-1003.png,/data/api_test/txt2img-images/2023-06-05/00015-1004.png",
    "seed": "1003,1004",
    "error_msg": "",
    "image_mask_url": "/data/api_test/controlnet_mask/2023-06-05/00000.png,/data/api_test/controlnet_mask/2023-06-05/00001.png"
  },
  "info": ""
}

References

For more information about billing for EAS, see Billing of Elastic Algorithm Service (EAS).