This topic describes how to run and debug a Spark job locally after you develop the job.

Prerequisites

- The public endpoint of Lindorm Distributed Processing System (LDPS) is available.

- The local IP address is added to the allowlist of the Lindorm instance. For information about how to configure an allowlist, see Configure whitelists.

- A project of the Spark job is available.

Run a Spark job locally

- Click the download link of the environment installation package to download the latest environment installation package of LDPS.

- Decompress the package to a path of your choice.

- Configure the environment variables of LDPS locally. Specify the value of the SPARK_HOME environment variable as the path in which the package is decompressed.

- To configure the environment variables of LDPS in Windows, perform the following steps:

- On your local machine, go to the System Properties page, and click Environment Variables....

- In the Environment Variables window, click New... in the System variables section.

- In the New System Variable dialog box, configure the following parameters:

- Variable name: Enter SPARK_HOME.

- Variable value: Enter the path in which the package is decompressed.

- Click OK.

- Click Apply.

- To configure the environment variables of LDPS on Linux, run the export SPARK_HOME="<Path in which the package is decompressed>" command and add the command to ~/.bashrc.
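The Linux configuration above can be sketched as a small shell helper. This is a minimal sketch and not part of LDPS: the function name persist_spark_home is hypothetical, and the sketch assumes that ~/.bashrc is the startup file of your shell. The helper appends the export line only if the same line is not already present, so that you can run it repeatedly.

```shell
# Hypothetical helper (not provided by LDPS): persist SPARK_HOME in a
# shell startup file without adding the same export line twice.
persist_spark_home() {
  spark_home="$1"                    # path in which the package is decompressed
  rc_file="${2:-$HOME/.bashrc}"      # startup file; ~/.bashrc is an assumption
  line="export SPARK_HOME=\"${spark_home}\""

  touch "$rc_file"
  # -x matches whole lines only, -F treats the pattern as a fixed string.
  if ! grep -qxF -- "$line" "$rc_file"; then
    printf '%s\n' "$line" >> "$rc_file"
  fi
}
```

After you call the helper with the path in which the package is decompressed, reload ~/.bashrc in the current shell. To check the result, run "$SPARK_HOME/bin/spark-submit" --version.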


- Package the project of the Spark job, and use $SPARK_HOME/bin/spark-submit to submit the Spark job. Example of submitting a job:

- Download and decompress the project package of the Spark job that is submitted in this example. For more information, see Sample Spark job.

- Set the following parameters.

| Parameter | Value | Description |
| --- | --- | --- |
| spark.sql.catalog.lindorm_table.url | ld-bp1z3506imz2f****-proxy-lindorm-pub.lindorm.rds.aliyuncs.com:30020 | The public endpoint that is used to connect to LindormTable. Enter the public endpoint that is used to connect to LindormTable of a Lindorm instance by calling an HBase Java API operation. The endpoint can be used to connect only to the wide table engine of the same Lindorm instance. |
| spark.sql.catalog.lindorm_table.username | The default username is root. | The username that is used to connect to LindormTable. |
| spark.sql.catalog.lindorm_table.password | The default password is root. | The password that is used to connect to LindormTable. |

- Create a database table based on the schema that is specified in the SQL statement.

- Run the mvn clean package command to package the job.

- Run the following command to submit the job:

    # To add a JAR file as a dependency for the job, use the --jars option.
    # To obtain information about other parameters, run spark-submit -h.
    $SPARK_HOME/bin/spark-submit \
      --class com.aliyun.lindorm.ldspark.examples.LindormSparkSQLExample \
      lindorm-spark-examples/target/lindorm-spark-examples-1.0-SNAPSHOT.jar

If you do not specify the running mode of the Spark job when you submit the Spark job, the Spark job is run locally by default. You can also specify the spark.master=local[*] parameter to run the Spark job locally.

- After the Spark job is developed locally, you can submit it as a JAR job to run it on the cloud. For more information, see Step 1: Add dependencies. In this case, change the endpoint that is used in the Spark job to the virtual private cloud (VPC) endpoint of LDPS.
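The local submission described in this section can be sketched as a shell function that makes the running mode explicit. This is a sketch under stated assumptions, not the documented procedure: the function name submit_local and the DRY_RUN variable are hypothetical, and passing the LindormTable parameters through --conf is an assumption, because the sample project may read them from its own code or configuration. The --master, --conf, and --class options are standard spark-submit options.

```shell
# Hypothetical wrapper: submit the sample job in local mode.
# Arguments: <LindormTable endpoint> <username> <password> <path to JAR>
# Set DRY_RUN=1 to print the command instead of running it.
submit_local() {
  run=""
  if [ "${DRY_RUN:-0}" = "1" ]; then
    run="echo"
  fi
  # $run is intentionally unquoted: when it is empty, it expands to nothing
  # and spark-submit itself becomes the command.
  $run "${SPARK_HOME:?SPARK_HOME is not set}/bin/spark-submit" \
    --master 'local[*]' \
    --conf "spark.sql.catalog.lindorm_table.url=$1" \
    --conf "spark.sql.catalog.lindorm_table.username=$2" \
    --conf "spark.sql.catalog.lindorm_table.password=$3" \
    --class com.aliyun.lindorm.ldspark.examples.LindormSparkSQLExample \
    "$4"
}
```

With DRY_RUN=1, the function only prints the assembled command, which is useful for checking the endpoint and the JAR path before the job starts. Note that a password passed on the command line is visible in the process list of the local machine.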

Debug a Spark job

This section provides an example of how to use IntelliJ IDEA to debug a Spark job. For more information about the job that is used, see Sample Spark job. You can download IntelliJ IDEA from the official website of IntelliJ IDEA.

- Perform Step 1 to Step 3 that are described in the "Run a Spark job locally" section.

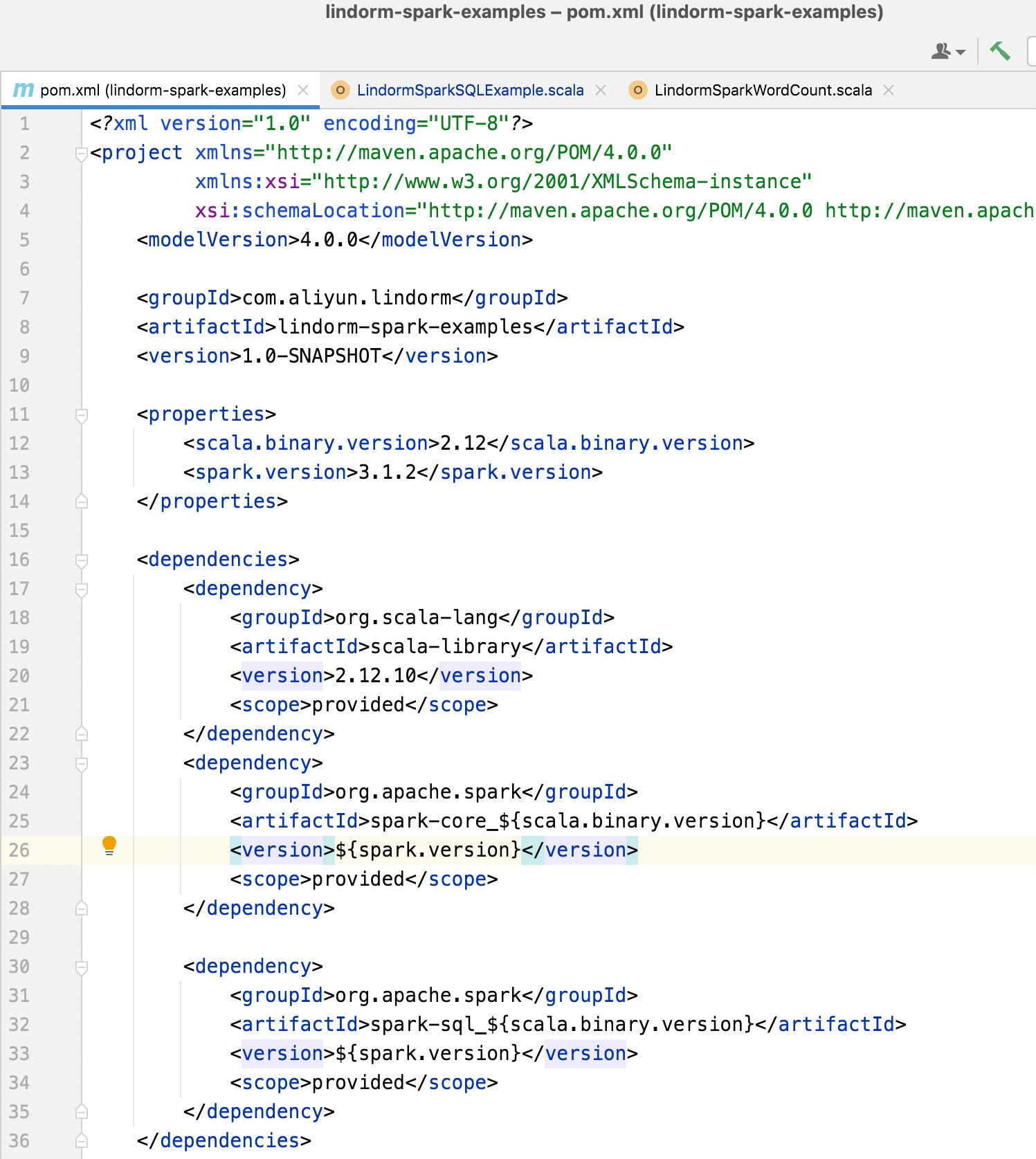

- Open IntelliJ IDEA. Set the dependencies for the Spark job in the pom.xml file to <scope>provided</scope>.

- Add $SPARK_HOME/jars to project dependencies.

- In the top navigation bar of IntelliJ IDEA, choose .

- In the left-side navigation pane, choose , and click the add sign (+) to add a Java class library.

- Select $SPARK_HOME/jars.

- Click OK.

- Run the Spark job. When the Spark job is running, you can open the Spark UI at the URL that is printed in the log.

2022/01/14 15:27:58 INFO SparkUI: Bound SparkUI to 0.0.0.0, and started at http://30.25.XX.XX:4040
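If the job log is long, the Spark UI address can be pulled out of it with a short filter. The following is a minimal sketch: the function name spark_ui_url is hypothetical, and the sketch assumes that the log line has the shape shown above, with the URL following the words "started at".

```shell
# Hypothetical helper: read a job log from standard input and print the
# first Spark UI URL that it contains, if any.
spark_ui_url() {
  sed -n 's/.*SparkUI.*started at \(http[^ ]*\).*/\1/p' | head -n 1
}
```

For example, if you save the output of the job to a file, you can pass that file to the function on standard input and open the printed URL in a browser while the job is running.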