In audio and video systems, transcoding consumes a large amount of computing power. You can use Function Compute and CloudFlow to build an elastic and highly available audio and video processing system in a serverless architecture. This topic describes the differences in engineering efficiency, O&M, performance, and costs between traditional and serverless solutions for audio and video processing. It also describes how to build and use a serverless audio and video processing system.

Background

You can use dedicated cloud-based transcoding services. However, you may want to build your own transcoding service in certain scenarios. Self-managed transcoding services can help meet the following requirements:

Auto scaling

You want to improve the elasticity of your video processing service.

For example, you have deployed a video processing service on a virtual machine or container platform using FFmpeg, and you want to enhance its elasticity and availability.

Efficient engineering

You want to process multiple videos simultaneously.

You want to process multiple oversized videos within a short period of time.

For example, every Friday, hundreds of 1080p videos, each larger than 4 GB, are produced and need to be processed within a few hours.

Custom processing

You have advanced custom processing requirements.

For example, you want to record the transcoding details in your database each time a video is transcoded. Or you may want trending videos to be automatically prefetched to Alibaba Cloud CDN (CDN) nodes once they are transcoded to relieve the pressure on origin servers.

You want to convert audio formats, customize sampling rates, or filter out background noise.

You want to directly read and process source files.

For example, your video files are stored in File Storage NAS (NAS) or on disks that are attached to Elastic Compute Service (ECS) instances, and you want your video processing system to directly read and process those files from their current storage locations, eliminating the need to migrate the files to an Object Storage Service (OSS) bucket first.

You want to convert the formats of videos and add more features.

For example, you want to add transcoding parameter adjustment and other features to your video processing system, which can already transcode videos, add watermarks, and generate GIF thumbnails. You also want to make sure that the existing online services provided by the system remain unaffected when new features are released.

Cost control

You want to implement simple transcoding or lightweight media processing services.

For example, you want to generate a GIF image based on the first few frames of a video, or query the duration of an audio file or a video file. In this case, building your own media processing system is more cost-effective.

You can use a traditional self-managed architecture or a serverless solution to build your own transcoding services. This topic compares the two options and describes the procedure of deploying a serverless solution.

Solutions

Traditional self-managed architecture

Cloud computing products have matured steadily. You can use Alibaba Cloud services, such as ECS, OSS, and CDN, to build an audio and video processing system that stores audio and video files and accelerates their playback.

Serverless solutions

Simple video processing system

The following figure shows the architecture of a solution that you can use to perform simple processing on videos.

When you upload a video to OSS, OSS automatically triggers the execution of a function, which then calls FFmpeg to transcode the video and saves the transcoded video to OSS.

For more information about the demo and procedure of the simple video processing system, see Simple video processing system.

Video processing workflow system

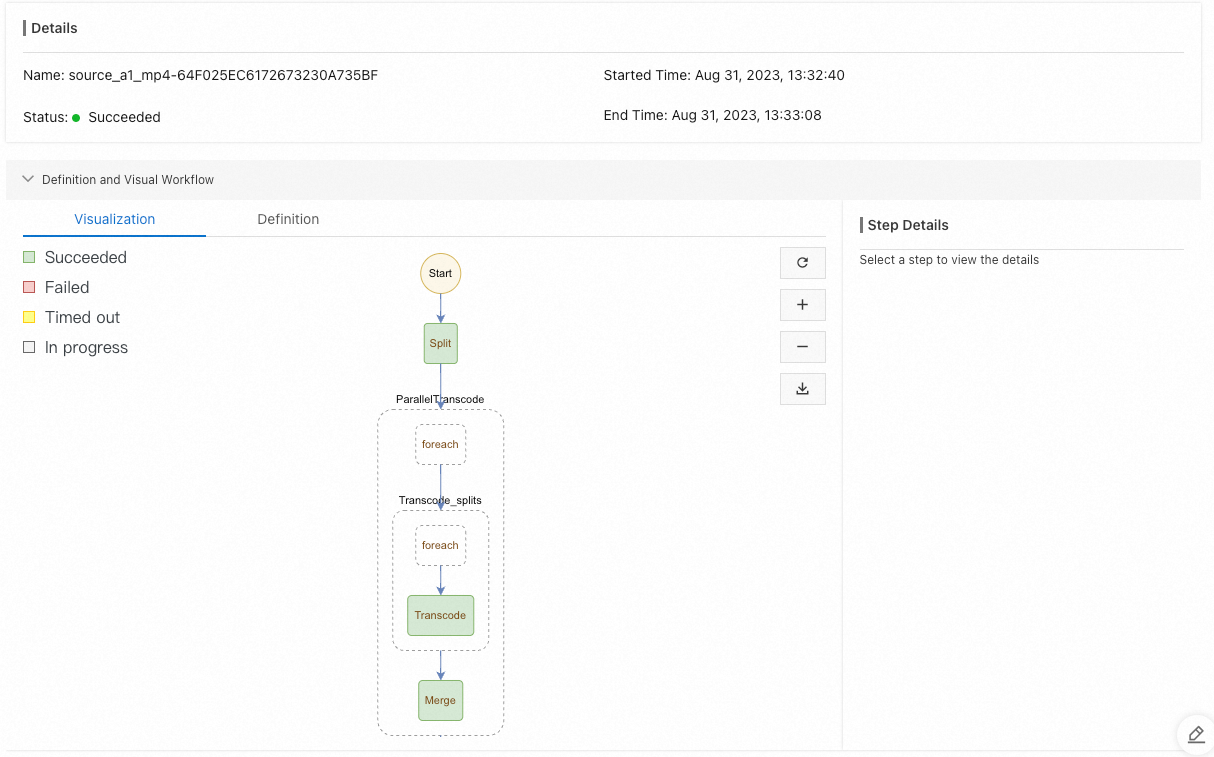

If you want to accelerate the transcoding of large videos or perform complex operations on videos, use CloudFlow to orchestrate functions and build a powerful video processing system. The following figure shows the architecture of the solution.

When you upload an MP4 video to OSS, OSS triggers the execution of a function, which then starts a CloudFlow workflow to transcode the video. You can use this solution in the following scenarios:

A video can be transcoded to various formats at the same time and processed based on custom requirements, such as adding watermarks to the video or updating video details in the database after processing.

When multiple files are uploaded to OSS at the same time, Function Compute performs auto scaling to process the files in parallel and simultaneously transcode them into multiple formats.

You can use NAS and video segmentation to transcode oversized videos. Each oversized video is first segmented, then the segments are transcoded in parallel, and finally, they are merged back into a single video. Proper segmentation intervals significantly improve the transcoding speed of large videos.

Note: Video segmentation refers to splitting a video stream into multiple segments at specified time intervals and recording information about those segments in a generated index file.
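The split, parallel transcode, and merge flow described above can be sketched as FFmpeg command builders. This is a minimal sketch under stated assumptions, not the demo's actual code: it assumes the segments are stream-copied with FFmpeg's segment muxer and rejoined with the concat demuxer, and the helper names (`split_cmd`, `transcode_cmd`, `concat_list`, `merge_cmd`, `expected_pieces`) and file names are hypothetical.

```python
import math

# Hypothetical helpers: they only build FFmpeg argument lists. Run each list with
# subprocess.run(cmd, check=True) in an environment where ffmpeg is installed.


def split_cmd(src, interval_s, pattern, index_file):
    """Cut src into numbered segments of about interval_s seconds without re-encoding.

    The segment muxer cuts on keyframes, so actual segment lengths can vary.
    pattern is a printf-style name such as "seg_%03d.mp4"; index_file receives
    the list of generated segments (the index file mentioned in the note).
    """
    return ["ffmpeg", "-y", "-i", src, "-map", "0", "-c", "copy",
            "-f", "segment", "-segment_time", str(interval_s),
            "-segment_list", index_file, "-reset_timestamps", "1", pattern]


def transcode_cmd(segment, out):
    """Transcode one segment; each segment is an independent job that can run in parallel."""
    return ["ffmpeg", "-y", "-i", segment, out]


def concat_list(paths):
    """Build the input file for FFmpeg's concat demuxer: one line per segment, in order."""
    return "".join("file '{}'\n".format(p) for p in paths)


def merge_cmd(list_file, out):
    """Join the transcoded segments listed in list_file without re-encoding them."""
    return ["ffmpeg", "-y", "-f", "concat", "-safe", "0",
            "-i", list_file, "-c", "copy", out]


def expected_pieces(duration_s, interval_s):
    """Approximate number of segments; keyframe placement can change it slightly."""
    return math.ceil(duration_s / interval_s)
```

For example, an 89-second video split at a 30-second interval yields about `expected_pieces(89, 30)`, that is, 3 segments. Because splitting and merging only copy streams, almost all of the compute goes into the per-segment transcode jobs, which is the part that runs in parallel.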

For more information about the demo and procedure of the video processing workflow system, see Video processing workflow system.

Benefits of a serverless solution

Improved engineering efficiency

| Item | Serverless solution | Traditional self-managed architecture |
| --- | --- | --- |
| Infrastructure | None. | You must purchase and manage infrastructure resources. |
| Development efficiency | You can focus on the development of business logic and use Serverless Devs to orchestrate and deploy resources. | In addition to developing business logic, you must build an online runtime environment, including software installation, service configurations, and security updates. |
| Parallel and distributed video processing | After the functions are orchestrated by CloudFlow, multiple videos can be processed in parallel, and a large video can be segmented for distributed processing. You do not need to worry about stability and monitoring, because Alibaba Cloud takes care of them. | Strong development capabilities and a sound monitoring system are required to ensure the stability of the video processing system. |
| Training costs | You only need to write code in your programming language and be familiar with FFmpeg. | In addition to programming languages and FFmpeg, you may also need to use Kubernetes and ECS, which requires a deeper understanding of services, terms, and parameters. |
| Business cycle | About three person-days: two for development and debugging, and one for stress testing and observation. | At least 30 person-days (estimated) for hardware procurement, software and environment configurations, system development, testing, monitoring and alerting configurations, and canary release. This estimate does not include business logic development. |

Auto scaling and O&M-free

| Item | Serverless solution | Traditional self-managed architecture |
| --- | --- | --- |
| Elasticity and high availability | Function Compute supports millisecond-level auto scaling and can quickly scale out the underlying resources to handle burst traffic. This O&M-free system delivers excellent transcoding performance. | You must manage a Server Load Balancer (SLB) instance to implement auto scaling, and scaling is slower than in Function Compute. |
| Monitoring and alerting metrics | Fine-grained metrics are provided for monitoring executions in CloudFlow and Function Compute. You can query the latency and logs of each function execution, and the solution provides a more comprehensive monitoring and alerting mechanism. | Only metrics on auto scaling or containers are provided. |

Excellent transcoding performance

For example, a cloud service takes 188 seconds to convert an 89-second MOV video file to the MP4 format, which is a typical transcoding process. This duration, 188 seconds, is marked as T for reference. The following formula is used to calculate the performance acceleration rate: Performance acceleration rate = T/Function Compute transcoding duration.

| Video segmentation interval (s) | Function Compute transcoding duration (s) | Performance acceleration rate (%) |
| --- | --- | --- |
| 45 | 160 | 117.5 |
| 25 | 100 | 188 |
| 15 | 70 | 268.6 |
| 10 | 45 | 417.8 |
| 5 | 35 | 537.1 |
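The acceleration rates in the table follow directly from the formula. The following sketch recomputes them from T = 188 seconds and the measured Function Compute durations in the table; the function name `acceleration_rate` is illustrative.

```python
T = 188  # seconds the reference cloud service needs for the 89-second MOV file (from the text)

# Segmentation interval (s) -> Function Compute transcoding duration (s), copied from the table.
FC_DURATIONS = {45: 160, 25: 100, 15: 70, 10: 45, 5: 35}


def acceleration_rate(reference_s, fc_s):
    """Performance acceleration rate in percent: T / FC transcoding duration x 100."""
    return round(reference_s * 100 / fc_s, 1)


for interval, duration in FC_DURATIONS.items():
    print(interval, duration, acceleration_rate(T, duration))
```

Shorter intervals mean more, smaller segments transcoded in parallel, which is why the rate grows as the interval shrinks in these measurements.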

The performance acceleration rates in the preceding table apply only to CPU instances. If you use GPU-accelerated instances, see Best practices for audio and video processing.

High cost-effectiveness

In some scenarios, Function Compute can reduce video processing costs. The serverless solution can even cost less than dedicated video transcoding services from other cloud vendors.

This section uses the conversion between MP4 and FLV files to compare the costs of using Function Compute and another cloud service. In this example, the memory capacity of Function Compute is set to 3 GB. The following tables compare the costs.

The following formula is used to calculate the cost reduction rate: Cost reduction rate = (Cost of another cloud service - Function Compute transcoding cost)/Cost of another cloud service.

Table 1. MP4 to FLV

| Resolution | Bitrate (KB/s) | Frame rate (FPS) | Function Compute transcoding duration (s) | Function Compute transcoding cost | Cost of another cloud service | Cost reduction rate |
| --- | --- | --- | --- | --- | --- | --- |
| Standard definition (SD): 640 × 480 pixels | 889 | 24 | 11.2 | 0.003732288 | 0.032 | 88.3% |
| High definition (HD): 1280 × 720 pixels | 1963 | 24 | 20.5 | 0.00683142 | 0.065 | 89.5% |
| Ultra HD: 1920 × 1080 pixels | 3689 | 24 | 40 | 0.0133296 | 0.126 | 89.4% |
| 4K: 3840 × 2160 pixels | 11185 | 24 | 142 | 0.04732008 | 0.556 | 91.5% |

Table 2. FLV to MP4

| Resolution | Bitrate (KB/s) | Frame rate (FPS) | Function Compute transcoding duration (s) | Function Compute transcoding cost | Cost of another cloud service | Cost reduction rate |
| --- | --- | --- | --- | --- | --- | --- |
| Standard definition (SD): 640 × 480 pixels | 712 | 24 | 34.5 | 0.01149678 | 0.032 | 64.1% |
| High definition (HD): 1280 × 720 pixels | 1806 | 24 | 100.3 | 0.033424 | 0.065 | 48.6% |
| Ultra HD: 1920 × 1080 pixels | 3911 | 24 | 226.4 | 0.0754455 | 0.126 | 40.1% |
| 4K: 3840 × 2160 pixels | 15109 | 24 | 912 | 0.30391488 | 0.556 | 45.3% |
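The Function Compute costs in both tables are consistent with transcoding duration × 3 GB × 0.00011108 per GB-second. That unit price is inferred from the table values; it is not stated in this topic and the currency is not specified, so treat the following sketch as a way to reproduce the tables, not as a price reference. It computes the Function Compute cost and the cost reduction rate defined above.

```python
MEMORY_GB = 3  # memory capacity used in this example
# Inferred from the table values (cost / duration / 3 GB); not an official price.
UNIT_PRICE_PER_GB_S = 0.00011108


def fc_cost(duration_s):
    """Function Compute cost = duration (s) x memory (GB) x unit price per GB-second."""
    return duration_s * MEMORY_GB * UNIT_PRICE_PER_GB_S


def cost_reduction_rate(other_cost, fc):
    """(Cost of another cloud service - FC cost) / cost of another cloud service, in percent."""
    return (other_cost - fc) / other_cost * 100


# (FC transcoding duration in seconds, cost of another cloud service), from Table 1.
MP4_TO_FLV = [(11.2, 0.032), (20.5, 0.065), (40, 0.126), (142, 0.556)]

for duration, other in MP4_TO_FLV:
    cost = fc_cost(duration)
    print(duration, round(cost, 8), round(cost_reduction_rate(other, cost), 1))
```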

The other cloud service follows standard transcoding billing rules, under which video durations are rounded up to the nearest full minute for billing. In this example, the billing duration is 2 minutes. Even if you were charged for 1.5 minutes instead of 2, the difference in payment would be small, and the cost reduction rate would vary by less than 10%.

The preceding tables compare the prices for transcoding videos between the FLV and MP4 formats. Transcoding FLV videos is more complex than transcoding MP4 videos. In both transcoding directions, the solution based on Function Compute and CloudFlow costs less in computing resources than the solution provided by the other cloud service provider. In practice, the cost reduction is typically greater than the tables show, for the following reasons:

The test videos have high bitrates, whereas most videos in actual use are standard definition (SD) or low definition (LD) and have lower bitrates. Such videos require fewer computing resources, so Function Compute transcodes them in less time and at a lower cost. However, the billing policies of general cloud transcoding services do not vary with video quality or bitrate.

For videos with certain resolutions, the billing rules adopted by general cloud transcoding services have a significant adverse effect on the cost-effectiveness. For example, a video with a resolution of 856 × 480 pixels is billed as an HD video whose resolution is 1280 × 720 pixels. Similarly, a video with a resolution of 1368 × 768 pixels is billed as an ultra HD video whose resolution is 1920 × 1080 pixels. In these cases, the unit price for video transcoding is greatly increased, whereas the increase in the actually consumed computing resources is probably less than 30%. Function Compute, on the other hand, allows you to pay only for the computing resources that you actually use.
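The two billing effects described above, rounding up to whole minutes and billing by resolution tier, can be modeled as follows. This is a hypothetical model: the tier boundaries are assumed to be compared by pixel count, which reproduces the two examples in the text (856 × 480 billed as HD and 1368 × 768 billed as ultra HD) but is not necessarily any vendor's exact rule.

```python
import math

# Hypothetical tier table (assumption): a video is billed at the first tier whose
# reference pixel count is at least the video's pixel count.
TIERS = [("SD", 640, 480), ("HD", 1280, 720), ("Ultra HD", 1920, 1080), ("4K", 3840, 2160)]


def billing_tier(width, height):
    """Return the billing tier for a resolution under the assumed pixel-count rule."""
    pixels = width * height
    for name, tier_w, tier_h in TIERS:
        if pixels <= tier_w * tier_h:
            return name
    raise ValueError("resolution is above the largest tier")


def billed_minutes(duration_s):
    """Standard transcoding billing rounds the duration up to whole minutes."""
    return math.ceil(duration_s / 60)
```

Under this model, an 89-second video is billed as 2 minutes at its tier price, while Function Compute charges only for the seconds and memory actually used.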

Deployment

This section describes how to deploy a simple video processing system and a video processing workflow system.

Simple video processing system

Prerequisites

Function Compute is activated. For more information, see Step 1: Activate Function Compute.

Object Storage Service is activated. For more information, see Create buckets.

Procedure

Create a service.

Log on to the Function Compute console. In the left-side navigation pane, click Services & Functions.

In the top navigation bar, select a region. On the Services page, click Create Service.

In the Create Service panel, enter a service name and description, configure the parameters based on your business requirements, and then click OK.

In this example, the service role is set to AliyunFCDefaultRole, and the AliyunOSSFullAccess policy is attached to the role. For more information about how to create a service, see Create a service.

Create a function.

On the Functions page, click Create Function.

On the Create Function page, select a method to create the function, configure the following parameters, and then click Create.

Method to create the function: Use Built-in Runtime.

Basic Settings: Configure the basic information of the function, including Function Name and Handler Type. Set Handler Type to Event Handler.

Code: Set Runtime to Python 3.9 and Code Upload Method to Use Sample Code.

Advanced Settings: The processing of video files is time-consuming. In this example, vCPU Capacity is set to 4 vCPUs, Memory Capacity is set to 8 GB, Size of Temporary Disk is set to 10 GB, and Execution Timeout Period is set to 7200 seconds. Make sure to consider the sizes of your videos when configuring the preceding parameters.

Retain the default values for other parameters. For more information about how to create a function, see Create a function.

Create an OSS trigger.

On the Function Details page, click the Triggers tab, select a version or alias from the Version or Alias drop-down list, and then click Create Trigger.

In the Create Trigger panel, specify related parameters and click OK.

| Parameter | Description | Example |
| --- | --- | --- |
| Trigger Type | Select the type of the trigger. In this example, OSS is selected. | OSS |
| Name | Enter the trigger name. | oss-trigger |
| Version or Alias | Specify the version or alias. The default value is LATEST. If you want to create a trigger for another version or alias, select the version or alias from the Version or Alias drop-down list on the Function Details page. For more information about versions and aliases, see Manage versions and Manage aliases. | LATEST |
| Bucket Name | Select the bucket that you created. | testbucket |
| Object Prefix | Enter the prefix of the object names that you want to match. We recommend that you configure Object Prefix and Object Suffix to prevent unexpected costs caused by recursive invocations, for example, when the output of the function triggers the same function again. If you specify the same event type for different triggers of a bucket, the prefix and suffix cannot be the same. For more information, see Triggering rules. Important: The object prefix cannot start with a forward slash (/). Otherwise, the OSS trigger does not work. | source |
| Object Suffix | Enter the suffix of the object names that you want to match. We recommend that you configure Object Prefix and Object Suffix to prevent unexpected costs caused by recursive invocations. If you specify the same event type for different triggers of a bucket, the prefix and suffix cannot be the same. For more information, see Triggering rules. | mp4 |
| Trigger Event | Select one or more trigger events. For more information about the event types of OSS, see OSS events. | oss:ObjectCreated:PutObject, oss:ObjectCreated:PostObject, oss:ObjectCreated:CompleteMultipartUpload |
| Role Name | Specify the name of a Resource Access Management (RAM) role. In this example, AliyunOSSEventNotificationRole is selected. Note: After you configure the preceding parameters, click OK. If this is the first time that you create a trigger of this type, click Authorize Now in the message that appears. | AliyunOSSEventNotificationRole |
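To see why Object Prefix and Object Suffix prevent recursive invocations, the following sketch models the filter as a plain string prefix and suffix match, using the example values from the table. This is a simplified assumption; see Triggering rules for the exact matching behavior. The function name `matches_trigger` is hypothetical.

```python
def matches_trigger(key, prefix="source", suffix="mp4"):
    """Simplified model of the OSS trigger filter: key must start with prefix and end with suffix."""
    return key.startswith(prefix) and key.endswith(suffix)


# The uploaded source video fires the trigger.
print(matches_trigger("source/a.mp4"))
# The function writes its output to dest/, which does not match, so no loop occurs.
print(matches_trigger("dest/a.flv"))
```

With an empty prefix and suffix, the output object `dest/a.flv` would also match and invoke the function again, which is the kind of loop that generates unexpected costs.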

Write function code.

On the Function Details page, click the Code tab and then write code in the code editor.

The function converts MP4 files to the FLV format and stores the FLV files in the dest directory of the OSS bucket. In this example, Python is used. The following sample code provides an example:

```python
# -*- coding: utf-8 -*-
import json
import logging
import os
import shutil
import subprocess

import oss2

logging.getLogger("oss2.api").setLevel(logging.ERROR)
logging.getLogger("oss2.auth").setLevel(logging.ERROR)
LOGGER = logging.getLogger()


def get_fileNameExt(filename):
    # Split "source/a.mp4" into ("a", ".mp4").
    (_, tempfilename) = os.path.split(filename)
    (shortname, extension) = os.path.splitext(tempfilename)
    return shortname, extension


def handler(event, context):
    LOGGER.info(event)
    evt = json.loads(event)
    evt = evt["events"]
    oss_bucket_name = evt[0]["oss"]["bucket"]["name"]
    object_key = evt[0]["oss"]["object"]["key"]

    output_dir = "dest"
    dst_format = "flv"
    shortname, _ = get_fileNameExt(object_key)

    # Access OSS over the internal endpoint with the temporary credentials of the service role.
    creds = context.credentials
    auth = oss2.StsAuth(creds.accessKeyId, creds.accessKeySecret, creds.securityToken)
    oss_client = oss2.Bucket(auth, 'oss-%s-internal.aliyuncs.com' % context.region, oss_bucket_name)

    if not oss_client.object_exists(object_key):
        raise Exception("object {} does not exist".format(object_key))

    # FFmpeg reads the source directly from a signed URL that is valid for 6 hours.
    input_path = oss_client.sign_url('GET', object_key, 6 * 3600)

    rid = context.request_id
    # M3U8 output needs special handling: transcode to TS, then split into segments.
    if dst_format == "m3u8":
        return handle_m3u8(rid, oss_client, input_path, shortname, output_dir)
    else:
        return handle_common(rid, oss_client, input_path, shortname, output_dir, dst_format)


def handle_m3u8(request_id, oss_client, input_path, shortname, output_dir):
    ts_dir = '/tmp/ts'
    if os.path.exists(ts_dir):
        shutil.rmtree(ts_dir)
    os.mkdir(ts_dir)
    transcoded_filepath = os.path.join('/tmp', shortname + '.ts')
    split_transcoded_filepath = os.path.join(ts_dir, shortname + '_%03d.ts')
    cmd1 = ['ffmpeg', '-y', '-i', input_path, '-c:v', 'libx264', transcoded_filepath]
    cmd2 = ['ffmpeg', '-y', '-i', transcoded_filepath, '-c', 'copy', '-map', '0',
            '-f', 'segment', '-segment_list', os.path.join(ts_dir, 'playlist.m3u8'),
            '-segment_time', '10', split_transcoded_filepath]
    try:
        subprocess.run(cmd1, stdout=subprocess.PIPE, stderr=subprocess.PIPE, check=True)
        subprocess.run(cmd2, stdout=subprocess.PIPE, stderr=subprocess.PIPE, check=True)

        # Upload the playlist and every TS segment to dest/<shortname>/.
        for filename in os.listdir(ts_dir):
            filepath = os.path.join(ts_dir, filename)
            filekey = os.path.join(output_dir, shortname, filename)
            oss_client.put_object_from_file(filekey, filepath)
            os.remove(filepath)
            print("Uploaded {} to {}".format(filepath, filekey))
    except subprocess.CalledProcessError as exc:
        # If transcoding fails, raise an exception so that a configured failure
        # destination (for example, a dest-fail function) can be invoked.
        raise Exception(request_id + " transcode failure, detail: " + str(exc)
                        + " " + exc.stderr.decode("utf-8", "replace"))
    finally:
        if os.path.exists(ts_dir):
            shutil.rmtree(ts_dir)
        # Remove the intermediate TS file.
        if os.path.exists(transcoded_filepath):
            os.remove(transcoded_filepath)

    return {}


def handle_common(request_id, oss_client, input_path, shortname, output_dir, dst_format):
    transcoded_filepath = os.path.join('/tmp', shortname + '.' + dst_format)
    if os.path.exists(transcoded_filepath):
        os.remove(transcoded_filepath)
    cmd = ["ffmpeg", "-y", "-i", input_path, transcoded_filepath]
    try:
        subprocess.run(cmd, stdout=subprocess.PIPE, stderr=subprocess.PIPE, check=True)
        oss_client.put_object_from_file(
            os.path.join(output_dir, shortname + '.' + dst_format), transcoded_filepath)
    except subprocess.CalledProcessError as exc:
        # If transcoding fails, raise an exception so that a configured failure
        # destination (for example, a dest-fail function) can be invoked.
        raise Exception(request_id + " transcode failure, detail: " + str(exc)
                        + " " + exc.stderr.decode("utf-8", "replace"))
    finally:
        if os.path.exists(transcoded_filepath):
            os.remove(transcoded_filepath)

    return {}
```

Click Deploy.

Test the function code.

You can configure function input parameters to simulate OSS events and verify your code. In actual operations, the function is automatically triggered when a specified OSS event occurs.

On the Function Details page, click the Code tab, click the icon next to Test Function, and then select Configure Test Parameters from the drop-down list. In the Configure Test Parameters panel, click the Create New Test Event or Modify Existing Test Event tab, specify Event Name and the event content, and then click OK.

The following sample code provides an example of event configurations. For more information about the event parameters, see Step 2: Configure input parameters of the function.

```json
{
  "events": [
    {
      "eventName": "oss:ObjectCreated:CompleteMultipartUpload",
      "eventSource": "acs:oss",
      "eventTime": "2022-08-13T06:45:43.000Z",
      "eventVersion": "1.0",
      "oss": {
        "bucket": {
          "arn": "acs:oss:cn-hangzhou:123456789:testbucket",
          "name": "testbucket",
          "ownerIdentity": "164901546557****"
        },
        "object": {
          "deltaSize": 122539,
          "eTag": "688A7BF4F233DC9C88A80BF985AB****",
          "key": "source/a.mp4",
          "size": 122539
        },
        "ossSchemaVersion": "1.0",
        "ruleId": "9adac8e253828f4f7c0466d941fa3db81161****"
      },
      "region": "cn-hangzhou",
      "requestParameters": {
        "sourceIPAddress": "140.205.XX.XX"
      },
      "responseElements": {
        "requestId": "58F9FF2D3DF792092E12044C"
      },
      "userIdentity": {
        "principalId": "164901546557****"
      }
    }
  ]
}
```

Click Test Function. On the Code tab, view the execution result.
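Before you click Test Function, you can check locally which bucket and object the handler reads and which key it writes. This sketch reproduces only the event-parsing and output-naming logic of the sample code for the default FLV output; `parse_oss_event` and `output_key` are hypothetical helper names.

```python
import json
import os


def parse_oss_event(event):
    """Return (bucket, key) from the first record, as the sample handler does.

    event can be str or bytes; json.loads accepts both.
    """
    record = json.loads(event)["events"][0]
    return record["oss"]["bucket"]["name"], record["oss"]["object"]["key"]


def output_key(object_key, output_dir="dest", dst_format="flv"):
    """Key that handle_common writes: <output_dir>/<file name without extension>.<dst_format>."""
    shortname, _ = os.path.splitext(os.path.basename(object_key))
    return output_dir + "/" + shortname + "." + dst_format
```

For the sample test event, `parse_oss_event` returns `("testbucket", "source/a.mp4")`, and the transcoded file is written to `dest/a.flv`.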

Video processing workflow system

Prerequisites

Activate the required services

Function Compute is activated. For more information, see Step 1: Activate Function Compute.

Object Storage Service is activated. For more information, see Create buckets.

CloudFlow is activated. For more information, see Activate CloudFlow.

File Storage NAS is activated. For more information, see Activate NAS.

Virtual Private Cloud is activated. For more information, see Activate VPC.

Configure a service role

AliyunFCDefaultRole: The default role of Function Compute. You must configure this role when you create a service. Attach the AliyunOSSFullAccess, AliyunFnFFullAccess, and AliyunFCInvocationAccess policies to this role so that it can invoke functions, manage workflows, and manage OSS.

AliyunOSSEventNotificationRole: The default role that is used by OSS to send event notifications.

fnf-execution-default-role: The role required to create and manage workflows. You must attach the AliyunFCInvocationAccess and AliyunFnFFullAccess policies to this role.

Install and configure Serverless Devs

Serverless Devs and dependencies are installed. For more information, see Install Serverless Devs and Docker.

Serverless Devs is configured. For more information, see Configure Serverless Devs.

Procedure

This solution implements a video processing system by using CloudFlow to orchestrate functions. Code and workflows of multiple functions need to be developed and configured. In this example, Serverless Devs is used to deploy the system.

Run the following command to initialize the application:

```shell
s init video-process-flow -d video-process-flow
```

The following table describes the items to be configured. Specify the items based on your business requirements.

| Parameter | Example |
| --- | --- |
| Region ID | cn-hangzhou |
| Service | video-process-flow-demo |
| Alibaba Cloud Resource Name (ARN) of a RAM role | acs:ram::10343546****:role/aliyunfcdefaultrole |
| OSS bucket name | testbucket |
| Prefix | source |
| Path to save transcoded videos | dest |
| ARN of the RAM role associated with an OSS trigger | acs:ram::10343546****:role/aliyunosseventnotificationrole |
| Segmentation interval (s) | 30 |
| Video format after transcoding | mp4, flv, avi |
| Name of a workflow | video-process-flow |
| ARN of the RAM role associated with the workflow | acs:ram::10343546****:role/fnf-execution-default-role |
| Credential alias (prompt: please select credential alias) | default |
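To estimate how much parallelism the Segmentation interval and Video format after transcoding settings produce, the following sketch counts transcode tasks for one video. It assumes that each segment is transcoded to each target format by a separate invocation; the demo's actual orchestration may group the work differently, and `plan_transcode_tasks` is a hypothetical name.

```python
import math


def plan_transcode_tasks(duration_s, interval_s, formats):
    """List (segment index, target format) pairs, one per assumed transcode invocation."""
    count = math.ceil(duration_s / interval_s)  # approximate; keyframes can shift segment boundaries
    return [(i, fmt) for fmt in formats for i in range(count)]


tasks = plan_transcode_tasks(89, 30, ["mp4", "flv", "avi"])
print(len(tasks), tasks[:3])
```

With the example settings (30-second interval, three formats), an 89-second video fans out into 3 × 3 = 9 transcode tasks that Function Compute can run in parallel.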

Run the following command to go to the project and deploy it:

```shell
cd video-process-flow && s deploy -y
```

The following content is returned if the deployment is successful.

```
[2023-08-31 13:22:21] [INFO] [S-CORE] - Project video-demo-flow successfully to execute
fc-video-demo-split:
  region: cn-hangzhou
  service:
    name: video-process-flow-wg76
  function:
    name: split
    runtime: python3
    handler: index.handler
    memorySize: 3072
    timeout: 600
fc-video-demo-transcode:
  region: cn-hangzhou
  service:
    name: video-process-flow-wg76
  function:
    name: transcode
    runtime: python3
    handler: index.handler
    memorySize: 3072
    timeout: 600
fc-video-demo-merge:
  region: cn-hangzhou
  service:
    name: video-process-flow-wg76
  function:
    name: merge
    runtime: python3
    handler: index.handler
    memorySize: 3072
    timeout: 600
fc-video-demo-after-process:
  region: cn-hangzhou
  service:
    name: video-process-flow-wg76
  function:
    name: after-process
    runtime: python3
    handler: index.handler
    memorySize: 512
    timeout: 120
fc-oss-trigger-trigger-fnf:
  region: cn-hangzhou
  service:
    name: video-process-flow-wg76
  function:
    name: trigger-fnf
    runtime: python3
    handler: index.handler
    memorySize: 128
    timeout: 120
  triggers:
    - type: oss
      name: oss-t
video-demo-flow:
  RegionId: cn-hangzhou
  Name: video-process-flow
```

Test the project.

Log on to the OSS console. Go to the source directory of testbucket and upload an MP4 file.

Log on to the CloudFlow console. On the Workflows page, click the target workflow. On the Execution Records tab, click the name of the target execution to view its process and status.

If the status of the execution is Execution Succeeded, go to the dest directory of testbucket to view the transcoded files.

If the transcoded files are there, the video processing system is working as expected.

References

FAQ

I have deployed a video processing system on a virtual machine or container platform by using FFmpeg. How can I improve the elasticity and availability of the system?

You can migrate your system from the virtual machine or container platform to Function Compute with little effort. Function Compute can run FFmpeg commands, so the reconstruction is inexpensive, and the migrated system inherits the elasticity and high availability of Function Compute.

What can I do if I need to process a large number of videos in parallel?

For more information about the deployment solution, see Video processing workflow system. When multiple videos are uploaded to OSS at the same time, Function Compute automatically scales out resources to process videos in parallel. For more information, see fc-fnf-video-processing.

Every Friday, hundreds of 1080p videos, each larger than 4 GB, are produced and need to be processed within a few hours. What should I do in this situation?

Video segmentation can greatly improve transcoding efficiency. You can set the segmentation interval so that each segment of an oversized video has adequate computing resources for transcoding. For more information about the deployment solution, see fc-fnf-video-processing.

I want to record the transcoding details in my database each time a video is transcoded. I also want trending videos to be automatically prefetched to CDN nodes once they are transcoded to relieve the pressure on origin servers. What should I do to achieve these goals?

For more information about the deployment solution, see Video processing workflow system. You can add custom steps to the workflow: for example, add preprocessing steps before transcoding begins, or add steps after transcoding completes, such as recording transcoding details in your database or prefetching trending videos to CDN.

My video processing system can transcode videos, add watermarks, and generate GIF thumbnails. Now, I want to add transcoding parameter adjustment and some other features to the system. I also want to make sure that the existing online services provided by the system remain unaffected when the new features are released.

For more information about the deployment solution, see Video processing workflow system. CloudFlow is used only for function orchestration. Therefore, you can focus on updating functions used for media processing. Function versions and aliases are also supported for you to better control canary releases. For more information, see Manage versions.

I want to generate a GIF image based on the first few frames of a video, and query the duration of an audio file or a video file. I would like to build my own media processing system to handle these simple transcoding and lightweight media processing requirements because it is more cost-effective. What should I do to achieve this goal?

Function Compute runs your custom code, so you can run specific FFmpeg commands to meet these requirements. For a typical sample project, see fc-oss-ffmpeg.
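The two tasks in the question map to one FFmpeg command and one ffprobe command. The following sketch builds them; the helper names and defaults (first 3 seconds, 10 frames per second, 320 pixels wide) are illustrative assumptions, and the ffmpeg and ffprobe binaries must be available in the function's runtime environment.

```python
def gif_cmd(src, out, seconds=3, fps=10, width=320):
    """FFmpeg command that turns the first `seconds` of src into a GIF.

    -t before -i limits how much input is read; the filter samples frames at
    `fps` and scales to `width` pixels wide, keeping the aspect ratio (-1).
    """
    return ["ffmpeg", "-y", "-t", str(seconds), "-i", src,
            "-vf", "fps={},scale={}:-1".format(fps, width), out]


def duration_cmd(src):
    """ffprobe command that prints only the container duration in seconds."""
    return ["ffprobe", "-v", "error", "-show_entries", "format=duration",
            "-of", "default=noprint_wrappers=1:nokey=1", src]


def parse_duration(output):
    """Convert the ffprobe output (a single number such as "89.120000") to float seconds."""
    return float(output.strip())
```

Run each command with `subprocess.run(cmd, capture_output=True, text=True, check=True)`, and pass the `stdout` of the duration command to `parse_duration`.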

My video files are stored in NAS or on disks attached to ECS instances, and I want my video processing system to directly read and process those files from their current storage locations, eliminating the need to migrate the files to an OSS bucket first.

You can integrate Function Compute with NAS to let Function Compute process the files stored in NAS. For more information, see Configure a NAS file system.
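After a NAS file system is mounted to the function, its files can be read like local files, so the function can pass a local path to FFmpeg instead of a signed OSS URL. The following sketch lists candidate video files under a mount directory; the mount path `/mnt/nas`, the extension set, and the name `find_videos` are hypothetical.

```python
from pathlib import Path

# Hypothetical mount directory configured for the function; use your own NAS mount point.
MOUNT_DIR = "/mnt/nas"
# Hypothetical set of extensions to treat as videos (compared case-insensitively).
VIDEO_SUFFIXES = {".mp4", ".mov", ".flv"}


def find_videos(mount_dir=MOUNT_DIR):
    """Recursively list video files under mount_dir, sorted for a stable processing order."""
    return sorted(p for p in Path(mount_dir).rglob("*")
                  if p.is_file() and p.suffix.lower() in VIDEO_SUFFIXES)
```

Each returned path can be used directly as the `-i` input of an FFmpeg command, so no copy to OSS is needed first.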