This topic describes common issues that you may encounter when you use the Terway or Flannel network plugin and explains how to resolve them. For example, this topic answers questions about how to select a network plugin, whether you can install third-party network plugins in a cluster, and how to plan a cluster network.

Index

Terway

How can I tell if Terway is in exclusive ENI mode or shared ENI mode?

How can I tell if Terway network acceleration is DataPathv2 or IPvlan+eBPF?

Does the routing in Terway DataPathv2 or IPvlan+eBPF mode bypass IPVS?

Can I switch the network plugin for an existing ACK cluster?

What should I do if a vSwitch runs out of IP resources in Terway network mode?

How do I choose between the Terway and Flannel network plugins for a Kubernetes cluster?

How do I enable in-cluster load balancing for a Terway IPvlan cluster?

How do I add a specific CIDR block to the whitelist for pods in an ACK cluster that uses Terway?

What is the number of pods supported in Terway network mode?

Pod creation fails with a "cannot find MAC address" error in Terway network mode

Flannel

How do I choose between the Terway and Flannel network plugins for a Kubernetes cluster?

In which scenarios do I need to configure multiple route tables for a cluster?

Why do pods fail to start and report the "no IP addresses available in range" error?

How do I change the number of node IPs, the Pod IP CIDR block, or the Service IP CIDR block?

kube-proxy

IPv6

Other

What are the main network modes of Terway?

Terway has two main network modes: shared elastic network interface (ENI) mode and exclusive ENI mode. For more information about each mode, see Shared ENI mode and exclusive ENI mode. Note that only the shared ENI mode of Terway supports network acceleration (DataPathv2 or IPvlan+eBPF). DataPathv2 is an upgraded version of the IPvlan+eBPF acceleration mode. In Terway v1.8.0 and later, DataPathv2 is the only available acceleration option when you create a cluster and install the Terway plugin.

How can I tell if Terway is in exclusive ENI mode or shared ENI mode?

In Terway v1.11.0 and later, Terway uses the shared ENI mode by default. You can enable the exclusive ENI mode by configuring the exclusive ENI network mode for a node pool.

In versions earlier than Terway v1.11.0, you can select either exclusive or shared ENI mode when you create a cluster. After the cluster is created, you can identify the mode as follows:

Exclusive ENI mode: The name of the Terway DaemonSet in the kube-system namespace is

terway-eni.Shared ENI mode: The name of the Terway DaemonSet in the kube-system namespace is

terway-eniip.

How can I tell if Terway network acceleration is DataPathv2 or IPvlan+eBPF?

Only the shared ENI mode of Terway supports network acceleration (DataPathv2 or IPvlan+eBPF). DataPathv2 is an upgraded version of the IPvlan+eBPF acceleration mode. In Terway v1.8.0 and later, DataPathv2 is the only available acceleration option when you create a cluster and install the Terway plugin.

You can check the eniip_virtual_type configuration in the eni-config ConfigMap in the kube-system namespace to determine whether network acceleration is enabled. The value is datapathv2 or ipvlan.

Does the routing in Terway DataPathv2 or IPvlan+eBPF mode bypass IPVS?

When you enable an acceleration mode (DataPathv2 or IPvlan+eBPF) for Terway, it uses a different traffic forwarding path than the regular shared ENI mode. In specific scenarios, such as a pod accessing an internal Service, traffic can bypass the node's network protocol stack and does not need to pass through the node's IPVS route. Instead, eBPF resolves the Service address to the address of a backend pod. For more information about traffic flows, see Network acceleration.

Can I switch the network plugin for an existing ACK cluster?

You can only select a network plugin (Terway or Flannel) when you create a cluster. The network plugin cannot be changed after the cluster is created. To use a different network plugin, you must create a new cluster. For more information, see Create an ACK managed cluster.

What should I do if the cluster cannot access the Internet after I add a vSwitch in Terway network mode?

Symptom

You manually add a vSwitch because pods have run out of IP resources. After adding the vSwitch, you discover that the cluster cannot access the Internet.

Cause

The vSwitch that provides IP addresses to the pods does not have Internet access.

Solution

You can use the SNAT feature of NAT Gateway to configure an SNAT rule for the vSwitch that provides IP addresses to the pods. For more information, see Enable Internet access for a cluster.

After manually upgrading the Flannel image version, how do I resolve the incompatibility with clusters of version 1.16 or later?

Symptom

After you upgrade the cluster to version 1.16, the cluster nodes enter the NotReady state.

Cause

This issue occurs because you manually upgraded the Flannel version but did not upgrade the Flannel configuration. As a result, the kubelet cannot recognize the new configuration.

Solution

Edit the Flannel configuration to add the

cniVersionfield.kubectl edit cm kube-flannel-cfg -n kube-systemAdd the

cniVersionfield to the configuration."name": "cb0", "cniVersion":"0.3.0", "type": "flannel",Restart Flannel.

kubectl delete pod -n kube-system -l app=flannel

How do I resolve the latency issue after a pod starts?

Symptom

After a pod starts, there is a delay before the network becomes available.

Cause

A configured Network Policy can cause latency. Disabling the Network Policy can resolve this issue.

Solution

Modify the Terway ConfigMap to add a configuration that disables NetworkPolicy.

kubectl edit cm -n kube-system eni-configAdd the following field to the configuration.

disable_network_policy: "true"Optional:If you are not using the latest version of Terway, upgrade it in the console.

Log on to the ACK console. In the navigation pane on the left, choose Clusters.

On the Clusters page, click the name of the target cluster. In the navigation pane on the left, click Add-ons.

On the Add-ons page, click the Networking tab, and then click Upgrade for the Terway add-on.

In the dialog box that appears, follow the prompts to complete the configuration and click OK.

Restart all Terway pods.

kubectl delete pod -n kube-system -l app=terway-eniip

How can I allow a pod to access the service it exposes?

Symptom

A pod cannot access the service it exposes. Access is intermittent or fails when the pod is scheduled to itself.

Cause

This can happen because loopback access is not enabled for the Flannel cluster.

Flannel versions earlier than v0.15.1.4-e02c8f12-aliyun do not allow loopback access. After you upgrade, loopback access remains disabled by default but can be enabled manually.

Loopback access is enabled by default only for new deployments of Flannel v0.15.1.4-e02c8f12-aliyun and later versions.

Solution

Use a Headless Service to expose and access the service. For more information, see Headless Services.

NoteThis is the recommended method.

Recreate the cluster and use the Terway network plugin. For more information, see Use the Terway network plugin.

Modify the Flannel configuration, and then recreate the Flannel plugin and the pod.

NoteThis method is not recommended because the configuration may be overwritten by subsequent upgrades.

Edit cni-config.json.

kubectl edit cm kube-flannel-cfg -n kube-systemIn the configuration, add

hairpinMode: trueto thedelegatesection.Example:

cni-conf.json: | { "name": "cb0", "cniVersion":"0.3.1", "type": "flannel", "delegate": { "isDefaultGateway": true, "hairpinMode": true } }Restart Flannel.

kubectl delete pod -n kube-system -l app=flannelDelete and recreate the pod.

How do I choose between the Terway and Flannel network plugins for a Kubernetes cluster?

This section describes the two network plugins, Terway and Flannel, that are available when you create an ACK cluster.

When you create a Kubernetes cluster, ACK provides two network plugins:

Flannel: This plugin uses the simple and stable Flannel CNI plugin from the open source community. It works with the high-speed Alibaba Cloud VPC network to provide a high-performance and stable network for containers. However, it provides only basic features and lacks advanced capabilities, such as standard Kubernetes Network Policies.

Terway: This is a network plugin developed by ACK. It is fully compatible with Flannel and supports assigning Alibaba Cloud ENIs to containers. It also supports standard Kubernetes NetworkPolicies to define access policies between containers and lets you limit the bandwidth of individual containers. If you do not need to use Network Policies, you can choose Flannel. Otherwise, we recommend that you use Terway. For more information about the Terway network plugin, see Use the Terway network plugin.

How do I plan the cluster network?

When you create an ACK cluster, you need to specify a VPC, vSwitches, a Pod network CIDR block, and a Service CIDR block. We recommend that you plan the ECS instance addresses, Kubernetes pod addresses, and Service addresses in advance. For more information, see Plan the network for an ACK managed cluster.

Does ACK support hostPort mapping?

Only the Flannel plugin supports hostPort. Other plugins do not support this feature.

Pod addresses in ACK can be directly accessed by other resources in the same VPC without requiring extra port mapping.

To expose a service to an external network, use a NodePort or LoadBalancer type Service.

How do I view the cluster's network type and its corresponding vSwitches?

ACK supports two container network types: Flannel and Terway.

To view the network type that you selected when you created the cluster, perform the following steps:

Log on to the ACK console. In the navigation pane on the left, click Clusters.

On the Clusters page, click the name of the target cluster. In the navigation pane on the left, choose Cluster Information.

Click the Basic Information tab. In the Network section, view the container network type of the cluster, which is the value next to Network Plug-in.

If Network Plug-in is set to Terway, the container network type is Terway.

If Network Plug-in is set to Flannel, the container network type is Flannel.

To view the node vSwitches used by the network type, perform the following steps:

In the navigation pane on the left, choose .

On the Node Pools page, find the target node pool and click Details in the Actions column. Then, click the Overview tab.

In the Node Configurations section, view the Node vSwitch ID.

To query the pod vSwitch ID used by the Terway network type, perform the following steps:

NoteOnly the Terway network type uses pod vSwitches. The Flannel network type does not.

In the navigation pane on the left, click Add-ons.

On the Add-ons page, click Configuration on the terway-eniip card. The PodVswitchId option shows the pod vSwitches that are currently in use.

How do I view the cloud resources used in a cluster?

To view information about the cloud resources used in a cluster, including virtual machines, VPCs, and worker RAM roles, perform the following steps:

Log on to the ACK console. In the navigation pane on the left, click Clusters.

On the Clusters page, click the name of the target cluster, or click Details in the Actions column for the cluster.

Click the Basic Information tab to view information about the cloud resources used in the cluster.

How do I modify the kube-proxy configuration?

By default, ACK managed clusters deploy the kube-proxy-worker DaemonSet for load balancing. You can control its parameters using the kube-proxy-worker ConfigMap. If you use an ACK dedicated cluster, a kube-proxy-master DaemonSet and a corresponding ConfigMap are also deployed in your cluster and run on the master nodes.

The kube-proxy configurations are compatible with the community KubeProxyConfiguration standard. You can customize the configuration based on this standard. For more information, see kube-proxy Configuration. The kube-proxy configuration file has strict formatting requirements. Do not omit colons or spaces. To modify the kube-proxy configuration, perform the following steps:

If you use a managed cluster, you must modify the configuration of kube-proxy-worker.

Log on to the ACK console. In the navigation pane on the left, click Clusters.

On the Clusters page, click the name of the target cluster. In the navigation pane on the left, choose .

At the top of the page, select the kube-system namespace. Then, find the

kube-proxy-workerConfigMap and click Edit YAML in the Actions column.In the Edit YAML panel, modify the parameters and click OK.

Recreate all kube-proxy-worker containers for the configuration to take effect.

ImportantRestarting kube-proxy does not interrupt running services. If services are being released concurrently, the new services may take slightly longer to become effective in kube-proxy. We recommend that you perform this operation during off-peak hours.

In the navigation pane on the left of the cluster management page, choose .

In the list of DaemonSets, find and click kube-proxy-worker.

On the kube-proxy-worker page, on the Pods tab, choose and then click OK.

Repeat this operation to delete all pods. The system automatically recreates the pods after they are deleted.

If you use a dedicated cluster, you must modify the configurations of both kube-proxy-worker and kube-proxy-master. Then, delete the kube-proxy-worker and kube-proxy-master pods. The pods are automatically recreated, and the new configuration takes effect. For more information, see the preceding steps.

How do I increase the Linux connection tracking (conntrack) limit?

If the kernel log (dmesg) contains the conntrack full error message, the number of conntrack entries has reached the conntrack_max limit. You must increase the Linux conntrack limit.

Run the following commands to check the current conntrack usage and count for each protocol.

# View table details. You can use a grep pipeline to check the status, or use cat /proc/net/nf_conntrack. conntrack -L # View the count. cat /proc/sys/net/netfilter/nf_conntrack_count # View the current maximum value of the table. cat /proc/sys/net/netfilter/nf_conntrack_maxIf many TCP protocol entries are present, check the specific services. If they are short-lived connection applications, consider changing them to persistent connection applications.

If many DNS entries are present, use NodeLocal DNSCache in your ACK cluster to improve DNS performance. For more information, see Using the NodeLocal DNSCache component.

If many application-layer timeouts or 504 errors occur, or if the operating system kernel log prints the

kernel: nf_conntrack: table full, dropping packet.error, adjust the conntrack-related parameters with caution.

If the actual conntrack usage is reasonable, or if you do not want to modify your services, you can adjust the connection tracking limit by adding the maxPerCore parameter to the kube-proxy configuration.

If you use a managed cluster, you need to add the maxPerCore parameter to the kube-proxy-worker configuration and set its value to 65536 or higher. Then, delete the kube-proxy-worker pod. The pod is automatically recreated, and the new configuration takes effect. For more information about how to modify and delete kube-proxy-worker, see How do I modify the kube-proxy configuration?.

apiVersion: v1 kind: ConfigMap metadata: name: kube-proxy-worker namespace: kube-system data: config.conf: | apiVersion: kubeproxy.config.k8s.io/v1alpha1 kind: KubeProxyConfiguration featureGates: IPv6DualStack: true clusterCIDR: 172.20.0.0/16 clientConnection: kubeconfig: /var/lib/kube-proxy/kubeconfig.conf conntrack: maxPerCore: 65536 # Set maxPerCore to a reasonable value. 65536 is the default setting. mode: ipvs # Other fields are omitted.If you use a dedicated cluster, you need to add the maxPerCore parameter to the kube-proxy-worker and kube-proxy-master configurations and set its value to 65536 or higher. Then, delete the kube-proxy-worker and kube-proxy-master pods. The pods are automatically recreated, and the new configuration takes effect. For more information about how to modify and delete kube-proxy-worker and kube-proxy-master, see How do I modify the kube-proxy configuration?.

In Terway DataPath V2 or IPvlan mode, conntrack information for container traffic is stored in eBPF maps. In other modes, conntrack information is stored in Linux conntrack. For more information about how to adjust the eBPF conntrack size, see Optimize conntrack configurations in Terway mode.

How do I change the IPVS load balancing mode in kube-proxy?

If your services use persistent connections, the number of requests to backend pods may be uneven because each connection sends multiple requests. You can resolve this uneven load issue by changing the IPVS load balancing mode in kube-proxy. Perform the following steps:

Select an appropriate scheduling algorithm. For more information about how to select an appropriate scheduling algorithm, see parameter-changes in the Kubernetes documentation.

For cluster nodes created before October 2022, not all IPVS scheduling algorithms may be enabled by default. You must manually enable the IPVS scheduling algorithm kernel module on all cluster nodes. For example, to use the least connection (lc) scheduling algorithm, log on to each node and run lsmod | grep ip_vs_lc to check if there is any output. If you choose another algorithm, replace `lc` with the corresponding keyword.

If the command outputs ip_vs_lc, the scheduling algorithm kernel module is already loaded, and you can skip this step.

If it is not loaded, run modprobe ip_vs_lc to make it take effect immediately on the node. Then, run echo "ip_vs_lc" >> /etc/modules-load.d/ack-ipvs-modules.conf to ensure that the change persists after the node restarts.

Set the ipvs.scheduler parameter in kube-proxy to a reasonable scheduling algorithm.

If you use a managed cluster, you need to set the ipvs.scheduler parameter of kube-proxy-worker to a reasonable scheduling algorithm. Then, delete the kube-proxy-worker pod. The pod is automatically recreated, and the new configuration takes effect. For more information about how to modify and delete kube-proxy-worker, see How do I modify the kube-proxy configuration?.

apiVersion: v1 kind: ConfigMap metadata: name: kube-proxy-worker namespace: kube-system data: config.conf: | apiVersion: kubeproxy.config.k8s.io/v1alpha1 kind: KubeProxyConfiguration featureGates: IPv6DualStack: true clusterCIDR: 172.20.0.0/16 clientConnection: kubeconfig: /var/lib/kube-proxy/kubeconfig.conf conntrack: maxPerCore: 65536 mode: ipvs ipvs: scheduler: lc # Set scheduler to a reasonable scheduling algorithm. # Other fields are omitted.If you use a dedicated cluster, you need to set the ipvs.scheduler parameter in kube-proxy-worker and kube-proxy-master to a reasonable scheduling algorithm. Then, delete the kube-proxy-worker and kube-proxy-master pods. The pods are automatically recreated, and the new configuration takes effect. For more information about how to modify and delete kube-proxy-worker and kube-proxy-master, see How do I modify the kube-proxy configuration?.

Check the kube-proxy running logs.

Run the kubectl get pods command to check if the new kube-proxy-worker container in the kube-system namespace is in the Running state. If you use a dedicated cluster, also check kube-proxy-master.

Run the kubectl logs command to view the logs of the new container.

If the log contains Can't use the IPVS proxier: IPVS proxier will not be used because the following required kernel modules are not loaded: [ip_vs_lc], the IPVS scheduling algorithm kernel module failed to load. Verify that the previous steps were performed correctly and try again.

If the log contains Using iptables Proxier., kube-proxy failed to enable the IPVS module and automatically fell back to iptables mode. In this case, we recommend that you first roll back the kube-proxy configuration and then restart the node.

If the preceding log entries do not appear and the log shows Using ipvs Proxier., the IPVS module was successfully enabled.

If all the preceding checks pass, the change was successful.

How do I change the IPVS UDP session persistence timeout in kube-proxy?

If your ACK cluster uses kube-proxy in IPVS mode, the default IPVS session persistence policy can cause probabilistic packet loss for UDP backends for up to five minutes after they are removed. If your services depend on CoreDNS, you may experience business interface latency, request timeouts, and other issues for up to five minutes when the CoreDNS component is upgraded or its node is restarted.

If your services in the ACK cluster do not use the UDP protocol, you can reduce the impact of parsing latency or failures by lowering the session persistence timeout for the IPVS UDP protocol. Perform the following steps:

If your own services use the UDP protocol, please submit a ticket for consultation.

For clusters of K8s 1.18 and later

If you use a managed cluster, you need to modify the udpTimeout parameter value in kube-proxy-worker. Then, delete the kube-proxy-worker pod. The pod is automatically recreated, and the new configuration takes effect. For more information about how to modify and delete kube-proxy-worker, see How do I modify the kube-proxy configuration?.

apiVersion: v1 kind: ConfigMap metadata: name: kube-proxy-worker namespace: kube-system data: config.conf: | apiVersion: kubeproxy.config.k8s.io/v1alpha1 kind: KubeProxyConfiguration # Other irrelevant fields are omitted. mode: ipvs # If the ipvs key does not exist, you need to add it. ipvs: udpTimeout: 10s # The default is 300 seconds. Changing it to 10 seconds can reduce the impact time of packet loss after an IPVS UDP backend is removed to 10 seconds.If you use a dedicated cluster, you need to modify the udpTimeout parameter value in kube-proxy-worker and kube-proxy-master. Then, delete the kube-proxy-worker and kube-proxy-master pods. The pods are automatically recreated, and the new configuration takes effect. For more information about how to modify and delete kube-proxy-worker, see How do I modify the kube-proxy configuration?.

For clusters of K8s 1.16 and earlier

The kube-proxy component in clusters of this version does not support the udpTimeout parameter. To adjust the UDP timeout configuration, you can use CloudOps Orchestration Service (OOS) to run the following

ipvsadmcommand in batch on all nodes in the cluster:yum install -y ipvsadm ipvsadm -L --timeout > /tmp/ipvsadm_timeout_old ipvsadm --set 900 120 10 ipvsadm -L --timeout > /tmp/ipvsadm_timeout_new diff /tmp/ipvsadm_timeout_old /tmp/ipvsadm_timeout_newFor more information about batch operations in OOS, see Batch operation instances.

How do I resolve common issues with IPv6 dual-stack?

Symptom: The pod IP address displayed in kubectl is still an IPv4 address.

Solution: Run the following command to display the Pod IPs field. The expected output is an IPv6 address.

kubectl get pods -A -o jsonpath='{range .items[*]}{@.metadata.namespace} {@.metadata.name} {@.status.podIPs[*].ip} {"\n"}{end}'Symptom: The Cluster IP displayed in kubectl is still an IPv4 address.

Solution:

Make sure that spec.ipFamilyPolicy is not set to SingleStack.

Run the following command to display the Cluster IPs field. The expected output is an IPv6 address.

kubectl get svc -A -o jsonpath='{range .items[*]}{@.metadata.namespace} {@.metadata.name} {@.spec.ipFamilyPolicy} {@.spec.clusterIPs[*]} {"\n"}{end}'

Symptom: Cannot access a pod using its IPv6 address.

Cause: Some applications, such as Nginx containers, do not listen on IPv6 addresses by default.

Solution: Run the

netstat -anpcommand to confirm that the pod is listening on an IPv6 address.Expected output:

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name tcp 0 0 127.0.XX.XX:10248 0.0.0.0:* LISTEN 8196/kubelet tcp 0 0 127.0.XX.XX:41935 0.0.0.0:* LISTEN 8196/kubelet tcp 0 0 0.0.XX.XX:111 0.0.0.0:* LISTEN 598/rpcbind tcp 0 0 0.0.XX.XX:22 0.0.0.0:* LISTEN 3577/sshd tcp6 0 0 :::30500 :::* LISTEN 1916680/kube-proxy tcp6 0 0 :::10250 :::* LISTEN 8196/kubelet tcp6 0 0 :::31183 :::* LISTEN 1916680/kube-proxy tcp6 0 0 :::10255 :::* LISTEN 8196/kubelet tcp6 0 0 :::111 :::* LISTEN 598/rpcbind tcp6 0 0 :::10256 :::* LISTEN 1916680/kube-proxy tcp6 0 0 :::31641 :::* LISTEN 1916680/kube-proxy udp 0 0 0.0.0.0:68 0.0.0.0:* 4892/dhclient udp 0 0 0.0.0.0:111 0.0.0.0:* 598/rpcbind udp 0 0 47.100.XX.XX:323 0.0.0.0:* 6750/chronyd udp 0 0 0.0.0.0:720 0.0.0.0:* 598/rpcbind udp6 0 0 :::111 :::* 598/rpcbind udp6 0 0 ::1:323 :::* 6750/chronyd udp6 0 0 fe80::216:XXXX:fe03:546 :::* 6673/dhclient udp6 0 0 :::720 :::* 598/rpcbindIf

Protoistcp, it means the service is listening on an IPv4 address. If it istcp6, it means the service is listening on an IPv6 address.Symptom: You can access a pod within the cluster using its IPv6 address, but you cannot access it from the Internet.

Cause: Internet bandwidth may not be configured for the IPv6 address.

Solution: Configure Internet bandwidth for the IPv6 address. For more information, see Enable and manage IPv6 Internet bandwidth.

Symptom: Cannot access a pod using its IPv6 Cluster IP.

Solution:

Make sure that spec.ipFamilyPolicy is not set to SingleStack.

Run the

netstat -anpcommand to confirm that the pod is listening on an IPv6 address.Expected output:

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name tcp 0 0 127.0.XX.XX:10248 0.0.0.0:* LISTEN 8196/kubelet tcp 0 0 127.0.XX.XX:41935 0.0.0.0:* LISTEN 8196/kubelet tcp 0 0 0.0.XX.XX:111 0.0.0.0:* LISTEN 598/rpcbind tcp 0 0 0.0.XX.XX:22 0.0.0.0:* LISTEN 3577/sshd tcp6 0 0 :::30500 :::* LISTEN 1916680/kube-proxy tcp6 0 0 :::10250 :::* LISTEN 8196/kubelet tcp6 0 0 :::31183 :::* LISTEN 1916680/kube-proxy tcp6 0 0 :::10255 :::* LISTEN 8196/kubelet tcp6 0 0 :::111 :::* LISTEN 598/rpcbind tcp6 0 0 :::10256 :::* LISTEN 1916680/kube-proxy tcp6 0 0 :::31641 :::* LISTEN 1916680/kube-proxy udp 0 0 0.0.0.0:68 0.0.0.0:* 4892/dhclient udp 0 0 0.0.0.0:111 0.0.0.0:* 598/rpcbind udp 0 0 47.100.XX.XX:323 0.0.0.0:* 6750/chronyd udp 0 0 0.0.0.0:720 0.0.0.0:* 598/rpcbind udp6 0 0 :::111 :::* 598/rpcbind udp6 0 0 ::1:323 :::* 6750/chronyd udp6 0 0 fe80::216:XXXX:fe03:546 :::* 6673/dhclient udp6 0 0 :::720 :::* 598/rpcbindIf

Protoistcp, it means the service is listening on an IPv4 address. If it istcp6, it means the service is listening on an IPv6 address.Symptom: A pod cannot access the Internet using IPv6.

Solution: To use IPv6 for Internet access, you need to enable an IPv6 gateway and configure Internet bandwidth for the IPv6 address. For more information, see Create and manage an IPv6 gateway and Enable and manage IPv6 Internet bandwidth.

What should I do if a vSwitch runs out of IP resources in Terway network mode?

Description

When you try to create a pod, the creation fails. Log on to the VPC console, select the target Region, and view the information of the vSwitch used by the cluster. You find that the Available IP Addresses of the vSwitch is 0. For more information about how to confirm the issue, see More information.

Cause

The vSwitch used by Terway on the node has no available IP addresses. This causes the pod to remain in the ContainerCreating state because of a lack of IP resources.

Solution

You can scale out the vSwitch by adding a new vSwitch to increase the IP resources of the cluster:

Log on to the VPC console, select the target Region, and create a new vSwitch.

NoteThe new vSwitch must be in the same region and zone as the vSwitch that has insufficient IP resources. If pod density is increasing, we recommend that the network prefix of the vSwitch CIDR block for pods is /19 or smaller, which means the CIDR block contains at least 8,192 IP addresses.

Log on to the ACK console. In the navigation pane on the left, click Clusters.On the Clusters page, click the name of the target cluster, and then in the navigation pane on the left, click Add-ons.

On the Add-ons page, find the terway-eniip card, click Configuration, and add the ID of the vSwitch created in the previous step to the PodVswitchId option.

Run the following command to delete all Terway pods. The Terway pods are automatically recreated after they are deleted.

NoteIf you selected Exclusive ENI for Pods to achieve best performance when you created the cluster with Terway, you are using the ENI single-IP mode. If you did not select this option, you are using the ENI multi-IP mode. For more information, see Terway network plugin.

For the ENI multi-IP scenario:

kubectl delete -n kube-system pod -l app=terway-eniipFor the ENI single-IP scenario:

kubectl delete -n kube-system pod -l app=terway-eni

Then, run the

kubectl get podcommand to confirm that all Terway pods are successfully recreated.Create a new pod and confirm that it is created successfully and is assigned an IP address from the new vSwitch.

More information

Connect to the Kubernetes cluster. For more information about how to connect, see Connect to a Kubernetes cluster using kubectl. Run the kubectl get pod command and find that the pod status is ContainerCreating. Run the following commands to view the logs of the Terway container on the node where the pod is located.

kubectl get pod -l app=terway-eniip -n kube-system | grep [$Node_Name] # [$Node_Name] is the name of the node where the pod is located. Use it to find the name of the Terway pod on that node.

kubectl logs --tail=100 -f [$Pod_Name] -n kube-system -c terway # [$Pod_Name] is the name of the Terway pod on the node where the pod is located.The system displays a message similar to the following, with an error message such as `InvalidVSwitchId.IpNotEnough`, which indicates that the vSwitch has insufficient IP addresses.

time="2020-03-17T07:03:40Z" level=warning msg="Assign private ip address failed: Aliyun API Error: RequestId: 2095E971-E473-4BA0-853F-0C41CF52651D Status Code: 403 Code: InvalidVSwitchId.IpNotEnough Message: The specified VSwitch \"vsw-AAA\" has not enough IpAddress., retrying"What should I do if a pod in Terway network mode is assigned an IP address that is not in the vSwitch CIDR block?

Symptom

In a Terway network, a created pod is assigned an IP address that is not within the configured vSwitch CIDR block.

Cause

Pod IP addresses are sourced from the VPC and assigned to containers using ENIs. You can configure a vSwitch only when you create a new ENI. If an ENI already exists, pod IP addresses continue to be allocated from the vSwitch that corresponds to that ENI.

This issue typically occurs in the following two scenarios:

You add a node to a cluster, but this node was previously used in another cluster and its pods were not drained when the node was removed. In this case, ENI resources from the previous cluster may remain on the node.

You manually add or modify the vSwitch configuration used by Terway. Because ENIs with the original configuration may still exist on the node, new pods may continue to use IP addresses from the original ENIs.

Solution

You can ensure that the configuration file takes effect by creating new nodes and rotating old nodes.

To rotate an old node, perform the following steps:

Drain and remove the old node. For more information, see Remove a node.

Detach the ENIs from the removed node. For more information, see Manage ENIs.

After the ENIs are detached, add the removed node back to the original ACK cluster. For more information, see Add an existing node.

What should I do if pods still cannot be assigned IP addresses after I scale out a vSwitch in Terway network mode?

Symptom

In a Terway network, pods still cannot be assigned IP addresses after a vSwitch is scaled out.

Cause

Pod IP addresses are sourced from the VPC and assigned to containers using ENIs. You can configure a vSwitch only when you create a new ENI. If an ENI already exists, pod IP addresses continue to be allocated from the vSwitch that corresponds to that ENI. Because the ENI quota on the node has been exhausted, no new ENIs can be created, and thus the new configuration cannot take effect. For more information about ENI quotas, see ENI overview.

Solution

You can ensure that the configuration file takes effect by creating new nodes and rotating old nodes.

To rotate an old node, perform the following steps:

Drain and remove the old node. For more information, see Remove a node.

Detach the ENIs from the removed node. For more information, see Manage ENIs.

After the ENIs are detached, add the removed node back to the original ACK cluster. For more information, see Add an existing node.

How do I enable in-cluster load balancing for a Terway IPvlan cluster?

Symptom

In IPvlan mode, for Terway v1.2.0 and later, in-cluster load balancing is enabled by default for new clusters. When you access an ExternalIP or LoadBalancer from within the cluster, the traffic is load balanced to the Service network. How can I enable in-cluster load balancing for an existing Terway IPvlan cluster?

Cause

Kube-proxy short-circuits traffic to ExternalIPs and LoadBalancers from within the cluster. This means that when you access these external addresses from within the cluster, the traffic does not actually go outside but is instead redirected directly to the corresponding backend Endpoints. In Terway IPvlan mode, traffic to these addresses is handled by Cilium, not kube-proxy. Terway versions earlier than v1.2.0 did not support this short-circuiting. After the release of Terway v1.2.0, this feature is enabled by default for new clusters but not for existing ones.

Solution

Terway must be v1.2.0 or later and use IPvlan mode.

If the cluster has not enabled IPvlan mode, this configuration is invalid and does not need to be configured.

This feature is enabled by default for new clusters and does not need to be configured.

Run the following command to modify the Terway ConfigMap.

kubectl edit cm eni-config -n kube-systemAdd the following content to eni_conf.

in_cluster_loadbalance: "true"NoteMake sure that

in_cluster_loadbalanceandeni_confare at the same level.Run the following command to recreate the Terway pods for the in-cluster load balancing configuration to take effect.

kubectl delete pod -n kube-system -l app=terway-eniipVerify The Configuration

Run the following command to check the terway-ennip policy log. If it shows

enable-in-cluster-loadbalance=true, the configuration has taken effect.kubectl logs -n kube-system <terway pod name> policy | grep enable-in-cluster-loadbalance

How do I add a specific CIDR block to the whitelist for pods in an ACK cluster that uses Terway?

Symptom

You often need to set up a whitelist for services such as databases to provide more secure access control. This requirement also exists in container networks, where you need to set up a whitelist for dynamically changing pod IP addresses.

Cause

ACK's container networks mainly use two plugins: Flannel and Terway.

In a Flannel network, because pods access other services through nodes, you can schedule client pods to a small, fixed set of nodes using node affinity. Then, you can add the IP addresses of these nodes to the database's whitelist.

In a Terway network, pod IP addresses are provided by ENIs. When a pod accesses an external service through an ENI, the external service sees the client IP as the IP address provided by the ENI, not the node's IP. Even if you bind the pod to a node with affinity, the client IP for external access is still the ENI's IP. Pod IP addresses are still randomly assigned from the vSwitch specified by Terway. Moreover, client pods often have configurations such as auto-scaling, which makes it difficult to meet the needs of elastic scaling even if you could fix the pod IP. We recommend that you assign a specific CIDR block for the client to allocate IP addresses from, and then add this CIDR block to the database's whitelist.

Solution

By adding labels to specific nodes, you can specify the vSwitch that pods use. When a pod is scheduled to a node with a fixed label, it can create its pod IP using the custom vSwitch.

In the kube-system namespace, create a separate ConfigMap named eni-config-fixed, which specifies a dedicated vSwitch.

This example uses vsw-2zem796p76viir02c**** and 10.2.1.0/24.

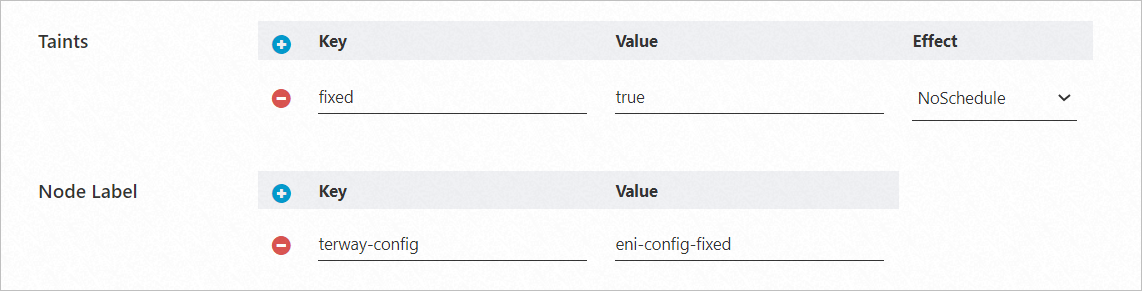

apiVersion: v1 data: eni_conf: | { "vswitches": {"cn-beijing-h":["vsw-2zem796p76viir02c****"]}, "security_group": "sg-bp19k3sj8dk3dcd7****", "security_groups": ["sg-bp1b39sjf3v49c33****","sg-bp1bpdfg35tg****"] } kind: ConfigMap metadata: name: eni-config-fixed namespace: kube-systemCreate a node pool and add the label

terway-config:eni-config-fixedto the nodes. For more information about how to create a node pool, see Create a node pool.To ensure that no other pods are scheduled to the nodes in this node pool, you can also configure a taint for the node pool, such as

fixed=true:NoSchedule.

Scale out the node pool. For more information, see Manually scale a node pool.

Nodes scaled out from this node pool will have the node label and taint set in the previous step by default.

Create a pod and schedule it to a node that has the

terway-config:eni-config-fixedlabel. You need to add a toleration.apiVersion: apps/v1 # For versions earlier than 1.8.0, use apps/v1beta1. kind: Deployment metadata: name: nginx-fixed labels: app: nginx-fixed spec: replicas: 2 selector: matchLabels: app: nginx-fixed template: metadata: labels: app: nginx-fixed spec: tolerations: # Add a toleration. - key: "fixed" operator: "Equal" value: "true" effect: "NoSchedule" nodeSelector: terway-config: eni-config-fixed containers: - name: nginx image: nginx:1.9.0 # Replace with your actual image <image_name:tags>. ports: - containerPort: 80Verify The Result

Run the following command to view the pod IP addresses.

kubectl get po -o wide | grep fixedExpected output:

nginx-fixed-57d4c9bd97-l**** 1/1 Running 0 39s 10.2.1.124 bj-tw.062149.aliyun.com <none> <none> nginx-fixed-57d4c9bd97-t**** 1/1 Running 0 39s 10.2.1.125 bj-tw.062148.aliyun.com <none> <none>You can see that the pod IP addresses are assigned from the specified vSwitch.

Run the following command to scale out the pod to 30 replicas.

kubectl scale deployment nginx-fixed --replicas=30Expected output:

nginx-fixed-57d4c9bd97-2**** 1/1 Running 0 60s 10.2.1.132 bj-tw.062148.aliyun.com <none> <none> nginx-fixed-57d4c9bd97-4**** 1/1 Running 0 60s 10.2.1.144 bj-tw.062149.aliyun.com <none> <none> nginx-fixed-57d4c9bd97-5**** 1/1 Running 0 60s 10.2.1.143 bj-tw.062148.aliyun.com <none> <none> ...You can see that all generated pod IP addresses are within the specified vSwitch. Then, you can add this vSwitch to the database's whitelist to control access for dynamic pod IP addresses.

We recommend that you use newly created nodes. If you use existing nodes, you need to detach the ENIs from the ECS instances before adding the nodes to the cluster. You must use the automatic method to add existing nodes (which replaces the system disk). For more information, see Manage ENIs and Automatically add existing nodes.

Be sure to add labels and taints to the specific node pool to ensure that services that do not need to be whitelisted are not scheduled to these nodes.

This whitelisting method is essentially a configuration override. ACK will use the configuration in the specified ConfigMap to override the previous eni-config. For more information about the configuration parameters, see Terway Node Dynamic Configuration.

We recommend that the number of IP addresses in the specified vSwitch be at least twice the expected number of pods. This provides a buffer for future scaling and helps prevent situations where no IP addresses are available for allocation due to failures that prevent timely IP address reclamation.

Why can't pods ping some ECS nodes?

Symptom

In Flannel network mode, you check that the VPN route is normal, but when you enter a pod, you find that some ECS nodes cannot be pinged.

Cause

There are two reasons why a pod cannot ping some ECS nodes.

Cause 1: The ECS instance that the pod is accessing is in the same VPC as the cluster but not in the same security group.

Cause 2: The ECS instance that the pod is accessing is not in the same VPC as the cluster.

Solution

The solution varies depending on the cause.

For Cause 1, you need to add the ECS instance to the cluster's security group. For more information, see Configure a security group.

For Cause 2, you need to access the ECS instance through its public endpoint. You must add the cluster's public egress IP address to the ECS instance's security group.

Why do cluster nodes have the NodeNetworkUnavailable taint?

Symptom

In Flannel network mode, newly added cluster nodes have the NodeNetworkUnavailable taint, which prevents pods from being scheduled.

Cause

The Cloud Controller Manager (CCM) did not promptly remove the node taint, possibly due to a full route table or the existence of multiple route tables in the VPC.

Solution

Use the kubectl describe node command to view the node's event information and handle the error based on the actual output. For issues with multiple route tables, you need to manually configure CCM to support them. For more information, see Use multiple route tables in a VPC.

Why do pods fail to start and report the "no IP addresses available in range" error?

Symptom

In Flannel network mode, pods fail to start. When you check the pod events, you see an error message similar to failed to allocate for range 0: no IP addresses available in range set: 172.30.34.129-172.30.34.190.

Cause

Solutions

For IP address leaks caused by older ACK versions, you can upgrade your cluster to version 1.20 or later. For more information, see Manually upgrade a cluster.

For IP address leaks caused by older Flannel versions, you can upgrade Flannel to v0.15.1.11-7e95fe23-aliyun or later. Perform the following steps:

In Flannel v0.15.1.11-7e95fe23-aliyun and later, ACK migrates the default IP range allocation database in Flannel to the temporary directory /var/run. This directory is automatically cleared upon restart, preventing IP address leaks.

Update Flannel to 0.15.1.11-7e95fe23-aliyun or later. For more information, see Manage components.

Run the following command to edit the kube-flannel-cfg file. Then, add the dataDir and ipam parameters to the kube-flannel-cfg file.

kubectl -n kube-system edit cm kube-flannel-cfgThe following is an example of the kube-flannel-cfg file.

# Before modification { "name": "cb0", "cniVersion":"0.3.1", "plugins": [ { "type": "flannel", "delegate": { "isDefaultGateway": true, "hairpinMode": true } }, # portmap # May not exist in older versions. Ignore if not used. { "type": "portmap", "capabilities": { "portMappings": true }, "externalSetMarkChain": "KUBE-MARK-MASQ" } ] } # After modification { "name": "cb0", "cniVersion":"0.3.1", "plugins": [ { "type": "flannel", "delegate": { "isDefaultGateway": true, "hairpinMode": true }, # Note the comma. "dataDir": "/var/run/cni/flannel", "ipam": { "type": "host-local", "dataDir": "/var/run/cni/networks" } }, { "type": "portmap", "capabilities": { "portMappings": true }, "externalSetMarkChain": "KUBE-MARK-MASQ" } ] }Run the following command to restart the Flannel pods.

Restarting Flannel pods does not affect running services.

kubectl -n kube-system delete pod -l app=flannelDelete the IP directory on the node and restart the node.

Drain the existing pods on the node. For more information, see Drain a node and manage its scheduling status.

Log on to the node and run the following commands to delete the IP directories.

rm -rf /etc/cni/ rm -rf /var/lib/cni/Restart the node. For more information, see Restart an instance.

Repeat the preceding steps to delete the IP directories on all nodes.

Run the following commands on the node to verify if the temporary directory is enabled.

if [ -d /var/lib/cni/networks/cb0 ]; then echo "not using tmpfs"; fi if [ -d /var/run/cni/networks/cb0 ]; then echo "using tmpfs"; fi cat /etc/cni/net.d/10-flannel.conf*If

using tmpfsis returned, it indicates that the current node has enabled the temporary directory /var/run for the IP range allocation database, and the change was successful.

If you cannot upgrade ACK or Flannel in the short term, you can use the following method for temporary emergency handling. This temporary fix is applicable to leaks caused by both of the preceding reasons.

This temporary fix only helps you clean up leaked IP addresses. IP address leaks may still occur, so you still need to upgrade the Flannel or cluster version.

NoteThe following commands are not applicable to Flannel v0.15.1.11-7e95fe23-aliyun and later versions that have already switched to using /var/run to store IP address allocation information.

The following scripts are for reference only. If the node has been customized, the scripts may not work correctly.

Set the problematic node to an unschedulable state. For more information, see Drain a node and manage its scheduling status.

Use the following script to clean up the node based on the runtime engine.

If you are using the Docker runtime, use the following script to clean up the node.

#!/bin/bash cd /var/lib/cni/networks/cb0; docker ps -q > /tmp/running_container_ids find /var/lib/cni/networks/cb0 -regex ".*/[0-9]+\.[0-9]+\.[0-9]+\.[0-9]+" -printf '%f\n' > /tmp/allocated_ips for ip in $(cat /tmp/allocated_ips); do cid=$(head -1 $ip | sed 's/\r#g' | cut -c-12) grep $cid /tmp/running_container_ids > /dev/null || (echo removing leaked ip $ip && rm $ip) doneIf you are using the containerd runtime, use the following script to clean up the node.

#!/bin/bash # install jq yum install -y jq # export all running pod's configs crictl -r /run/containerd/containerd.sock pods -s ready -q | xargs -n1 crictl -r /run/containerd/containerd.sock inspectp > /tmp/flannel_ip_gc_all_pods # export and sort pod ip cat /tmp/flannel_ip_gc_all_pods | jq -r '.info.cniResult.Interfaces.eth0.IPConfigs[0].IP' | sort > /tmp/flannel_ip_gc_all_pods_ips # export flannel's all allocated pod ip ls -alh /var/lib/cni/networks/cb0/1* | cut -f7 -d"/" | sort > /tmp/flannel_ip_gc_all_allocated_pod_ips # print leaked pod ip comm -13 /tmp/flannel_ip_gc_all_pods_ips /tmp/flannel_ip_gc_all_allocated_pod_ips > /tmp/flannel_ip_gc_leaked_pod_ip # clean leaked pod ip echo "Found $(cat /tmp/flannel_ip_gc_leaked_pod_ip | wc -l) leaked Pod IP, press <Enter> to clean." read sure # delete leaked pod ip for pod_ip in $(cat /tmp/flannel_ip_gc_leaked_pod_ip); do rm /var/lib/cni/networks/cb0/${pod_ip} done echo "Leaked Pod IP cleaned, removing temp file." rm /tmp/flannel_ip_gc_all_pods_ips /tmp/flannel_ip_gc_all_pods /tmp/flannel_ip_gc_leaked_pod_ip /tmp/flannel_ip_gc_all_allocated_pod_ips

Set the problematic node to a schedulable state. For more information, see Drain a node and manage its scheduling status.

How do I change the number of node IPs, the Pod IP CIDR block, or the Service IP CIDR block?

The number of node IPs, the Pod IP CIDR block, and the Service IP CIDR block cannot be changed after the cluster is created. Plan your network segments reasonably when you create the cluster.

In which scenarios do I need to configure multiple route tables for a cluster?

In Flannel network mode, the following are common scenarios where you need to configure multiple route tables for cloud-controller-manager. For more information about how to configure multiple route tables for a cluster, see Use multiple route tables in a VPC.

Scenarios

Scenario 1:

System diagnosis prompts "The node's Pod CIDR block is not in the VPC route table entries. Please refer to adding a custom route entry to the custom route table to add the next-hop route for the Pod CIDR block to the current node."

Cause: When creating a custom route table in the cluster, you need to configure CCM to support multiple route tables.

Scenario 2:

The cloud-controller-manager component reports a network error:

multiple route tables found.Cause: When multiple route tables exist in the cluster, you need to configure CCM to support multiple route tables.

Scenario 3:

In Flannel network mode, newly added cluster nodes have the NodeNetworkUnavailable taint, and the cloud-controller-manager component does not promptly remove the node taint, which prevents pods from being scheduled. For more information, see Why do cluster nodes have the NodeNetworkUnavailable taint?.

Are third-party network plugins supported?

ACK clusters do not support the installation and configuration of third-party network plugins. Installing them may cause the cluster network to become unavailable.

Why are there insufficient Pod CIDR addresses, causing the no IP addresses available in range set error?

This error occurs because your ACK cluster uses the Flannel network plugin. Flannel defines a Pod network CIDR block, which provides a limited set of IP addresses for each node to assign to pods. This range cannot be changed. After the IP addresses in the range are exhausted, new pods cannot be created on the node. You must release some IP addresses or re-create the cluster. For more information about how to plan a cluster network, see Plan the network for an ACK managed cluster.

What is the number of pods supported in Terway network mode?

The number of pods supported by a cluster in Terway network mode is the number of IP addresses supported by the ECS instance. For more information, see Use the Terway network plugin.

Terway DataPath V2 data plane mode

Starting from Terway v1.8.0, when you create a new cluster and select the IPvlan option, DataPath V2 mode is enabled by default. For existing clusters that have already enabled the IPvlan feature, the data plane remains the original IPvlan method.

DataPath V2 is a new generation data plane path. Compared with the original IPvlan mode, DataPath V2 mode has better compatibility. For more information, see Use the Terway network plugin.

How do I view the lifecycle of a Terway network pod?

Log on to the ACK console. In the navigation pane on the left, choose Clusters.

On the Clusters page, click the name of the target cluster. In the navigation pane on the left, choose .

At the top of the DaemonSets page, click

and select the kube-system namespace.

and select the kube-system namespace.On the DaemonSets page, click terway-eniip.

The following describes the current status of the pods:

Type

Description

Ready

All containers in the pod have started and are running normally.

Pending

The pod is waiting for Terway to configure network resources for it.

The pod has not been scheduled to a node due to insufficient node resources. For more information, see Troubleshoot pod exceptions.

ContainerCreating

The pod has been scheduled to a node, indicating that the pod is waiting for network initialization to complete.

For more information, see Pod Lifecycle.

FAQ about Terway component upgrade failures

Symptom | Solution |

The error code | The EIP feature is no longer supported in the Terway component. To continue using this feature, see Migrate EIPs from Terway to ack-extend-network-controller. |

In Terway network mode, pod creation fails with a "cannot find MAC address" error

Symptom

Pod creation fails with an error indicating that the MAC address cannot be found.

failed to do add; error parse config, can't found dev by mac 00:16:3e:xx:xx:xx: not foundSolution

The loading of the network interface card in the system is asynchronous. When the CNI is being configured, the network interface card may not have loaded successfully. In this case, the CNI automatically retries, and this should not cause any issues. Check the final status of the pod to determine if it was successful.

If the pod fails to be created for a long time and the preceding error is reported, it is usually because the driver failed to load when the ENI was being attached due to insufficient high-order memory. You can resolve this by restarting the instance.

What do I need to know about configuring the cluster domain (ClusterDomain)?

The default ClusterDomain for an ACK cluster is cluster.local. You can also customize the cluster domain when you create a cluster. Note the following:

You can configure the ClusterDomain only when you create the cluster. It cannot be modified after the cluster is created.

The cluster's ClusterDomain is the top-level domain for the domains of Services within the cluster. It is an independent domain resolution zone for internal cluster services. The ClusterDomain must not overlap with private or public DNS zones outside the cluster to avoid DNS resolution conflicts.