By Farruh

TensorFlow runs in Docker and GPU within Docker

In the previous article, we prepared the ECS and Docker environment. Part 2 of this series explains how to install NVIDIA-Docker and pull TensorFlow GPU Docker images.

The code examples are compatible with TensorFlow version 2.1.0 with GPU support, so this is the version we pull from the Docker registry.

First, we will check GPU card availability:

lspci | grep -i nvidia

Then, we verify the nvidia-docker installation:

docker run --rm --gpus all nvidia/cuda:10.1-base nvidia-smi

In the same manner, we verify the GPU-enabled TensorFlow image:

docker run --gpus all -it --rm tensorflow/tensorflow:latest-gpu \
    python -c "import tensorflow as tf; print(tf.reduce_sum(tf.random.normal([1000, 1000])))"

Finally, we pull TensorFlow:

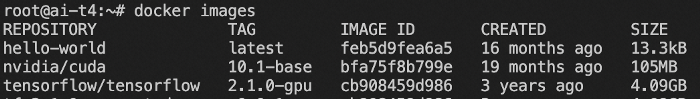

docker pull tensorflow/tensorflow:2.1.0-gpu

Let's check the Docker images on the machine:

docker images

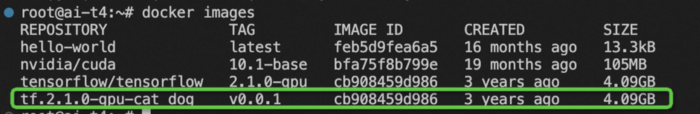

The original name of the Docker image says nothing about the project it is used for. Hence, let's change the tag so that it relates to the project. As an example, we use a dog and cat classification task and build the image name from the machine learning library name, its version, and the project name, while the tag gives the version of the Docker image.

This makes it possible to quickly identify each image once we have a dozen or more Docker images on the machine.
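The naming scheme can be captured in a small shell helper. This is only a sketch: the function name `make_image_tag` and its argument order are assumptions made for illustration and are not part of Docker.

```shell
# Hypothetical helper (not a Docker command): compose an image name of the
# form <library>.<library version>-<project>:<image version>.
make_image_tag() {
    # $1 library, $2 library version, $3 project name, $4 image version
    printf '%s.%s-%s:%s\n' "$1" "$2" "$3" "$4"
}

# Example: the name used for the cat and dog classifier in this article.
make_image_tag tf 2.1.0-gpu cat_dog v0.0.1
# prints: tf.2.1.0-gpu-cat_dog:v0.0.1
```

The printed string can then be passed as the second argument of `docker tag`.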

docker tag tensorflow/tensorflow:2.1.0-gpu tf.2.1.0-gpu-cat_dog:v0.0.1

docker images

Change the tag and view existing images:

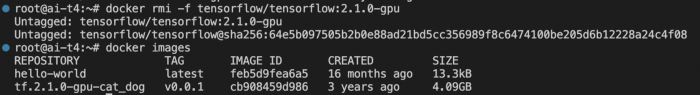

Now, we have two entries with different tags but the same image ID. Both point to the same image, so it's better to untag the redundant one and print the list of Docker images again:

docker rmi -f tensorflow/tensorflow:2.1.0-gpu

docker images

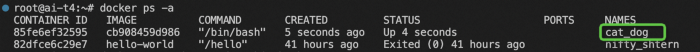

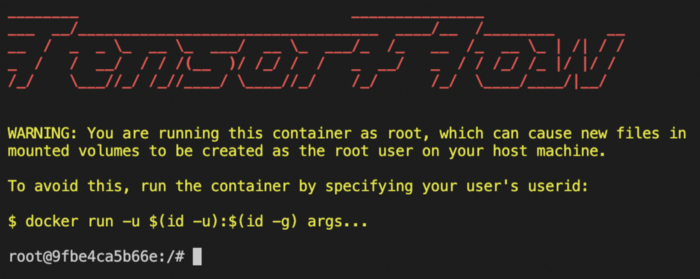

Congratulations! Now, you have a Docker image with TensorFlow installed that supports GPU. The next step is starting a container from it and accessing that container.

Run the docker images command to obtain the ImageId value, which is cb908459d986. Then, run the docker run command to start the container. To keep the container running in the background, we run it with the --detach (or -d) argument. By default, Docker gives the container a random name. To choose the name ourselves, we add the --name parameter to the command and specify cat_dog as the container name.

docker run -t -d --name cat_dog cb908459d986 /bin/bash

After running the docker ps -a command, we can see the list of containers:

Container with the Specified Name
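The verification commands earlier in this article pass `--gpus all`, while the `docker run` command above does not. Depending on how the NVIDIA runtime is configured on the host, a container started without that flag may not see the GPU. A hedged variant that adds the flag is sketched below; the function name `start_gpu_container` is hypothetical, and the sketch assumes Docker 19.03 or later, where `--gpus` is available.

```shell
# Hypothetical wrapper: start a detached, named container with all GPUs exposed.
# Assumes Docker 19.03+ and a working NVIDIA container runtime on the host.
start_gpu_container() {
    # $1 container name, $2 image ID or image name
    docker run --gpus all -t -d --name "$1" "$2" /bin/bash
}
```

For example, `start_gpu_container cat_dog cb908459d986` would take the place of the plain `docker run` command. If a container named `cat_dog` already exists, it has to be removed first, for instance with `docker rm -f cat_dog`.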

Let's access the container that runs in the background:

docker exec -it cat_dog /bin/bash

To leave the container's bash shell, run the exit command.
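Before moving on, it can be useful to confirm that TensorFlow inside the `cat_dog` container actually sees the GPU. The sketch below runs a one-line Python check through `docker exec` without opening an interactive shell. The function name `check_container_gpu` is an assumption for illustration; `tf.config.list_physical_devices` is the device-listing call available in TensorFlow 2.1.

```shell
# Hypothetical helper: ask TensorFlow inside a running container which GPUs it sees.
check_container_gpu() {
    # $1 container name
    docker exec "$1" python -c \
        "import tensorflow as tf; print(tf.config.list_physical_devices('GPU'))"
}
```

Calling `check_container_gpu cat_dog` prints the list of GPU devices. An empty list means TensorFlow found no GPU, which usually indicates that the container was started without GPU access.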

Now, we have an environment that is ready to run the inference model on this machine. Alibaba Cloud ECS instances with Docker are a fantastic platform for deploying and using AI technologies. The combination of Docker and Alibaba Cloud ECS instances provides a range of benefits, including the ability to run applications in isolated containers, manage the lifecycle of applications, and access a range of AI and big data services. Developers can use these tools to build and deploy intelligent applications with ease and efficiency. The next article will explain how to run a Machine Learning model on Docker in the Alibaba Cloud environment.
