By Deng Hongchao, Senior Technical Expert at Alibaba Cloud and a core maintainer of the OAM and KubeVela projects, with the title of "the Second Kubernetes Operator".

After the release of the KubeVela project, many community members around the world asked a similar question: Is KubeVela just the same as PaaS products like Heroku? The question comes up often because the KubeVela user experience is so smooth that it can almost be considered the Heroku of Kubernetes.

Today, I'd like to talk about this topic: What is the difference between KubeVela and PaaS?

Note: "PaaS" in this article covers both classic PaaS products, such as Heroku, and the various Kubernetes-based "cloud-native" PaaS offerings. Their underlying implementations differ, but they give users similar interfaces and experiences. OpenShift is an exception: it is more complicated than Kubernetes itself and is really a full Kubernetes distribution, so it does not count among the easy-to-use, user-oriented PaaS products discussed here.

Here is the conclusion: Although KubeVela can bring users an experience similar to that of PaaS, KubeVela is not a PaaS product.

Most PaaS products provide complete lifecycle management for applications, and they focus on a simple, user-friendly experience that improves R&D efficiency. In these respects, the goals and user experience of KubeVela are highly consistent with those of PaaS. However, the overall design and implementation of KubeVela are very different from those of PaaS projects. From the user's perspective, these differences show up directly in the "extensibility" of the entire project.

In detail: although the user experience is good, a PaaS itself is often not extensible. Take a newer Kubernetes-based PaaS project such as Rancher Rio. It provides a good application deployment experience. For example, rio run quickly deploys a containerized application and automatically allocates domain names and access rules. But what if we want Rio to support more capabilities to meet different user demands?

For example:

The key point is that these capabilities are common in the Kubernetes ecosystem, and some are even built into Kubernetes. Yet to support any one of them, the PaaS itself must go through a round of development. Large-scale refactoring is also likely, because of assumptions baked into earlier designs.

For example, suppose I have a PaaS system that assumes all applications run as Deployments, so its release and scaling functions are implemented directly against Deployment. Now users ask for in-place updates, which requires the PaaS to support CloneSet, and the whole system may have to be refactored. The problem is even worse for O&M capabilities. Suppose my PaaS system supports the blue-green deployment strategy, which already requires a lot of interaction and integration between the PaaS and the traffic management, monitoring, and other systems. If I now want to support a new strategy such as canary release, all of that interaction and execution logic must be reworked, which is a huge workload.

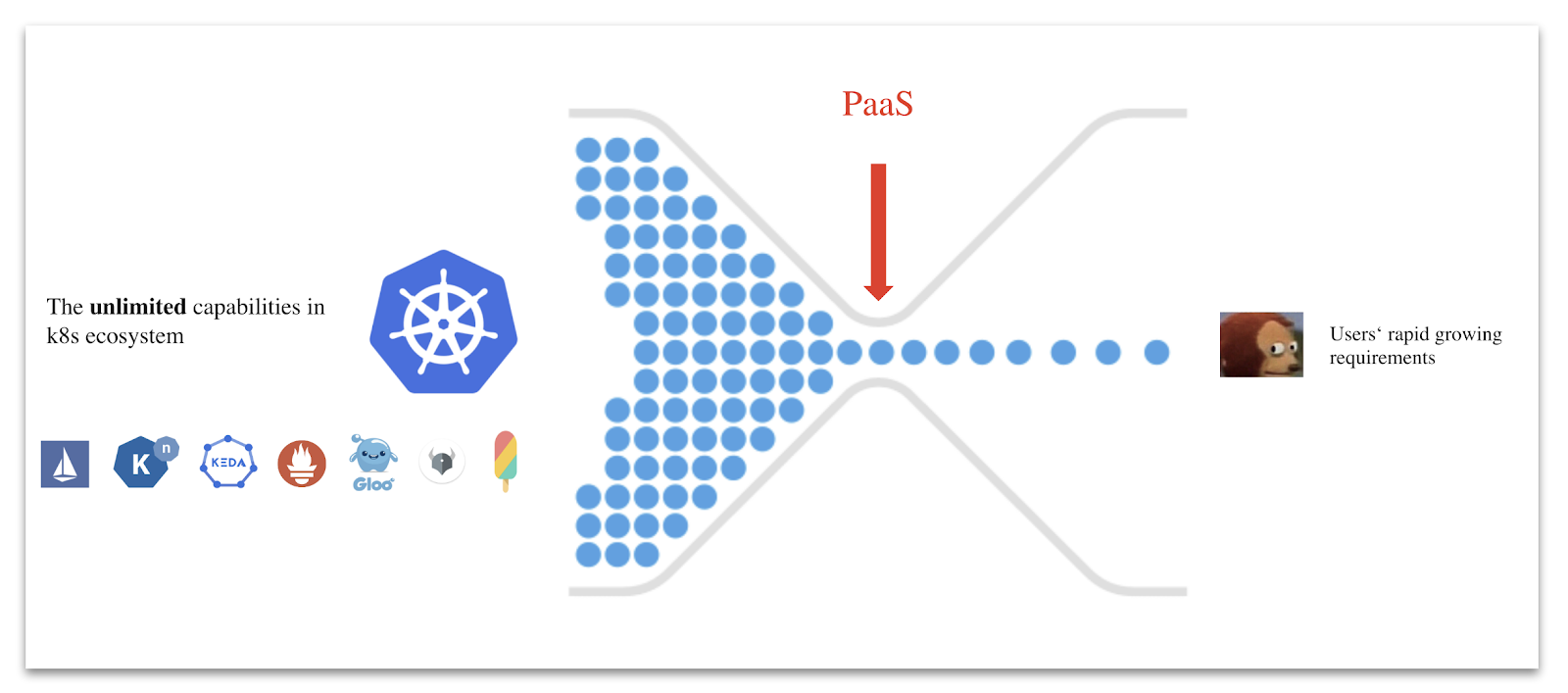

Of course, not every PaaS is completely inextensible. PaaS products with strong engineering behind them, such as Cloud Foundry and Heroku, have their own plug-in mechanisms and plug-in centers. While keeping the user experience and the capabilities under control, these products open up certain plug-in capabilities, such as letting users connect their own databases or develop some simple features. However, no matter how such a plug-in mechanism is designed, it remains a small, closed ecosystem exclusive to that PaaS. In the cloud-native era, the open source community has already created an almost "unlimited" capability pool: the Kubernetes ecosystem. It outshines any small ecosystem exclusive to a PaaS.

The preceding problems can be collectively referred to as the "capability dilemma" of PaaS.

In contrast, KubeVela has aimed from the beginning to use the entire Kubernetes ecosystem as its "plug-in center", and it "deliberately" designs each of its built-in capabilities as an independent, pluggable plug-in. Making this highly extensible model work takes careful design and implementation. For example, how does KubeVela ensure that a completely independent trait is bound to a specific workload type? How does it check whether these independent traits conflict with each other? These issues are solved by using the Open Application Model (OAM) as the model layer of KubeVela. In short, OAM is a highly extensible model for application definition and capability assembly.
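The two questions above, binding a trait to workload types and detecting conflicts between traits, can be pictured with a small sketch. This is not KubeVela's implementation: the `TraitDef` and `CapabilityRegistry` classes are hypothetical, their fields only loosely mirror the `appliesToWorkloads` and `conflictsWith` ideas in OAM, and the `manual-scale` trait name is made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TraitDef:
    """A registered trait (hypothetical model, not the real OAM type)."""
    name: str
    applies_to: frozenset = frozenset({"*"})  # workload types; "*" means any
    conflicts_with: frozenset = frozenset()   # names of incompatible traits


class CapabilityRegistry:
    """Holds independently registered traits and validates combinations."""

    def __init__(self):
        self._traits = {}

    def register(self, trait):
        self._traits[trait.name] = trait

    def validate(self, workload_type, trait_names):
        """Return a list of problems; an empty list means the set is valid."""
        problems = []
        for name in trait_names:
            trait = self._traits.get(name)
            if trait is None:
                problems.append(f"unknown trait: {name}")
                continue
            # Binding check: the trait must accept this workload type.
            if "*" not in trait.applies_to and workload_type not in trait.applies_to:
                problems.append(f"{name} does not apply to {workload_type}")
            # Conflict check: no other requested trait may be on its deny list.
            for other in trait_names:
                if other != name and other in trait.conflicts_with:
                    problems.append(f"{name} conflicts with {other}")
        return problems


registry = CapabilityRegistry()
registry.register(TraitDef("route", applies_to=frozenset({"webservice"})))
registry.register(TraitDef("autoscale", conflicts_with=frozenset({"manual-scale"})))
registry.register(TraitDef("manual-scale"))

print(registry.validate("webservice", ["route", "autoscale"]))
print(registry.validate("task", ["route"]))
print(registry.validate("webservice", ["autoscale", "manual-scale"]))
```

The point of the sketch is that each trait carries its own binding and conflict metadata, so a new trait can be registered without touching the code that validates existing ones.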

Moreover, once the definition files for a workload type or trait have been written, they can be stored on GitHub, where any KubeVela user in the world can pull them into their own Appfile. For more information, see the documentation for $ vela cap (the management commands for plug-in capabilities).

So, KubeVela advocates a future-oriented cloud-native platform architecture. For this architecture:

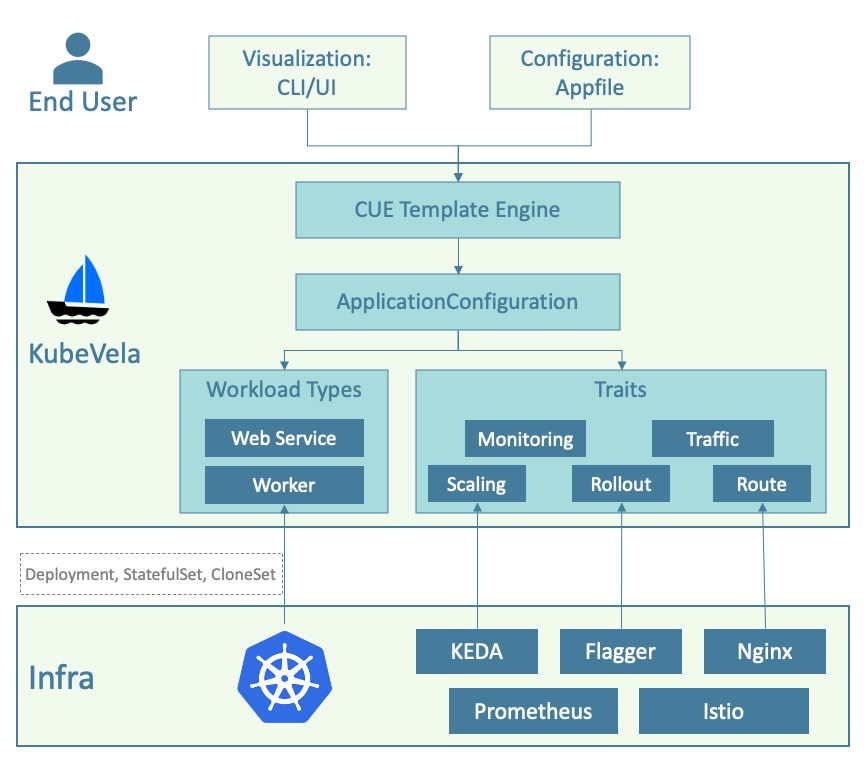

The following figure shows the overall architecture of KubeVela:

In terms of architecture, KubeVela consists of a single controller that runs on Kubernetes as a plug-in. It gives Kubernetes application-layer abstractions and, built on those abstractions, a user-oriented interface called the Appfile. OAM is at the core of the Appfile and of KubeVela's operating mechanism as a whole. Based on OAM, KubeVela gives system administrators a capability assembly process built on registration and self-discovery, which lets them connect any capability in the Kubernetes ecosystem to KubeVela. As a result, KubeVela can adapt to different scenarios (such as AI PaaS and database PaaS) by "matching one core framework with different capabilities".

Specifically, system administrators and platform developers can use this process to register any Kubernetes API resources (including CRDs) and their corresponding controllers with KubeVela as "capabilities". These capabilities are then encapsulated into usable abstractions (that is, they become part of the Appfile) through the CUE template language.

Next, let's demonstrate how to plug the alerting mechanism of the community project KubeWatch into KubeVela as an alert trait.

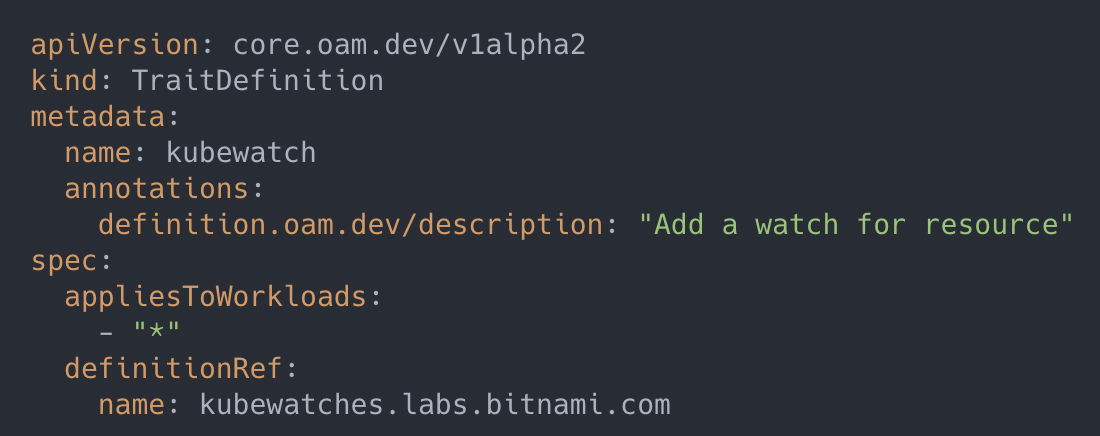

First, you need to determine what the capability represented by the CRD corresponds to: a workload type or a trait? The difference is that a workload type determines how your code runs, while a trait describes how running instances of that code are maintained, managed, or operated.

As an alerting mechanism, KubeWatch is naturally a trait. It can be registered by writing a TraitDefinition YAML file:

KubeVela's built-in server-side runtime watches for TraitDefinition registration events and, on detecting one, brings the capability under platform management.

After this step, KubeWatch is registered and available on the KubeVela platform. However, it still needs to be exposed to users, so the next step is to define an interface through which this capability can be used.
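As a rough sketch of what such a registration carries, the snippet below builds a TraitDefinition manifest as a plain dictionary and prints it as JSON, which kubectl accepts alongside YAML. The API version `core.oam.dev/v1alpha2`, the field names, and the CRD name `kubewatches.labs.bitnami.com` are assumptions made for illustration and should be checked against the OAM and KubeWatch versions in use. The `trait_definition` helper is hypothetical.

```python
import json


def trait_definition(name, crd_name, applies_to=("*",)):
    """Build a TraitDefinition manifest as a plain dict (hypothetical helper)."""
    return {
        "apiVersion": "core.oam.dev/v1alpha2",  # assumed OAM API version
        "kind": "TraitDefinition",
        "metadata": {"name": name},
        "spec": {
            # Workload types this trait may attach to; "*" is taken to mean any.
            "appliesToWorkloads": list(applies_to),
            # Name of the CRD whose controller implements the capability.
            "definitionRef": {"name": crd_name},
        },
    }


# The CRD name below is an assumption, not taken from the article.
manifest = trait_definition("kubewatch", "kubewatches.labs.bitnami.com")
print(json.dumps(manifest, indent=2))
```

The manifest only points at an existing CRD. The controller behind that CRD keeps doing the actual work, which is why registration needs no change to KubeVela itself.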

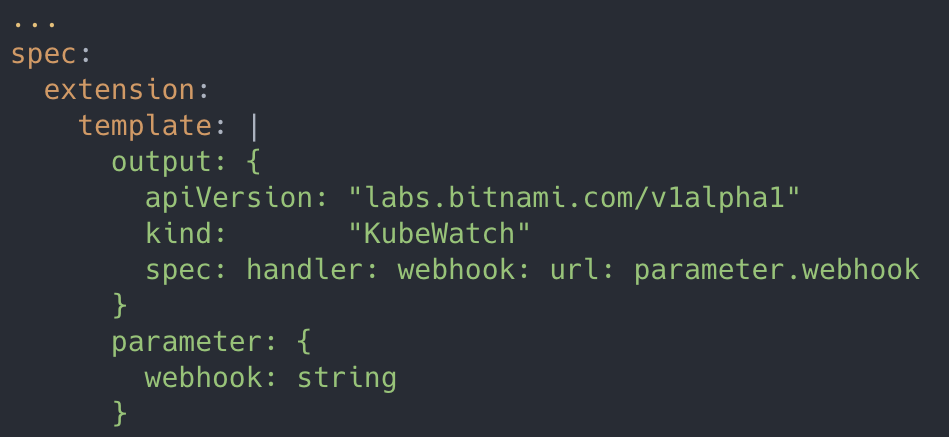

In fact, although most community capabilities are powerful, they are complicated for end users and hard to learn and get started with. Therefore, in KubeVela, platform administrators can further encapsulate capabilities and expose simple, easy-to-use interfaces to users. In most scenarios, a few parameters are enough for these interfaces. For capability encapsulation, KubeVela uses the CUE template language to connect the user interface with the backend capability. It also natively supports fully dynamic template binding, which means a template can be changed without restarting or redeploying the system. The following example shows the template of the KubeWatch trait:

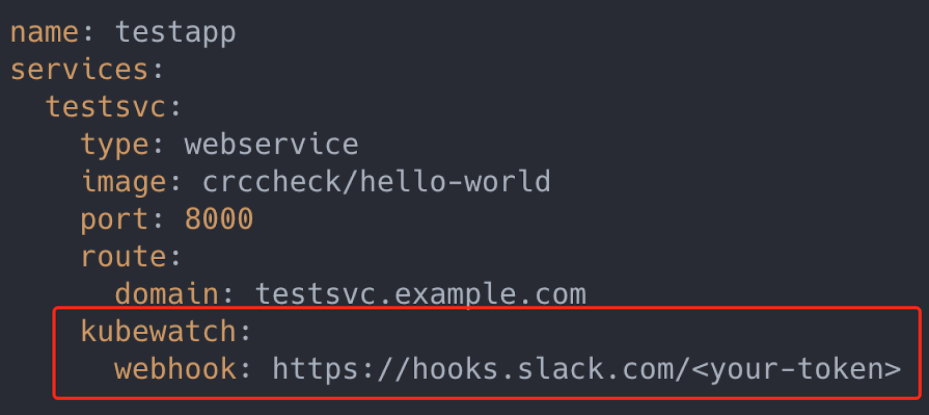
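The encapsulation idea, a few user-facing parameters expanded into a full backend resource by a template that can be replaced at runtime, can be sketched as follows. KubeVela does this with CUE. The Python below is only an analogy, and every name in it (`TEMPLATES`, `set_template`, `render_trait`, the `webhook` parameter, and the shape of the KubeWatch resource) is an assumption for illustration.

```python
# Hypothetical in-memory template table; in KubeVela this role is played by
# CUE templates stored in the Definition objects.
TEMPLATES = {}


def set_template(trait, required, render):
    """Install or replace a template at runtime; nothing is restarted."""
    TEMPLATES[trait] = (tuple(required), render)


def render_trait(trait, params):
    """Validate the user's parameters, then expand them into a resource."""
    required, render = TEMPLATES[trait]
    missing = [key for key in required if key not in params]
    if missing:
        raise ValueError(f"{trait}: missing parameters {missing}")
    return render(params)


def kubewatch_template(params):
    # One user-facing parameter becomes a complete (assumed) backend resource.
    return {
        "apiVersion": "labs.bitnami.com/v1alpha1",
        "kind": "KubeWatch",
        "spec": {"handler": {"webhook": {"url": params["webhook"]}}},
    }


set_template("kubewatch", ["webhook"], kubewatch_template)
resource = render_trait("kubewatch", {"webhook": "https://hooks.example.com/alerts"})
print(resource["spec"]["handler"]["webhook"]["url"])
```

Because the template is looked up on every render, calling `set_template` again with a new function changes what users get on the next request, which mirrors the dynamic binding described above.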

Add the template to the Definition file and apply it to Kubernetes with $ kubectl apply -f. KubeVela then automatically recognizes and processes the input. At this point, users can directly declare and use the newly added capability in the Appfile. For example, a user can send alarm information to a designated Slack channel:

As you can see, this KubeWatch configuration is a new capability added through a third-party extension. Managing Kubernetes extension capabilities through the KubeVela platform is as simple as this. With KubeVela, platform developers can quickly build a PaaS on Kubernetes and rapidly encapsulate any Kubernetes capability into an end-user-oriented, upper-layer abstraction.

The preceding example just shows a very small part of KubeVela extensibility. In subsequent articles, I will introduce more details about the KubeVela capability assembly process, such as:

The native extensibility and the capability assembly mechanism fundamentally distinguish KubeVela from most PaaS projects. They are also the reason why the implementation and model of KubeVela are essentially different from those of most PaaS projects. The core goal of KubeVela is therefore twofold: to provide users with simple application management, and to deliver fully Kubernetes-native extensibility and flexibility to platform administrators.

The KubeVela project is an official project of the OAM community. It is maintained by several senior members of the cloud-native community from Alibaba and Microsoft. It is also the core component of Alibaba Cloud EDAS and of multiple internal application management platforms that support Double 11. KubeVela aims to build a future-oriented cloud-native PaaS architecture, bringing best practices such as horizontal extensibility and application-centered features to everyone. It also hopes to promote and even lead the development of the cloud-native community at the application layer.

Want to know more?

KubeVela: The Extensible App Platform based on Open Application Model and Kubernetes
