By Liu Chen (Lorraine)

Recently, I have been thinking about Kubernetes from a multidimensional perspective. While studying and reviewing Kubernetes, I came to think that, in order to adapt to the Cloud Native trend, application developers need to master the fundamentals of Kubernetes from multiple perspectives.

That is why I wrote a series of articles on "understanding Kubernetes" to explore Kubernetes from multiple perspectives. In this article, I will discuss Kubernetes from the perspective of resource management. For a detailed introduction to basic Kubernetes knowledge, see the previous article, "Understanding Kubernetes from the Perspective of Application Development".

Since I am responsible for managing Kubernetes clusters as the DevOps engineer on my team, this article revisits Kubernetes from the perspective of resource management.

In the era of physical or virtual machine clusters, we typically managed clusters with the host as the unit. A host node can be a physical machine or a VM running on a hypervisor. The resource types include CPU, memory, disk I/O, and network bandwidth.

Generally, a Kubernetes cluster consists of at least one Master node and several Worker nodes. The Master node includes the Scheduler, Controller Manager, APIServer, and Etcd. Worker nodes communicate with the Master node through Kubelet, and their resources and workload Pods are scheduled and controlled by the Master node. The Kubernetes architecture uses the client/server (C/S) model; that is, the resource management and scheduling of Worker nodes are controlled by the Master node.

Kubernetes cluster resources are no longer divided by node in the traditional way. Instead, this article introduces the resource types in three aspects: workload, storage, and network. Basic cluster resource management and container orchestration are implemented through the core resource objects provided by Kubernetes, including Pod, PVC/PV, and Service/Ingress.

In a Kubernetes cluster, CPU and memory resources are defined in the Pod and cannot be managed directly by users. The PVC/PV mode decouples storage resources from node resources. Service provides automatic service discovery, which enables self-recovery in Kubernetes clusters. Ingress controls the network traffic entering the cluster, so that Kubernetes can scale elastically by taking both workload and network load into account.

Kubernetes uses the Pod to manage the lifecycle of a group of containers. The Pod is the smallest unit for scheduling and deploying services in Kubernetes, and it is also called a workload. The deployment of stateless applications is defined and managed by ReplicaSet (usually through a Deployment), while the deployment of stateful and codependent applications is defined and managed by StatefulSet. ReplicaSet and StatefulSet will be introduced in detail in subsequent articles. The CPU and memory resources required by an application are defined in the Pod under spec.containers[].resources, since CPU and memory resources are set at the container level.

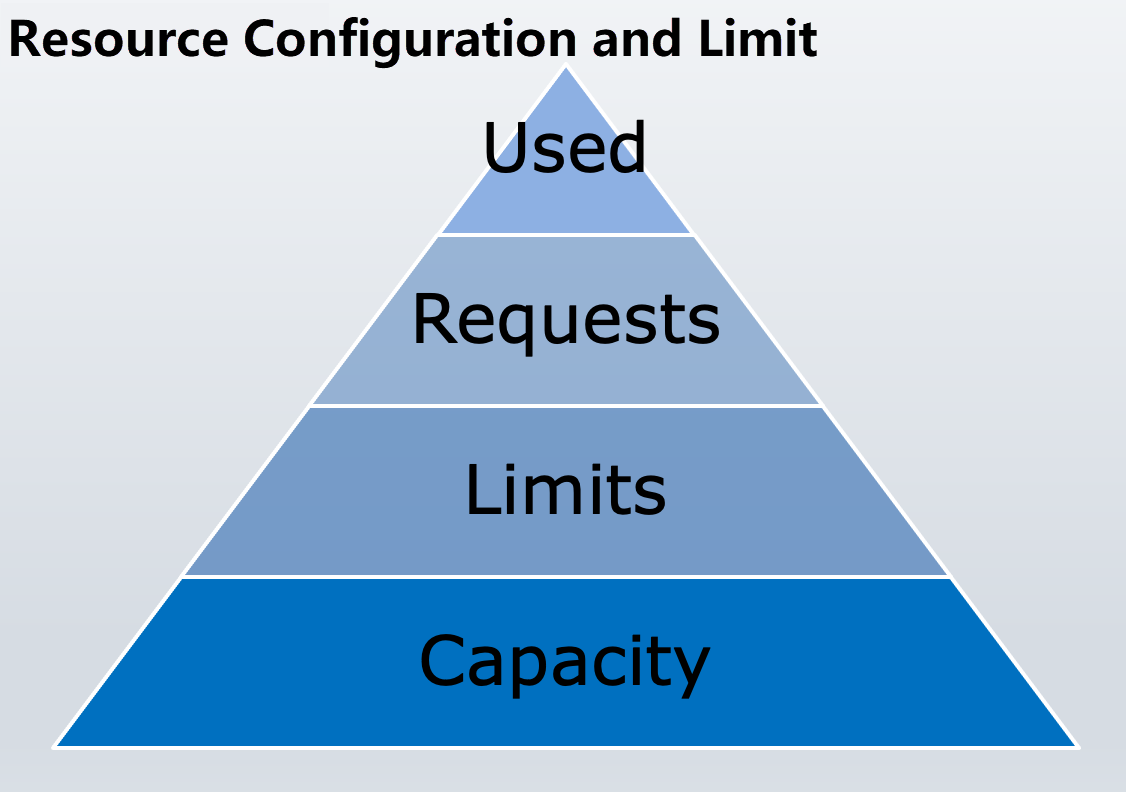

Kubernetes provides two parameters, requests and limits, to define the resource scope and quota.

"requests" defines the minimum amount of resources guaranteed to the workload. It is the amount that Kubernetes reserves on a node when it schedules and starts the container.

"limits" defines the maximum amount of resources the workload may use. It is the upper bound that Kubernetes enforces while the container is running.

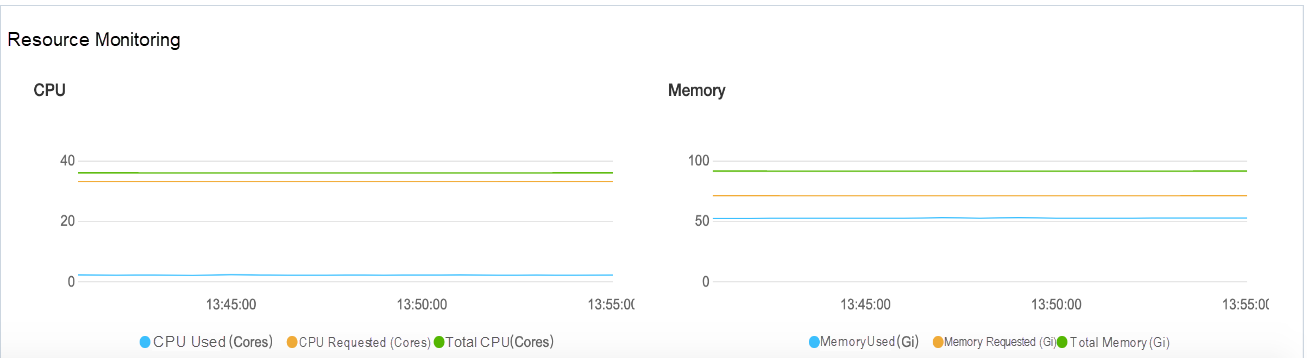
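To make the relationship between requests and limits concrete, here is a minimal Python sketch. The `resources` dictionary mirrors the shape of spec.containers[].resources, but its values, and the helper names `parse_cpu`, `parse_memory`, and `requests_within_limits`, are hypothetical and only cover a subset of the quantity suffixes that Kubernetes accepts.

```python
# Hypothetical values mirroring spec.containers[].resources of one container.
resources = {
    "requests": {"cpu": "250m", "memory": "256Mi"},
    "limits": {"cpu": "500m", "memory": "512Mi"},
}

# Binary and decimal memory suffixes (a subset, for illustration only).
_BINARY = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}
_DECIMAL = {"k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12}


def parse_cpu(quantity: str) -> float:
    """Convert a CPU quantity to cores; the 'm' suffix means millicores."""
    if quantity.endswith("m"):
        return int(quantity[:-1]) / 1000
    return float(quantity)


def parse_memory(quantity: str) -> int:
    """Convert a memory quantity such as '256Mi' or '1G' to bytes."""
    for table in (_BINARY, _DECIMAL):
        for suffix, factor in table.items():
            if quantity.endswith(suffix):
                return int(float(quantity[: -len(suffix)]) * factor)
    return int(quantity)


def requests_within_limits(res: dict) -> bool:
    """Check that every request is no larger than the matching limit."""
    req, lim = res["requests"], res["limits"]
    return (
        parse_cpu(req["cpu"]) <= parse_cpu(lim["cpu"])
        and parse_memory(req["memory"]) <= parse_memory(lim["memory"])
    )


print(requests_within_limits(resources))
```

A container whose request exceeded its limit would fail this check, which matches the idea that requests is the lower bound and limits is the upper bound of the same resource.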

The storage volumes and network namespace of a Pod are shared by its containers, and the PID namespace can be shared as well. Each container also has its own allocated quota of CPU and memory resources. During resource management, it is necessary to distinguish between the CPU/memory quota and the actual CPU/memory utilization of the workload. The Kubernetes cluster information console displays the CPU and memory quotas defined by the workload.

PVC is short for PersistentVolumeClaim and PV is short for PersistentVolume. As the name implies, PV is the resource object that Kubernetes uses to describe various types of storage resources, such as block storage, NAS, and object storage. Storage resources at the IaaS layer can be used as Kubernetes cluster storage through the storage plug-ins provided by cloud vendors.

It seems that the PV abstraction alone lets Kubernetes storage adapt to the IaaS storage resources of various cloud vendors. So, why bother with the PVC abstraction? PVC is similar to an abstract class in OOP. Abstract classes are used in development to decouple object calls from object implementations. Likewise, a Pod is bound to a PVC rather than a PV in order to decouple Pod deployment from PV resource allocation.

Pod deployment is generally a sub-step of application deployment and is controlled by developers. PVs, in contrast, are defined, allocated, and controlled by cluster administrators.

Pods and PVCs are namespace-scoped resource objects, while PVs are cluster-scoped resource objects.

The PVC/PV mode decouples storage resources from node resources. In this mode, storage resources defined by PVC/PV are independent of node resources and migrate along with the workload between nodes through dynamic binding. The specific process depends on how the storage plug-in provided by the cloud vendor is implemented.
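The decoupling can be pictured as a matching step between claims and volumes. The following Python sketch is a simplified model and not the actual Kubernetes binding logic: the classes, field names, and the "smallest sufficient volume" rule are assumptions chosen for illustration, and capacities are plain GiB integers.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class PersistentVolume:
    name: str
    capacity_gib: int
    access_modes: Tuple[str, ...]
    storage_class: str
    claim: Optional[str] = None  # name of the bound PVC, if any


@dataclass
class PersistentVolumeClaim:
    name: str
    request_gib: int
    access_mode: str
    storage_class: str


def bind(pvc: PersistentVolumeClaim,
         volumes: List[PersistentVolume]) -> Optional[PersistentVolume]:
    """Bind the claim to the smallest unbound volume that satisfies it."""
    candidates = [
        pv for pv in volumes
        if pv.claim is None
        and pv.storage_class == pvc.storage_class
        and pvc.access_mode in pv.access_modes
        and pv.capacity_gib >= pvc.request_gib
    ]
    if not candidates:
        return None  # the claim stays pending until a suitable PV appears
    best = min(candidates, key=lambda pv: pv.capacity_gib)
    best.claim = pvc.name
    return best


# Hypothetical volumes prepared by a cluster administrator.
volumes = [
    PersistentVolume("pv-a", 10, ("ReadWriteOnce",), "disk"),
    PersistentVolume("pv-b", 50, ("ReadWriteOnce",), "disk"),
    PersistentVolume("pv-c", 20, ("ReadWriteMany",), "nas"),
]
claim = PersistentVolumeClaim("data", 20, "ReadWriteOnce", "disk")
bound = bind(claim, volumes)
print(bound.name if bound else "pending")
```

The Pod only ever names the claim ("data" here), so the administrator can change which volume backs it without touching the Pod definition.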

Service implements the server-side automatic discovery mechanism. That is, a set of Pods that provide an application service can be reached through the single access address of the Service domain name. Adding or removing Pods does not change how the service is accessed. The workload is bound to a virtual IP address and port through the Service and does not depend on the node to which each Pod is allocated. Thus, the Service is decoupled from the network resources of nodes, enabling load migration and fault self-recovery for the entire cluster.
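A short Python sketch of this idea: a Service selects its backend Pods by label, so the set of backends can change while the Service address stays the same. The Pod IP addresses and the helper names `selects` and `endpoints` are hypothetical.

```python
def selects(selector: dict, labels: dict) -> bool:
    """A Pod is selected when it carries every label in the selector."""
    return all(labels.get(key) == value for key, value in selector.items())


def endpoints(selector: dict, pods: list, target_port: int) -> list:
    """Return the 'ip:port' backends that the Service forwards to."""
    return [
        f"{pod['ip']}:{target_port}"
        for pod in pods
        if selects(selector, pod["labels"])
    ]


SELECTOR = {"app.kubernetes.io/instance": "index", "app.kubernetes.io/name": "helm"}

# Hypothetical Pods; only the first two carry the selected labels.
pods = [
    {"ip": "10.0.0.11", "labels": {**SELECTOR, "pod-template-hash": "abc"}},
    {"ip": "10.0.1.12", "labels": dict(SELECTOR)},
    {"ip": "10.0.2.13", "labels": {"app.kubernetes.io/name": "other"}},
]

print(endpoints(SELECTOR, pods, 8081))
```

Removing a Pod from `pods` only shortens the returned list; clients keep using the same Service address.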

Unlike the Service object, Ingress is the resource object that Kubernetes uses to expose services outside the cluster. Before Kubernetes, Nginx reverse proxying and load balancing were widely used for load and traffic control to extend the processing capacity of servers. In a typical setup, the Ingress controller is essentially an Nginx Pod. It is exposed through a Service object of the LoadBalancer type and provides reverse proxying and load balancing for external applications. Ingress acts as the router and access gateway of a cluster. By configuring routing rules in the Ingress, each workload is bound to a path under the corresponding domain name. In this way, multiple services in a cluster can be exposed through a single external IP address, saving IP address resources.
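The routing rules can be thought of as a table from host and path to a backend Service. The Python sketch below is an illustrative model only: the hosts, paths, and Service names are made up, and the matching rule (segment-wise prefix match, longest prefix wins) is an assumption modeled on prefix-style paths rather than the behavior of any particular Ingress controller.

```python
from typing import Optional

# Hypothetical routing rules: (host, path prefix, backend Service name).
RULES = [
    ("shop.example.com", "/", "web"),
    ("shop.example.com", "/api", "api"),
    ("admin.example.com", "/", "admin"),
]


def _segments(path: str) -> list:
    """Split '/api/orders' into ['api', 'orders']."""
    return [part for part in path.split("/") if part]


def route(host: str, path: str, rules=RULES) -> Optional[str]:
    """Pick the backend whose host matches and whose prefix is longest."""
    request = _segments(path)
    best, best_len = None, -1
    for rule_host, rule_path, service in rules:
        prefix = _segments(rule_path)
        if rule_host == host and request[: len(prefix)] == prefix:
            if len(prefix) > best_len:
                best, best_len = service, len(prefix)
    return best


print(route("shop.example.com", "/api/orders"))
print(route("shop.example.com", "/cart"))
```

All three hypothetical Services share one entry point, and only the host and path of each request decide which workload receives it.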

Currently, Kubernetes is the most popular container orchestration platform, providing platform-level elastic scaling and fault self-recovery. Kubernetes clusters are based on the C/S architecture, and cluster resources and load balancing are controlled and managed centrally by the Master node. The Pod is the smallest unit for Kubernetes resource scheduling. Therefore, Pod scheduling and management is the first step in managing and scheduling cluster resources.

To support platform-level elastic scaling and fault self-recovery, Kubernetes needs to understand the running status of the application services. The simplest way is to check whether the container process is running. If the container fails, Kubernetes automatically keeps trying to restart it. In many cases, restarting fixes the problem. Therefore, such health checks are simple but still effective and necessary.

However, if an exception such as Out of Memory (OOM) or a deadlock occurs while a Java application is running, the JVM process may still be alive. The Pod then remains in the running state, but the application can no longer provide services. In this case, the process health check described above does not help.

With the LivenessProbe provided by Kubernetes, service-level exceptions can be detected as well, so that Kubernetes can check more thoroughly whether an application service is running properly.

Similar to the process health check, LivenessProbe also checks the health of container processes. When a failure is detected, Kubernetes restarts the container to fix the failure automatically.

The difference is that LivenessProbe connects to the IP address and port exposed by the Pod and calls an HTTP GET API defined by the application service. It determines the running status of the container process by checking whether the response status code is between 200 and 399.

In addition to calling an HTTP GET API, the running status can also be determined by checking whether a TCP socket connection can be established.

This logic for determining the running status is executed by Kubernetes from outside the application rather than by the application service itself. It enables Kubernetes to know the service-level health status of applications.
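The status-code rule can be written down in a few lines of Python. This is a sketch of the decision logic only and performs no real HTTP requests; the function names are hypothetical, and the threshold of three consecutive failures is an assumption that mirrors the common default of the probe's failureThreshold setting.

```python
def http_probe_ok(status_code: int) -> bool:
    """An HTTP GET probe succeeds for status codes from 200 to 399."""
    return 200 <= status_code < 400


def should_restart(status_codes: list, failure_threshold: int = 3) -> bool:
    """Return True once the probe has failed `failure_threshold` times in a row."""
    consecutive_failures = 0
    for code in status_codes:
        if http_probe_ok(code):
            consecutive_failures = 0
        else:
            consecutive_failures += 1
        if consecutive_failures >= failure_threshold:
            return True
    return False


# A hypothetical sequence of probe results for one container.
print(should_restart([200, 500, 500, 500]))
```

A single failed probe is tolerated here; only a run of consecutive failures marks the container for a restart.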

When overload occurs, the application may fail to provide services normally even if the container process is still healthy. Kubernetes detects such problems through ReadinessProbe.

ReadinessProbe works in the same way as LivenessProbe. The state of the Pod is reported to Kubernetes through an HTTP GET API or a TCP socket connection.

If ReadinessProbe fails because the application process cannot process requests normally, the Pod is not restarted. Instead, it is removed from the Service endpoints and no longer receives request load from the Service. This is similar to traffic degradation, and it ensures that a Pod only receives the request load it can properly handle.
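The different consequences of the two probes can be summarized in a small Python sketch. The `PodStatus` class and the `reconcile` function are hypothetical names, and the model is deliberately simplified: it only records which Pods would be restarted and which would keep receiving traffic.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class PodStatus:
    name: str
    liveness_ok: bool
    readiness_ok: bool


def reconcile(pods: List[PodStatus]) -> Tuple[List[str], List[str]]:
    """Return (Pods whose container is restarted, Pods kept as Service endpoints)."""
    restart = [pod.name for pod in pods if not pod.liveness_ok]
    serving = [pod.name for pod in pods if pod.readiness_ok]
    return restart, serving


# Hypothetical probe results: web-1 is overloaded, web-2 is deadlocked.
pods = [
    PodStatus("web-0", liveness_ok=True, readiness_ok=True),
    PodStatus("web-1", liveness_ok=True, readiness_ok=False),
    PodStatus("web-2", liveness_ok=False, readiness_ok=False),
]
restart, serving = reconcile(pods)
print(restart, serving)
```

In this example the overloaded Pod is left running but receives no new requests, while only the Pod that failed its liveness check is restarted.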

Google open-sourced Kubernetes in 2014, and in 2015 the newly founded Cloud Native Computing Foundation (CNCF) proposed the initial definition of Cloud Native: application containerization, microservices-oriented architecture, and applications that support container orchestration and scheduling. Container orchestration and scheduling is the core technology of Kubernetes. When facing clusters with hundreds of thousands of microservices containers, Pod scheduling and resource management become a complex task. The containers in a Pod are correlated at runtime: they run on the same node and share node resources. When the application load changes, the consumption of node resources changes accordingly. What's more, the capacity and availability of node resources also affect the performance and stability of application services.

The Kubernetes Scheduler selects an appropriate node for each Pod, based on the Pod resource objects registered in APIServer and the resource usage of each node reported by Kubelet. The Scheduler is involved in the creation, elastic scaling, and load migration of Pods. It makes decisions based on the runtime dependencies between containers, the resource requirement settings, and the scheduling policies. The default scheduling policy already takes the state of the candidate nodes into consideration to ensure high availability, high performance, and low latency of workloads. Therefore, the default scheduling policy is recommended unless users have special requirements for particular nodes.

A customized Scheduler can be driven by a policy configuration file, like the JSON file shown below. "predicates" means that only nodes that satisfy all of the listed rules are considered when scheduling a Pod. "priorities" means that the nodes that pass the predicates are ranked by weighted priority scores, and the node with the highest total score is selected. For example, with LeastRequestedPriority given the highest weight, the Scheduler prefers the node with the lowest amount of already requested resources, as shown in the following example.

{

"kind" : "Policy",

"apiVersion" : "v1",

"predicates" : [

{"name" : "PodFitsHostPorts"},

{"name" : "PodFitsResources"},

{"name" : "NoDiskConflict"},

{"name" : "NoVolumeZoneConflict"},

{"name" : "MatchNodeSelector"},

{"name" : "MaxEBSVolumeCount"},

{"name" : "MaxAzureDiskVolumeCount"},

{"name" : "checkServiceAffinity"},

{"name" : "PodToleratesNodeNoExecuteTaints"},

{"name" : "MaxGCEPDVolumeCount"},

{"name" : "MatchInterPodAffinity"},

{"name" : "PodToleratesNodeTaints"},

{"name" : "HostName"}

],

"priorities" : [

{"name" : "LeastRequestedPriority", "weight" : 2},

{"name" : "BalancedResourceAllocation", "weight" : 1},

{"name" : "ServiceSpreadingPriority", "weight" : 1},

{"name" : "EqualPriority", "weight" : 1}

]

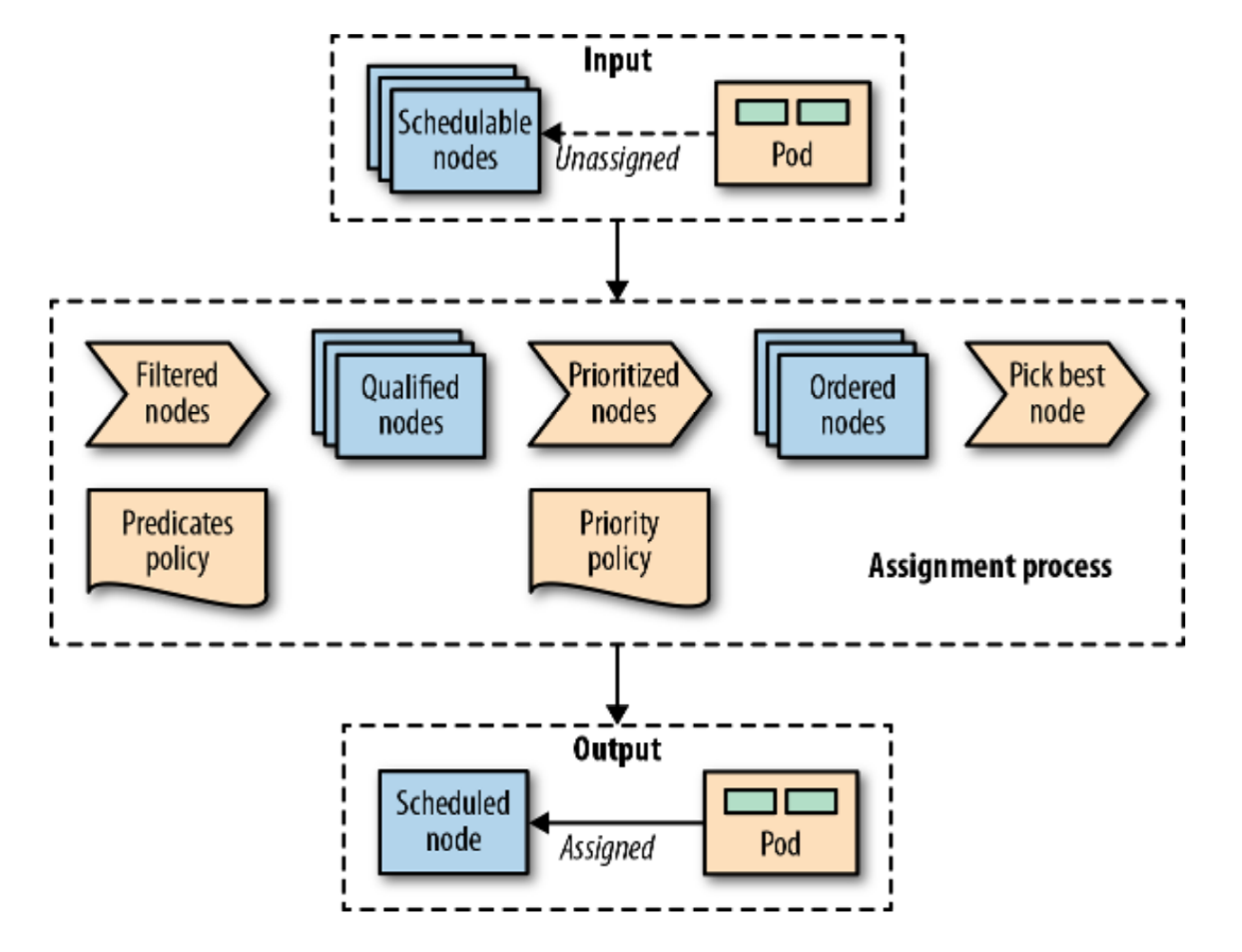

}When the Scheduler detects the Pod resource object definition generated by APIServer, it first filters the set of node resources that meet the rules through "predicates". Then, it sorts these node resources based on their priorities. Next, it selects the optimal node, and allocates resources to create Pod.

Scheduler Scheduling Process from Kubernetes-Patterns
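The filter-then-score process can be sketched in Python as follows. This is a simplified model under stated assumptions, not the Scheduler's real implementation: only two predicates and two priorities are modeled, the scoring formulas are rough approximations on a 0 to 10 scale, and the nodes, the Pod, and all names in the code are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Node:
    name: str
    cpu_allocatable: float  # cores
    mem_allocatable: float  # GiB
    cpu_requested: float    # already requested by Pods on the node
    mem_requested: float
    labels: dict = field(default_factory=dict)


@dataclass
class Pod:
    cpu_request: float
    mem_request: float
    node_selector: dict = field(default_factory=dict)


def pod_fits_resources(pod: Pod, node: Node) -> bool:
    return (node.cpu_requested + pod.cpu_request <= node.cpu_allocatable
            and node.mem_requested + pod.mem_request <= node.mem_allocatable)


def match_node_selector(pod: Pod, node: Node) -> bool:
    return all(node.labels.get(k) == v for k, v in pod.node_selector.items())


def _fractions(pod: Pod, node: Node):
    """Requested fraction of CPU and memory if the Pod were placed on the node."""
    cpu = (node.cpu_requested + pod.cpu_request) / node.cpu_allocatable
    mem = (node.mem_requested + pod.mem_request) / node.mem_allocatable
    return cpu, mem


def least_requested(pod: Pod, node: Node) -> float:
    cpu, mem = _fractions(pod, node)
    return 10 * ((1 - cpu) + (1 - mem)) / 2


def balanced_allocation(pod: Pod, node: Node) -> float:
    cpu, mem = _fractions(pod, node)
    return 10 * (1 - abs(cpu - mem))


PREDICATES = [pod_fits_resources, match_node_selector]
PRIORITIES = [(least_requested, 2), (balanced_allocation, 1)]


def schedule(pod: Pod, nodes: List[Node]) -> Optional[str]:
    """Filter nodes with the predicates, then pick the highest weighted score."""
    feasible = [n for n in nodes if all(pred(pod, n) for pred in PREDICATES)]
    if not feasible:
        return None  # the Pod stays pending
    best = max(feasible, key=lambda n: sum(w * f(pod, n) for f, w in PRIORITIES))
    return best.name


nodes = [
    Node("node-a", 4, 16, cpu_requested=3.5, mem_requested=8),
    Node("node-b", 4, 16, cpu_requested=1, mem_requested=4),
    Node("node-c", 8, 32, cpu_requested=6, mem_requested=8, labels={"disk": "ssd"}),
]
print(schedule(Pod(cpu_request=1, mem_request=2), nodes))
```

In this example node-a is filtered out because the CPU request does not fit, and node-b wins the scoring step because it has the larger share of unrequested resources.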

Generally, a Kubernetes cluster provides sufficient resources for the Scheduler to schedule Pods. Pods are scheduled to nodes whose available resource capacity is greater than the Pod requirements. Normally, some node resources are reserved for the node OS and the Kubernetes management components, so the amount of allocatable resources is smaller than the total amount of node resources. The resources that the Scheduler can schedule are the allocatable resources of a node, also known as the allocatable capacity. It is calculated as follows:

Allocatable Capacity = Node Capacity - Kube-Reserved - System-Reserved

Allocatable Capacity is the amount of node resources that the Scheduler can allocate to application service Pods.
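As a worked example of the formula, the Python sketch below computes the allocatable capacity of one node. All numbers are hypothetical, with CPU in millicores and memory in MiB. Note that the kubelet additionally subtracts a hard eviction threshold, which this sketch leaves out to stay with the formula above.

```python
def allocatable(node_capacity: dict, kube_reserved: dict, system_reserved: dict) -> dict:
    """Allocatable = Node Capacity - Kube-Reserved - System-Reserved, per resource."""
    return {
        resource: capacity
        - kube_reserved.get(resource, 0)
        - system_reserved.get(resource, 0)
        for resource, capacity in node_capacity.items()
    }


# Hypothetical node with 4 cores and 16 GiB of memory.
node_capacity = {"cpu_millicores": 4000, "memory_mib": 16384}
kube_reserved = {"cpu_millicores": 100, "memory_mib": 512}
system_reserved = {"cpu_millicores": 100, "memory_mib": 256}

print(allocatable(node_capacity, kube_reserved, system_reserved))
# {'cpu_millicores': 3800, 'memory_mib': 15616}
```

Only the resulting 3800 millicores and 15616 MiB are available for Pod requests on this node.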

In the introduction to workloads above, we mentioned that requests and limits determine the amount and quota of resources consumed by running containers. Not all node resources can be used for scheduling. Therefore, it is recommended to set the "requests" and "limits" of each container explicitly when defining a Pod template. Doing so avoids scheduling failures caused by resource competition between workloads and Kubernetes components.

"limits" defines the maximum limit of container resource usage. "requests" defines the initial configuration of container resources. Generally, resources are allocated based on "requests" when the container is started. When the container is running, the resources consumed are generally less than the amount allocated by "requests", as shown in the following figure.

Such a pyramid-shaped resource allocation method leaves quite a lot of resource fragments. For the case where workloads compete for resources, Kubernetes provides three levels of service assurance.
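Kubernetes calls these three levels Quality of Service (QoS) classes: Guaranteed, Burstable, and BestEffort. The class of a Pod is derived from the requests and limits of its containers. The Python sketch below is a simplified version of that rule: the function name `qos_class` is hypothetical, and quantities are compared as plain strings, so "500m" and "0.5" would wrongly be treated as different values.

```python
RESOURCES = ("cpu", "memory")


def qos_class(containers: list) -> str:
    """Classify a Pod from the resources of its containers."""

    def get(container: dict, section: str, resource: str):
        return container.get(section, {}).get(resource)

    # BestEffort: no container sets any CPU or memory request or limit.
    if not any(
        get(c, section, r)
        for c in containers
        for section in ("requests", "limits")
        for r in RESOURCES
    ):
        return "BestEffort"

    def guaranteed(container: dict, resource: str) -> bool:
        limit = get(container, "limits", resource)
        request = get(container, "requests", resource)
        # An omitted request defaults to the limit.
        return limit is not None and request in (None, limit)

    # Guaranteed: every container has limits, and requests equal to them.
    if all(guaranteed(c, r) for c in containers for r in RESOURCES):
        return "Guaranteed"
    return "Burstable"


same = {"cpu": "500m", "memory": "256Mi"}
print(qos_class([{"requests": same, "limits": same}]))
print(qos_class([{"requests": {"cpu": "250m"}, "limits": {"cpu": "500m"}}]))
print(qos_class([{}]))
```

When a node runs short of resources, BestEffort Pods are the first candidates for eviction and Guaranteed Pods the last, which is why setting requests and limits explicitly matters for important workloads.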

Most application services running in Kubernetes clusters are distributed systems based on microservices architectures, and the services often call each other. When an application service Pod is scheduled, the Scheduler selects the optimal node for resource allocation and Pod creation. Before the container starts, an IP address is allocated to the Pod, and this address is not known in advance. Therefore, when another application Pod needs to communicate with this Pod, it is difficult to obtain the allocated IP address.

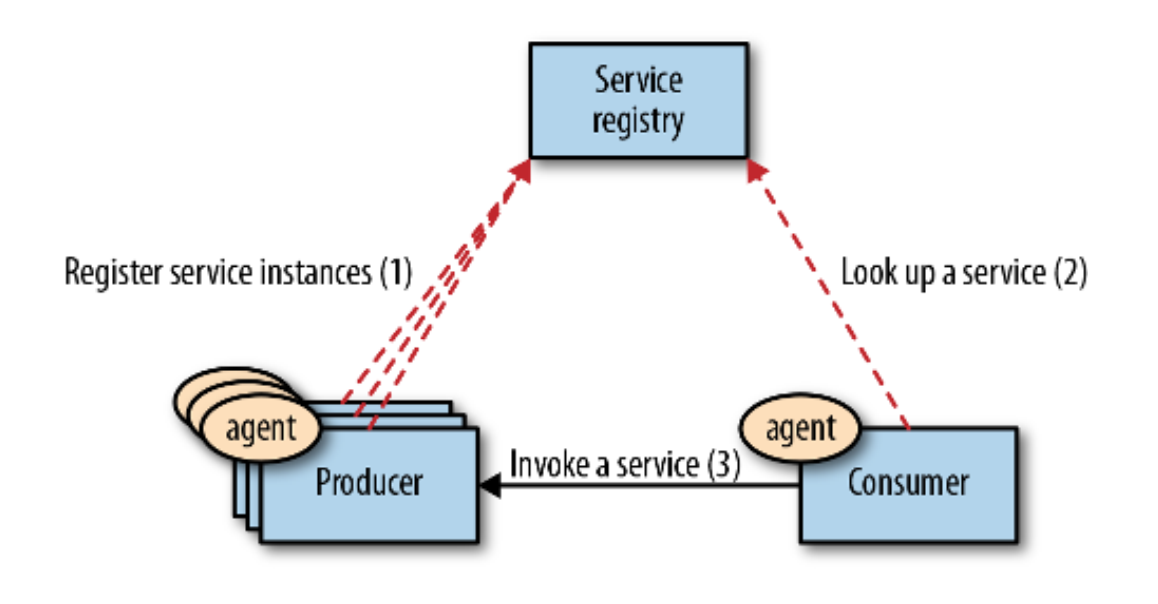

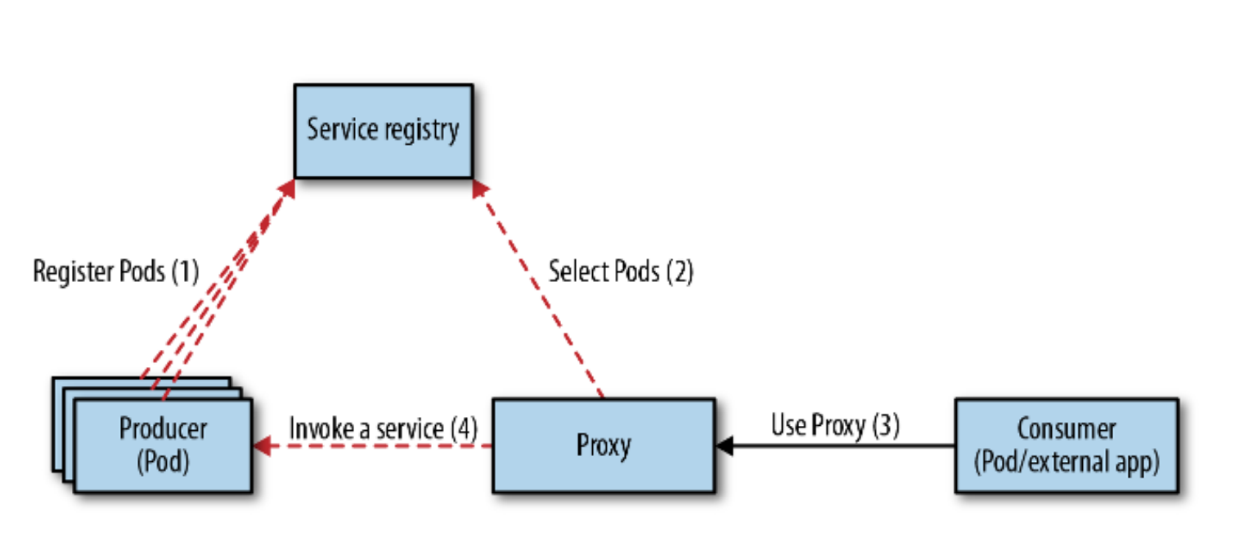

Traditional distributed systems, such as those built on ZooKeeper, often use client-side discovery to realize automatic discovery between services. The client service has a built-in agent that can query the service registry and select a service instance to communicate with. Each server-side service instance reports its status to the service registry. Then, by looking up the information in the service registry, the client service selects a service instance and calls it.

Client Service Discovery by Agent

Kubernetes implements service discovery on the server side. That is, the server Pod reports its service capability to the service registry. The client Pod only needs to be able to access the service registry, obtain the service information from it, and then access the server Pod. The client Pod accesses the same service through a constant virtual IP address, regardless of which Pod provides the service.

Server Discovery By Proxy

The Service resource object implements server-side discovery in Kubernetes. A Service is defined by a Pod selector and a port number. It binds a virtual IP address, also known as the ClusterIP, to a group of Pods. The following is an example:

    apiVersion: v1
    kind: Service
    metadata:
      name: index-helm
      namespace: bss-dev
    spec:
      clusterIP: 172.21.7.94
      ports:
        - name: http
          port: 8081        # The port through which the Service provides services externally.
          protocol: TCP
          targetPort: 8081  # The listening port of the Pod.
      selector:
        app.kubernetes.io/instance: index  # spec.selector binds the Service to the matching Pods.
        app.kubernetes.io/name: helm
      sessionAffinity: None
      type: ClusterIP       # ClusterIP is the default Service type.

Since a ClusterIP is normally allocated by Kubernetes when the Service is created and is not known in advance, how do other service Pods discover this ClusterIP and communicate with it? There are currently two methods: environment variables and DNS queries.

When a Pod is started, the ClusterIP and port of each active Service are automatically set in the Pod as environment variables. The application can then reach these Services through the ClusterIP and port read from its environment.

Environment variables cannot be injected into a Pod after it has started. Therefore, discovery based on environment variables only works for Services that were created before the Pod was started.
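The injected variables follow a naming convention: the Service name is upper-cased, dashes become underscores, and the suffixes _SERVICE_HOST and _SERVICE_PORT are appended. The Python sketch below reproduces that convention; the function name `service_env` is hypothetical, and the Service name, address, and port are taken from the example Service above.

```python
import os


def service_env(service_name: str, cluster_ip: str, port: int) -> dict:
    """Build the environment variables injected for one Service."""
    prefix = service_name.upper().replace("-", "_")
    return {
        f"{prefix}_SERVICE_HOST": cluster_ip,
        f"{prefix}_SERVICE_PORT": str(port),
    }


env = service_env("index-helm", "172.21.7.94", 8081)
print(env)

# Inside a Pod, a client would read the values from its own environment.
# The lookup returns None when the Service was created after the Pod started.
host = os.environ.get("INDEX_HELM_SERVICE_HOST")
```

The `None` case in the last line is exactly the limitation described above: a Pod never sees variables for Services that did not exist when it was started.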

Kubernetes provides a platform-level DNS service that can be configured for all Pods. When a Service resource object is created, the DNS service registers a DNS name for it. The DNS service maintains the mapping between the DNS name of an application service and the ClusterIP of the Service, and resolves requests for that name so that the traffic reaches the corresponding Pods.

If the client service knows the name of the Service and its namespace, it can access the application service Pods directly through the internal domain name service-name.namespace.svc.cluster.local.
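Building the internal domain name is a simple string operation, sketched below in Python. The function name `service_fqdn` is hypothetical, and `cluster.local` is assumed to be the cluster domain, which is the usual default but can be changed by the cluster administrator.

```python
def service_fqdn(service: str, namespace: str, cluster_domain: str = "cluster.local") -> str:
    """Return the internal DNS name of a Service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


name = service_fqdn("index-helm", "bss-dev")
print(name)
# index-helm.bss-dev.svc.cluster.local

# Inside the cluster, a client could then resolve the name with the standard
# library, for example: socket.gethostbyname(name)
```

Unlike the environment variable method, this lookup happens at request time, so it also works for Services created after the client Pod was started.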

Recently, I have been reviewing the basic knowledge of Kubernetes. As the companion of another article, "Understanding Kubernetes from the Perspective of Application Development", this article focuses on understanding Kubernetes from the perspective of resource management. Kubernetes users are typically classified into application developers and cluster administrators. Cluster administrators usually need to know more about Kubernetes in terms of clusters, resources, and performance. Thus, they need to master basic concepts such as Pod scheduling and resource management, storage and network resources, and service and traffic management.

The content of this article can only be considered as general introduction. If you are interested in the in-depth learning of relevant knowledge, check the following articles.

By Liu Chen (Lorraine), working on Fintech, proficient in continuous integration and release. Liu Chen has practical experience in continuous deployment and release of more than 100 applications across all platforms, and is now aspiring to become a Kubernetes developer.

Understanding Kubernetes from the Perspective of Application Development

GitHub Actions + ACK: A Powerful Combination for Cloud-Native DevOps Implementation
