Image recognition and analysis have long been a staple of Alibaba's research and development (R&D) projects and have played an important role in Alibaba's product innovation. However, these applications typically involve heavy workloads with strict service-quality requirements. Current solutions such as GPUs are not able to deliver low latency and high performance at the same time.

To provide a good user experience while applying deep learning, Alibaba's Infrastructure Service Group and the algorithm team from Machine Intelligence Technologies have architected an ultra-low-latency, high-performance deep learning processor (DLP) on FPGA.

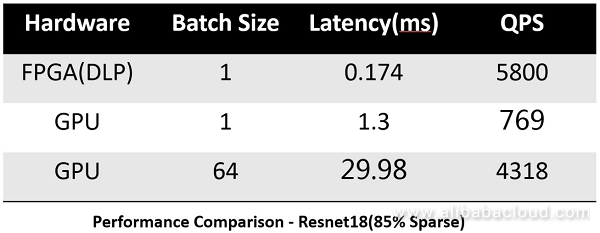

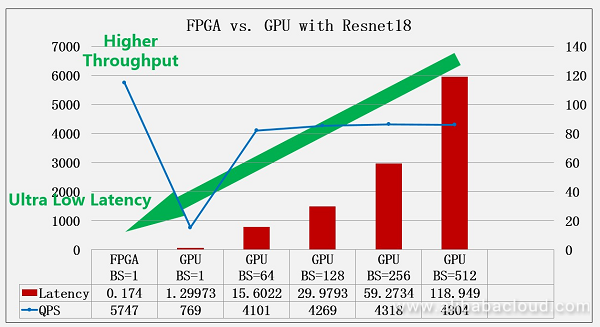

The DLP FPGA supports sparse convolution and low-precision data computing at the same time, and a customized instruction set architecture (ISA) was defined to meet the requirements for flexibility and user experience. Latency test results with ResNet-18 (sparse kernel) show that Alibaba's FPGA has a latency of only 0.174 ms.

In this article, we will briefly discuss how Alibaba and the team from Machine Intelligence Technologies are able to achieve such a feat with the new DLP FPGA.

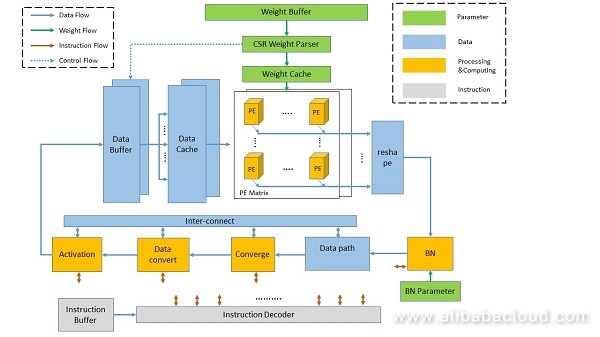

Alibaba's newly developed DLP has four types of modules, classified by function.

The Protocol Engine (PE) in the DLP can support:

This PE also offers over 90% efficiency. Furthermore, the DLP's weight loading supports a CSR decoder and data prefetching.
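To make the weight-loading step more concrete, here is a minimal software sketch of what a CSR decoder does. It assumes that CSR means the standard compressed sparse row format; the article does not describe the actual on-chip layout, so the function names `csr_encode` and `csr_decode` and the sample weights are purely illustrative.

```python
def csr_encode(dense):
    """Compress a dense matrix into CSR arrays (values, column indices, row pointers)."""
    values, col_indices, row_ptr = [], [], [0]
    for row in dense:
        for col, v in enumerate(row):
            if v != 0:
                values.append(v)
                col_indices.append(col)
        # row_ptr[r + 1] marks where row r ends inside `values`
        row_ptr.append(len(values))
    return values, col_indices, row_ptr


def csr_decode(values, col_indices, row_ptr, num_cols):
    """Expand CSR arrays back into dense rows, filling pruned weights with zeros."""
    dense = []
    for row in range(len(row_ptr) - 1):
        out = [0] * num_cols
        for k in range(row_ptr[row], row_ptr[row + 1]):
            out[col_indices[k]] = values[k]
        dense.append(out)
    return dense


# Hypothetical sparse weight matrix: only 3 of 12 entries are non-zero.
weights = [
    [0, 3, 0, 0],
    [0, 0, 0, 0],
    [5, 0, 0, -2],
]
values, col_indices, row_ptr = csr_encode(weights)
decoded = csr_decode(values, col_indices, row_ptr, num_cols=4)
```

Only the non-zero weights and their positions are stored, which is why a sparse kernel reduces the amount of weight data that has to be loaded.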

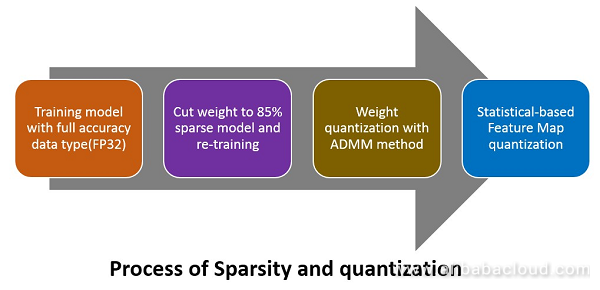

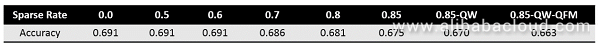

Re-training is needed to develop a high-accuracy model. Four main steps, illustrated below, produce both sparse weights and low-precision feature-map data.

We used an effective method (arXiv:1707.09870) to train the ResNet-18 model to be sparse and low precision. The key component of our method is discretization. We focused on compressing and accelerating deep models whose network weights are represented by a very small number of bits, referred to as extremely low-bit neural networks. We then modeled this as a discretely constrained optimization problem.

Borrowing the idea from the Alternating Direction Method of Multipliers (ADMM), we decoupled the continuous parameters from the discrete constraints of the network and cast the original hard problem into several subproblems. We proposed solving these subproblems with extragradient and iterative quantization algorithms, which leads to considerably faster convergence than conventional optimization methods.
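The decoupling described above can be sketched on a toy problem. The example below is an illustration under simplifying assumptions, not the training procedure used for the DLP: the loss is a simple quadratic distance to a hypothetical `target` vector, the discrete set is a fixed ternary grid `LEVELS` with no learned scale, and plain gradient descent stands in for the extragradient and iterative quantization steps mentioned in the text.

```python
LEVELS = [-1.0, 0.0, 1.0]  # assumed ternary weight grid with a fixed scale of 1


def project(x):
    """Project a real value onto the nearest allowed discrete level."""
    return min(LEVELS, key=lambda q: abs(q - x))


def admm_quantize(target, rho=1.0, outer_iters=20, inner_iters=50, lr=0.1):
    """Toy ADMM loop: continuous weights w, discrete copy g, scaled dual variable lam."""
    n = len(target)
    w = list(target)
    g = [project(x) for x in w]
    lam = [0.0] * n
    for _ in range(outer_iters):
        # W-step: minimize loss(w) + rho/2 * (w - g + lam)^2 over continuous w.
        # The loss here is 0.5 * (w - target)^2, a stand-in for a network loss.
        for _ in range(inner_iters):
            for i in range(n):
                grad = (w[i] - target[i]) + rho * (w[i] - g[i] + lam[i])
                w[i] -= lr * grad
        # G-step: enforce the discrete constraint by projection.
        g = [project(w[i] + lam[i]) for i in range(n)]
        # Dual update: accumulate the remaining gap between w and g.
        lam = [lam[i] + w[i] - g[i] for i in range(n)]
    return g


quantized = admm_quantize([0.9, -0.2, 0.4, -1.3])
```

On this toy loss the result coincides with simple rounding to the nearest level; the value of the split shows up with a real network loss, where the W-step can adapt the remaining continuous weights to compensate for the discretized ones.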

Extensive experiments on image recognition and object detection verify that the proposed algorithm is more effective than state-of-the-art approaches for extremely low-bit neural networks.

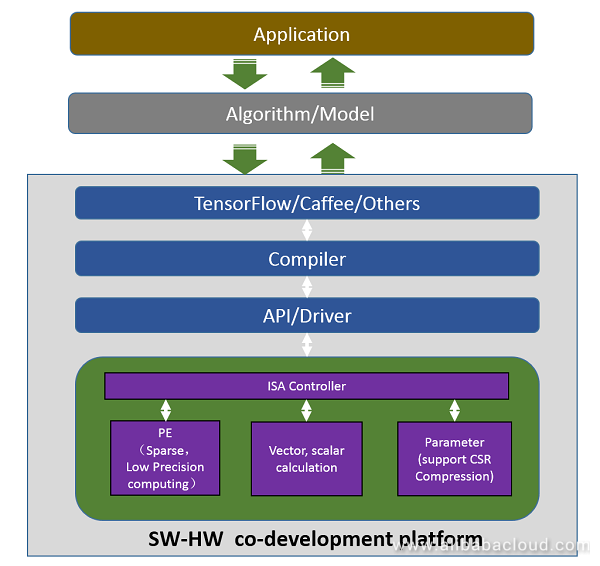

As mentioned previously, low latency alone is not enough for most online services and usage scenarios, because the algorithm model changes frequently. The FPGA development cycle is very long; a customized design usually takes a few weeks or months to finish. To solve this challenge, we designed an instruction set architecture (ISA) and a compiler that reduce model upgrade time to only a few minutes.
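The idea behind an ISA plus compiler is that a model change only produces a new instruction stream, while the FPGA design itself stays the same. The sketch below illustrates that idea only. The article does not publish the real instruction set, so the opcodes (`LOAD_WEIGHTS`, `CONV`, `RELU`, `POOL`, `HALT`), the `Instr` type, and the layer description format are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Instr:
    """One instruction in a hypothetical DLP program."""
    opcode: str
    args: tuple


def compile_model(layers):
    """Lower a list of layer descriptions into a flat instruction stream."""
    program = []
    for layer in layers:
        kind = layer["type"]
        if kind == "conv":
            program.append(Instr("LOAD_WEIGHTS", (layer["name"],)))
            program.append(Instr("CONV", (layer["kernel"], layer["stride"])))
        elif kind == "relu":
            program.append(Instr("RELU", ()))
        elif kind == "pool":
            program.append(Instr("POOL", (layer["kernel"],)))
        else:
            raise ValueError(f"unsupported layer type: {kind}")
    program.append(Instr("HALT", ()))
    return program


# Hypothetical model description; editing it changes the program, not the hardware.
model = [
    {"type": "conv", "name": "conv1", "kernel": 3, "stride": 1},
    {"type": "relu"},
    {"type": "pool", "kernel": 2},
]
program = compile_model(model)
opcodes = [instr.opcode for instr in program]
```

Under this scheme, upgrading a model means recompiling its description and reloading the program, which takes far less time than rebuilding an FPGA design.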

The SW-HW co-development platform consists of the following items:

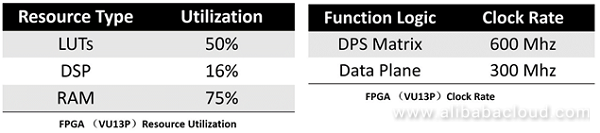

The DLP was implemented on an Alibaba-designed FPGA card, which has PCIe and DDR4 memory. Combined with this FPGA card, the DLP can benefit application scenarios such as online image search on Alibaba.

FPGA test results with ResNet-18 show that our design achieves ultra-low latency while maintaining very high performance, with chip power below 70 W.
