By Yang Hang (Hangxian) and Publisher Yao Xinlu

Recently, Jeff Zhang, President of Alibaba Cloud Intelligence, unveiled the Cloud Infrastructure Processing Unit (CIPU) at Alibaba Cloud Summit 2022. Unlike the traditional CPU-centric architecture design, CIPU is defined as the control and core performance acceleration center of cloud computing.

With the new architecture, CIPU transfers the compute, storage, and network resources of data centers to the cloud and provides hardware acceleration. It is connected to Alibaba Cloud's Apsara system, turning millions of servers around the world into one computer.

Throughout the history of computing, there have been many advances in technology that significantly improved our lives, such as the invention of the first computer. So, what values will the Alibaba Cloud Apsara operating system and CIPU deliver in the cloud computing era?

This article provides a detailed explanation about Alibaba Cloud CIPU and offers answers to the following questions: What is CIPU? What problems does CIPU mainly focus on? What is the background of the development of CIPU and what are the prospects of CIPU?

Cloud computing has evolved significantly since its inception in 2006. And now, we are once again at the turning point of the cloud computing industry. With a host of innovative technologies arising, what does the future hold for cloud computing? What kind of cloud services do we expect to see in the coming years?

To answer these questions, we first need to take a step back and understand the fundamentals of cloud computing.

Similar to public resources and social infrastructure such as water and electricity, the core characteristics of cloud computing are elasticity and multi-tenancy.

In a broad sense, elasticity keeps IT capabilities in sync with business requirements, providing seemingly "unlimited capacity" to users. More specifically, elasticity gives users unparalleled flexibility to meet the demands of a wide variety of scenarios.

IT computing power has become the backbone for many industries. It goes without saying that the shortage of computing power hinders business development. However, the construction of computing power cannot be completed in a short period of time. To build computing capabilities, you must construct such facilities, including building power supply and water supply facilities, installing networks and connecting the data center with the Internet, purchasing, customizing, and deploying servers, bringing the data center online, and performing O&M. Moreover, different computing power requires different data center deployment strategies, such as single data center, multiple data centers, geo-redundant data centers, and intercontinental data centers. After setting up your data center, you must recruit and train professionals to make sure that the data center runs securely and stably, and enable disaster recovery and backup capabilities. All of the efforts above are time-consuming, labor-intensive, and costly.

That was until the emergence of cloud computing, which, through its elasticity, made the acquisition of computing power simple and easy.

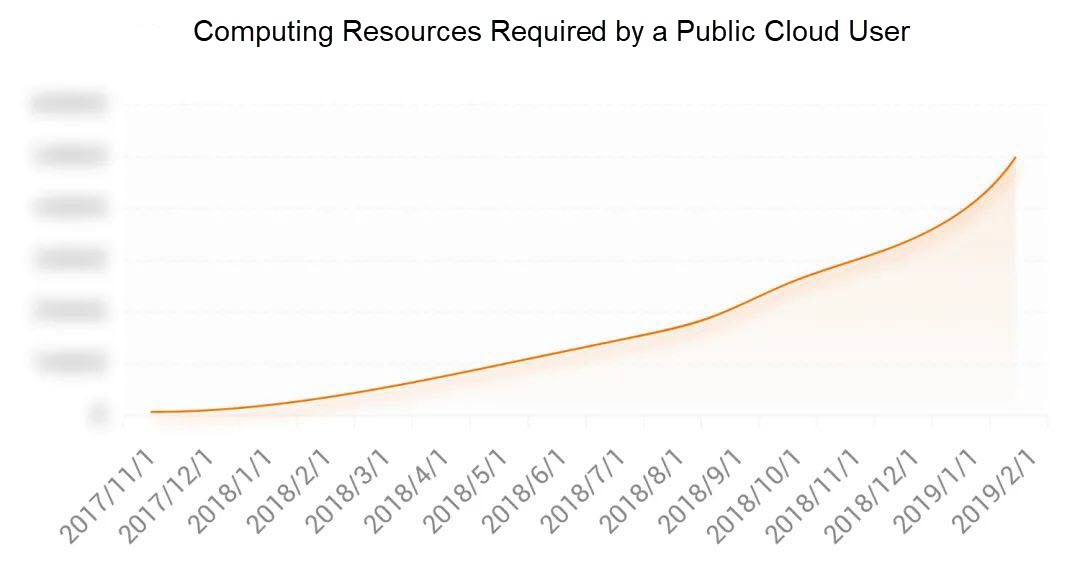

The figure above depicts the growth of the computing power purchased by a public cloud user whose business grew rapidly. The demand for computing power exploded from zero to millions of cores in just 15 months. The ample supply of elastic computing power has boosted the user's business development significantly.

You may wonder why the multi-tenancy feature is of the same importance as elasticity. Strictly speaking, multi-tenancy is one of the prerequisites for achieving extreme elasticity and IT resource efficiency.

It cannot be denied that the private cloud helps enterprises to flexibly and efficiently use enterprise IT resources to some extent. However, multi-tenancy, one of the core features of public cloud, makes public cloud profoundly different from the private cloud.

Giving an accurate definition of elasticity and multi-tenancy in cloud computing paves the way for our discussion on how to realize both features at a technical level and how to enable the evolution of cloud computing technology from the perspectives of security, stability, performance, and cost-efficiency.

Infrastructure as a service (IaaS) is a type of cloud computing service that offers essential compute, storage, and network resources on demand. Platform as a service (PaaS) is a type of cloud computing service that allows you to use platforms such as databases, big data, AI, cloud-native Kubernetes, and middleware as services. Software as a service (SaaS) allows users to connect to and use cloud-based apps over the Internet.

Traditionally, cloud computing mainly refers to IaaS, while PaaS and SaaS are cloud-native services on the IaaS cloud platform. CIPU mainly resides at the IaaS layer, and therefore, the demands for CIPU of PaaS and SaaS are not included in this article.

To realize the flexible and on-demand supply of IaaS IT resources such as the compute, storage, and network resources, the features including resource pooling, multi-tenancy of services, flexible supply, and automatic O&M must be implemented. The core technology is virtualization.

It is clear that virtualization technologies and IaaS cloud computing services reinforce each other. IaaS cloud services uncover the business value of virtualization technologies, making them the cornerstone of IaaS cloud services. At the same time, virtualization raises resource utilization, and that gain is what makes IaaS cloud services commercially viable.

In 2003, the Xen virtualization technology was launched. In 2005, Intel began to introduce virtualization in Xeon processors, added new instruction sets, and changed the x86 architecture, making deployment of virtualization on a large scale possible. In 2007, the KVM virtualization technology was initiated. The 20-year evolution of the IaaS virtualization technology has always been motivated by four business objectives: better security, greater stability, higher performance, and lower cost.

The preceding history shows that the Achilles' heel of IaaS lies in the pain points of virtualization technologies:

1. Cost. In the Xen era, the Xen hypervisor's DOM0 consumed half of the XEON CPU resources, so only half of the CPU resources could be sold. The computing tax of virtualization was extremely heavy.

2. Performance. In the Xen era, the latency of core network virtualization reached 150 μs, and network latency and jitter were visibly large. The network forwarding rate, measured in packets per second (PPS), became the key bottleneck for core enterprise businesses. The Xen virtualization architecture had insurmountable performance bottlenecks in storage and network I/O virtualization.

3. Security. QEMU contains a large amount of device emulation code that is meaningless for IaaS cloud computing. This code not only causes additional resource overhead, but also makes it impossible to shrink the attack surface.

As we all know, one of the foundations for the establishment of the public cloud is data security in a multi-tenancy environment. Continuously improving the trust capability of hardware and the secure encryption of data movement among compute, storage, network, and other subsystems are quite challenging under Xen/KVM virtualization.

4. Stability. The improvement of the stability of cloud computing relies on two core technologies: the underlying chip white box and the big data O&M. The underlying chip white box outputs more RAS data.

To further improve the stability of the virtualization system, it is necessary to further deepen the implementation details of compute, network and storage/memory chips to obtain more data that affects the stability of the system.

5. Elastic bare metal support. Secure containers, such as Kata and Firecracker, and multi-card GPU servers that use PCIe switch P2P introduce virtualization overhead. To eliminate as much of the overhead of compute and memory virtualization as possible, and to support VMware/OpenStack, Elastic Bare Metal is required. However, the Xen/KVM-based virtualization architecture does not support Elastic Bare Metal.

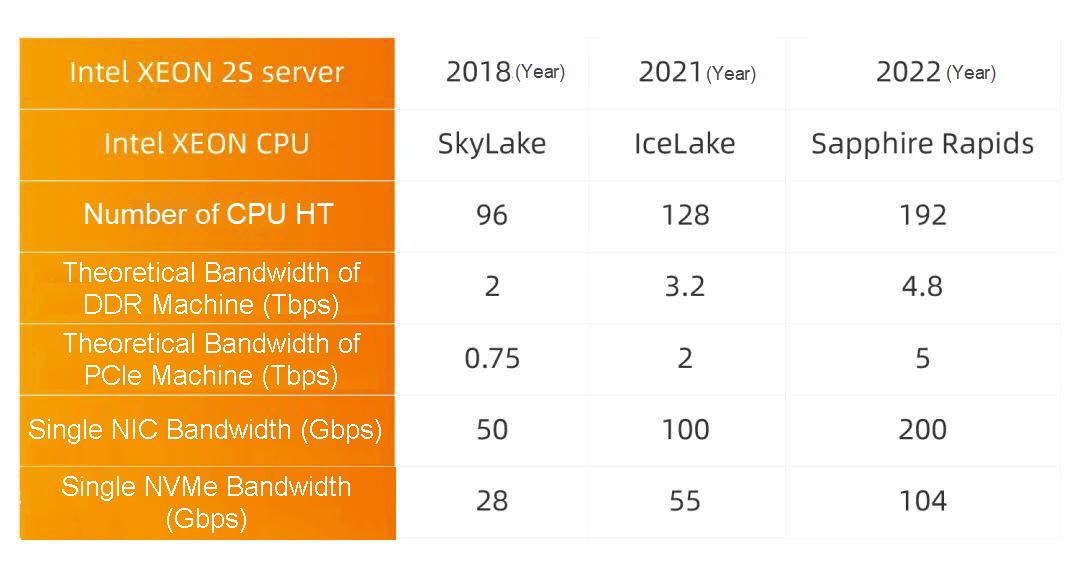

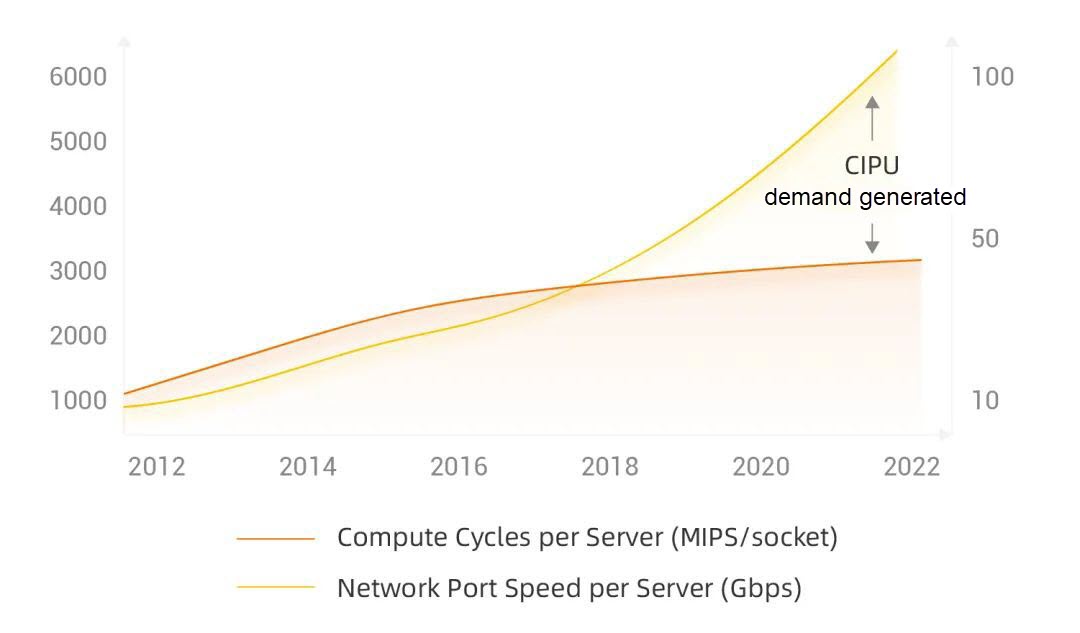

6. The gap between I/O and computing power. The following analysis uses dual-socket Intel XEON servers as an example to examine the growth of storage and network I/O and of XEON CPU PCIe bandwidth, and compares it with the growth of CPU computing power:

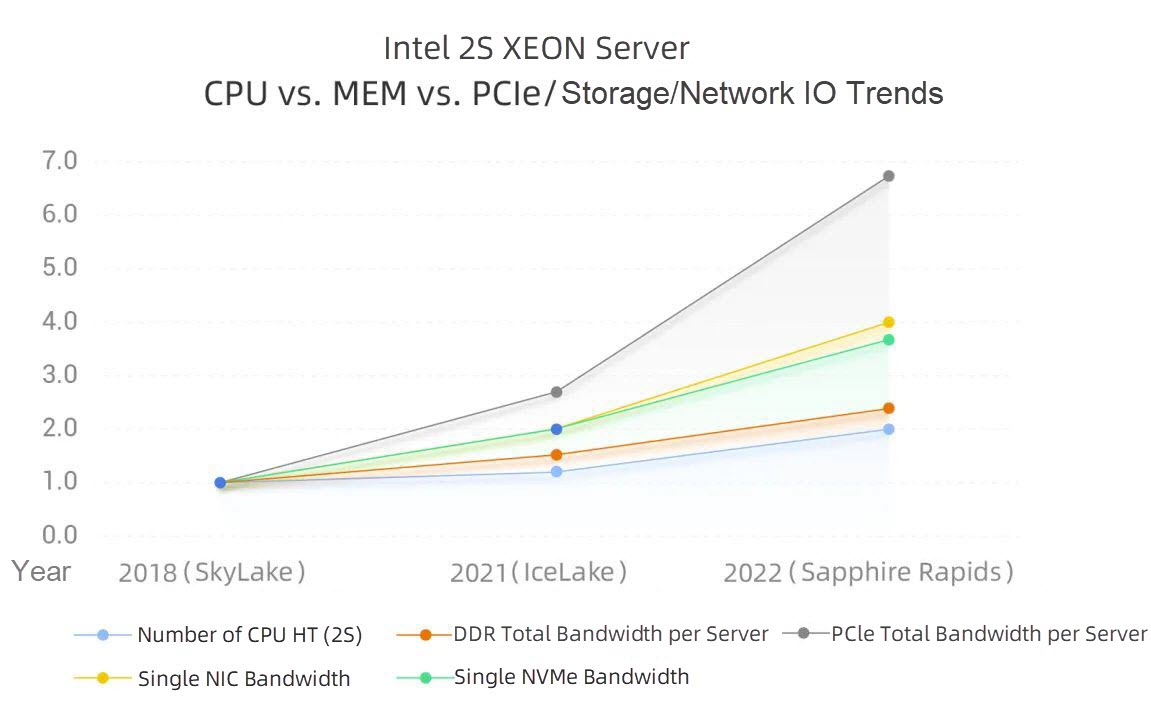

Furthermore, the development trend of each technical item is compared horizontally, using the indicators of the 2018 SkyLake 2S server as the baseline, such as the number of CPU HTs and the theoretical DDR memory bandwidth of the whole server.

Taking the number of CPU HT as an example, 96HT SkyLake is set to baseline 1, IceLake 128HT/96HT = 1.3, and Sapphire Rapids 192HT/96HT = 2.0. Therefore, the following Intel 2S XEON server CPU vs. MEM vs. PCIe /storage/network I/O development trends can be obtained.
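The normalization described above can be reproduced in a few lines of Python. This is only an illustrative sketch: the hardware thread counts are the ones quoted in the text, while the helper name `normalize_to_baseline` and the dictionary layout are assumptions made for this example.

```python
# Hardware thread (HT) counts of dual-socket servers, as quoted in the text.
HT_COUNTS = {
    "SkyLake": 96,
    "IceLake": 128,
    "Sapphire Rapids": 192,
}


def normalize_to_baseline(values, baseline_key):
    """Express every value as a multiple of the baseline entry.

    Hypothetical helper for illustration; results are rounded to one
    decimal place, matching the precision used in the article.
    """
    baseline = values[baseline_key]
    return {name: round(value / baseline, 1) for name, value in values.items()}


ratios = normalize_to_baseline(HT_COUNTS, "SkyLake")
for name, ratio in ratios.items():
    print(f"{name}: {ratio}")
```

The same helper could be applied to any other indicator (memory bandwidth, PCIe bandwidth, and so on) once its per-generation values are known, so that all trends share the 2018 SkyLake baseline of 1.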

From the comparison of the four-year data from 2018 to 2022 in the preceding figure, we can draw the following conclusions:

Anyone with a background in virtualization technologies should feel uneasy after seeing the above analysis. After computing and memory hardware virtualization technologies such as Intel VT were widely deployed, many of the overhead issues of computing and memory virtualization, including isolation and jitter, have been solved. However, if the paravirtualization technology continues to be used while the above-mentioned PCIe/NIC/NVMe/AEP and other I/O technologies are developing rapidly, the challenges on memory copy, VM Exit, latency, and other aspects will become more and more prominent.

The preceding content explained the technical problems and challenges faced by conventional IaaS cloud computing. In this section, we will discuss the development of the CIPU technology by answering the question, where did CIPU come from?

After further analysis of the pain points in virtualization technologies, you may notice that they are more or less related to the cost, security, and performance of the I/O virtualization subsystems. Therefore, any effective technical solution begins with the I/O virtualization subsystems.

The 20-year history of technological development also confirms the above derivation. This article selects two key technologies to explain where CIPU originated from:

There is a huge gap between the demands and technologies in I/O virtualization subsystems. Many organizations are focused on solving this issue, leading to progress in the following areas: direct memory access (DMA) and interrupt request (IRQ) handling under virtualization, and the corresponding follow-up work by the PCIe standardization organization.

The specific technical implementation methods, including IOMMU address translation, interrupt remapping, posted interrupt, PCIe SR-IOV/MR-IOV, and Scalable IOV, are not discussed in this article.

The only purpose of listing the Intel VT-d I/O hardware virtualization technology is to make clear that the maturity of CPU I/O hardware virtualization is the prerequisite for the development of the CIPU technology.

Another design idea of CIPU comes from the field of communication (especially digital communication).

Those familiar with data communications will already know chips such as Ethernet switch chips, router chips, and fabric chips. The Network Processor Unit (NPU) is a key technology in the field of digital communication. Note that NPU in this article does not mean the AI Neural Processing Unit.

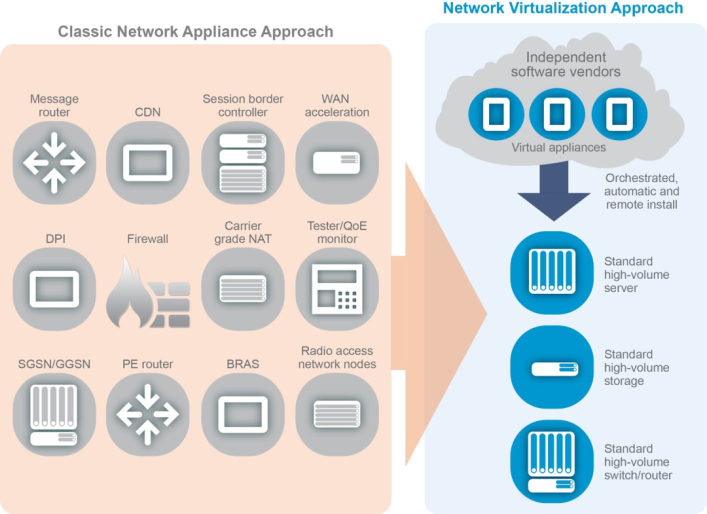

Around 2012, network function virtualization (NFV) became the key research and development direction for the wireless core network and the broadband remote access server (BRAS) in the communication field.

NFV achieves network element features in communication by using the IT standardization and virtualization infrastructure, such as the standard x86 servers, standard Ethernet switch networks, and standard IT storage. NFV gets rid of the traditional communication silo and vertical non-standard tightly-coupled software and hardware system, helping network operators reduce costs and increase efficiency, and improving business agility.

Image source: ETSI NFV Problem Statement and Solution Vision

However, NFV runs on the infrastructure of IT standardization and virtualization, and will definitely encounter quite a few technical difficulties.

One of these technical difficulties is that NFV, as a network service, has very different technical requirements on network virtualization compared with typical online transaction/offline big data services in the IT field. NFV has more stringent requirements on high bandwidth throughput (the default line speed and bandwidth), high PPS processing capability, latency and jitter.

At this point, the traditional NPU came into view as a way to meet the technical requirements of SDN/NFV. The NPU is placed on the NIC, and a NIC equipped with an NPU is called a SmartNIC.

As you can see, bottlenecks of network virtualization are encountered when users want to deploy the communication NFV businesses to a standardized and virtualized IT infrastructure. At the same time, IT domain public cloud virtualization technology encountered I/O virtualization technology bottlenecks.

The above needs converged unexpectedly around 2012. At this point, traditional communication technologies such as network NPU and SmartNIC have begun to enter the vision of IT domain.

Up to now, we can see that SmartNIC, DPU, and IPU are all used in solving the problem of cloud computing I/O virtualization. One of the reasons is that they are deeply related with each other. At the same time, the names are confusing, which comes from the unfamiliarity of engineers that cross over the communication field to the IT field and the fact that some major chip suppliers may not be familiar with cloud business needs and scenarios.

Based on the preceding technologies, this chapter describes the definition and positioning of CIPU.

CIPU, or Cloud Infrastructure Processing Unit, is a specialized service processor that brings IDC compute, storage, and network infrastructure into the cloud and provides hardware acceleration for it.

Once compute, storage, and network resources are connected to a CIPU, they are transformed into the cloud for virtual computing. These resources can be scheduled by a cloud platform to provide users with clusters that have high-quality and hardware accelerated elastic cloud computing power.

The architecture of a CIPU has the following characteristics:

High-performance I/O hardware device virtualization can be realized based on the VT-d technology. In the meantime, it is important that the device models be compatible with the mainstream OS ecosystem. Therefore, industry-standard I/O device models such as virtio-net, virtio-blk, and NVMe must be used.

It is also important to optimize the PCIe protocol layers for the sake of high I/O device performance. The key to I/O hardware device virtualization is reducing the traffic of PCIe transaction layer packets (TLPs) and the number of interrupts delivered to the guest OS while still meeting latency requirements, so that hardware queue resources can be pooled flexibly and new I/O services can be fully programmable or flexibly configurable.
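One widely used way to trade interrupt count against latency is interrupt coalescing: completions are batched, and a single interrupt is raised when either a batch is full or a timer expires. The sketch below is a generic simulation of that idea, not a description of how CIPU implements it; the function name `coalesce_interrupts` and the parameters `max_batch` and `max_delay_us` are assumptions made for this example.

```python
def coalesce_interrupts(completion_times_us, max_batch, max_delay_us):
    """Simulate simple interrupt coalescing (hypothetical model).

    An interrupt fires when `max_batch` completions are pending, or when
    `max_delay_us` has elapsed since the first pending completion.
    Returns a list of (fire_time_us, completions_reported) tuples.
    """
    interrupts = []
    batch_start = None  # arrival time of the first pending completion
    pending = 0

    for t in sorted(completion_times_us):
        # The timer of the pending batch expired before this completion.
        if pending and t - batch_start >= max_delay_us:
            interrupts.append((batch_start + max_delay_us, pending))
            pending = 0
        if pending == 0:
            batch_start = t
        pending += 1
        # The batch is full: raise the interrupt immediately.
        if pending == max_batch:
            interrupts.append((t, pending))
            pending = 0

    # Flush whatever is left when its timer expires.
    if pending:
        interrupts.append((batch_start + max_delay_us, pending))
    return interrupts


completions = [0, 1, 2, 3, 50]
print(coalesce_interrupts(completions, max_batch=1, max_delay_us=10))
print(coalesce_interrupts(completions, max_batch=4, max_delay_us=10))
```

With `max_batch=1` every completion raises its own interrupt (five in total), whereas `max_batch=4` reports the same five completions with two interrupts, at the cost of delaying a completion by up to `max_delay_us`. That is the latency-versus-interrupt-count balance the paragraph above refers to.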

The preceding chapters briefly analyzed the pain points of network virtualization. Here we discuss the business requirements of network virtualization:

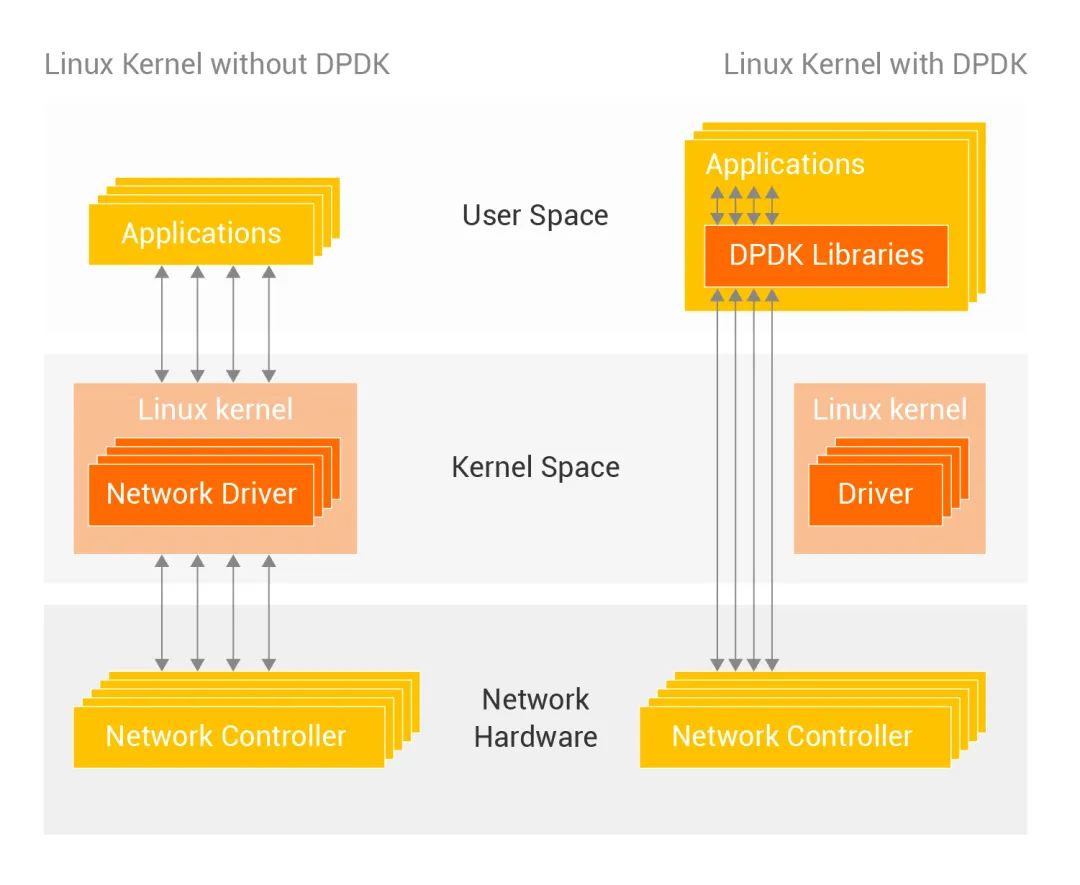

At the implementation level, we face the following problems if we want to upgrade from kernel-mode network virtualization in the Xen era to user-mode network virtualization based on DPDK vSwitch with Data Plane Development Kit (DPDK) under the KVM architecture:

1. Increasingly widening gap between network bandwidth and CPU processing capability

Source: Xilinx

2. Bottleneck of optimization for DPDK based pure software network forwarding performance

Further analysis of the above problems reveals the following fundamental difficulties:

The requirement for hardware-based forwarding acceleration emerges. It can be implemented in the following technical regards:

Forwarding technologies based on configurable ASIC such as Intel FXP have the highest performance per watt and lowest forwarding latency, but lack flexibility.

Many-core NPU based technologies have forwarding flexibility to a degree, but are not comparable to forwarding technologies based on configurable ASIC in terms of power-performance-area (PPA) and forwarding latency.

Forwarding technologies achieved through FPGA reconfigurability logic have a big advantage in time to market, but face challenges in forwarding 400 Gbit/s and 800 Gbit/s services.

All in all, there is a tradeoff at the technical implementation level. For IPU/DPU chips, we tend to sacrifice certain PPA and forwarding latency to obtain commonality because of the need to cover more customers. However, with CIPU, more in-depth vertical customization can be performed based on the vendor's own forwarding services, thus obtaining more extreme PPA and minimizing forwarding latency.

To achieve nine 9's data durability for public cloud storage and meet the elastic requirements on compute and storage, decoupled storage and compute is inevitable. Alibaba Cloud Elastic Block Storage (EBS) must be able to access distributed storage clusters with high performance and low latency.
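To give a feel for what "nine 9's" means, the sketch below converts a number of nines into a durability figure and an expected loss count. It assumes, purely for illustration, that durability is quoted per stored object per year and that losses are independent; the article does not state either, and the helper names are hypothetical.

```python
from fractions import Fraction


def durability_from_nines(nines):
    """Durability as an exact fraction, e.g. 9 nines -> 0.999999999."""
    return 1 - Fraction(1, 10 ** nines)


def expected_annual_losses(object_count, nines):
    """Expected number of objects lost per year.

    Assumption (not from the article): durability applies per object
    per year, and object losses are independent.
    """
    return object_count * (1 - durability_from_nines(nines))


nine_nines = durability_from_nines(9)
print(float(nine_nines))
print(expected_annual_losses(10 ** 9, 9))
```

Under these assumptions, a service storing one billion objects at nine 9's of durability would expect to lose about one object per year, which illustrates why a target this strict generally relies on redundancy across a distributed storage cluster rather than on local disks.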

Specifically,

The storage protocol between the computing initiator and the distributed storage target is highly vertically optimized and customized by cloud vendors, which is the fundamental basis of how CIPU realizes hardware acceleration for EBS distributed storage access.

Although local storage does not have nine 9's data durability and reliability like EBS, it has advantages in terms of low cost, high performance, and low latency. Local storage is required in business scenarios such as big data and those that involve computing cache.

After local disks are virtualized, the bandwidth, IOPS, and latency are not attenuated, and at the same time, the capabilities of one-to-many virtualization, QoS isolation, and O&M are obtained. This is the core competitiveness of hardware acceleration for local storage virtualization.

Remote direct memory access (RDMA) networks play an increasingly important role in HPC, AI, big data, database, storage, and other data centric services. In fact, RDMA networks have become crucial to what makes a data centric service different. Implementing inclusive RDMA capabilities on the public cloud is a key capability of CIPU.

Specifically,

At the implementation level of elastic RDMA, the first step is to overcome the low-latency hardware forwarding of VPC. With the abandonment of PFC and lossless networks, the deep vertical customization and optimization of transmission protocols and congestion control algorithms will become inevitable options for CIPU.

From users' point of view of cloud computing, security matters the most. Without security, all other capabilities are just empty talk. Therefore, to continuously improve the competitiveness of cloud services, cloud vendors must continuously strengthen hardware trust technologies, perform full encryption of VPC east-west traffic and full encryption of EBS and local-disk virtualization data, and develop hardware-based enclave technologies.

The core of cloud computing is servitization, which allows users to use IT resources without O&M. The core of the IaaS elastic computing O&M is the capability of hot upgrades of all components without losses and the capability of hot migration of virtual machines without losses. Therefore, a large amount of software and hardware co-design work between CIPU and the cloud platform base is involved.

The following figure shows the eight key business characteristics that must be implemented by elastic bare metal services at the definition level.

In addition, to achieve greater elastic efficiency, elastic cloud computing inevitably requires scheduling of compute resources such as elastic bare metal instances, virtual machines, and secure containers in a single resource pool.

Considering the huge differences between general computing and AI computing in terms of network, storage, and computing power, CIPU must have the pooling capability. The CIPU pooling technology helps general computing significantly improve the utilization of CIPU resources, which strengthens cost competitiveness. In the meantime, the needs of high-bandwidth services such as AI can be met within a single CIPU architecture.

The features of computing virtualization and memory virtualization are enhanced. Cloud vendors have defined many core requirements for CIPU.

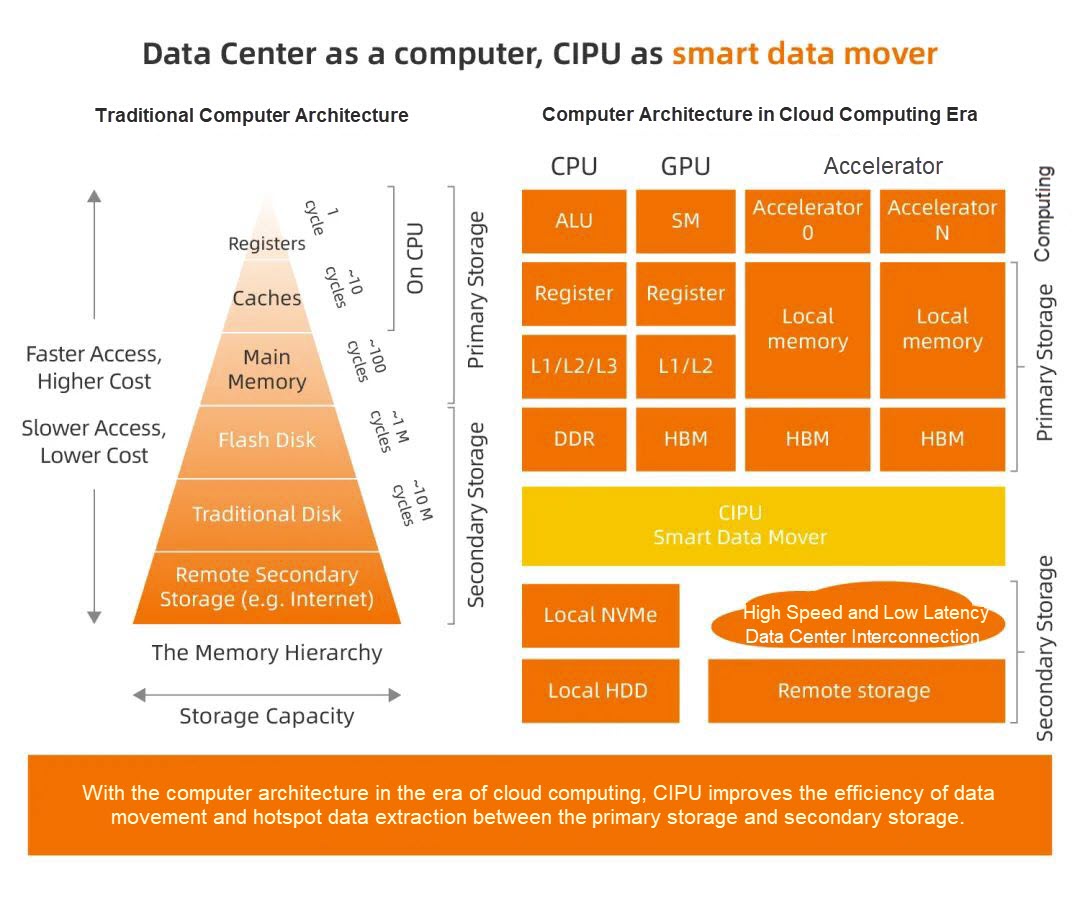

The previous chapter provided a detailed definition of CIPU. In this chapter, we will further analyze the computing architecture of CIPU from a theoretical perspective.

We can get an insight into the nature of CIPU and find out the technical path of next-stage engineering practices only when we look at computer engineering practices from the perspective of computer science.

Earlier, we came to a conclusion that the bandwidth of a single NIC, including the Ethernet switching network connected to the NIC, has been increased by 4 times, the bandwidth of a single NVMe has been increased by 3.7 times, and the bandwidth of the whole PCIe has been increased by 6.7 times. It can be seen that the gap between I/O capabilities of network/storage/PCIe and the computing power of the Intel XEON CPU is continuously increasing.
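The widening gap can be made concrete by dividing each I/O growth factor by the CPU growth factor. The sketch below uses the figures quoted in this article (4x, 3.7x, and 6.7x for I/O, and the 2.0x hardware thread growth from SkyLake to Sapphire Rapids mentioned earlier). Treating the thread count as a stand-in for CPU computing power is a simplifying assumption, and the function name `io_to_cpu_gap` is hypothetical.

```python
# Growth factors quoted in the article.
CPU_GROWTH = 2.0  # hardware threads: 192 HT / 96 HT (assumed proxy for compute)
IO_GROWTH = {
    "NIC bandwidth": 4.0,
    "NVMe bandwidth": 3.7,
    "PCIe bandwidth": 6.7,
}


def io_to_cpu_gap(io_growth, cpu_growth):
    """Return how much faster each I/O indicator grew than the CPU."""
    return {name: round(growth / cpu_growth, 2) for name, growth in io_growth.items()}


gaps = io_to_cpu_gap(IO_GROWTH, CPU_GROWTH)
for name, gap in gaps.items():
    print(f"{name} grew {gap}x faster than CPU thread count")
```

Every ratio is well above 1, meaning that each CPU thread has to keep up with roughly two to three times more I/O than before under this simplified model.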

You may immediately jump to the conclusion that CIPU hardware acceleration is brought by offloading of the computing power. But it's not that simple.

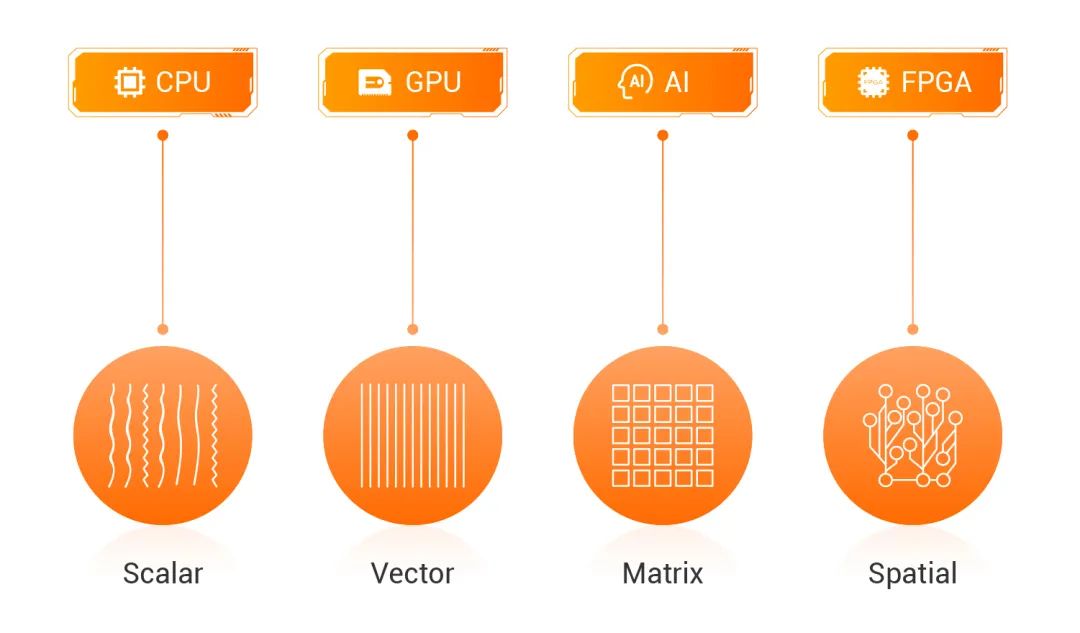

XEON computing power can be simplified as: computing processing capabilities such as arithmetic logic units (ALUs), data hierarchical cache capabilities, and memory access capabilities. For general computing (scalar computing), XEON's superscalar computing capability is sufficient.

It is unrealistic for CIPU to complete the offloading of XEON ALU computing power and GPU stream processors in general scalar computing and AI vector computing. As shown in the following figure, Intel precisely defines the computing power feature of different workloads and the chip that best matches.

Source: Intel

So the question arises: For the socket of CIPU, what are the common characteristics of the most suitable service workloads?

Through an in-depth analysis of the 10 features described in the preceding chapter, we can see their common characteristics: In the process of data flow (movement), workloads need to go through deep vertical software and hardware co-design, so that data movement such as off-chip can be reduced as much as possible, in order to improve computing efficiency.

Therefore, from the perspective of computer architecture, CIPU's main work is to optimize the access efficiency of data hierarchical cache, memory, and storage between and within cloud computing servers.

At this point, I would like to summarize this section with a quote from Nvidia's chief computer scientist Bill Dally: "Locality is efficiency. Efficiency is power. Power is performance. Performance is king."

If CIPU hardware acceleration is not only about computing power offloading, then what is it?

The short answer is, CIPU is inline heterogeneous computing.

Nvidia/Mellanox has been advocating in-network computing for many years. What is the relationship between CIPU inline heterogeneous computing and in-network computing? In the storage field, there are also concepts like computational storage, in-storage computing, and near-data computing. What is the relationship between these concepts and CIPU inline heterogeneous computing?

The answer is simple, CIPU inline heterogeneous computing = In-network computing + In-storage computing.

The concept of data processing unit (DPU) may have originated from Fungible.

After Nvidia acquired Mellanox, the CEO of Nvidia, Jensen Huang (Huang Renxun), made a bold statement on the industry trend: "The future of data centers will be a tripartite confrontation of CPUs, GPUs, and DPUs, which will be a momentum for Nvidia's BlueField DPUs."

There has been a round of investment boom for DPU/IPU technologies. However, my judgment is that the socket must be based on the business requirements of the cloud platform base (CloudOS), so that the deep software and hardware co-design of CloudOS + CIPU can be completed.

It is only cloud vendors who can maximize the value of the socket.

In the field of IaaS, cloud vendors pursue northbound interface standardization, zero code change for IaaS, and compatibility with OSs and application ecosystems, and at the same time, aim to secure the foundation and achieve deep vertical integration of software and hardware. The technical logic behind this is "software defined hardware acceleration".

Alibaba Cloud has developed the Apsara operating system and a variety of data center core components, accumulating a deep technical background. Based on the cloud OS's basic modules, a deep and vertical integration of software and hardware, along with the launch of CIPU, are the only way for Alibaba Cloud to achieve the best computing efficiency and capabilities.

It is also worth mentioning that the business understanding and knowledge accumulated through the long-term, large-scale R&D and operation of the Apsara operating system, as well as the vertically complete R&D team built in this process, are the prerequisites for making a successful and meaningful CIPU. Chips and software are merely the implementation of that solidified knowledge.
