AnalyticDB for PostgreSQL, offered by Alibaba Cloud, combines a high-performance vector database engine with the power of PostgreSQL. It lets developers store, process, and analyze massive volumes of structured and unstructured data at high speed.

With AnalyticDB for PostgreSQL, you can supercharge your GenAI applications on Alibaba Cloud PAI. Whether you are working on recommendation systems, natural language processing, or image recognition, this solution provides the performance boost you need to take your applications to the next level.

In this tutorial, we will guide you through the process of harnessing AnalyticDB for PostgreSQL's amazing capabilities in combination with Alibaba Cloud PAI. You will learn how to seamlessly integrate language models, query vector data, and leverage the advanced features of AnalyticDB for PostgreSQL.

Join us on this exciting journey and unlock the full potential of your GenAI applications with AnalyticDB for PostgreSQL and Alibaba Cloud PAI.

Note: Before proceeding with the steps mentioned below, ensure that you have an Alibaba Cloud account and have access to the Alibaba Cloud Console.

1. Log in to the Alibaba Cloud Console and create an instance of AnalyticDB for PostgreSQL.

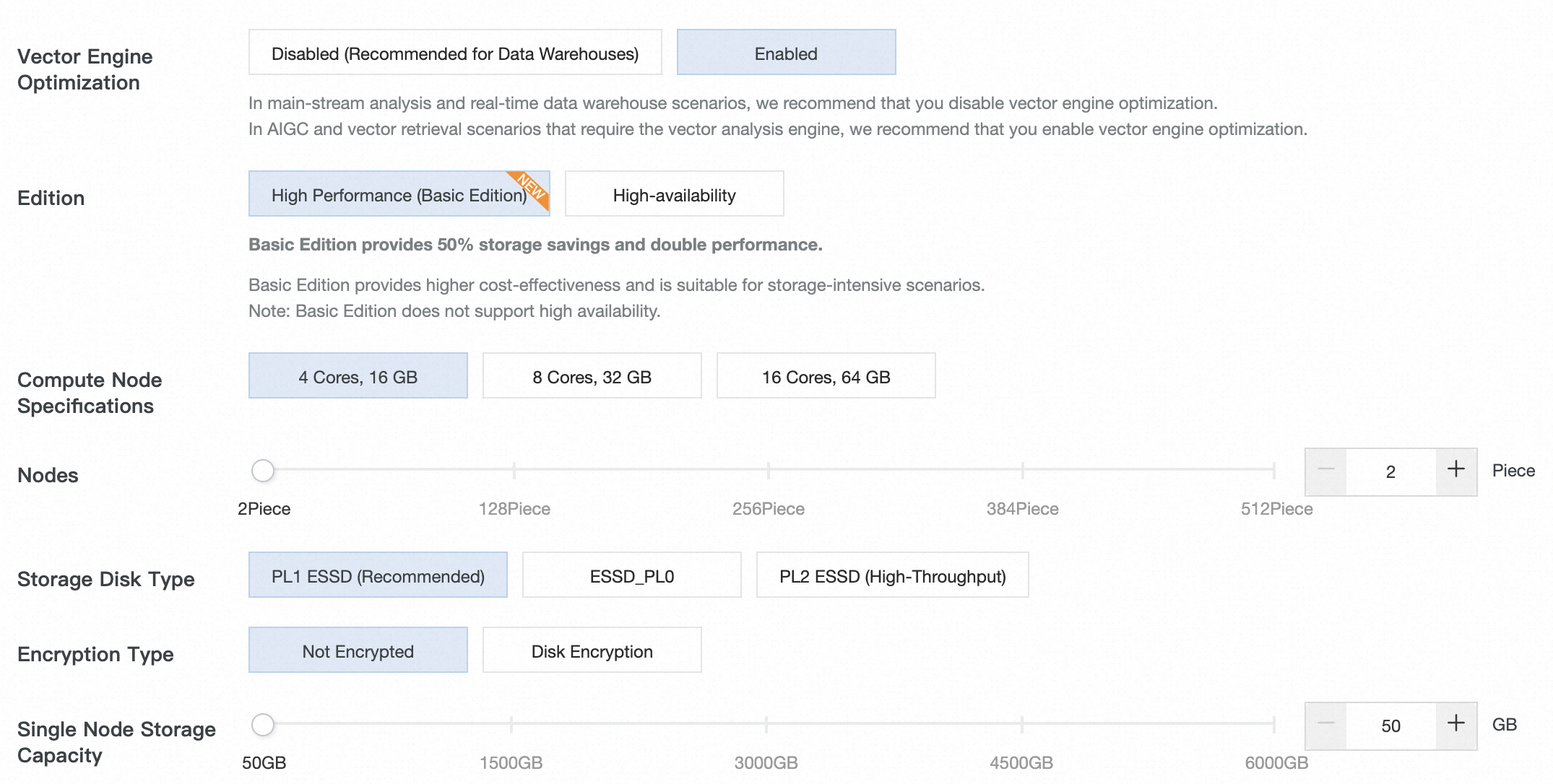

2. We chose the following for test purposes:

3. Create an account to connect to the database.

4. We must enable Vector Engine Optimization to use the vector database.

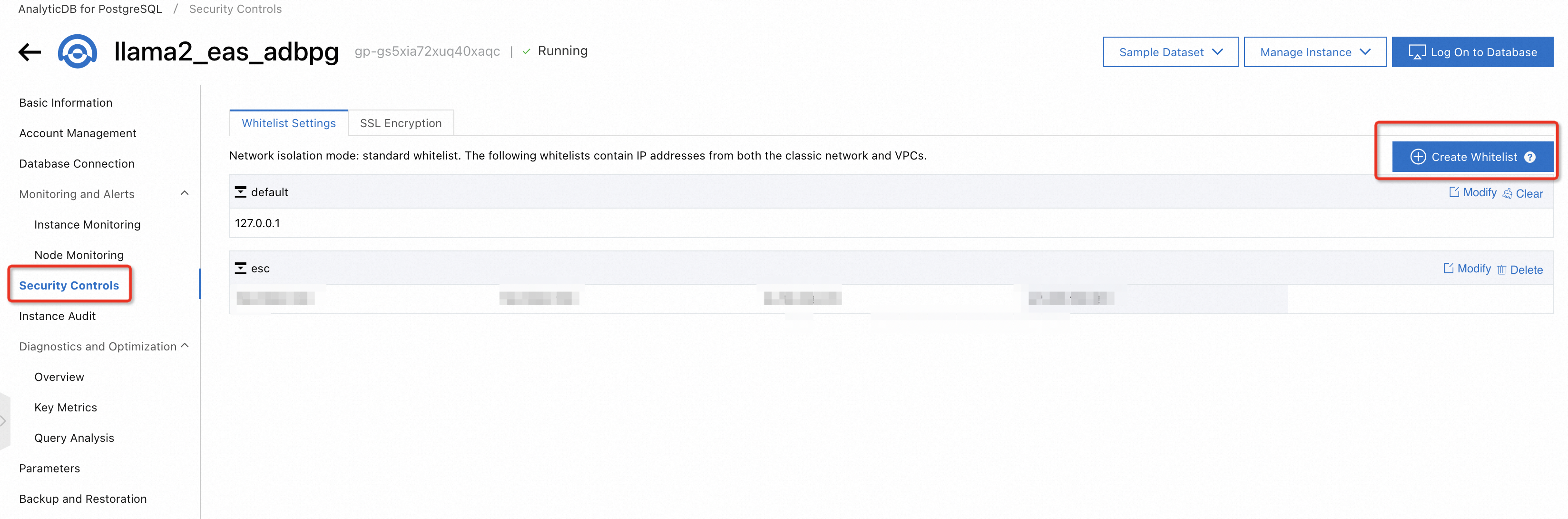

5. Configure an IP address whitelist. We recommend whitelisting the ECS instance where the UI will be installed; this instance is created in the next section. If ECS and AnalyticDB are in the same VPC (Virtual Private Cloud), you can enter the Primary Private IP Address. Otherwise, enter the public IP address.
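The choice between the private and the public address can be sketched in a few lines of Python. This is only an illustration: the helper name `whitelist_entry` and the sample addresses are hypothetical, and the whitelist itself is still configured in the console.

```python
import ipaddress


def whitelist_entry(private_ip: str, public_ip: str, same_vpc: bool) -> str:
    """Return the ECS address to enter in the AnalyticDB whitelist.

    Hypothetical helper: same VPC -> primary private IP, otherwise public IP.
    """
    chosen = private_ip if same_vpc else public_ip
    addr = ipaddress.ip_address(chosen)  # raises ValueError on malformed input
    if same_vpc and not addr.is_private:
        raise ValueError(f"{chosen} is not a private address")
    return str(addr)


# Sample addresses are placeholders, not real instances.
print(whitelist_entry("172.16.0.10", "203.0.113.25", same_vpc=True))
print(whitelist_entry("172.16.0.10", "203.0.113.25", same_vpc=False))
```

The extra check on the private address is there only to catch a public address pasted into the wrong field.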

Now it's time to work on an ECS instance.

1. We assume you have already logged in to the Alibaba Cloud Console (see section 1.1).

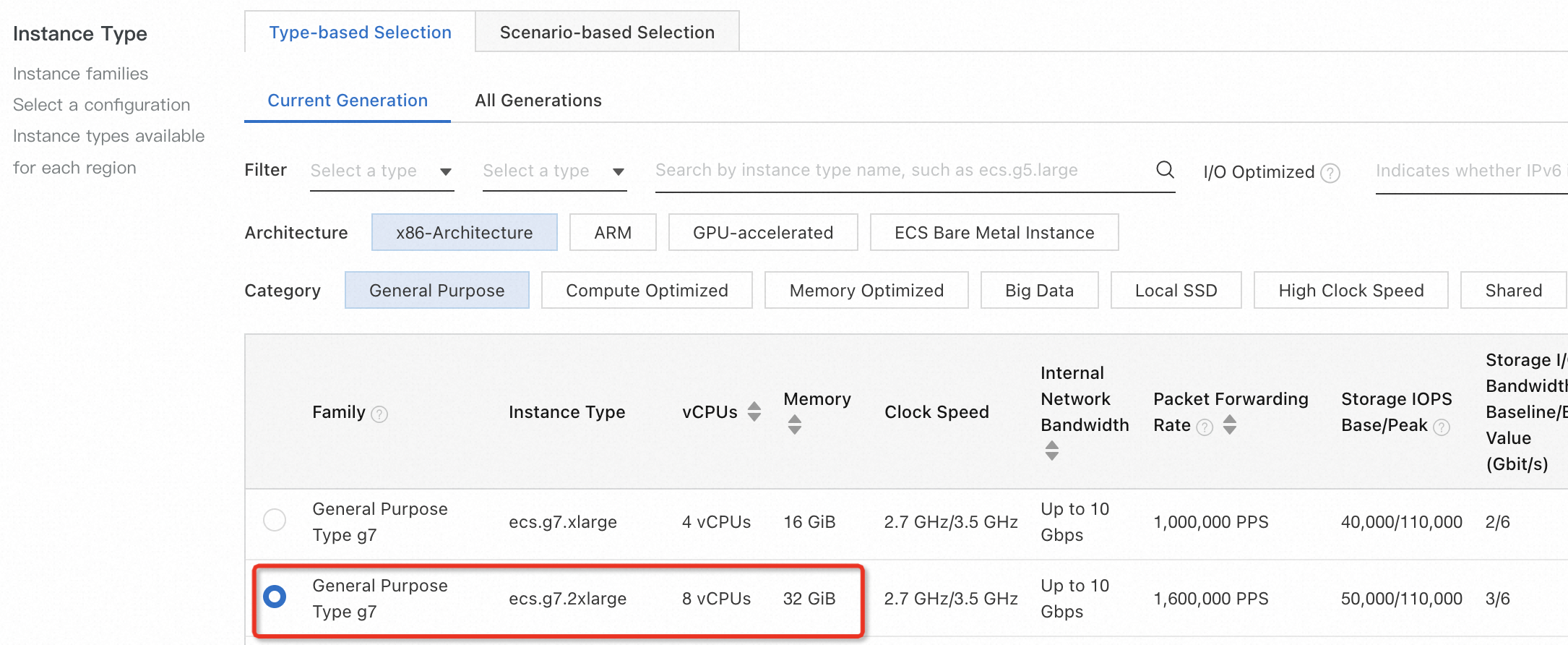

2. Create an ECS instance. For testing purposes, we recommend at least ecs.g7.2xlarge with the following parameters:

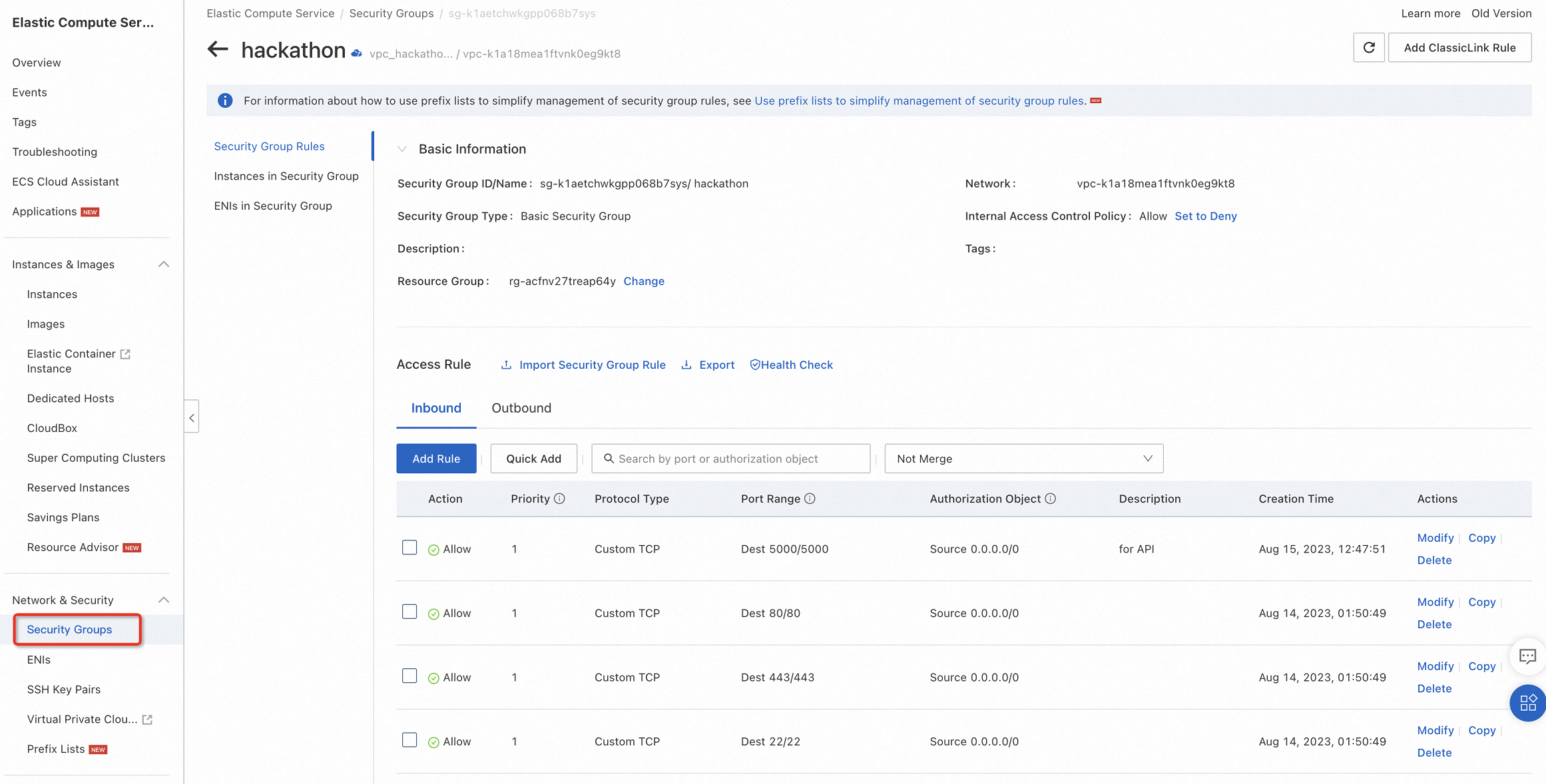

3. Add port 5000 to the security group by following this documentation:

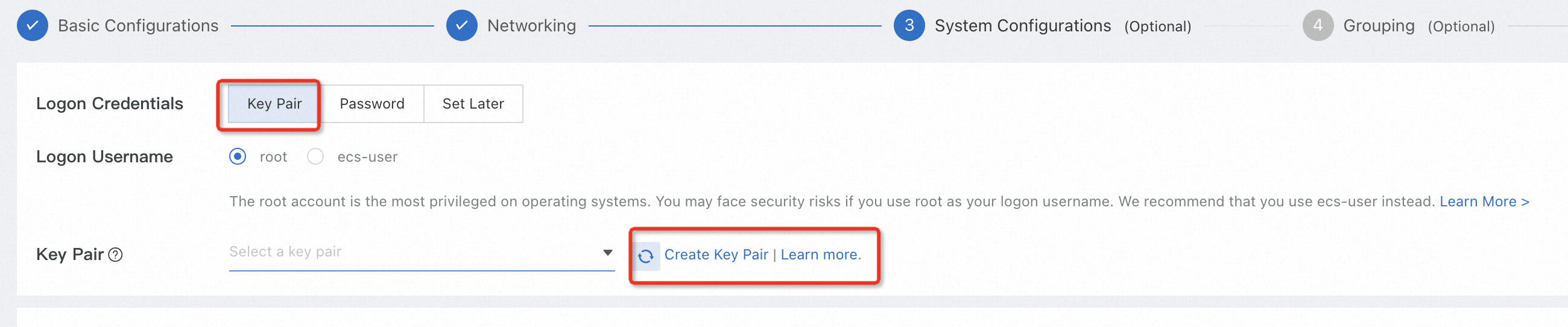
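Once the application is listening later in this tutorial, a quick way to confirm that the security group rule took effect is to attempt a TCP connection to port 5000 from your local machine. The sketch below uses only the Python standard library; the function name `port_is_open` is an assumption for illustration, and a `False` result before the Flask application is started is expected.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


# Replace 127.0.0.1 with your ECS public IP address when testing remotely.
print(port_is_open("127.0.0.1", 5000, timeout=1.0))
```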

1. Once the ECS instance is created, navigate to the Instances section in the Alibaba Cloud Console.

2. Find the newly created instance and click on the Connect button.

3. Follow the instructions to download the private key (.pem) file.

4. Open a terminal on your local machine and navigate to the directory where the private key file is downloaded.

5. Run the following command to set the correct permissions for the private key file:

chmod 400 key_pair.pem

6. Use the SSH command to connect to the ECS instance using the private key file:

ssh -i key_pair.pem root@<ECS_Instance_Public_IP>

1. Run the following commands to install Docker.io on the ECS instance:

apt-get -y update

apt-get -y install docker.io

2. Start the Docker service:

systemctl start docker

1. Depending on your location, choose the closest Docker image registry from the two options below, then run the corresponding command to start the Docker container:

sudo docker run -t -d --network host --name llm_demo hackathon-registry.ap-southeast-5.cr.aliyuncs.com/generative_ai/langchain:llm_langchain_adbpg_v02

sudo docker run -t -d --network host --name llm_demo hackathon-frankfurt-registry.eu-central-1.cr.aliyuncs.com/generative_ai/langchain:llm_frankfurt_v01

It takes around 15-25 minutes to download, depending on network speed. This image will be available only during the Generative AI Hackathon event period. If you want to use this image, please feel free to contact us.

2. Enter the Docker container:

docker exec -it llm_demo bash

1. Use a text editor to open the config.json file:

vim config.json

To fill the config file, please follow the instructions below:

{
    "embedding": {
        "model_dir": "/code/",
        "embedding_model": "SGPT-125M-weightedmean-nli-bitfit",
        "embedding_dimension": 768
    },
    "EASCfg": {
        "url": "http://xxxxx.pai-eas.aliyuncs.com/api/predict/llama2_model",
        "token": "xxxxxx"
    },
    "ADBCfg": {
        "PG_HOST": "gp.xxxxx.rds.aliyuncs.com",
        "PG_DATABASE": "database name, usually it's user name",
        "PG_USER": "pg_user",
        "PG_PASSWORD": "password"
    },
    "create_docs": {
        "chunk_size": 200,
        "chunk_overlap": 0,
        "docs_dir": "docs/",
        "glob": "**/*"
    },
    "query_topk": 4,
    "prompt_template": "Answer user questions concisely and professionally based on the following known information. If you cannot get an answer from it, please say \"This question cannot be answered based on known information\" or \"Insufficient relevant information has been provided\", no fabrication is allowed in the answer, please use English for the answer. \n=====\nKnown information:\n{context}\n=====\nUser question:\n{question}"
}

2. Replace the placeholder values with your specific configuration details as described below:

model_dir: /code/

embedding_model: SGPT-125M-weightedmean-nli-bitfit

3. Save the changes to the config.json file.
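To make the `query_topk` and `prompt_template` fields more concrete, here is a rough sketch of how a template with `{context}` and `{question}` placeholders can be filled from the best-ranked retrieved chunks. The demo's app.py is not shown in this tutorial, so the function `build_prompt`, the shortened template, and the sample chunks are assumptions for illustration only.

```python
QUERY_TOPK = 4  # mirrors "query_topk" in config.json

# Shortened stand-in for "prompt_template"; only the placeholders matter here.
PROMPT_TEMPLATE = (
    "Answer based on the following known information.\n"
    "=====\nKnown information:\n{context}\n"
    "=====\nUser question:\n{question}"
)


def build_prompt(template: str, ranked_chunks: list, question: str, topk: int) -> str:
    """Join the topk best-ranked chunks and substitute both placeholders."""
    context = "\n".join(ranked_chunks[:topk])
    return template.format(context=context, question=question)


# Sample data: five chunks, already ordered from most to least similar.
chunks = ["chunk A", "chunk B", "chunk C", "chunk D", "chunk E"]
prompt = build_prompt(PROMPT_TEMPLATE, chunks, "What is AnalyticDB?", QUERY_TOPK)
print(prompt)
```

With `query_topk` set to 4, only the first four chunks reach the model, so a larger value gives the model more context at the cost of a longer prompt.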

Provide accurate and valid information in the configuration file so that your application functions properly.
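A small pre-flight check can catch missing keys or leftover placeholders before the application is started. The sketch below is not part of the demo image: the names `REQUIRED`, `check_config`, and `check_config_file` are hypothetical, and the list of required keys is simply taken from the sample file above.

```python
import json

# Sections and keys as they appear in the sample config.json.
REQUIRED = {
    "embedding": ["model_dir", "embedding_model", "embedding_dimension"],
    "EASCfg": ["url", "token"],
    "ADBCfg": ["PG_HOST", "PG_DATABASE", "PG_USER", "PG_PASSWORD"],
    "create_docs": ["chunk_size", "chunk_overlap", "docs_dir", "glob"],
}


def check_config(cfg: dict) -> list:
    """Return a list of readable problems; an empty list means none were found."""
    problems = []
    for section, keys in REQUIRED.items():
        values = cfg.get(section)
        if not isinstance(values, dict):
            problems.append(f"missing section: {section}")
            continue
        for key in keys:
            if key not in values:
                problems.append(f"missing key: {section}.{key}")
            elif isinstance(values[key], str) and "xxxxx" in values[key]:
                # The sample file marks unfilled values with runs of "x".
                problems.append(f"placeholder left in: {section}.{key}")
    for key in ("query_topk", "prompt_template"):
        if key not in cfg:
            problems.append(f"missing key: {key}")
    return problems


def check_config_file(path: str = "config.json") -> list:
    """Load the JSON file at path and run check_config on it."""
    with open(path, encoding="utf-8") as handle:
        return check_config(json.load(handle))
```

Calling `check_config_file()` from the directory that contains config.json returns the list of problems; it does not verify that the credentials or endpoints actually work.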

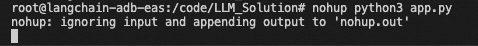

1. Start the Flask application with the following command:

nohup python3 app.py

If successful, you will see a response similar to the one shown in the figure above. You can then close the terminal.

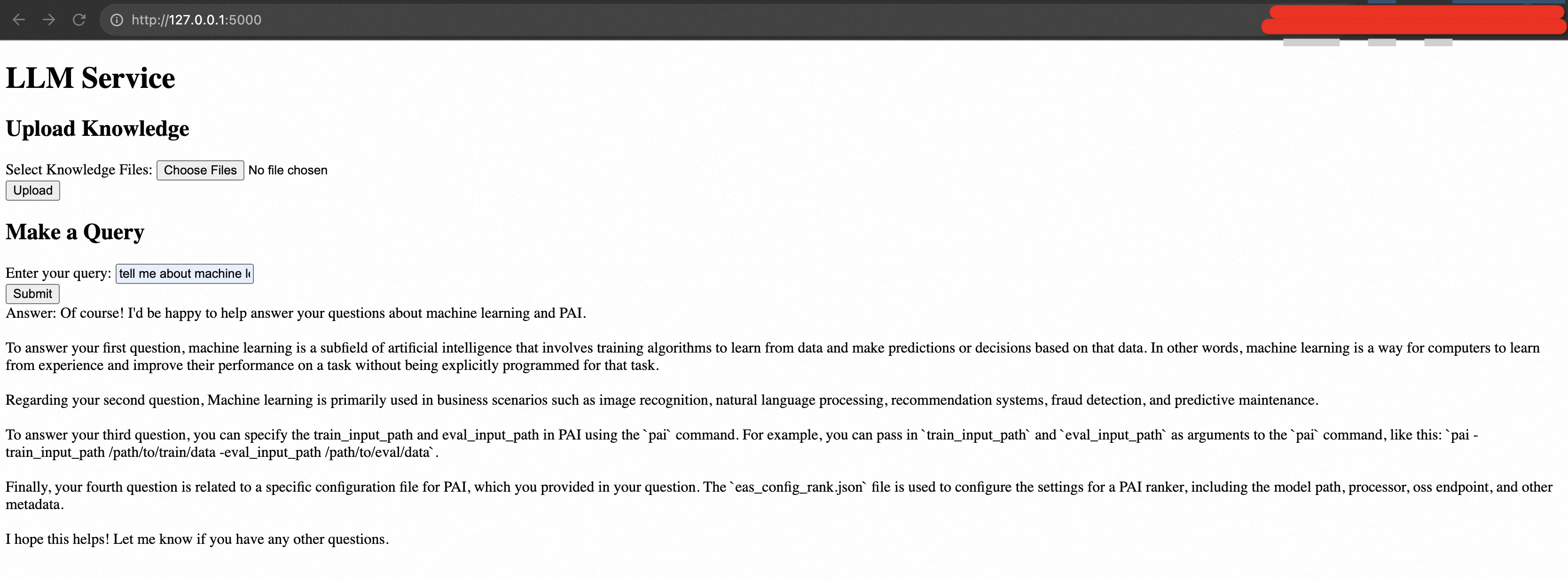

2. Test the running API:

<your public IP address>:5000

Replace <your public IP address> with the actual IP address or hostname of your machine running the Docker container.

3. Make any necessary modifications to the index.html file or connect your application using the provided API endpoints:

<your public IP address>:5000/upload

<your public IP address>:5000/query

Ensure that you update the URLs in your application code or any other relevant places to use the correct API endpoints.
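If your application is written in Python, a request to the /query endpoint could be built roughly as follows. The Bearer token header and the `{"query": ...}` body match the curl examples in this section; the helper names `build_query_request` and `send`, the sample address, and the assumption that the service is reached over plain HTTP are illustrative only.

```python
import json
import urllib.request


def build_query_request(base_url: str, token: str, query: str) -> urllib.request.Request:
    """Build (but do not send) a POST request for the /query endpoint."""
    body = json.dumps({"query": query}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/query",
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


def send(request: urllib.request.Request, timeout: float = 60.0) -> str:
    """Send the request and return the raw response body as text."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8")


# Placeholder host and token; substitute your own before calling send().
req = build_query_request("http://203.0.113.25:5000", "<JWT_TOKEN>", "Your query here")
print(req.get_method(), req.full_url)
```

Building the request separately from sending it keeps the URL and headers easy to inspect; `send(req)` performs the actual call once the service is running.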

Here are example curl commands for the upload and query services:

curl -X POST -H "Authorization: Bearer <JWT_TOKEN>" -F "file=@/path/to/file.txt" <your public IP address>:5000/upload

curl -X POST -H "Authorization: Bearer <JWT_TOKEN>" -H "Content-Type: application/json" -d '{"query": "Your query here"}' <your public IP address>:5000/query

Congratulations! You have successfully completed the step-by-step tutorial for setting up and running the LLM demo on Alibaba Cloud using ECS, PAI-EAS, AnalyticDB for PostgreSQL, and the Llama 2 project.

We have explored the capabilities of AnalyticDB for PostgreSQL in conjunction with large language models on Alibaba Cloud's PAI platform. By leveraging the power of AnalyticDB for PostgreSQL, developers can efficiently store, process, and analyze massive amounts of data while seamlessly integrating large language models for advanced language processing tasks.

Combining AnalyticDB for PostgreSQL, large language models, and Alibaba Cloud's PAI platform opens up a world of possibilities for businesses and developers. The high performance and scalability of AnalyticDB for PostgreSQL ensure efficient data handling, while the integration of large language models enables sophisticated language processing capabilities.

This powerful solution allows businesses to extract valuable insights, improve natural language understanding, and develop innovative applications across various domains, such as recommendation systems, sentiment analysis, chatbots, and more. Additionally, Alibaba Cloud's PAI platform provides a user-friendly environment for managing, deploying, and scaling these applications with ease.

We invite you to explore the potential of AnalyticDB for PostgreSQL, large language models, and Alibaba Cloud's PAI platform. Leverage the power of these cutting-edge technologies to enhance your language processing capabilities, gain valuable insights from data, and drive innovation in your business.

Unlock the full potential of AnalyticDB for PostgreSQL, large language models, and Alibaba Cloud's PAI platform to revolutionize your language-driven applications and stay ahead in the digital landscape. Contact Alibaba Cloud to embark on an exciting journey and unleash the power of data and language processing.
