The Istio traffic management model decouples traffic flow from infrastructure scaling: operators use Pilot to specify the rules that traffic should follow, rather than which pods or VMs should receive it. This decoupling lets Istio provide a variety of traffic management functions independently of application code. The Envoy sidecar proxies implement these functions.

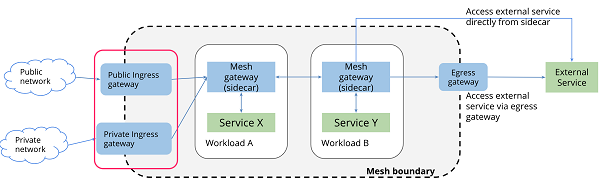

A typical mesh has one or more load balancers at its edge, known as gateways, which terminate external TLS connections and admit traffic into the mesh. The traffic then flows between internal services through their sidecar proxies. The following figure illustrates the use of gateways in a mesh:

The Istio Gateway configures load balancing for HTTP/TCP traffic, most commonly at the edge of the mesh to enable ingress traffic for a service. A mesh can have any number of gateways, and different gateway implementations can coexist. You may ask: why not just use the Kubernetes Ingress API to manage ingress traffic? The reason is that the Ingress API cannot express Istio's routing needs. Ingress aims for the common subset supported by different HTTP proxies, so it supports only the most basic HTTP routing. As a result, the more advanced proxy functions have to be expressed in annotations, and because annotation formats differ between proxies, such configuration is not portable.

In addition, Kubernetes Ingress itself does not support TCP. Although NGINX supports TCP, an Ingress resource cannot configure an NGINX Ingress Controller for TCP load balancing. You can still use NGINX's TCP load balancing by creating a Kubernetes ConfigMap; see "Support for TCP/UDP Load Balancing" for details. As you can imagine, such a configuration is specific to one proxy and cannot be migrated to another.

Unlike Kubernetes Ingress, Istio Gateway overcomes these shortcomings by separating the L4-L6 configuration from the L7 configuration. A Gateway configures only L4-L6 functions (for example, exposed ports and TLS settings), which all mainstream proxies implement in a uniform way. Then, by binding a VirtualService to the Gateway, you can use standard Istio rules to control the HTTP and TCP traffic entering through that Gateway.
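To make the split concrete, here is a minimal Python sketch, not Istio code, that models a Gateway as nothing more than a set of exposed ports and a VirtualService as something that refers to gateways by name. The class names, the `unresolved_bindings` helper, and the sample names `edge-gateway`, `echo-routes`, and `missing-gateway` are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Gateway:
    """L4-L6 concerns only: which ports are exposed and with which protocol."""
    name: str
    ports: dict  # port number -> protocol, e.g. {31400: "TCP"}


@dataclass
class VirtualService:
    """Routing concerns: refers to gateways by name and never redefines ports."""
    name: str
    gateways: list = field(default_factory=list)


def unresolved_bindings(vs, gateways):
    """Return the gateway names the VirtualService references that do not exist."""
    known = {gw.name for gw in gateways}
    return [name for name in vs.gateways if name not in known]


# Hypothetical sample data: one defined gateway, one dangling reference.
gateways = [Gateway("edge-gateway", {31400: "TCP"})]
vs = VirtualService("echo-routes", gateways=["edge-gateway", "missing-gateway"])
print(unresolved_bindings(vs, gateways))
```

The point of the sketch is only that the port definition lives in one object and the routing in another, linked by a name, so either side can change without rewriting the other.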

This article gives an example of how to use a simple and standard Istio rule to route TCP ingress traffic, thus implementing unified management of TCP ingress traffic.

Alibaba Cloud Container Service for Kubernetes 1.11.2 is available and you can use the console to create a Kubernetes cluster quickly and easily. See Creating a Kubernetes Cluster for more information.

Install and configure kubectl, and make sure that it can connect to the Kubernetes cluster.

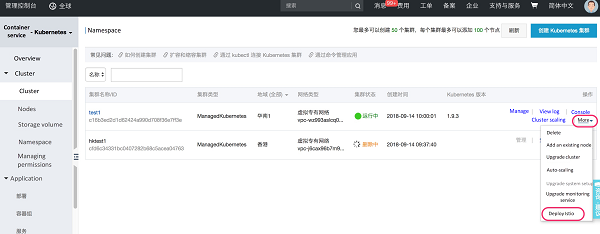

Open the Container Service console, select the cluster in the left navigation bar, click the menu on the right, and select the option to deploy Istio from the pop-up menu.

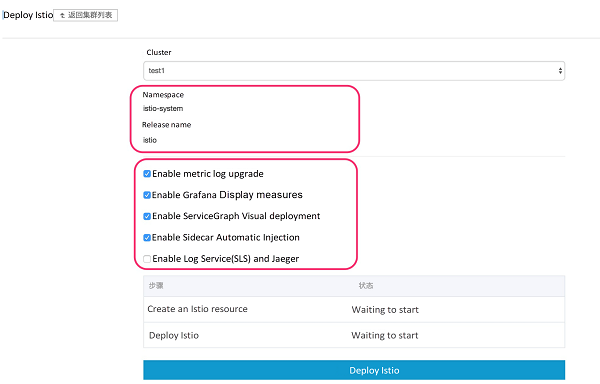

You can see the namespace and release that Istio installs by default on the page that opens.

Select the modules to install. The first four items are selected by default.

The fifth option provides distributed tracing based on a logging service and is not enabled in this example.

Click the Deploy Istio button to complete the deployment in a matter of seconds.

Istio uses a Kubernetes webhook to inject the Envoy sidecar proxy automatically. Run the following command to add the label istio-injection to the default namespace and set it to "enabled": `kubectl label namespace default istio-injection=enabled`

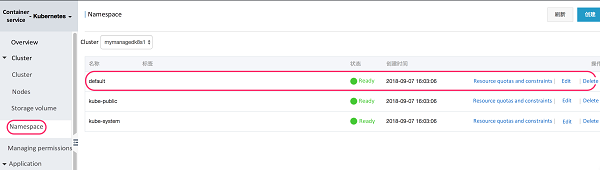

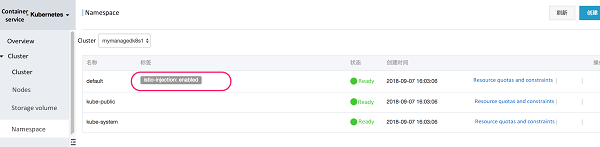

View namespace:

Click Edit and add the label istio-injection=enabled to the default namespace.

You can use the prebuilt image registry.cn-hangzhou.aliyuncs.com/wangxining/tcptest:0.1, or follow these steps to build your own.

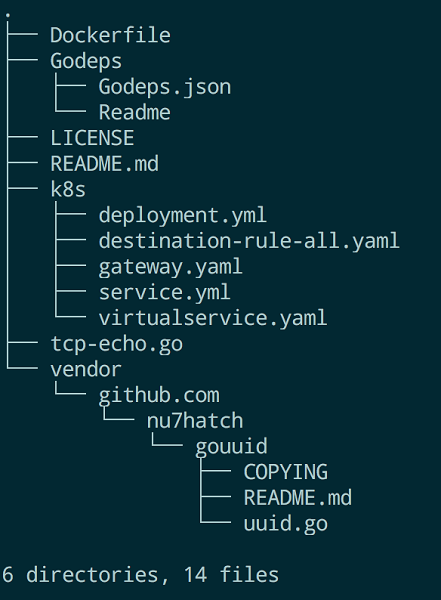

Clone the code base from:

https://github.com/osswangxining/Istio-TCPRoute-Sample

Switch to the code directory, where you will find a Dockerfile.

Run the following command to build an image, for example:

    docker build -t {address of image repository} .

Then push it to your own image repository.

Deploy the service with kubectl:

    cd k8s
    kubectl apply -f deployment.yml
    kubectl apply -f service.yml

These commands create one service (tcp-echo) and two deployments (tcp-echo-v1 and tcp-echo-v2). Both deployments carry the label app: tcp-echo, and the tcp-echo service selects the pods of both deployments through its selector:

    selector:
      app: "tcp-echo"

To confirm that the TCP server pods have started, run the following command:

    kubectl get pods --selector=app=tcp-echo
    NAME                           READY   STATUS    RESTARTS   AGE
    tcp-echo-v1-7c775f57c9-frprp   2/2     Running   0          1m
    tcp-echo-v2-6bcfd7dcf4-2sqhf   2/2     Running   0          1m

To confirm that the TCP server service is available, run the following command:

    kubectl get service --selector=app=tcp-echo
    NAME       TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)    AGE
    tcp-echo   ClusterIP   172.19.46.255   <none>        3333/TCP   17h

Create two gateways using the following command. One listens on port 31400 and the other on port 31401.

    kubectl apply -f gateway.yaml

The gateway.yaml code is as follows:

apiVersion: networking.istio.io/v1alpha3

kind: Gateway

metadata:

name: tcp-echo-gateway

spec:

selector:

istio: ingressgateway # use istio default controller

servers:

- port:

number: 31400

name: tcp

protocol: TCP

hosts:

- "*"

---

apiVersion: networking.istio.io/v1alpha3

kind: Gateway

metadata:

name: tcp-echo-gateway-v2

spec:

selector:

istio: ingressgateway # use istio default controller

servers:

- port:

number: 31401

name: tcp

protocol: TCP

hosts:

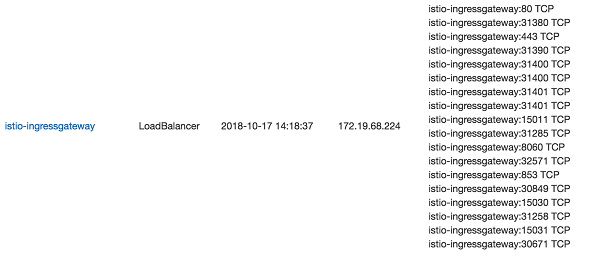

- "*"As shown below, the 2 gateways share the same ingressgateway service and the ingressgateway uses the loadbalancer approach to expose and provide the external IP addresses.

Create the routing rules with the following commands:

    kubectl apply -f destination-rule-all.yaml
    kubectl apply -f virtualservice.yaml

The example rules define two subsets (versions). Through the first gateway, TCP requests to port 31400 are forwarded to the pods of version v1; through the second gateway, TCP requests to port 31401 are forwarded to the pods of version v2.
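The port-to-version mapping just described can be sketched in a few lines of Python. This is only an illustrative model of the two rules, assuming first-match-wins evaluation; it is not how Pilot or Envoy evaluate rules internally, and the `RULES` table and `pick_destination` function are hypothetical names. The gateway names, ports, and destination host are the ones used in this article.

```python
# Hypothetical in-memory mirror of the two TCP rules described above.
RULES = [
    {"port": 31400, "gateway": "tcp-echo-gateway", "subset": "v1"},
    {"port": 31401, "gateway": "tcp-echo-gateway-v2", "subset": "v2"},
]

DESTINATION_HOST = "tcp-echo.default.svc.cluster.local"
DESTINATION_PORT = 3333


def pick_destination(gateway, port):
    """Return (host, subset, port) for the first matching rule, or None."""
    for rule in RULES:
        # A rule matches only when both the gateway and the port agree.
        if rule["gateway"] == gateway and rule["port"] == port:
            return (DESTINATION_HOST, rule["subset"], DESTINATION_PORT)
    return None


print(pick_destination("tcp-echo-gateway", 31400))
print(pick_destination("tcp-echo-gateway-v2", 31401))
print(pick_destination("tcp-echo-gateway", 31401))  # no rule pairs these two
```

In this model a connection arriving on port 31401 through the first gateway matches nothing, which mirrors the fact that each match clause names both a port and a gateway.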

The virtualservice.yaml code is as follows:

    apiVersion: networking.istio.io/v1alpha3
    kind: VirtualService
    metadata:
      name: tcp-echo
    spec:
      hosts:
      - "*"
      gateways:
      - tcp-echo-gateway
      - tcp-echo-gateway-v2
      tcp:
      - match:
        - port: 31400
          gateways:
          - tcp-echo-gateway
        route:
        - destination:
            host: tcp-echo.default.svc.cluster.local
            subset: v1
            port:
              number: 3333
      - match:
        - port: 31401
          gateways:
          - tcp-echo-gateway-v2
        route:
        - destination:
            host: tcp-echo.default.svc.cluster.local
            subset: v2
            port:
              number: 3333

View the address of the Ingress Gateway

Click Services in the left-hand navigation bar, select the corresponding cluster and namespace at the top right, and locate the external endpoint address of istio-ingressgateway in the list.
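Once you have the external address, the connectivity check can also be scripted, which is useful where nc is not installed. The following is a minimal sketch using only Python's standard `socket` module; the function name `probe` and its `wait` parameter are hypothetical. It sends one line and collects whatever the server returns until the connection goes quiet or closes.

```python
import socket


def probe(host, port, text, wait=2.0):
    """Send one line over TCP and return everything received before the
    server closes the connection or stays silent for `wait` seconds."""
    chunks = []
    with socket.create_connection((host, port), timeout=wait) as conn:
        conn.sendall(text.encode("utf-8") + b"\n")
        while True:
            try:
                data = conn.recv(4096)
            except socket.timeout:
                break  # server went quiet; assume the reply is complete
            if not data:
                break  # server closed the connection
            chunks.append(data)
    return b"".join(chunks).decode("utf-8", errors="replace")
```

For example, `print(probe("INGRESSGATEWAY_IP", 31400, "hello, app1"))`, with `INGRESSGATEWAY_IP` replaced by the external endpoint address, should print whatever the echo server sends back on that port.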

Open a terminal and run the following command (if nc is installed):

    nc INGRESSGATEWAY_IP 31400

Enter some text as in the following interaction. You can see that the TCP traffic for this port is forwarded to the pod of version v1:

    Welcome, you are connected to node cn-beijing.i-2zeij4aznsu1dvd4mj5c.
    Running on Pod tcp-echo-v1-7c775f57c9-frprp.
    In namespace default.
    With IP address 172.16.2.90.
    Service default.
    hello, app1
    hello, app1
    continue..
    continue..

To view the log of the version v1 pod:

    kubectl logs -f tcp-echo-v1-7c775f57c9-frprp -c tcp-echo-container | grep Received
    2018/10/17 07:32:29 6c7f4971-40f1-4f72-54c4-e1462a846189 - Received Raw Data: [104 101 108 108 111 44 32 97 112 112 49 10]
    2018/10/17 07:32:29 6c7f4971-40f1-4f72-54c4-e1462a846189 - Received Data (converted to string): hello, app1
    2018/10/17 07:34:40 6c7f4971-40f1-4f72-54c4-e1462a846189 - Received Raw Data: [99 111 110 116 105 110 117 101 46 46 10]
    2018/10/17 07:34:40 6c7f4971-40f1-4f72-54c4-e1462a846189 - Received Data (converted to string): continue..

Open another terminal and run the following command:

    nc INGRESSGATEWAY_IP 31401

Enter some text as in the following interaction. You can see that the TCP traffic for this port is forwarded to the pod of version v2:

    Welcome, you are connected to node cn-beijing.i-2zeij4aznsu1dvd4mj5b.
    Running on Pod tcp-echo-v2-6bcfd7dcf4-2sqhf.
    In namespace default.
    With IP address 172.16.1.95.
    Service default.
    hello, app2
    hello, app2
    yes,this is app2
    yes,this is app2

To view the log of the version v2 pod:

    kubectl logs -f tcp-echo-v2-6bcfd7dcf4-2sqhf -c tcp-echo-container | grep Received
    2018/10/17 07:36:29 1a70b9d4-bbc7-471d-4686-89b9234c8f87 - Received Raw Data: [104 101 108 108 111 44 32 97 112 112 50 10]
    2018/10/17 07:36:29 1a70b9d4-bbc7-471d-4686-89b9234c8f87 - Received Data (converted to string): hello, app2
    2018/10/17 07:36:37 1a70b9d4-bbc7-471d-4686-89b9234c8f87 - Received Raw Data: [121 101 115 44 116 104 105 115 32 105 115 32 97 112 112 50 10]
    2018/10/17 07:36:37 1a70b9d4-bbc7-471d-4686-89b9234c8f87 - Received Data (converted to string): yes,this is app2

This article has shown how to use a simple and standard Istio rule to route TCP ingress traffic, thus implementing unified management of TCP ingress traffic.
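As a side note on reading the pod logs shown earlier: each "Received Raw Data" entry is a bracketed list of space-separated decimal byte values, and the trailing 10 is the newline sent when you press Enter. A small Python sketch, with the hypothetical helper name `decode_raw_data`, converts such an entry back to text so that it can be compared with the "converted to string" line.

```python
def decode_raw_data(raw):
    """Turn a log fragment such as '[104 101 108]' into the text it encodes."""
    numbers = raw.strip().strip("[]").split()
    # Each number is one byte; the sample text in this article is plain ASCII.
    return bytes(int(n) for n in numbers).decode("utf-8")


print(repr(decode_raw_data("[104 101 108 108 111 44 32 97 112 112 49 10]")))
print(repr(decode_raw_data("[99 111 110 116 105 110 117 101 46 46 10]")))
```

The two calls print `'hello, app1\n'` and `'continue..\n'`, matching the text typed in the first nc session.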

We invite you to use Alibaba Cloud Container Service to quickly set up Istio, an open management platform for microservices that can be more easily integrated into any microservice projects you are working on.

Istio Practice in Alibaba Cloud Container Service for Kubernetes: Automatic Sidecar Injection

Traffic Management with Istio (2): Grayscale Release of Applications by Istio Management
