By Mingquan Zheng and Kai Yu

What is the problem, and how does the local network interface of a Kubernetes ECS node affect access to a CLB address?

A pod needs to access the port 443 listener of a CLB. However, when the listener is accessed from within the cluster (that is, from a Kubernetes node or pod, as referred to in the rest of this article), the request fails with the error message Connection refused.

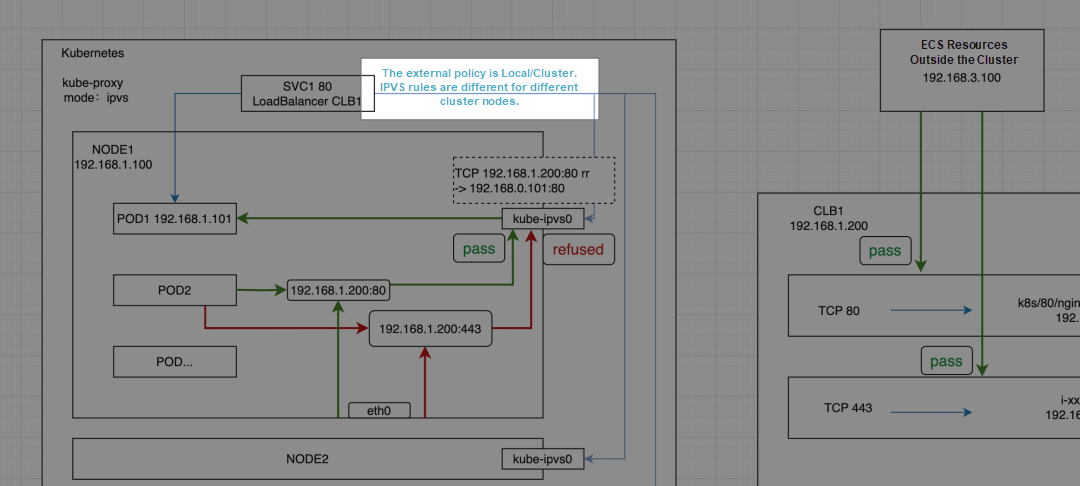

The customer's access path can be summarized as follows:

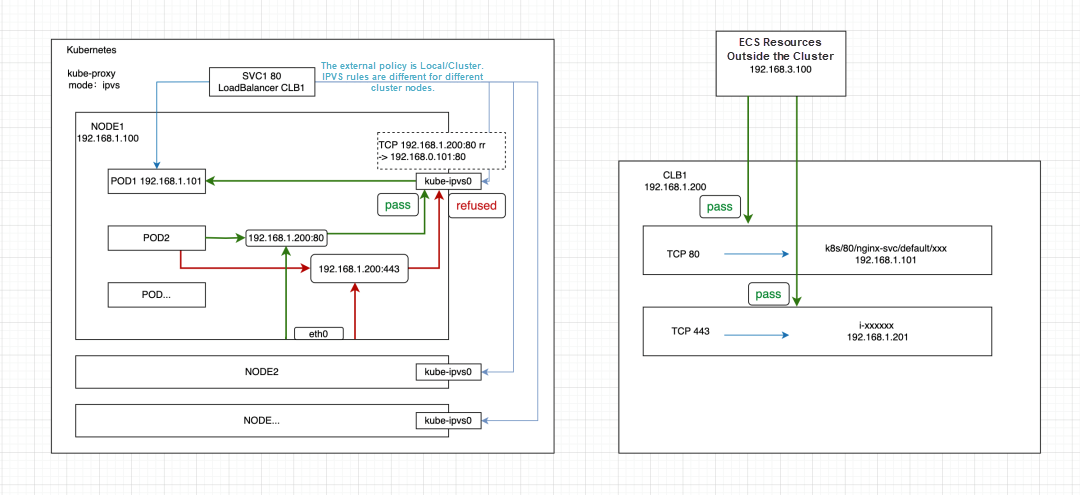

192.168.1.200:443 cannot be accessed from a node or pod, but 192.168.1.200:80 can. At the same time, ECS 192.168.3.100, which is outside the cluster, can access both 192.168.1.200:443 and 192.168.1.200:80.

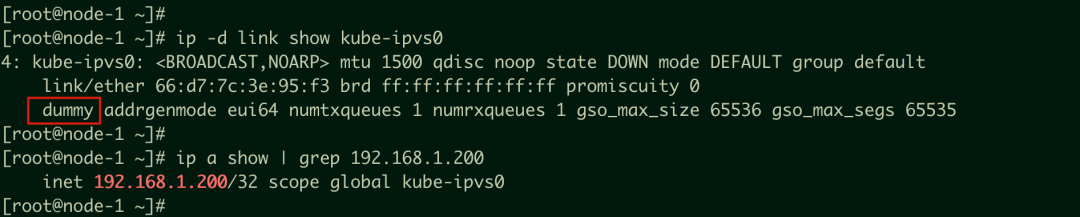

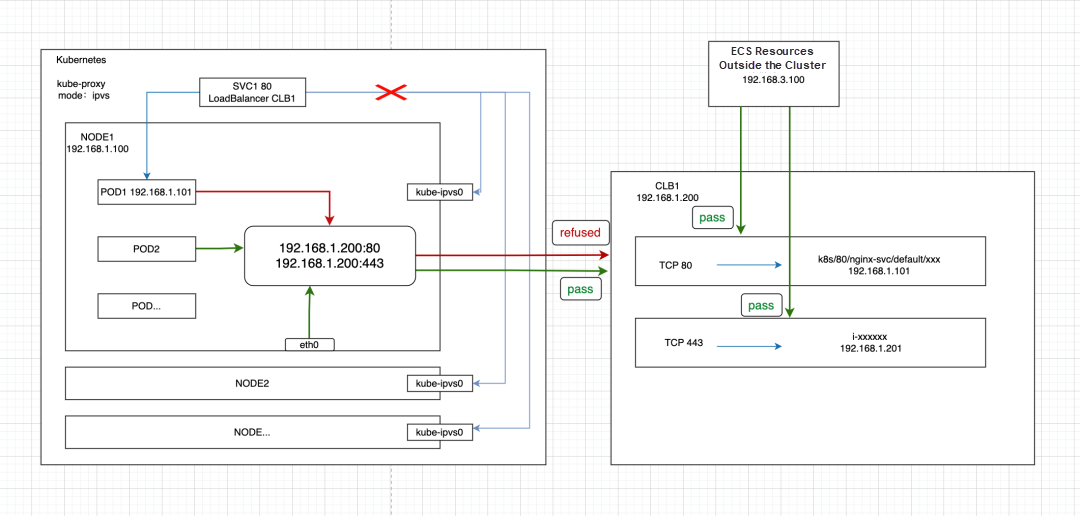

The IP address 192.168.1.200 of CLB1 is bound to kube-ipvs0 on the Kubernetes node, which is a dummy network interface. For more information, refer to the dummy interface. SVC1 is of the LoadBalancer type and reuses CLB1, and its endpoint is POD1 192.168.1.101:80. kube-proxy creates IPVS rules from the SVC1 configuration and adds the reachable backends to them, which explains why accessing 192.168.1.200:80 works. Accessing 192.168.1.200:443 from within the cluster fails for two reasons: because the IP address is bound to the dummy interface, the traffic never leaves the node to reach CLB1, and there is no IPVS rule that corresponds to the port 443 listener. Hence, the connection is refused directly.

If a node has no IPVS rule for the port (IPVS rules take precedence over local listeners) but the access still succeeds, check whether a local service is listening on 0.0.0.0:443. In that case, port 443 is reachable on every IP address bound to the node's network interfaces, but the traffic reaches the local service rather than the actual CLB backend service.
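The decision described above can be sketched as a toy model. This is only an illustration of the reasoning, not kube-proxy or kernel code: the function name `resolve`, the result strings, and the node IP 192.168.1.10 are assumptions made for the example.

```python
from typing import Dict, List, Set, Tuple

Addr = Tuple[str, int]  # (ip, port)


def resolve(dst: Addr,
            local_ips: Set[str],
            ipvs_rules: Dict[Addr, List[Addr]],
            local_listeners: Set[Addr]) -> str:
    """Toy model: what happens to a connection that a node or pod opens to dst."""
    ip, port = dst
    backends = ipvs_rules.get(dst, [])
    if backends:
        # An IPVS virtual server matches ip:port, so traffic is DNATed to a backend.
        backend_ip, backend_port = backends[0]
        return f"ipvs -> {backend_ip}:{backend_port}"
    if ip not in local_ips:
        # The IP is not bound on this node, so the packet leaves the node.
        return "routed out of the node"
    if dst in local_listeners or ("0.0.0.0", port) in local_listeners:
        # The IP is local and a local socket is listening on that port.
        return "local service"
    # The IP is local (for example on kube-ipvs0) but nothing accepts the port.
    return "connection refused"


# 192.168.1.10 is a hypothetical node IP; 192.168.1.200 is CLB1 bound to kube-ipvs0.
local_ips = {"192.168.1.10", "192.168.1.200"}
ipvs_rules = {("192.168.1.200", 80): [("192.168.1.101", 80)]}

print(resolve(("192.168.1.200", 80), local_ips, ipvs_rules, set()))
print(resolve(("192.168.1.200", 443), local_ips, ipvs_rules, set()))
print(resolve(("192.168.1.200", 443), local_ips, ipvs_rules, {("0.0.0.0", 443)}))
```

In this model, removing 192.168.1.200 from `local_ips` makes the port 443 request leave the node, which is the effect that keeping the CLB IP address off kube-ipvs0 is meant to achieve.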

The recommended solution is to use two separate CLBs for services inside and outside the cluster.

Alternatively, SVC1 can use the annotation "service.beta.kubernetes.io/alibaba-cloud-loadbalancer-hostname". With this annotation, the CLB's IP address is not bound to the kube-ipvs0 interface, so traffic sent to the CLB's IP address from within the cluster leaves the cluster and is forwarded by the CLB. Note that if the listener protocol is TCP or UDP, a loopback access issue may occur when the CLB's IP address is accessed from within the cluster. For more information, see the article "Client cannot access CLB [1]".

This annotation is supported only in CCM versions 2.3.0 and later. For detailed instructions, please refer to the documentation on "Add annotations to the YAML file of a Service to configure CLB instances [2]."

Demo:

    apiVersion: v1
    kind: Service
    metadata:
      annotations:
        service.beta.kubernetes.io/alibaba-cloud-loadbalancer-hostname: "${your_service_hostname}"
      name: nginx-svc
      namespace: default
    spec:
      ports:
      - name: http
        port: 80
        protocol: TCP
        targetPort: 80
      selector:
        app: nginx
      type: LoadBalancer

We all know that Kubernetes NodePort and LoadBalancer Services allow the external traffic policy to be adjusted. How can we explain the difference in IPVS rule creation among cluster nodes when externalTrafficPolicy is set to Local or Cluster, as shown in the diagram? And what happens when the NodePort or CLB IP address is accessed from within the cluster?

The following scenarios assume that the Service's internalTrafficPolicy is set to Cluster or left at the default. Note that the ServiceInternalTrafficPolicy feature is enabled by default in Kubernetes 1.22. For more details, see service-traffic-policy [3].

To learn more about the data links of Alibaba Cloud Containers in different Container Network Interface (CNI) scenarios, please see the following article series:

Analysis of Container Network Data Link Series: https://community.alibabacloud.com/series/158

In this article, we only discuss the behavior changes of IPVS TrafficPolicy Local when upgrading Kubernetes from version 1.22 to 1.24.

In the following example, the kube-proxy IPVS mode is used:

When the externalTrafficPolicy is set to Local:

https://github.com/kubernetes/kubernetes/pull/97081/commits/61085a75899a820b5eebfa71801e17423c1ca4da

If you access an SLB instance from outside the cluster, CCM attaches only the nodes that host endpoints (Local nodes) as SLB backends. This behavior is the same as in versions prior to Kubernetes 1.24. For more information, see the link above.

Versions prior to Kubernetes 1.24

• The NodePort of a node that has an endpoint can be accessed, and the source IP address can be preserved.

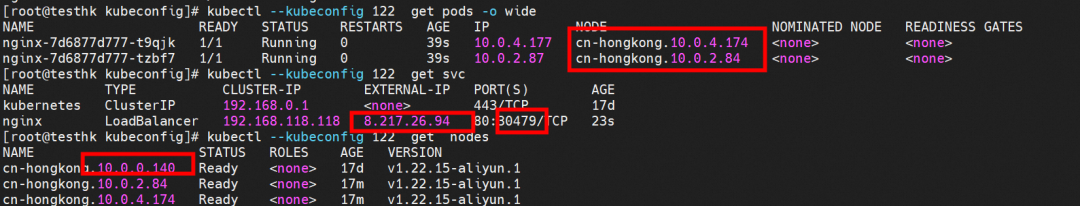

Nginx pods are distributed on the cn-hongkong.10.0.4.174 and cn-hongkong.10.0.2.84 nodes.

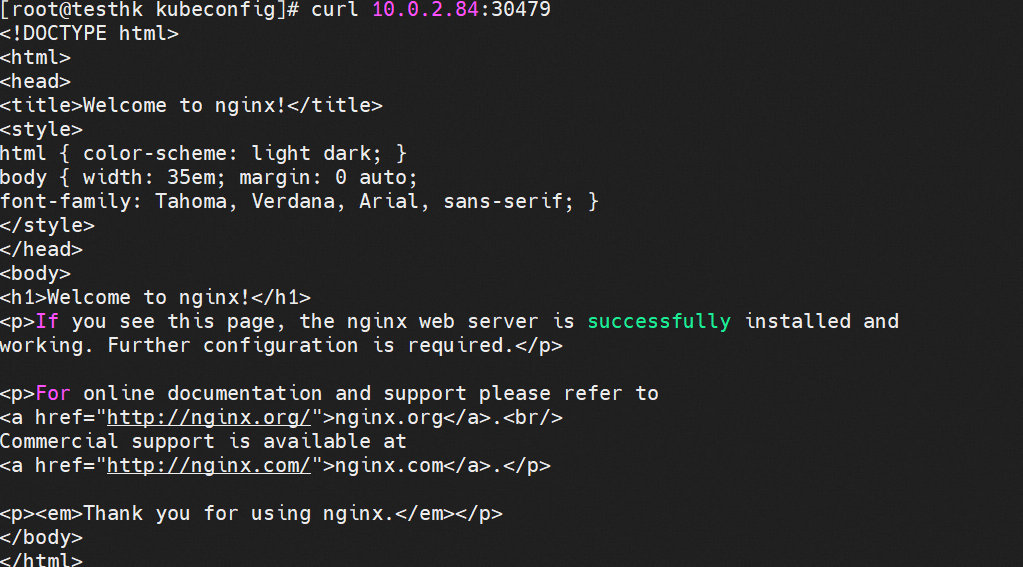

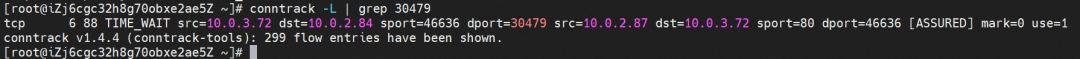

From the external 10.0.3.72 node, you can access port 30479 of the cn-hongkong.10.0.2.84 node, which hosts a backend pod.

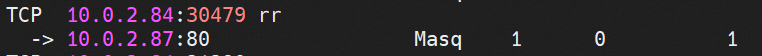

There are relevant IPVS rules on the cn-hongkong.10.0.2.84 node, but they contain only the IP address of the backend pod on that node.

On the cn-hongkong.10.0.2.84 node, the connection is DNATed (Destination Network Address Translation) to nginx-7d6877d777-tzbf7 10.0.2.87 on the same node, and the pod's reply is returned to the source through that node. All the relevant translations happen on this node, and the conntrack table shows why the TCP (layer 4) connection can be established.

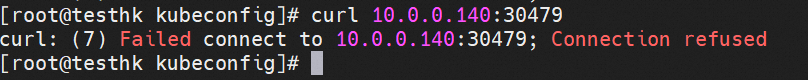

From the external 10.0.3.72 node, you cannot access port 30479 of the cn-hongkong.10.0.0.140 node, which has no backend pod.

On the cn-hongkong.10.0.0.140 node, there is no relevant IPVS forwarding rule, so DNAT cannot be performed and the access fails.

Versions after Kubernetes 1.24 (including Kubernetes 1.24)

The NodePort of a node that has an endpoint can be accessed, and the source IP address can be preserved.

Accessing the NodePort of a node that has no endpoint:

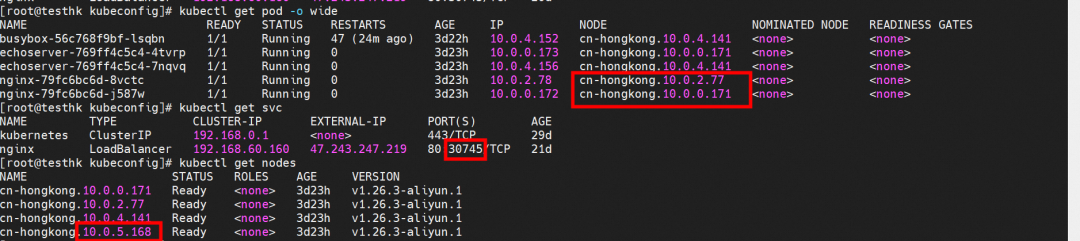

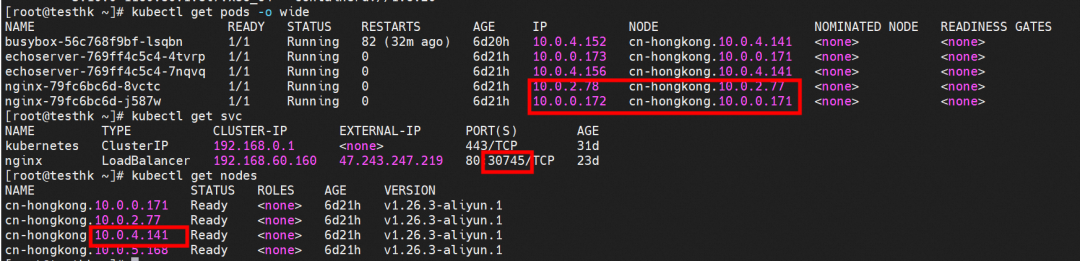

Nginx pods are distributed on the cn-hongkong.10.0.2.77 and cn-hongkong.10.0.0.171 nodes.

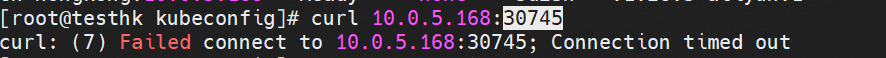

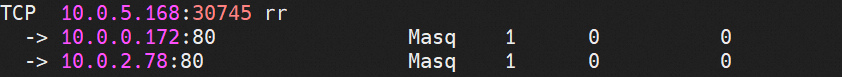

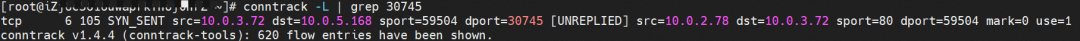

From the external 10.0.3.72 node, access port 30745 of the cn-hongkong.10.0.5.168 node, which has no backend pod. As you can see, the access fails.

There are relevant IPVS rules on the cn-hongkong.10.0.5.168 node, and all the IP addresses of backend pods are added to the IPVS rules.
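The difference in the IPVS backends between versions can be summarized with a small sketch. It models only the behavior reported in this article for externalTrafficPolicy set to Local, not the kube-proxy source code; the function name `real_servers` and the data layout are assumptions made for the example.

```python
from typing import Dict, List


def real_servers(node: str, endpoints: Dict[str, str], minor: int) -> List[str]:
    """Backends programmed on `node` for a Service with externalTrafficPolicy=Local.

    endpoints maps a pod IP to the name of the node that hosts the pod.
    minor is the Kubernetes minor version (22 for 1.22, 24 for 1.24).
    """
    local = sorted(ip for ip, host in endpoints.items() if host == node)
    if local:
        # A node with endpoints forwards only to its own pods.
        return local
    if minor >= 24:
        # From 1.24 on, a node without endpoints lists every backend pod.
        return sorted(endpoints)
    # Before 1.24, a node without endpoints has nothing to forward to.
    return []


endpoints = {
    "10.0.2.78": "cn-hongkong.10.0.2.77",
    "10.0.0.172": "cn-hongkong.10.0.0.171",
}

print(real_servers("cn-hongkong.10.0.5.168", endpoints, 22))
print(real_servers("cn-hongkong.10.0.5.168", endpoints, 24))
print(real_servers("cn-hongkong.10.0.2.77", endpoints, 24))
```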

On the cn-hongkong.10.0.5.168 node, the connection is DNATed to nginx-79fc6bc6d-8vctc 10.0.2.78 on the cn-hongkong.10.0.2.77 node, and the reply is returned to the source from that pod. When the source receives the reply, it finds that the packet does not match the 5-tuple of its connection and discards it. As a result, the three-way handshake inevitably fails. The conntrack table shows why the connection fails.
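The mismatch can be made concrete with a minimal sketch that tracks only addresses and ports. It assumes that the node performs DNAT without SNAT and that the pod replies straight to the client; the client source port 50000, the pod port 80, and the helper names `dnat` and `reply_to` are hypothetical.

```python
from typing import NamedTuple


class Packet(NamedTuple):
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int


def dnat(pkt: Packet, backend_ip: str, backend_port: int) -> Packet:
    """Rewrite only the destination and keep the original source (no SNAT)."""
    return pkt._replace(dst_ip=backend_ip, dst_port=backend_port)


def reply_to(pkt: Packet) -> Packet:
    """Build the reply to a received packet by swapping source and destination."""
    return Packet(pkt.dst_ip, pkt.dst_port, pkt.src_ip, pkt.src_port)


# The client 10.0.3.72 opens a connection to NodePort 30745 on the 10.0.5.168 node.
syn = Packet("10.0.3.72", 50000, "10.0.5.168", 30745)
expected = reply_to(syn)               # what the client's TCP stack waits for
at_pod = dnat(syn, "10.0.2.78", 80)    # what the pod on another node receives
actual = reply_to(at_pod)              # what the pod sends back to the client

print("expected:", expected)
print("actual:  ", actual)
print("accepted by client:", actual == expected)
```

The reply arrives from 10.0.2.78:80 instead of 10.0.5.168:30745, so it belongs to no connection that the client knows about.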

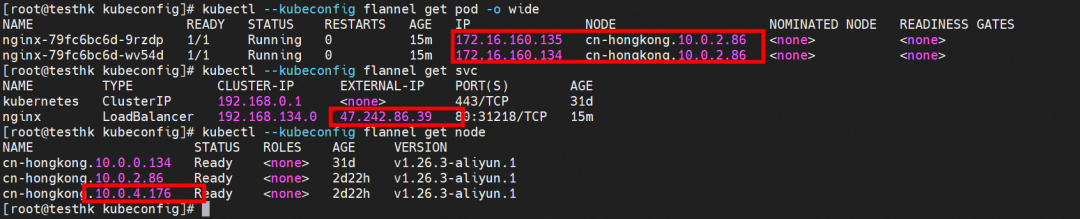

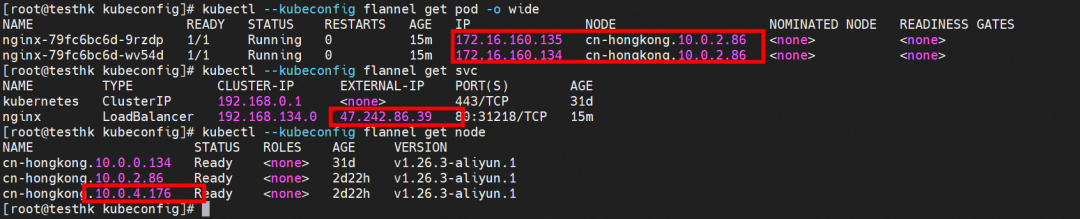

Nginx pods are distributed on the cn-hongkong.10.0.2.86 node.

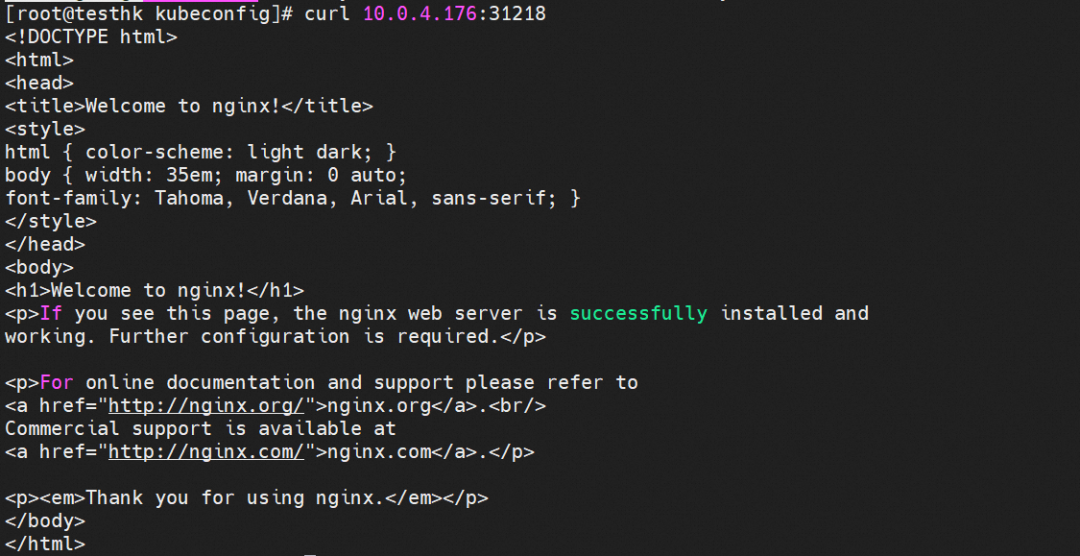

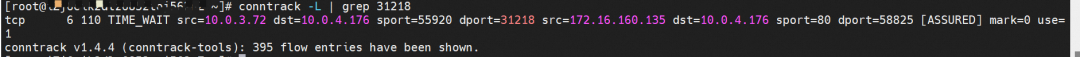

You can access port 31218 of the cn-hongkong.10.0.4.176 node from outside the cluster.

The cn-hongkong.10.0.4.176 node records that the source is 10.0.3.72 and performs DNAT to 172.16.160.135, expecting the reply to return to port 58825 of 10.0.4.176.

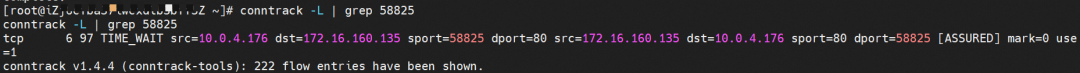

The backend endpoint is on the cn-hongkong.10.0.2.86 node. Its conntrack table records that src is 10.0.4.176 and sport is 58825. The application pod therefore sees 10.0.4.176 as the source IP address, and the original client IP address is lost.
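A minimal sketch of this DNAT plus SNAT path shows why the connection works while the client IP address disappears. It is an illustration under stated assumptions, not the kernel's implementation: the client source port 40000, the pod port 80, the dictionary used as a conntrack table, and the helper names are hypothetical.

```python
from typing import Dict, NamedTuple


class Packet(NamedTuple):
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int


def reply_to(pkt: Packet) -> Packet:
    """Build the reply to a received packet by swapping source and destination."""
    return Packet(pkt.dst_ip, pkt.dst_port, pkt.src_ip, pkt.src_port)


def full_nat(pkt: Packet, node_ip: str, snat_port: int,
             backend_ip: str, backend_port: int,
             conntrack: Dict[Packet, Packet]) -> Packet:
    """Apply DNAT and SNAT, and remember how to translate the reply back."""
    translated = Packet(node_ip, snat_port, backend_ip, backend_port)
    conntrack[reply_to(translated)] = reply_to(pkt)
    return translated


conntrack: Dict[Packet, Packet] = {}
syn = Packet("10.0.3.72", 40000, "10.0.4.176", 31218)
at_pod = full_nat(syn, "10.0.4.176", 58825, "172.16.160.135", 80, conntrack)

# The pod sees the node, not the client, as the source.
print("source seen by pod:", at_pod.src_ip, at_pod.src_port)

# The pod replies to the node, which restores the addresses the client expects.
to_client = conntrack[reply_to(at_pod)]
print("accepted by client:", to_client == reply_to(syn))
```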

Versions prior to Kubernetes 1.24

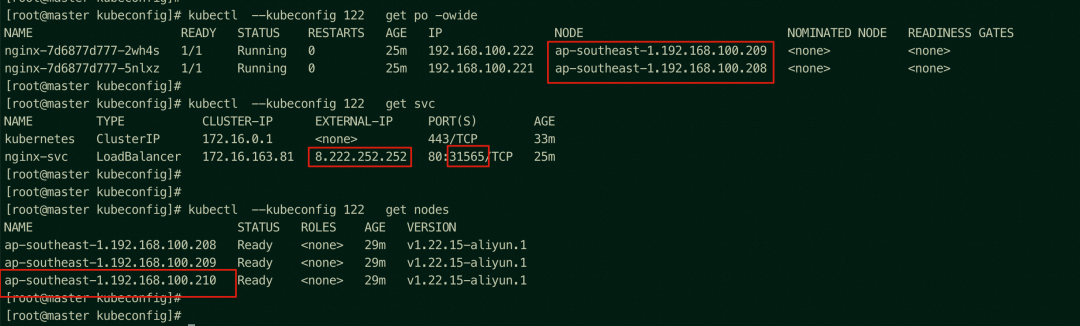

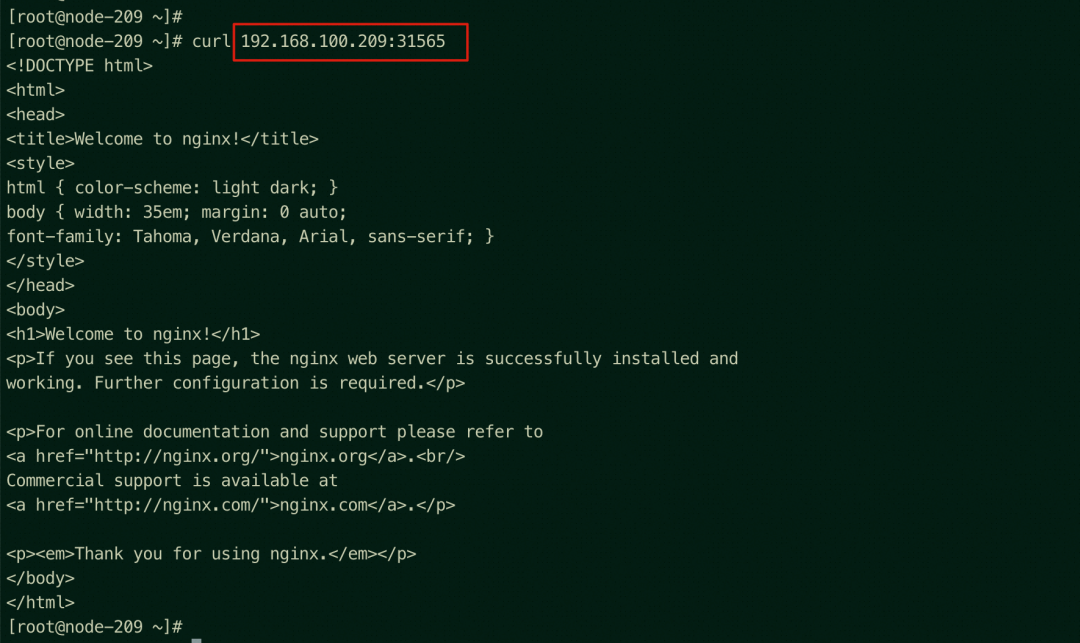

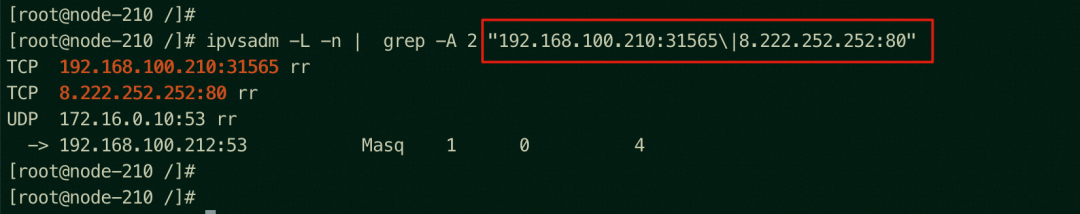

Nginx pods are distributed on the ap-southeast-1.192.168.100.209 and ap-southeast-1.192.168.100.208 nodes. The ap-southeast-1.192.168.100.210 node has no Nginx pods.

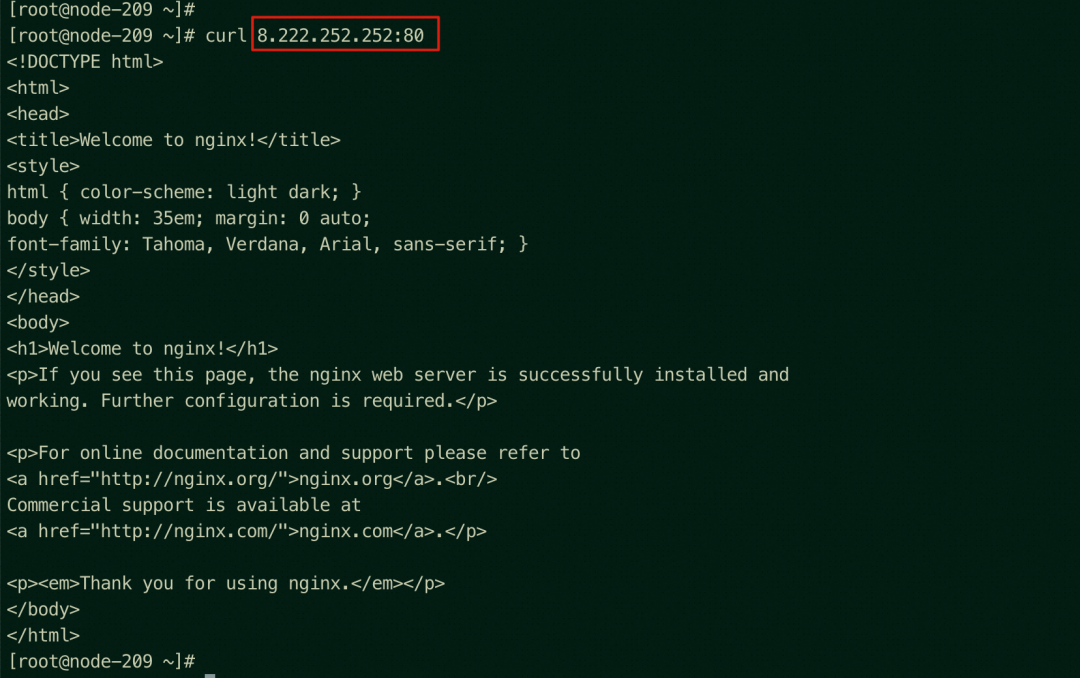

From any node in the cluster (the 209 node in this example), you can access NodePort 31565 of the ap-southeast-1.192.168.100.209 node, which hosts a backend pod.

From the ap-southeast-1.192.168.100.209 node, which hosts a backend pod, you can access port 80 of SLB 8.222.252.252.

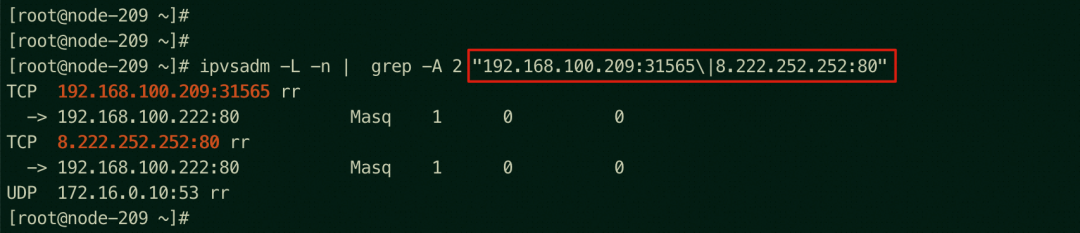

The ap-southeast-1.192.168.100.209 node has IPVS rules for both the NodePort and the SLB, but they contain only the IP address of the backend pod on that node.

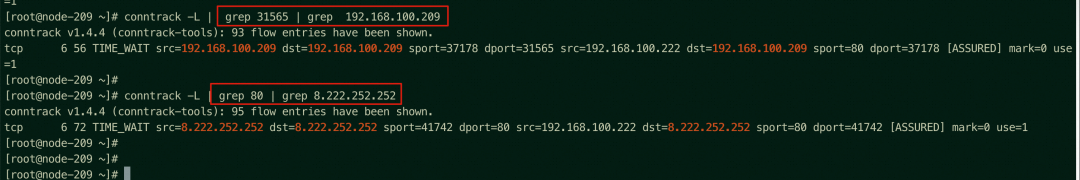

On the ap-southeast-1.192.168.100.209 node, the connection is DNATed to nginx-7d6877d777-2wh4s 192.168.100.222 on the same node, and the pod's reply is returned to the source. All the related translations happen on this node, and the conntrack table shows why the TCP (layer 4) connection can be established.

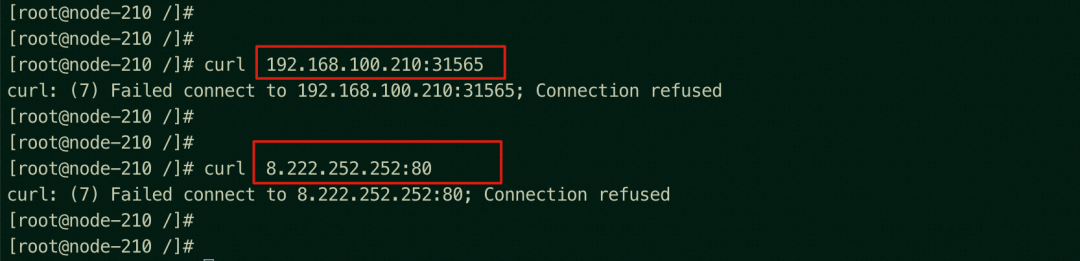

From any node in the cluster (the 210 node in this example), you cannot access NodePort 31565 of the ap-southeast-1.192.168.100.210 node, which has no backend pod, or the SLB instance.

This further confirms that traffic sent from within the cluster to an SLB instance associated with a Service does not leave the node. Even if the SLB has other listening ports, access to those ports is refused.

On the ap-southeast-1.192.168.100.210 node, there is no relevant IPVS forwarding rule, so DNAT cannot be performed and the access fails.

Versions after Kubernetes 1.24 (including Kubernetes 1.24)

• The NodePort of a node that has an endpoint can be accessed, and the source IP address can be preserved.

This is the same as the intra-cluster access of versions prior to Kubernetes 1.24. For more information, see the preceding description.

Nginx pods are distributed on the nodes of cn-hongkong.10.0.2.77 and cn-hongkong.10.0.0.171, so the test is conducted on the cn-hongkong.10.0.4.141 node without Nginx pods.

There are the following situations:

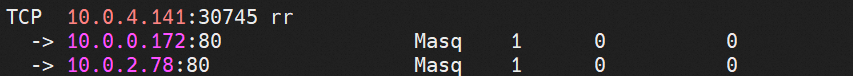

The IPVS rules for NodePort 10.0.4.141:30745 on the node without endpoints include all the Nginx backend pods: nginx-79fc6bc6d-8vctc 10.0.2.78 and nginx-79fc6bc6d-j587w 10.0.0.172.

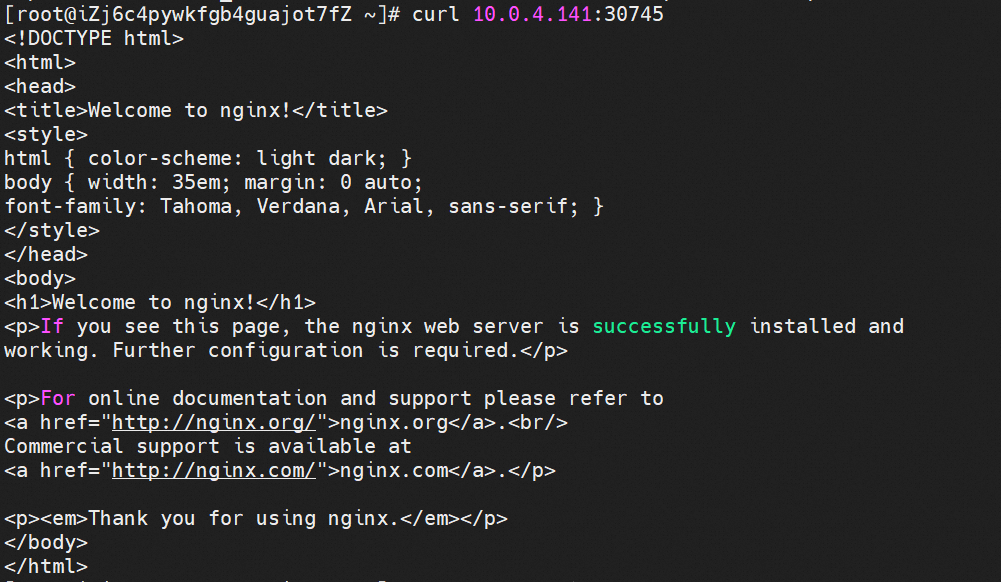

A node in the cluster can access the TCP NodePort 30745 of the cn-hongkong.10.0.4.141 node, which has no backend pod.

The conntrack table shows that on the cn-hongkong.10.0.4.141 node the connection is DNATed, and the reply is returned to the source by nginx-79fc6bc6d-8vctc 10.0.2.78, one of the backend Nginx pods.

The conntrack table on the cn-hongkong.10.0.2.77 node, where nginx-79fc6bc6d-8vctc 10.0.2.78 runs, records that 10.0.4.141 accesses 10.0.2.78 and expects 10.0.2.78 to reply directly to port 39530 of 10.0.4.141.

A node with an endpoint in the cluster cannot access NodePort 32292 of the ap-southeast-1.192.168.100.131 node, which has no backend pod. This is the same as out-of-cluster access in Kubernetes 1.24 and later. For more information, see the preceding description.

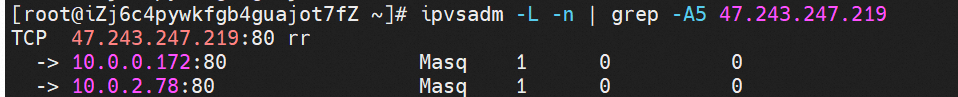

The IPVS rules for the SLB IP address on the node without endpoints include all the Nginx backend pods: nginx-79fc6bc6d-8vctc 10.0.2.78 and nginx-79fc6bc6d-j587w 10.0.0.172.

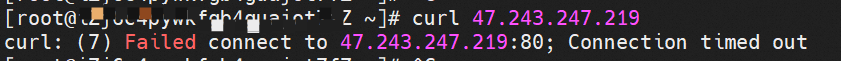

When SLB 47.243.247.219 is accessed from a node without an endpoint, the access does time out.

The conntrack table shows that when the SLB IP address is accessed on a node without an endpoint, the backend pod is expected to reply to the SLB IP address. However, the SLB IP address is virtually occupied by kube-ipvs on the node and SNAT is not performed, so the access fails.

Nginx pods are distributed on the cn-hongkong.10.0.2.86 node.

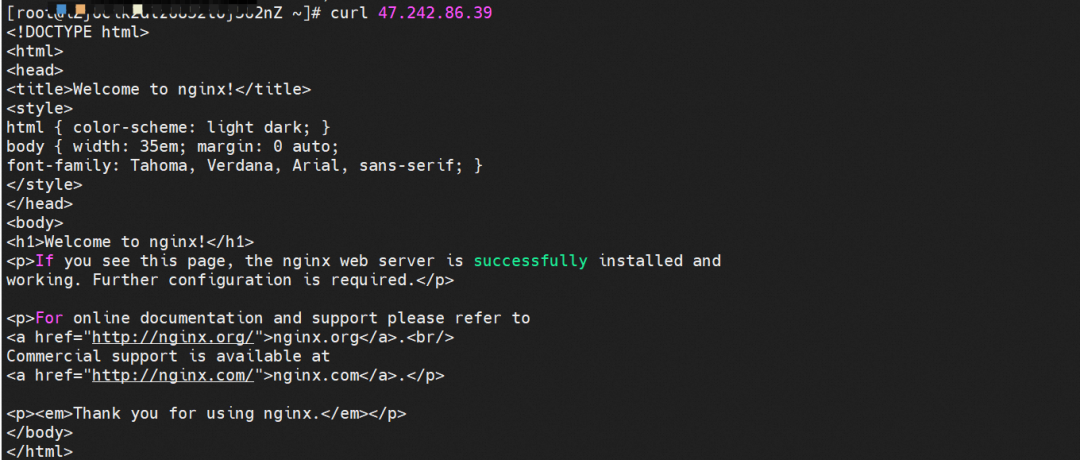

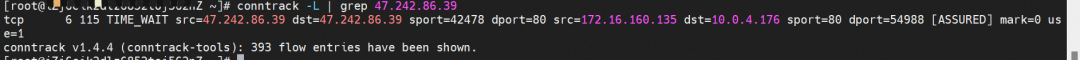

SLB 47.242.86.39 can be accessed from the cn-hongkong.10.0.4.176 node.

The conntrack table of the cn-hongkong.10.0.4.176 node shows that both src and dst are 47.242.86.39, but the reply is expected to return from the Nginx pod 172.16.160.135 to port 54988 of 10.0.4.176. In other words, SNAT rewrites the source 47.242.86.39 to 10.0.4.176.

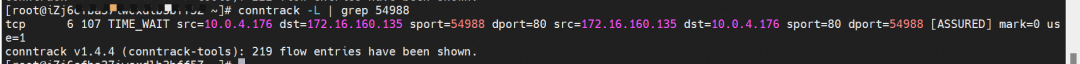

The backend endpoint is on the cn-hongkong.10.0.2.86 node. Its conntrack table records that src is 10.0.4.176 and sport is 54988. The application pod therefore sees 10.0.4.176 as the source IP address, and the original source IP address is lost.

[1] Client cannot access CLB

https://www.alibabacloud.com/help/en/doc-detail/55206.htm

[2] Add annotations to the YAML file of a Service to configure CLB instances

https://www.alibabacloud.com/help/en/ack/ack-managed-and-ack-dedicated/user-guide/add-annotations-to-the-yaml-file-of-a-service-to-configure-clb-instances

[3] service-traffic-policy

https://kubernetes.io/docs/concepts/services-networking/service-traffic-policy/

[4] Community PR

https://github.com/kubernetes/kubernetes/pull/97081/commits/61085a75899a820b5eebfa71801e17423c1ca4da
