By Buchen

How did serverless computing emerge? Where is it implemented? How will it develop in the future? In this article, a senior technical expert from Alibaba shares some views on serverless computing, reviews its development, and forecasts its future trends.

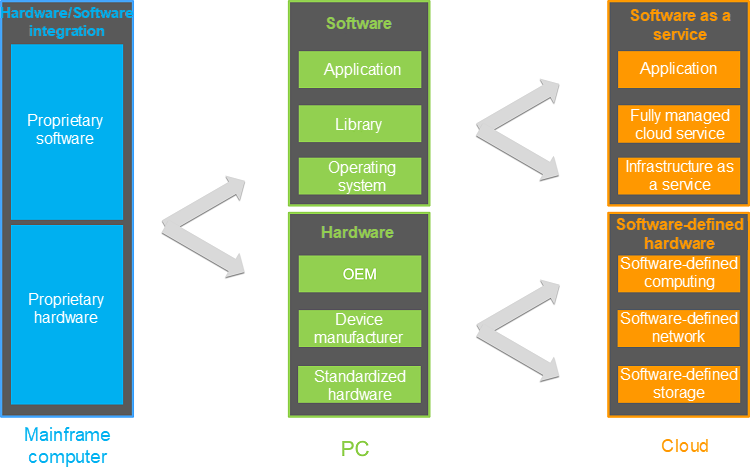

Looking back at the history of computing, you will find that abstraction, decoupling, and integration run through it. Each round of abstraction, decoupling, and integration has pushed innovation to new heights, bringing huge market growth and new business models.

In the mainframe era, hardware and software were custom-built: each system came with proprietary hardware, operating systems, and application software.

In the PC era, hardware was abstracted and decoupled into standardized components, such as the CPU, memory, hard disk, motherboard, and USB devices. Components produced by different manufacturers could be easily combined and assembled into a complete machine. Software was likewise abstracted and decoupled into reusable components, such as operating systems and libraries. The abstraction and decoupling of hardware and software created new business models, increased productivity, and contributed to the prosperity of the PC era.

In the cloud era, software-defined hardware and software as a service (SaaS) have become two notable trends.

With software-defined hardware, more features are provided by software rather than hardware, which accelerates iteration and reduces costs. Take software-defined storage (SDS) as an example. SDS is an approach to building data storage that places a layer of software between storage and applications to manage how and where data is stored. Decoupling software from hardware enables SDS to run on any industry-standard or x86 system, so you can use standard commercial servers to meet growing storage demands. It also enables SDS to scale out horizontally, simplifying capacity planning and cost management.
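The software layer described above can be sketched in a few lines of Python. This is a minimal illustration under simplifying assumptions, not how any particular SDS product works: `InMemoryBackend` is a hypothetical stand-in for the disks of one commodity server, and the placement policy simply sends new keys to the least-loaded backend while an index remembers where each key lives, so adding a backend (scaling out) never strands existing data.

```python
from abc import ABC, abstractmethod


class StorageBackend(ABC):
    """Abstract device interface; applications never call it directly."""

    @abstractmethod
    def write(self, key: str, data: bytes) -> None: ...

    @abstractmethod
    def read(self, key: str) -> bytes: ...


class InMemoryBackend(StorageBackend):
    """Hypothetical stand-in for the disks of one commodity x86 server."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._blobs: dict[str, bytes] = {}

    def write(self, key: str, data: bytes) -> None:
        self._blobs[key] = data

    def read(self, key: str) -> bytes:
        return self._blobs[key]


class SoftwareDefinedStore:
    """Software layer between applications and storage backends.

    It decides where each object lives (placement) and records that
    decision in an index, so applications only see put/get.
    """

    def __init__(self, backends: list[StorageBackend]) -> None:
        self.backends = list(backends)
        self._index: dict[str, StorageBackend] = {}  # key -> backend holding it

    def add_backend(self, backend: StorageBackend) -> None:
        # Horizontal scale-out: new capacity is used for future writes,
        # and existing keys stay readable because the index is unchanged.
        self.backends.append(backend)

    def _least_loaded(self) -> StorageBackend:
        counts = {id(b): 0 for b in self.backends}
        for b in self._index.values():
            counts[id(b)] += 1
        return min(self.backends, key=lambda b: counts[id(b)])

    def put(self, key: str, data: bytes) -> None:
        # Overwrites stay on the backend that already holds the key.
        backend = self._index.get(key) or self._least_loaded()
        backend.write(key, data)
        self._index[key] = backend

    def get(self, key: str) -> bytes:
        return self._index[key].read(key)

    def location(self, key: str) -> StorageBackend:
        return self._index[key]
```

For example, after creating a store with one backend, writing a key, and then calling `add_backend`, the next new key lands on the new, emptier backend while the first key remains readable from the original one. Applications never learn which server holds their data, which is the decoupling SDS relies on.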

SaaS allows software to be centrally hosted and delivered remotely to a large number of users. Services have become the foundation on which applications are built. APIs are implemented and provided to developers as services. The microservice architecture has achieved widespread success. Services have become a basic form of cloud products. All of these changes over the past ten years have contributed to the success of cloud computing. You can obtain servers simply by calling APIs, without building your own data center. Computing capabilities have never been so accessible to users.

Do you still remember Google's famous book "The Datacenter as a Computer," in which the authors treated the data center as a massive warehouse-scale computer (WSC)? Similarly, the cloud can be treated as the computer of the Data Technology (DT) era. More APIs are being implemented, and more services are becoming fully managed on the cloud. You may wonder what programming model best suits the cloud era and how to build cloud-based applications in a way that combines abstraction, decoupling, and integration.

Before answering these questions, let's turn our attention to the SaaS field. Salesforce is a star enterprise in the SaaS field, and its experience in building capability-providing platforms offers an excellent example. Early SaaS products adopted a standardized delivery model and achieved integration through APIs. As more products were developed and its customer base grew, Salesforce faced the following challenges:

Salesforce's strategy was to integrate the entire business, including technology and organization, and make it accessible through a platform. The platform amplified the enterprise's value and benefited the enterprise, its customers, and developers. By continuously improving the platform's application delivery capabilities, Salesforce greatly improved its product R&D efficiency and strengthened the integration and combination of its products. Salesforce also made its products much easier to integrate with external systems, which helped it build a developer community.

Since 2006, Salesforce has invested heavily in building capability-providing platforms. For example, it launched the Apex programming language and the Visualforce UI framework to let customers, partners, and developers write and run custom logic in a multi-tenant environment. Building on these, Salesforce launched Force.com, its self-developed platform, as a service in 2008. On this platform, customers can build their own applications using Salesforce's products. In 2010, Salesforce acquired Heroku, a popular platform as a service (PaaS) provider. In 2019, Salesforce launched a serverless computing platform, Evergreen, to strengthen its application building and integration capabilities. Beyond application building, Salesforce has also invested heavily in recent years in the mobility, data management, and intelligence of applications, extending the platform's capabilities into related fields. This helps customers achieve data-driven, intelligent management and increases their sales through data analysis and transaction matching.

From the development of Salesforce, we can draw the following conclusions:

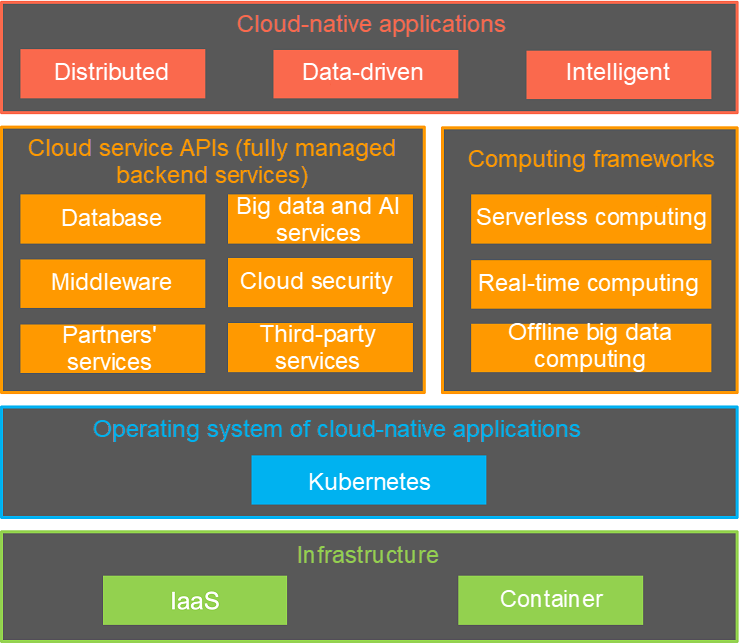

Although cloud computing can be more complicated than SaaS, it follows a similar development logic. Almost all cloud service features are exposed through APIs. Cloud service providers also treat developing platform programming models, improving value delivery capabilities, and building application development ecosystems as their most important goals. Looking at the cloud service system from the perspective of programming models gives a clearer view of how its many, complicated services are positioned.

Infrastructure as a Service (IaaS) and container technology are the foundations of cloud computing architecture. A container orchestration service, such as Kubernetes, serves as the operating system of cloud-native applications. Backend as a Service (BaaS) offerings serve as the domain-specific APIs through which applications connect to cloud services. To achieve higher productivity, many BaaS services in fields such as storage, database, middleware, big data, and AI are serverless and fully managed. This has been going on for many years. For example, users are accustomed to using serverless object storage services instead of building their own server-based storage systems. With the cloud providing abundant serverless BaaS services, a new general-purpose computing service is needed to help users simplify infrastructure building and build applications on top of cloud services. Serverless computing emerged in response to this demand. Below are some of its characteristics:

As users' understanding of serverless computing has grown and product capabilities have improved, serverless computing has become more popular in recent years. Serverless architecture can bring users significant benefits in reliability, cost, R&D, and O&M.

Mini programs, web applications, mobile applications, and APIs have complex, frequently changing logic and demand fast iteration and release. In addition, the resource utilization rate of such online applications is usually below 30%, and for long-tail applications, such as mini programs, it is below 10%. Because serverless computing requires no O&M from users and uses pay-as-you-go billing, it is well suited for building backends for mini programs, web applications, mobile applications, and APIs. By combining reserved computing resources with real-time auto scaling, developers can build online applications that deliver consistently low latency and cope with high traffic. At Alibaba, serverless computing is mostly used to build backend services, such as machine learning algorithm services, mini program platforms, and Serverless for Frontends, which gives frontend developers full-stack capabilities.
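The utilization figures above are what drive the billing argument. The sketch below works through it with hypothetical numbers: the instance-hour price and the `premium` factor (a pay-as-you-go unit price assumed to be higher than the reserved price) are illustrative assumptions, not Alibaba Cloud list prices. It also shows one simple way to combine reserved instances with auto scaling, assuming each instance handles a fixed number of concurrent requests.

```python
import math


def reserved_cost(peak_instances: int, hours: float, price_per_hour: float) -> float:
    """Servers sized for peak load are billed for every hour, busy or idle."""
    return peak_instances * hours * price_per_hour


def pay_as_you_go_cost(peak_instances: int, hours: float, utilization: float,
                       price_per_hour: float, premium: float = 1.0) -> float:
    """Only the resources actually used are billed, at a possibly higher unit price."""
    return peak_instances * hours * utilization * price_per_hour * premium


def break_even_utilization(premium: float) -> float:
    """Below this utilization, pay-as-you-go is cheaper than reserved capacity."""
    return 1.0 / premium


def instances_needed(concurrent_requests: int, per_instance_concurrency: int,
                     reserved: int) -> int:
    """Reserved instances form a floor; auto scaling adds instances above it."""
    return max(reserved, math.ceil(concurrent_requests / per_instance_concurrency))


# Hypothetical month: 10 instances at peak, 720 hours, 0.1 per instance-hour,
# 10% average utilization (the long-tail figure above), 2x pay-as-you-go premium.
monthly_reserved = reserved_cost(10, 720, 0.1)
monthly_serverless = pay_as_you_go_cost(10, 720, 0.10, 0.1, premium=2.0)
```

Under these assumptions the reserved setup costs about 720 per month and the pay-as-you-go setup about 144. Even with a 2x unit-price premium, the break-even point is 50% utilization, well above the 10% to 30% typical of such applications.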

Typical offline batch task execution systems, such as large-scale audio and video transcoding services, provide features such as computing resource management, priority-based task scheduling, task orchestration, reliable task execution, and task data visualization. Users who build a batch task execution system at the machine or container level usually use message queues to store task information and allocate computing resources, and use container orchestration systems, such as Kubernetes, for resource scaling and fault tolerance. They also need to build or integrate monitoring and alerting systems. If tasks involve multiple steps, users need to integrate workflow services to execute the steps reliably. With a serverless computing platform, however, users only need to focus on implementing the task execution logic. The platform's auto scaling lets users meet computing power requirements with ease when tasks arrive in bursts. The table below summarizes the differences between a user-built system and a serverless computing platform:

| Item | User-Built System | Serverless Computing Platform |
| --- | --- | --- |
| Task Execution Efficiency | Depends on the auto scaling capability and task scheduling efficiency of the user-built system. When a large number of tasks must run simultaneously, they are queued, which may cause delays. | The platform draws on underlying resource pools, such as elastic computing resources, so it scales in real time and handles tasks promptly. With serverless workflows, it can orchestrate complex tasks. It also dynamically adjusts the computing resources allocated to tasks based on their priorities, so higher-priority tasks run earlier. |
| Cost | Depends on how efficiently the user-built system schedules tasks and manages resources. | Resource utilization is usually 10% to 30% higher than that of user-built systems, making this solution more cost-effective. |
| Development | In addition to the business logic, users must develop a task execution system that ensures reliable task execution. | Users can deploy applications with one click and focus only on their business logic. |
| O&M | Users build the system from scratch and may run into problems with software installation, service configuration, and security updates. | The platform requires no O&M from users and provides powerful problem diagnosis and data visualization capabilities. |
| Project Launch Cycle | Users must complete hardware purchases, software and environment configuration, system development, testing, monitoring and alerting, and a canary release before launch. A launch cycle takes at least 30 person-days (30 × 8 hours). | Three person-days (3 × 8 hours): two for development and debugging and one for stress testing and observation. |
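To make the User-Built System column concrete, here is a minimal in-process sketch of two pieces such a system needs: priority-based scheduling and retries for reliable execution. It uses Python's `heapq` as a stand-in for a message queue; a real system would also need persistence, distributed workers, scaling, and monitoring. `Task`, `TaskRunner`, and their parameters are hypothetical names. On a serverless computing platform, this machinery is the platform's job, and the user writes only the task body.

```python
import heapq
import itertools
from dataclasses import dataclass, field
from typing import Callable


@dataclass(order=True)
class Task:
    priority: int                 # lower value runs earlier
    seq: int                      # tie-breaker: FIFO within a priority
    name: str = field(compare=False)
    run: Callable[[], None] = field(compare=False)
    attempts: int = field(default=0, compare=False)


class TaskRunner:
    """Hypothetical single-process scheduler with bounded retries."""

    def __init__(self, max_attempts: int = 3) -> None:
        self._queue: list[Task] = []
        self._seq = itertools.count()
        self.max_attempts = max_attempts
        self.completed: list[str] = []
        self.failed: list[str] = []

    def submit(self, name: str, run: Callable[[], None], priority: int = 10) -> None:
        heapq.heappush(self._queue, Task(priority, next(self._seq), name, run))

    def drain(self) -> None:
        """Run tasks in priority order until the queue is empty."""
        while self._queue:
            task = heapq.heappop(self._queue)
            task.attempts += 1
            try:
                task.run()
            except Exception:
                if task.attempts < self.max_attempts:
                    # Requeue behind other tasks of the same priority.
                    task.seq = next(self._seq)
                    heapq.heappush(self._queue, task)
                else:
                    self.failed.append(task.name)
            else:
                self.completed.append(task.name)
```

In a quick run with a flaky high-priority task, a permanently failing task, and a low-priority task, the flaky task succeeds on its second attempt, the failing task is given up after three attempts, and the low-priority task runs last.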

Serverless computing services are usually integrated with various types of services on the cloud in an event-driven manner. Users do not need to manage infrastructure, such as servers, or write glue code to integrate multiple services. Instead, they can build applications with an event-driven architecture that easily integrate loosely coupled, distributed resources.

Take Alibaba Cloud Function Compute as an example. To implement backend API services, users can integrate Function Compute with API Gateway. For large-scale data processing centered on Object Storage Service (OSS), users can integrate Function Compute with OSS so that Function Compute responds to OSS events, such as object creation and deletion, in real time. To process large volumes of messages, users can integrate Function Compute with message middleware so that it responds to message events. Through integration with Alibaba Cloud EventBridge, Function Compute can process Alibaba Cloud events, third-party SaaS service events, and events from user-built systems.
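As an illustration of the OSS integration, below is a minimal sketch of a Python function that reacts to object creation and deletion. It assumes the Function Compute Python runtime's `handler(event, context)` entry point with the event delivered as JSON bytes, and an OSS event payload containing an `events` list whose items carry `eventName`, `oss.bucket.name`, and `oss.object.key`; check the Function Compute documentation for the exact payload your trigger delivers. The processing itself is a placeholder that only reports what it saw.

```python
import json


def handler(event, context):
    """Handle OSS object events delivered to Function Compute.

    Assumes `event` is JSON (bytes or str) shaped like
    {"events": [{"eventName": ..., "oss": {"bucket": {"name": ...},
                                           "object": {"key": ...}}}]}.
    """
    payload = json.loads(event)
    results = []
    for record in payload.get("events", []):
        name = record.get("eventName", "")
        bucket = record["oss"]["bucket"]["name"]
        key = record["oss"]["object"]["key"]
        if name.startswith("ObjectCreated:"):
            # Placeholder for real work, e.g. transcoding a newly uploaded file.
            results.append(f"created {bucket}/{key}")
        elif name.startswith("ObjectRemoved:"):
            # Placeholder for cleanup, e.g. deleting derived artifacts.
            results.append(f"removed {bucket}/{key}")
    return json.dumps(results)
```

The function holds no server, queue, or polling loop; the trigger delivers each event, and scaling with event volume is left to the platform.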

Time triggers allow users to run scheduled tasks as functions without managing the underlying servers. With CloudMonitor triggers, users can be notified of IaaS O&M events, such as ECS instance restarts or failures and OSS flow control. By integrating CloudMonitor with Function Compute, users can have these O&M events handled automatically when they occur.
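Automatic handling of O&M events usually comes down to routing each event type to a remediation action. The sketch below shows that pattern. The event types (`ecs.restart`, `ecs.failure`, `oss.flow_control`), the `type`/`detail` payload fields, and the actions are all hypothetical placeholders; real CloudMonitor event names and payloads differ, and real actions would call cloud APIs instead of returning strings.

```python
import json
from typing import Callable, Dict

# Registry of hypothetical event types -> remediation functions.
_ROUTES: Dict[str, Callable[[dict], str]] = {}


def on_event(event_type: str):
    """Decorator that registers a function as the handler for one event type."""
    def register(fn: Callable[[dict], str]) -> Callable[[dict], str]:
        _ROUTES[event_type] = fn
        return fn
    return register


@on_event("ecs.restart")
def record_restart(detail: dict) -> str:
    return f"logged restart of {detail['instanceId']}"


@on_event("ecs.failure")
def replace_instance(detail: dict) -> str:
    # Placeholder: a real action would call the ECS API.
    return f"requested replacement for {detail['instanceId']}"


@on_event("oss.flow_control")
def back_off(detail: dict) -> str:
    # Placeholder: a real action might lower a client's request rate.
    return f"reduced request rate for bucket {detail['bucket']}"


def handler(event, context):
    """Entry point invoked once per O&M event (payload shape is assumed)."""
    payload = json.loads(event)
    action = _ROUTES.get(payload.get("type"))
    if action is None:
        return "ignored"  # unknown event types are skipped
    return action(payload.get("detail", {}))
```

Keeping each remediation in its own small function makes it easy to add a response for a new event type without touching the dispatch logic.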

In recent years, serverless computing has developed rapidly and has had a large influence on the computing industry. Mainstream cloud service providers keep enriching their cloud service systems and providing better development tools, more efficient pipelines for application delivery, better monitoring tools, and tighter integration between services. Serverless computing has come a long way, but this is just the beginning.

Complex technical solutions, including not only cloud services but also partner and third-party services, will be offered as fully managed, serverless backend services. The cloud and its ecosystem will expose their capabilities to serverless computing platforms as APIs. For any platform that provides capabilities through APIs, such as DingTalk, WeChat, or Didi, serverless computing will be the most important part of its platform strategy.

Container technology brings disruptive innovation to application portability and agile delivery. It can be seen as a revolution in how modern applications are built and delivered.

Containers are the foundation for running modern applications, but users still need to manage infrastructure, such as servers. For example, users must spend considerable effort on resource utilization and machine O&M. Hence, serverless container services, such as AWS Fargate and Alibaba Cloud ECI, have emerged to help users focus on building containerized applications without bearing infrastructure management costs. Serverless computing services, such as Function Compute, bring users automatic scaling, extreme elasticity, and on-demand billing. However, these services face challenges in compatibility with users' development habits, portability, tool chains, and ecosystem. Containers handle these problems well. As container technology develops, container images are sure to become the distribution format for more serverless applications, including those on Function Compute. Combining the extensive tool ecosystem of container technology with the O&M-free operation and extreme elasticity of serverless computing will bring a brand-new experience to users.

We discussed the significance of connecting Function Compute with other cloud services through an event-driven approach in the previous sections. In the future, all services on the cloud and its ecosystem will be connected. All events related to users' own applications or partners' services, whether they occur in on-premises environments or public clouds, can be processed in a serverless manner. The cloud and its ecosystem will be more closely connected, providing a solid foundation on which users can build flexible and highly available applications.

Virtual machines and containers are two virtualization technologies with different orientations. The former offer strong security at high overhead, while the latter offer low overhead with weaker security. Serverless computing platforms require the strongest security and the lowest resource overhead at the same time. They must also be compatible with existing ways of executing programs; for example, a serverless computing platform must be able to run arbitrary binaries. This makes it impractical to build a serverless computing platform on language-specific VMs. Hence, new lightweight virtualization technologies, such as AWS Firecracker and Google gVisor, have emerged. Take AWS Firecracker as an example. It provides a minimal device model and optimizes kernel loading, enabling startup within 100 milliseconds with minimal memory overhead. A single bare metal instance can run thousands of these lightweight instances. With resource scheduling algorithms that are aware of application load, cloud service providers hope to raise the oversell (overcommitment) rate by an order of magnitude while maintaining stable performance.
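A back-of-the-envelope calculation shows why per-instance overhead and overcommitment matter for density. All numbers here are hypothetical: a host with 384 GiB of memory, 128 MiB functions, an assumed virtualization overhead of a few MiB for a lightweight microVM versus tens of MiB for a conventional VM, and an overcommitment factor applied only to guest memory, on the assumption that instances rarely use their full allocation at the same time.

```python
def max_instances(host_mem_mib: int, guest_mem_mib: int, overhead_mib: int,
                  overcommit: float = 1.0) -> int:
    """Instances that fit on one host, limited by memory only.

    Guest memory is divided by the overcommitment factor (the bet that
    instances do not all use their full allocation at once); the
    virtualization overhead is treated as always resident.
    """
    per_instance = guest_mem_mib / overcommit + overhead_mib
    return int(host_mem_mib // per_instance)


HOST_MIB = 384 * 1024  # hypothetical bare metal host with 384 GiB

lightweight = max_instances(HOST_MIB, 128, 5)               # microVM-style overhead
conventional = max_instances(HOST_MIB, 128, 64)             # heavier VM overhead
overcommitted = max_instances(HOST_MIB, 128, 5, overcommit=2.0)
```

These give 2,956, 2,048, and 5,698 instances respectively. Lower fixed overhead raises density directly, and overcommitment only pays off if load-aware scheduling keeps instances that peak together off the same host.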

As the scale and influence of serverless computing constantly expand, it becomes important to implement end-to-end optimization at the application framework, language, and hardware levels based on the load characteristics of serverless computing. A new Java VM technology improves the startup speed of Java applications. Non-volatile memory helps instances wake up from sleep mode faster. CPUs work together with operating systems to achieve fine-grained isolation of performance disturbance factors in high-density computing environments. All of these new technologies are contributing to new computing environments.

Another approach to achieving higher performance-to-power and performance-to-price ratios is to support heterogeneous hardware. For some time now, improving the performance of x86 processors has become increasingly difficult. In scenarios that require high computing power, such as AI, GPUs, FPGAs, and TPUs offer better computing efficiency. As virtualization of heterogeneous hardware, resource pooling, heterogeneous resource scheduling, and application framework support mature, the computing power of heterogeneous hardware can be provided in a serverless way. This will give users easier access to serverless computing.

In 2009, the RAD Lab at UC Berkeley published the famous paper "Above the Clouds: A Berkeley View of Cloud Computing," in which UC Berkeley scientists shared their views on the value, challenges, and evolution of cloud computing. Some of their views have proven true in the decade since. Today, no one doubts the value of cloud computing and its profound impact on various industries. In 2019, UC Berkeley published a new paper, "Cloud Programming Simplified: A Berkeley View on Serverless Computing," which predicted that serverless computing would dominate the development of cloud computing over the next decade and that the cloud computing industry would spiral upward. The same logic that drove the creation and development of serverless computing underpins its success. Over the next decade, serverless computing is sure to reshape the way enterprises innovate and will help make the cloud a powerful driving force for social development.

Learn more about serverless computing on Alibaba Cloud at https://www.alibabacloud.com/product/function-compute

The views expressed herein are for reference only and don't necessarily represent the official views of Alibaba Cloud.
