By Liu Yu (Alibaba Cloud Serverless Product Manager)

From cloud computing to cloud-native to Serverless architecture, rapid technological development follows recognizable patterns. Why was Serverless architecture created?

Since ENIAC, the world's first general-purpose computer, computer science and technology have never stopped advancing, and the pace has picked up in recent years. Artificial intelligence keeps producing breakthroughs, 5G is opening up more opportunities for IoT, and cloud computing is becoming part of everyday life.

Three keywords can be seen in the figure. Google published three important papers from 2003 to 2006, on the Google File System (distributed file system), MapReduce (parallel computing), and Bigtable (distributed database). These papers laid the technical foundation for open-source systems such as HDFS and HBase and formally indicated the development direction of cloud computing.

Cloud computing has developed rapidly. Along the way, cloud-native was born and has attracted growing public attention.

Looking at the terms themselves, cloud-native computing simply inserts "native" between "cloud" and "computing". Whether viewed as a technology iteration or a concept upgrade, cloud computing has evolved rapidly into what is familiar today: cloud-native computing.

What is cloud computing? The embryonic concept dates back to 1961. At MIT's centennial ceremony, John McCarthy, who went on to win the Turing Award in 1971, proposed for the first time an idea that was later hailed as the earliest vision of cloud computing: "In the future, computers will become a public resource, which will be used by everyone, like water, electricity, and gas."

The term cloud computing was put forward in 1996. In 2009, UC Berkeley described cloud computing in detail in a published paper, calling it a new term for the long-held dream of computing as a utility, one that is rapidly becoming a commercial reality. The paper also defined cloud computing clearly: it includes both the application services delivered over the Internet and the hardware and software in data centers that provide those services.

Cloud-native technology is developing rapidly today, but what is cloud-native? The article "What is the Real Cloud-native" gives a clear explanation: software, hardware, and architecture born from the cloud are the real cloud-native, and technology born from the cloud is cloud-native technology. In short, whatever is born on the cloud and grows up on the cloud is cloud-native.

What does cloud-native include? Once the word cloud-native is added, familiar technologies become cloud-native technologies: the database becomes a cloud-native database, and the network becomes a cloud-native network. The CNCF (Cloud Native Computing Foundation) Landscape describes the cloud-native product dimensions, including databases, streaming, messaging, container images, service mesh, gateways, Kubernetes, and another popular term, Serverless.

In many cases, Serverless architecture is regarded as a kind of glue. It links many other products of cloud-native with the user's business while providing attractive technical dividends. It is selected by many projects and businesses for that reason. What is the Serverless Architecture?

The name itself is a clue: "server" carries its literal meaning, and "less" means spending less energy on it. The idea conveyed by the Serverless architecture is therefore to leave specialized work to specialists, so that developers can pay less attention to underlying concerns (such as servers) and put their energy into more valuable business logic.

UC Berkeley's 2009 paper on cloud computing not only defined the term clearly but also identified ten obstacles and challenges facing cloud computing, including service availability, data security, and auditability.

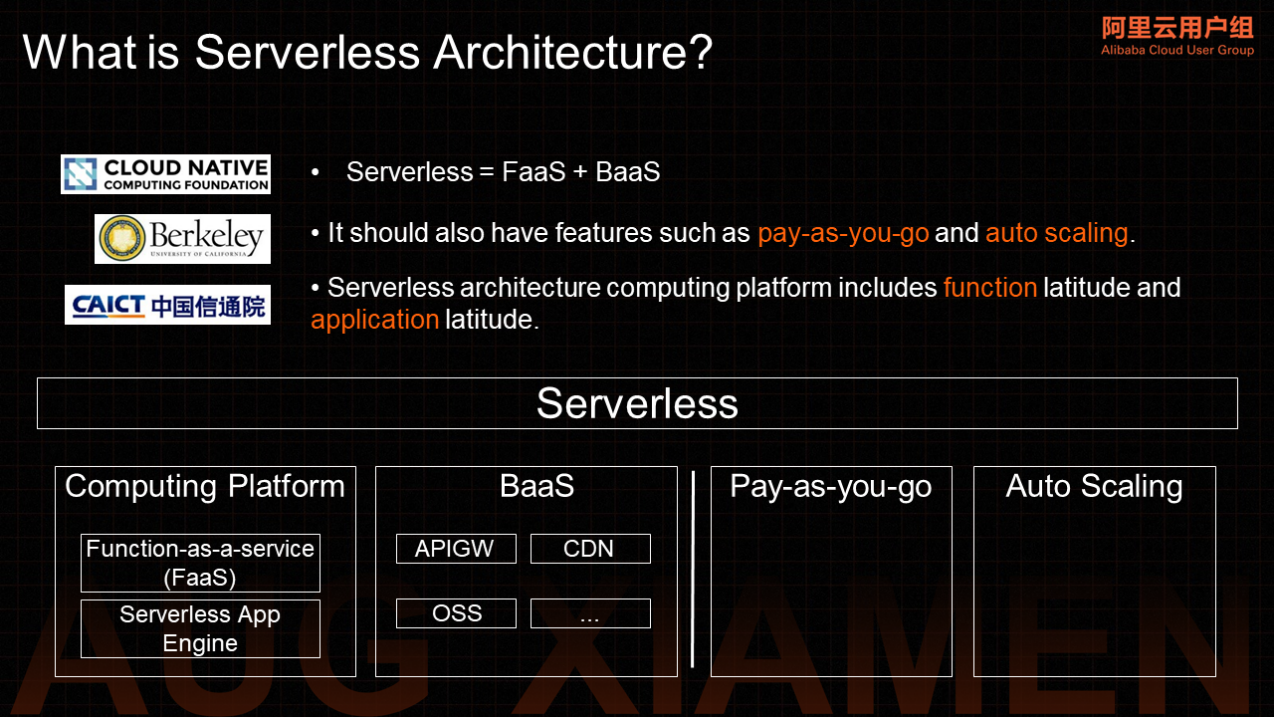

In 2019, ten years later, UC Berkeley published another paper that explained the Serverless architecture from multiple angles. From a structural point of view, it affirmed that Serverless is a combination of FaaS and BaaS. From the perspective of characteristics, it held that products or services considered Serverless need to offer pay-as-you-go billing and auto scaling. It also predicted that Serverless will become the default computing paradigm of the cloud era and largely replace serverful computing, bringing the client-server era to a close.

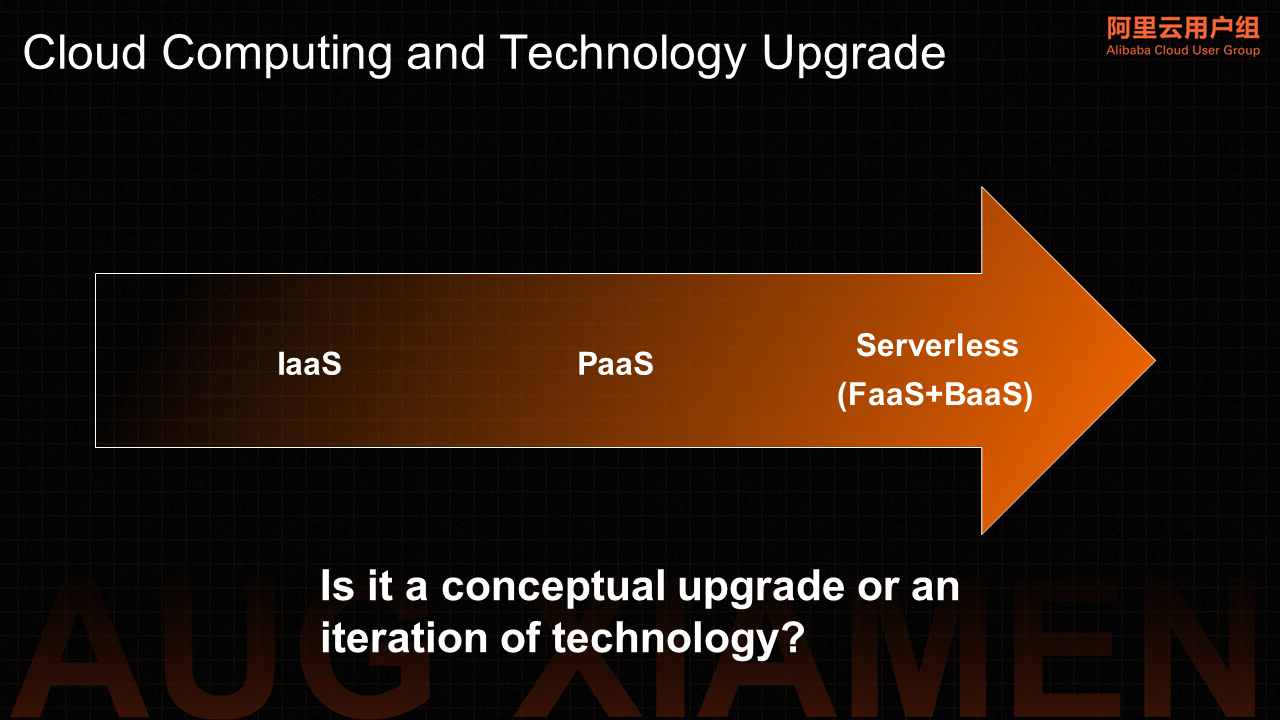

From IaaS to PaaS to Serverless, the direction of cloud computing is becoming clear, and the trend toward removing servers from the developer's view is becoming obvious.

Whether the topic is cloud-native or Serverless architecture, the concept of the cloud is being upgraded and cloud technology is iterating. All these changes aim at better performance, stronger security, lower cost, and higher productivity.

Although there is no clear definition of Serverless architecture, the statement that Serverless is a combination of FaaS and BaaS is accepted by many people.

FaaS stands for Function as a Service, and BaaS stands for Backend as a Service. Together, they make up the Serverless architecture and provide developers with technical dividends that reduce costs and improve efficiency.

CNCF affirmed in its Serverless white paper that Serverless is a combination of FaaS and BaaS. UC Berkeley's paper affirmed this statement and also pointed out, from the perspective of characteristics, that products or services considered Serverless need to offer pay-as-you-go billing and auto scaling. However, that description dates from 2019.

Since then, the Serverless architecture has continued to renew and iterate itself. The Serverless white paper released by the CAICT points out that Serverless computing platforms cover both a function dimension and an application dimension.

Alibaba Cloud later launched Serverless App Engine (SAE), a platform for Serverless applications that can be regarded as a best practice for Serverless applications.

SAE is a fully managed, O&M-free, highly flexible, and general-purpose PaaS platform.

At this point, the composition of the Serverless architecture is clear.

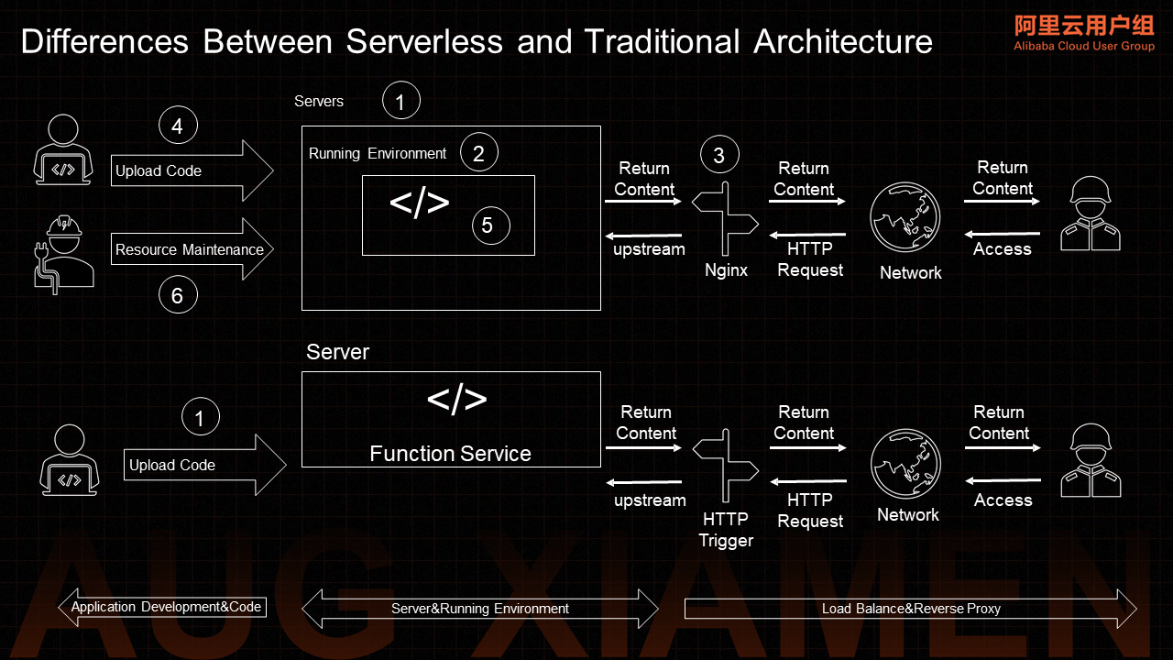

As a new computing paradigm in the cloud era, the Serverless architecture is distributed by nature. Its working principle differs somewhat from that of traditional architectures.

In the traditional architecture, developers need to purchase virtual machine services, initialize the running environment, and install the required software (such as database software and server software). After preparing the environment, they need to upload the developed business code and start the application. Then, users can access the target application through network requests.

However, if the number of requests becomes too large or too small, developers or O&M personnel need to scale the relevant resources to match the actual request volume and add corresponding policies to the load balancer and reverse proxy so that the scaling takes effect promptly. While doing so, they must ensure that online users are not affected.

Under the Serverless architecture, the entire application release process and the working principle will change to some extent.

Once developers have finished the business code, they only need to deploy or update it on the corresponding FaaS platform. They can then configure triggers based on business requirements. For example, they can configure an HTTP trigger to provide a web application service. Users can then access the published application over the network.
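As a rough illustration of the "business code" deployed in this flow, the sketch below shows the kind of function an HTTP trigger might invoke. The `handler(event, context)` signature, the JSON event shape, and field names such as `queryParameters` are assumptions made for illustration, not the exact contract of any particular FaaS platform.

```python
import json


def handler(event, context=None):
    """Hypothetical entry point that a FaaS platform calls once per request.

    `event` is assumed to be a JSON string describing the HTTP request;
    real platforms define their own event formats.
    """
    request = json.loads(event)
    name = request.get("queryParameters", {}).get("name", "world")

    # Only business logic lives here: no server setup and no scaling code.
    body = {"message": f"Hello, {name}!"}
    return json.dumps({"statusCode": 200, "body": body})


if __name__ == "__main__":
    # Local simulation of a single invocation caused by an HTTP request.
    fake_event = json.dumps({"queryParameters": {"name": "Serverless"}})
    print(handler(fake_event))
```

Nothing in the function refers to hosts, ports, or process management; in this model those concerns are assumed to belong to the platform.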

In this process, developers do not need to pay attention to purchasing, operating, or maintaining servers, nor do they need to spend extra effort installing software or scaling application resources. They only need to focus on their business logic. The server software that has to be installed under the traditional architecture becomes configuration items managed by cloud vendors. Similarly, scaling resources according to server utilization, which is a manual task under the traditional architecture, is handed over to cloud vendors and performed automatically.

Auto scaling in the traditional sense addresses the gap between a project's capacity planning and the actual load on its cluster. When the existing cluster's resources cannot bear the load, the cluster is resized or more resources are allocated to keep the business stable. When the cluster load is low, the system reduces the cluster's resource allocation as far as possible to cut idle waste and save costs.

Under the Serverless architecture, auto scaling is generalized further. From the user's perspective, the project's own capacity planning disappears, and the platform's scheduling decides when resources are added or removed.

In UC Berkeley's article, there is a description of the features and advantages of Serverless architecture. "The execution of code no longer requires manual allocation of resources. You do not need to specify the required resources (such as how many machines, how much bandwidth, and how much disk) to run the service. You only need to provide the code, and the rest is handled by the Serverless platform. In the current stage, the implementation platform also requires users to provide some policies, such as the specifications and maximum parallelism of a single instance and the maximum CPU utilization of a single instance. The ideal case is for fully automatic adaptive allocation by some learning algorithm."

The fully automatic adaptive allocation described by the author refers to the auto scaling characteristics of the Serverless architecture.

Auto scaling under the Serverless architecture means the Serverless architecture can automatically allocate and destroy resources according to business traffic fluctuations. It can balance stability and high performance and improve resource utilization to the greatest extent.

When the developer finishes the business logic and deploys the business code to the Serverless platform, the platform does not allocate computing resources immediately. Instead, it persists the business code, configuration, and other related content. When requests arrive, the platform automatically starts instances according to the actual traffic and configuration. When traffic falls, it reduces the number of instances. The number of instances can even drop to 0, which means the platform allocates no resources to the corresponding function.
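The scale-to-zero behavior described above can be sketched as a simple sizing rule. This is a minimal illustration rather than how any real scheduler works: the function name, the per-instance concurrency of 10, and the instance cap of 100 are all hypothetical values chosen for the example.

```python
import math


def desired_instances(concurrent_requests, per_instance_concurrency=10, max_instances=100):
    """Return how many instances a platform might run for the current load.

    All parameters are illustrative assumptions, not real platform defaults.
    """
    if concurrent_requests <= 0:
        return 0  # no traffic: scale to zero, nothing is allocated
    needed = math.ceil(concurrent_requests / per_instance_concurrency)
    return min(needed, max_instances)


if __name__ == "__main__":
    # Hypothetical concurrent-request counts sampled over time.
    traffic = [0, 3, 25, 120, 40, 0]
    for load in traffic:
        print(f"{load:>4} concurrent requests -> {desired_instances(load)} instances")
```

The instance count follows traffic in both directions, and the developer never states a capacity plan; only the optional limits resemble the policies a platform might still ask users to provide.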

Auto scaling, the core technical dividend of the Serverless architecture, improves resource utilization and, to a certain extent, represents a move toward green computing.

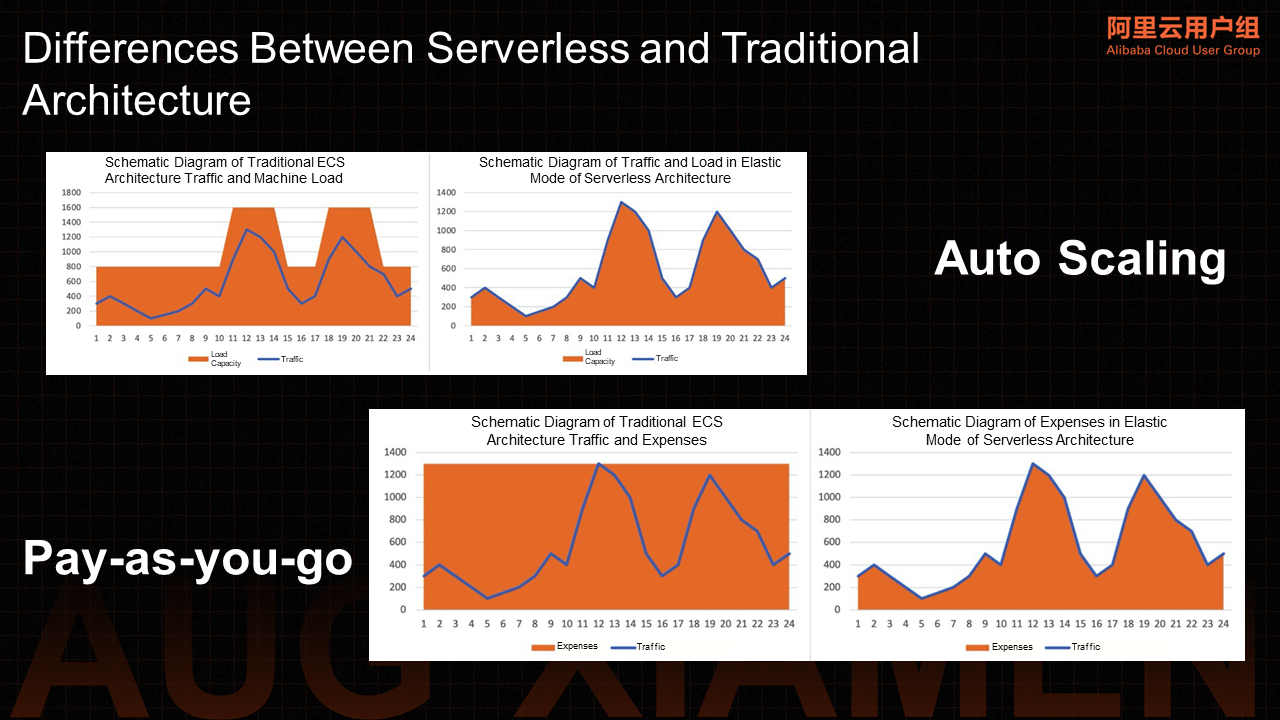

In the auto scaling part of the figure above, the left side is the schematic diagram of traffic and machine load under the traditional ECS architecture. The right side is the schematic diagram of traffic and load under the elastic mode of Serverless architecture. The orange area represents the resource load capacity perceived by the user side, and the blue polyline represents the traffic trend of a website on a certain day.

Comparing the two charts shows that under the traditional ECS architecture, resources have to be added and removed manually. Changes are made at the granularity of whole hosts, so responding in real time is hard, and this granularity is too coarse to balance resource waste against stable performance effectively.

The orange area above the blue line is wasted resources. In the Serverless diagram on the right, load capacity matches traffic, so there is no need to handle traffic peaks and troughs with manual technical support, as the traditional ECS architecture on the left requires. All of these elastic capabilities (including scaling) are provided by cloud vendors.

This mode has two benefits. First, it reduces the pressure on business O&M personnel and the complexity of their work. Second, from the user's perspective, actual resource consumption is positively correlated with the resources required, which reduces waste and conforms, to a certain extent, to the idea of green computing.

Pay-as-you-go billing means paying after use, according to what was used. Users do not need to purchase a large amount of resources in advance and instead pay for the resources they consume. Products and services that are not Serverless can also offer some pay-as-you-go capability; ECS, for example, has this option. The Serverless architecture can nevertheless claim pay-as-you-go as a technical dividend, largely because its billing granularity is finer and resource utilization as perceived by the user is close to 100%. (Actual resource utilization does not reach 100%; the figure refers only to what the user perceives under the Serverless architecture, at the granularity of individual requests.)

Take a website as an example. Resource utilization is relatively high during the day and relatively low at night. However, once resources such as servers are purchased, the cost keeps accruing no matter how much traffic there is at any given time. Even if a pay-as-you-go model is adopted for such servers, the billing granularity is too coarse to raise resource utilization much.
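The effect of billing granularity can be made concrete with a small calculation. The sketch below assumes that usage is rounded up to the next whole billing unit; the three granularities (one hour, one second, 100 ms) and the 250 ms workload are illustrative values, not any vendor's actual billing rules.

```python
def billed_ms(used_ms, granularity_ms):
    """Round actual usage up to the next whole billing unit (integer ceiling)."""
    units = -(-used_ms // granularity_ms)
    return units * granularity_ms


def utilization(used_ms, granularity_ms):
    """Fraction of the billed time that was actually used."""
    billed = billed_ms(used_ms, granularity_ms)
    return used_ms / billed if billed else 0.0


if __name__ == "__main__":
    used = 250  # hypothetical: one request keeps an instance busy for 250 ms
    for label, granularity in [("1 hour", 3_600_000), ("1 second", 1_000), ("100 ms", 100)]:
        print(f"billed per {label:>8}: pay for {billed_ms(used, granularity)} ms, "
              f"utilization {utilization(used, granularity):.4%}")
```

The finer the billing unit, the closer the billed time is to the time actually used, which is why user-perceived utilization approaches 100% only at fine granularity.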

According to Forbes, typical servers in commercial and enterprise data centers deliver on average only 5% to 15% of their maximum processing capacity, which suggests that the resource utilization of traditional servers is too low and causes too much waste.

The Serverless architecture lets users entrust servers, databases, applications, and even logic to service providers. On the one hand, this spares users the trouble of maintenance. On the other hand, users pay at the granularity of the functions they actually use, and service providers can put otherwise idle resources to additional use, which is good for both cost and green computing. In other words, the pay-as-you-go model of the Serverless architecture also charges by resource usage, but at a finer billing granularity.

The pay-as-you-go part of the figure shows the website's traffic on a certain day, with the blue line marking the traffic trend. The left side is the traffic and expense diagram under the traditional ECS architecture, and the right side is the expense diagram under the elastic mode of the Serverless architecture. Under the traditional architecture, resource usage usually has to be estimated before a service goes online. Suppose that after this estimate, a server able to withstand at most 1,300 PV per hour is purchased. Over a whole day, the total computing power this server provides is the orange area, and the cost is the cost of the computing power in that orange area.

However, the resources actually used, and the spending that is actually effective, correspond only to the area below the traffic curve. The orange area above the curve is wasted resources and extra expenditure. In the expense diagram for the elastic mode of the Serverless architecture on the right, cost is proportional to traffic: when traffic is low, resource usage is low, and so is the cost.

When traffic is high, the auto scaling and pay-as-you-go capabilities of the Serverless architecture let resource usage and cost rise in step with it. Across the whole day, the traditional ECS architecture on the left shows obvious resource waste and extra cost.
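The comparison of the two diagrams can be approximated numerically. In the sketch below, the 1,300 PV per hour capacity comes from the example above, while the hourly traffic values are invented for illustration and do not come from the figure. Capacity is measured in page views rather than money, so no prices are assumed.

```python
PEAK_CAPACITY_PV_PER_HOUR = 1300  # capacity figure taken from the example above

# Hypothetical page views for each of the 24 hours in a day (not real data).
HOURLY_TRAFFIC = [
    100, 80, 60, 50, 50, 80, 200, 400, 700, 900, 1100, 1300,
    1200, 1000, 900, 800, 900, 1000, 1100, 900, 600, 400, 200, 150,
]


def provisioned_capacity(peak_per_hour, hours):
    """Total capacity paid for when a fixed-size server runs all day."""
    return peak_per_hour * hours


def used_capacity(traffic):
    """Capacity actually consumed; under per-use billing this is what is paid for."""
    return sum(traffic)


def utilization(traffic, peak_per_hour):
    """Share of the provisioned capacity that served real traffic."""
    return used_capacity(traffic) / provisioned_capacity(peak_per_hour, len(traffic))


if __name__ == "__main__":
    total = provisioned_capacity(PEAK_CAPACITY_PV_PER_HOUR, len(HOURLY_TRAFFIC))
    used = used_capacity(HOURLY_TRAFFIC)
    print(f"fixed server pays for {total} PV of capacity")
    print(f"traffic actually uses {used} PV")
    print(f"utilization: {utilization(HOURLY_TRAFFIC, PEAK_CAPACITY_PV_PER_HOUR):.1%}")
```

With a fixed server, the gap between provisioned and used capacity corresponds to the orange area above the traffic curve; under per-use billing, only the used portion is paid for.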

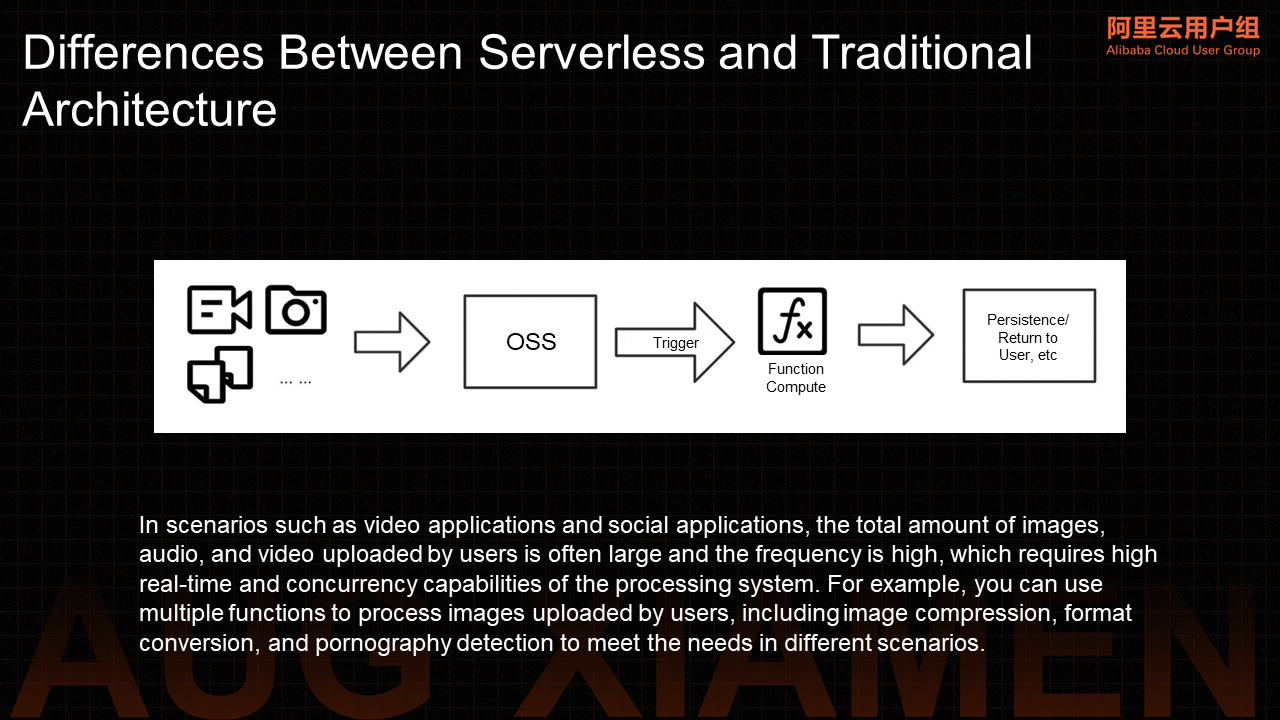

In video and social application scenarios, users often upload large volumes of images, audio, and video at high frequency, which demands strong real-time and concurrent processing capabilities. For example, multiple functions can process the images that users upload, handling image compression, format conversion, and pornography detection to meet the needs of different scenarios:

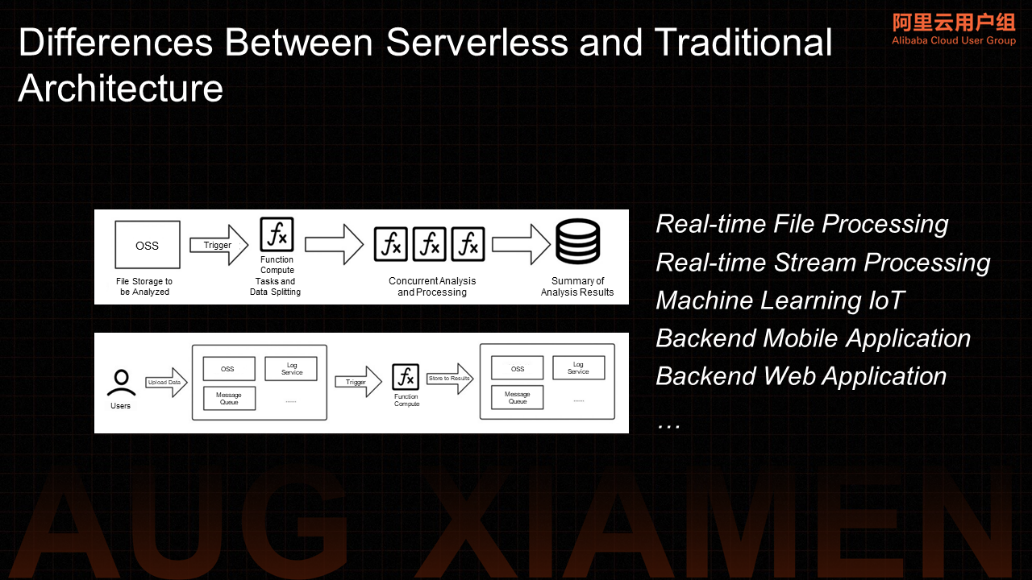
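One way to picture the multi-function approach is as an upload event fanned out to several independent functions. The sketch below is purely illustrative: the function names, the event shape, and the placeholder logic are all hypothetical, and a real system would call actual image and content-moderation services instead of returning flags.

```python
def compress(image):
    """Placeholder for image compression; only records that the step ran."""
    return {**image, "compressed": True}


def convert_format(image, target="webp"):
    """Placeholder for format conversion to a hypothetical target format."""
    return {**image, "format": target}


def moderate(image):
    """Placeholder content check; a real system would call a moderation service."""
    return {**image, "approved": True}


# Each entry stands for a separately deployed function subscribed to upload events.
SUBSCRIBERS = [compress, convert_format, moderate]


def on_upload(event):
    """Simulate the platform invoking every subscribed function for one upload."""
    key = event["key"]
    image = {"key": key, "format": key.rsplit(".", 1)[-1]}
    return {fn.__name__: fn(image) for fn in SUBSCRIBERS}


if __name__ == "__main__":
    for name, result in on_upload({"key": "photos/cat.png"}).items():
        print(name, result)
```

Because each function receives its own copy of the event data, the steps are independent and could scale separately with upload volume.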

Serverless can also be used in real-time file processing, real-time stream processing, machine learning, IoT backend, mobile application backend, web application, and other scenarios.

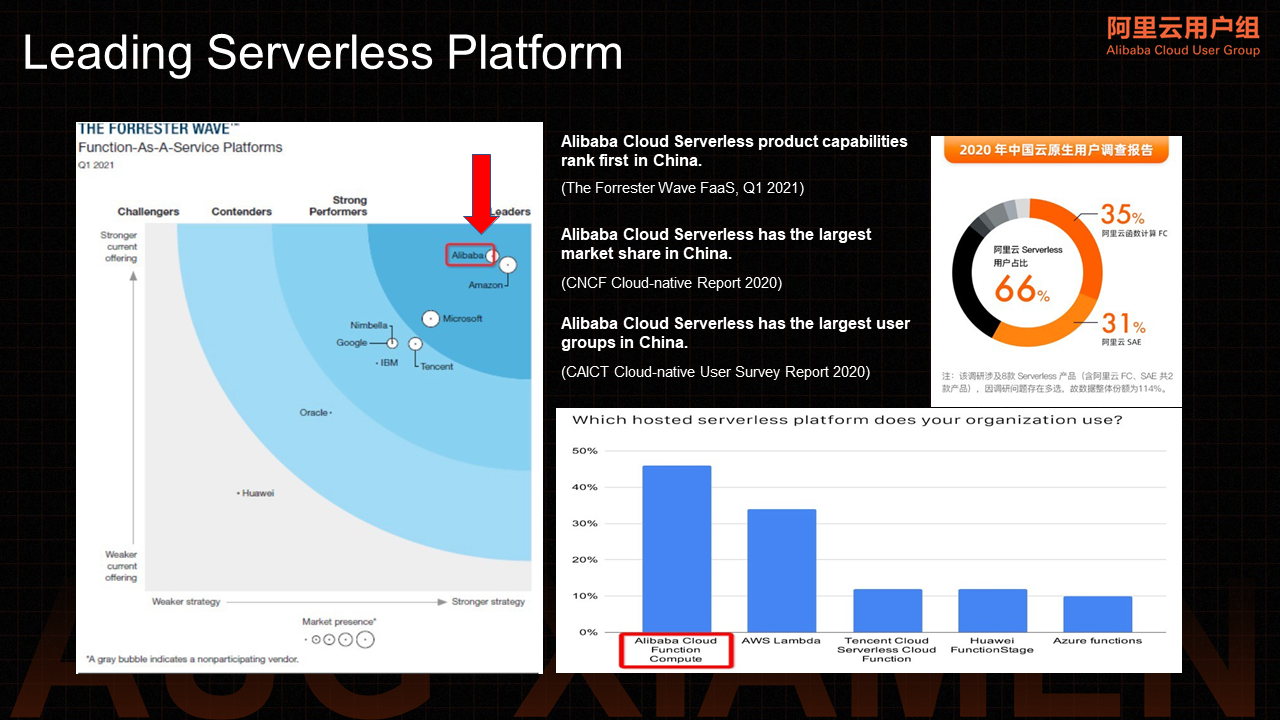

Alibaba Cloud is one of the earliest vendors in China to provide Serverless services, and it has achieved excellent results over the past few years. In Forrester's evaluation for Q1 2021, Alibaba Cloud's Serverless product capability ranked first in China. In CNCF's 2020 cloud-native research report, Alibaba Cloud had the largest Serverless market share in China. In the CAICT's 2020 China Cloud-Native User Research Report, Alibaba Cloud ranked first in China by share of Serverless users.

The fundamental reason Alibaba Cloud Serverless products and services are recognized by so many developers is that the Alibaba Cloud Serverless architecture is secure, technically stable and leading, and user-centered.

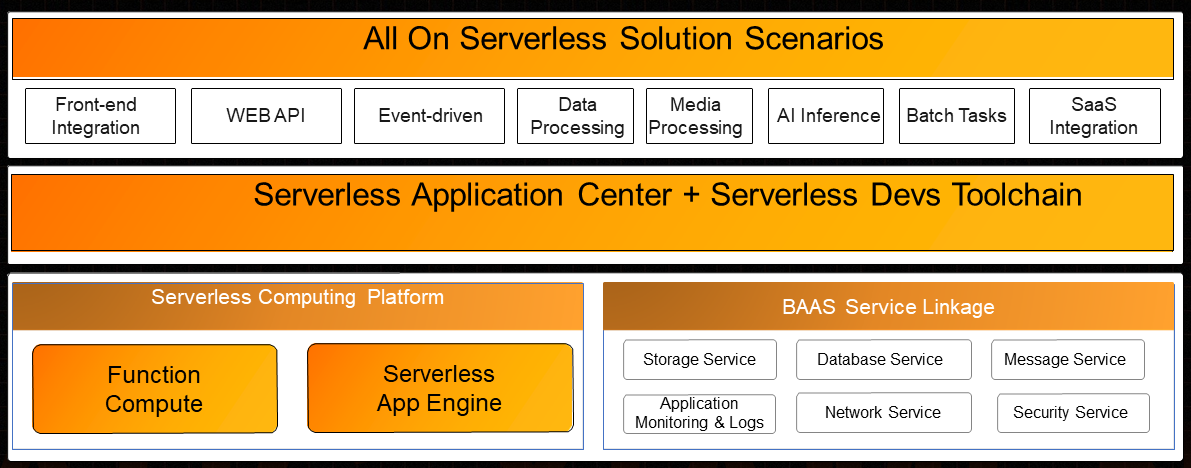

The bottom of the figure above shows the computing platform and BaaS product layers. The computing product section contains the event-driven Function Compute and SAE, a best practice for Serverless applications.

The BaaS section contains services at different levels, such as databases, networks, and messaging. These products are steadily becoming Serverless as they move from cloud to cloud-native to Serverless. The upper layer contains developer tools and application centers, which provide developers with a series of All-on-Serverless solutions and scenarios (such as frontend integration, web APIs, database processing, and AI inference).

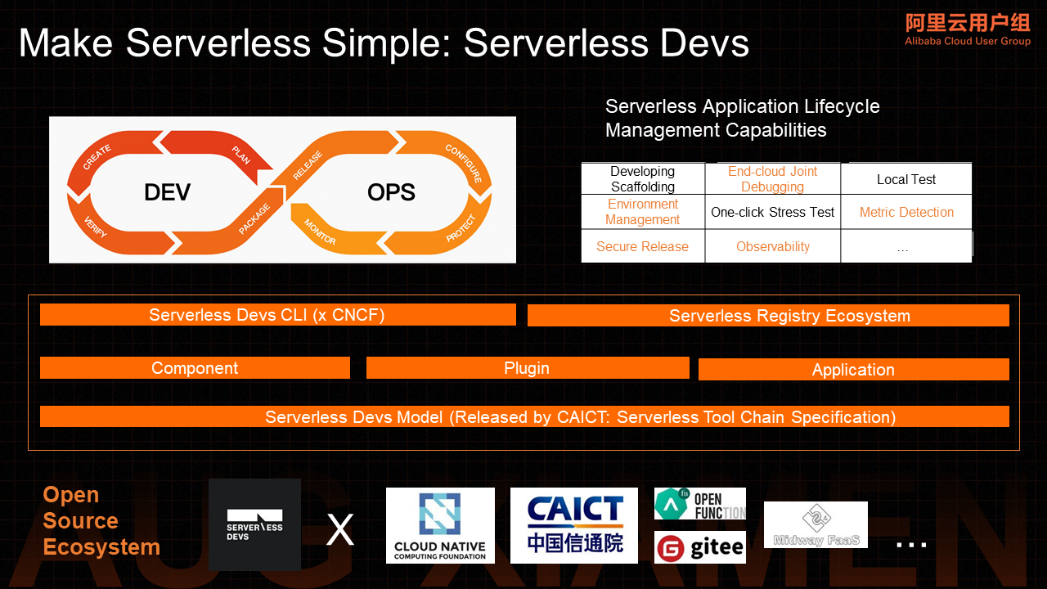

At the ecosystem level, the Alibaba Cloud Serverless Team has open-sourced Serverless Devs, a tool with no vendor lock-in. It aims to make Serverless simple and to play a role throughout the entire lifecycle of Serverless applications. At the specification level, Serverless Devs worked with the CAICT to release a Serverless toolchain model. At the tool level, the project was donated to the CNCF Sandbox, becoming the first Sandbox project among CNCF Serverless tools.

Thank you for your attention to the Serverless architecture of Alibaba Cloud.
