In this article, we're going to hear from Alibaba Cloud engineers on why they wanted to partner with Microsoft's teams to create the Open Application Model (OAM), which is a new industry-wide, open standard for developing and operating applications on Kubernetes and other platforms.

For some background, you can check out the original announcement.

Alibaba co-announced the Open Application Model (OAM) with Microsoft on October 17th. OAM is a specification for describing an application as well as its operational capabilities, so that the application definition is separated from the details of how the application is deployed and managed.

In OAM, an Application is made from three core concepts. The first is the Components that make up an application, which might comprise a collection of microservices, a database and a cloud load balancer.

The second concept is a collection of Traits, which describe the operational characteristics of the application, such as auto-scaling and ingress. These capabilities are important to the operation of applications but may be implemented in different ways in different environments.

Finally, to transform these descriptions into a concrete application, operators use a configuration file to assemble components with corresponding traits to form a specific instance of an application that should be deployed.
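As a rough mental model, the three concepts can be pictured as plain data structures. The sketch below is only an illustration: the class and field names are assumptions made for this example, not the OAM schema.

```python
from dataclasses import dataclass, field


@dataclass
class Component:
    """What to run: written by the developer."""
    name: str
    workload_type: str


@dataclass
class TraitBinding:
    """One operational capability attached to a component, with its settings."""
    name: str
    properties: dict = field(default_factory=dict)


@dataclass
class ComponentInstance:
    """A component plus the traits an operator chose for it."""
    component: Component
    instance_name: str
    traits: list = field(default_factory=list)


@dataclass
class ApplicationConfiguration:
    """The operator's assembly of components and traits into one instance."""
    name: str
    instances: list = field(default_factory=list)

    def summary(self):
        # One line per instance: "<instance> (<workload type>): trait, trait"
        return [
            f"{i.instance_name} ({i.component.workload_type}): "
            + ", ".join(t.name for t in i.traits)
            for i in self.instances
        ]


web = Component(name="nginx", workload_type="Server")
app = ApplicationConfiguration(
    name="my-awesome-app",
    instances=[
        ComponentInstance(
            component=web,
            instance_name="web-front-end",
            traits=[TraitBinding("auto-scaler", {"minimum": 3, "maximum": 10})],
        )
    ],
)
print(app.summary())
```

The point of the split is ownership: the developer only ever writes `Component`, while the operator owns the trait bindings and the final assembly.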

We are putting our experience of running both internal clusters and public cloud offerings into OAM, specifically our experience of moving from an in-house application CRD to a standard application model. As engineers, we thrive on innovation-based learning from past failures and mistakes.

In this article, we share our motivations and the driving force behind this project, in the hope of helping the wider community better understand the OAM.

We are "infra operators" at Alibaba. Specifically, we are responsible for developing, installing, and maintaining various platform capabilities. Our work includes, but is not limited to, operating K8s clusters, implementing controllers/operators, and developing K8s plugins. Internally, we are more often called "platform builders." However, to differentiate us from the PaaS engineers working on top of our K8s clusters, we are referred to as "infra operators" in this article. We've had many past successes with Kubernetes, and we've learned a lot from the issues we encountered when using it.

We operate arguably the world's largest and most complicated Kubernetes clusters for Alibaba e-commerce business; these clusters:

At the same time, we support the Alibaba Cloud Kubernetes service, which is similar to other public cloud Kubernetes offerings for external customers, where the number of clusters is huge (~10,000) but the size of each cluster is typically small or moderate. Our customers, both internal and external, have very diverse requirements and use cases in terms of workload management.

Similar to the application management stack in other Internet companies, the stack at Alibaba is done cooperatively by infra operators, application operators, and application developers. Application developers' and application operators' roles can be summarized as follows:

Application Developers - Deliver business value in the form of code. Most are not aware of infrastructure or K8s, and they interact with the PaaS and CI pipelines to manage their applications. The productivity of developers is highly valuable.

Application Operators - Serve developers with expertise in the capacity, stability, and performance of the clusters, so as to help developers configure, deploy, and operate applications at scale (e.g. updating, scaling, recovery). Note that although application operators understand the APIs and capabilities of K8s, they do not work on K8s directly. In most cases, they leverage the PaaS system to serve developers with underlying K8s capabilities. In this case, many application operators are in fact PaaS engineers as well.

In short, infra operators, like us, serve application operators, who in turn serve developers.

From the description above, it's obvious that the three parties bring different expertise, but need to work in harmony to make sure everything works well. That can be difficult to achieve!

We'll go through the pain points of the various players in the following sections, but in a nutshell, the fundamental issue we found is the lack of a structured way to build efficient and accurate interactions among the different parties. This leads to inefficient application management processes or even operational failures.

A standard application model is our approach to solving this problem.

Kubernetes is highly extensible, and this enables infra operators to build extended operational capabilities. Despite this great flexibility, some issues come up for the users of these capabilities - application operators.

One example of such an issue is that at Alibaba, we developed the CronHPA CRD to scale applications based on CRON expressions. It's useful when an application's scaling policy differs between day and night. CronHPA is an optional capability, deployed only on demand in some of our clusters.

A sample CronHPA specification yaml looks like this:

    apiVersion: "app.alibaba.com/v1"
    kind: CronHPA
    metadata:
      name: cron-scaler
    spec:
      timezone: America/Los_Angeles
      schedule:
      - cron: '0 0 6 * * ?'
        minReplicas: 20
        maxReplicas: 25
      - cron: '0 0 19 * * ?'
        minReplicas: 1
        maxReplicas: 9
      template:
        spec:
          scaleTargetRef:
            apiVersion: apps/v1
            name: php-apache
          metrics:
          - type: Resource
            resource:
              name: cpu
              target:
                type: Utilization
                averageUtilization: 50

This is a typical Kubernetes Custom Resource and should be straightforward to use.
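To make the day/night behavior concrete, here is a minimal sketch of how a controller might pick the active replica bounds from such a schedule. It is not the CronHPA implementation: it assumes six-field CRON expressions (second, minute, hour, day, month, weekday) that fire once a day with plain integer minute and hour fields, as in the sample, and the function name `active_rule` is made up for this example.

```python
def active_rule(schedule, hour, minute=0):
    """Return the rule whose daily trigger time most recently passed.

    Assumption: each rule fires once per day, and the minute and hour
    fields of its CRON expression are plain integers.
    """
    def trigger_minutes(rule):
        fields = rule["cron"].split()
        # fields: second, minute, hour, day-of-month, month, day-of-week
        return int(fields[2]) * 60 + int(fields[1])

    now = hour * 60 + minute
    ordered = sorted(schedule, key=trigger_minutes)
    passed = [rule for rule in ordered if trigger_minutes(rule) <= now]
    # Before the first trigger of the day, yesterday's last rule still applies.
    return passed[-1] if passed else ordered[-1]


schedule = [
    {"cron": "0 0 6 * * ?", "minReplicas": 20, "maxReplicas": 25},
    {"cron": "0 0 19 * * ?", "minReplicas": 1, "maxReplicas": 9},
]

print(active_rule(schedule, 12))  # the 06:00 rule (20 to 25 replicas)
print(active_rule(schedule, 23))  # the 19:00 rule (1 to 9 replicas)
```

A real controller would also have to honor the `timezone` field and the full CRON grammar; both are left out here to keep the idea visible.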

However, application operators quickly reported several problems when they used customized plugins like CronHPA:

1. Discovering the specification of new capability is difficult.

Application operators often complained that the specification of a capability can be anywhere. It is sometimes in its CRD, sometimes in a ConfigMap, and sometimes in a configuration file in a random place. They are also confused: why is not every extension in K8s described by a CRD (e.g., CNI and CSI plugins are not), so that it could be learned and used easily?

2. Confirming the existence of specific capability in a particular cluster is difficult.

Application operators are unsure if an operational capability is ready in a given cluster, especially when this capability is provided by a newly developed plugin. Multiple rounds of communication between infra operators and application operators are needed to bring clarity to the concerns.

Besides the discoverability problems above, there is an additional challenge with regard to manageability.

3. Conflicts in capabilities could be troublesome.

Usually there are many extended capabilities in a K8s cluster. The relationships between those capabilities could be summarized into the following three categories:

Orthogonal and composable capabilities are less troublesome. However, conflicting capabilities can lead to unexpected/unpredictable behaviors.

The problem is that it is difficult for application operators to be warned of conflicts beforehand. Hence, they may apply conflicting capabilities to the same application. When a conflict actually happens, resolving it comes with a cost, and in extreme cases, conflicts can result in catastrophic application failures. Naturally, application operators don't want to feel as if the Sword of Damocles is hanging over their heads when managing platform capabilities, so they want a better methodology to avoid conflict scenarios beforehand.

How can application operators discover and manage capabilities that could potentially be in conflict with each other? In other words, as infra operators, can we build discoverable and manageable capabilities for application operators?

In OAM, "Traits" are how we create capabilities with discoverability and manageability.

These platform capabilities are essentially operational characteristics of the application, and this is where the name "Trait" in OAM comes from.

In our K8s cluster, most traits are defined by infra operators and implemented using customized controllers in Kubernetes or external services, for example:

Note that traits are not equivalent to K8s plugins; one cluster could have multiple networking related traits like "dynamic QoS trait", "bandwidth control trait" and "traffic mirror trait" which are provided by one CNI plugin.

In practice, traits are installed in the K8s cluster and used by application operators. When capabilities are presented as traits, an application operator can discover the supported capabilities by a simple kubectl get command:

    $ kubectl get traits
    NAME          AGE
    cron-scaler   19m
    auto-scaler   19m

The above example shows that this cluster supports two kinds of "scaler" capabilities. One could deploy an application that requires a CRON-based scaling policy to this cluster.
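The same check can be automated, for example in a deployment script that wants to confirm a capability exists before using it. The helper below is a hypothetical sketch that only parses the tabular text shown above; it makes no assumption about the cluster beyond that output format.

```python
def installed_traits(kubectl_output):
    """Extract trait names from the tabular output of `kubectl get traits`."""
    lines = [line for line in kubectl_output.splitlines() if line.strip()]
    # The first non-empty line is the header (NAME AGE); the name is column one.
    return [line.split()[0] for line in lines[1:]]


output = """NAME          AGE
cron-scaler   19m
auto-scaler   19m
"""

names = installed_traits(output)
print("cron-scaler" in names)
```

In practice, a machine-readable format such as `kubectl get traits -o name` or `-o json` is a sturdier input than the human-oriented table.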

Each trait carries a description that makes it easy for an application operator to understand a particular capability accurately, with a simple kubectl describe command, without digging into its CRD or documentation. The description of a capability includes "what kind of workload this trait applies to," "how to use it," etc.

For example, kubectl describe trait cron-scaler:

    apiVersion: core.oam.dev/v1alpha1
    kind: Trait
    metadata:
      name: cron-scaler
    spec:
      appliesTo:
        - core.oam.dev/v1alpha1.Server
      properties: |
        {
          "$schema":"http://json-schema.org/draft-07/schema#",
          "type":"object",
          "properties":{
            "timezone":{
              "description":"Timezone for the CRON expressions of this scaler.",
              "type":"string"
            },
            "schedule":{
              "type":"array",
              "items":[
                {
                  "type":"object",
                  "properties":{
                    "cron":{
                      "description":"CRON expression for this scaling rule.",
                      "type":"string"
                    },
                    "minReplicas":{
                      "description":"Lower limit for the number of replicas.",
                      "type":"integer",
                      "default":1
                    },
                    "maxReplicas":{
                      "description":"Upper limit for the number of replicas.",
                      "type":"integer"
                    }
                  }
                }
              ]
            },
            "template":{
              ...
            }
          }
        }

Note that in OAM, the properties of a trait spec can be expressed as JSON Schema.
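Because the properties are described by a schema, a platform can check an operator's input before acting on it. The sketch below is a deliberately tiny validator written for this article's example: it understands only the "type", "properties", and "items" keywords, and it is not a substitute for a full JSON Schema library.

```python
TYPE_CHECKS = {
    "object": lambda v: isinstance(v, dict),
    "array": lambda v: isinstance(v, list),
    "string": lambda v: isinstance(v, str),
    # bool is a subclass of int in Python, so exclude it explicitly.
    "integer": lambda v: isinstance(v, int) and not isinstance(v, bool),
}


def validate(value, schema, path="$"):
    """Return a list of error strings; an empty list means the value passes."""
    check = TYPE_CHECKS.get(schema.get("type"))
    if check and not check(value):
        return [f"{path}: expected {schema['type']}"]
    errors = []
    if isinstance(value, dict):
        for key, sub in schema.get("properties", {}).items():
            if key in value:
                errors += validate(value[key], sub, f"{path}.{key}")
    if isinstance(value, list):
        items = schema.get("items", {})
        for i, element in enumerate(value):
            # In draft-07, a list-valued "items" validates elements by position.
            if isinstance(items, list):
                sub = items[i] if i < len(items) else {}
            else:
                sub = items
            errors += validate(element, sub, f"{path}[{i}]")
    return errors


# The cron-scaler schema from above, without descriptions and "template".
schema = {
    "type": "object",
    "properties": {
        "timezone": {"type": "string"},
        "schedule": {
            "type": "array",
            "items": [{
                "type": "object",
                "properties": {
                    "cron": {"type": "string"},
                    "minReplicas": {"type": "integer"},
                    "maxReplicas": {"type": "integer"},
                },
            }],
        },
    },
}

good = {"timezone": "America/Los_Angeles",
        "schedule": [{"cron": "0 0 6 * * ?", "minReplicas": 20, "maxReplicas": 25}]}
bad = {"timezone": "America/Los_Angeles",
       "schedule": [{"cron": "0 0 6 * * ?", "minReplicas": "20"}]}

print(validate(good, schema))  # []
print(validate(bad, schema))   # ['$.schedule[0].minReplicas: expected integer']
```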

The Trait spec is decoupled from its implementation by design. This is helpful considering there could be dozens of implementations for a specific capability in K8s. A Trait provides a unified description to help application operators understand and use the capability accurately.

An application operator applies one or more installed traits to an application by using the ApplicationConfiguration (described in detail in the next section). The ApplicationConfiguration controller handles trait conflicts, if any.

Take this sample ApplicationConfiguration as an example:

    apiVersion: core.oam.dev/v1alpha1
    kind: ApplicationConfiguration
    metadata:
      name: failed-example
    spec:
      components:
        - name: nginx-replicated-v1
          instanceName: example-app
          traits:
            - name: auto-scaler
              properties:
                minimum: 1
                maximum: 9
            - name: cron-scaler
              properties:
                timezone: "America/Los_Angeles"
                schedule: "0 0 6 * * ?"
                cpu: 50
                ...

In OAM, the ApplicationConfiguration controller is required to determine trait compatibility and fail the operation if the combination cannot be satisfied. Upon submitting the above YAML to Kubernetes, the controller will report a failure due to "conflicts between traits." Application operators are thus notified of conflicts beforehand, rather than being surprised by conflicting traits afterward.
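The "fail fast" behavior can be illustrated with a few lines of code. This is a sketch under stated assumptions, not the controller's actual logic: the conflict table, the exception type, and the function name are all hypothetical, and a real platform would derive its rules from the installed traits.

```python
from itertools import combinations

# Hypothetical conflict table: each entry names two traits that must not be
# applied to the same component.
CONFLICTS = {frozenset({"auto-scaler", "cron-scaler"})}


class TraitConflictError(Exception):
    """Raised when two requested traits cannot be combined."""


def check_traits(trait_names):
    """Reject the request before anything is deployed if any pair conflicts."""
    for first, second in combinations(trait_names, 2):
        if frozenset({first, second}) in CONFLICTS:
            raise TraitConflictError(
                f"conflicts between traits: {first} and {second}"
            )
    return list(trait_names)


try:
    check_traits(["auto-scaler", "cron-scaler"])
except TraitConflictError as err:
    print(f"rejected: {err}")

print(check_traits(["auto-scaler", "security-policy"]))
```

Running the check at admission time is what turns a runtime surprise into an immediate, explainable error.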

Overall, instead of providing lengthy maintenance specifications and operating guidelines, which are still unable to prevent application operators from making mistakes, we use OAM traits to expose discoverable and manageable capabilities on top of Kubernetes. This allows our application operators to "fail fast" and have the confidence to assemble capabilities to construct conflict-free operational solutions, as simple as playing "Legos."

As a "platform for platforms," Kubernetes does not restrict the role of the user who calls the core APIs. This means anyone can be responsible for any field in the API object. It is also called an "all-in-one" API, which makes it easy for a newcomer to start. However, this poses a disadvantage when multiple teams with different focuses are required to work together on the same Kubernetes cluster, especially where application operators and developers need to collaborate on the same API set.

Let's first look at a simple deployment YAML file:

    kind: Deployment
    apiVersion: extensions/v1beta1
    metadata:
      name: nginx-deployment
    spec:
      replicas: 3
      selector:
        matchLabels:
          deploy: example
      template:
        metadata:
          labels:
            deploy: example
        spec:
          containers:
            - name: nginx
              image: nginx:1.7.9
              securityContext:
                allowPrivilegeEscalation: false

In our clusters, the application operator works cooperatively with the developer to prepare this YAML. This cooperation is time-consuming and not easy, but we have to do it. Why?

Instead of having the application operator prepare this YAML cooperatively with developers, the most straightforward way is to ask the developers to fill in the deployment YAML by themselves. But developers may find fields that are not associated with their concerns at all.

For example, how many developers know allowPrivilegeEscalation?

While not known by many, it is critically important to have this field set to false, to ensure the application has proper privileges on the real host. Typically, application operators configure this field. But in practice, fields like this end up becoming "guessing games," or they may even be completely ignored by developers. As a result, this can cause trouble if application operators do not validate those fields.

There are fields in a K8s workload YAML that are not explicitly controlled by only one party. For example, when a developer sets replicas: 3, they assume it's a fixed number during the application lifecycle. But most developers don't realize this field can be taken over by the HPA controller, which may change the number according to Pod load. This conflict is problematic: when a developer wants to change the replica number later, the change may not take effect permanently.

In this case, the workload spec cannot represent the workload's final state, and this can be very confusing from the developer's perspective. We once attempted to use fieldManager to deal with this issue. The process of resolving such conflicts is still challenging, because it's hard to figure out the intention of the other modifier.
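The following toy simulation shows why the developer's edit does not stick. It is a simplified model, not Kubernetes code: the `Workload` class and `autoscale` function are invented for illustration, and the scaling rule follows the proportional formula documented for the Horizontal Pod Autoscaler (desired = ceil(current * currentUtilization / targetUtilization)), clamped to the configured bounds.

```python
class Workload:
    """A workload whose replicas field can be written by several parties."""

    def __init__(self, replicas):
        self.replicas = replicas
        self.last_writer = "developer"

    def set_replicas(self, writer, replicas):
        # Last write wins: this toy model keeps no record of field ownership.
        self.replicas = replicas
        self.last_writer = writer


def autoscale(workload, cpu_utilization, target=50, minimum=1, maximum=9):
    """One pass of a simplified autoscaler: scale with load, then clamp."""
    # Ceiling division on integers: -(-a // b) == ceil(a / b).
    desired = -(-workload.replicas * cpu_utilization // target)
    workload.set_replicas("hpa", max(minimum, min(maximum, desired)))


app = Workload(replicas=3)             # the developer asks for 3 replicas
autoscale(app, cpu_utilization=100)    # load is twice the target
print(app.replicas, app.last_writer)   # 6 hpa

app.set_replicas("developer", 4)       # the developer "fixes" the number
autoscale(app, cpu_utilization=100)    # the next autoscaler pass overrides it
print(app.replicas, app.last_writer)   # 8 hpa
```

Neither writer is wrong on its own terms, which is exactly why the intention behind each change is hard to recover after the fact.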

As shown above, when using K8s APIs, the concerns of developers and operators are inextricably mixed together. It could be painful for several parties to work on the same API set. Furthermore, our past experience shows that sometimes application management systems (e.g., PaaS) may be hesitant to expose more K8s capabilities, because they don't want to reveal more operational/infrastructure details to developers.

A straightforward solution is to draw a "clear boundary" between the developers and operators. For example, we can allow developers to set only part of the deployment YAML (this is exactly what our PaaS once did). But before applying the "clear cut" solution, we may want to consider other scenarios.

There are cases where a developer wants to have their "opinions" heard by an operator, on behalf of their application. For example, suppose a developer defined several parameters for an application, then realized that the application operator may rewrite them to fit different runtime environments. The issue is that the application developer may want to allow only certain parameters to be modified. How could this information be conveyed efficiently to application operators?

In fact, the "clear cut" application management process will make it even harder to express developers' operational opinions. There are many similar examples, where a developer might want to convey that their application:

All these requests are valid, because the developer, the author of the application, best understands his or her application. This raises a fundamental problem which we seek to resolve: Is it possible to provide separated API subsets for application developers and operators, while allowing developers to claim operational requirements efficiently?

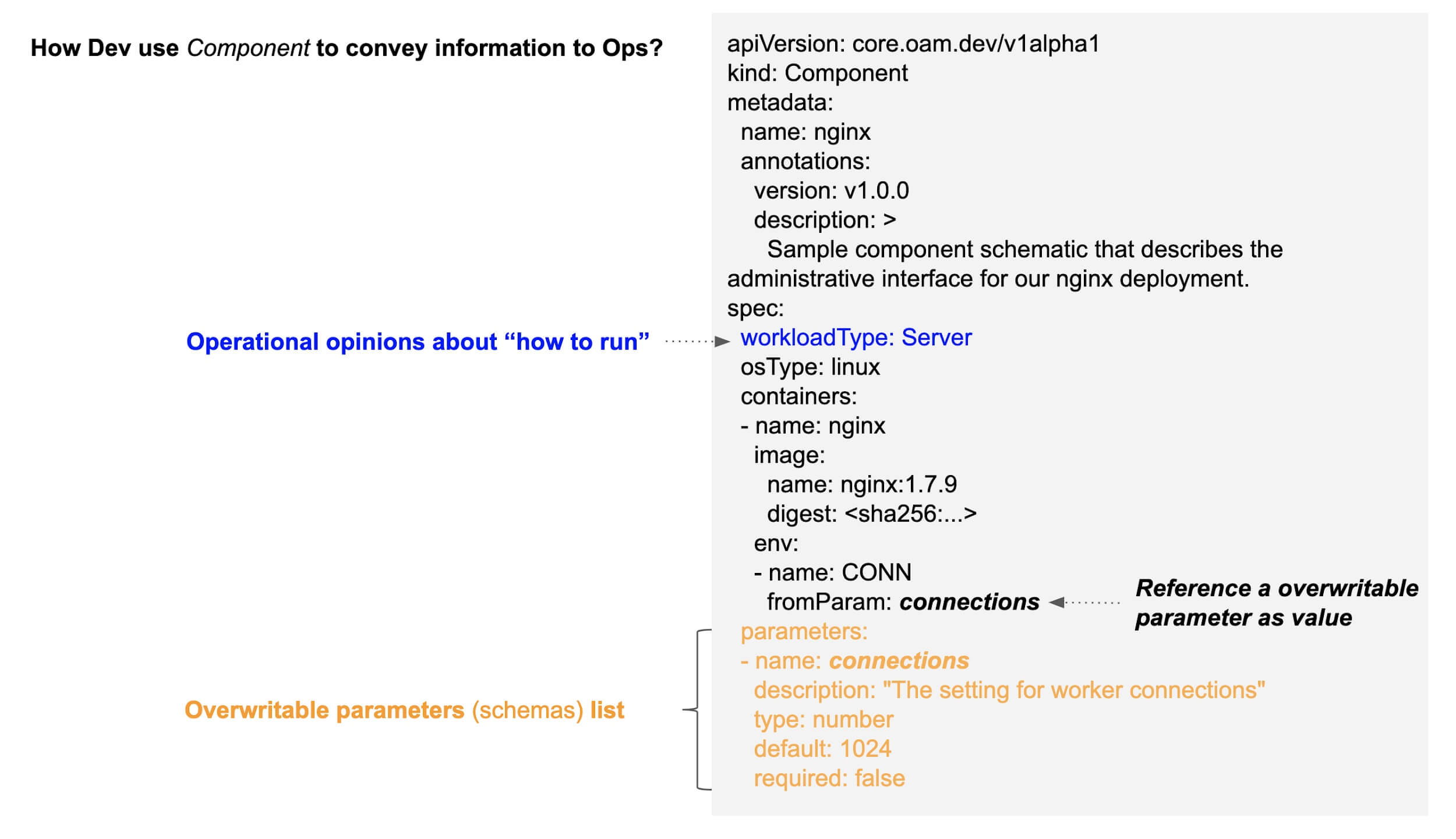

In OAM, we try to logically decouple K8s API objects, so developers can fill in their own intentions, and still be able to convey information to operators in a structured manner.

Components are designed for developers to define an application without considering operational details. One application is composed of one or many components, for example, a Java web component and a database component.

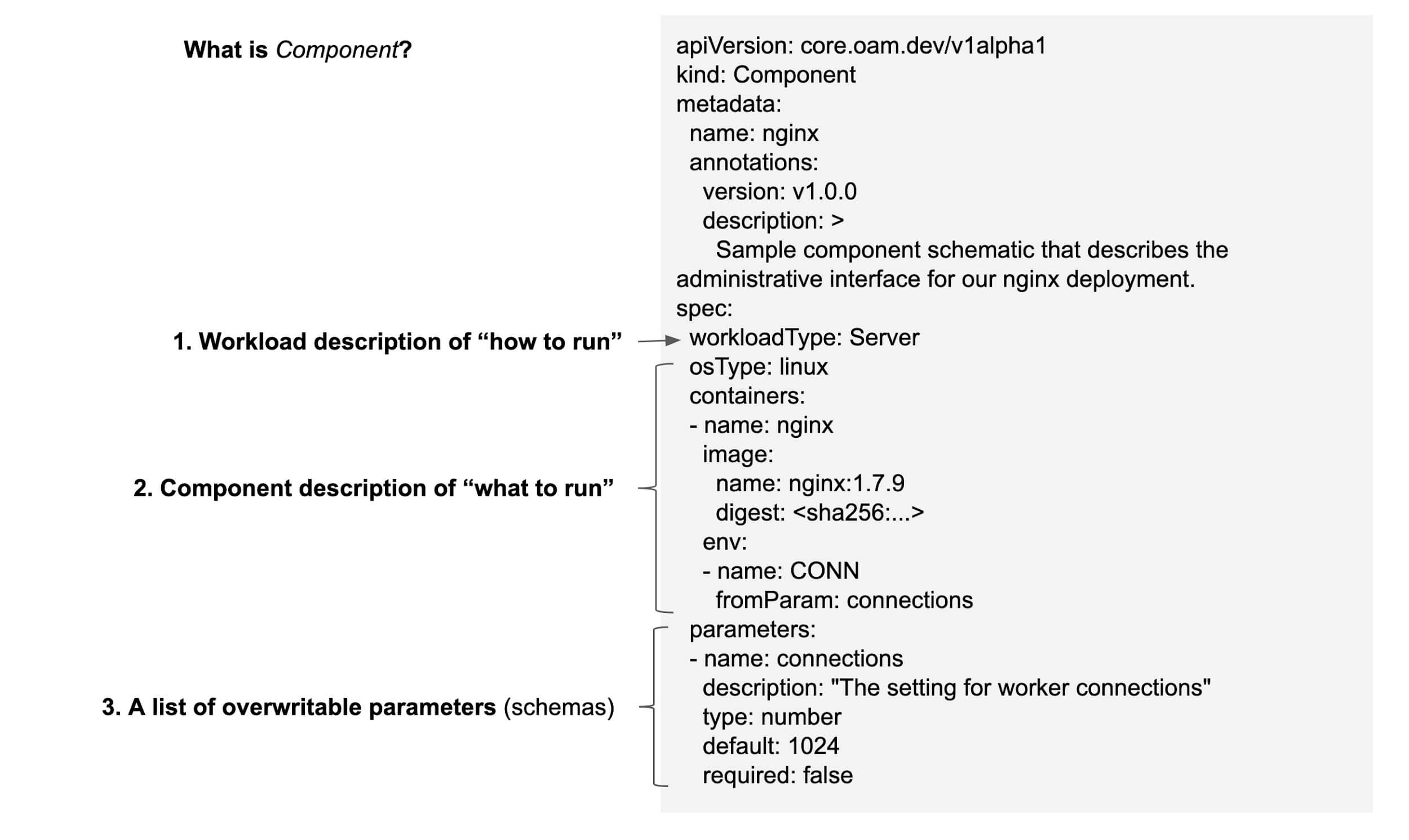

Here's a sample of the Component defined by developer for an Nginx deployment:

A component in OAM is composed of three parts:

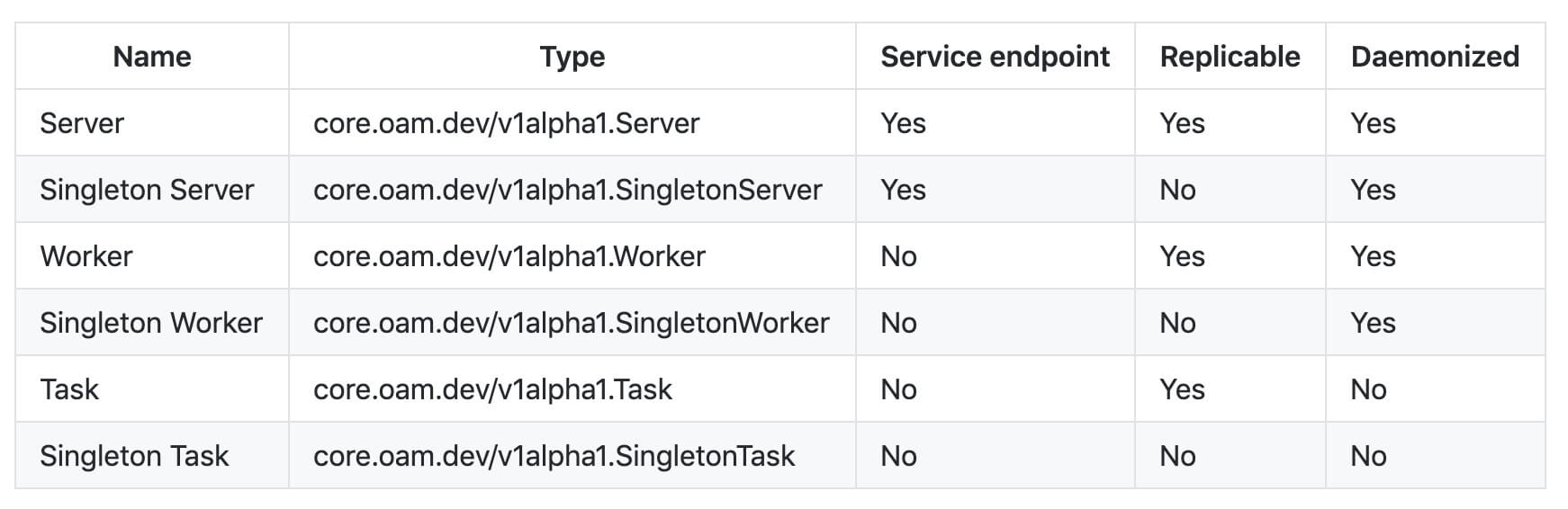

First of all, in Component spec the description of "how to run" is fully decoupled from "what to run." This decoupling makes the workloadType field a straightforward way to convey developer's opinions about how to run his application to the operator. Among these types, core workloads are pre-defined in OAM to cover typical patterns of cloud native applications:

That being said, an implementation of OAM is free to claim its own workload types by defining Extended Workloads. At Alibaba, we rely heavily on extended workloads to enable developers to define components based on cloud services, such as Functions.

Secondly, let's go into some details about "overwritable parameters":

parameters - The parameters in this list are allowed to be overwritten by operators (or by the system); schemas are defined for operators to follow during overwriting.

fromParam - Indicates that the value of CONN actually comes from a parameter named connections in the parameters list, i.e. this value could be overwritten by operators. The default value of connections is 1024.

Overwritable parameters in Component is another field which allows developers to state their opinions about "which part of my app definition is overridable" to operators (or to the system).
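A small sketch may help show how fromParam ties the two lists together. The dictionary layout below is a guess made for illustration (the `type` value, the `env` key, and the extra `MODE` variable are hypothetical and not taken from the OAM spec); only CONN, connections, and the default of 1024 come from the description above.

```python
# Hypothetical in-memory form of a component definition.
component = {
    "parameters": [
        {"name": "connections", "type": "number", "default": 1024},
    ],
    "env": [
        {"name": "CONN", "fromParam": "connections"},  # overwritable
        {"name": "MODE", "value": "production"},       # fixed by the developer
    ],
}


def resolve_env(component, overrides=None):
    """Compute the final environment, honoring only declared parameters."""
    overrides = overrides or {}
    declared = {p["name"]: p for p in component["parameters"]}
    unknown = set(overrides) - set(declared)
    if unknown:
        # Anything the developer did not list is not open to operators.
        raise KeyError(f"not overwritable: {sorted(unknown)}")
    env = {}
    for var in component["env"]:
        if "fromParam" in var:
            param = declared[var["fromParam"]]
            env[var["name"]] = overrides.get(param["name"], param["default"])
        else:
            env[var["name"]] = var["value"]
    return env


print(resolve_env(component))                         # CONN falls back to 1024
print(resolve_env(component, {"connections": 4096}))  # operator override wins
```

The developer's opinion is encoded in what is absent: because `MODE` is not backed by a parameter, an attempt to override it is rejected rather than silently applied.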

Note that in the above example, the developer does not need to set replicas anymore; it's essentially not his concern, and he will let HPA or application operator fully control the replica number.

Overall, Component allows developers to define the application specification with their own API set, and at the same time lets them convey opinions or information to operators accurately. This information includes operational opinions such as "how to run this application" and "overwritable parameters," like those shown below.

In addition to these operational hints, the developer could have many more types of requirements to claim in application definitions, and an operational capability, a.k.a trait, should have corresponding ability to claim it matches to given requirements. Hence we are actively working on "policies" in Component so a developer can say "my component requires some traits that satisfy this policy," and a trait can list all of the policies it supports.

Ultimately, the operators would use ApplicationConfiguration to instantiate the application, by referring to components' names and applying traits to them.

The usage of Component and ApplicationConfiguration forms a practice of cooperative workflow:

1. Define component.yaml with the selected workload type.

2. Run kubectl apply -f component.yaml to install this component.

3. Define app-config.yaml to instantiate the application.

4. Run kubectl apply -f app-config.yaml to trigger the deployment of the whole application.

A sample of app-config.yaml is below:

    apiVersion: core.oam.dev/v1alpha1
    kind: ApplicationConfiguration
    metadata:
      name: my-awesome-app
    spec:
      components:
        - componentName: nginx
          instanceName: web-front-end
          parameterValues:
            - name: connections
              value: 4096
          traits:
            - name: auto-scaler
              properties:
                minimum: 3
                maximum: 10
            - name: security-policy
              properties:
                allowPrivilegeEscalation: false

Let's highlight several details from the above ApplicationConfiguration YAML:

parameterValues - Used by the operator to overwrite the connections value to 4096, which was 1024 initially in the component. The value is the integer 4096 instead of the string "4096", because the schema of this field is clearly defined in Component.

auto-scaler - Used by the operator to apply the autoscaler trait (e.g. HPA) to the component. Hence, its replica number will be fully controlled by the autoscaler.

security-policy - Used by the operator to apply the security policy rules to the component.

Note that the operator could also append more traits to the traits list as long as they are available. For example, the "Canary Deployment Trait" will make the application follow the canary rollout strategy during a later upgrade.
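The integer-versus-string point about parameterValues can be checked mechanically. The sketch below assumes that the component declares connections with a type named "number"; that type name, the helper names, and the error wording are illustrative assumptions rather than the behavior of any particular OAM runtime.

```python
def matches(value, expected):
    """Check a value against a declared parameter type (assumed type names)."""
    if expected == "number":
        # bool is a subclass of int in Python, so exclude it explicitly.
        return isinstance(value, (int, float)) and not isinstance(value, bool)
    if expected == "string":
        return isinstance(value, str)
    if expected == "boolean":
        return isinstance(value, bool)
    return False


def check_parameter_values(declared, parameter_values):
    """Return a list of problems found in an operator's parameterValues."""
    types = {p["name"]: p["type"] for p in declared}
    errors = []
    for entry in parameter_values:
        expected = types.get(entry["name"])
        if expected is None:
            errors.append(f"{entry['name']}: not declared by the component")
        elif not matches(entry["value"], expected):
            errors.append(f"{entry['name']}: expected {expected}")
    return errors


declared = [{"name": "connections", "type": "number"}]

print(check_parameter_values(declared, [{"name": "connections", "value": 4096}]))
print(check_parameter_values(declared, [{"name": "connections", "value": "4096"}]))
```

The first call reports no problems, while the second flags the quoted value, which is the kind of mistake the component's schema is meant to catch before deployment.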

Essentially, ApplicationConfiguration is how the application operator (or the system) consumes the information conveyed by the developer, and then assembles operational capabilities to achieve the final operational intention accordingly.

As we've described so far, our primary goal in using OAM is to fix the following problems in application management:

In this context, OAM is the CRD specification that Alibaba's Kubernetes team uses to define an application as well as its operational capabilities in a standard and structured way.

Another strong motivation for us to develop OAM is software distribution in hybrid cloud and multi-environment scenarios. With the emergence of Google Anthos and Microsoft Azure Arc, we see a trend of Kubernetes becoming the new Android, with the value of the cloud native ecosystem moving to the application layer. We will talk about this part later.

Real world use cases in this article are contributed by Alibaba Cloud Native Team and Ant Financial.

For now, the specification and model of OAM are indeed solving many existing problems, but we believe there is still a long way to go. For example, we are working on practices for handling dependencies with OAM, integration of Dapr workloads in OAM, and many others.

We look forward to working with the community on the OAM spec as well as its K8s implementation. OAM is a neutral open source project, and all its contributors are under a CLA from a non-profit foundation.
