By Liu Tianyuan, Senior Product Manager of the Computing Platform Department, Alibaba Cloud

Released by DataWorks Team

This article describes the intermediate and advanced features of the DataWorks advanced editions and introduces the features and applicable scenarios of DataWorks Basic Edition, Standard Edition, Professional Edition, and Enterprise Edition. It is intended to help you select the DataWorks edition that best fits your needs.

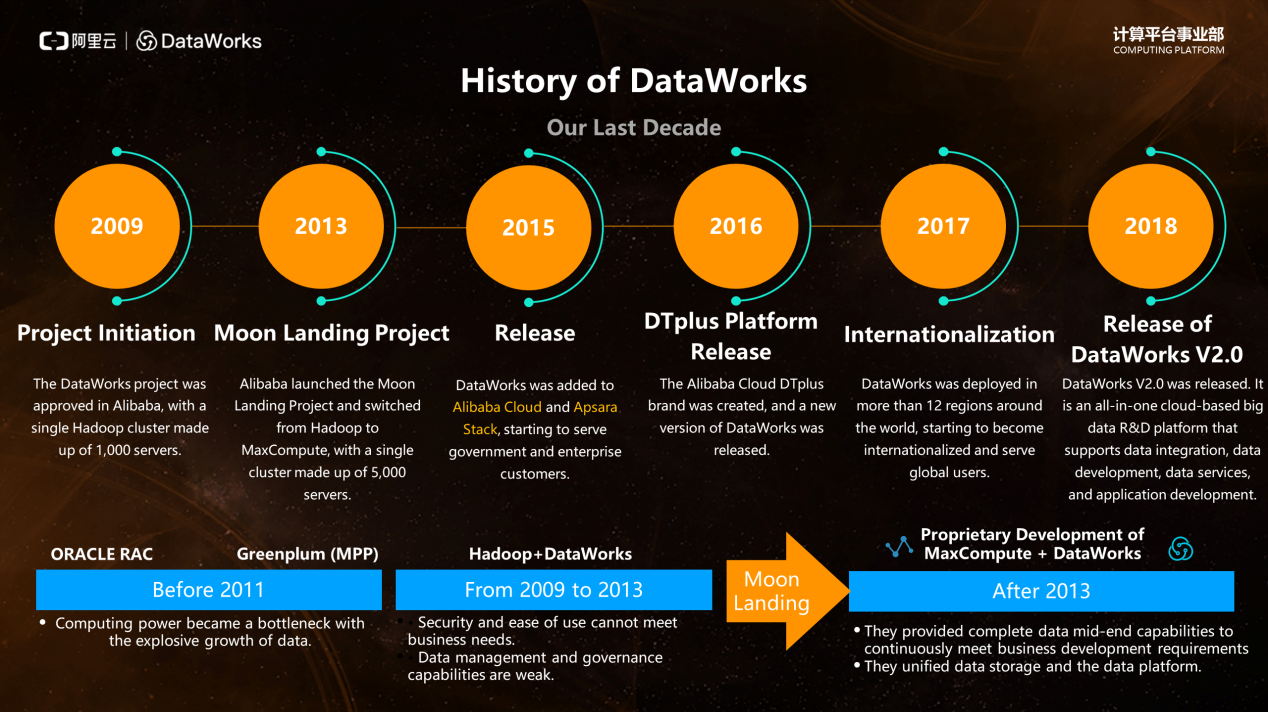

More than a decade has passed since the DataWorks project was established in 2009. It was followed by Alibaba's Moon Landing Project, the release of Alibaba Cloud and Apsara Stack, and the release of DataWorks V2.0 in 2018. The process included several key milestones. From 2009 to 2013, DataWorks was used to schedule tasks on Hadoop clusters. Later, Hadoop clusters could no longer support Alibaba's ever-growing data volumes, so the company began to explore combining MaxCompute with DataWorks. After 2013, DataWorks began to support scheduling MaxCompute tasks. Based on MaxCompute and DataWorks, Alibaba built its Data Mid-End.

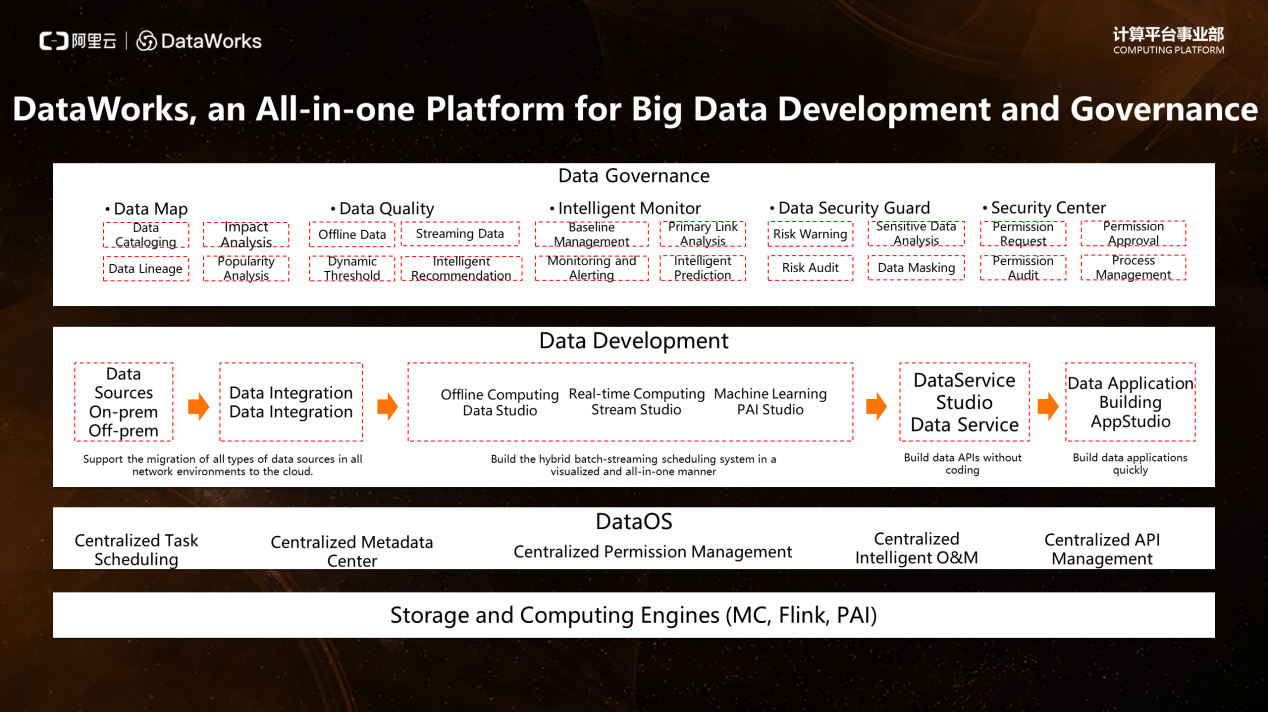

As Alibaba's all-in-one big data development platform, DataWorks provides core capabilities in two areas: data development and data governance. Until the first half of 2018, most users used DataWorks for data development: they used Data Integration to synchronize data from their data sources to MaxCompute and DataStudio offline computing nodes to run scheduled jobs. Since the second half of 2018, DataWorks V2.0 has brought Alibaba's internal data governance features to Alibaba Cloud. As a result, every Alibaba Cloud user gets comprehensive data governance capabilities in DataWorks Basic Edition, including data lineage, data quality monitoring, task monitoring, data audit, and secure data permission control.

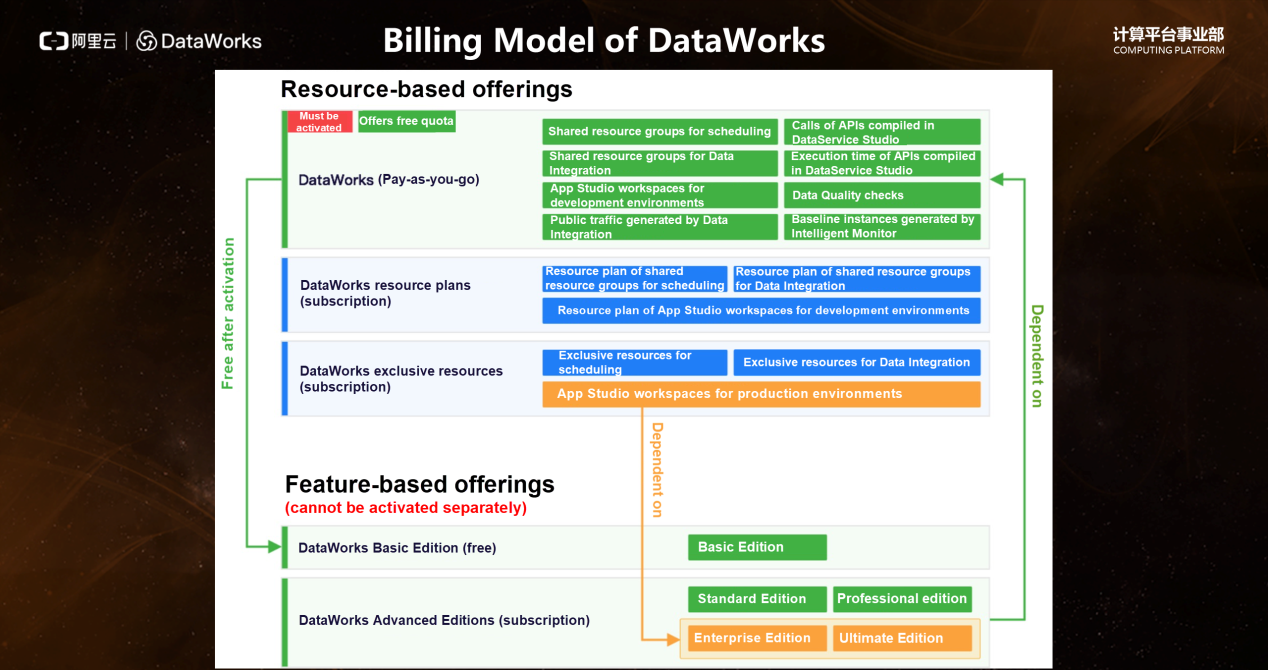

To offer users enterprise-grade services, DataWorks was commercialized in June 2019. The DataWorks pricing documentation describes the overall pricing model, in which DataWorks offerings fall into two types: resource-based and feature-based. Feature-based offerings give users access to the features of DataWorks modules. For example, in the DataWorks console you can see features such as DataStudio - Node Types and Operation Center - Intelligent Monitor. Which features are available to you depends on your DataWorks edition: Basic Edition, Standard Edition, Professional Edition, or Enterprise Edition. If you use a feature that your current edition does not include, you are charged for it on a pay-as-you-go basis.

Note: All users must first activate DataWorks in pay-as-you-go mode. After that, they can use DataWorks Basic Edition permanently.

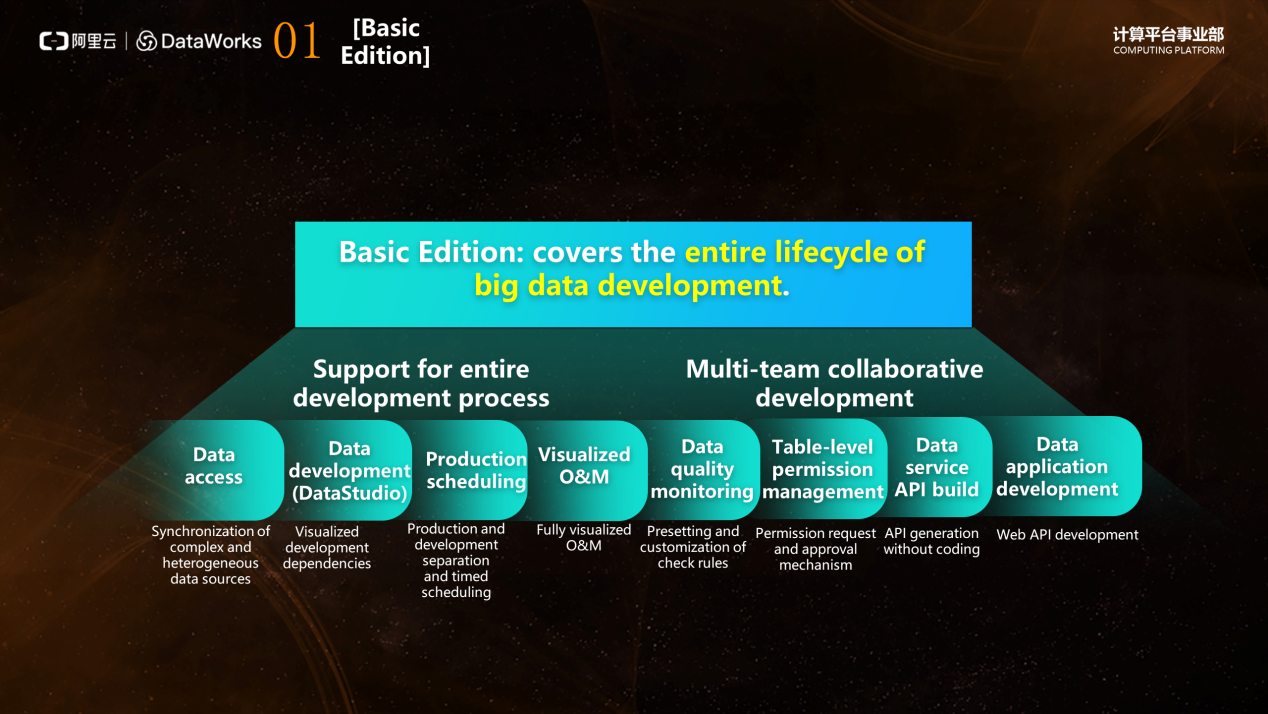

DataWorks Basic Edition provides practical features to help users build data warehouses quickly. It covers the entire lifecycle of big data development, with modules for data access, data development, production scheduling, visual O&M, data quality monitoring, table-level permission management, and data service API creation, through to final data presentation in application development. Notably, a "batch migration to cloud" feature has been added to the data access module of DataWorks. For example, suppose your data resides in multiple MySQL instances, each instance contains multiple databases, and each database has multiple tables. In this case, you can use this feature to upload an Excel file that describes the data, quickly create multiple data synchronization tasks, and migrate the data to the cloud. Currently, this feature supports only Oracle, MySQL, and SQL Server. DataWorks Basic Edition also provides data quality monitoring, which allows you to define custom check rules.
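The Excel upload effectively turns a nested list of instances, databases, and tables into one synchronization task per table. The following Python sketch illustrates only that expansion step. It is not the DataWorks import format: the `SyncTask` fields, the `expand_tasks` helper, and the `ods_<database>_<table>` target naming are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List

# Source types the article lists as supported by batch migration to cloud.
SUPPORTED_SOURCES = {"Oracle", "MySQL", "SQL Server"}


@dataclass(frozen=True)
class SyncTask:
    """One hypothetical migration entry: a single source table synchronized to the cloud."""

    source_type: str
    instance: str
    database: str
    table: str

    @property
    def target_table(self) -> str:
        # Hypothetical naming convention for the target MaxCompute table.
        return f"ods_{self.database}_{self.table}".lower()


def expand_tasks(source_type: str, layout: Dict[str, Dict[str, List[str]]]) -> List[SyncTask]:
    """Expand {instance: {database: [tables]}} into one synchronization task per table."""
    if source_type not in SUPPORTED_SOURCES:
        raise ValueError(f"unsupported source type: {source_type}")
    return [
        SyncTask(source_type, instance, database, table)
        for instance, databases in layout.items()
        for database, tables in databases.items()
        for table in tables
    ]


layout = {
    "mysql-01": {"sales": ["orders", "refunds"], "crm": ["users"]},
    "mysql-02": {"logs": ["visits"]},
}
for task in expand_tasks("MySQL", layout):
    print(task.instance, task.database, task.table, "->", task.target_table)
```

Three databases across two instances yield four tasks here, one per table, which is the kind of fan-out that makes creating these tasks by hand tedious.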

DataWorks Standard Edition provides more complex and specialized node types for development than the Basic Edition, as well as better visual support for the real-time Flink engine. DataWorks Standard Edition is intended for enterprises whose big data systems are growing quickly. When an enterprise's data system develops rapidly, data quality and security problems gradually surface and create risks. DataWorks Standard Edition provides data governance capabilities to help you address these problems.

The following scenarios show how DataWorks Standard Edition can be used:

Scenario 1 Task execution with a branch node at a specific time

For example, suppose you want to decide whether to run a downstream task in a workflow based on a condition. As shown in the following figure, you need to determine whether today is the last day of the month, which is not always the 30th.

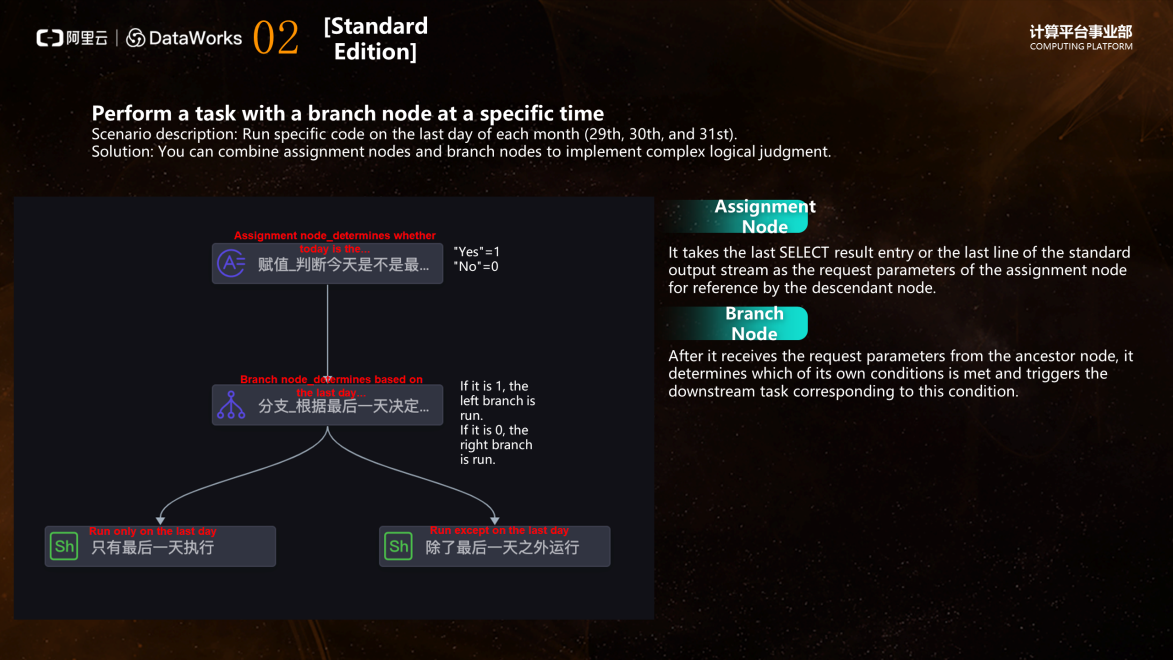

In this situation, the simple linear dependencies that DataWorks traditionally uses are unlikely to meet your needs. DataWorks Standard Edition provides several control node types, including loop, traverse, assignment, branch, and merge nodes, which you can combine to handle more complex scenarios.

How can these nodes meet the preceding need? As shown in the following figure, two node types are required: an assignment node and a branch node. In the assignment node, you use Python to determine whether today is the last day of the month. If it is, the assignment node passes 1 to the descendant node as a parameter; otherwise, it passes 0. When the branch node receives 1 from its ancestor node, the left branch runs. If it receives 0, the right branch runs.

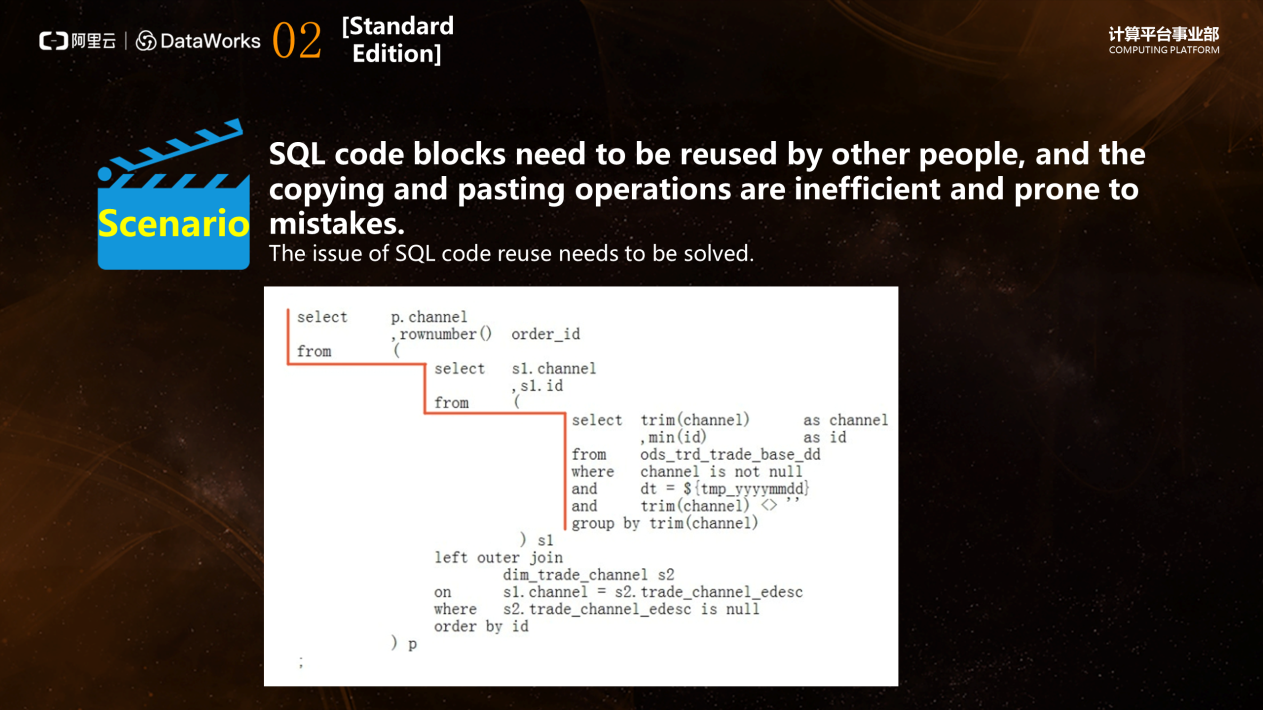

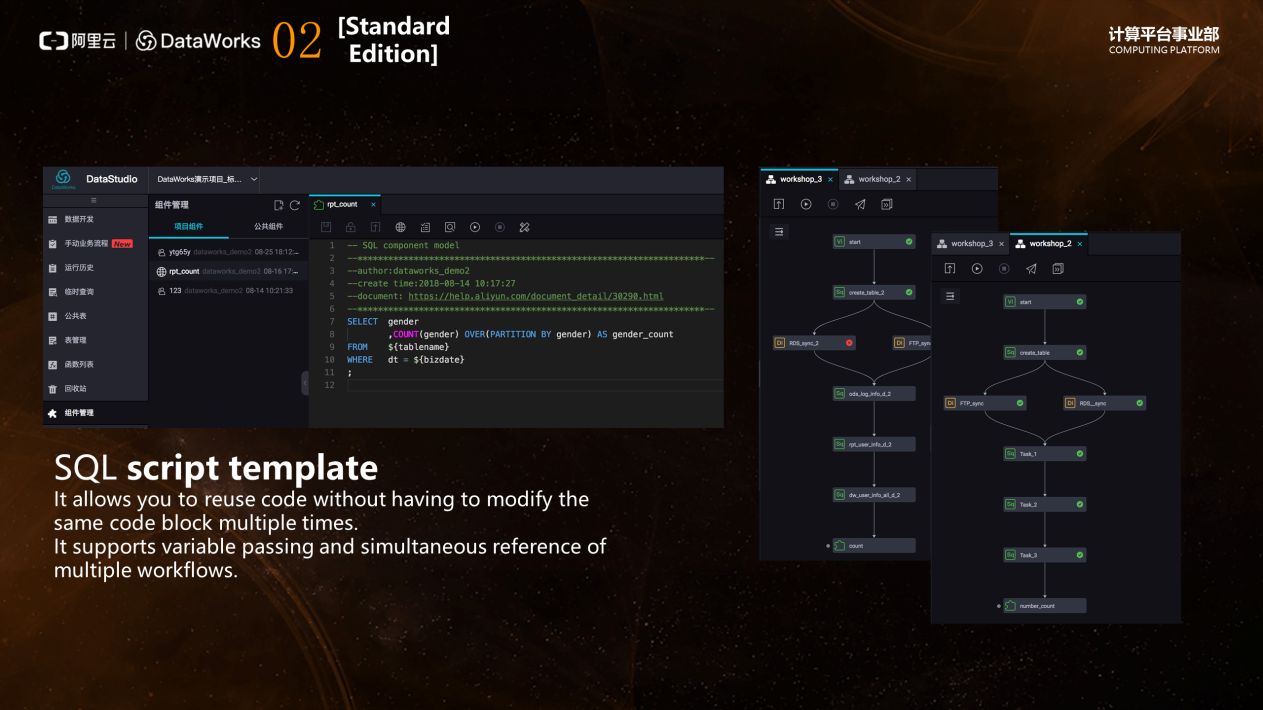
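A minimal sketch of the logic the assignment node could run, using only the Python standard library. It assumes, as we understand DataWorks behavior, that the assignment node forwards the value the script prints last; the function names are illustrative, and `choose_branch` only mimics what the branch node does.

```python
import calendar
import datetime


def is_last_day_of_month(day: datetime.date) -> int:
    """Return 1 if `day` is the last day of its month, otherwise 0."""
    # monthrange() returns (weekday of the 1st, number of days in the month).
    days_in_month = calendar.monthrange(day.year, day.month)[1]
    return 1 if day.day == days_in_month else 0


def choose_branch(flag: int) -> str:
    """Mimic the branch node: 1 runs the left branch, anything else the right branch."""
    return "left" if flag == 1 else "right"


if __name__ == "__main__":
    # The value printed last is what the assignment node is assumed to pass downstream.
    print(is_last_day_of_month(datetime.date.today()))
```

Using `calendar.monthrange` avoids hard-coding month lengths, so February in leap years is handled correctly.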

Scenario 2 Reuse of SQL code

As your SQL code grows, some code segments become common code that other people want to reuse. The traditional method is to copy the code, which is both inefficient and risky: copied code is easily truncated or altered by mistake.

DataWorks Standard Edition provides the SQL script template feature, which allows you to define a common SQL block and declare variables in it as needed. To reuse the block, other users add it to a workflow as a node and assign values to its parameters.

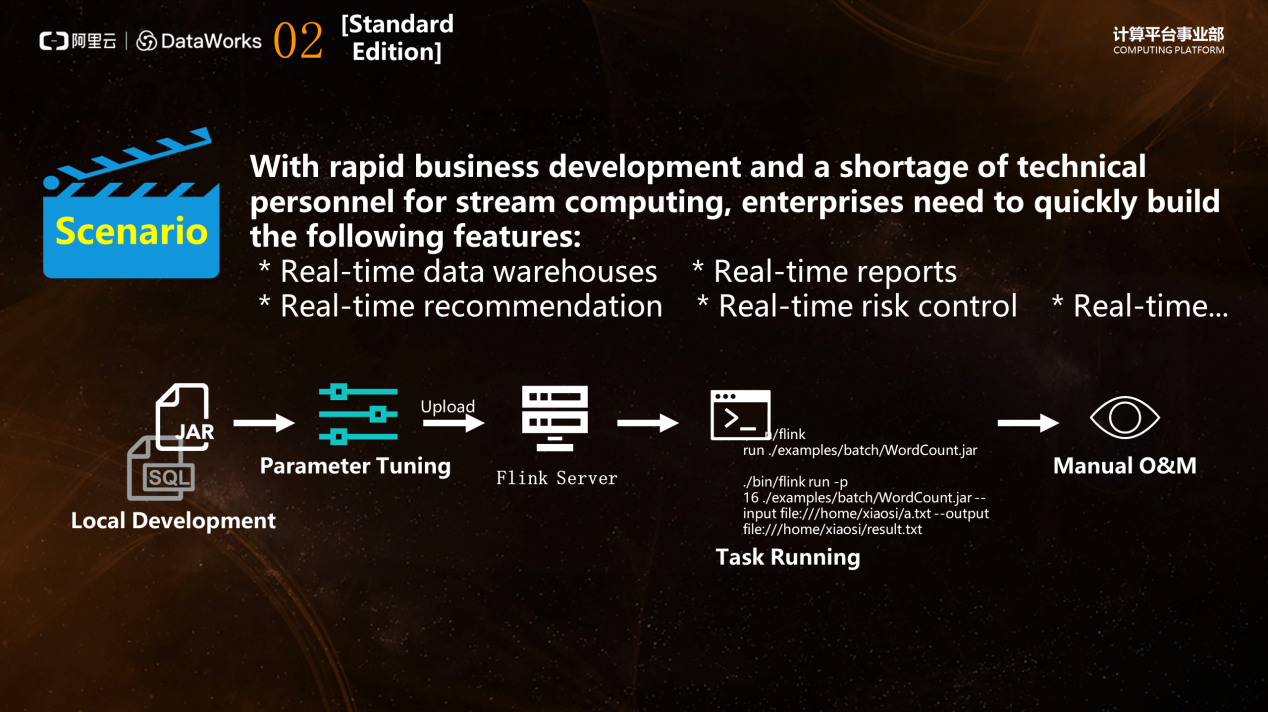
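To illustrate the idea of a parameterized SQL block, the following sketch uses Python's `string.Template`. The `${...}` placeholder syntax, the parameter names, and the `ds` partition column are assumptions for illustration, not the DataWorks template syntax.

```python
from string import Template

# Hypothetical reusable SQL block. ${...} placeholders stand in for the template's
# configurable parameters; the actual DataWorks template syntax may differ.
TOP_N_BY_GROUP = Template(
    "SELECT ${group_col}, SUM(${metric_col}) AS total\n"
    "FROM ${source_table}\n"
    "WHERE ds = '${bizdate}'\n"
    "GROUP BY ${group_col}\n"
    "ORDER BY total DESC\n"
    "LIMIT ${top_n};"
)


def render(template: Template, **params: object) -> str:
    """Fill in every parameter; substitute() raises KeyError if any parameter is missing."""
    return template.substitute(**params)


sql = render(
    TOP_N_BY_GROUP,
    group_col="city",
    metric_col="order_amount",
    source_table="ods_orders",
    bizdate="20240101",
    top_n=10,
)
print(sql)
```

Using `substitute` rather than `safe_substitute` makes a forgotten parameter fail loudly instead of leaving a placeholder in the generated SQL.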

Scenario 3 Real-time data warehouses

Some enterprises need to build real-time data warehouses, which usually requires a team of skilled professionals to build the entire stream computing system. With Flink, the process includes developing stream tasks and tuning parameters locally, uploading the finished tasks to a Flink cluster, and then running them from the command line. O&M is also complex: if you encounter problems such as timeouts, you have to check logs in multiple places, which makes troubleshooting difficult.

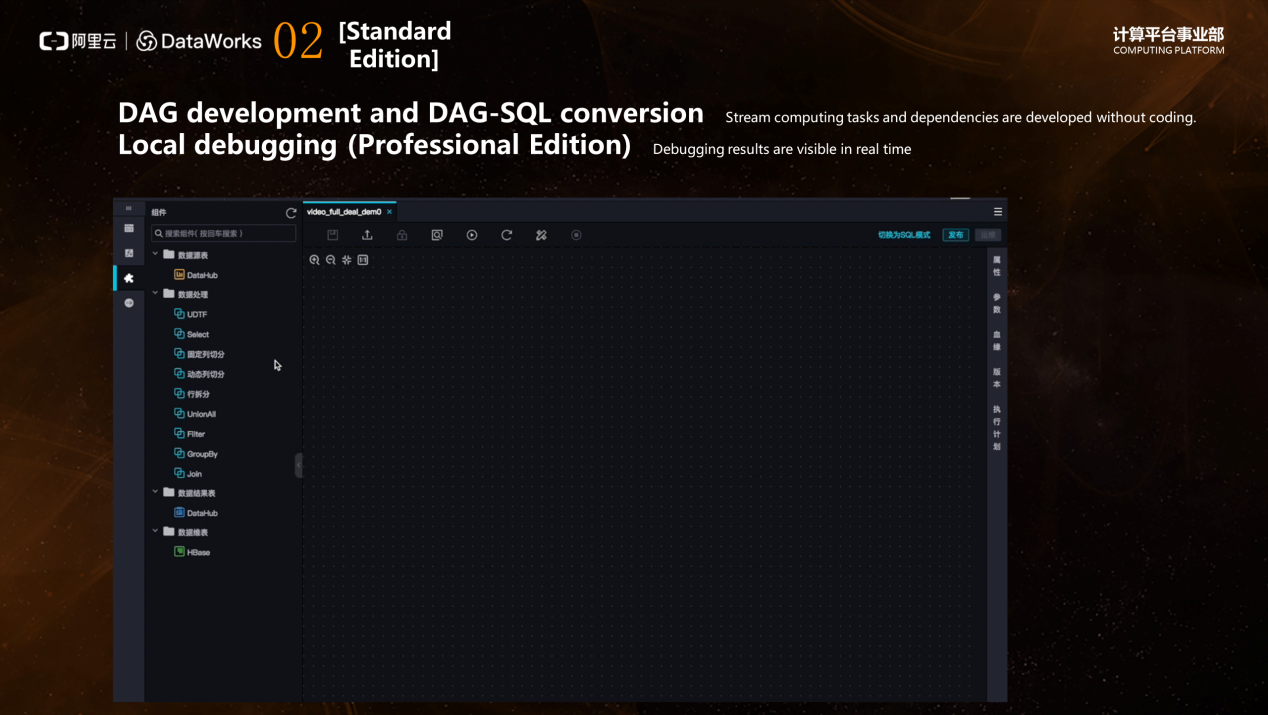

DataWorks Standard Edition provides directed acyclic graph (DAG) development and supports conversion between DAG and SQL modes. You drag each data source and each processing step onto the canvas as a node and connect the nodes to form a workflow, through which data records are processed in turn. As shown in the following figure, you can click each node to expand its structure. For example, the first node, DataHub, is a data source that contains an input field, and the second node, a fixed chain, splits that field into multiple fields. You can configure fields online in a visual way. After you finish configuring the whole process, click Switch to SQL Mode in the upper-right corner to convert the workflow into Flink SQL. In addition to configuring Flink stream processing in the graphical interface, DataWorks Standard Edition also allows you to write tasks directly in native SQL.
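The DAG-to-SQL conversion can be pictured as compiling a chain of nodes into one statement. This sketch handles only a hypothetical source, split, and sink chain and emits Flink SQL using the built-in `SPLIT_INDEX` function; the node classes and table names are made up, and the SQL that DataWorks actually generates will differ.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SourceNode:
    table: str  # e.g. a DataHub topic registered as a source table


@dataclass
class SplitNode:
    input_field: str  # the raw field to split
    delimiter: str
    output_fields: List[str]


@dataclass
class SinkNode:
    table: str


def to_flink_sql(source: SourceNode, split: SplitNode, sink: SinkNode) -> str:
    """Translate a source -> split -> sink chain into one INSERT INTO ... SELECT statement."""
    # SPLIT_INDEX(str, delimiter, index) is a Flink SQL built-in; the index is zero-based.
    columns = [
        f"SPLIT_INDEX({split.input_field}, '{split.delimiter}', {i}) AS {name}"
        for i, name in enumerate(split.output_fields)
    ]
    select_list = ",\n  ".join(columns)
    return f"INSERT INTO {sink.table}\nSELECT\n  {select_list}\nFROM {source.table};"


print(to_flink_sql(
    SourceNode("datahub_orders"),
    SplitNode("raw", ",", ["user_id", "amount", "city"]),
    SinkNode("rds_orders"),
))
```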

DataWorks Professional Edition provides a local debugging feature. You can click Sampling on each node to view the sampled data and the sampling time, and make adjustments promptly if the data does not meet expectations. Traditional Flink does not offer this: you must wait until all tasks have run before you can check whether the result data meets your expectations. Local debugging lets you check whether data is produced as expected before the entire task is deployed. In this way, DataWorks greatly lowers the barrier to building a real-time stream computing system.

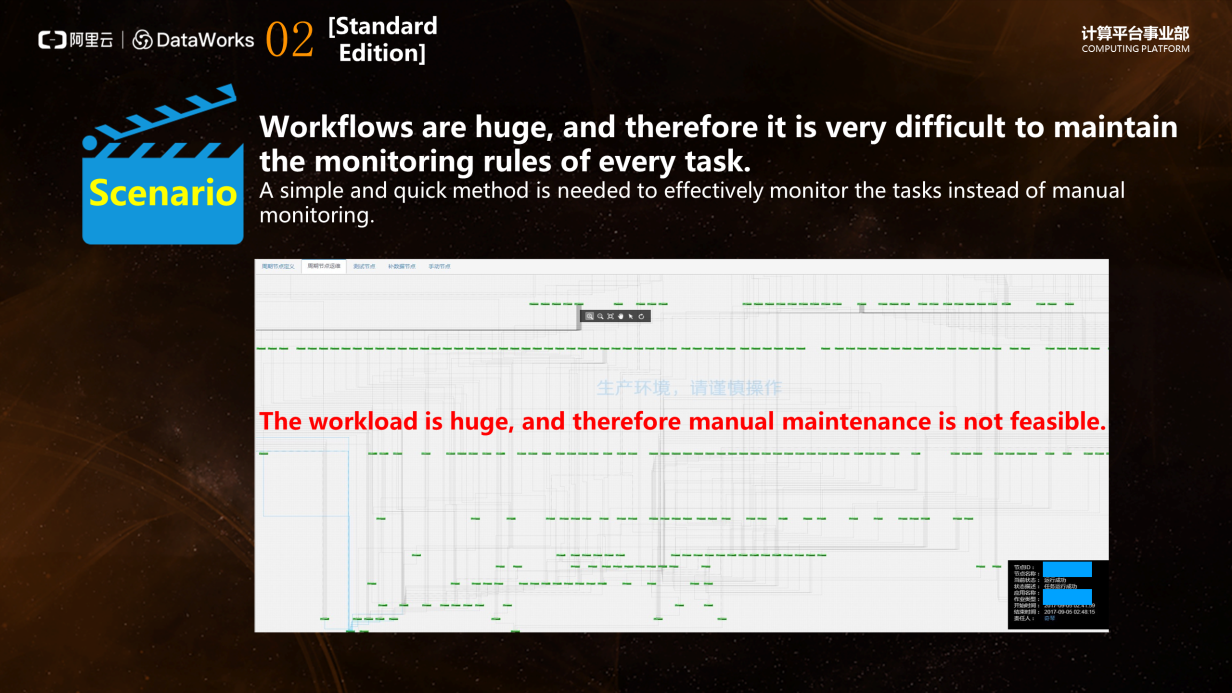
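Conceptually, sampling lets you inspect each node's output on a few records before deployment. The sketch below mimics that idea locally in plain Python; `debug_pipeline` and the two sample stages are hypothetical and unrelated to how DataWorks implements sampling.

```python
from typing import Callable, Dict, Iterable, List, Tuple

Record = Dict[str, object]
Stage = Tuple[str, Callable[[Record], Record]]


def debug_pipeline(sample: Iterable[Record], stages: List[Stage]) -> Dict[str, List[Record]]:
    """Push a small sample through each stage and keep every stage's output for inspection."""
    trace: Dict[str, List[Record]] = {}
    current = list(sample)
    for name, transform in stages:
        current = [transform(record) for record in current]
        trace[name] = current
    return trace


def split_raw(record: Record) -> Record:
    # Split a "user,amount" string into two typed fields.
    user, amount = str(record["raw"]).split(",")
    return {"user": user, "amount": float(amount)}


def tag_large(record: Record) -> Record:
    # Mark orders above a hypothetical threshold of 100.
    return {**record, "large": float(record["amount"]) > 100}


trace = debug_pipeline(
    [{"raw": "alice,30"}, {"raw": "bob,250"}],
    [("split", split_raw), ("tag", tag_large)],
)
for stage, rows in trace.items():
    print(stage, rows)
```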

Scenario 4 Task monitoring

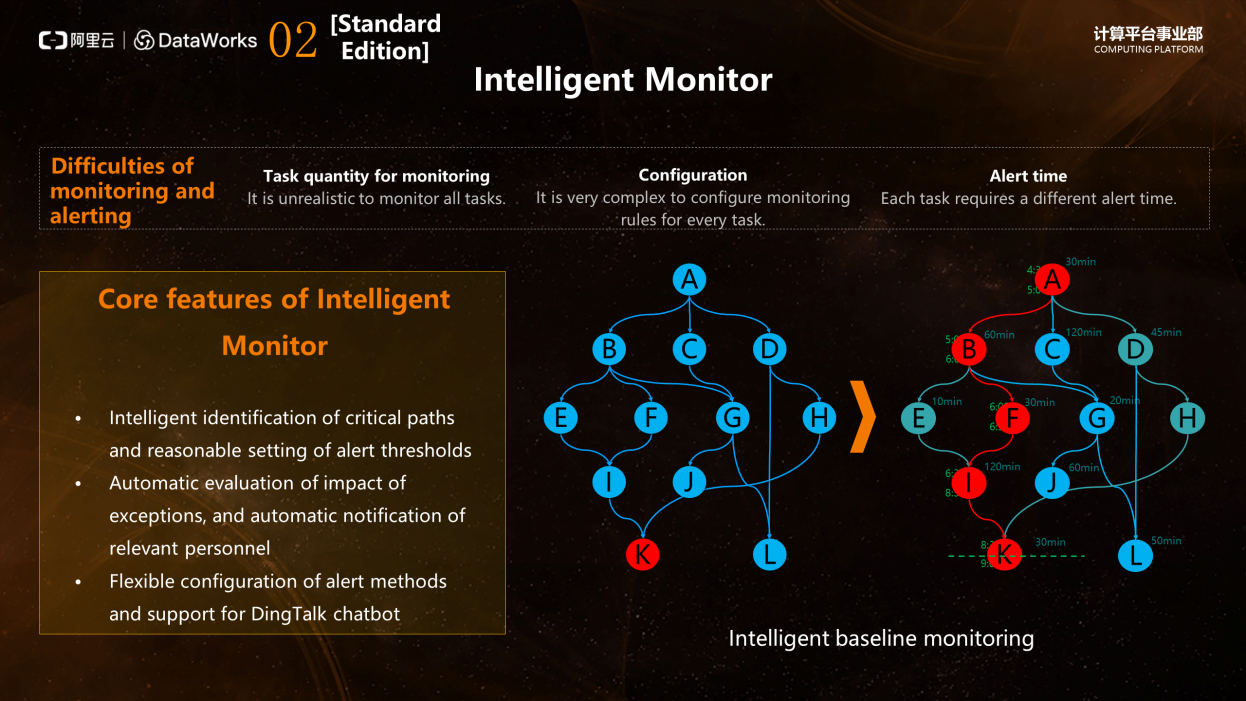

As your workload grows in size and complexity, monitoring each task manually becomes very difficult. As shown in the figure, the dense nodes belong to a DAG expanded in Alibaba's internal Operation Center. The traditional approach is to configure a timeout alert for each task, but when the number of workflows is very large, this is unrealistic. In addition, the data volume of each task is not fixed, so its running time may increase, and you cannot keep adjusting the alert threshold of every task. Therefore, a fast and intelligent method is required: you configure monitoring only once, and the system monitors the entire workflow.

The Intelligent Monitor feature of DataWorks Standard Edition lets you configure a time limit only for the last node, the one that produces the output data. The intelligent monitoring system then analyzes the running time of each task and its SQL semantics in the backend, plans the critical path, and calculates the latest start time and latest end time of each node. If an intermediate upstream task slows down or fails, the system alerts you by email, SMS message, or DingTalk. When you are alerted to an issue in an intermediate task, you can respond and resolve the problem quickly. If the problem is solved promptly, the final output can still be generated by the time you set. As long as the baseline set for the entire workflow is not broken, Intelligent Monitor ensures that data is generated on time.
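The article does not describe the Intelligent Monitor algorithm in detail. A standard way to derive latest start and end times from a single deadline is a backward pass over the DAG, as in the critical path method, sketched below. The node names, durations, and the `latest_times` and `overdue` helpers are hypothetical; `graphlib` requires Python 3.9 or later.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set, Tuple

Plan = Dict[str, Tuple[int, int]]


def latest_times(deps: Dict[str, Set[str]], duration: Dict[str, int], deadline: int) -> Plan:
    """Return {node: (latest_start, latest_finish)} in minutes after 00:00.

    deps maps each node to the upstream nodes it depends on; duration holds an
    estimated running time per node (for example, derived from past runs).
    """
    children: Dict[str, List[str]] = {}
    for node, parents in deps.items():
        for parent in parents:
            children.setdefault(parent, []).append(node)
    # static_order() yields upstream nodes before downstream ones, so walk it backwards.
    order = list(TopologicalSorter(deps).static_order())
    plan: Plan = {}
    for node in reversed(order):
        # A node must finish before the earliest latest-start among its children;
        # nodes without children (the final output) must finish by the deadline.
        finish = min((plan[child][0] for child in children.get(node, [])), default=deadline)
        plan[node] = (finish - duration[node], finish)
    return plan


def overdue(plan: Plan, now: int, started: Set[str], finished: Set[str]) -> List[str]:
    """List nodes that should already have started or finished at time `now`."""
    alerts = []
    for node, (latest_start, latest_finish) in plan.items():
        if node not in started and now > latest_start:
            alerts.append(f"{node}: not started, latest start was minute {latest_start}")
        elif node not in finished and now > latest_finish:
            alerts.append(f"{node}: not finished, latest finish was minute {latest_finish}")
    return alerts


deps = {"ods": set(), "dwd": {"ods"}, "dws": {"dwd"}, "ads": {"dws", "dwd"}}
duration = {"ods": 30, "dwd": 60, "dws": 45, "ads": 15}
plan = latest_times(deps, duration, deadline=8 * 60)  # baseline: output by 08:00
print(plan)
print(overdue(plan, now=340, started=set(), finished=set()))
```

With a single deadline on the final node, every upstream node gets its own derived time limit, so a slow task early in the chain can trigger an alert long before the deadline itself is missed.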

Scenario 5 Search for the source of dirty data

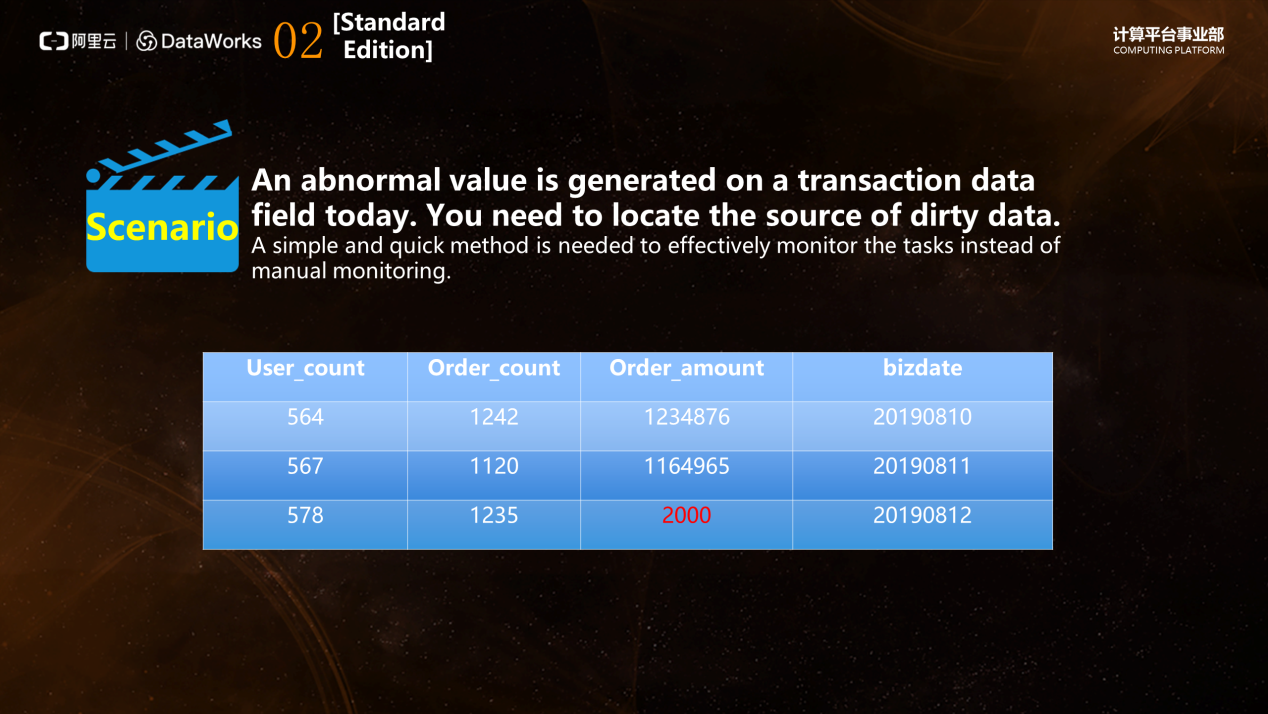

As your data volume grows rapidly, data quality problems become prominent. DataWorks Standard Edition allows you to monitor data quality by using custom rules or preset templates. As shown in the following figure, the order amount in the user consumption table used to exceed one million per day. However, one day the order amount dropped to 2,000, which is unexpected.

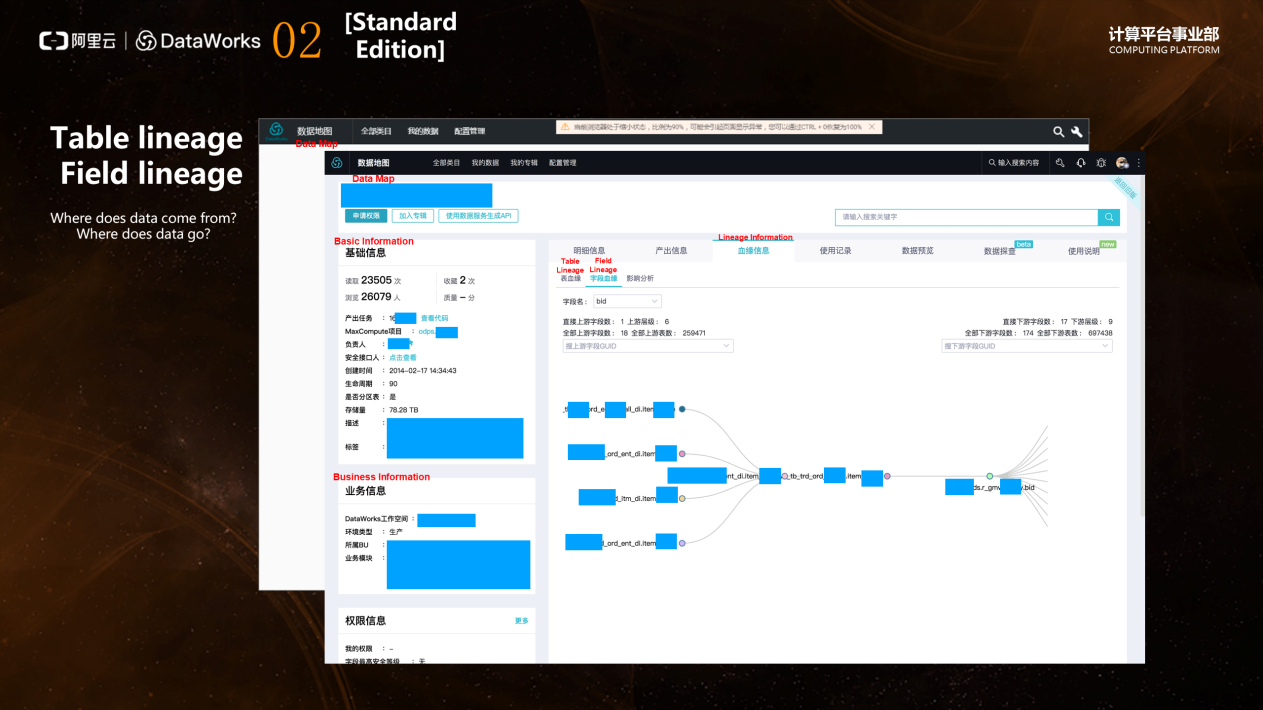
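A fluctuation rule that would catch this drop can be sketched as a comparison against a trailing average. The seven-day window, the 50% threshold, and the `fluctuation_alert` function are assumptions for illustration, not DataWorks preset template definitions.

```python
from statistics import mean
from typing import Sequence


def fluctuation_alert(history: Sequence[float], today: float, max_change: float = 0.5) -> bool:
    """Return True if today's value deviates from the trailing average by more than max_change."""
    if not history:
        return False  # no baseline yet
    baseline = mean(history)
    if baseline == 0:
        return today != 0
    # Relative change versus the trailing average, in either direction.
    return abs(today - baseline) / abs(baseline) > max_change


last_7_days = [1_020_000, 980_000, 1_010_000, 995_000, 1_005_000, 990_000, 1_000_000]
print(fluctuation_alert(last_7_days, 2_000))      # True: the drop described above
print(fluctuation_alert(last_7_days, 1_050_000))  # False: a 5% change is within range
```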

The data lineage feature of DataWorks Standard Edition can help you trace the source of such problems. In Data Map, you can view the ancestor and descendant tables of each table, as well as the ancestor and descendant fields of each field; that is, lineage is traced down to the field level. Data Map has other uses. For example, all users added to the current project in the same region under an Alibaba Cloud account can search for tables in Data Map, and the details page of each table shows the output generated for that table on the same day. Data Map is an essential tool for working with tables: you can use it to view information such as field descriptions and daily partition output.

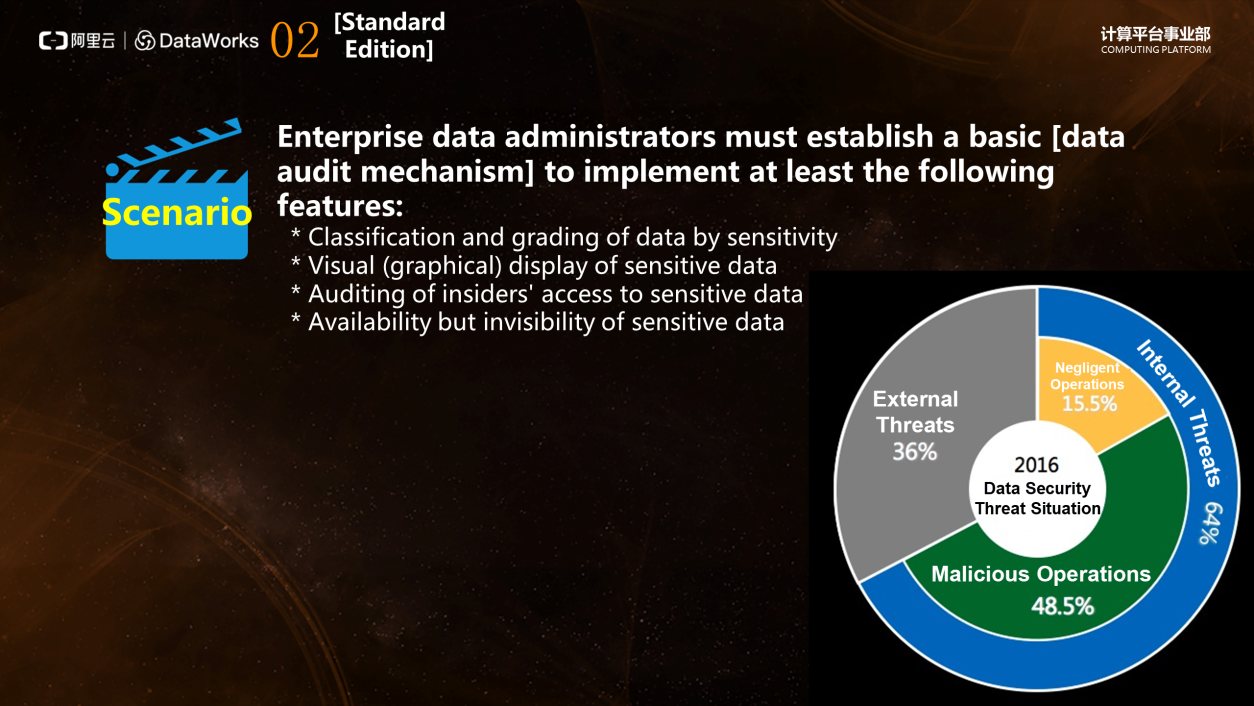
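Tracing the source of dirty data amounts to walking the lineage graph upstream from the affected table. The following breadth-first sketch uses a hypothetical table-level lineage map; Data Map also tracks field-level lineage, which this sketch does not model.

```python
from collections import deque
from typing import Dict, List, Set


def upstream_of(lineage: Dict[str, List[str]], target: str) -> List[str]:
    """Return all ancestor tables of `target`, nearest first (breadth-first order)."""
    seen: Set[str] = {target}
    order: List[str] = []
    queue = deque([target])
    while queue:
        current = queue.popleft()
        for parent in lineage.get(current, []):
            if parent not in seen:  # guard against shared ancestors being visited twice
                seen.add(parent)
                order.append(parent)
                queue.append(parent)
    return order


# Hypothetical lineage: table -> tables it is computed from.
lineage = {
    "ads_user_consumption": ["dws_orders_daily"],
    "dws_orders_daily": ["dwd_orders", "dim_users"],
    "dwd_orders": ["ods_orders"],
    "dim_users": ["ods_users"],
}
print(upstream_of(lineage, "ads_user_consumption"))
```

Checking candidates nearest first means the table closest to the anomaly is inspected before its more distant sources.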

Scenario 6 Data audit

How can we ensure that data is not lost after it has been produced on time and accurately? To ensure data completeness, enterprise managers usually push for a data auditing system. The Data Security Guard module of DataWorks helps you grade and classify data by sensitivity, visualize the distribution of sensitive data, and audit internal staff's access to sensitive data. It also makes sensitive data usable but invisible (that is, data masking). DataWorks Standard Edition provides these four features to help you implement the most basic data auditing capabilities.

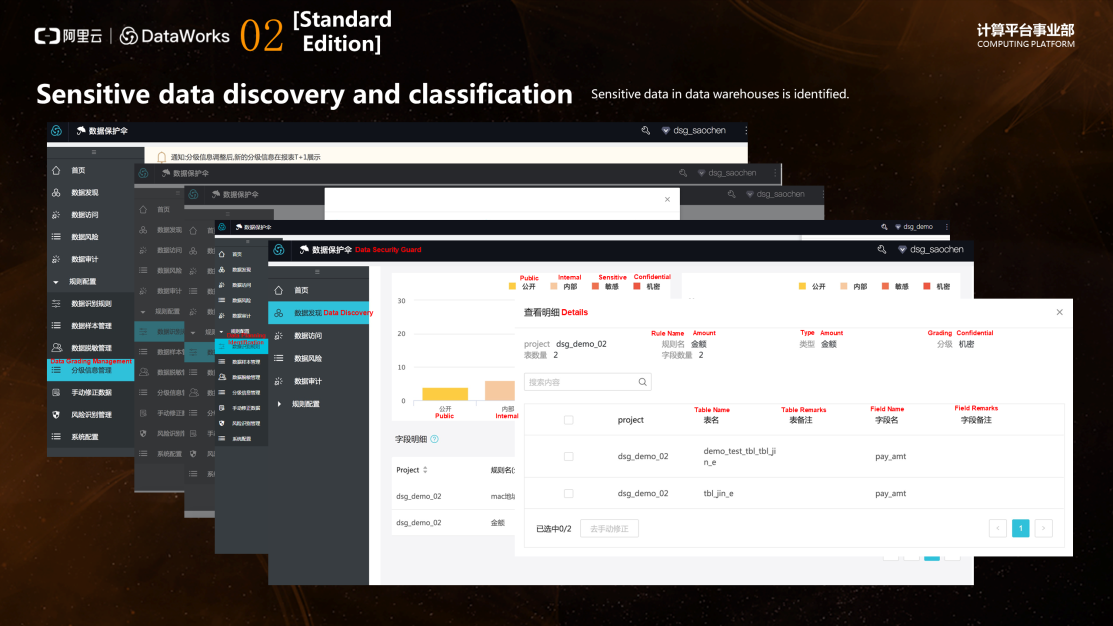

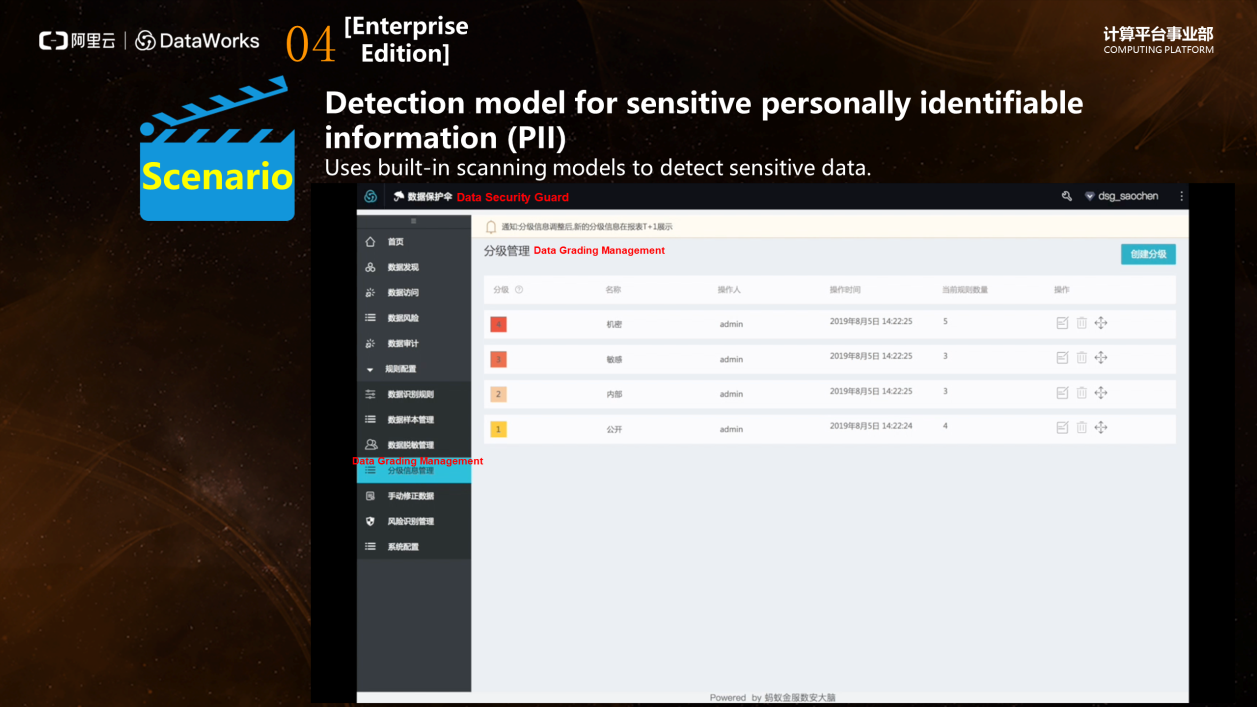

First, DataWorks Standard Edition allows you to define and scan fields and to classify them by sensitivity level. As shown in the following figure, you can create several levels on the page, such as public, internal, sensitive, and confidential. Then, set the data types. For example, you can define payment fields found during the scan as the monetary (amount) type. A new rule takes effect on the next day: the scan starts at 00:00, and after it finishes, the matching fields in the project are displayed.

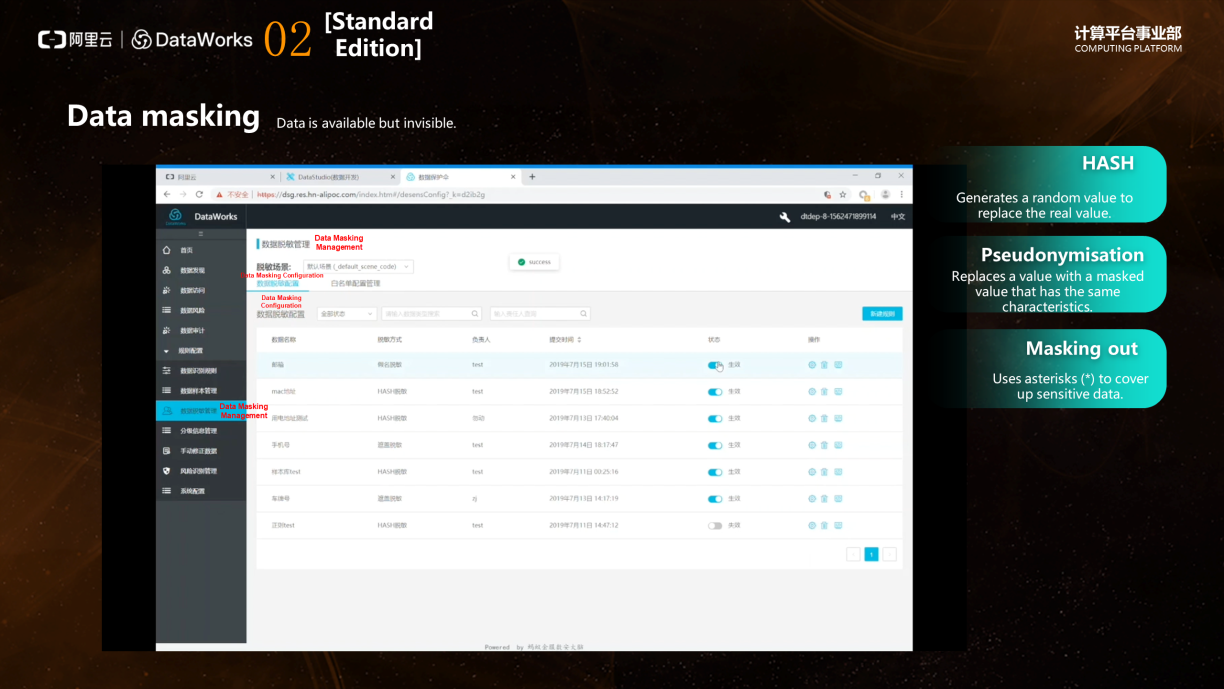
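Rule-based classification by field name can be sketched as follows. The patterns, data types, and level assignments are hypothetical examples that use levels named above (public, internal, sensitive, confidential); they are not the rules DataWorks ships with.

```python
import re
from typing import Dict, List, Optional, Tuple

# Hypothetical rules: (field-name pattern, data type, sensitivity level).
# Fields matching no rule are left unclassified.
RULES: List[Tuple[str, str, str]] = [
    (r"(pay|amount|price)", "amount", "sensitive"),
    (r"(phone|mobile)", "phone", "confidential"),
    (r"(id_card|idcard)", "id_card", "confidential"),
]


def classify(field_name: str) -> Optional[Tuple[str, str]]:
    """Return (data type, level) for the first matching rule, or None if nothing matches."""
    for pattern, data_type, level in RULES:
        if re.search(pattern, field_name, re.IGNORECASE):
            return data_type, level
    return None


def scan(fields: List[str]) -> Dict[str, Tuple[str, str]]:
    """Scan a table's field names and report only the fields that matched a rule."""
    return {name: hit for name in fields if (hit := classify(name)) is not None}


print(scan(["user_id", "pay_amount", "mobile_no", "city"]))
```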

The audit feature for access to sensitive data records access behavior and helps administrators identify users who access sensitive fields. When necessary, administrators can follow up with the relevant personnel, which establishes an internal communication mechanism. Currently, three data masking methods are available: HASH, pseudonymisation, and masking out.
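The three masking methods can be illustrated with small Python functions. These are common textbook forms, not the DataWorks implementations: the salted SHA-256 hash, the prefix and suffix lengths kept by `mask_out`, and the `user_0001`-style tokens produced by `Pseudonymizer` are all assumptions.

```python
import hashlib
from typing import Dict


def hash_mask(value: str, salt: str = "") -> str:
    """HASH: replace the value with a salted SHA-256 digest (irreversible but consistent)."""
    return hashlib.sha256((salt + value).encode("utf-8")).hexdigest()


def mask_out(value: str, keep_prefix: int = 3, keep_suffix: int = 4, mask_char: str = "*") -> str:
    """Masking out: hide the middle characters, keeping a short prefix and suffix."""
    if len(value) <= keep_prefix + keep_suffix:
        return mask_char * len(value)  # too short to reveal anything safely
    hidden = len(value) - keep_prefix - keep_suffix
    return value[:keep_prefix] + mask_char * hidden + value[len(value) - keep_suffix:]


class Pseudonymizer:
    """Pseudonymisation: map each distinct real value to a stable stand-in token."""

    def __init__(self, prefix: str = "user_") -> None:
        self.prefix = prefix
        self._mapping: Dict[str, str] = {}

    def __call__(self, value: str) -> str:
        if value not in self._mapping:
            self._mapping[value] = f"{self.prefix}{len(self._mapping) + 1:04d}"
        return self._mapping[value]


pseudo = Pseudonymizer()
print(hash_mask("13812345678", salt="s1"))
print(mask_out("13812345678"))
print(pseudo("alice"), pseudo("bob"), pseudo("alice"))
```

Hashing and pseudonymisation both keep equal inputs equal, so masked columns can still be joined or counted; masking out keeps the value partly readable for human checks.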

Currently, DataWorks Standard Edition provides most data development features and basic data governance capabilities. If your company's data volume is growing rapidly and you have some data governance requirements, DataWorks Standard Edition is a good choice for the initial stage.

DataWorks Professional Edition extends the data service APIs with more flexible and highly available service capabilities. In terms of data governance, it also enhances security.

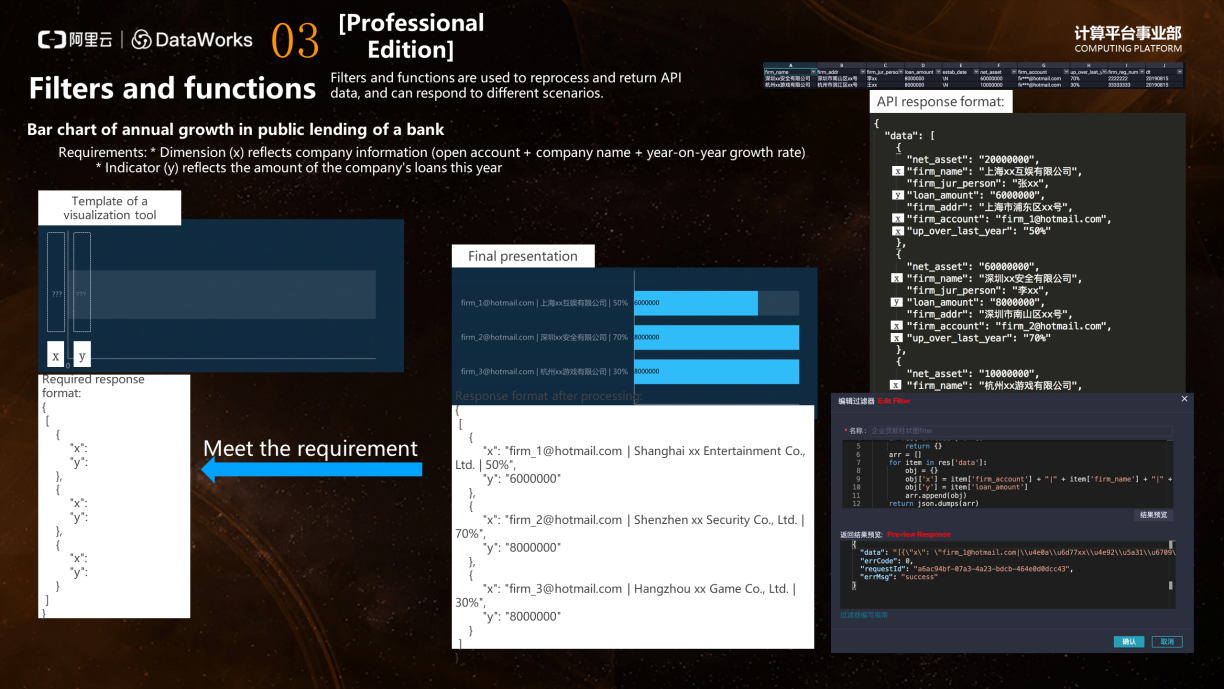

Scenario 1 Connection of APIs to report systems

Assume that an enterprise has report systems with different data structures and needs to display data visually. For example, a bank needs bar charts of year-on-year lending growth, and its visualization system includes a bar chart component. If the component accepts data sets in JSON format, the visualization system can easily be connected to data service APIs. You can use filters and functions in DataService Studio to further process the data returned by APIs and flexibly meet various data structure requirements.

In this example, the enterprise's loan information table is stored in MaxCompute. The traditional method is to create an API that takes the date field (the rightmost field) as a request parameter and returns the other fields. Each returned data entry contains seven fields, whereas the chart expects x to be company information and y to be the loan amount. As shown in the following figure, the firm_name, firm_account, and up_over_last_year fields must be combined into x, and the loan_amount field must be placed in y. An API built in the traditional way returns only the raw fields, and building a separate API for every output format is not cost-effective. Instead, you can use filters and functions in DataService Studio to define a Python 3 function that processes the data and the response format, concatenating the three fields into the data structure required by the visualization template.

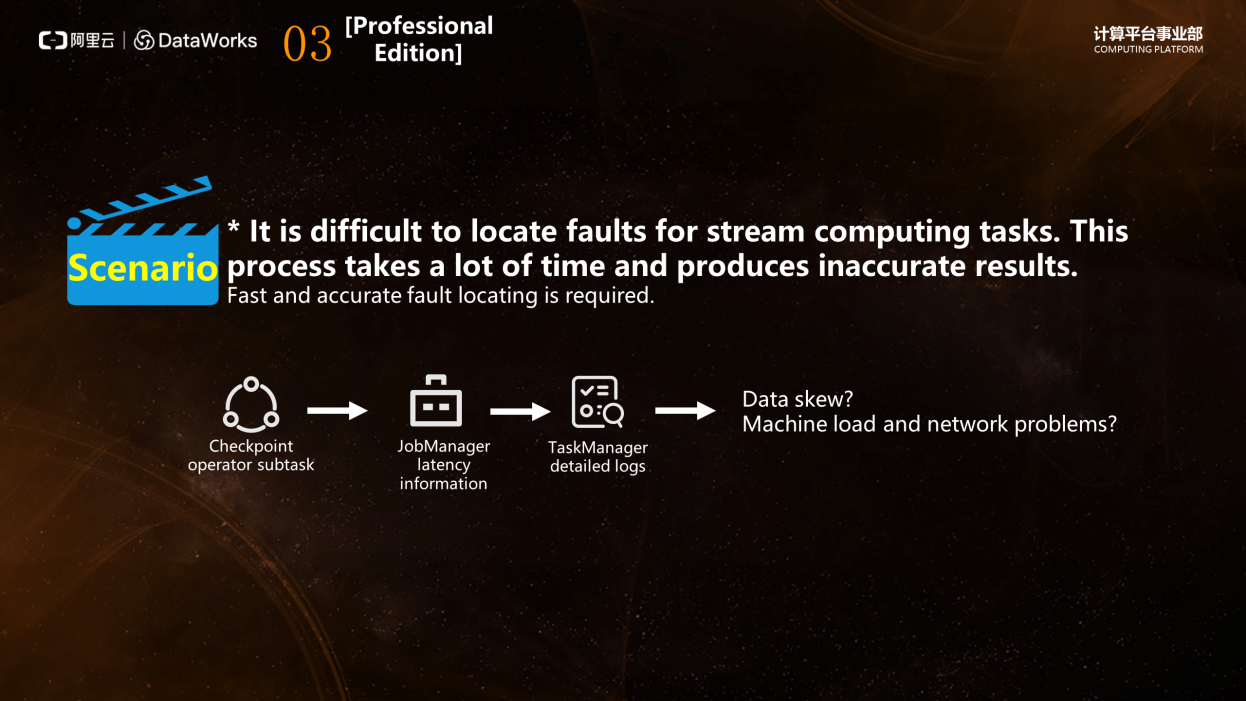
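The reshaping step can be sketched as a plain Python 3 function that turns API rows into the x/y pairs the chart expects. The field names come from the example above; the function name, the `" / "` separator, and the sample row are hypothetical, and the entry-point signature that DataService Studio requires for Python functions is not shown here.

```python
import json
from typing import Any, Dict, List


def to_bar_chart(rows: List[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Reshape API rows into [{"x": label, "y": value}] items for a bar-chart component."""
    points = []
    for row in rows:
        # Concatenate the three descriptive fields into the x-axis label.
        label = f"{row['firm_name']} / {row['firm_account']} / {row['up_over_last_year']}"
        points.append({"x": label, "y": row["loan_amount"]})
    return points


rows = [
    {"firm_name": "Example Co.", "firm_account": "6222-0001", "up_over_last_year": "12%",
     "loan_amount": 5_000_000, "date": "2020-06-30"},
]
print(json.dumps(to_bar_chart(rows)))
```

Fields the chart does not need, such as `date`, are simply dropped, so one underlying API can serve several report formats through different functions.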

Scenario 2 Real-time troubleshooting

In traditional Flink scenarios, O&M is difficult, especially troubleshooting. For example, to troubleshoot a timeout, you must check whether an operator is faulty during checkpointing. If no operator is faulty, you check the latency information of the JobManager, and then check whether the TaskManager's machine is overloaded, whether a network problem occurred, or whether there is data skew. The accuracy of troubleshooting depends on the engineer's experience and understanding of Flink's architecture.

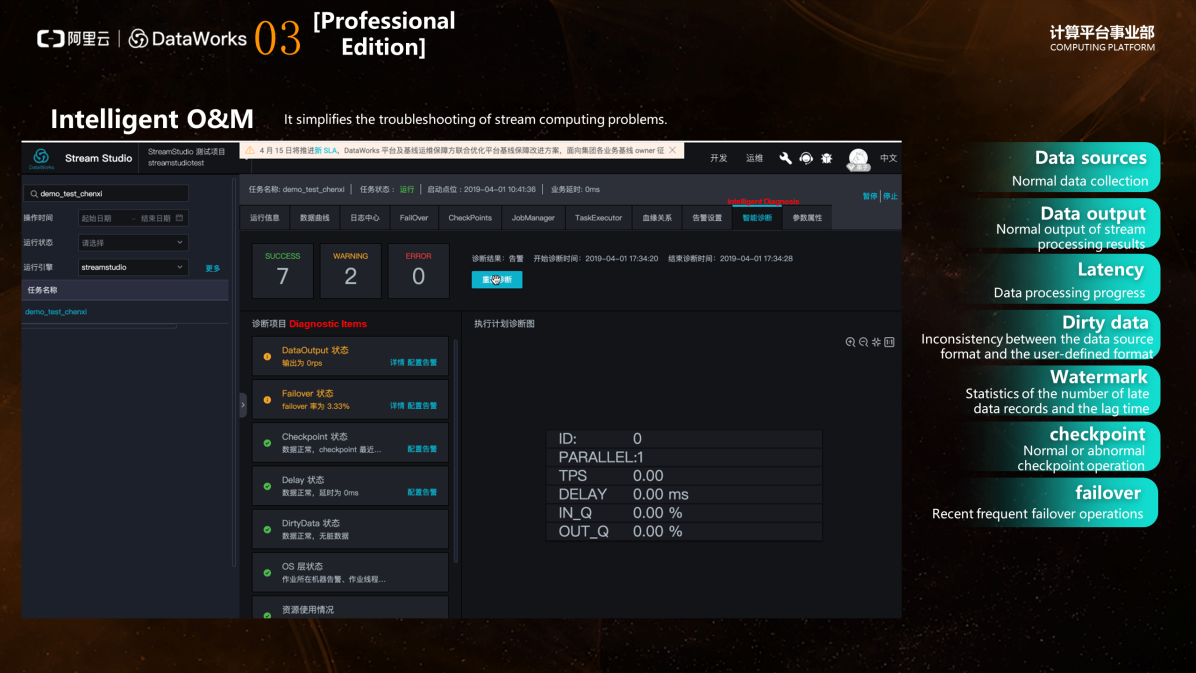

As shown in the following figure, the intelligent O&M feature of DataWorks provides visual inspections of data sources, data output, latency, dirty data, watermarks, checkpoints, and failovers. It notifies you of every error it discovers to help you locate problems quickly.

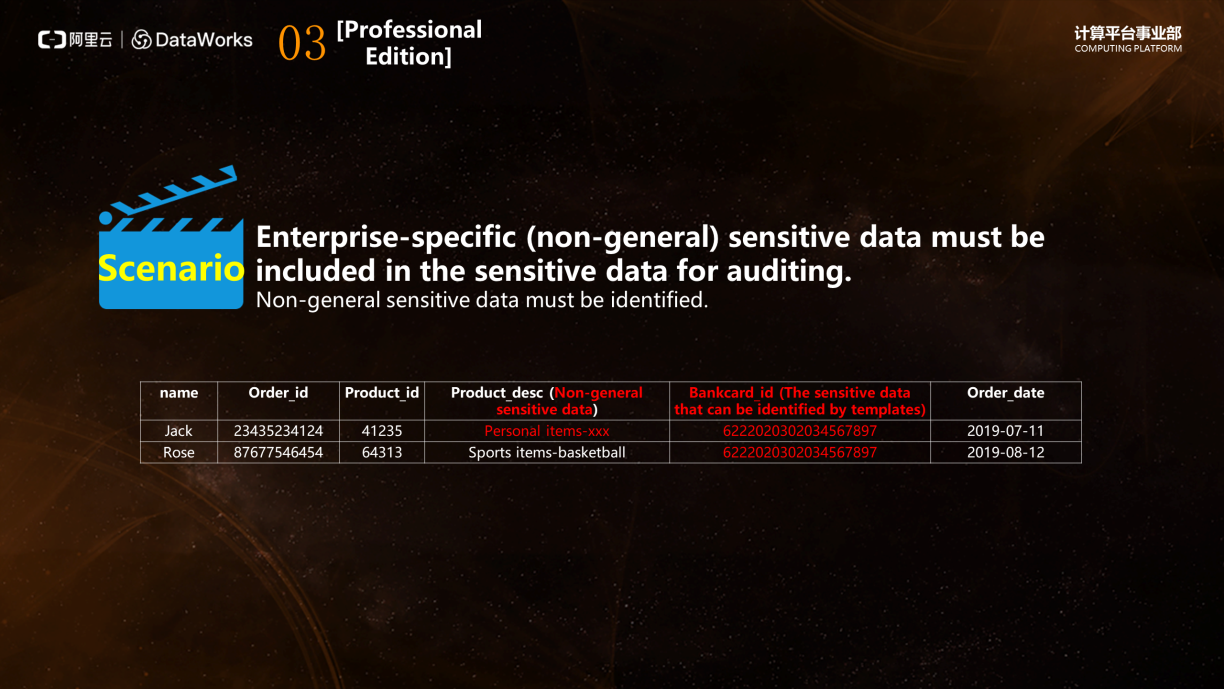

Scenario 3 Audit of non-standard sensitive data

DataWorks Professional Edition includes important improvements in data security. Sensitive data that is specific to an enterprise and uncommon elsewhere must also be included in auditing. This requires a way to customize scans so that data matching a particular type of sensitive data can be identified. As shown in the following figure, Bankcard id is a bank card number field; DataWorks Standard Edition can identify it and list it as a sensitive field. However, the Product description field indicates the products that users have purchased: some values are sensitive and others are not, so the entire field should not be listed as sensitive. DataWorks Professional Edition supports regular expression matching through custom content scans. Select Customize and click Content Scan to match values against the regular expression rules you define. This lets you identify sensitive data in specific scenarios.

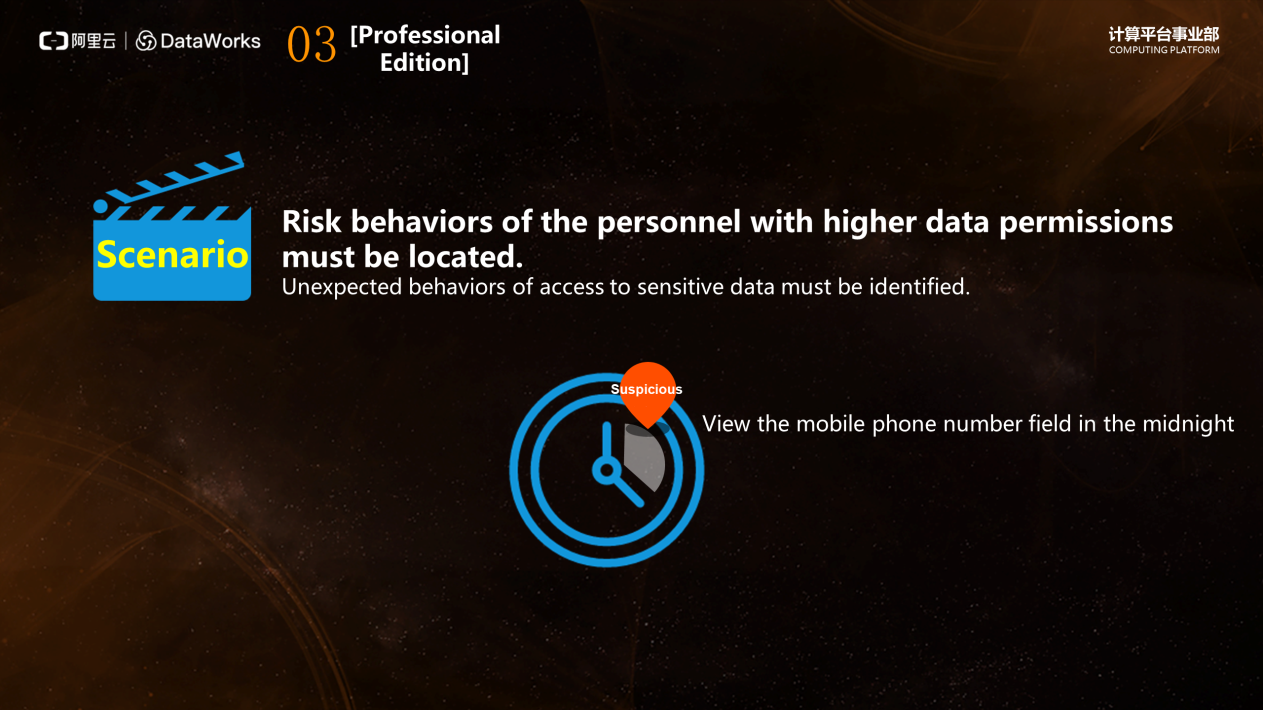
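A custom content scan checks values rather than field names, so only the matching rows are flagged. In this sketch the rule is a hypothetical pattern for an 11-digit mobile number embedded in free-text product descriptions; substitute whatever regular expression fits your own sensitive data.

```python
import re
from typing import Iterable, List, Tuple

# Hypothetical custom rule: an 11-digit mobile number (1 followed by 3-9 and nine digits),
# not embedded inside a longer run of digits.
CUSTOM_RULE = re.compile(r"(?<!\d)1[3-9]\d{9}(?!\d)")


def content_scan(values: Iterable[str], pattern: re.Pattern = CUSTOM_RULE) -> List[Tuple[int, str]]:
    """Return (row index, matched text) for each value that matches the rule."""
    hits = []
    for i, value in enumerate(values):
        match = pattern.search(value)
        if match:
            hits.append((i, match.group()))
    return hits


descriptions = ["Wireless mouse", "Gift card for 13812345678", "Order 123456789012"]
print(content_scan(descriptions))
```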

Scenario 4 Audit of user access to sensitive data

Beyond auditing access to sensitive data in general, administrators must review the risky behavior of users who have permissions on a specific table, for example, to check whether sensitive data is used at unexpected times. DataWorks Professional Edition helps administrators identify risky access behavior and shows the SQL statement, access time, and sensitive data accessed on the page. If an access is unexpected, the administrator should conduct an internal review.
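One simple heuristic for "sensitive data used at unexpected times" is to flag access outside working hours. The sketch below assumes a hypothetical `AccessLog` record and a 09:00 to 19:00 working window; the article does not describe the actual risk rules DataWorks applies.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List


@dataclass
class AccessLog:
    user: str
    sql: str
    accessed_at: datetime
    sensitive_fields: List[str]


def risky_accesses(logs: List[AccessLog], work_start: int = 9, work_end: int = 19) -> List[AccessLog]:
    """Flag accesses to sensitive fields that happen outside [work_start, work_end) hours."""
    return [
        log for log in logs
        if log.sensitive_fields and not (work_start <= log.accessed_at.hour < work_end)
    ]


logs = [
    AccessLog("alice", "SELECT phone FROM users", datetime(2024, 3, 1, 2, 15), ["phone"]),
    AccessLog("bob", "SELECT phone FROM users", datetime(2024, 3, 1, 10, 0), ["phone"]),
    AccessLog("carol", "SELECT city FROM users", datetime(2024, 3, 1, 23, 0), []),
]
for log in risky_accesses(logs):
    print(log.user, log.accessed_at, log.sql)
```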

DataWorks Enterprise Edition adds the API orchestration feature to DataService Studio. A data mid-end solves the problems of storing, connecting, and using data: data is stored according to unified specifications, data across systems is connected, planned, and processed centrally, and the resulting data is used by business teams. These teams have diverse needs. If the data mid-end responds to them quickly, the data system gains more trust and strategic importance within the enterprise. If it cannot meet their needs in time or falls short of their expectations, the data system becomes increasingly marginalized. How can we meet the complex and diverse needs of the business side? The DataService Studio module of DataWorks Enterprise Edition provides service orchestration that fits a variety of complex scenarios.

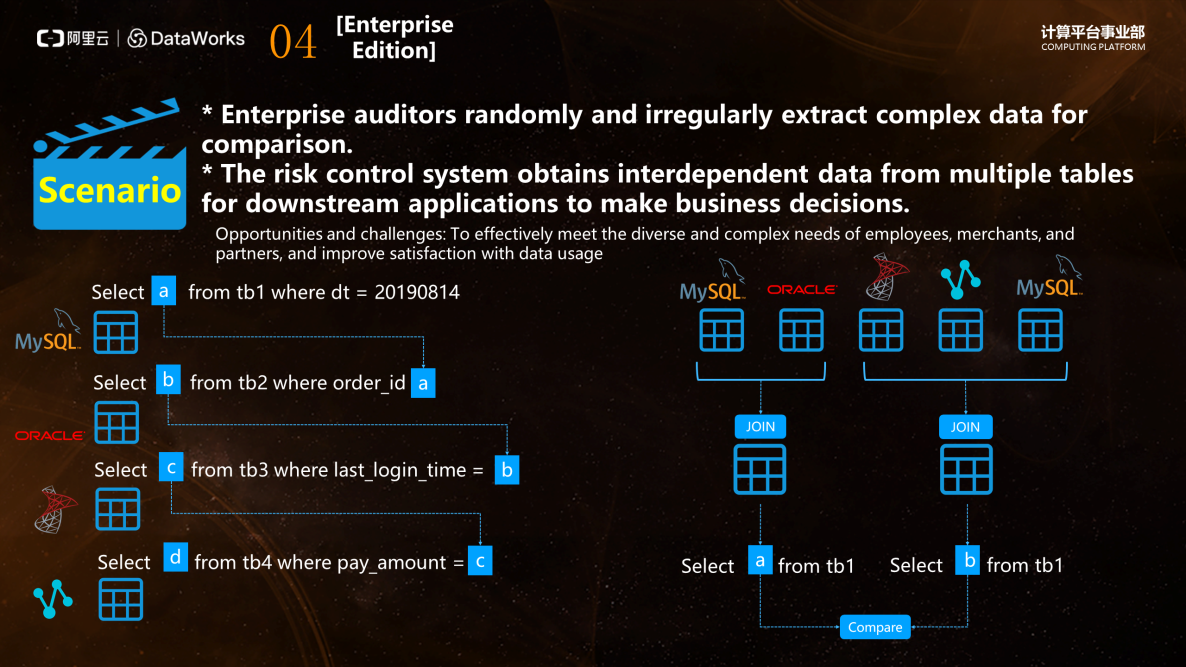

Scenario 1 Temporary requirements

Some temporary requirements are complex. For example, auditors randomly and irregularly select data sources for comparison, or the risk control system needs to obtain interdependent data from multiple tables and obtain the final downstream data for decision-making.

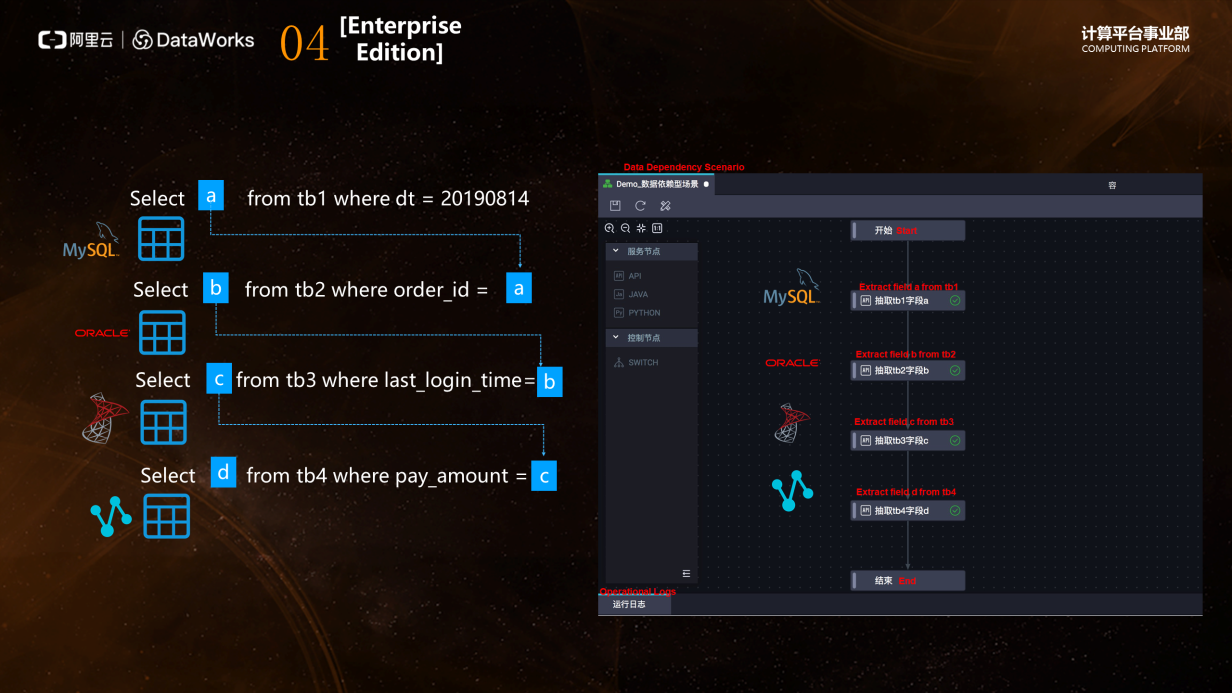

As shown in the following figure, auditors may need to join several tables to compare them, or obtain interdependent data from multiple tables to find the final downstream data and make a decision. The result of the SELECT statement on each table is used as the condition in the WHERE clause of the query on the next table. In traditional data development, a common method is to pull the data into a data warehouse, join the tables, produce results, and finally compare them. However, this consumes a lot of time, labor, and computing and storage resources, and the resulting tasks are expensive to maintain.

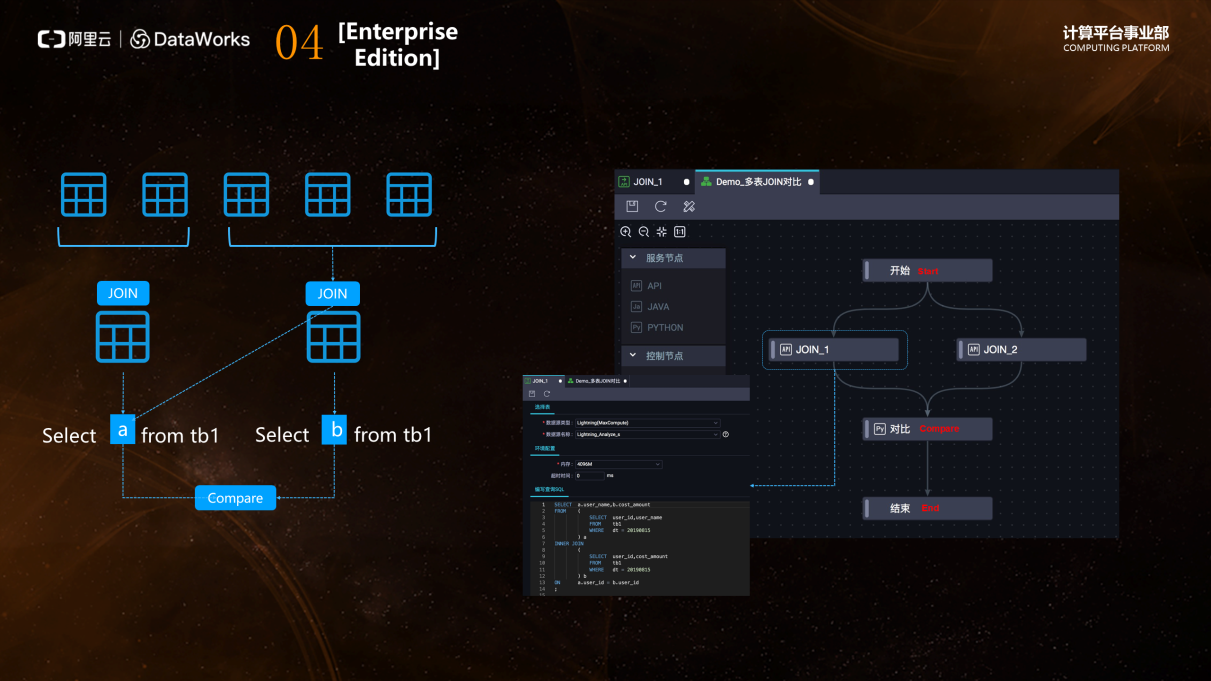

The service orchestration feature of DataService Studio in DataWorks Enterprise Edition allows you to combine multiple APIs into composite services with complex logic. You pass in a single parameter, and each downstream API produces its output based on the output of the API upstream of it. As shown in the following figure, you can create an orchestration workflow and drag four APIs into it. Each API retrieves a field from a table and passes the result to the next API as a request parameter. After an API receives its request parameters and performs its computation, it sends the results downstream as response parameters, which become the request parameters of the next API. Through this series of steps, the entire logic is implemented without exporting all the data to the data warehouse for centralized processing. DataService Studio supports a variety of data sources, including MySQL, Oracle, and other engines.
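The chaining described above can be sketched as a list of functions in which each response becomes the next request. The four APIs and the in-memory dictionaries standing in for tables are hypothetical; in DataService Studio each step would be a configured API rather than a Python function.

```python
from typing import Any, Callable, Dict, List

Payload = Dict[str, Any]
Api = Callable[[Payload], Payload]


def orchestrate(apis: List[Api], request: Payload) -> Payload:
    """Call the APIs in order; each API's response becomes the next API's request."""
    payload = request
    for api in apis:
        payload = api(payload)
    return payload


# Hypothetical in-memory tables standing in for the data sources behind each API.
USERS = {"u1": {"account_id": "a9"}}
ACCOUNTS = {"a9": {"risk_group": "g2"}}
GROUPS = {"g2": {"credit_limit": 5000}}


def get_account(req: Payload) -> Payload:
    return {"account_id": USERS[req["user_id"]]["account_id"]}


def get_risk_group(req: Payload) -> Payload:
    return {"risk_group": ACCOUNTS[req["account_id"]]["risk_group"]}


def get_credit_limit(req: Payload) -> Payload:
    return {"credit_limit": GROUPS[req["risk_group"]]["credit_limit"]}


def decide(req: Payload) -> Payload:
    # Hypothetical decision rule applied to the last lookup's result.
    return {"approved": req["credit_limit"] >= 3000}


print(orchestrate([get_account, get_risk_group, get_credit_limit, decide], {"user_id": "u1"}))
```

Only the single `user_id` parameter is supplied by the caller; every later lookup is driven by the previous step's output, mirroring the SELECT-feeds-WHERE pattern described earlier.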

You can also create a workflow like the one shown in the following figure in DataService Studio. The join API, shown on the left of the figure, joins two tables and returns two fields. You then use Python to compare the results returned by the two APIs and output the differences. This meets the need of risk control auditors to extract data from multiple tables at irregular intervals and compare it.
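The comparison step can be sketched as a keyed diff of two result sets. The `compare_results` helper, its `key` and `value` parameters, and the sample rows are hypothetical.

```python
from typing import Any, Dict, Iterable, List

Row = Dict[str, Any]


def compare_results(left: Iterable[Row], right: Iterable[Row], key: str, value: str) -> Dict[str, List[Any]]:
    """Diff two API result sets on `key`: keys found on one side only, and keys whose `value` differs."""
    left_map = {row[key]: row[value] for row in left}
    right_map = {row[key]: row[value] for row in right}
    common = left_map.keys() & right_map.keys()
    return {
        "only_left": sorted(left_map.keys() - right_map.keys()),
        "only_right": sorted(right_map.keys() - left_map.keys()),
        "mismatched": sorted(k for k in common if left_map[k] != right_map[k]),
    }


api_a = [{"id": 1, "amt": 10}, {"id": 2, "amt": 20}, {"id": 3, "amt": 30}]
api_b = [{"id": 2, "amt": 20}, {"id": 3, "amt": 31}, {"id": 4, "amt": 40}]
print(compare_results(api_a, api_b, key="id", value="amt"))
```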

Scenario 2 Upgrade of security capabilities

DataWorks Enterprise Edition upgrades the security capabilities of data governance and provides a sensitive data identification model for personally identifiable information (PII) scenarios. You only need to set a field type, and DataWorks Enterprise Edition identifies the matching sensitive fields. It includes preset algorithms for sensitive fields such as email addresses, landline numbers, and IP addresses. DataWorks Enterprise Edition can also export security knowledge: with this feature enabled, you can query highly similar SQL statements that are run multiple times on the same day. It also supports customized needs: in the content scan module, you can create your own sample library, define sensitive data, and maintain the library.
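A rule-based stand-in for preset PII detectors might label a field when most of its sampled values look like an email address, landline number, or IP address. DataWorks uses its own identification model; the regular expressions (the landline pattern assumes mainland-China formats), the 80% threshold, and `identify_field` are assumptions for illustration.

```python
import ipaddress
import re
from typing import Callable, Dict, Iterable, Optional

EMAIL = re.compile(r"^[\w.+-]+@[\w-]+(\.[\w-]+)+$")
LANDLINE = re.compile(r"^0\d{2,3}-?\d{7,8}$")  # hypothetical: area code plus 7-8 digits


def _is_ip(value: str) -> bool:
    # ipaddress accepts both IPv4 and IPv6 strings and raises ValueError otherwise.
    try:
        ipaddress.ip_address(value)
        return True
    except ValueError:
        return False


DETECTORS: Dict[str, Callable[[str], bool]] = {
    "email": lambda v: bool(EMAIL.match(v)),
    "landline": lambda v: bool(LANDLINE.match(v)),
    "ip_address": _is_ip,
}


def identify_field(samples: Iterable[str], min_ratio: float = 0.8) -> Optional[str]:
    """Label a field with the first detector matching at least min_ratio of non-empty samples."""
    values = [s.strip() for s in samples if s and s.strip()]
    if not values:
        return None
    for name, detect in DETECTORS.items():
        hits = sum(1 for v in values if detect(v))
        if hits / len(values) >= min_ratio:
            return name
    return None


print(identify_field(["10.0.0.1", "192.168.1.20", "::1"]))
print(identify_field(["010-12345678", "0571-8765432"]))
```

Deciding on a ratio of sampled values, rather than on a single value, keeps one stray entry from misclassifying or hiding a whole column.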

Scenario 3 Customized development

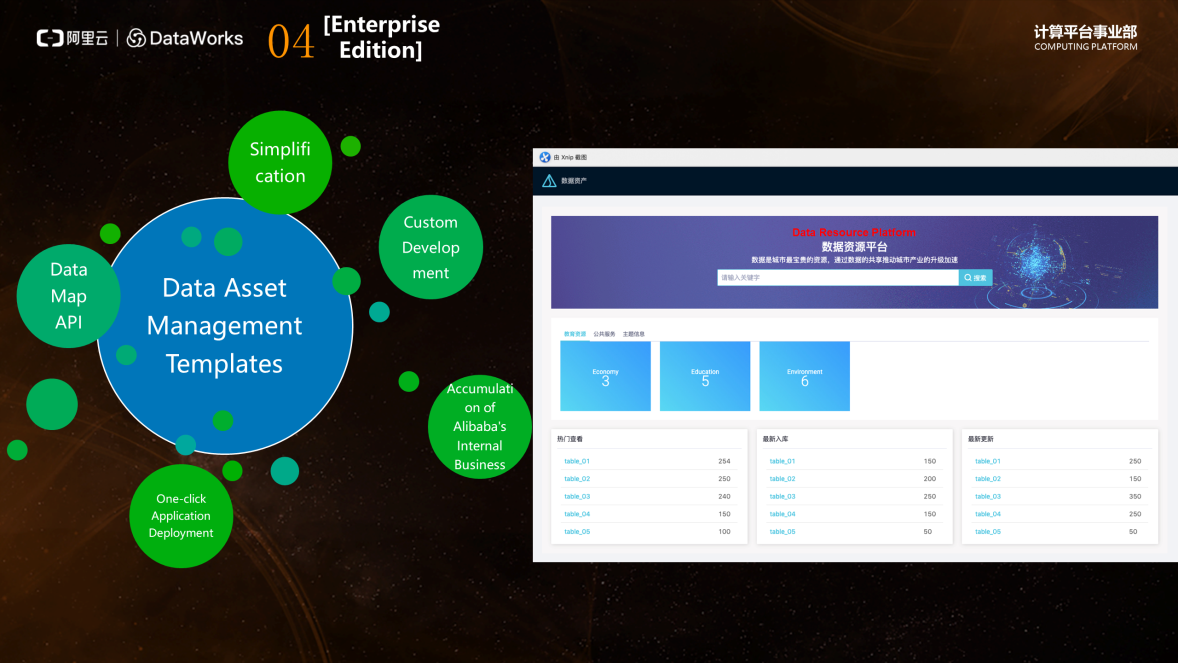

Some users require a customized DataWorks UI for their enterprises. DataWorks Enterprise Edition allows you to use advanced APIs in App Studio for custom development. Currently, it provides MaxCompute data table APIs, which you can call from the App Studio module to create the data asset page shown in the following figure.

To learn more about Alibaba Cloud DataWorks, visit the official product page.
