The Alibaba Cloud 2021 Double 11 Cloud Services Sale is live now! For a limited time only you can turbocharge your cloud journey with core Alibaba Cloud products available from just $1, while you can win up to $1,111 in cash plus $1,111 in Alibaba Cloud credits in the Number Guessing Contest.

Surpassing Best-Fit: How Does Alibaba Cloud Optimize Online Scheduling Policies on Sigma to Save Costs by Hundreds of Millions of RMB

Alibaba's 2018 Double Eleven Shopping Festival saw a new transaction amount record of RMB 213.5 billion. Compared with the performance a decade ago, the total transaction amount for this year's festival increased by more than 360 times and the peak transaction amount increased by more than 1,200 times. The explosive growth of these numbers also mean that the complexity and difficulty in supporting the transactions on Double Eleven has exponentially soared.

As the container scheduling system leveraged across the Alibaba Group, Sigma provides solid support for all containers during Double Eleven and covers more than 20 services such as transaction line middleware, databases, and advertising. It is a critical underlying infrastructure for Alibaba Cloud's O&M system. Because Sigma is playing a core role in online service control of all IDCs across the Alibaba Group and involves millions of host resources, its algorithm efficiency is critical to the overall business stability and resource utilization of the Group.

In this article, Wang Mengchang, Expert of Machine Intelligence Technology Algorithms of Alibaba DAMO Academy, and Han Tang, Technical Expert of Alibaba Group, will be sharing about the technologies behind Sigma and describing how to apply SwarmRL to scheduling policy optimization.

When you apply for computing resources (such as CPUs, memory, and disk resources) that are required for containers on Sigma, the scheduler selects the physical machines with required specifications to deploy these containers. Typically, many physical machines may meet the requirements. The distribution level (ratio of remaining resources to occupied resources) of each physical machine is different. Different distribution methods of these physical machines may have different distribution ratio. Therefore, the core task of the scheduler is to select optimal physical machines from a number of alternatives based on a specific policy.

In IT literature, computing resource scheduling is generally described as a vector bin packing issue. If the number of containers for each application is known (such as in a promotion scenario), the scheduler generates an optimized scheduling solution for all containers at a time. In this case, the issue can be described as an integer programming and solved by using a general solver or a special algorithm.

When an application sends a request to Sigma (such as in the daily scenario), the scheduler must instantly (online) make a deployment decision upon the arrival of the request. In this case, the issue can be described as the Markov Decision Process (MDP) and generally solved based on the best policy obtained from value iteration or policy iteration.

The most common scheduling policies are First-Fit (FF) and Best-Fit (BF). If you use the First-Fit algorithm, the scheduler deploys a container to the first qualified physical machine retrieved during the traversal. If you use the Best-Fit algorithm, the scheduler deploys a container to the physical machine with the highest distribution level among the qualified physical machines. For the typical bin packing issue (namely the one-dimensional vector packing issue), the approximation ratios of First-Fit and Best-Fit are both 1.7. This means that both algorithms can guarantee a lower number of used physical machines than 170% of that in the best solution. For two- and higher-dimensional vector packing issues, it has been proven that there is no polynomial approximation algorithm with a fixed competitive ratio.

When one resource dimension of a physical machine becomes a bottleneck and results in resource surplus in other dimensions, the effective dimension of the physical machine is considered to be one. Although both algorithms generally can ensure a good distribution ratio, the bottleneck that involves more than one dimension can compromise their effectiveness.

In addition to the requirements on resource dimensions, disaster tolerance and interference isolation also must be considered during resource scheduling. For example, the containers of the same application cannot be all deployed on the same physical machine, and for many applications, only one instance is allowed on one physical machine. Some applications are mutually exclusive due to resource contention and other reasons, which seriously affects the performance of the applications and thus they cannot be deployed on the same physical machine. These restrictions make the common policies become increasingly inapplicable. Through repeated manual trial and error, the common policies had barely withstand the site deployment pressure during promotion events for some times. However, as businesses expand, online containers increasingly scale up and compete for resources. Manual parameter tuning becomes unsatisfied in efficiency.

To mitigate the workload of parameter tuning personnel and support greater pressure with limited resources, the decision-making intelligence algorithm team of Machine Intelligence Technologies Laboratory (MIT Lab) and the Sigma scheduling team collaborated closely on researching online scheduling policy issues and developing the SwarmRL-based algorithm.

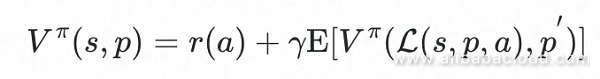

Assume that the specification of the container to be deployed is vector p° P, the cluster status during resource allocation is vector s° S, and the set of alternative physical machines is A?A, the policy can be expressed as the following function: ¶–:S°¡P°˙A(¶–° ¶∞). When the scheduler selects the physical machine (a=¶–(s,p)) to deploy the container based on policy ¶–, the immediate cost is r(a), and the new cluster status (s°‰) is determined jointly by state quantities s and p and action a, namely, s°‰=L(s,p,a). Also, assume that the subsequent container specification is p°‰, which is a random quantity for online scheduling. After you introduce the discount coefficient (¶√° [0,1]), the Bellman equation is as follows:

In this case, the best scheduling policy can be expressed as follows:

In theory, as the stochastic gradient descends, we can seek for a better policy in policy space °«. However, to further optimize the policy and even obtain the global best policy, other methods are required, especially when the best policy can be multi-modal.

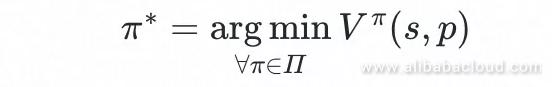

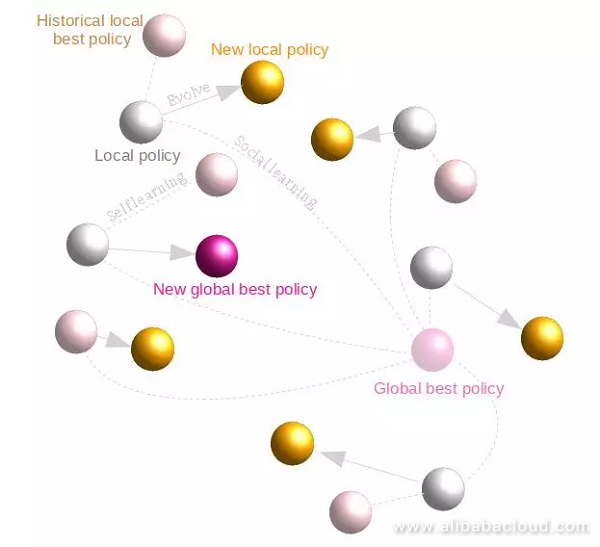

To prevent the policy optimization from falling into a poor local best solution and achieve a higher convergence rate, algorithms are designed in the SwarmRL framework. Compared with traditional reinforcement learning methods, SwarmRL uses multiple agents to explore the policy space and establishes a mutual learning mechanism among these agents, preventing algorithms from falling into local best solutions. An accurate Sigma simulator is essential for calculating the estimated status values (V¶–). Thanks to the team, Cerebro, a "full-fidelity" simulator based on the Sigma scheduler, is available for this purpose.

During the calculation, the algorithm initializes the policies of a random swarm of agents, retrieves the estimated status value for each policy through the simulator, and records the current global best policy. In subsequent iterations, the agents constantly update their local best policies. Based on these local best policies and the current global best policy, the algorithm updates and simulates the agents' current policies to retrieve the estimated status values for new policies and update the global best policy. Then, the agents repeat the preceding process until the convergence conditions are met.

During the status value estimation of each agent, the samples (multiple randomly sampled cluster snapshots and expansion request sequences) and the current policy of each agent are input into Cerebro to track the cluster status trajectory during simulation. In this way, you can obtain the total cost for the trajectory. By averaging the gross cost for all trajectories based on multiple samples, you can obtain the estimated status value for the corresponding policy.

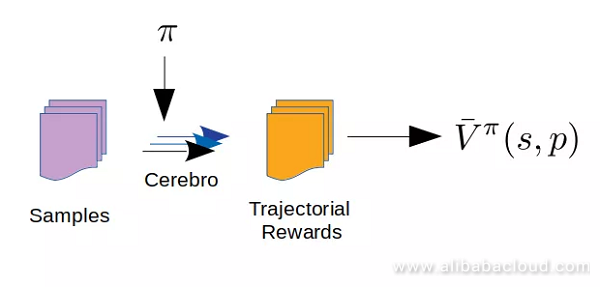

In SwarmRL, the policy evolution direction and step are expressed in the "speed" (v). Any change to the speed is associated with the difference between the local best policy (¶–L) or global best policy (¶–G) and the agent's current policy (¶–). In addition, the speed is controlled by some other parameters, such as the policy inertia factor w, local learning factor C1 (self-learning), and swarm learning factor C2 (social-learning):

where, ¶Œ1 and ¶Œ2 (° [0,1]) are random quantities and ¶µ is a feasible preserving mapping for pulling the agent out of the feasible region back to the region. During the iteration, the local best policy (¶–L) and the global best policy (¶–G) are constantly updated:

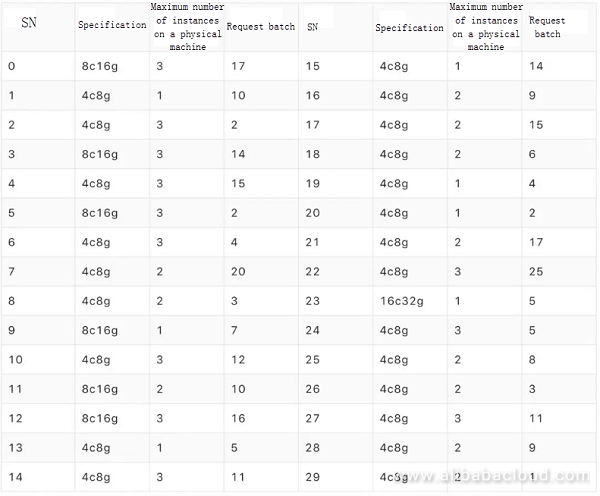

The following compares the results of both algorithms through a randomly generated example. The example includes 30 applications (see the following table for details). The container specifications are mainly 4c8g and 8c16g and the host specifications are all 96c512g.

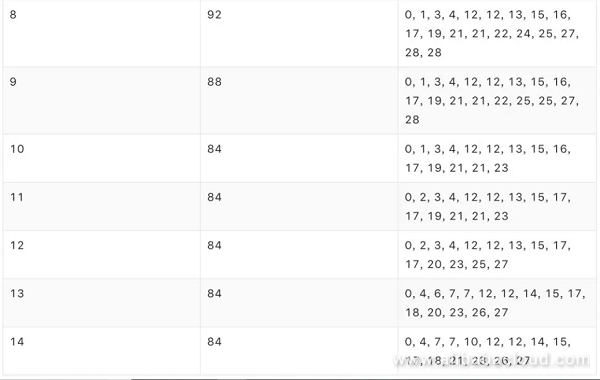

If the sequence and quantity of requests are known (from the God's Perspective) during scheduling, namely, post-scheduling is performed, the distribution ratio in the best solution obtained through integer programming is 94.44% (which is also the upper limit based on all scheduling policies in the example) and total 15 hosts are used. In this case, the scheduling solution is as follows:

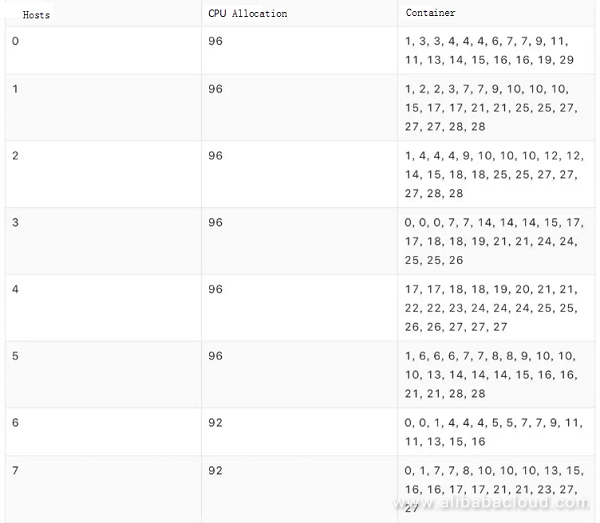

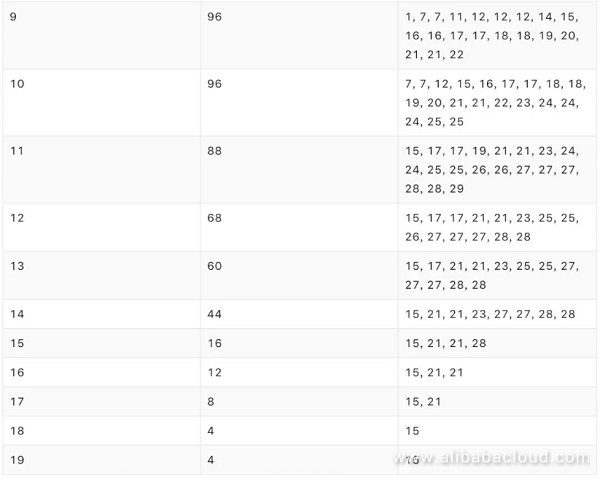

In an actual scenario, the sequence and container quantity of each request are known only when the request reaches Sigma. If Best-Fit is used for dynamic scheduling, the distribution ratio is 70.83% and total 20 hosts are used. In this case, the scheduling solution is as follows:

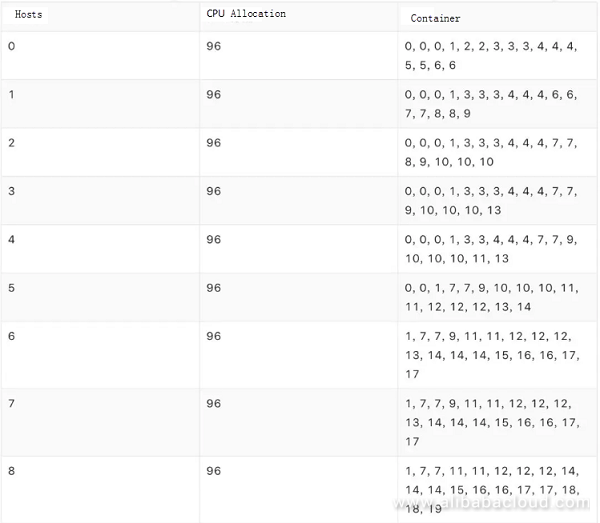

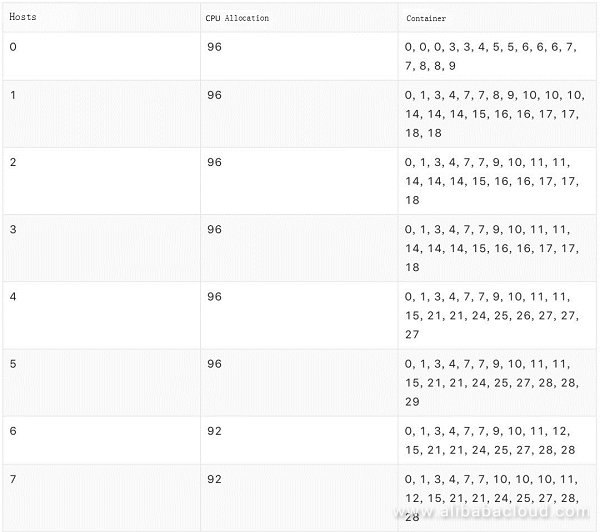

If the policy learned by SwarmRL is used for dynamic scheduling, the distribution ratio is 94.44% and total 15 hosts are enabled. In this case, the final container scheduling solution is as follows:

In this example, the performance of SwarmRL (94.44%) and the best scheduling solution based on the "God's Perspective" (upper limit) are the same and much better than the performance of Best-Fit (70.83%). The performance improves by 23.61%.

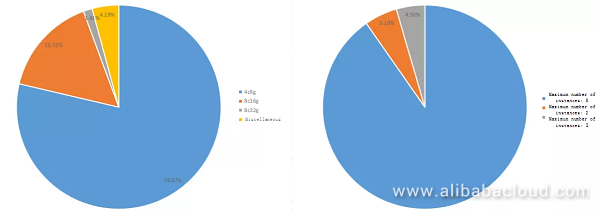

Next, randomly generate a large scale of request data including 3,000 requests and 5,000 containers. In this case, the specification distribution is as follows:

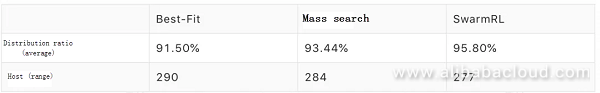

Because the integer programming model has a large variable size in this scenario, it is impossible to obtain the best scheduling solution based on the "God's Perspective" within short time. Compared with Best-Fit and manual scheduling, SwarmRL can achieve the following result by using the newly learned policy:

Compared with Best-Fit, the new policy saves 13 hosts (4.48%) and improves the distribution ratio by 4.30%. Compared with manual scheduling, the new policy saves 7 hosts (2.46%) and improves the distribution ratio by 2.36%.

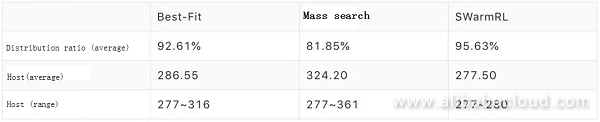

Considering the randomness of application request arrival sequence in the actual scenario, we randomly disturb the requests to generate multiple request sequences and then apply the three policies to dynamic scheduling in different request sequences:

Compared with manual scheduling (host range: 84), Best-Fit provides a relatively stable performance (host range: 39) with a lower fluctuation range of about 50%. The average distribution ratio obtained by manual scheduling is as low as 81.85%, which is less stable than the 93.44% distribution ratio obtained in the original sequence. Comparatively, the new policy learned by SwarmRL shows a very stable performance (host range: 3) with a much lower fluctuation range that is about one thirtieth of that for manual scheduling. On average, the distribution ratio of the new policy is 13.78% higher than that of manual scheduling and 3.02% higher than that of Best-Fit.

From the perspective of distribution ratio improvement and resource saving, SwarmRL can generate better policies with stable performance than common scheduling and manual scheduling policies. After the algorithm is deployed to the online environment, the peak distribution ratio of public resource pools is significantly improved.

With the development of CPU sharing and mixed deployment, in addition to the distribution ratio, the new scenarios also involve many other targets such as spreading and load balancing. Although these targets can contradict with each other, the operating mechanism of SwarmRL is suitable for policy optimization with multiple targets and can easily build Pareto Front in the policy space. For this reason, we will continue to research online scheduling policy issues in new scenarios to fully exploit SwarmRL and further improve Sigma's scheduling capability.

Alibaba Cluster Data: Using 270 GB of Open Source Data to Understand Alibaba Data Centers

508 posts | 49 followers

FollowAlibaba Clouder - November 8, 2018

Alibaba Container Service - November 15, 2024

ApsaraDB - November 28, 2019

Alibaba Clouder - November 13, 2018

Alibaba Cloud Native - July 29, 2024

Alibaba Container Service - December 30, 2019

508 posts | 49 followers

Follow Black Friday Cloud Services Sale

Black Friday Cloud Services Sale

Get started on cloud with $1. Start your cloud innovation journey here and now.

Learn More ACK One

ACK One

Provides a control plane to allow users to manage Kubernetes clusters that run based on different infrastructure resources

Learn More Container Registry

Container Registry

A secure image hosting platform providing containerized image lifecycle management

Learn MoreMore Posts by Alibaba Cloud Native Community