As an architecture model, cloud-native architecture governs application architecture through several principles. These principles help technical leaders and architects select technologies more efficiently and accurately.

In software development, once the amount of code and the size of the development team grow beyond a certain level, the application needs to be refactored. Separating concerns through modularization and componentization reduces the complexity of the application, improves development efficiency, and lowers maintenance costs.

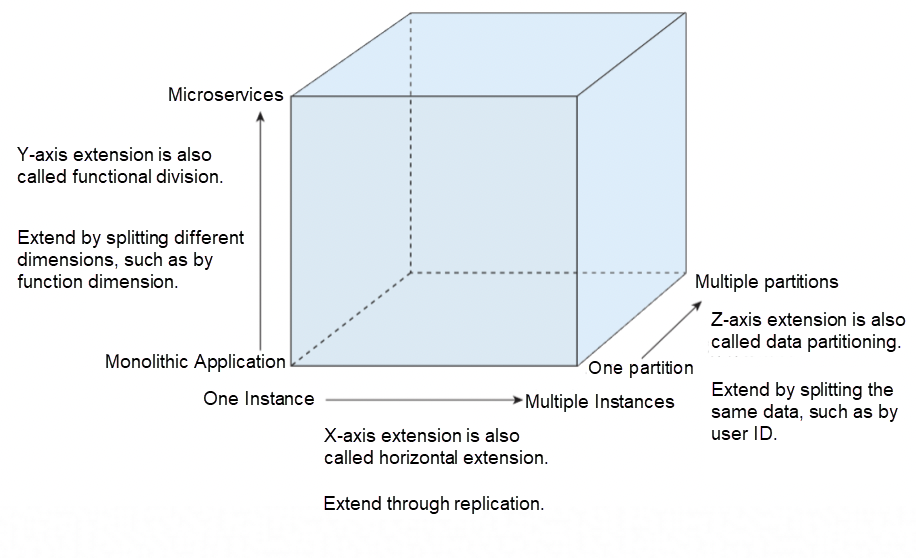

As Figure 1 shows, as the business continues to develop, the load that a monolithic application can bear gradually reaches its upper limit. Transforming the application can break through the scale-up bottleneck and give it the ability to scale out. However, under global concurrent access, the problems of data computing complexity and storage capacity remain. Therefore, the monolithic application must be further split into distributed applications along business boundaries. Each application then communicates with the others through agreed rules instead of sharing data directly, which improves scalability.

Figure 1: Application Service-Oriented Extension

The service-oriented design principle refers to splitting business units of different life cycles using service-oriented architecture. By doing so, independent iteration of the business units is realized, thereby accelerating the overall iteration speed and ensuring the stability of iteration. In addition, service-oriented architecture uses interface-oriented programming, which increases software reuse and enhances the capabilities of scaling out. The service-oriented design also emphasizes the abstraction of relationships between business modules at the architecture level. This will help business modules achieve strategy control and governance based on service traffic rather than network traffic. The programming language these services were developed with doesn't matter in this process.
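The interface-oriented programming mentioned above can be illustrated with a minimal Python sketch. The names `InventoryService`, `InMemoryInventory`, and `place_order` are hypothetical and chosen only for illustration. The point is that the caller depends on a contract, so the implementation behind it can be replaced or scaled out independently.

```python
from typing import Protocol


class InventoryService(Protocol):
    """Contract that callers depend on (hypothetical name for illustration)."""

    def reserve(self, sku: str, quantity: int) -> bool:
        ...


class InMemoryInventory:
    """One possible implementation; a remote client could take its place."""

    def __init__(self, stock: dict[str, int]) -> None:
        self._stock = dict(stock)

    def reserve(self, sku: str, quantity: int) -> bool:
        available = self._stock.get(sku, 0)
        if quantity <= 0 or quantity > available:
            return False
        self._stock[sku] = available - quantity
        return True


def place_order(inventory: InventoryService, sku: str, quantity: int) -> str:
    """Order logic knows only the interface, not the implementation."""
    return "confirmed" if inventory.reserve(sku, quantity) else "rejected"
```

Because `place_order` only relies on the `reserve` method, the in-memory class could be swapped for a client that calls a remote inventory service without changing the order logic.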

There have been many successful practices of the service-oriented design principle in the industry. Among them, Netflix's large-scale microservice practice on its production system is the most influential and widely recognized. With this practice, Netflix serves 167 million subscribers worldwide and accounts for more than 15% of global Internet bandwidth. In the open-source area, Netflix has contributed excellent microservice components, such as Eureka, Zuul, and Hystrix.

Service-oriented practices are not limited to overseas companies. Companies in China have also recognized their importance. With the Internet-based development of recent years, both emerging Internet companies and large traditional enterprises have built good practices and successful cases. Alibaba's service-oriented practice originated from the Colorful Stone Project in 2008. After ten years of development, it has stably supported major promotion activities year after year. Take the Double 11 Global Shopping Festival on November 11, 2019 as an example. Alibaba's distributed system reached a peak of 544,000 orders per second, and its real-time computing processed 2.55 billion messages per second. Alibaba has shared its achievements in service-oriented practices with the industry through open-source projects, such as Apache Dubbo, Nacos, Sentinel, Seata, and Chaos Blade. These components have been integrated with Spring Cloud to form Spring Cloud Alibaba, which has become the successor to Spring Cloud Netflix.

With the rise of cloud-native, the service-oriented principle continues to evolve and to be applied in real businesses. However, enterprises face many challenges during implementation. For example, compared with self-built data centers, service-oriented practice on the public cloud may run on a huge resource pool, which significantly increases the machine error rate. The pay-as-you-go mode increases the frequency of scale-in and scale-out. This new environment requires applications to start faster, to avoid strong dependencies on one another, and to be schedulable freely across nodes with different specifications. These problems are expected to be solved as cloud-native architecture evolves.

Elasticity refers to the capability to adjust the deployment scale of a system as the business volume changes. This eliminates the need to prepare fixed hardware or software resources based on capacity planning. Excellent elasticity changes the IT cost model of enterprises and enables enterprises to support the explosive expansion of business scale better. There is no need to consider additional hardware and software resource costs (idle costs). As such, enterprises' development will no longer be limited by hardware and software resources.

The cloud-native era has greatly lowered the threshold for enterprises to build IT systems. This improves the efficiency with which enterprises turn business planning into products and services, which is particularly prominent in the mobile Internet and gaming industries. It is common for the number of users to grow exponentially after an application becomes popular, and this exponential growth poses great challenges to the performance of enterprises' IT systems. In traditional architectures, development and O&M personnel usually bear these challenges and struggle to improve system performance. However, even if they do all they can, they may not be able to completely remove the system bottleneck. In the end, the system becomes unavailable because it cannot cope with the influx of massive numbers of users.

Besides the test of exponential business growth, peak traffic patterns are another significant challenge. For example, the traffic of a movie ticket booking system in the afternoon is far higher than in the early hours of the morning, and the traffic on weekends is several times higher than on weekdays. Takeaway ordering systems face peak traffic around lunch and supper time. In traditional architectures, enterprises need to prepare and pay for a large amount of computing, storage, and network resources to cope with such peaks, but these resources remain idle most of the time.

Therefore, enterprises should apply elasticity to their application architectures when building IT systems in the cloud-native era. By doing so, they can deal with different scenarios with a fast-growing business scale and leverage cloud-native technologies and cost advantages.

Elastic system architecture must adhere to the following four principles.

A large, complex system may consist of hundreds or thousands of services. When designing its architecture, architects should follow a few rules: put related logic together, and split unrelated logic into independent services. Services discover each other through standard service discovery and communicate through standard interfaces. Services must be loosely coupled so that each one can scale automatically and independently, without failures spreading between upstream and downstream services.
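As a rough illustration of service discovery, the following sketch keeps a registry in memory and hands out instances in round-robin order. `ServiceRegistry` and its methods are hypothetical names, not the API of any real discovery product. A production system would typically rely on a dedicated registry with health checks rather than a dictionary.

```python
import itertools


class ServiceRegistry:
    """In-memory stand-in for a discovery service (hypothetical API)."""

    def __init__(self) -> None:
        self._instances: dict[str, list[str]] = {}
        self._cursors: dict[str, itertools.count] = {}

    def register(self, service: str, address: str) -> None:
        """Record that `address` serves `service`."""
        self._instances.setdefault(service, []).append(address)
        self._cursors.setdefault(service, itertools.count())

    def resolve(self, service: str) -> str:
        """Return the next registered instance in round-robin order."""
        instances = self._instances.get(service)
        if not instances:
            raise LookupError(f"no instances registered for {service!r}")
        index = next(self._cursors[service]) % len(instances)
        return instances[index]
```

Callers ask for a service by name and never hard-code an address, which is what allows instances to be added or removed as the service scales.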

Splitting applications by function does not solve the problem of elasticity completely. When an application is split into multiple services, a single service eventually encounters system bottlenecks as user traffic grows. Therefore, each service must be designed to support horizontal splitting to split services into different logical units. Each unit processes a part of user traffic, which provides service with excellent scalability. The biggest challenge lies in the database system. The database system itself is stateful, so splitting data properly and providing a correct transaction mechanism is very complex. However, in the cloud-native era, the cloud-native database services provided by the cloud platform can solve most of the complex distributed system problems. Therefore, if enterprises build an elastic system with the capabilities of cloud platforms, their database system will have elasticity.
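One simple way to picture horizontal splitting is to route each user to a logical unit with a stable hash. The sketch below assumes that users are identified by a string ID and that the number of units is fixed. The function name `unit_for_user` is hypothetical.

```python
import hashlib


def unit_for_user(user_id: str, unit_count: int) -> int:
    """Map a user to a logical unit with a stable hash of the user ID."""
    if unit_count <= 0:
        raise ValueError("unit_count must be positive")
    # SHA-256 gives the same result on every machine and in every process,
    # unlike Python's built-in hash(), which is randomized for strings.
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % unit_count
```

Plain modulo routing moves most users to a different unit whenever `unit_count` changes, so systems that resize often may prefer consistent hashing or an explicit routing table.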

System burst traffic is usually unpredictable. A common solution is to scale out the system manually to support larger-scale user access. After the architecture is split, an elastic system also needs automatic deployment capability, so that established rules or external burst-traffic signals can trigger automatic scale-out in time and shorten the period during which burst traffic affects the system. It also scales the system in automatically after the burst to reduce resource costs.
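An "established rule" for automatic scaling could look like the proportional rule sketched below, where the replica count grows or shrinks with the ratio of observed load to target load. This is an illustrative assumption, not the rule of any specific platform, and the function name, parameters, and default limits are hypothetical.

```python
import math


def desired_replicas(current: int, observed_load: float, target_load: float,
                     min_replicas: int = 1, max_replicas: int = 100) -> int:
    """Scale the replica count in proportion to observed / target load."""
    if current <= 0 or target_load <= 0:
        raise ValueError("current and target_load must be positive")
    raw = math.ceil(current * observed_load / target_load)
    # Clamp so that a metric glitch cannot scale to zero or without bound.
    return max(min_replicas, min(max_replicas, raw))
```

For example, four replicas running at 90% utilization against a 60% target would be scaled out to six, and the same rule scales the service back in once the load drops.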

Exception response plans need to be designed for an elastic system in advance. For example, when carrying out hierarchical management of services, system architecture needs to have the capability of service degradation to deal with exceptions, such as the failure of the elastic mechanism, insufficient elastic resources, or unexpected peak traffic. Resources are released by lowering the quality of some non-critical services or disabling some enhanced functions. Then, the service capacity for important functions is scaled out to prevent impact on the main functions of the product.
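The degradation idea can be sketched as a small planning function: critical features always stay on, and non-critical features are kept only while capacity remains. The `Feature` type, the capacity units, and the cheapest-first ordering are assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Feature:
    name: str
    critical: bool
    cost: int  # capacity units consumed while the feature is enabled


def plan_degradation(features: list[Feature], capacity: int) -> list[str]:
    """Return the features to keep enabled under the given capacity."""
    enabled = []
    remaining = capacity
    # Critical features are always kept, even if they exhaust the capacity.
    for feature in features:
        if feature.critical:
            enabled.append(feature.name)
            remaining -= feature.cost
    # Non-critical features are added cheapest first while capacity lasts.
    optional = sorted((f for f in features if not f.critical), key=lambda f: f.cost)
    for feature in optional:
        if feature.cost <= remaining:
            enabled.append(feature.name)
            remaining -= feature.cost
    return enabled
```

Any feature missing from the returned list is a candidate for a degradation switch, which frees its capacity for the main functions of the product.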

There are many successful cases in building large-scale elastic systems worldwide, best represented by Alibaba's annual Double 11 Global Shopping Festival. Alibaba buys elastic resources from Alibaba Cloud and deploys its applications every year to handle peak traffic that is hundreds of times higher than normal times. After this peak traffic period, Alibaba releases these resources. Resources are charged in the pay-as-you-go billing mode to reduce resource costs during big promotion activities. Another example is the elastic architecture of Sina Weibo. When a major event happens, Sina Weibo uses an elastic system to scale out application containers on Alibaba Cloud to cope with the large number of search and forwarding requests resulting from major events. The system reduces the resource costs incurred from trending searches by scaling out on-demand in minutes.

As cloud-native technologies develop, technical ecosystems such as FaaS and Serverless are gradually maturing, which makes it less difficult to build large-scale elastic systems. When enterprises adopt technical concepts such as FaaS and Serverless as design principles of their system architectures, the system gains elasticity by design, and there is no need to pay additional costs for maintaining the elastic system itself.

Observability differs from the passive capabilities provided by monitoring, business detection, and Application Performance Management (APM) in that it emphasizes proactivity. In distributed systems such as cloud computing, logging, tracing, and metrics are adopted proactively so that the time consumption, return values, and parameters of the multiple service calls triggered by a single app click are clearly recorded. At a deeper level, even each third-party software call, SQL request, node topology, and network response can be observed. With such observability, O&M, development, and business personnel can obtain the running information of software in real time and gain strong associative analysis capability to optimize business health and user experience continuously.

With the all-around development of cloud computing, application architectures of enterprises have changed significantly and are gradually transitioning from monolithic applications to microservices. In microservice architecture, the design mode of loose coupling between services makes version iteration faster and iteration cycles shorter. Kubernetes in the infrastructure layer has become the default platform for containers. Services can be integrated and deployed through pipelines continuously. These changes can minimize the risk of service changes and improve the efficiency of R&D.

In microservice architecture, system faults may occur in any place. Therefore, we need to systematically design observability to reduce the mean time to repair (MTTR).

The following three principles must be adhered to while building the observability system.

Metrics, tracing, and logging are the three pillars of a complete observability system. The observability of a system requires the complete collection, analysis, and presentation of these three types of data.

(1) Metric

A metric is a KPI value measured over multiple consecutive time periods. Metrics are typically divided into several layers according to the software architecture: system resource metrics (such as CPU usage, disk usage, and network bandwidth), application metrics (such as error rate, SLA, APDEX, and average latency), and business metrics (such as the number of user sessions, order quantity, and turnover).

(2) Tracing

Tracing refers to recording and restoring the complete process of a distributed call by using a unique trace identifier (TraceId). Tracing covers the entire path, from processing data on the browser or mobile client to executing SQL or initiating a remote call.

(3) Logging

Logging is used to record information, such as application running processes, code debugging, and errors and exceptions. For example, NGINX logs can record remote IP, request time, and data size. Log data needs to be stored centrally and be retrievable.
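Of the application metrics listed above, APDEX is easy to compute from raw latencies. The sketch below uses the commonly cited Apdex definition: requests at or below a threshold T count as satisfied, requests between T and 4T count as tolerating with half weight, and slower requests count as frustrated. The function name and the sample values are illustrative.

```python
def apdex(latencies_s: list[float], threshold_s: float) -> float:
    """Compute an Apdex score in [0, 1] from request latencies in seconds."""
    if not latencies_s:
        raise ValueError("at least one latency sample is required")
    satisfied = sum(1 for x in latencies_s if x <= threshold_s)
    tolerating = sum(1 for x in latencies_s if threshold_s < x <= 4 * threshold_s)
    # Frustrated requests (slower than 4T) contribute nothing to the score.
    return (satisfied + tolerating / 2) / len(latencies_s)
```

With a threshold of 0.5 seconds, the samples 0.1, 0.3, 0.6, 1.5, and 3.0 contain two satisfied and two tolerating requests, which gives a score of 0.6.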

Creating associations between data is especially important for an observability system. When a fault occurs, effective associative analysis helps delimit and locate the fault quickly, which improves fault handling efficiency and reduces unnecessary losses. Generally, an application's server address, service interface, and other information are attached as additional attributes to metrics, call chains, and logs. In addition, the observability system should offer a degree of customization to flexibly meet the requirements of more complex O&M scenarios.
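A minimal sketch of such association, assuming that every log record and every span carries a shared `trace_id` field. The field names and the `correlate` function are hypothetical.

```python
from collections import defaultdict


def correlate(logs: list[dict], spans: list[dict]) -> dict[str, dict]:
    """Group log records and spans that share the same trace_id."""
    by_trace: dict[str, dict] = defaultdict(lambda: {"logs": [], "spans": []})
    for record in logs:
        by_trace[record["trace_id"]]["logs"].append(record)
    for span in spans:
        by_trace[span["trace_id"]]["spans"].append(span)
    return dict(by_trace)


logs = [{"trace_id": "t1", "level": "ERROR", "message": "timeout", "host": "10.0.0.5"}]
spans = [
    {"trace_id": "t1", "service": "orders", "duration_ms": 120},
    {"trace_id": "t1", "service": "payment", "duration_ms": 950},
    {"trace_id": "t2", "service": "orders", "duration_ms": 30},
]
view = correlate(logs, spans)
# For the failing trace, find the slowest hop next to the error log.
slowest = max(view["t1"]["spans"], key=lambda s: s["duration_ms"])
```

Starting from an error log, the shared identifier leads directly to the spans of the same request, so the slow or failing hop can be located without searching each data source separately.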

Multi-dimensional and multi-form monitoring views allow O&M and development personnel to identify system bottlenecks and eliminate potential risks quickly. Monitoring data can be displayed as metric trend charts, bar charts, and other forms. Given the complexity of real application scenarios, monitoring views also need drill-down analysis and customization capabilities to meet the needs of scenarios such as O&M monitoring, version release management, and troubleshooting.

With the development of cloud-native technologies, more complex scenarios will be based on heterogeneous microservice architectures. Observability is the foundation for all automation capabilities. We can only improve the stability of the system and reduce MTTR by realizing comprehensive observability. Therefore, enterprises need to think about how to build a full-stack observable system for system resources, containers, networks, applications, and services.

Resilience is the capability of software to withstand exceptions in the software and hardware components it depends on. These exceptions generally include software and hardware faults, hardware resource bottlenecks (such as exhaustion of CPU or NIC bandwidth), service traffic that exceeds the designed carrying capacity of the software, faults that affect the operation of IDCs, faults in dependent software, and other potential factors that may cause business interruption.

After the business is launched, there may be various uncertain inputs and unstable dependencies during most of the operation time. When these scenarios occur, the business needs to ensure the service quality as much as possible to meet the always online requirement represented by networking services nowadays. Therefore, the core design concept of resilience is failure-oriented design. In other words, we need to consider how to reduce the impact of exceptions on the system and service quality and restore them as soon as possible under various abnormal dependencies.

Practices and common architectures based on the resilience principle include service asynchronization, retry, throttling, degradation, circuit breaking, back pressure, primary/secondary mode, cluster mode, high availability of multiple availability zones (AZs), unitization, cross-region disaster recovery, and geo-redundancy for disaster recovery.
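Of the practices listed above, circuit breaking is compact enough to sketch. The class below is a simplified illustration, not the implementation used by any particular library. The names, the default threshold, and the timeout are assumptions, and the clock is injectable so that the behavior can be tested without waiting.

```python
import time
from typing import Callable, Optional


class CircuitOpenError(RuntimeError):
    """Raised when the breaker rejects a call without trying it."""


class CircuitBreaker:
    def __init__(self, failure_threshold: int = 3, reset_timeout_s: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout_s = reset_timeout_s
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None

    def call(self, func, *args, **kwargs):
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self.reset_timeout_s:
                raise CircuitOpenError("circuit is open; failing fast")
            # Timeout elapsed: half-open, so one trial call is let through.
        try:
            result = func(*args, **kwargs)
        except Exception:
            self._failures += 1
            # A failed trial call, or too many failures, (re)opens the circuit.
            if self._opened_at is not None or self._failures >= self.failure_threshold:
                self._opened_at = self._clock()
            raise
        # Any success closes the circuit and clears the failure count.
        self._failures = 0
        self._opened_at = None
        return result
```

While the circuit is open, callers fail fast instead of piling up on an unhealthy dependency, which gives that dependency time to recover.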

The following describes how to design resilience in a large system, using a specific case. The Double 11 Global Shopping Festival is extremely important for Alibaba, so its system design must strictly follow the principle of resilience. For example, traffic cleansing at the unified access layer implements security policies to prevent hacker attacks. Fine-grained throttling keeps peak traffic stable so that backend servers run properly. To improve global high availability, Alibaba implements cross-region disaster recovery based on the unitization mechanism and zone-disaster recovery across two IDCs, which significantly improves the service quality of its IDCs. Within the same IDC, microservices and container technology enable stateless business migration, and multi-copy deployment improves high availability. Messages provide asynchronous decoupling between microservices to reduce service dependencies and improve system throughput. Finally, the dependencies of each application are sorted out, degradation switches are set, and system robustness is continuously strengthened through fault drills. Together, these measures ensure the normal and stable operation of Alibaba's Double 11 Global Shopping Festival.

As digitalization accelerates, more and more digital services have become infrastructure for the operation of the whole economy and society. However, as the systems supporting these digital services become more complex, the risk of uncertain quality in dependent services grows. Therefore, systems must be designed with full resilience to cope better with various uncertainties. Resilience design is especially crucial for the core business procedures of core industries (such as financial payment procedures and e-commerce transaction procedures), business traffic portals, and procedures with complex dependencies.

Technology is a double-edged sword. On the one hand, the use of containers, microservices, DevOps, and a large number of third-party components reduces the complexity of distributed services and improves iteration speed. On the other hand, it increases the complexity of software technology stacks and the scale of components, which inevitably makes software delivery more complex. Without proper management, applications cannot enjoy the advantages of cloud-native technologies. Through the practices of IaC, GitOps, OAM, Operator, and a large number of automated delivery tools in CI/CD (Continuous Integration/Continuous Delivery) pipelines, enterprises can standardize their internal software delivery process and then automate on top of that standardization. In other words, software delivery and O&M are automated through self-describing configuration data and final state-oriented delivery.

The following four principles must be followed to implement large-scale automation.

Enterprises must first standardize the infrastructure for business operation through containers, IaC, and OAM and further standardize the application definition and delivery process to achieve automation. Standardization allows enterprises to relieve business dependence on specific people and platforms and automate the unified and large-scale operation of businesses.

Final state orientation refers to a declarative description of the desired configurations of the infrastructure and applications and a constant focus on the actual running status of the applications. It makes the system change and adjust repeatedly until it approaches the final state. The principle of final state orientation emphasizes letting the system make decisions on how to implement the changes by setting up the final state. The system should avoid changing applications by assembling a series of procedural commands through the ticket system and workflow system.
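Final state orientation can be pictured as a reconcile loop: the caller declares the desired replica counts, and the system works out and applies the differences itself. The sketch below is a simplified model with hypothetical function names, in which every action succeeds immediately. A real controller would observe the actual state again after each round because actions can fail or lag.

```python
def plan_actions(desired: dict[str, int], actual: dict[str, int]) -> list[tuple[str, str, int]]:
    """Diff desired and actual replica counts into (action, app, count) steps."""
    actions = []
    for app in sorted(desired.keys() | actual.keys()):
        want = desired.get(app, 0)
        have = actual.get(app, 0)
        if want > have:
            actions.append(("start", app, want - have))
        elif want < have:
            actions.append(("stop", app, have - want))
    return actions


def reconcile(desired: dict[str, int], actual: dict[str, int],
              max_rounds: int = 10) -> dict[str, int]:
    """Apply planned actions until the state matches the desired final state."""
    state = dict(actual)
    for _ in range(max_rounds):
        actions = plan_actions(desired, state)
        if not actions:
            break  # converged: nothing left to change
        for action, app, count in actions:
            delta = count if action == "start" else -count
            state[app] = state.get(app, 0) + delta
    return {app: n for app, n in state.items() if n > 0}
```

The caller never issues "start" or "stop" commands directly. It only edits `desired`, and the loop decides how to get there.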

The final result of automation depends on the capabilities of tools and systems and on the people who set goals for the system. Therefore, it is necessary to ensure that goals are set by the right people. When describing the final state of the system, the configurations that concern application developers, application O&M personnel, and infrastructure O&M personnel must be separated. Each role then sets only the configurations it is concerned with and good at, which keeps the final state of the system reasonable.

To automate all procedures, the automation process must be controllable and its impact on the system must be predictable. We cannot expect an automated system to be free of errors, but we can ensure that, even when exceptions occur, the scope of impact is controllable and acceptable. Therefore, when an automated system executes changes, it must follow the same best practices as manual changes: changes are rolled out in gray (canary) mode, execution results are observable, and changes can be rolled back and traced quickly.
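A gray rollout with automatic rollback can be sketched as a loop over traffic percentages. The stage list, the error-rate callback, and the 1% limit are assumptions for illustration. In practice the error rate would come from the observability system rather than from a function argument.

```python
from typing import Callable


def gray_rollout(stages: list[int], error_rate_at: Callable[[int], float],
                 max_error_rate: float = 0.01) -> tuple[str, list[int]]:
    """Advance through traffic percentages and roll back on excessive errors.

    Returns the outcome and the history of traffic percentages applied.
    """
    history = []
    for percent in stages:
        history.append(percent)
        if error_rate_at(percent) > max_error_rate:
            history.append(0)  # roll back: no traffic goes to the new version
            return "rolled_back", history
    return "completed", history
```

The returned history doubles as a simple audit trail, which supports the requirement that changes be traceable.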

Automatic fault recovery of business instances is a typical process automation scenario. After a business is migrated to the cloud, various technical means reduce the probability of server failure on the cloud platform, but software faults in the business itself cannot be eliminated. Software faults include crashes caused by software defects, Out of Memory (OOM) errors caused by insufficient resources, and service interruptions caused by excessive load. They also include problems in system software, such as the kernel and daemon processes, and interference from other applications or tasks in hybrid deployments. The risk of software failure grows with business scale. Traditional troubleshooting requires O&M personnel to intervene and perform repair operations. However, in large-scale businesses, O&M personnel are often overwhelmed by the number of faults, and service quality cannot be guaranteed.

To enable automatic fault recovery, cloud-native applications require developers to describe, through standard declarative configuration, how the application's health is checked and how the application is started. The service discovery registration and Configuration Management Database (CMDB) entries that must be set up after startup also need to be described. With these standard configurations, the cloud platform can probe applications repeatedly and perform repair operations automatically when faults occur. In addition, to guard against false positives in fault detection itself, O&M personnel can set the maximum proportion of unavailable instances based on capacity. This allows the cloud platform to ensure service availability while automating fault recovery. Automatic fault recovery frees developers and O&M personnel from tedious O&M operations and handles faults in a timely manner, ensuring business continuity and high service availability.
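The protection against false positives can be sketched as a budget on how many instances the platform may take out of service at once. The function name, the health map, and the ratio semantics below are assumptions made for this example.

```python
import math


def instances_to_restart(health: dict[str, bool], max_unavailable_ratio: float) -> list[str]:
    """Pick unhealthy instances to restart within the unavailability budget.

    `health` maps instance names to the latest health-check result.
    """
    if not 0 <= max_unavailable_ratio <= 1:
        raise ValueError("max_unavailable_ratio must be between 0 and 1")
    unhealthy = sorted(name for name, ok in health.items() if not ok)
    # Never act on more instances than the budget allows, so a faulty
    # health check cannot take the whole service down at once.
    budget = math.floor(len(health) * max_unavailable_ratio)
    return unhealthy[:budget]
```

Instances left over after the budget is spent are handled in a later round, once the restarted ones report healthy again.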

Traditional security architecture is based on the boundary model to erect a wall between trusted and untrusted resources. For example, a company's intranet is trusted, while the Internet is not. In this architecture design, once intruders penetrate the intranet, they can access the resources within the intranet. The application of cloud-native architecture, the popularity of remote working, and the use of mobile devices have completely broken the physical boundaries of traditional security architecture. When working at home, employees can also share data with their partners as the applications and data are hosted on the cloud.

Today, boundaries are no longer defined by the physical location of an organization but have expanded to all places where access to organization resources and services is required. Traditional firewalls and VPNs can no longer reliably and flexibly cope with challenges brought by this new boundary. Therefore, brand-new security architecture is needed to flexibly adapt to the characteristics of the cloud-native and mobile era. Data security can be protected with this new architecture no matter where employees work, where devices are accessed, and where applications are deployed. The implementation of this new security architecture requires a zero-trust model.

Traditional security architecture assumes that everything inside the firewall is secure, whereas the zero-trust model assumes that the firewall boundary has already been breached and that every request comes from an untrusted network. Therefore, every request must be verified. Simply put, this model adheres to the principle of "never trust, always verify." In the zero-trust model, each request must be authenticated, verified, and authorized based on a security policy. Core information related to the request, such as the user identity, device identity, and application identity, is used to determine whether the request is secure.

If we discuss security architecture from the perspective of boundary, then the boundary of traditional security architecture is a physical network. The boundary of zero-trust security architecture is identity, which includes the identities of the user, device, and application. You must adhere to three basic principles to implement the zero-trust security architecture:

Each access request must be authenticated and authorized. Authentication and authorization need to be based on information, such as user identity, location, device information, service and workload information, data grading, and exception detection. For example, access authorization for communications between enterprise internal applications cannot be directly performed simply by determining whether the source IP address is an internal IP address. Instead, authorization requires checking the identity, device, and other information of the source application in combination with current policy.

For each request, only the permissions that are required for the moment are granted. The permission policy must be adaptive based on the current request context. For example, employees in the HR department should have permission to access HR-related applications but should not have permission to access finance department applications.

Assume that the physical boundary has been breached. The impact scope must then be strictly controlled by segmenting the network into multiple parts that are aware of users, devices, and applications. All sessions are encrypted, and data analysis is used to ensure visibility into the security status.
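The first two principles above can be sketched as a per-request authorization check that looks at identity and device state and deliberately ignores the source IP address. The `Request` type and the `POLICY` table are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    user: str
    department: str
    device_trusted: bool
    source_ip: str
    resource: str


# Hypothetical policy: which departments may reach which applications.
POLICY = {"hr-portal": {"hr"}, "ledger": {"finance"}}


def authorize(request: Request) -> bool:
    """Decide from identity and device state; source_ip is deliberately ignored."""
    if not request.device_trusted:
        return False
    # Unknown resources fall back to an empty set, so the default is deny.
    allowed = POLICY.get(request.resource, set())
    return request.department in allowed
```

An HR employee on a trusted device is allowed to reach `hr-portal` from any network, while a request to `ledger` from an internal address is still rejected unless the identity and device checks pass.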

The evolution from traditional security architecture to zero-trust architecture has had a profound impact on software architecture. This is reflected in the following three aspects.

Security policies cannot be configured based on IP addresses. In cloud-native architecture, an IP address is not necessarily bound to a service or application. This happens because the IP address can change at any time with the application of technologies, such as auto scaling. Therefore, IP addresses cannot be used to represent application identity, and security policies cannot be established based on this assumption.

Identity should become infrastructure. Authorizing communication between services and human access to services requires that the identity of the accessor be clearly known. In an enterprise, human identity management is usually part of the security infrastructure, but the identity of applications also needs to be managed.

The release pipeline should be standardized. In an enterprise, R&D work, including code version management, building, testing, and launch, is usually distributed, and these procedures are relatively independent. With this decentralized mode, the security of services running in the production environment cannot be effectively guaranteed. If code version management, building, and launch are standardized, the security of application releases improves accordingly.

In general, the construction of the zero-trust model includes identity, devices, applications, infrastructure, network, data, and other parts. The implementation of zero-trust is a step-by-step process. For example, when none of the traffic transmitted within the organization is encrypted, the first step should be to ensure that the traffic of the applications accessed by users is encrypted. Then, the encryption of all traffic can be implemented gradually. Cloud-native architecture allows enterprises to use the security infrastructure and services provided by the cloud platform to implement zero-trust architecture quickly.

Nowadays, technology and business are developing very fast. In engineering practice, few architecture models are clearly defined from the beginning and remain applicable throughout the entire software life cycle. Instead, they need to be constantly refactored within a certain scope to adapt to changing technical and business requirements. Similarly, cloud-native architecture must be capable of continuous evolution instead of being designed as a closed, unchanging architecture. Therefore, factors such as incremental iteration and proper target selection need to be considered in the design. At the organization level, architectural governance and risk control specifications (such as an architecture control committee), as well as the characteristics of the business itself, also need to be considered. We need to focus on how to keep the balance between architectural evolution and business development, especially when the business is iterating rapidly.

Evolutionary architecture refers to architecture that is designed for scalability and loose coupling from the early stages of development. This simplifies subsequent changes and reduces the cost of upgrades and refactoring. Evolution can take place at any stage of the software life cycle, in development practices, release practices, and overall agility.

Evolutionary architecture is important in industrial practice as changes are hard to predict in modern software engineering, and the cost of transformation is extremely high. Evolutionary architecture cannot avoid refactoring. However, it emphasizes the possibility of architectural evolution. When the entire architecture needs to evolve due to changes in technologies, organizations, or the external environment, the whole project can still follow the principle of strong boundary and context. This ensures that the logical partition described in domain-driven design becomes physical isolation. Evolutionary architecture achieves the physical modularization, reusability, and separation of duties of the entire system architecture through standardized and highly scalable infrastructure systems. In this process, it adopts a large number of advanced cloud-native application architecture practices, such as standardized application models and modular O&M capabilities. In evolutionary architecture, each system service is decoupled from other services at the structural level. Service replacement is as convenient as replacing Lego blocks.

In modern software engineering practice, evolutionary architecture has different practices and manifestations at different levels of the system.

In business-oriented application architecture, evolutionary architecture is closely related to microservice design. For example, in Alibaba's Internet e-commerce applications, such as Taobao and Tmall, the entire system architecture is finely divided into thousands of components with clear boundaries. The purpose is to make it easy for developers to make non-destructive changes and to avoid unpredictable side effects caused by improper coupling, which would hinder the evolution of the architecture. All software built on evolutionary architecture supports a certain degree of modularization, which is usually reflected in the best practices of classic layered architectures and microservices.

In terms of platform R&D, evolutionary architecture is mainly reflected in the Capability Oriented Architecture (COA). After the gradual popularization of cloud-native technologies, such as Kubernetes, the standardized cloud-native infrastructure is rapidly becoming a capability provider for platform architecture. Based on cloud-native, the Open Application Model (OAM) carries out modular COA practices on standardized infrastructure according to capabilities from the perspective of application architecture.

Currently, evolutionary architecture is still in the rapid growth and popularization stage. The software engineering industry has reached a consensus that the software world is constantly changing and is dynamic rather than static. Architecture is also not a simple equation but a snapshot of a continuous process. Therefore, evolutionary architecture is an inevitable trend in both business application and platform R&D layers. A large number of engineering practices that involve architecture updates show that the cost of managing application versions is huge if the implementation of the architecture is ignored. Good architecture planning can help reduce the cost of introducing new technologies into applications. This requires applications and platforms to meet the following requirements at the architecture layer: standardized architecture, separation of duties, and modularization. In the cloud-native era, OAM is rapidly becoming an important driving force for evolutionary architecture.

The construction and evolution of cloud-native architectures are based on the core features of cloud computing, such as elasticity, automation, and resilience, in combination with business goals and characteristics. This allows enterprises and technicians to fully utilize the benefits of cloud computing. With the continuous expansion of technologies and the continuous enrichment of scenarios, cloud-native architectures will continue to evolve along with the development of cloud-native. Throughout these changes, the typical architecture design principles remain of great significance and will guide us in architecture design and technical implementation.
