By Simian and Zhouyou

In OLAP business scenarios, data needs to be stored and processed efficiently. To improve data processing performance in ClickHouse, Materialized View is an effective approach. This article delves into the working principles and best practices of Materialized View (hereinafter referred to as MV) and addresses common issues that may arise during its usage.

Materialized View can be seen as a special type of trigger. When data is inserted, MV stores the result of SELECT query performed on the data into a target table.

It is designed to accelerate queries by preprocessing data during insertion.

CREATE MATERIALIZED VIEW mv TO dest

AS SELECT a, b, count() AS cnt

FROM source

GROUP BY a, bAs shown above, source is the source table of MV and dest is the target table of MV.

When using MV, the following three principles need to be kept in mind:

Here, the target table engines for MV are mainly SummingMergeTree or AggregatingMergeTree. MV stores the aggregation result of the data in the source table. The Insert performed on the source table triggers the updates to the MV aggregation data. The amount of data in MV may be significantly reduced compared to that in the source table. Querying MV can directly acquire the aggregation results without scanning a large amount of data in the source table each time.

For example, the following example uses SummingMergeTree to aggregate the hits value for each date/project.

-- Create the target table of SummingMergeTree.

CREATE TABLE wikistat_top_projects

(

`date` Date,

`project` LowCardinality(String),

`hits` UInt32

) ENGINE = SummingMergeTree

ORDER BY (date, project);

-- Create an MV.

CREATE MATERIALIZED VIEW wikistat_top_projects_mv TO wikistat_top_projects AS

SELECT

date(time) AS date,

project,

sum(hits) AS hits

FROM wikistat

GROUP BY date, project;The query efficiency of ClickHouse MergeTree is greatly affected by the design of the primary key and the order by key of the table. We can create a replication table through MV. The two tables have different primary keys and order by keys to meet different query requirements.

For example, there is a Null table where data from multiple data sources is inserted. The Null table has multiple MVs. They perform different data transformations and write the results to different destinations.

Kafka and RabbitMQ do not have data persistence capabilities. They can be used together with MV for data persistence.

The logic of MV can be summarized in just one simple sentence: when the data is inserted into the source table, MV replicates the data to the target table.

ClickHouse provides almost no restrictions on usage. Users can flexibly use MV according to their needs and can match it with other capabilities, such as MV+Distributed Table and MV+ReplicatedMergeTree. In practice, we often encounter the following situations: MV has been constructed, and the data has indeed been inserted into the source table. However, when we query within MV, the results fail to match expectations. Data is lost or duplicated.

Consider the need to use MV to aggregate data in the source table and the customer mistakenly uses MergeTree as the target table of MV.

✖ Error Example: Use common MergeTree for aggregation

CREATE TABLE source (a Int64, b Int64)

ENGINE = MergeTree PARTITION BY (a) ORDER BY (a,b);

-- The target table incorrectly uses MergeTree.

CREATE TABLE dest (a Int64, b Int64, cnt Int64)

ENGINE = MergeTree PARTITION BY (a) ORDER BY (a,b);

-- Create an MV based on the dest table.

CREATE MATERIALIZED VIEW mv to dest

AS SELECT a, b, count() as cnt

FROM source GROUP BY a, b;

-- Insert data into the source table.

insert into source(a,b) values(1, 1), (1,1), (1,2);

insert into source(a,b) values(1, 1), (1,1), (1,2);

-- The actual MV query result does not meet expectations.

SELECT a,b,count() FROM dest GROUP BY a, b;

┌─a─┬─b─┬─count()─┐

│ 1 │ 2 │ 2 │

│ 1 │ 1 │ 2 │

└───┴───┴─────────┘

-- Expected results

SELECT a, b, count() as cnt FROM source GROUP BY a, b

┌─a─┬─b─┬─sum─┐

│ 1 │ 2 │ 2 │

│ 1 │ 1 │ 4 │

└───┴───┴─────┘✅ Correct Example 1: Use SummingMergeTree

Since MV involves aggregation operations, SummingMergeTree or AggregatingMergeTree should be used.

First, if the aggregation operation is sum, you can use SummingMergeTree.

CREATE TABLE source (a Int64, b Int64)

ENGINE = MergeTree PARTITION BY (a) ORDER BY (a,b);

-- Create the target table of SummingMergeTree.

-- Note that ORDER BY(a,b ) specifies a,b as the aggregation key of SummingMergeTree.

CREATE TABLE dest_2 (a Int64, b Int64, sum UInt64)

ENGINE = SummingMergeTree ORDER BY (a, b);

-- Create an MV.

CREATE MATERIALIZED VIEW mv_2 to dest_2

AS SELECT a, b, sum(a) as sum

FROM source GROUP BY a, b;

insert into source(a,b) values(1, 1), (1,1), (1,2);

insert into source(a,b) values(1, 1), (1,1), (1,2);

-- Why is the data not aggregated according to a,b?

-- The reason is that SummingMergeTree is aggregated asynchronously during merge in the backend.

select * from mv_2;

┌─a─┬─b─┬─sum─┐

│ 1 │ 1 │ 2 │

│ 1 │ 2 │ 1 │

│ 1 │ 1 │ 2 │

│ 1 │ 2 │ 1 │

└───┴───┴─────┘

-- Therefore, group by needs to be added when querying SummingMergeTree.

select a,b,sum(a) as sum from mv_2 group by a;

┌─a─┬─b─┬───sum──┐

│ 1 │ 1 │ 2 │

│ 1 │ 2 │ 4 │

└───┴───┴────────┘

-- Alternatively, you can manually perform optimize table to trigger the SummingMergeTree aggregation.

optimize table dest_2 final;

-- The group by is not required after optimize.

select * from mv_2;

┌─a─┬─b─┬─sum─┐

│ 1 │ 1 │ 4 │

│ 1 │ 2 │ 2 │

└───┴───┴─────┘✅ Correct Example 2: Use AggregatingMergeTree

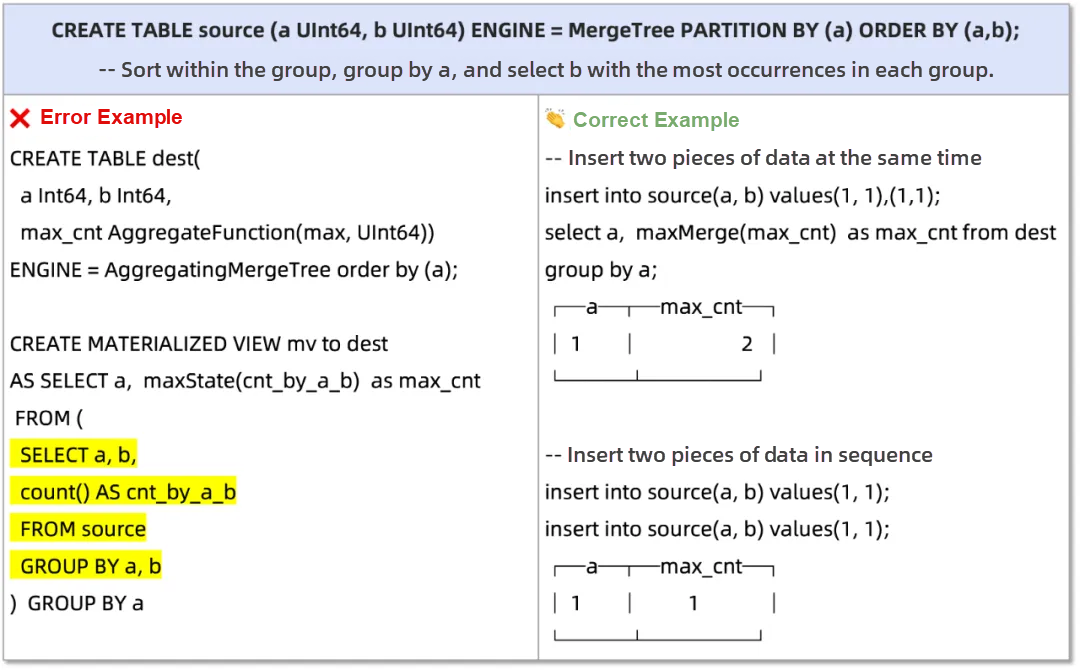

If there are other aggregation operations, select AggregatingMergeTree. The following describes the implementation of AggregatingMergeTree.

CREATE TABLE source (a Int64, b Int64)

ENGINE = MergeTree PARTITION BY (a) ORDER BY (a,b);

-- Create the target table of AggregatingMergeTree.

CREATE TABLE dest_3(

a Int64, b Int64,

cnt AggregateFunction(count, Int64))

ENGINE = AggregatingMergeTree order by (a, b);

-- Create an MV.

CREATE MATERIALIZED VIEW mv_3 TO dest_3 AS

SELECT a, b, countState(a) AS cnt

FROM source GROUP BY a, b

insert into source(a,b) values(1, 1), (1,1), (1,2);

insert into source(a,b) values(1, 1), (1,1), (1,2);

-- Use Merge to query data.

select a,b,countMerge(cnt) from mv_3 group by a,b;

┌─a─┬─b─┬─countMerge(cnt)─┐

│ 1 │ 2 │ 2 │

│ 1 │ 1 │ 4 │

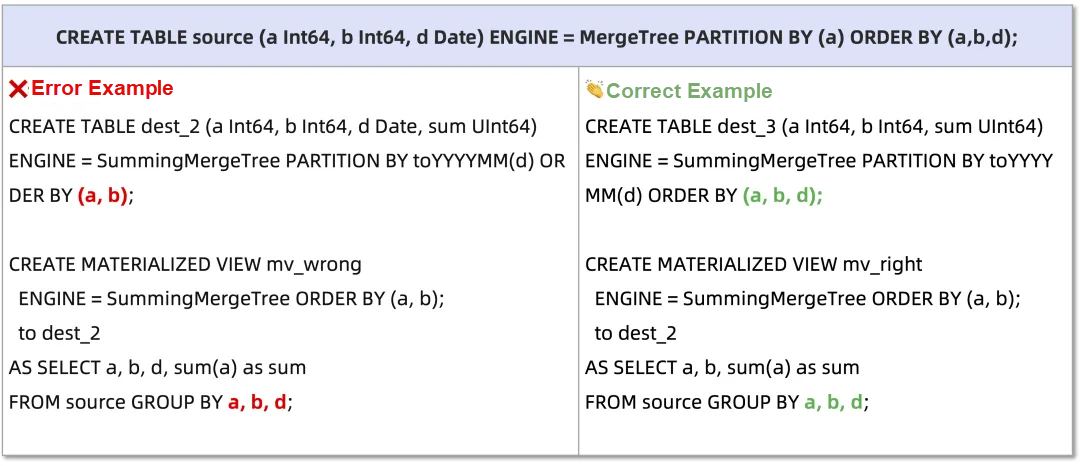

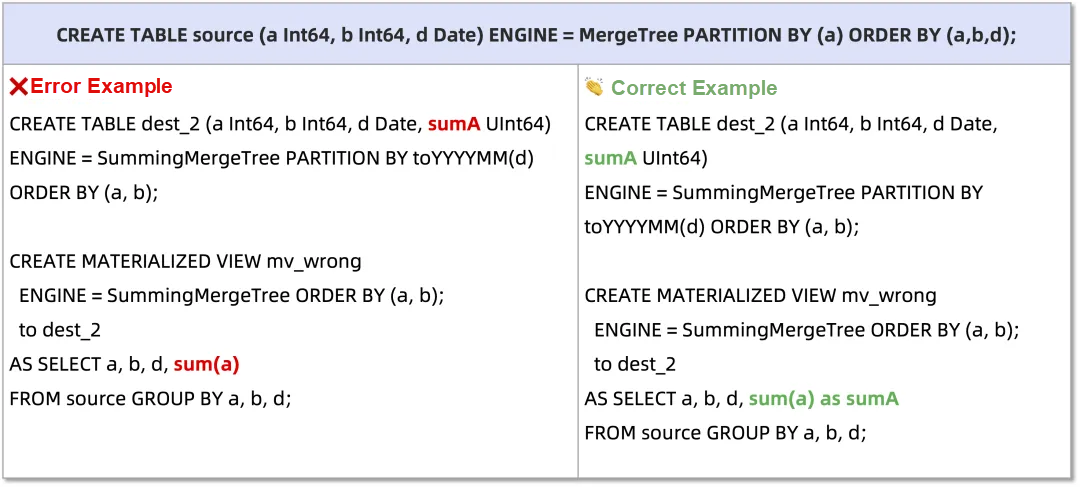

└───┴───┴─────────────────┘When you use SummingMergeTree, make sure that its ORDER BY is consistent with the GROUP BY fields.

As shown in the MV definition below, each insert only performs SELECT on the inserted data, rather than querying the entire source table.

Note:

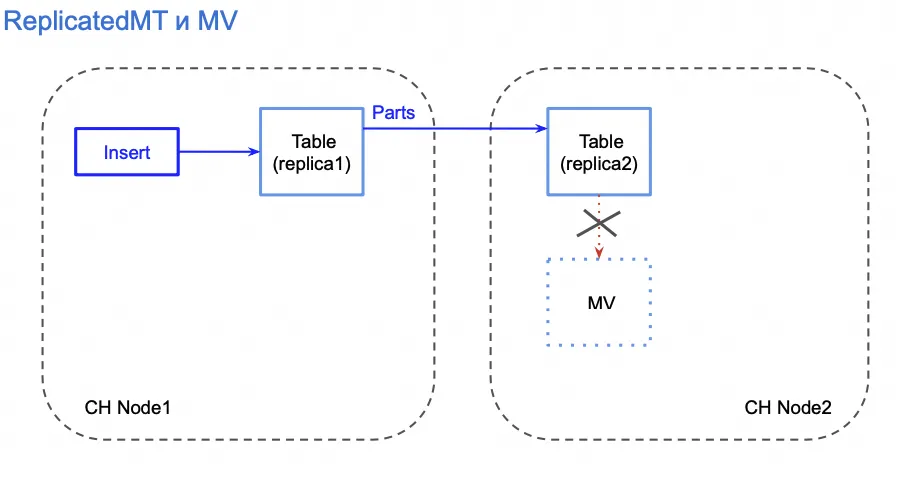

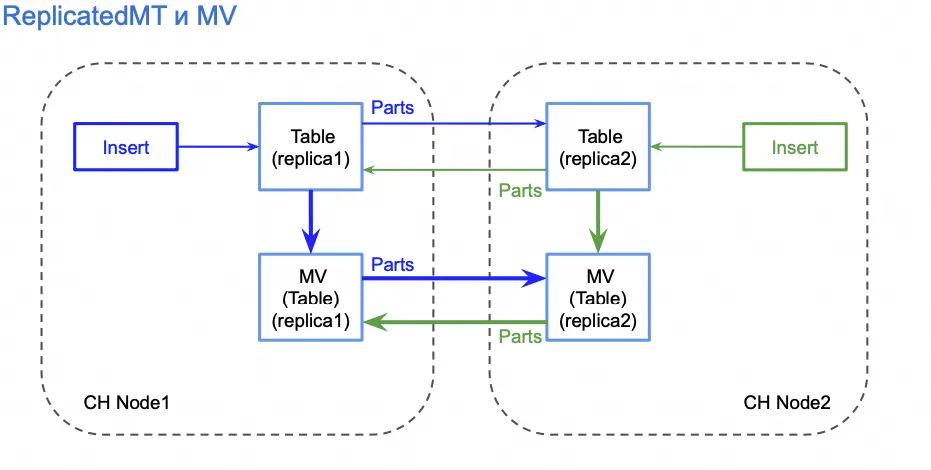

If you need to keep the data consistency of MV between replicas, define the target table of MV as ReplicatedMergeTree.

The data replication process is as follows:

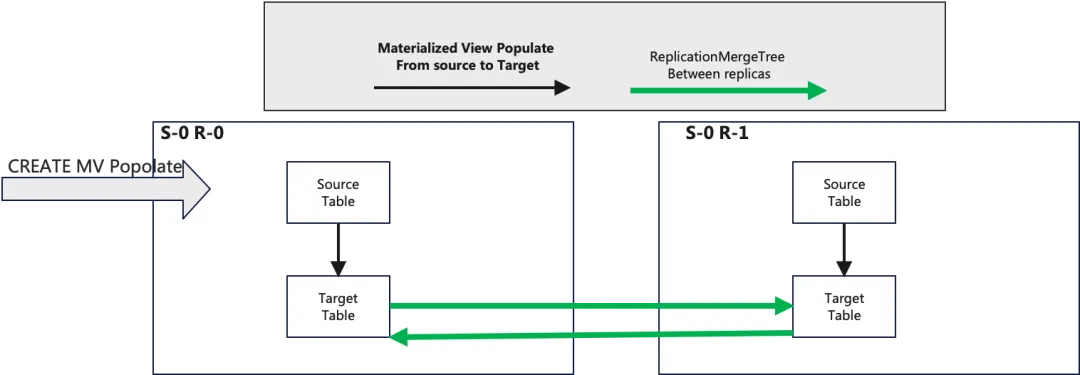

There is one scenario in MV and ReplicatedMergeTree that causes data duplication.

If you perform Populate on ReplicatedMergeTree, data duplication is triggered.

For details on this issue, please refer to: https://github.com/ClickHouse/ClickHouse/issues/9081

Note that only INSERT of the driving table triggers MV updates, but not that of the driven table.

If you want the MV to be updated when both the driving table and the driven table are inserted, you need to create two MVs. The example is as follows:

CREATE MATERIALIZED VIEW mv1 TO dest

SELECT ...

FROM source left join some_dimension on (...)

CREATE MATERIALIZED VIEW mv2 TO dest

SELECT ...

FROM some_dimension right join source on (...)It can be divided into four cases to discuss:

| Solution | MV Source | MV Target | INSERT INTO | Data in Target | Data Replication Process, Distributed(D), Local(L) |

| ① | Local | Local | Distributed | YES | 1. Write data to Table L and insert data into Table D asynchronously. 2. Trigger MV updates of Table L. |

| Local | YES | 1. Write data to Table L and trigger MV updates. | |||

| ② | Distributed | Distributed | Distributed | YES | 1. Write data to Table D and MV of Table D is updated. 2. Write data to Table L. |

| Local | NO | 1. Write data to Table L and MV updates of Table D are not triggered. | |||

| ③ | Local | Distributed | Distributed | YES | 1. Write data to Table D and asynchronously insert Table D into Table L. 2. Trigger MV updates of Table L. |

| Local | YES | 1. Write data to Table L and trigger MV updates. | |||

| ④ | Distributed | Local | Distributed | YES | 1. Write data to Table D and asynchronously insert Table D into Table L. 2. Trigger MV updates of Table L. |

| Local | NO | 1. Write data to Table L and MV updates of Table D are not triggered. |

Solution ① is more common and is used in single-shard scenarios. This solution is the simplest and most efficient. It does not cause data asynchrony.

Solutions ② and ③ are used in multi-shard scenarios. Data is distributed to different shards based on the sharding key rule.

In solution ②, if data is written to Local, data in MV is not replicated.

[1] https://clickhouse.com/blog/using-materialized-views-in-clickhouse

[2] https://developer.aliyun.com/article/1327456

[3] https://dencrane.github.io/Everything_you_should_know_about_materialized_views_commented.pdf

[4] https://github.com/ClickHouse/ClickHouse/issues/9081

[5] https://github.com/ClickHouse/ClickHouse/issues/8336

Discover what and how you can use our products to build >>

Develop Data ETL Links in AnalyticDB for PostgreSQL Using DMS

ApsaraDB - May 7, 2021

Alibaba Cloud Data Intelligence - August 8, 2024

ApsaraDB - September 22, 2025

ApsaraDB - March 15, 2024

ApsaraDB - August 4, 2023

Alibaba Cloud MaxCompute - September 7, 2022

Realtime Compute for Apache Flink

Realtime Compute for Apache Flink

Realtime Compute for Apache Flink offers a highly integrated platform for real-time data processing, which optimizes the computing of Apache Flink.

Learn More MaxCompute

MaxCompute

Conduct large-scale data warehousing with MaxCompute

Learn More Time Series Database (TSDB)

Time Series Database (TSDB)

TSDB is a stable, reliable, and cost-effective online high-performance time series database service.

Learn More ApsaraVideo Media Processing

ApsaraVideo Media Processing

Transcode multimedia data into media files in various resolutions, bitrates, and formats that are suitable for playback on PCs, TVs, and mobile devices.

Learn MoreMore Posts by ApsaraDB