By Sajid Qureshi, Alibaba Community Blog author.

In this tutorial, you will learn how to install Hadoop and its components on a multinode cluster using Apache Ambari.

Apache Ambari is an open-source tool that allows you to deploy, manage, and monitor a Hadoop cluster. You can also use Ambari to integrate Hadoop with your existing infrastructure, which is especially useful for enterprise-level infrastructure.

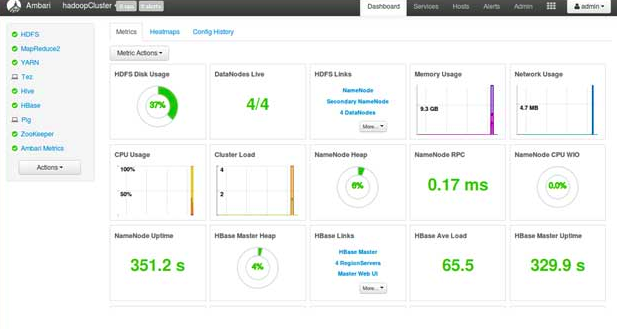

You can also use Ambari's dashboard to review cluster health, for example through heatmaps, and to view MapReduce jobs. Ambari has a simple, user-friendly web interface from which you can carry out management, configuration, and monitoring tasks.

For this tutorial, you will need the following items:

Note: This tutorial uses four Alibaba Cloud ECS instances, named node01, node02, node03, and node04, to deploy the Hadoop cluster. The Ambari server will be installed on node01, and an Ambari agent will run on every node in the cluster.

Before you do anything else, it's recommended that you update the package index and upgrade the installed packages before installing any new package on the system. To do that, run the sudo apt-get update command, followed by sudo apt-get upgrade.
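If you prefer to script this preparation step, here is a minimal sketch in POSIX shell, assuming an Ubuntu host with sudo available. Note that apt-get update only refreshes the package lists; apt-get upgrade is what actually upgrades the installed packages. The DRY_RUN variable is a hypothetical convenience of this sketch: by default it only prints the commands, and setting DRY_RUN=0 executes them.

```shell
#!/bin/sh
# Minimal sketch: refresh the package index, then upgrade installed packages.
# DRY_RUN is a hypothetical switch for this example: 1 (the default) only
# prints each command, 0 actually runs it.
DRY_RUN="${DRY_RUN:-1}"

run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

prepare_node() {
  run sudo apt-get update       # download the latest package lists
  run sudo apt-get upgrade -y   # upgrade the packages already installed
}

prepare_node
```

You can run the same script on each node of the cluster.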

Apache Ambari doesn't require any special hardware or software configuration, so let's get started and install the Ambari server on node01. First, add the Ambari repository to your system with the following command:

sudo wget -O /etc/apt/sources.list.d/ambari.list http://public-repo-1.hortonworks.com/ambari/ubuntu18/2.x/updates/2.7.3.0/ambari.list

Next, you will need to add the repository key using the following command:

sudo apt-key adv --recv-keys --keyserver keyserver.ubuntu.com B9733A7A07513CAD

After this, refresh the package index with sudo apt-get update so that APT picks up the new repository, and then run the sudo apt-get install ambari-server command to install the Apache Ambari server. This command may take some time to complete. Once the installation is complete, you will need to configure and set up the Ambari server.

To do so, run the sudo ambari-server setup command. You'll be prompted to customize the user account for the Ambari server daemon; you can choose whichever option suits you. If the Java Development Kit (JDK) isn't already installed on your system, the setup will also ask you to choose one:

Checking JDK...

[1] Oracle JDK 1.8 + Java Cryptography Extension (JCE) Policy Files 8

[2] Custom JDK

==============================================================================

Enter choice (1): 1

By default, Apache Ambari uses an embedded PostgreSQL database to store its configuration data. Once the setup is complete, start the Ambari server with the sudo ambari-server start command.

After running this command, you should see the following output:

Server started listening on 8080

DB configs consistency check: no errors and warnings were found.

Ambari Server 'start' completed successfully.

Next, you can check the status of the Ambari server with the sudo ambari-server status command. Its output should include Ambari Server running.

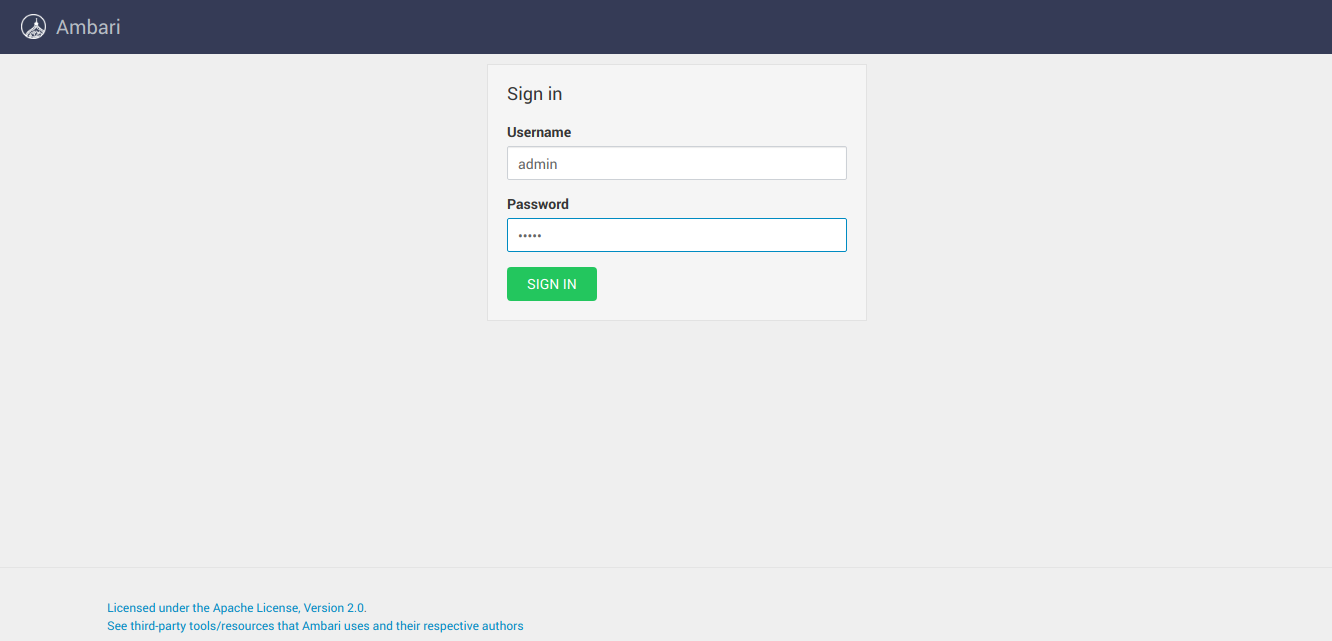
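For reference, the server-side steps above can be collected into a single script. This is a minimal sketch in POSIX shell, assuming Ubuntu 18.04 and the Ambari 2.7.3.0 repository and key used in this tutorial. The DRY_RUN switch is a hypothetical addition: by default the script only prints each command, and setting DRY_RUN=0 runs them for real.

```shell
#!/bin/sh
# Minimal sketch of the Ambari server installation on node01, using the
# repository URL and key from this tutorial (Ubuntu 18.04, Ambari 2.7.3.0).
# DRY_RUN is a hypothetical switch: 1 (default) prints commands, 0 runs them.
DRY_RUN="${DRY_RUN:-1}"

AMBARI_LIST_URL="http://public-repo-1.hortonworks.com/ambari/ubuntu18/2.x/updates/2.7.3.0/ambari.list"
AMBARI_KEY="B9733A7A07513CAD"

run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

install_ambari_server() {
  # 1. Register the Ambari APT repository.
  run sudo wget -O /etc/apt/sources.list.d/ambari.list "$AMBARI_LIST_URL"
  # 2. Import the repository signing key.
  run sudo apt-key adv --recv-keys --keyserver keyserver.ubuntu.com "$AMBARI_KEY"
  # 3. Refresh the index so the new repository is visible, then install
  #    (-y answers the confirmation prompt automatically).
  run sudo apt-get update
  run sudo apt-get install -y ambari-server
  # 4. Configure the server; this step is interactive (daemon user, JDK).
  run sudo ambari-server setup
  # 5. Start the server and confirm that it is running.
  run sudo ambari-server start
  run sudo ambari-server status
}

install_ambari_server
```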

Now that the Ambari server is running, you can access its web interface on port 8080. Open a web browser and visit http://YourServerIP:8080 or http://YourDomain.com:8080, replacing the address with your server's actual IP address or domain name.

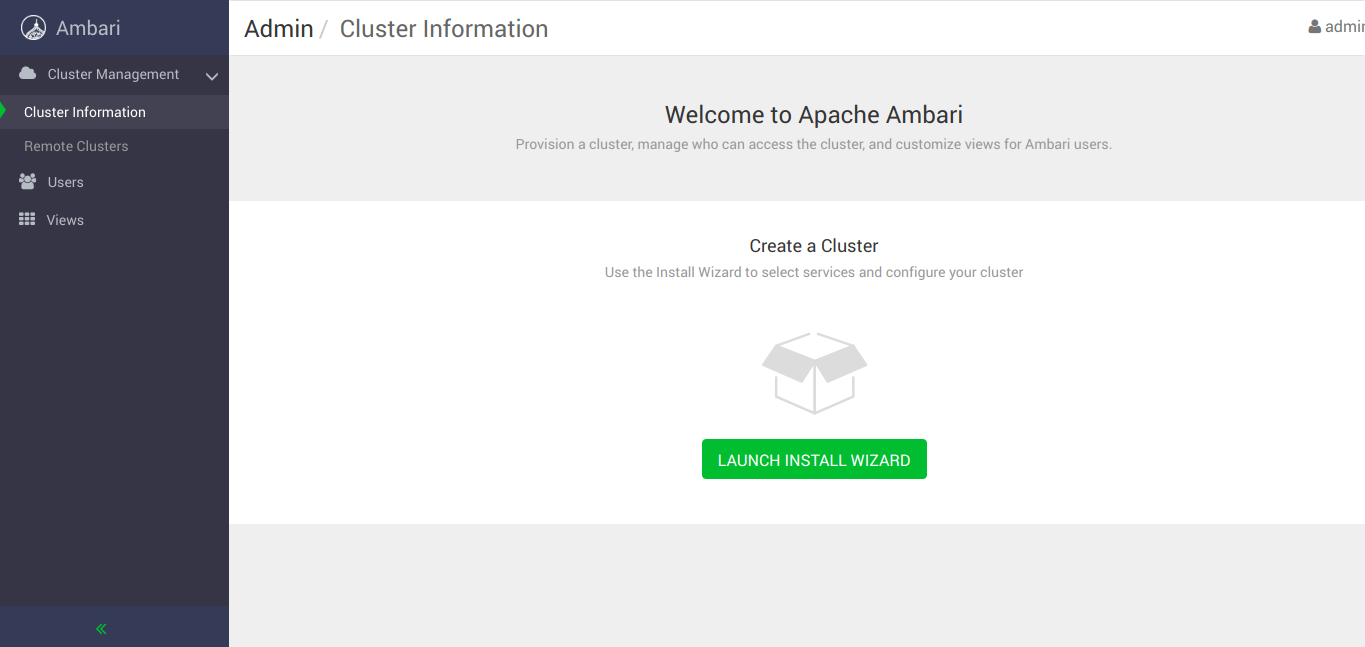

Log in to Ambari with the default credentials, using admin as both the username and the password. You should then see the Apache Ambari homepage, which looks something like this:

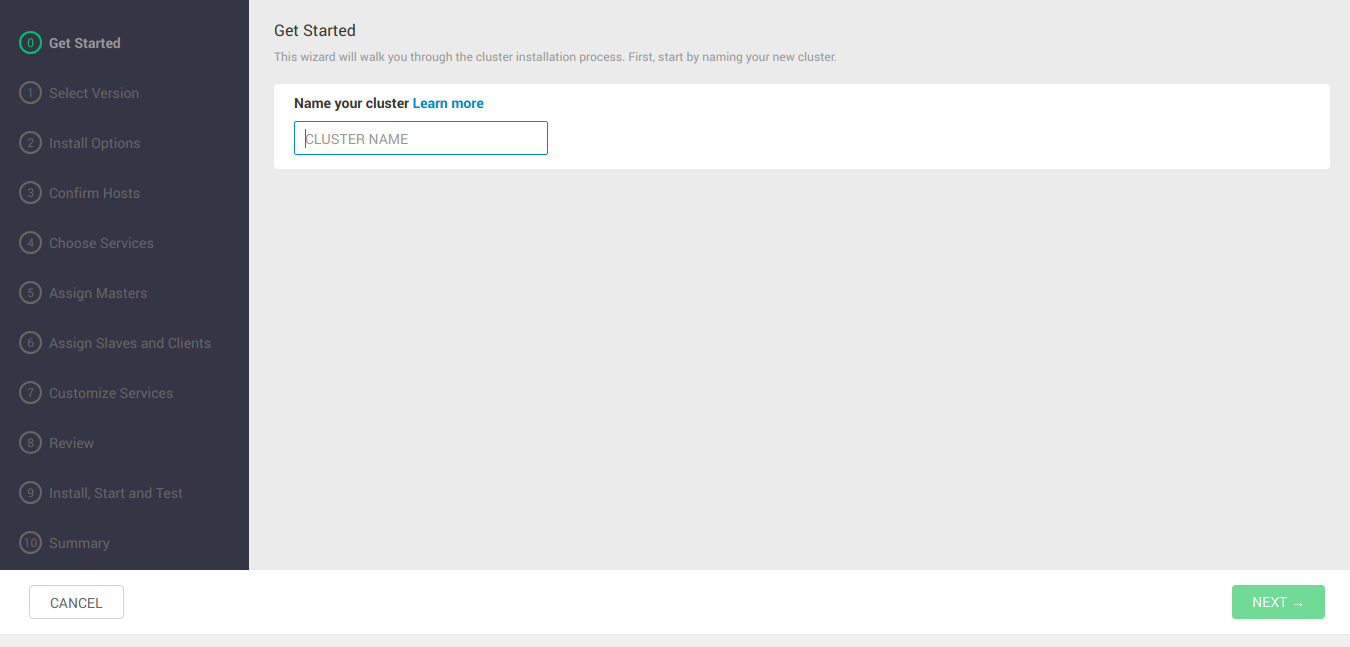
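If you want to check the server and the default login from a terminal instead of a browser, the sketch below queries Ambari's REST API, which is served on the same port 8080 as the web interface. It assumes the v1 API path /api/v1; AMBARI_HOST is a placeholder for your server's address.

```shell
#!/bin/sh
# Minimal sketch: check that the Ambari server answers on port 8080 and
# accepts the default admin/admin login. AMBARI_HOST is a placeholder.
AMBARI_HOST="${AMBARI_HOST:-YourServerIP}"
AMBARI_PORT="${AMBARI_PORT:-8080}"

ambari_api_url() {
  # Base URL of the Ambari REST API, served on the same port as the web UI.
  printf 'http://%s:%s/api/v1\n' "$AMBARI_HOST" "$AMBARI_PORT"
}

check_ambari_login() {
  # Print only the HTTP status code of an authenticated GET request.
  # curl reports 000 when it gets no response at all (host or port unreachable).
  curl -s -o /dev/null -w '%{http_code}\n' \
    -u "${1:-admin}:${2:-admin}" "$(ambari_api_url)/clusters"
}

echo "Ambari REST API base URL: $(ambari_api_url)"
```

For example, after setting AMBARI_HOST to your server's address, check_ambari_login should print 200 once the server is running and the credentials are accepted.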

Click the Launch Install Wizard button to proceed. You'll be prompted to enter a name for your Hadoop cluster. After doing so, click Next, select the HDP 3.1 stack, and click Next again.

On the next page, you will be prompted to enter the details of your hosts. Enter the fully qualified domain name (FQDN) of each host, then select the private key you used when creating the instances and enter the username used to log in to the nodes. Finally, click Register and Confirm.
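To collect the FQDNs and confirm that the private key works before registering, you can log in to each node with that key and run hostname -f. This is a minimal sketch; the node names come from this tutorial, while the key path, the root user, and the DRY_RUN switch are hypothetical placeholders to adjust.

```shell
#!/bin/sh
# Minimal sketch: print each node's FQDN over SSH, using the same private key
# and user you give the Ambari wizard. Key path and user are placeholders.
NODES="${NODES:-node01 node02 node03 node04}"
SSH_KEY="${SSH_KEY:-$HOME/.ssh/hadoop-cluster.pem}"
SSH_USER="${SSH_USER:-root}"
DRY_RUN="${DRY_RUN:-1}"   # hypothetical: 1 prints the command, 0 runs it

fqdn_of() {
  # BatchMode=yes makes ssh fail instead of prompting for a password, so a
  # missing or wrong key shows up as an error.
  if [ "$DRY_RUN" = "1" ]; then
    printf '+ %s\n' "ssh -i $SSH_KEY -o BatchMode=yes $SSH_USER@$1 hostname -f"
  else
    ssh -i "$SSH_KEY" -o BatchMode=yes "$SSH_USER@$1" hostname -f
  fi
}

for node in $NODES; do
  fqdn_of "$node"
done
```

With DRY_RUN=0, the printed names are what you enter in the wizard's host list, one per line.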

The next page shows the registration status of each host. When all host entries are green, continue to the next page, where you choose the services to install on your Hadoop cluster from the stack you selected earlier. Based on your selection, Ambari lists any dependent services that also need to be included in the cluster.
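Besides the green entries in the wizard, you can check host registration from the command line through Ambari's REST API. This sketch assumes the /api/v1/hosts endpoint and Ambari's pretty-printed JSON output with one "host_name" key per line; the server name node01, the admin/admin credentials, and any example FQDNs you pass in are placeholders.

```shell
#!/bin/sh
# Minimal sketch: list hosts registered with the Ambari server and report
# which expected FQDNs are missing. Host and credentials are placeholders.
AMBARI_HOST="${AMBARI_HOST:-node01}"

fetch_hosts_json() {
  curl -s -u "${AMBARI_USER:-admin}:${AMBARI_PASSWORD:-admin}" \
    "http://${AMBARI_HOST}:8080/api/v1/hosts?fields=Hosts/host_name"
}

extract_host_names() {
  # Reads JSON on stdin and prints one host_name per line.
  # Assumes pretty-printed output with at most one "host_name" key per line.
  sed -n 's/.*"host_name"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p'
}

missing_hosts() {
  # Prints every expected FQDN (given as arguments) absent from stdin's list.
  registered="$(extract_host_names)"
  for h in "$@"; do
    printf '%s\n' "$registered" | grep -qxF "$h" || echo "$h"
  done
}
```

For example, fetch_hosts_json | missing_hosts node01.example.com node02.example.com node03.example.com node04.example.com (hypothetical FQDNs) prints nothing once all four hosts have registered.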

On the following page, you assign master services to nodes: select the nodes where the NameNode, ResourceManager, Secondary NameNode, ZooKeeper, and other master components will run. Make sure that paired primary and secondary services, such as the NameNode and the Secondary NameNode, are not placed on the same machine. In this tutorial, ZooKeeper runs on all the machines.

Next, choose where the DataNodes and NodeManagers will run. Here, we run a DataNode and a NodeManager on every machine, but you can choose a different layout to suit your needs.
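Before clicking through these two pages, it can help to write the intended layout down and check the one rule stated above. The sketch below encodes a hypothetical four-node plan using Ambari's component names (NAMENODE, SECONDARY_NAMENODE, and so on); placing the Secondary NameNode and ResourceManager on node02 is an assumption for illustration, not taken from the tutorial.

```shell
#!/bin/sh
# Minimal sketch: a planned component layout for the four nodes, plus a check
# that NameNode and Secondary NameNode land on different machines.
# The placement is a hypothetical example.
NODES="node01 node02 node03 node04"

layout() {
  # One "<node> <component>" pair per line.
  echo "node01 NAMENODE"
  echo "node02 SECONDARY_NAMENODE"
  echo "node02 RESOURCEMANAGER"
  for n in $NODES; do
    # ZooKeeper, DataNode and NodeManager run on every machine here.
    echo "$n ZOOKEEPER_SERVER"
    echo "$n DATANODE"
    echo "$n NODEMANAGER"
  done
}

hosts_of() {
  # Print the node(s) assigned to component $1.
  layout | awk -v c="$1" '$2 == c { print $1 }'
}

check_layout() {
  nn="$(hosts_of NAMENODE)"
  snn="$(hosts_of SECONDARY_NAMENODE)"
  if [ -z "$nn" ] || [ -z "$snn" ] || [ "$nn" = "$snn" ]; then
    echo "invalid layout: NAMENODE='$nn' SECONDARY_NAMENODE='$snn'" >&2
    return 1
  fi
  echo "OK: NAMENODE on $nn, SECONDARY_NAMENODE on $snn"
}

check_layout
```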

On the next page, you customize the services for your cluster and can change any configuration value. Any configuration errors or required values that are still missing are flagged on this page, and you must resolve them before proceeding.

After this, review all the services and configuration settings. If everything looks good, proceed and start the installation. Note that the installation may take some time.

Once the installation is complete, click Next to proceed to the summary page, and then click Complete to finish the process. Finally, you will see the cluster homepage with its dashboard charts.
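Alongside the dashboard, you can confirm from a terminal that every service came up. This sketch assumes Ambari's /api/v1/clusters/NAME/services endpoint with fields=ServiceInfo/state and pretty-printed JSON; AMBARI_HOST, CLUSTER_NAME, and the admin/admin credentials are placeholders for your own values.

```shell
#!/bin/sh
# Minimal sketch: check that every service in the new cluster reports the
# STARTED state. Host, cluster name and credentials are placeholders.
AMBARI_HOST="${AMBARI_HOST:-node01}"
CLUSTER_NAME="${CLUSTER_NAME:-mycluster}"

fetch_service_states() {
  curl -s -u "${AMBARI_USER:-admin}:${AMBARI_PASSWORD:-admin}" \
    "http://${AMBARI_HOST}:8080/api/v1/clusters/${CLUSTER_NAME}/services?fields=ServiceInfo/state"
}

not_started_count() {
  # Reads pretty-printed JSON on stdin and counts services whose "state" is
  # anything other than STARTED (for example INSTALLED, i.e. stopped).
  sed -n 's/.*"state"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' |
    grep -vc '^STARTED$'
}
```

For example, fetch_service_states | not_started_count prints 0 when every service is running.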

In this tutorial, you've learned how to install Hadoop and its components on a multinode cluster using Apache Ambari. You should now be able to deploy, manage, and monitor your Hadoop cluster using Apache Ambari.
