By Mingquan Zheng, Kai Yu

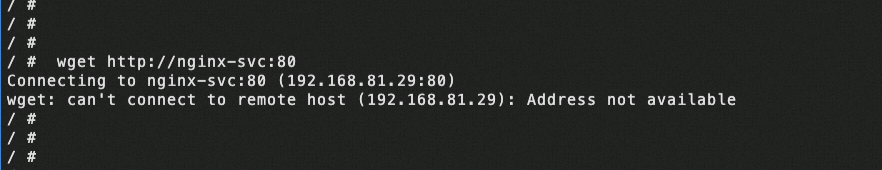

The pod had been running normally for some time after it was created. One day, however, we noticed that it could no longer establish new connections. In this business scenario, pod A accesses service B through its svc name. Running the wget command manually inside the pod returned the error "Address not available". Why did this error occur?

The rough example is depicted below:

I researched at length why "Address not available" is reported and which address is considered unavailable. The POSIX (Portable Operating System Interface for UNIX) error standard defines the error, but its explanation did not make the cause clear.

Reference of error code: [errno.3 [1]]

EADDRNOTAVAIL

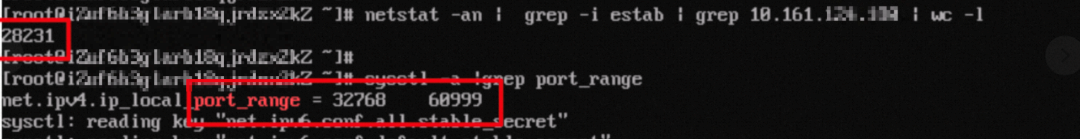

Address not available (POSIX.1-2001).

Using netstat -an, I checked the connections to the svc address. The number of connections in the ESTABLISHED state had reached the number of available random ports, so no new connection could be created.
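The check described above can be sketched as a small script that counts ESTABLISHED connections per destination in `netstat -an` output. This is only an illustration: the sample rows and addresses are made up, and the function name `established_per_destination` is hypothetical.

```python
from collections import Counter

# Hypothetical excerpt of `netstat -an` output; the addresses are made up.
SAMPLE = """\
Proto Recv-Q Send-Q Local Address           Foreign Address         State
tcp        0      0 10.0.0.5:32768          10.96.0.10:80           ESTABLISHED
tcp        0      0 10.0.0.5:32769          10.96.0.10:80           ESTABLISHED
tcp        0      0 10.0.0.5:32770          10.96.0.20:443          ESTABLISHED
tcp        0      0 10.0.0.5:32771          10.96.0.10:80           TIME_WAIT
tcp        0      0 0.0.0.0:8080            0.0.0.0:*               LISTEN
"""


def established_per_destination(netstat_output: str) -> Counter:
    """Count ESTABLISHED TCP connections per foreign address."""
    counts = Counter()
    for line in netstat_output.splitlines():
        fields = line.split()
        # TCP rows have six columns: proto, recv-q, send-q, local, foreign, state.
        # The header row splits into more fields and is skipped.
        if len(fields) == 6 and fields[0].startswith("tcp") and fields[5] == "ESTABLISHED":
            counts[fields[4]] += 1
    return counts


counts = established_per_destination(SAMPLE)
for destination, total in counts.most_common():
    print(destination, total)
```

If the count for a single destination approaches the size of the random port range, new connections to that destination are likely to fail.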

The problem was finally resolved by widening the random port range through the kernel parameter net.ipv4.ip_local_port_range.

By default, the Linux kernel assigns random ports from the range 32768 to 60999. This range is often overlooked in business design. Each TCP connection is identified by a four-tuple: source IP, source port, destination IP, and destination port. If any element of the tuple changes, a new TCP connection can be created. When a pod accesses a fixed destination IP and port, the only variable for each TCP connection is the source port, which is randomly assigned. Therefore, when a large number of long-lived connections need to be established, it is necessary to either widen the kernel's random port range or adjust the service configuration.
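As a rough illustration of the arithmetic, the size of the random port range gives an upper bound on concurrent connections from one source IP to one fixed destination IP and port. The sketch below assumes nothing beyond the ranges mentioned in this article; the helper name `ephemeral_port_count` is hypothetical.

```python
def ephemeral_port_count(low: int, high: int) -> int:
    """Return the number of ports in the inclusive range [low, high]."""
    if not (0 <= low <= high <= 65535):
        raise ValueError(f"invalid port range: {low}-{high}")
    return high - low + 1


DEFAULT_RANGE = (32768, 60999)   # kernel default for ip_local_port_range
WIDENED_RANGE = (1024, 65535)    # widened range discussed in this article

default_capacity = ephemeral_port_count(*DEFAULT_RANGE)
widened_capacity = ephemeral_port_count(*WIDENED_RANGE)

print("default:", default_capacity)   # 28232
print("widened:", widened_capacity)   # 64512
```

The real limit can be lower, because other connections and listeners on the same host also consume ports from this range.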

Related kernel reference: [ip-sysctl.txt [2]]

    ip_local_port_range - 2 INTEGERS
        Defines the local port range that is used by TCP and UDP to
        choose the local port. The first number is the first, the
        second the last local port number.
        If possible, it is better these numbers have different parity
        (one even and one odd value).
        Must be greater than or equal to ip_unprivileged_port_start.
        The default values are 32768 and 60999 respectively.

Let's manually narrow the port range of net.ipv4.ip_local_port_range and then reproduce the problem.

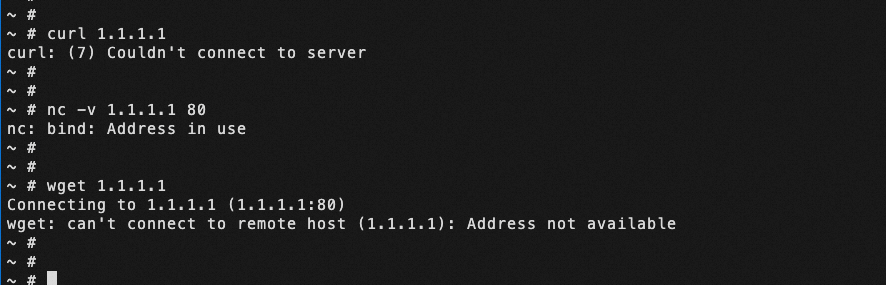

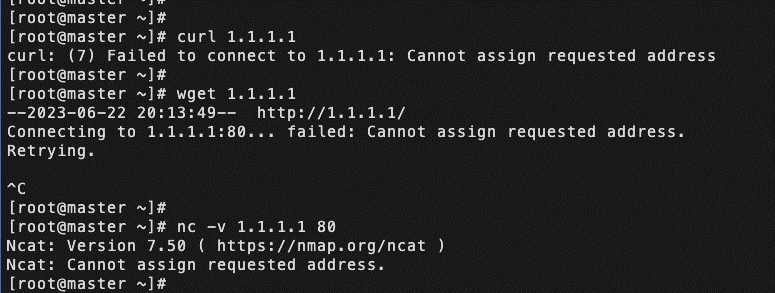
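The reproduction can also be scripted. The following is a minimal sketch, assuming a local listener stands in for the real svc: it keeps opening connections to one destination until connect() fails or a limit is reached. The function name `open_until_failure` and the limit of 5 are illustrative. Against a narrowed port range and a higher limit, the returned error would be expected to carry EADDRNOTAVAIL.

```python
import socket


def open_until_failure(host, port, limit):
    """Open up to `limit` TCP connections to (host, port) and keep them open.

    Returns (connections, error), where error is the OSError that stopped
    the loop, or None if the limit was reached without a failure.
    """
    connections = []
    error = None
    for _ in range(limit):
        sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
        try:
            sock.connect((host, port))
        except OSError as exc:
            sock.close()
            error = exc
            break
        connections.append(sock)
    return connections, error


# A local listener stands in for the destination service (an assumption).
listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
listener.bind(("127.0.0.1", 0))
listener.listen(16)
host, port = listener.getsockname()

connections, error = open_until_failure(host, port, limit=5)
# Every connection to the same destination needs its own source port.
local_ports = {conn.getsockname()[1] for conn in connections}
print(len(connections), "connections,", len(local_ports), "source ports, error:", error)

for conn in connections:
    conn.close()
listener.close()
```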

For the same problem, I tried the curl, nc, and wget commands, and each reported a different error, which confused me.

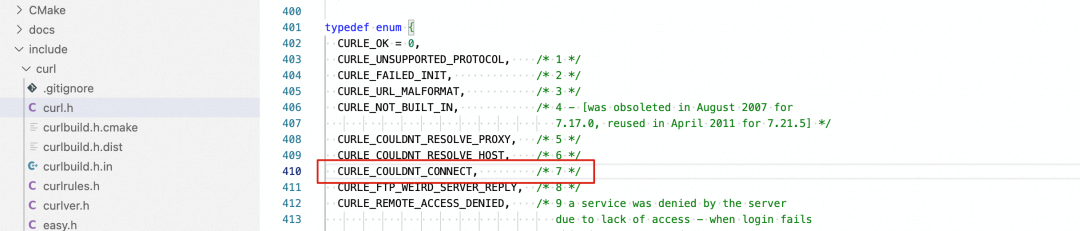

• curl: (7) Couldn't connect to server

• nc: bind: Address in use

• wget: can't connect to remote host (1.1.1.1): Address not available

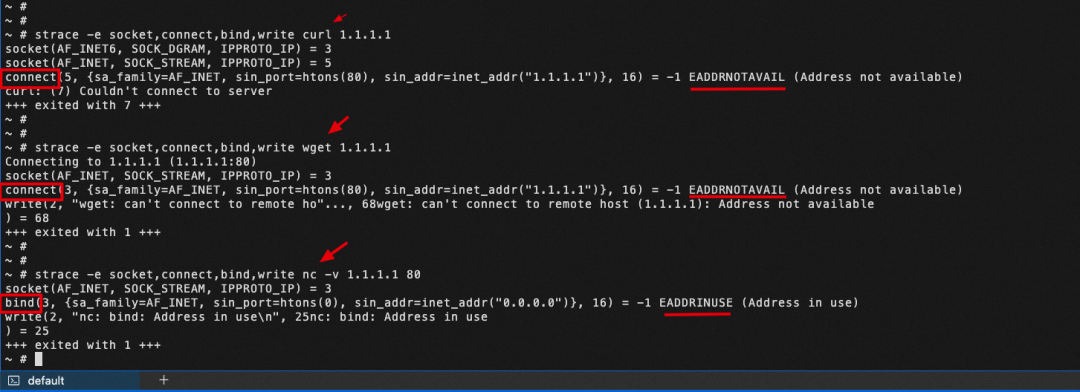

Let's use the strace command to trace the system calls that each tool makes. curl, wget, and nc all create a socket with socket(). However, wget and curl then call connect() directly, whereas nc first calls bind() and, if bind() reports an error, never calls connect().

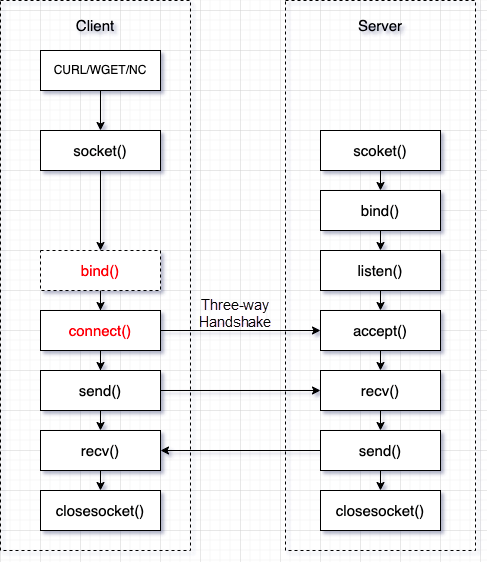

As shown in the figure, in the B/S (browser/server) architecture, the client calls connect() after it creates a socket.
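The two client call sequences seen in the strace output can be sketched as follows. This is an illustration rather than the actual code of curl, wget, or nc: the function names are hypothetical and a local listener stands in for the server. In the first pattern the kernel picks the source port during connect(). In the second it is picked during bind(), which is why a failure surfaces from a different system call.

```python
import socket


def connect_directly(addr):
    """socket() then connect(): the source port is chosen during connect()."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    sock.connect(addr)
    return sock


def bind_then_connect(addr):
    """socket(), bind() to port 0, then connect(): the port is chosen in bind()."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    sock.bind(("", 0))  # port 0 asks the kernel for a random local port
    port_after_bind = sock.getsockname()[1]
    sock.connect(addr)
    return sock, port_after_bind


# A local listener stands in for the remote server (an assumption).
listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
listener.bind(("127.0.0.1", 0))
listener.listen(8)
target = listener.getsockname()

direct = connect_directly(target)
bound, port_after_bind = bind_then_connect(target)
direct_port = direct.getsockname()[1]
bound_port = bound.getsockname()[1]
print("connect only:", direct_port, "bind then connect:", bound_port)

for sock in (direct, bound, listener):
    sock.close()
```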

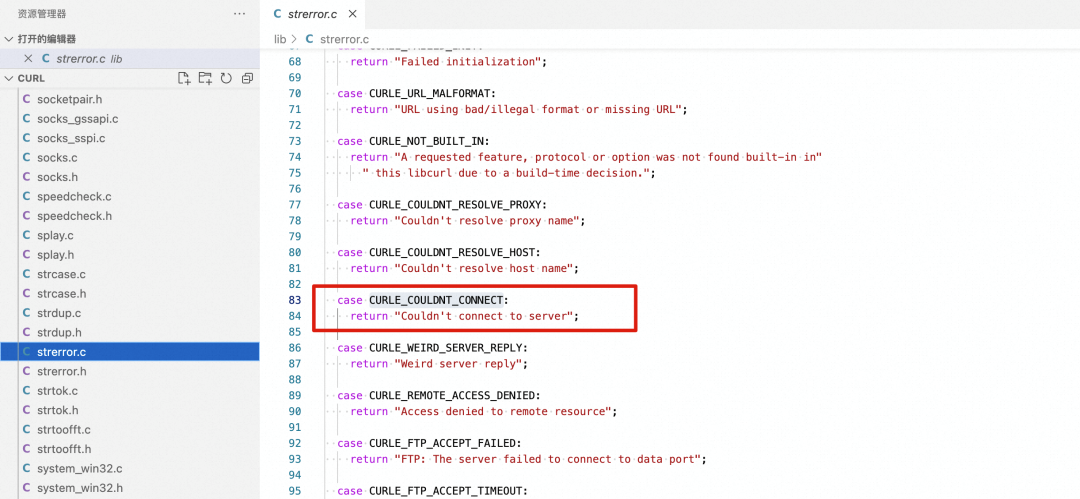

Why do wget and curl report different errors when they call the same connect() function?

Why do the connect() function and the bind() function report different errors?

EADDRINUSE

Address already in use (POSIX.1-2001).

EADDRNOTAVAIL

Address not available (POSIX.1-2001).

If we take a machine running CentOS 7.9, install curl, wget, nc, and other tools, and then narrow the port range, we see the error message "Cannot assign requested address" instead. In some images (such as alpine and busybox), the same commands report different errors under the same circumstances. This is because these images often use the busybox toolbox for basic commands to reduce the image size, which makes problems harder to locate. (wget and nc are tools from the busybox toolbox. For more information, see the busybox document: Busybox Command Help [3].)
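To see which number and message the local platform attaches to these error names, a short script can query them. This is a sketch: the helper name `describe` is hypothetical, and both the numeric value and the message text come from the platform's C library, so they may differ between systems and images.

```python
import errno
import os


def describe(code: int):
    """Return (symbolic name, numeric code, C library message) for an errno."""
    return errno.errorcode[code], code, os.strerror(code)


for code in (errno.EADDRINUSE, errno.EADDRNOTAVAIL):
    name, number, message = describe(code)
    print(f"{name} = {number}: {message}")
```

Matching on the symbolic name or numeric code is more reliable than matching on the message text, since tools may print their own wording.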

Definitions related to error codes in the Linux system: /usr/include/asm-generic/errno.h

    #define EADDRNOTAVAIL 99 /* Cannot assign requested address */

Theoretically, ports 0 to 65535 can be used. Ports 0 to 1023 are privileged: only privileged users can bind to them, and they are reserved for standard services such as HTTP (80) and SSH (22). To prevent unprivileged users from attacking through traffic characteristics, it is suggested to limit the random port range to 1024 to 65535 if a larger range is needed.

According to the information provided by the Kubernetes community, we can modify the source port range in two ways: through the pod's securityContext [4], or through an initContainers entry that runs in privileged mode and remounts /proc/sys as writable (mount -o remount rw /proc/sys). This modification takes effect only in the network namespace of the pod.

    ...
    securityContext:
      sysctls:
      - name: net.ipv4.ip_local_port_range
        value: 1024 65535

    initContainers:
    - command:
      - /bin/sh
      - '-c'
      - |
        sysctl -w net.core.somaxconn=65535
        sysctl -w net.ipv4.ip_local_port_range="1024 65535"
      securityContext:
        privileged: true

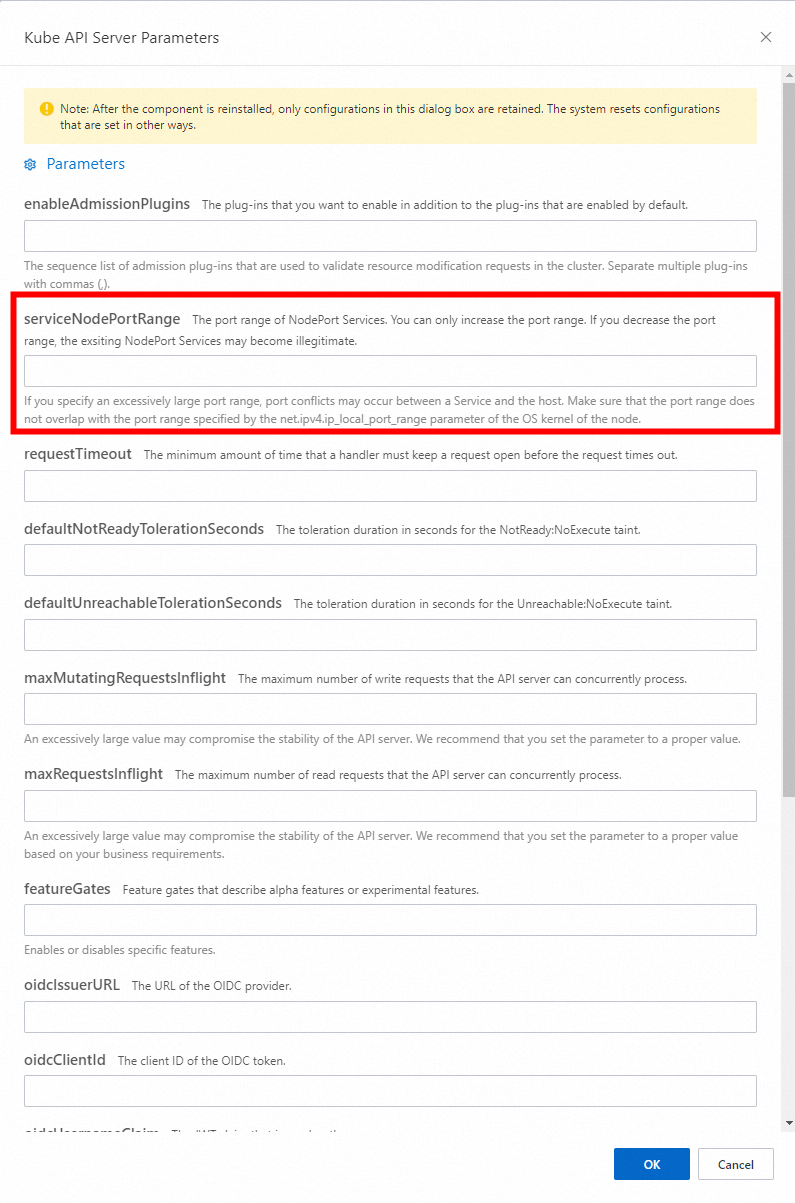

...

Modifying net.ipv4.ip_local_port_range is not recommended for clusters of version 1.22 and later, because the new range may conflict with ServiceNodePortRange.

The default ServiceNodePortRange of Kubernetes is 30000 to 32767. Kubernetes 1.22 and later versions remove the logic by which kube-proxy listens on NodePorts. While a port has a listener, applications skip it when selecting a random port. Without that listener, if net.ipv4.ip_local_port_range overlaps ServiceNodePortRange, an application may select a random port from the overlapping part, such as 30000 to 32767. When a NodePort conflicts with a port chosen from net.ipv4.ip_local_port_range, the result may be occasional TCP connection failures, health check failures, abnormal service access, and so on. For more information, see the Kubernetes Community PR [5].
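A quick way to check for such a conflict is to compute the overlap between the two ranges. The sketch below uses only the values mentioned in this article; the helper name `port_range_overlap` is hypothetical.

```python
def port_range_overlap(a, b):
    """Return the inclusive overlap of two (low, high) port ranges, or None."""
    low = max(a[0], b[0])
    high = min(a[1], b[1])
    return (low, high) if low <= high else None


NODE_PORT_RANGE = (30000, 32767)   # default ServiceNodePortRange
DEFAULT_LOCAL = (32768, 60999)     # default ip_local_port_range
WIDENED_LOCAL = (1024, 65535)      # widened range discussed in this article

default_overlap = port_range_overlap(DEFAULT_LOCAL, NODE_PORT_RANGE)
widened_overlap = port_range_overlap(WIDENED_LOCAL, NODE_PORT_RANGE)

print("default range overlap:", default_overlap)   # None
print("widened range overlap:", widened_overlap)   # (30000, 32767)
```

With the default values the two ranges are adjacent but disjoint, so widening the random port range downward is what introduces the overlap.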

When a large number of SVCs are created, kube-proxy can reduce the number of listening sockets by relying only on the ipvs/iptables rules that it submits. This optimization improves connection performance and, in certain scenarios, addresses issues such as a large number of TCP connections stuck in the CLOSE_WAIT state. For this reason, the PortOpener logic is removed in version 1.22 and later.

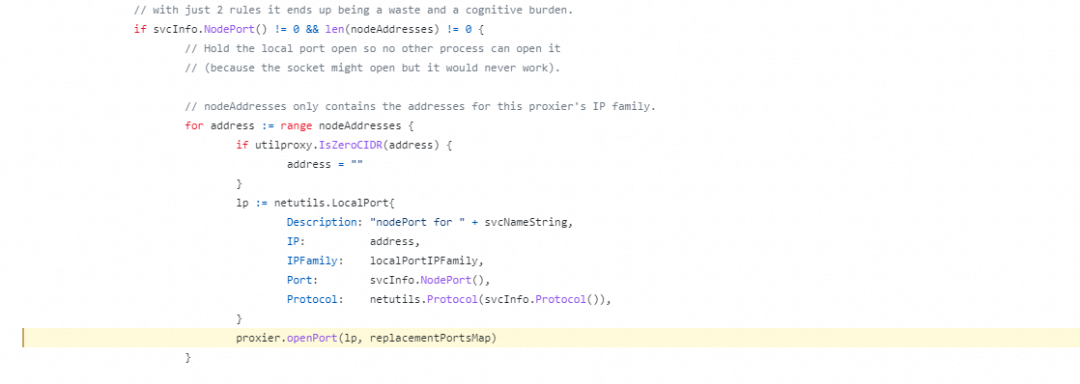

kubernetes/pkg/proxy/iptables/proxier.go

Line 1304 in f98f27b[6]

1304 proxier.openPort(lp, replacementPortsMap)

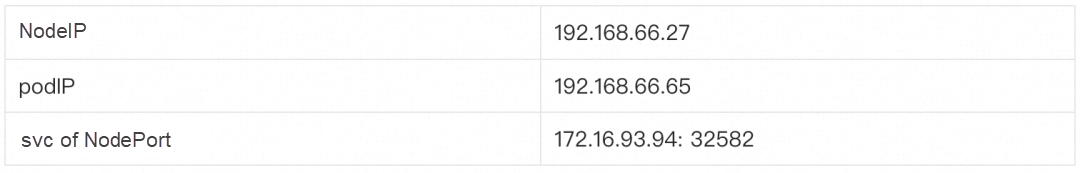

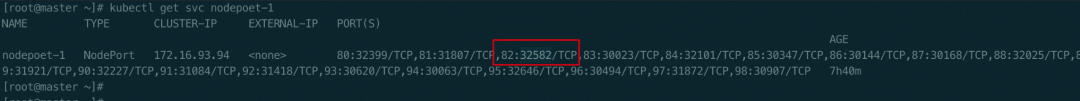

How exactly do these conflicts occur? The test environment is Kubernetes 1.22.10 with kube-proxy running in IPVS mode. Take the kubelet health check as an example. The node's kernel parameter net.ipv4.ip_local_port_range is set to 1024 to 65535.

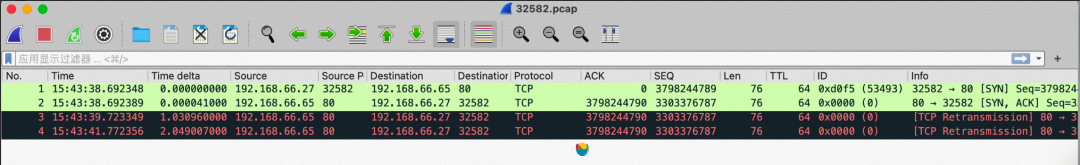

To analyze the issue, we deploy tcpdump to capture packets and stop capturing when an event with a health check failure is detected. Here's what we observe:

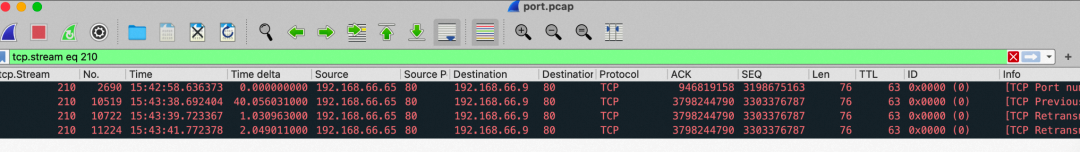

The kubelet uses the node IP address (192.168.66.27) and random port 32582 to initiate a TCP handshake with the pod at 192.168.66.65:80. The pod replies with a SYN-ACK whose destination port is 32582, but it keeps retransmitting that SYN-ACK. Because this random port happens to be the NodePort of a Service, IPVS intercepts the packet first and forwards it to a backend of that Service (192.168.66.9). This backend never initiated a TCP connection with the pod IP (192.168.66.65), so it discards the packet. As a result, the kubelet never receives the SYN-ACK, the TCP connection cannot be established, and the health check fails.

From the capture, we can conclude that the kubelet initiates a TCP handshake, but the pod's SYN-ACK response is retransmitted repeatedly.

In fact, the SYN-ACK is forwarded to the backend pod of the SVC whose NodePort is 32582, where it is discarded.

To solve this problem, a check can be added for hostNetwork pods. When initContainers is used to modify kernel parameters, net.ipv4.ip_local_port_range is modified only if the podIP and hostIP differ, which avoids modifying the node's kernel parameters by mistake.

    initContainers:
    - command:
      - /bin/sh
      - '-c'
      - |
        if [ "$POD_IP" != "$HOST_IP" ]; then
          mount -o remount rw /proc/sys
          sysctl -w net.ipv4.ip_local_port_range="1024 65535"
        fi
      env:
      - name: POD_IP
        valueFrom:
          fieldRef:
            apiVersion: v1
            fieldPath: status.podIP
      - name: HOST_IP
        valueFrom:
          fieldRef:
            apiVersion: v1
            fieldPath: status.hostIP
      securityContext:
        privileged: true

...

In Kubernetes, the API server provides the ServiceNodePortRange parameter (command-line flag --service-node-port-range), which restricts the port range that nodes use for Services of type NodePort or LoadBalancer. By default, this parameter is set to 30000 to 32767. In an ACK Pro cluster, you can customize the control plane component parameters to modify the port range. For more information, see Customize the Parameters of Control Plane Components in ACK Pro Clusters [7].

[1] errno.3: https://man7.org/linux/man-pages/man3/errno.3.html

[2] ip-sysctl.txt: https://www.kernel.org/doc/Documentation/networking/ip-sysctl.txt

[3] Busybox Command Help: https://www.busybox.net/downloads/BusyBox.html

[4] securityContext: https://kubernetes.io/docs/tasks/administer-cluster/sysctl-cluster/

[5] Kubernetes Community PR: https://github.com/kubernetes/kubernetes/pull/108888

[6] f98f27b: https://github.com/kubernetes/kubernetes/blob/f98f27bc2f318add77118906f7595abab7ab5200/pkg/proxy/iptables/proxier.go#L1304

[7] Customize the Parameters of Control Plane Components in ACK Pro Clusters: https://www.alibabacloud.com/help/en/ack/ack-edge/user-guide/customize-ack-pro-control-plane-component-parameters-1693463976408
