By John Hua, Alibaba Cloud Tech Share Author. Tech Share is Alibaba Cloud's incentive program to encourage the sharing of technical knowledge and best practices within the cloud community.

This article discusses the technical feasibility of building immutable infrastructure on Alibaba Cloud at multiple layers. Examples along the way set the context for what immutable infrastructure is and why it matters.

In a traditional cluster environment, engineers and administrators SSH into servers ad hoc, installing packages or tweaking configuration server by server. Applications are deployed manually, dependencies are introduced accidentally, and configurations drift unpredictably. Sooner or later, these human factors lead to a catastrophe.

To reduce the inconsistencies that creep in during development, deployment, or operation, immutable infrastructure builds a series of isolated snapshots. A snapshot is never modified once it is built, and moving from one snapshot to the next can be automated as an atomic state transition, which eliminates unwanted intermediate states. If anything goes wrong, you can always roll back to the previous known-good state.
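The snapshot-and-switch idea can be sketched in a few lines of Python. This is only an illustration under assumptions: the directory layout (`snapshots/`, a `current` symlink) and the function names are hypothetical, and the atomic swap relies on POSIX rename semantics.

```python
import os
import tempfile


def publish(root, name, files):
    """Write a new snapshot directory; existing snapshots are never touched."""
    snapshot = os.path.join(root, "snapshots", name)
    os.makedirs(snapshot)  # raises FileExistsError if the snapshot already exists
    for filename, content in files.items():
        with open(os.path.join(snapshot, filename), "w") as handle:
            handle.write(content)
    return snapshot


def activate(root, name):
    """Atomically repoint the 'current' symlink at the named snapshot."""
    target = os.path.join("snapshots", name)  # relative to the link's directory
    temporary = os.path.join(root, "current.tmp")
    if os.path.lexists(temporary):
        os.remove(temporary)
    os.symlink(target, temporary)
    # os.replace maps to rename(2) on POSIX, which swaps the link in one step
    os.replace(temporary, os.path.join(root, "current"))


def read_current(root, filename):
    """Read a file through the 'current' link, whichever snapshot it points at."""
    with open(os.path.join(root, "current", filename)) as handle:
        return handle.read()
```

Rolling back is then just another call to `activate` with the name of the previous snapshot, for example `activate(root, "v1")` after `v2` misbehaves.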

In a cloud-based ecosystem, the logic can be summarized as a chain: Consistency => Portability => Automatability => Scalability. Immutable infrastructure may be the best model for achieving consistency, which is the cornerstone of this chain.

As a paradigm rather than a concrete technology, immutable infrastructure can be approached and implemented in different ways. In this article, we group the approaches into four layers: application platform, file system, package management, and provisioner.

Application platform here can refer to specific runtimes such as Java or Python, or to generic ones such as container technologies. In this section we only discuss Docker, given its popularity and relevance.

Immutability is the main principle of container technologies: once a Docker image is built, it never changes. Any change that is really required must be built into a new image and encapsulated in a separate image layer. This forces every application environment to be identical and portable.
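To make the "a change means a new image" rule concrete, here is a simplified model in Python. It is not Docker's actual image format: the function names are hypothetical, and the assumption is simply that an image is identified by a hash over its ordered layer digests.

```python
import hashlib


def digest(data):
    """Return the SHA-256 hex digest of a bytes object."""
    return hashlib.sha256(data).hexdigest()


def build_image(layers):
    """Return an immutable image: an ID plus a tuple of layer digests."""
    layer_digests = tuple(digest(layer) for layer in layers)
    image_id = digest("\n".join(layer_digests).encode("ascii"))
    return image_id, layer_digests


def extend_image(image, new_layer):
    """Build a NEW image with one more layer; the original is left untouched."""
    _, layer_digests = image
    combined = layer_digests + (digest(new_layer),)
    return digest("\n".join(combined).encode("ascii")), combined
```

Because the ID is derived from the content, building the same layers twice yields the same image, while any extra layer yields a different ID and leaves the base image shareable.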

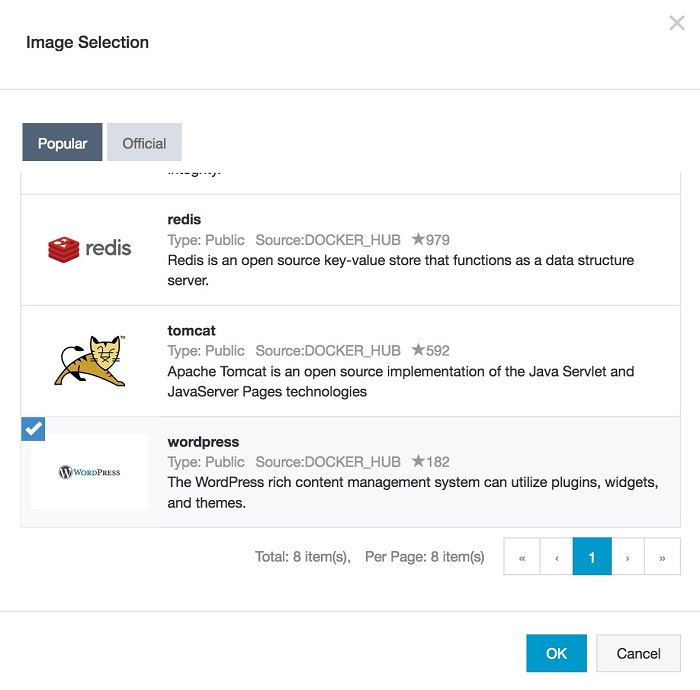

Container Service is ready to use out of the box on Alibaba Cloud, so there is little to set up yourself. Once a hosting node and a VPC are enabled, many commonly used application platforms, including data stores such as MongoDB and MySQL and web platforms such as nginx and PHP, are ready to run your applications.

Besides, you can always customize your own environment by editing the Dockerfile, which describes how the Docker image is built.

While Docker and other container technologies provide immutability for the application runtime, they cannot cover every scenario. At the file system layer, Docker uses a union file system driver such as OverlayFS, whose writable layer is not persistent and therefore only suits stateless, volatile applications. Although this is regarded as a best practice for microservices-based architectures, we cannot assume it is best for all applications, especially legacy ones. In this section we introduce OSTree, which works in Linux user space as an abstraction tier on top of any existing file system, with almost no dependencies.

Under OSTree's root directory, only /etc and /var are writable, which usually map to configuration and application directories. We will set up an experiment environment on an Alibaba Cloud Elastic Compute Service (ECS) instance running CentOS 7.3, so that we can walk through a simple example step by step.

In this example, we assume we have an application running at /var/demo/demo.py with its configuration loaded from /etc/demo/demo.conf. We want to test whether switching the configuration really takes effect while staying agnostic to the application itself.

To keep the case simple, the example is as abstract as possible. /var/demo/demo.py reads the environment name and port from /etc/demo/demo.conf, starts an HTTP server on that port, and prints the environment name.
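The listing that follows targets Python 2, the default interpreter on CentOS 7. For readers on Python 3, where the relevant standard-library modules are named `configparser`, `http.server`, and `socketserver`, an equivalent sketch might look like this. The function names are our own, and the server is only started when `main()` is called.

```python
import configparser
import http.server
import socketserver


def load_settings(path):
    """Read the environment name and port from the [main] section."""
    config = configparser.RawConfigParser()
    if not config.read(path):  # read() returns the list of files it parsed
        raise FileNotFoundError(path)
    return config.get("main", "env"), config.getint("main", "port")


def build_server(port):
    """Create (but do not start) an HTTP server bound to the given port."""
    handler = http.server.SimpleHTTPRequestHandler
    return socketserver.TCPServer(("", port), handler)


def main(path="/etc/demo/demo.conf"):
    """Load the configuration and serve until interrupted."""
    env, port = load_settings(path)
    with build_server(port) as httpd:
        print("serving %s at port %d" % (env, port))
        httpd.serve_forever()
```

Calling `main()` at the bottom of the file reproduces the behavior of the original script. Keeping the parsing in `load_settings` also makes it easy to check a configuration file without opening a socket.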

/etc/demo/demo.conf:

    [main]
    env = dev
    port = 8000

/var/demo/demo.py:

    #!/usr/bin/env python
    import SimpleHTTPServer
    import SocketServer
    import ConfigParser

    # Load the environment name and port from the configuration file
    config = ConfigParser.RawConfigParser()
    config.read('/etc/demo/demo.conf')
    env = config.get('main', 'env')
    port = config.getint('main', 'port')

    # Serve the current directory over HTTP on the configured port
    Handler = SimpleHTTPServer.SimpleHTTPRequestHandler
    httpd = SocketServer.TCPServer(("", port), Handler)
    print("serving %s at port %d" % (env, port))
    httpd.serve_forever()

First, install the required package:

    $ yum install -y ostree

Then initialize a new ostree repository:

    $ mkdir -p /etc/demo && cd /etc/demo
    $ ostree --repo=.demo init

The repository is named .demo, with a leading dot, because we want to hide the ostree internal directory.

Commit the first version of demo.conf to a new branch, dev:

    $ ostree --repo=.demo commit --branch=dev ./
    8d7cefc677593c16ecd9eada965fb1ac53d6ae96a6af9fef49a22d164a06e6e2

List the existing branches:

    $ ostree --repo=.demo refs
    dev

List the directory within branch dev:

    $ ostree --repo=.demo ls dev
    d00755 0 0 0 /
    -00644 0 0 29 /demo.conf
    d00755 0 0 0 /.rdemo

Display the content of the first version of demo.conf within branch dev to make sure it was committed properly:

    $ ostree --repo=.demo cat dev /demo.conf
    [main]
    env = dev
    port = 8000

Test /var/demo/demo.py:

    $ chmod +x /var/demo/demo.py
    $ /var/demo/demo.py
    serving dev at port 8000

Modify demo.conf for the uat environment:

    $ cat /etc/demo/demo.conf
    [main]
    env = uat
    port = 80

Commit the working directory to branch uat:

    $ ostree --repo=.demo commit --branch=uat ./
    3aca353878a754a887a0308ff5ca6f8ad86057a2175e3f0b194ff51c5e471116

Run /var/demo/demo.py again:

    $ /var/demo/demo.py
    serving uat at port 80

Now we see that the change takes effect. In fact, once a file has been committed, we can always print its content from any branch.

Print demo.conf on branch dev:

    $ ostree --repo=.demo cat dev /demo.conf
    [main]
    env = dev
    port = 8000

Print demo.conf on branch uat:

    $ ostree --repo=.demo cat uat /demo.conf
    [main]
    env = uat
    port = 80

OSTree fundamentally gives you the power to move atomically from one deliberately defined state to another with one simple command. This eliminates the risks of an online upgrade and safeguards your data from being modified by mistake.
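The commit, refs, and cat steps above can be mimicked with a toy content-addressed store. This is a sketch of the general idea only, not OSTree's real object format; the class `TinyRepo` and its JSON manifest are assumptions made for illustration.

```python
import hashlib
import json


class TinyRepo:
    """Toy content-addressed store: objects keyed by SHA-256, branches as refs."""

    def __init__(self):
        self.objects = {}  # checksum -> bytes
        self.refs = {}     # branch name -> commit checksum

    def _store(self, data):
        checksum = hashlib.sha256(data).hexdigest()
        self.objects.setdefault(checksum, data)  # existing objects are never overwritten
        return checksum

    def commit(self, branch, tree):
        """Snapshot a {path: text} mapping and point the branch at it."""
        manifest = {path: self._store(text.encode("utf-8")) for path, text in tree.items()}
        commit_id = self._store(json.dumps(manifest, sort_keys=True).encode("utf-8"))
        self.refs[branch] = commit_id
        return commit_id

    def cat(self, branch, path):
        """Return the text of a file as recorded on the given branch."""
        manifest = json.loads(self.objects[self.refs[branch]].decode("utf-8"))
        return self.objects[manifest[path]].decode("utf-8")
```

Committing the dev and uat versions of demo.conf to two branches leaves both retrievable with `cat`, because a commit only ever adds objects and moves a ref. Nothing already stored is rewritten.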

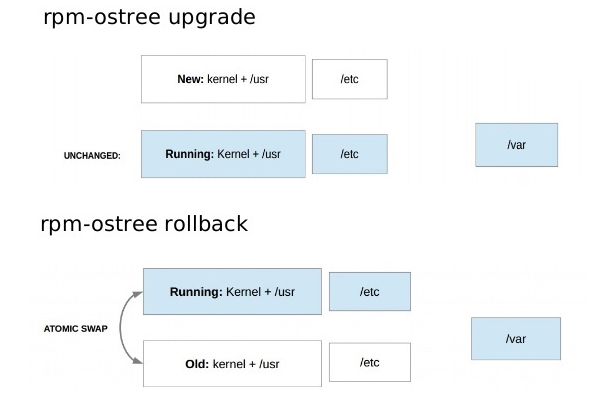

The diagram shows how OSTree rotates the file system to switch the deployed configurations and applications between old and new.

Like Git, OSTree stores the repository's own configuration under a specified directory. On upgrade, OSTree performs a basic 3-way diff and applies any local changes to the new copy, while leaving the old one untouched. Although it is compatible with all kinds of file systems, OSTree can take advantage of native features when installed on top of certain ones, such as Btrfs.
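The 3-way merge can be illustrated at the level of configuration keys. Note the assumption: OSTree itself merges files under /etc, whereas this hypothetical helper merges key/value pairs, purely to show how local changes are carried onto a new default.

```python
def three_way_merge(old_default, local, new_default):
    """Merge {key: value} configs: local edits win, untouched keys follow the new default."""
    merged = dict(new_default)  # start from a copy, so no input is modified
    for key in set(old_default) | set(local):
        before = old_default.get(key)
        current = local.get(key)
        if current == before:
            continue  # not changed locally: keep whatever the new default says
        if current is None:
            merged.pop(key, None)  # deleted locally: keep it deleted
        else:
            merged[key] = current  # added or modified locally: carry it forward
    return merged
```

For example, if the old default had `port = 8000`, the administrator changed it to `80`, and the new default adds a `workers` key, the merged result keeps `port = 80` and gains `workers`, while the old default stays available for rollback.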

If you are interested in the anatomy of OSTree, see https://ostree.readthedocs.io/en/latest/manual/repo/ for more details.

OSTree enables atomic upgrades of complete file system trees, and rpm-ostree builds on it to provide package management at a higher level. If you are a system administrator, operating system patching is a struggle. Take OpenSSL as an example: without the latest patch, your infrastructure may be exposed to security vulnerabilities such as the Heartbleed bug, yet with the latest patch you might run into a wide range of compatibility issues. With rpm-ostree, you can upgrade and test with confidence, because reverting the package system is painless.
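rpm-ostree records each change to the package set as a separate deployment and only switches to it on reboot, which is what makes reverting cheap. A toy model of that behavior, assuming a list in which the first entry is the default for the next boot (the class and method names are hypothetical, and the real tool tracks far more state):

```python
class DeploymentList:
    """Toy model: index 0 is the default for the next boot; 'booted' changes only on reboot."""

    def __init__(self, initial):
        self.deployments = [initial]
        self.booted = initial

    def stage(self, new_deployment):
        """Put a new deployment in front, keeping the booted one as the fallback."""
        self.deployments = [new_deployment, self.booted]

    def rollback(self):
        """Swap the first two deployments so the other one becomes the default."""
        if len(self.deployments) < 2:
            raise RuntimeError("no previous deployment to roll back to")
        self.deployments[0], self.deployments[1] = self.deployments[1], self.deployments[0]

    def reboot(self):
        """Boot into whichever deployment is currently first."""
        self.booted = self.deployments[0]
```

In this model, staging an upgrade or rolling back never touches the running system. The change becomes visible only after `reboot()`, and the previous deployment stays in the list as a fallback.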

We will reuse our previous ECS instance for testing.

rpm-ostree sees any change to the package system as a deployment. To show all deployments, run:

    $ rpm-ostree status

Perform a new deployment, similar to yum update:

    $ rpm-ostree upgrade

You will be reminded to reboot the operating system for the change to take effect. Because rpm-ostree aims to take over the local package system and keep it always resettable, every modification requires a systemctl reboot to reload the init system and the package management system.

    $ systemctl reboot

Next we test the installation of openssl. Before we start, print the current openssl version:

    $ openssl version
    OpenSSL 1.0.1e-fips 11 Feb 2013
    $ rpm-ostree install openssl
    Checking out tree 67d659b... done
    Inactive requests:
    openssl (already provided by openssl-1:1.0.2k-12.el7.x86_64)
    Enabled rpm-md repositories: base updates extras
    Updating metadata for 'base': [=============] 100%
    rpm-md repo 'base'; generated: 2018-11-25 16:00:34
    Updating metadata for 'updates': [=============] 100%
    rpm-md repo 'updates'; generated: 2019-01-24 13:56:44
    Updating metadata for 'extras': [=============] 100%
    rpm-md repo 'extras'; generated: 2018-12-10 16:00:03
    Importing metadata [=============] 100%
    Resolving dependencies... done
    Checking out packages (2/2) [=============] 100%
    Running pre scripts... 0 done
    Running post scripts... 1 done
    Writing rpmdb... done
    Writing OSTree commit... done
    Copying /etc changes: 22 modified, 8 removed, 41 added
    Transaction complete; bootconfig swap: no; deployment count change: 0
    Freed: 39.0 MB (pkgcache branches: 2)
    Run "systemctl reboot" to start a reboot
    $ openssl version
    OpenSSL 1.0.2k-fips 26 Jan 2017

Roll back to the previous state and check whether the openssl change is reverted:

    $ rpm-ostree rollback
    Moving '67d659bc257b7d47f638f9d7d2146401b85eec7c7eef0122196d72c70553ae66.0' to be first deployment
    Transaction complete; bootconfig swap: no; deployment count change: 0
    Removed:
    openssl-1.0.2k-12.el7.x86_64
    Run "systemctl reboot" to start a reboot
    $ systemctl reboot
    $ openssl version
    OpenSSL 1.0.1e-fips 11 Feb 2013

Generally, a provisioner may refer to a host in a hypervisor pool, a compute node in OpenStack, or a worker node in Kubernetes. These are the infrastructure under the infrastructure, the host of the host, or the machine that builds machines.

Ideally, this provisioner can provide immutable infrastructure as a platform while being immutable itself. Here we can refer to Atomic Host, which is generally available on Fedora, CentOS, and RHEL, whether the compute resources are cloud instances such as EC2, hypervisors, or bare-metal machines. Atomic Host also keeps itself immutable by containerizing its system services with runC, using ostree to version-control its file and package systems, and enabling kernel namespaces that provide isolation and security for the container host.

You might ask: system services usually need direct access to the host, which clearly violates the principles of container technology, so how can they be containerized? The answer is that Atomic Host introduces super-privileged containers, which have extra privileges to access or modify the host system. These system containers, rather than system services, are set up in an install step to be managed by systemd, so they start automatically when the system boots.
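In practice, a super-privileged container is an ordinary container started with flags that share host namespaces and paths. The sketch below assembles such a `docker run` command line in Python. The helper name is hypothetical, and the flags mirror those in the rsyslog example in this section.

```python
def super_privileged_run(name, image, host_paths):
    """Assemble a `docker run` argument list that shares host namespaces with the container."""
    command = [
        "docker", "run", "-d",
        "--privileged",   # lift the default capability and device restrictions
        "--name", name,
        "--net=host",     # share the host network namespace
        "--pid=host",     # share the host process namespace
    ]
    for path in host_paths:
        # bind-mount each host path at the same location inside the container
        command += ["-v", "%s:%s" % (path, path)]
    command.append(image)
    return command
```

The returned list can be passed to `subprocess.run` on a machine where Docker is installed. Building it as a list rather than a string avoids shell quoting problems.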

Let us look at an example with rsyslog, which extends the basic syslog protocol with more powerful filtering capabilities and flexible configuration.

    $ atomic install registry.access.redhat.com/rhel7/rsyslog
    Pulling registry.access.redhat.com/rhel7/rsyslog:latest ...
    …
    Creating directory at /host//etc/pki/rsyslog
    Installing file at /host//etc/rsyslog.conf
    Installing file at /host//etc/sysconfig/rsyslog
    Installing file at /host//etc/logrotate.d/syslog
    $ atomic run registry.access.redhat.com/rhel7/rsyslog
    docker run -d --privileged --name rsyslog --net=host --pid=host -v /etc/pki/rsyslog:/etc/pki/rsyslog ...

To use Atomic Host on Alibaba Cloud ECS, download the qcow2 version of the image from CentOS Atomic Host or Fedora Atomic Host, then follow Import custom images to import the on-premises image file into your ECS environment, where it can be used to create ECS instances or change system disks.

In this article, we briefly introduced how Docker, ostree, rpm-ostree, and Atomic Host can help you build immutable infrastructure layers on Alibaba Cloud, from application platform and file system to package management and provisioner. Making everything consistent lowers both the complexity of the system and the risk introduced by human factors.
