Whenever a database serves a large number of users, it is not unusual to come across hotspots in the database. In Redis, a key in a partition that is accessed very frequently is known as a hotspot key. In this article, we will discuss the common causes of hotspot keys, evaluate the impact of this problem, and propose effective solutions to handle hotspot keys.

Reason 1: Users consume far more data than is produced, as with hot items, hot news, hot reviews, and celebrity live broadcasts.

Unexpected events in daily work and life can trigger this. For example, when popular commodities are discounted and promoted on Singles' Day (Double 11), a single item may be browsed or purchased tens of thousands of times. Demand for that item spikes, and this causes a hotspot issue.

Similarly, hot news, hot comments, and celebrity live broadcasts are published once and then viewed by a large number of users. These typical read-heavy, write-light scenarios also create hotspot issues.

Reason 2: The number of request slices exceeds the performance threshold of a single server.

Data on the server is normally split into slices, so all requests for a given key are sent to the one server that holds it. When the access traffic for that key exceeds the performance threshold of the server, the hotspot key problem occurs.

As mentioned earlier, when the number of hotspot key requests on a server exceeds the upper limit of the network adapter on the server, the server stops providing other services due to the excessive concentration of traffic.

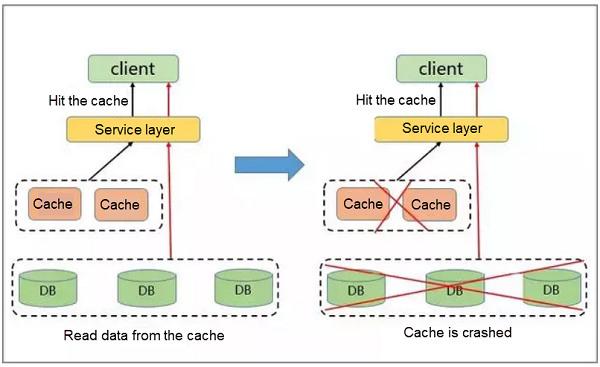

If the distribution of hotspots is too dense, a large number of hotspot keys are cached, exhausting the cache capacity and crashing the sharding service of the cache.

After the cache service crashes, newly generated requests fall through to the backend database. Because this database performs far worse than the cache, it is easily exhausted by a large number of requests, leading to a service avalanche and a dramatic drop in performance.

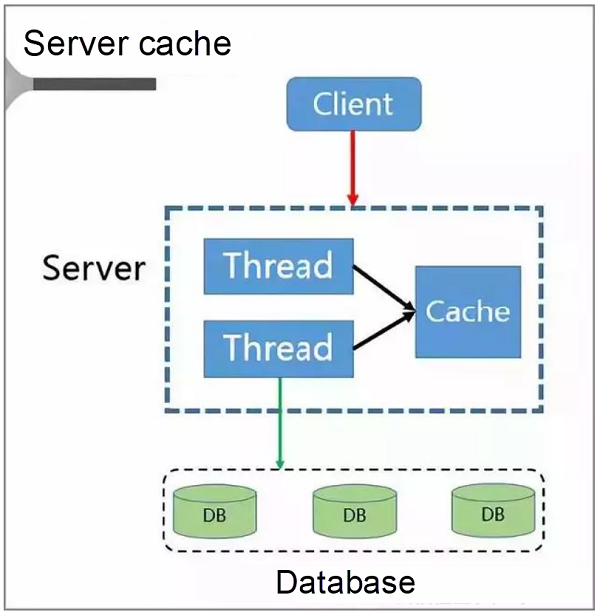

A common way to improve performance is to rework the server or the client.

The client sends the requests to the server. Because the server is a multi-threaded service, it can keep a local cache space that is managed with an LRU policy.

When the server becomes congested, it returns the requests directly rather than forwarding them to the database. Only after the congestion clears does the server send the client's requests to the database and write the data back to the cache.

The cache is accessed and rebuilt.
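The flow above can be sketched as follows. This is a minimal illustration, not the article's implementation: the class `LocalLRUCache`, the function `handle_read`, the `congested` flag, and the `load_from_db` callback are all hypothetical names, and returning `None` under congestion is an assumption about what "returning the request directly" means.

```python
from collections import OrderedDict


class LocalLRUCache:
    """A tiny in-process LRU cache (illustrative; capacity is an assumption)."""

    def __init__(self, capacity):
        self.capacity = capacity
        self._data = OrderedDict()

    def get(self, key):
        if key not in self._data:
            return None
        self._data.move_to_end(key)  # mark as most recently used
        return self._data[key]

    def put(self, key, value):
        self._data[key] = value
        self._data.move_to_end(key)
        if len(self._data) > self.capacity:
            self._data.popitem(last=False)  # evict the least recently used key


def handle_read(key, cache, load_from_db, congested):
    """Serve from the local cache; go to the database only when not congested."""
    value = cache.get(key)
    if value is not None:
        return value
    if congested:
        return None  # return directly instead of forwarding to the database
    value = load_from_db(key)
    cache.put(key, value)  # rebuild the cache entry
    return value
```

Note that in this sketch a cached value of `None` is indistinguishable from a miss, which is acceptable for illustration but would need a sentinel in real code.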

However, this solution also has the following problems:

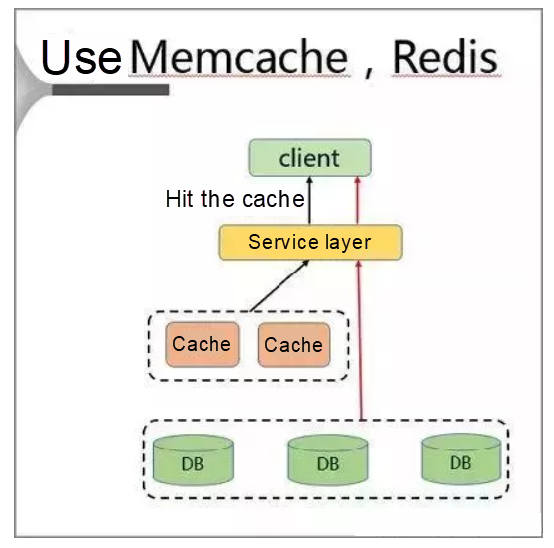

In this solution, a separate cache is deployed on the client to resolve the hotspot key problem.

When adopting this solution, the client first accesses the service layer and then the cache layer on the same host.
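A minimal sketch of such a client-side cache is shown below. The class `ClientLocalCache` and the `fetch_remote` callback are hypothetical, and the time-to-live expiry is an assumption added here to bound staleness; the article does not specify how the client cache is invalidated.

```python
import time


class ClientLocalCache:
    """Client-side cache in front of a remote lookup (hypothetical sketch)."""

    def __init__(self, fetch_remote, ttl_seconds=1.0, clock=time.monotonic):
        self._fetch_remote = fetch_remote  # called on a miss, e.g. a Redis GET
        self._ttl = ttl_seconds            # assumed expiry to limit stale reads
        self._clock = clock
        self._entries = {}                 # key -> (value, expiry time)

    def get(self, key):
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[1] > now:
            return entry[0]                # served locally, no network hop
        value = self._fetch_remote(key)
        self._entries[key] = (value, now + self._ttl)
        return value
```

The dictionary here is unbounded, so a real client would also need a size limit, for example an LRU policy.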

This solution offers the following advantages: nearby access, high speeds, and zero bandwidth limitation. However, it also suffers from the following disadvantages:

Using the local cache incurs the following problems:

If traditional hotspot solutions are all defective, how can the hotspot problem be resolved?

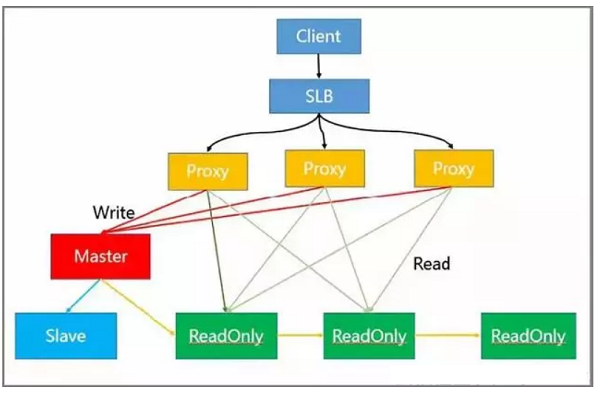

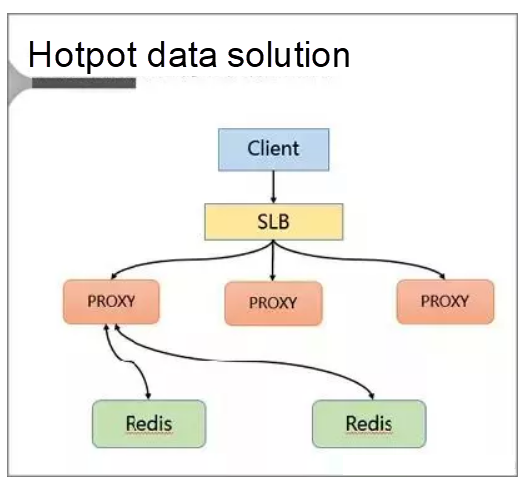

This solution resolves the hotspot reading problem. The following describes the functions of different nodes in the architecture:

In practice, the client sends requests to SLB, which distributes these requests to multiple proxies. Then, the proxies identify and classify the requests and further distribute them.

For example, a proxy sends all write requests to the master node and all read requests to the read-only node.

The read-only nodes in this module can also be scaled out, which effectively resolves the hotspot read problem.

Read/write separation also has several other advantages: read capacity for hotspots can be expanded flexibly, a large number of hotspot keys can be stored, and the solution is client-friendly.
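The routing rule described above might look like the following sketch. The class `ReadWriteRouter`, the `WRITE_COMMANDS` set, and the round-robin choice of read-only node are assumptions made for illustration; the article does not say how the proxy picks a replica.

```python
import itertools

# Illustrative subset of Redis write commands; a real proxy would cover all of them.
WRITE_COMMANDS = {"SET", "DEL", "INCR", "EXPIRE"}


class ReadWriteRouter:
    """Sends writes to the master and spreads reads over read-only nodes."""

    def __init__(self, master, replicas):
        self.master = master
        self.replicas = list(replicas)
        self._next = itertools.cycle(self.replicas)

    def add_replica(self, replica):
        # Scaling out reads: add a node and restart the round-robin cycle.
        self.replicas.append(replica)
        self._next = itertools.cycle(self.replicas)

    def route(self, command):
        if command.upper() in WRITE_COMMANDS or not self.replicas:
            return self.master
        return next(self._next)
```

For example, `ReadWriteRouter("master", ["ro-1", "ro-2"]).route("SET")` returns `"master"`, while successive `route("GET")` calls alternate between the two read-only nodes.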

In this solution, hotspots are discovered and stored to resolve the hotspot key problem.

Specifically, the client accesses SLB, which distributes the requests to a proxy. Then, the proxy routes the requests to the backend Redis.

In addition, a cache is added on the server.

Specifically, a local cache is added to the proxy. This cache uses the LRU algorithm to cache hotspot data. Moreover, a hotspot data calculation module is added to the background database node to return the hotspot data.

The key benefits of the proxy architecture are:

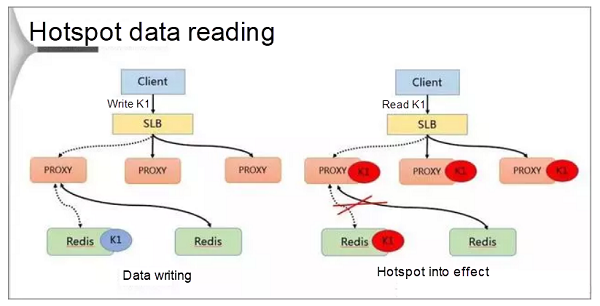

Hotspot key processing is divided into two jobs: writing and reading. During data writing, SLB receives data K1 and writes it to a Redis database through a proxy.

If K1 becomes a hotspot key after the calculation conducted by the background hotspot module, the proxy caches the hotspot. In this way, the client can directly access K1 the next time, bypassing Redis.

Finally, because the proxy layer can be scaled out horizontally, the capacity for serving hotspot data can be expanded along with it.
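The write and read paths above can be sketched as follows. Everything here is hypothetical: `FakeBackend` stands in for the Redis node together with its hotspot calculation module, the `is_hot` flag returned with each read is an assumed feedback mechanism, and dropping the cached copy on write is one possible choice rather than the article's stated behavior.

```python
from collections import OrderedDict


class FakeBackend:
    """Stand-in for a Redis node plus its hotspot module (hypothetical)."""

    def __init__(self, hot_threshold=3):
        self.store = {}
        self.hits = {}
        self.hot_threshold = hot_threshold

    def write(self, key, value):
        self.store[key] = value

    def read(self, key):
        self.hits[key] = self.hits.get(key, 0) + 1
        is_hot = self.hits[key] >= self.hot_threshold
        return self.store.get(key), is_hot  # value plus hotspot feedback


class HotspotProxy:
    """Proxy that keeps an LRU cache of keys the backend reports as hot."""

    def __init__(self, backend, capacity=128):
        self.backend = backend
        self.capacity = capacity
        self.hot_cache = OrderedDict()

    def write(self, key, value):
        self.backend.write(key, value)
        self.hot_cache.pop(key, None)  # assumption: drop the stale local copy

    def read(self, key):
        if key in self.hot_cache:
            self.hot_cache.move_to_end(key)
            return self.hot_cache[key]  # served by the proxy, bypassing Redis
        value, is_hot = self.backend.read(key)
        if is_hot:
            self.hot_cache[key] = value
            if len(self.hot_cache) > self.capacity:
                self.hot_cache.popitem(last=False)
        return value
```

With several proxies, each one holds its own copy of a hot key, so a write through one proxy does not invalidate the others. This is one reason such a design cannot guarantee full consistency.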

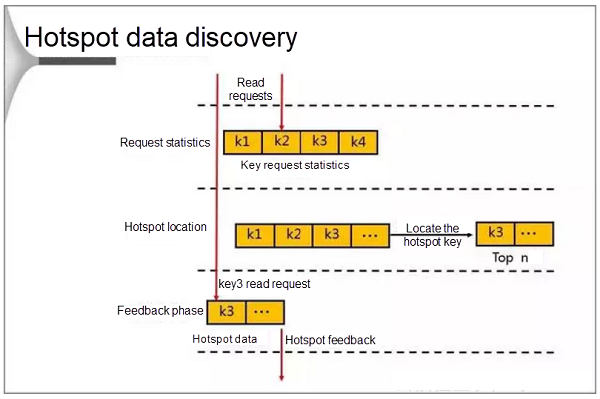

During the discovery, the database first counts the requests that occur in a cycle. When the number of requests reaches the threshold, the database locates the hotspot keys and stores them in an LRU list. When a client attempts to access data by sending a request to the proxy, Redis enters the feedback phase and marks the data if it finds that the target access point is a hotspot.
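A minimal sketch of this discovery step, assuming a simple per-cycle counter, is shown below. The class `HotspotDetector` and its parameters `threshold` and `max_hot_keys` are hypothetical; the article does not give concrete values or the actual counting method.

```python
from collections import Counter, OrderedDict


class HotspotDetector:
    """Counts requests per cycle and keeps hot keys in a bounded LRU list."""

    def __init__(self, threshold, max_hot_keys):
        self.threshold = threshold
        self.max_hot_keys = max_hot_keys
        self.counts = Counter()        # requests seen in the current cycle
        self.hot_keys = OrderedDict()  # used as an LRU list of hot keys

    def record(self, key):
        """Count one request; return True if the key is currently marked hot."""
        self.counts[key] += 1
        if self.counts[key] >= self.threshold:
            self.hot_keys[key] = True
            self.hot_keys.move_to_end(key)
            if len(self.hot_keys) > self.max_hot_keys:
                self.hot_keys.popitem(last=False)  # evict the coldest hot key
        elif key in self.hot_keys:
            self.hot_keys.move_to_end(key)  # still in use, keep it recent
        return key in self.hot_keys

    def end_cycle(self):
        """Start a new counting cycle; previously found hot keys are kept."""
        self.counts.clear()
```

The boolean returned by `record` plays the role of the feedback phase: the node tells the proxy that the requested key is a hotspot so the proxy can cache it.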

The database uses the following methods to calculate the hotspots:

From the preceding analysis, you can see that both solutions are improvements over traditional solutions in resolving the hotspot key problem. In addition, both the read/write separation and the hotspot data solutions support flexible capacity expansion and are transparent to the client, though they cannot ensure 100% data consistency.

The read/write separation solution supports the storage of a large hotspot data volume, while the proxy-based hotspot data solution is more cost-effective.
