

By Chen Xu, Quan Li, Junfeng Ge, Jinyang Gao, Xiaoyong Yang, Changhua Pei, Fei Sun, Jian Wu, Hanxiao Sun, and Wenwu Ou

In recommendation systems, the discriminative power of the features determines the capacity of models and algorithms. To keep offline training and online serving consistent, we usually use only features that are available in both environments. However, this consistency requirement, in turn, excludes some discriminative features. For example, when we estimate the conversion rate (CVR), the probability that a user would purchase an item given that the user clicked it, features such as the dwell time on the item details page are informative. However, CVR prediction must be performed for online ranking before the click happens, so such post-event features cannot be obtained during serving.

We define features that are discriminative but available only during training as privileged features. A straightforward way to use them is multi-task learning (MTL), which predicts each privileged feature with an additional task. However, MTL does not guarantee that each auxiliary task is harmless: predicting the privileged features can hurt the learning of the original model. More importantly, such harm is likely, because estimating the privileged features may be more difficult than the original problem. From a practical point of view, when dozens of privileged features are used at once, tuning all the tasks becomes a challenge.

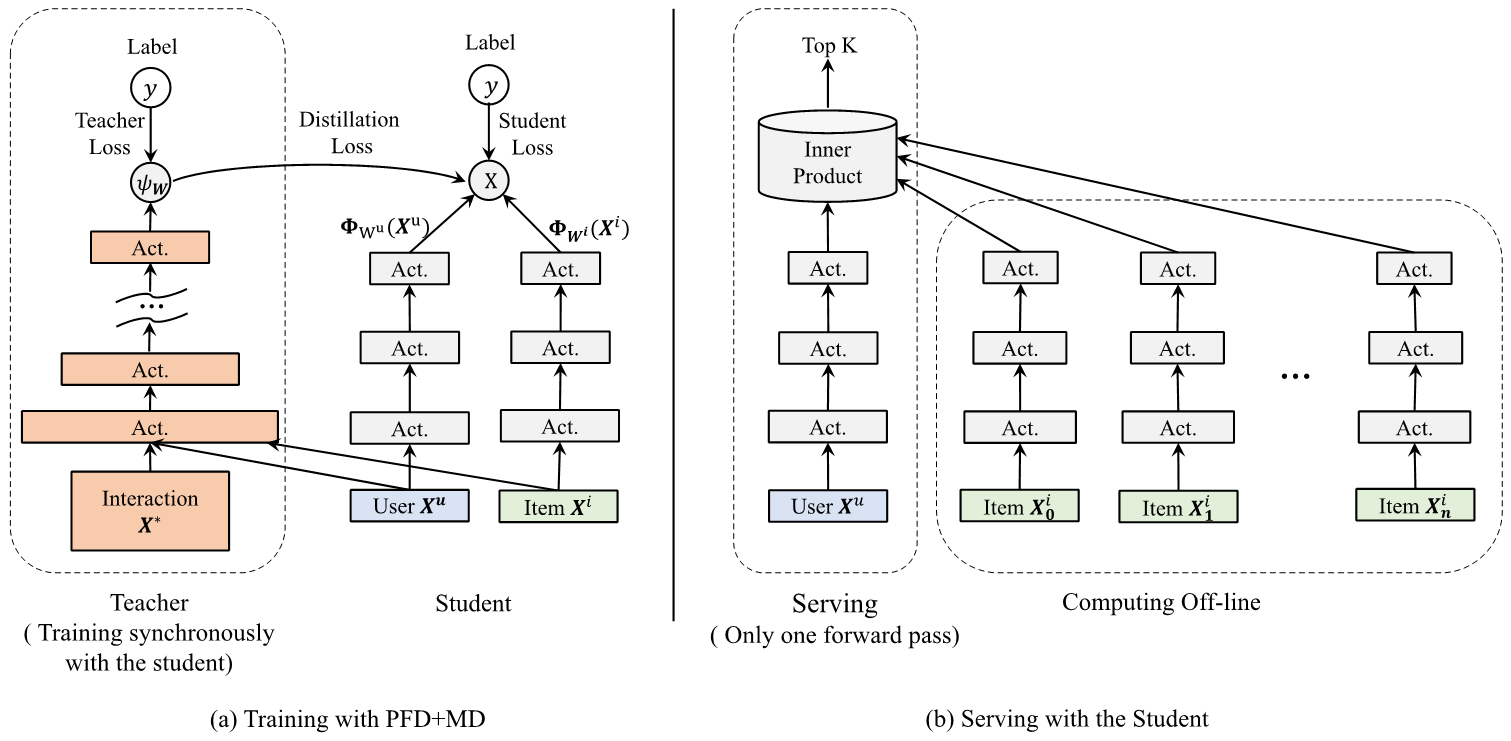

Inspired by learning using privileged information (LUPI), we propose privileged features distillation (PFD) to take advantage of such features. During offline training, we train two models: a student and a teacher. The student model is the same as the original model. The teacher model processes all features, including the privileged ones, and is therefore more accurate. Knowledge distilled from the teacher, namely the output of its last layer, is transferred to the student to supervise its training, which further improves the student's performance. During online serving, only the student is deployed; it takes only non-privileged features as input, which guarantees consistency with training. Compared with MTL, PFD has two main advantages. First, the privileged features are combined in a way that is better suited to the prediction task; generally, adding more privileged features leads to more accurate predictions. Second, PFD introduces only one extra distillation loss regardless of the number of privileged features, which makes it much easier to balance.

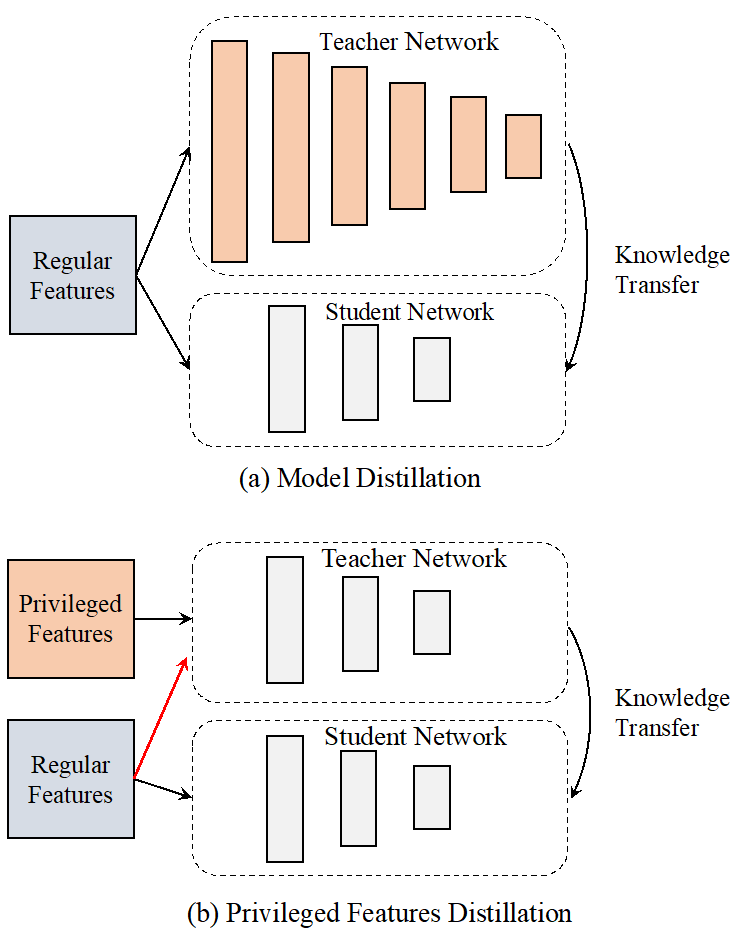

PFD differs from the commonly used model distillation (MD). In MD, the teacher and the student process the same inputs, and the teacher uses a model with more capacity than the student; for example, a deeper teacher network can instruct a shallower student. In PFD, by contrast, the teacher and the student use the same model but differ in their inputs. PFD also differs from the original LUPI in that the teacher network in PFD additionally processes the regular features. Figure 1 illustrates the differences.

Figure 1. Illustration of MD and of PFD proposed in this work. In MD, knowledge is distilled from a more complex model. In PFD, knowledge is distilled from both the privileged and the regular features (indicated by the red arrow). PFD also differs from LUPI, where the teacher processes only the privileged features.

We conducted PFD experiments on two fundamental prediction tasks in Taobao recommendations: CTR prediction at coarse-grained ranking and CVR prediction at fine-grained ranking. In the CTR task, distilling from a more powerful MLP model and from interacted features, which are prohibited at coarse-grained ranking because of the cost of constructing them online and the resulting inference latency, improves the click metric by 5.0%. In the CVR task, distilling post-event features such as dwell time improves the conversion metric by 2.3% without a drop in the click metric.

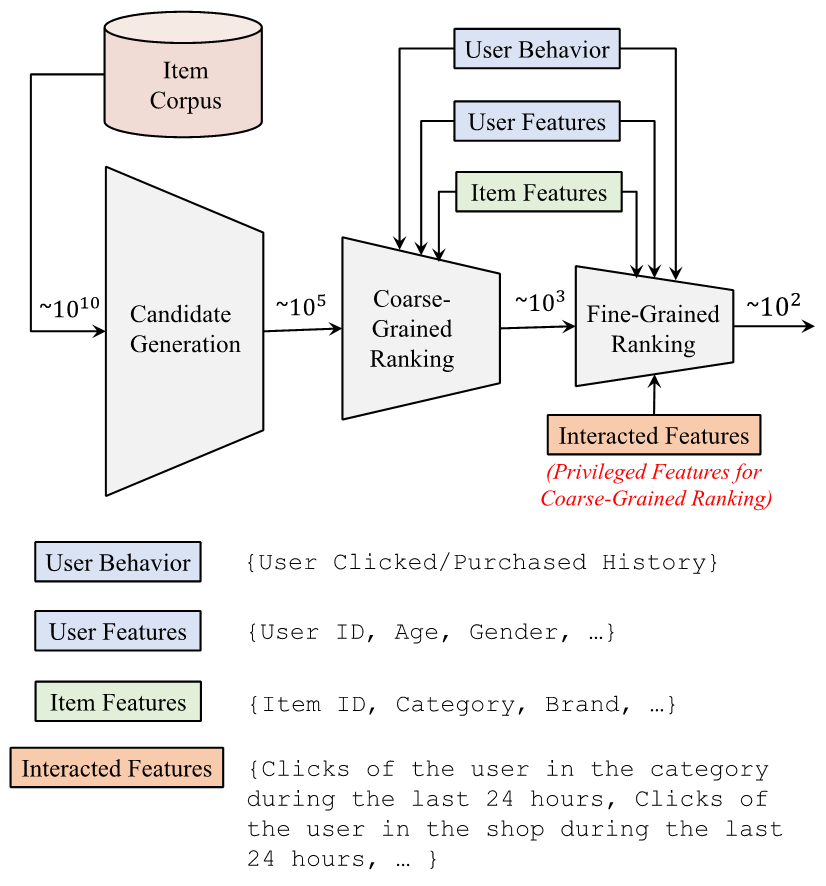

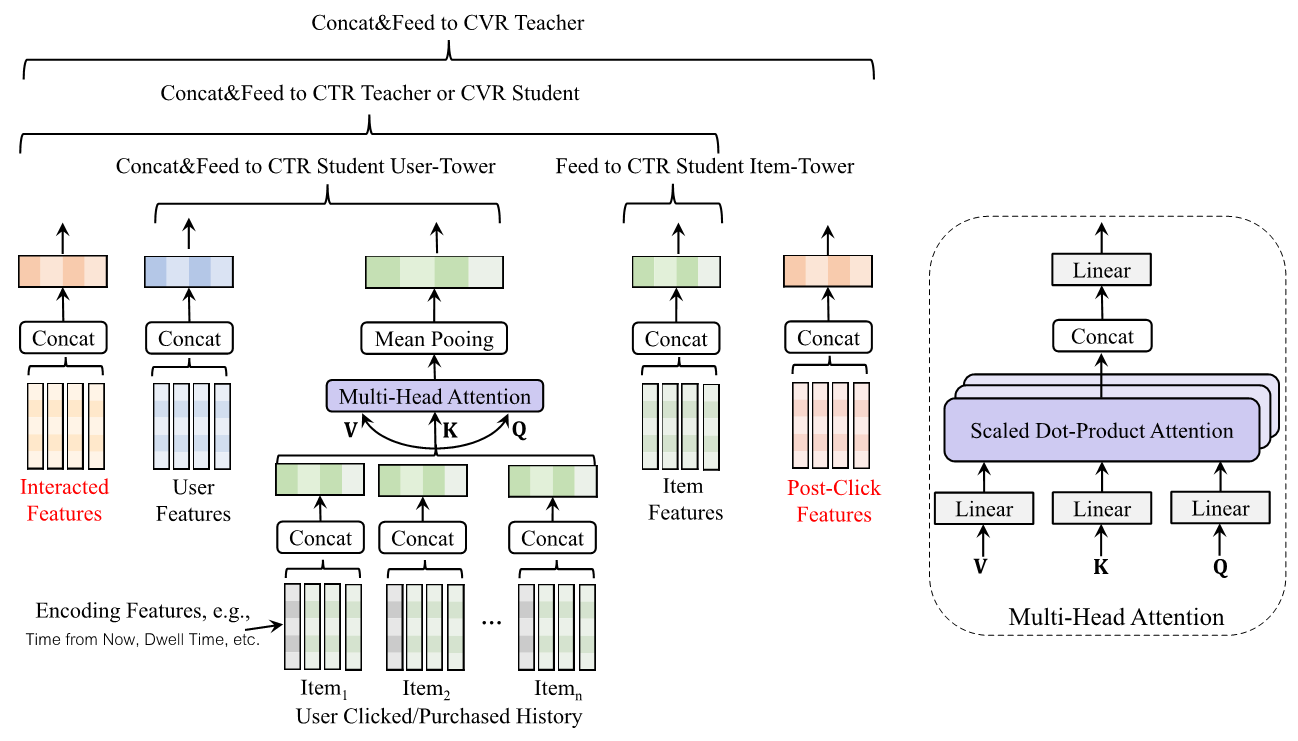

To clarify the privileged features used in this work, Figure 2 gives an overview of Taobao recommendations. We adopt a cascaded learning framework: as the number of items to be scored decreases, the model becomes more complex. In this work, all features are one-hot encoded and mapped to embeddings (continuous features are first discretized into bins). Based on user behavior sequences, a recurrent neural network (RNN) or a Transformer is usually used to model the user's long- and short-term interests.
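The discretize-then-embed step can be sketched as follows. This is a minimal illustration assuming a single continuous feature (a hypothetical item price) with hand-picked bin boundaries; the names `bucketize`, `PRICE_BOUNDARIES`, `PRICE_TABLE`, and `embed_price` are illustrative, and the production binning scheme is not described in this article.

```python
import bisect
import random

def bucketize(value, boundaries):
    """Bin index of `value`: 0 if value < boundaries[0], len(boundaries) if value >= boundaries[-1]."""
    return bisect.bisect_right(boundaries, value)

def make_embedding_table(num_rows, dim, seed=0):
    """One trainable vector per bin; the small random init values are placeholders."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 0.01) for _ in range(dim)] for _ in range(num_rows)]

# Hypothetical price boundaries; not taken from the paper.
PRICE_BOUNDARIES = [10.0, 50.0, 100.0, 500.0, 1000.0]
# len(boundaries) + 1 bins cover the whole real line.
PRICE_TABLE = make_embedding_table(len(PRICE_BOUNDARIES) + 1, dim=4)

def embed_price(price):
    # Row lookup by bin index == one-hot(bin) multiplied by the embedding matrix.
    return PRICE_TABLE[bucketize(price, PRICE_BOUNDARIES)]
```

Looking up a row directly avoids materializing the one-hot vector, which is why embedding tables are stored as plain matrices indexed by bin or ID.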

Figure 2. Overview of Taobao recommendations. We adopt a cascaded learning framework to select/rank items before presenting them to users. At coarse-grained ranking, the interacted features, although they are discriminative, are prohibited as they greatly increase the latency at online serving. In this work, interacted features are used as privileged features for coarse-grained ranking.

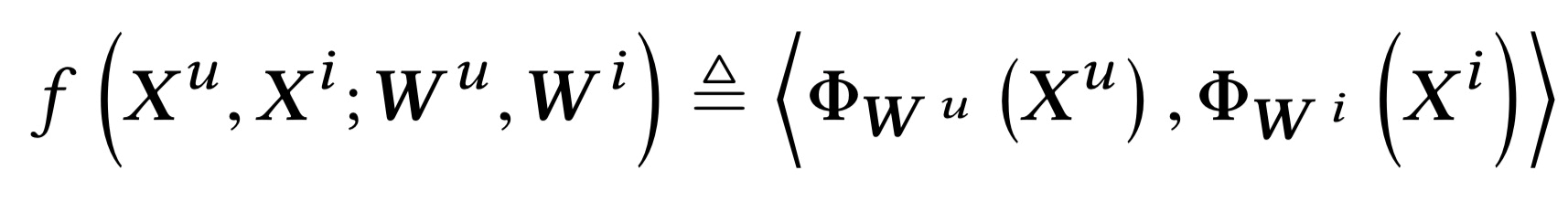

We adopt two ranking stages: coarse-grained ranking and fine-grained ranking. In the coarse-grained ranking stage, the complexity of the prediction model is strictly restricted, so we use an inner product model to score items:

$$f(X_u, X_i; W_u, W_i) = \Phi_{W_u}(X_u)^{\top}\, \Phi_{W_i}(X_i) \tag{1}$$

where u and i denote the user and the item, respectively; X denotes the input; and W denotes the parameters of the non-linear mapping Φ, which is a multi-layer perceptron (MLP). Because the user side and the item side are separated in the preceding equation, we can compute the mappings of all items offline in advance and index the results in memory for serving. When a request arrives, we only need to execute one forward pass to obtain the user mapping and then compute its inner product with all candidates, which is extremely efficient. Figure 4 illustrates the details.
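The serving path of the inner product model can be sketched in plain Python. This is a minimal illustration, not the production system: the towers are tiny randomly initialized MLPs, and `init_tower`, `tower`, `build_item_index`, and `serve` are hypothetical names. Item vectors are computed once offline; a request then costs one user-tower pass plus one inner product per candidate.

```python
import heapq
import math
import random

def init_tower(sizes, seed):
    """Random (weights, bias) per dense layer; weights[j] is the row for output unit j."""
    rng = random.Random(seed)
    return [
        ([[rng.gauss(0.0, 1.0 / math.sqrt(n_in)) for _ in range(n_in)] for _ in range(n_out)],
         [0.0] * n_out)
        for n_in, n_out in zip(sizes[:-1], sizes[1:])
    ]

def tower(x, layers):
    """Forward pass of a small MLP: ReLU on hidden layers, linear output layer."""
    h = list(x)
    for k, (w, b) in enumerate(layers):
        h = [sum(wj * hj for wj, hj in zip(row, h)) + bias for row, bias in zip(w, b)]
        if k < len(layers) - 1:
            h = [max(0.0, v) for v in h]
    return h

def build_item_index(item_features, item_layers):
    # Offline: run the item tower once per item and keep the vectors in memory.
    return {item_id: tower(x, item_layers) for item_id, x in item_features.items()}

def serve(user_x, user_layers, item_index, k):
    # Online: one user forward pass, then one inner product per candidate.
    u = tower(user_x, user_layers)
    scores = {item_id: sum(a * b for a, b in zip(u, v)) for item_id, v in item_index.items()}
    return heapq.nlargest(k, scores, key=scores.get)
```

For example, with `user_layers = init_tower([3, 8, 4], seed=1)` and `item_layers = init_tower([5, 8, 4], seed=2)`, calling `serve(user_x, user_layers, build_item_index(items, item_layers), k=5)` returns the five highest-scoring item IDs. At real scale the linear scan over candidates would typically be replaced by a vectorized or approximate nearest-neighbor search.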

As shown in Figure 2, coarse-grained ranking does not use any interacted features, such as the user's clicks in the item's category in the last 24 hours or the user's clicks in the item's shop in the last 24 hours. Adding these features would greatly improve prediction performance, but it would also greatly increase serving latency in the coarse-grained ranking stage. Because such features depend on both the user and the item, placing them on either the item side or the user side means that the mapping Φ_W(⋅) must be executed once per candidate, that is, 10^5 times here. In general, the non-linear mapping (an MLP) costs several orders of magnitude more computation than the simple inner product. It is therefore impractical to use interacted features during serving in the coarse-grained ranking stage. Here, we regard interacted features as the privileged features for CTR prediction at coarse-grained ranking.
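A back-of-the-envelope count makes the latency argument concrete. The layer sizes below are hypothetical, chosen only for illustration; only the 10^5 candidate count comes from the text. Costs are counted as multiply-accumulate operations (MACs) of the dense layers, ignoring embedding lookups and activations.

```python
def mlp_macs(layer_sizes):
    """Multiply-accumulate count for one forward pass through a dense MLP."""
    return sum(a * b for a, b in zip(layer_sizes[:-1], layer_sizes[1:]))

# Hypothetical tower shape, for illustration only.
TOWER = [512, 256, 128, 64]
EMB_DIM = TOWER[-1]          # size of the vectors fed to the inner product
NUM_CANDIDATES = 10**5       # candidate count quoted in the text

def cost_without_interacted():
    # Item vectors are precomputed offline: one user-tower pass + one inner product per candidate.
    return mlp_macs(TOWER) + NUM_CANDIDATES * EMB_DIM

def cost_with_interacted():
    # An interacted feature depends on (user, item), so a tower pass is needed per candidate.
    return NUM_CANDIDATES * mlp_macs(TOWER) + NUM_CANDIDATES * EMB_DIM

# One tower pass is 172,032 MACs versus 64 for one inner product.
```

Under these assumed sizes the per-candidate cost grows from 64 to over 172,000 MACs, which is the gap the text summarizes as several orders of magnitude.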

In the fine-grained ranking stage, besides estimating the CTR as in coarse-grained ranking, we need to estimate the CVR of all candidates, that is, the probability that the user would purchase the item given that the user clicked it. By this definition, user behavior on the details page of the clicked item is clearly helpful for the prediction, as illustrated in Figure 3. However, CVR must be estimated for ranking before any click happens, so features describing user behavior on the details page are not available during inference. We therefore treat these features as the privileged features for CVR prediction.

Figure 3. Illustrative features describing user behavior on the details page of the clicked item. Together with the dwell time (not shown), these features are highly informative for CVR prediction. However, during serving, we need to use CVR to rank all candidate items before any item is clicked. We denote these post-event features as the privileged features for CVR prediction.
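The train/serve split for CVR can be sketched as a filter over a logged sample. The field names in `PRIVILEGED_FIELDS` are hypothetical stand-ins for the post-click behaviors in Figure 3, and `split_features`, `teacher_input`, and `student_input` are illustrative names; the point is only that the teacher reads X and X* while the student, the model deployed online, reads X alone.

```python
from typing import Dict, Tuple

# Hypothetical post-click field names; the paper's exact feature list is not reproduced here.
PRIVILEGED_FIELDS = frozenset({"dwell_time", "viewed_comments", "viewed_sku_options"})

def split_features(sample: Dict[str, float]) -> Tuple[Dict[str, float], Dict[str, float]]:
    """Split a logged training sample into (regular X, privileged X*)."""
    regular = {k: v for k, v in sample.items() if k not in PRIVILEGED_FIELDS}
    privileged = {k: v for k, v in sample.items() if k in PRIVILEGED_FIELDS}
    return regular, privileged

def teacher_input(sample: Dict[str, float]) -> Dict[str, float]:
    # Offline only: the teacher sees both X and X*.
    regular, privileged = split_features(sample)
    return {**regular, **privileged}

def student_input(sample: Dict[str, float]) -> Dict[str, float]:
    # Offline and online: the student sees X only, so training matches serving.
    regular, _ = split_features(sample)
    return regular
```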

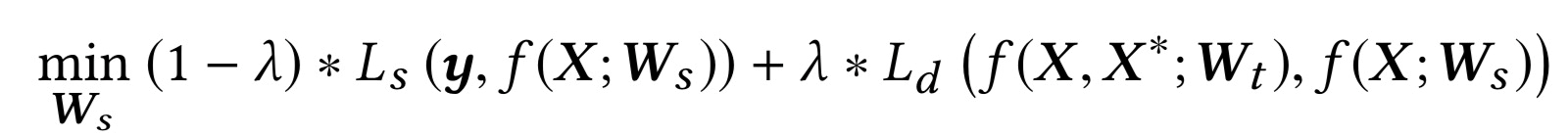

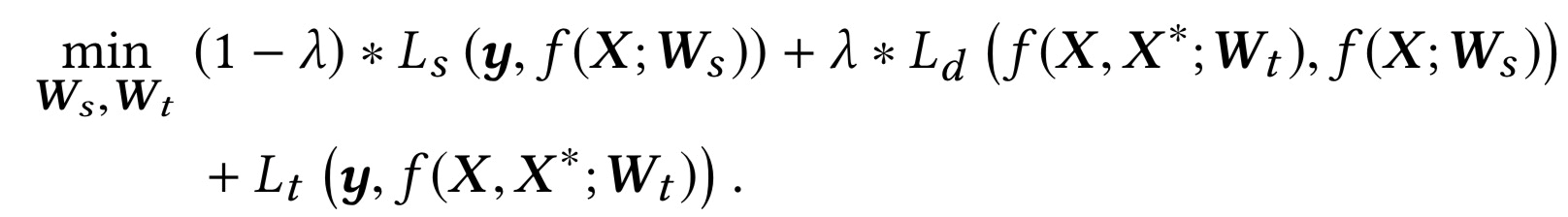

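Next, we describe privileged features distillation in detail. Both model distillation and feature distillation aim to help the non-convex student model train better. For privileged features distillation, the objective function can be written as follows:

$$\min_{W_s}\ (1-\lambda)\, L_s\big(y, f(X; W_s)\big) + \lambda\, L_d\big(f(X, X^*; W_t), f(X; W_s)\big), \qquad W_t = \arg\min_{W} L_t\big(y, f(X, X^*; W)\big)$$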

where X denotes the regular input features, X^* the privileged features, y the labels, f(⋅) the model output, and L the loss functions. In the subscripts, s stands for student, d for distillation, and t for teacher. λ is the hyper-parameter that balances the two losses. Note that we feed the regular features to the teacher model in addition to the privileged features. As the preceding equation indicates, the teacher model must be trained in advance, which increases the total training time. A more practical approach is to train the teacher and the student synchronously. The objective function then becomes:

$$\min_{W_s, W_t}\ (1-\lambda)\, L_s\big(y, f(X; W_s)\big) + \lambda\, L_d\big(f(X, X^*; W_t), f(X; W_s)\big) + L_t\big(y, f(X, X^*; W_t)\big)$$
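A minimal sketch of the student's objective when the teacher is trained in advance: (1 - λ) times the log-loss against the labels plus λ times the distillation loss against the teacher's sigmoid outputs. It assumes, as in the experiments described later, that both L_s and L_d are log-losses on logits; `log_loss` and `pfd_student_objective` are hypothetical names.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def log_loss(targets, logits):
    """Mean binary cross-entropy; targets may be hard labels (0/1) or soft labels in [0, 1]."""
    eps = 1e-12  # clip probabilities to avoid log(0)
    total = 0.0
    for t, z in zip(targets, logits):
        p = min(max(sigmoid(z), eps), 1.0 - eps)
        total -= t * math.log(p) + (1.0 - t) * math.log(1.0 - p)
    return total / len(targets)

def pfd_student_objective(y, student_logits, teacher_logits, lam):
    """(1 - lam) * L_s(y, f_s) + lam * L_d(f_t, f_s); teacher logits are fixed constants here."""
    soft_labels = [sigmoid(z) for z in teacher_logits]
    return (1.0 - lam) * log_loss(y, student_logits) + lam * log_loss(soft_labels, student_logits)
```

With λ = 0 the teacher is ignored, and for a fixed teacher the distillation term is smallest when the student's logits match the teacher's, which is what pulls the student toward the privileged-feature model.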

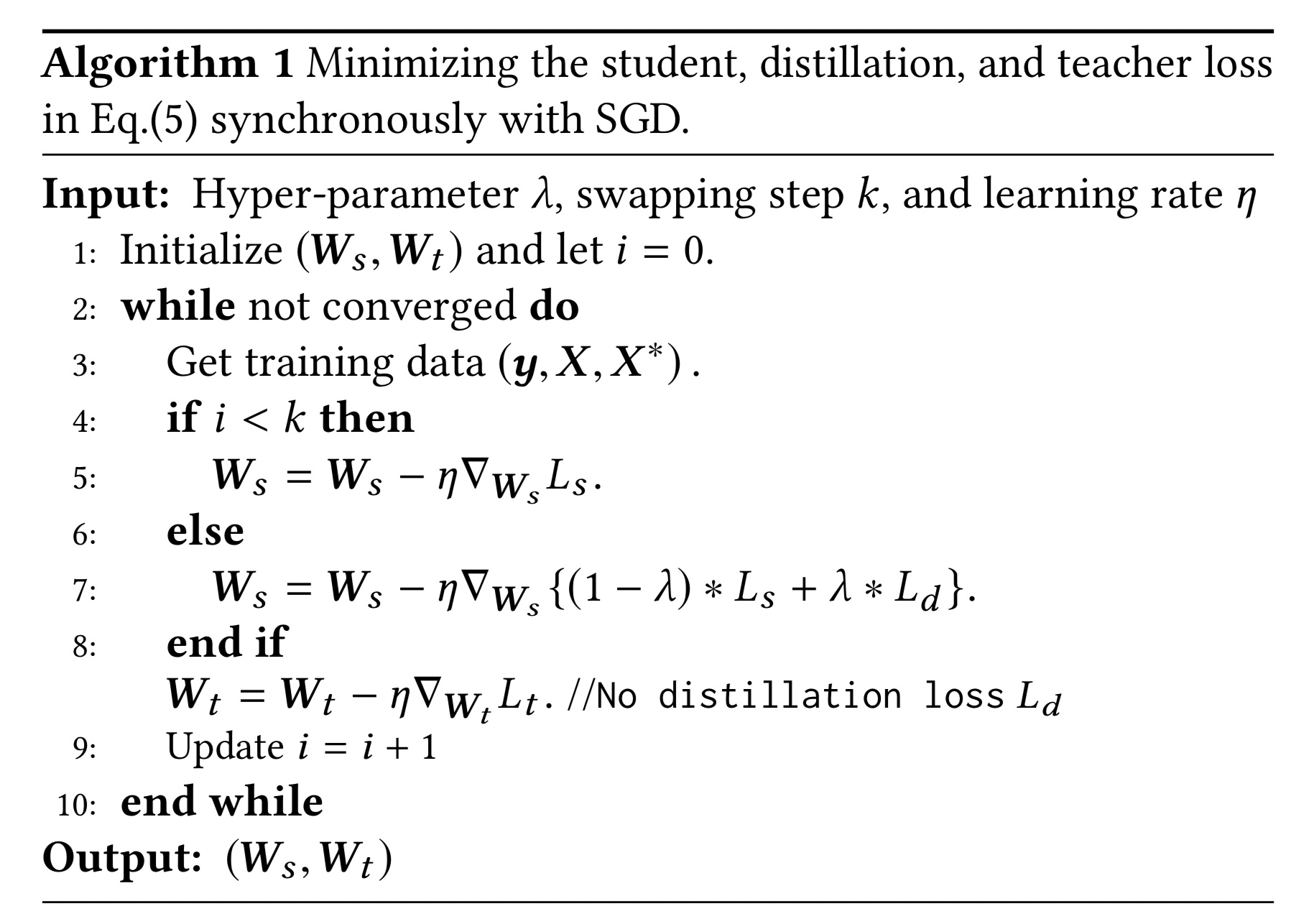

Although synchronous training significantly reduces training time, it can be unstable. In the early stage, when the teacher is not yet well trained, the student may be misled if it is directly supervised by the teacher's outputs. To address this, we set λ to 0 in the early stage and switch it to the predefined value at the swapping step. Algorithm 1 gives the detailed steps. Note that the distillation loss updates only the student parameters: its gradient is not back-propagated to the teacher parameters, which avoids the accuracy loss caused by co-adaptation of the teacher and student networks.
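Synchronous training with the λ schedule and the one-way gradient can be illustrated with two logistic-regression models, a deliberately simplified stand-in for the paper's networks; this is a sketch of the rules described above, not Algorithm 1 itself, and `train_sync_pfd` and its arguments are hypothetical. For log-losses on logits, the gradient with respect to the logit is simply p minus the target, which keeps the update rules short.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dot(w, x):
    return sum(a * b for a, b in zip(w, x))

def train_sync_pfd(data, lam, swap_step, steps, lr=0.1, seed=0):
    """data: list of (x, x_star, y). Returns (student_weights, teacher_weights)."""
    rng = random.Random(seed)
    n_x = len(data[0][0])
    w_s = [0.0] * n_x                        # student: regular features X only
    w_t = [0.0] * (n_x + len(data[0][1]))    # teacher: X concatenated with X*
    for step in range(steps):
        x, x_star, y = rng.choice(data)
        xt = list(x) + list(x_star)
        p_t = sigmoid(dot(w_t, xt))
        p_s = sigmoid(dot(w_s, x))
        cur_lam = 0.0 if step < swap_step else lam   # lambda = 0 before the swapping step
        # Teacher: gradient of its own log-loss L_t only; the distillation loss
        # is not back-propagated into the teacher (p_t is treated as a constant).
        g_t = p_t - y
        w_t = [w - lr * g_t * v for w, v in zip(w_t, xt)]
        # Student: gradient of (1 - lam) * L_s + lam * L_d with respect to its logit.
        g_s = (1.0 - cur_lam) * (p_s - y) + cur_lam * (p_s - p_t)
        w_s = [w - lr * g_s * v for w, v in zip(w_s, x)]
    return w_s, w_t
```

Because the teacher never receives the distillation gradient, its trajectory is identical for every choice of λ and swapping step; only the student changes.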

Extension: Unified Distillation

Figure 1 illustrates the differences between MD and PFD. The two distillation techniques are complementary, and both can improve the student's performance. A natural extension is to combine MD and PFD to form a more accurate teacher that instructs the student. We use this unified distillation (UD) technique for CTR prediction at coarse-grained ranking.

As shown in Equation 1, we use the inner product model to score candidate items. No matter how we map the user and item inputs, the model capacity is intrinsically limited by the bi-linear structure of the inner product operation. The inner product model at coarse-grained ranking can be regarded as a generalized matrix factorization with side information. According to the universal approximation theorem for neural networks, a non-linear MLP is more expressive than the bi-linear inner product operation, so we choose it as the stronger teacher model. Figure 4 illustrates UD at coarse-grained ranking. The teacher model with privileged features in the figure is the same as the model used for CTR prediction at fine-grained ranking. The distillation here can therefore be regarded as distilling knowledge from fine-grained ranking to improve coarse-grained ranking.

Figure 4. (a) The UD framework at coarse-grained ranking and (b) its deployment during serving. At training time, the interacted features (the privileged features) of the user and the item, together with the complex non-linear network, form a strong teacher that instructs the bi-linear inner product model. During serving, we compute the mappings of all items offline in advance and index them in memory. When a request arrives, we only need to execute one forward pass to derive the user mapping.

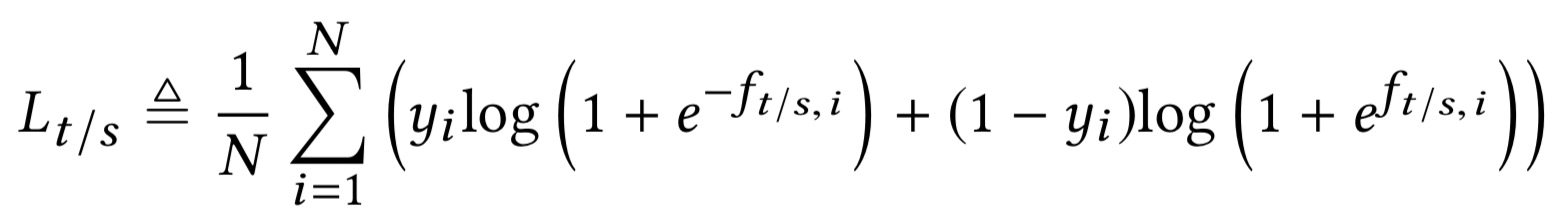

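Because both tasks predict whether the user clicked or purchased the item, we use the log-loss for both the teacher and the student:

$$L_{t/s} = -\frac{1}{N}\sum_{i=1}^{N}\left[y_i \log \frac{1}{1+e^{-f_{t/s,i}}} + (1-y_i)\log\left(1-\frac{1}{1+e^{-f_{t/s,i}}}\right)\right]$$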

where f_{t/s,i} denotes the output of the teacher or student model for the i-th sample. For the distillation loss, we use the cross-entropy obtained by replacing y_i with 1/(1 + e^{-f_{t,i}}). We measure model performance with the widely used area under the ROC curve (AUC) on next-day held-out data. Additionally, the input components common to the teacher and the student are shared during training, as illustrated in Figure 5.
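AUC can be computed as the probability that a randomly chosen positive sample is scored above a randomly chosen negative one, counting ties as one half (the Mann-Whitney formulation). The quadratic loop below is a readable sketch with a hypothetical function name; for large held-out sets, a sort-based O(n log n) version or a library routine such as scikit-learn's `roc_auc_score` is more practical.

```python
def auc(labels, scores):
    """ROC AUC as P(score_pos > score_neg), with ties counted as 1/2."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative sample")
    wins = 0.0
    for p in pos:
        for n in neg:
            wins += 1.0 if p > n else (0.5 if p == n else 0.0)
    return wins / (len(pos) * len(neg))
```

For example, `auc([1, 1, 0, 0], [0.8, 0.4, 0.6, 0.2])` returns 0.75: three of the four positive/negative pairs are ordered correctly.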

Figure 5. Illustration of the overall network. The common input components between the teacher and the student are shared during training.

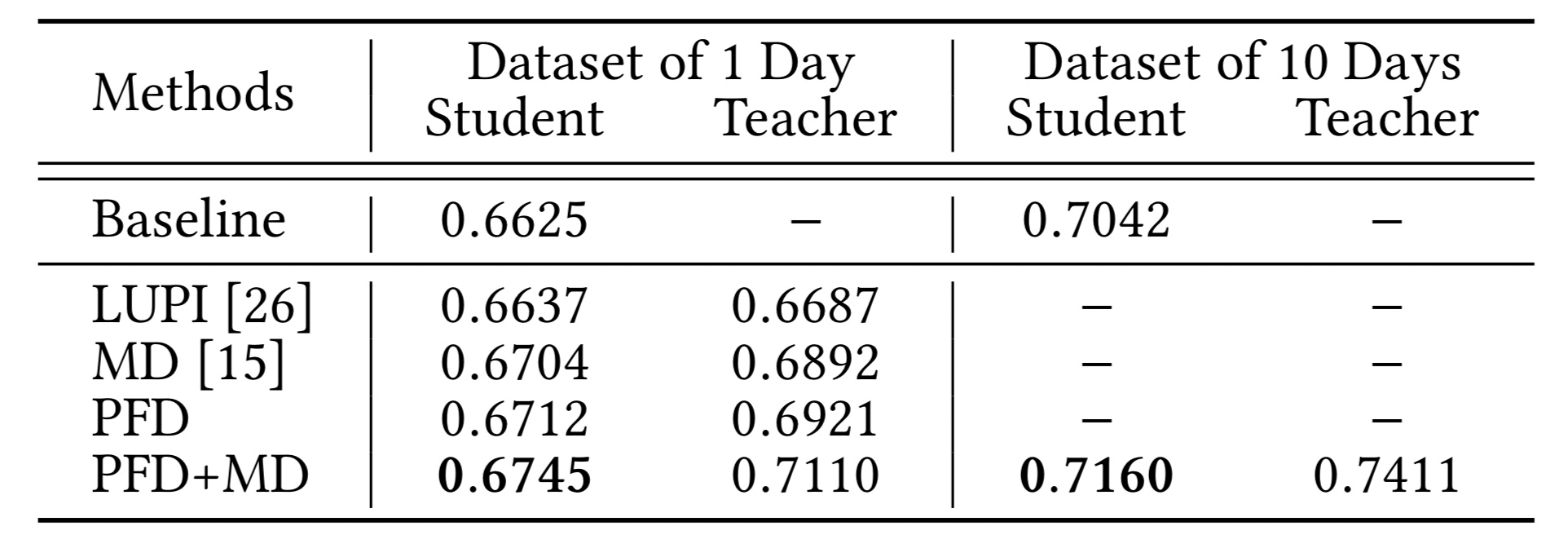

Experiment results of CTR at coarse-grained ranking: As shown in Figure 1, in LUPI, the teacher processes only the privileged features, whereas in PFD, the teacher processes both the privileged and the regular features. We do not include MTL because predicting dozens of interacted features (the privileged features) is too cumbersome. As shown in the following table, the MD+PFD combination significantly improves offline performance at coarse-grained ranking, and the advantage persists when training runs longer on more data. In our online A/B tests, MD+PFD improves the click metric by 5.0% without a drop in the conversion metric.

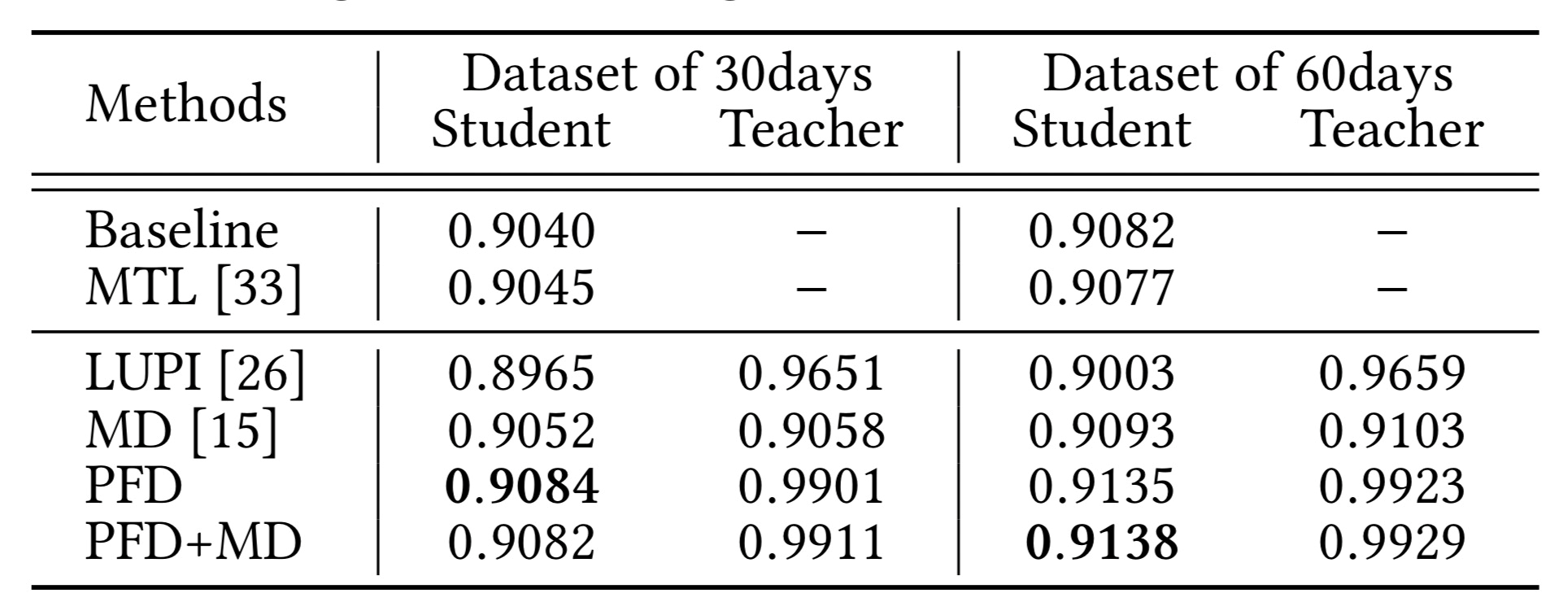

Experiment results of CVR at fine-grained ranking: In MTL, the multiple tasks share the output of the underlying neural network. In MD, the teacher uses a wider and deeper network, but it does not perform noticeably better than the student. Therefore, only PFD is used in the online A/B tests. Compared with the baseline, PFD improves the conversion metric by 2.3% without a drop in the click metric.

In this article, we focused on the feature inputs of the prediction task. Specifically, we proposed PFD to make full use of privileged features. In both fundamental prediction tasks in Taobao recommendations, PFD significantly improves the corresponding model. PFD is complementary to the commonly used MD: in coarse-grained ranking, the PFD+MD framework further improves performance. For more technical and experimental details, see our paper.


