By Kuang Dahu (Changlv)

Solving the security isolation problem of multi-tenant clusters is key for enterprises striving to migrate to the cloud. This article discusses the concepts and common application patterns of Kubernetes multi-tenant clusters. In addition, it explores business scenarios where clusters are shared within an enterprise, the existing native security management capabilities of Kubernetes, and the quick implementation of multi-tenant clusters using Alibaba Cloud Container Service for Kubernetes (ACK).

First, let's discuss tenants. The concept of a tenant covers not only cluster users but also the set of workloads that consume computing, network, storage, and other resources. In a multi-tenant cluster, it is necessary to isolate different tenants within a single cluster whenever possible (tenants may be spread across multiple clusters in the future). In this way, malicious tenants cannot attack others, and shared cluster resources are rationally distributed among tenants.

Based on the security level that isolation provides, multi-tenancy can be divided into soft multi-tenancy and hard multi-tenancy. Soft isolation is more suitable for multi-tenancy within an enterprise, where by default there are no malicious tenants. In this scenario, isolation aims to protect business between internal teams and defend against possible security attacks. Hard isolation is designed for service providers that provide external services. Due to the business pattern, the security backgrounds of business users of different tenants cannot be guaranteed. Therefore, tenants may attack each other or the Kubernetes system within the cluster. As a result, strict isolation is required to ensure security. The next section gives a more detailed description of different multi-tenant scenarios.

The following describes two typical enterprise multi-tenant scenarios with different isolation requirements:

In the scenario where a cluster is shared within an enterprise, all cluster users come from the enterprise. This is the scenario of many Kubernetes cluster customers. Since the identity of service users is controllable, security risks in this business pattern are relatively controllable. After all, the employer may simply fire employees who misuse the service. Configure namespaces according to the internal staff structure of the enterprise to logically isolate the resources of different departments or teams. Also, define business personnel with the following roles:

In addition to access control based on user roles, ensure network isolation between namespaces. Thus, only whitelisted cross-tenant application requests are allowed between different namespaces.

Moreover, for applications with high business security requirements, use policy tools such as seccomp, AppArmor, and SELinux to restrict the kernel capabilities available to application containers at runtime.
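As a rough illustration of restricting container runtime capabilities, the following Python sketch builds a Pod manifest that applies the runtime's default seccomp profile and drops all Linux capabilities. The pod name, namespace, and image are hypothetical placeholders, and the exact settings an application needs are an assumption to validate per workload.

```python
import json


def hardened_pod(name: str, namespace: str, image: str) -> dict:
    """Build a Pod manifest whose container runs with a restricted security context."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # Pod-level settings apply to every container in the pod.
            "securityContext": {
                "runAsNonRoot": True,
                "seccompProfile": {"type": "RuntimeDefault"},
            },
            "containers": [
                {
                    "name": name,
                    "image": image,
                    "securityContext": {
                        "allowPrivilegeEscalation": False,
                        "readOnlyRootFilesystem": True,
                        # Drop every kernel capability; add back only what is needed.
                        "capabilities": {"drop": ["ALL"]},
                    },
                }
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical names, used only for illustration.
    pod = hardened_pod("payments-api", "team-a", "registry.example.com/payments-api:1.0")
    print(json.dumps(pod, indent=2))
```

The printed JSON can be saved to a file and applied with `kubectl apply -f`, since kubectl accepts JSON manifests as well as YAML.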

The current single-tier logical isolation of namespaces in Kubernetes cannot meet the isolation requirements of the complex business models of some large enterprise applications. To address this issue, turn to virtual clusters, which abstract a higher-level tenant resource model to implement more refined multi-tenancy management. This compensates for the weakness of native namespaces.

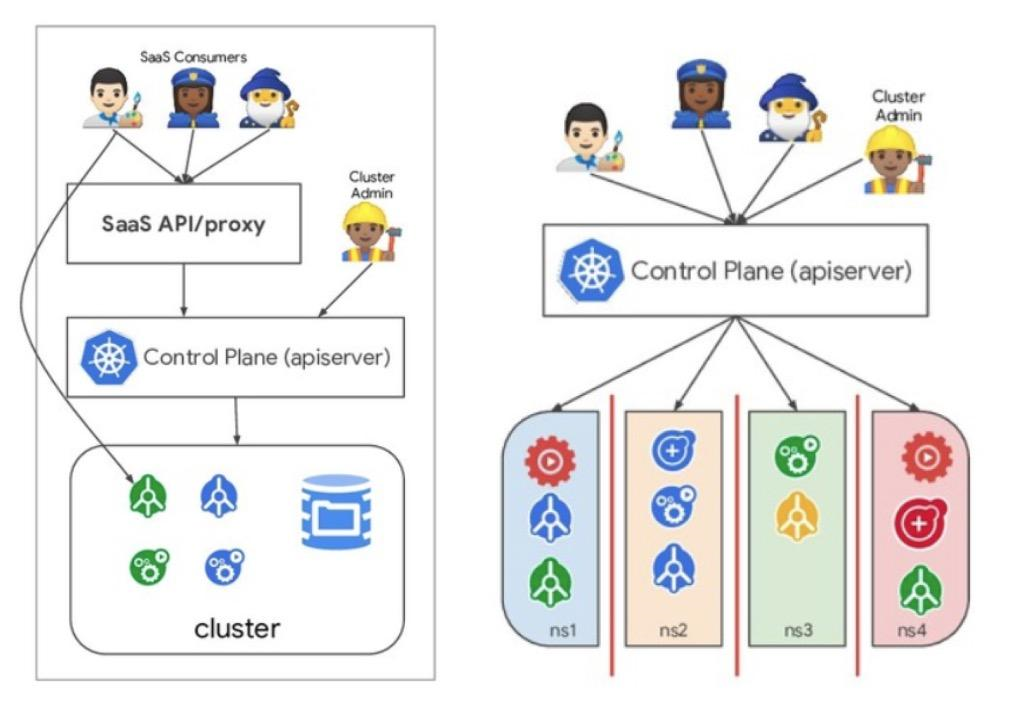

In the Software as a Service (SaaS) multi-tenancy scenario, tenants in a Kubernetes cluster are the service application instances on the SaaS platform and the SaaS control plane itself. In this scenario, service application instances of the platform are divided into different namespaces. The end-users of the service cannot interact with Kubernetes control plane components. These end-users may access and use the SaaS console and use services or deploy businesses through the customized SaaS control plane, as shown on the left of the figure. For example, assume a blog platform is deployed and run on a multi-tenant cluster. In this scenario, tenants are the blog instances of each customer and the control plane of the platform. The platform control plane and each hosted blog run in different namespaces. Customers create and delete blogs and update blog software on the interfaces of the platform, but do not see how the cluster works.

The Kubernetes as a Service (KaaS) multi-tenancy scenario often involves cloud service providers. In this scenario, the services of the business platform are directly exposed to users of different tenants through the Kubernetes control plane. End-users use Kubernetes native APIs or other extension APIs that service providers build on custom resource definitions (CRDs) and controllers. To meet basic isolation requirements, different tenants must use namespaces to logically isolate access, and the network and resource quotas of different tenants must also be isolated.

In contrast to shared clusters within an enterprise, all the end-users in this scenario come from non-trusted domains. Thus, malicious tenants who run malicious code on the service platform cannot be ruled out. Therefore, stronger security isolation is needed for multi-tenant clusters in SaaS and KaaS service models. The existing native capabilities of Kubernetes cannot meet the security requirements in these scenarios. For this reason, enhance tenant security in this business pattern by isolating containers at the kernel level during runtime, such as by using security containers.

When planning and implementing a multi-tenant cluster, first use the resource isolation layer of Kubernetes by implementing resource isolation models that place the cluster itself, namespaces, nodes, pods, and containers in different tiers. When the application loads of different tenants share the same resource model, security risks occur between them. Therefore, control the resource domains that each tenant accesses when multi-tenancy is implemented. At the resource scheduling level, ensure that containers that process sensitive information run on relatively independent resource nodes. When loads from different tenants share the same resource domain, reduce the risk of cross-tenant attacks by using runtime security and resource scheduling control policies.

The existing security and scheduling capabilities of Kubernetes are insufficient to implement completely secure isolation between tenants. Even so, as in the intra-enterprise cluster sharing scenario, namespaces can isolate the resource domains used by tenants, and policy models such as RBAC, PodSecurityPolicy, and NetworkPolicy, together with existing resource scheduling capabilities, can control the resource access scope and capabilities of tenants. This approach already provides considerable security isolation. For service platform formats such as SaaS and KaaS, implement container kernel-level isolation through the security container product to be released by Container Service in August. This product minimizes the risk that malicious tenants launch cross-tenant attacks by escaping from their containers.

The following section focuses on multi-tenant practices based on the native security capabilities of Kubernetes.

ACK cluster authorization is divided into two steps: RAM authorization and RBAC authorization. RAM authorization provides access control for the cluster management API and includes Create, Read, Update and Delete (CRUD) permissions for the cluster, such as cluster visibility, scaling, and node addition. RBAC authorization provides access control for the Kubernetes resource model within the cluster. It implements precise authorization for specified resources at the namespace level.
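To make the RBAC half of this concrete, here is a minimal Python sketch that builds a namespace-scoped Role and a RoleBinding for one tenant user. The role name, namespace, and user name are hypothetical, and the sketch shows only standard Kubernetes RBAC objects, not the RAM authorization step or the preset role templates of ACK.

```python
import json

RBAC_GROUP = "rbac.authorization.k8s.io"


def namespace_role(namespace: str, role_name: str, resources: list, verbs: list) -> dict:
    """Role that grants the given verbs on the given resources inside one namespace."""
    return {
        "apiVersion": f"{RBAC_GROUP}/v1",
        "kind": "Role",
        "metadata": {"name": role_name, "namespace": namespace},
        # "" is the core API group (pods, services); "apps" covers deployments.
        "rules": [{"apiGroups": ["", "apps"], "resources": resources, "verbs": verbs}],
    }


def bind_role(namespace: str, role_name: str, user: str) -> dict:
    """RoleBinding that attaches the Role to a single user in the same namespace."""
    return {
        "apiVersion": f"{RBAC_GROUP}/v1",
        "kind": "RoleBinding",
        "metadata": {"name": f"{role_name}-{user}", "namespace": namespace},
        "subjects": [{"kind": "User", "name": user, "apiGroup": RBAC_GROUP}],
        "roleRef": {"kind": "Role", "name": role_name, "apiGroup": RBAC_GROUP},
    }


if __name__ == "__main__":
    # Hypothetical tenant namespace and user, for illustration only.
    role = namespace_role("team-a", "app-developer",
                          ["pods", "services", "deployments"],
                          ["get", "list", "watch", "create", "update"])
    binding = bind_role("team-a", "app-developer", "dev-alice")
    print(json.dumps([role, binding], indent=2))
```

Because both objects are namespaced, the user gains no permissions outside `team-a`, which is the property that makes namespace-level authorization useful for tenant isolation.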

ACK authorization management provides intra-tenant users with preset role templates of different levels, supports binding multiple user-defined cluster roles, and enables batch user authorization. For more information about access control authorization for clusters on ACK, see the relevant help document.

NetworkPolicy controls network traffic between business pods of different tenants and implements cross-tenant business access control through a whitelist. Configure NetworkPolicy on a container service cluster that uses the Terway network plug-in. Click here for policy configuration examples.
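One way to express such a whitelist is a pair of NetworkPolicy objects per tenant namespace: one that denies all ingress by default and one that re-allows traffic from the same namespace and from explicitly listed tenants. The Python sketch below builds both manifests. It assumes that every tenant namespace carries a `tenant` label, which is a hypothetical convention and not something the cluster provides by default.

```python
import json


def default_deny_ingress(namespace: str) -> dict:
    """Deny all ingress to every pod in the namespace unless another policy allows it."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-ingress", "namespace": namespace},
        # An empty podSelector selects all pods in the namespace.
        "spec": {"podSelector": {}, "policyTypes": ["Ingress"]},
    }


def allow_from_tenants(namespace: str, allowed_tenants: list) -> dict:
    """Allow ingress from the same namespace and from whitelisted tenant namespaces."""
    peers = [{"podSelector": {}}]  # pods in the same namespace
    peers += [
        # Assumed convention: each tenant namespace is labeled tenant=<name>.
        {"namespaceSelector": {"matchLabels": {"tenant": tenant}}}
        for tenant in allowed_tenants
    ]
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "allow-whitelisted-tenants", "namespace": namespace},
        "spec": {
            "podSelector": {},
            "policyTypes": ["Ingress"],
            "ingress": [{"from": peers}],
        },
    }


if __name__ == "__main__":
    policies = [default_deny_ingress("team-a"), allow_from_tenants("team-a", ["team-b"])]
    print(json.dumps(policies, indent=2))
```

NetworkPolicy rules are additive, so the deny-all policy sets the baseline and the second policy opens only the listed paths.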

PodSecurityPolicies (PSPs) are native cluster-scoped resources in Kubernetes. During the admission phase of a pod creation request, they verify whether the pod's behavior meets the requirements of the corresponding PSP. For example, they check whether the pod uses the host's network, file system, specified ports, or PID namespace. They can also prevent tenant users from enabling privileged containers, restrict the volume types that pods may use, and enforce read-only mounts. In addition, PSPs add the corresponding SecurityContext to pods based on the bound policies. This configuration includes the UID and GID that the container runs with, the addition or removal of kernel capabilities, and other settings. For more information about how to enable PSP admission and bind relevant policies and permissions, click here.
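The checks described above map onto fields of the PSP spec. The following Python sketch builds one restrictive policy as an illustration; the policy name and the list of allowed volume types are assumptions. Note that PodSecurityPolicy was deprecated in Kubernetes 1.21 and removed in 1.25, so this manifest applies only to clusters that still serve the `policy/v1beta1` API.

```python
import json


def restricted_psp(name: str = "tenant-restricted") -> dict:
    """PodSecurityPolicy that blocks privileged pods and host namespace access."""
    return {
        "apiVersion": "policy/v1beta1",
        "kind": "PodSecurityPolicy",
        "metadata": {"name": name},
        "spec": {
            "privileged": False,
            "allowPrivilegeEscalation": False,
            # No access to the node's network, PID, or IPC namespaces.
            "hostNetwork": False,
            "hostPID": False,
            "hostIPC": False,
            "requiredDropCapabilities": ["ALL"],
            "readOnlyRootFilesystem": True,
            # Assumed whitelist of volume types; hostPath is deliberately absent.
            "volumes": ["configMap", "secret", "emptyDir", "persistentVolumeClaim"],
            "runAsUser": {"rule": "MustRunAsNonRoot"},
            "seLinux": {"rule": "RunAsAny"},
            "supplementalGroups": {"rule": "RunAsAny"},
            "fsGroup": {"rule": "RunAsAny"},
        },
    }


if __name__ == "__main__":
    print(json.dumps(restricted_psp(), indent=2))
```

A policy only takes effect for a tenant once the PSP admission controller is enabled and the tenant's users or service accounts are granted the `use` verb on it through RBAC.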

Open Policy Agent (OPA) is a powerful policy engine that decouples policy decisions from the services that enforce them. The community already has a mature integration solution for Kubernetes. When the existing RBAC isolation capabilities at the namespace level do not meet the complex security requirements of enterprise applications, OPA provides fine-grained access policy control at the object model level. OPA also supports layer-7 NetworkPolicy definition and cross-namespace access control based on labels and annotations, which effectively enhances the native Kubernetes NetworkPolicy.

In the multi-tenant scenario, different teams or departments share cluster resources, which leads to resource competition. Address this by limiting the resource usage quota of each tenant. ResourceQuota is used to limit the total resource request and limit values for all pods under the tenant's corresponding namespace. LimitRange is used to set the default resource request and limit values for pods deployed in the tenant's namespace. In addition, limit the storage resource quota and object quantity quota of tenants.
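A minimal sketch of these two objects for one tenant namespace might look like the following. All quota figures and the namespace name are hypothetical values chosen for illustration, not recommendations.

```python
import json


def tenant_quota(namespace: str) -> dict:
    """ResourceQuota capping total requests, limits, storage, and object counts."""
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": "tenant-quota", "namespace": namespace},
        "spec": {
            "hard": {
                # Compute totals across all pods in the namespace.
                "requests.cpu": "10",
                "requests.memory": "20Gi",
                "limits.cpu": "20",
                "limits.memory": "40Gi",
                # Storage and object-count quotas.
                "requests.storage": "100Gi",
                "persistentvolumeclaims": "10",
                "pods": "50",
            }
        },
    }


def tenant_limit_range(namespace: str) -> dict:
    """LimitRange supplying default requests and limits for containers that set none."""
    return {
        "apiVersion": "v1",
        "kind": "LimitRange",
        "metadata": {"name": "tenant-defaults", "namespace": namespace},
        "spec": {
            "limits": [
                {
                    "type": "Container",
                    "defaultRequest": {"cpu": "100m", "memory": "128Mi"},
                    "default": {"cpu": "500m", "memory": "512Mi"},
                }
            ]
        },
    }


if __name__ == "__main__":
    print(json.dumps([tenant_quota("team-a"), tenant_limit_range("team-a")], indent=2))
```

The two objects work together: once a ResourceQuota constrains CPU or memory, pods without explicit requests and limits are rejected, and the LimitRange defaults keep such pods admissible.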

For more information about resource quotas, click here.

As of Kubernetes 1.14, the pod priority and preemption features are generally available (stable). The pod priority indicates the priority of pending pods in the scheduling queue. When pods with high priorities cannot be scheduled due to insufficient node resources or other reasons, the scheduler attempts to preempt (evict) pods with lower priorities so that higher-priority pods are scheduled and deployed first.

In the multi-tenant scenario, priority and preemption settings protect the availability of a tenant's important business applications. In addition, pod priority can be used together with ResourceQuota to limit tenant quotas at a specified priority.
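The combination of priority and quota can be sketched as a PriorityClass plus a ResourceQuota whose scope selector matches that class. The class name, priority value, and pod count below are hypothetical.

```python
import json


def priority_class(name: str, value: int) -> dict:
    """Cluster-scoped PriorityClass; higher values are scheduled first."""
    return {
        "apiVersion": "scheduling.k8s.io/v1",
        "kind": "PriorityClass",
        "metadata": {"name": name},
        "value": value,
        "globalDefault": False,
        "description": "Priority for business-critical tenant workloads.",
    }


def quota_for_priority(namespace: str, class_name: str, max_pods: int) -> dict:
    """ResourceQuota that counts only pods using the given PriorityClass."""
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": f"quota-{class_name}", "namespace": namespace},
        "spec": {
            "hard": {"pods": str(max_pods)},
            "scopeSelector": {
                "matchExpressions": [
                    {"scopeName": "PriorityClass", "operator": "In", "values": [class_name]}
                ]
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical: team-a may run at most five pods at the critical priority.
    objects = [priority_class("tenant-critical", 100000),
               quota_for_priority("team-a", "tenant-critical", 5)]
    print(json.dumps(objects, indent=2))
```

Scoping the quota this way keeps a tenant from claiming the high priority for all of its pods and preempting the workloads of other tenants.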

Note: Malicious tenants may bypass policies enforced by the node taint and toleration mechanism. The following applies only to clusters of trusted tenants within the enterprise or clusters where tenants cannot directly access the Kubernetes control plane.

By adding taints to some nodes in a cluster, reserve these nodes for exclusive use by specified tenants. In the multi-tenant scenario, such as when GPU nodes are included in a cluster, use taints to reserve these nodes for the service teams of business applications that need GPU resources. The cluster administrator may add a taint to a node with an effect such as effect: "NoSchedule". Then, only pods with a matching toleration can be scheduled to the node.

However, malicious tenants may add the same toleration to their own pods to gain access to this node. Therefore, using the node taint and toleration mechanism alone cannot ensure the exclusivity of target nodes in a non-trusted multi-tenant cluster.
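The weakness is easy to see in a small model of the matching rule. The Python sketch below is a simplified approximation of how tolerations are compared with taints, not the scheduler's actual implementation, and the taint key and value are hypothetical.

```python
def tolerates(toleration: dict, taint: dict) -> bool:
    """Simplified check of whether one toleration matches one taint."""
    effect = toleration.get("effect", "")
    if effect and effect != taint["effect"]:
        return False
    key = toleration.get("key", "")
    if toleration.get("operator", "Equal") == "Exists":
        # An empty key with Exists matches every taint key.
        return key == "" or key == taint["key"]
    return key == taint["key"] and toleration.get("value", "") == taint.get("value", "")


def can_schedule(node_taints: list, pod_tolerations: list) -> bool:
    """A pod fits only if every scheduling-blocking taint is tolerated."""
    blocking = [t for t in node_taints if t["effect"] in ("NoSchedule", "NoExecute")]
    return all(any(tolerates(tol, taint) for tol in pod_tolerations) for taint in blocking)


# Hypothetical taint that reserves GPU nodes for one team.
GPU_TAINT = {"key": "dedicated", "value": "gpu-team", "effect": "NoSchedule"}

if __name__ == "__main__":
    ordinary_pod = []
    gpu_team_pod = [{"key": "dedicated", "operator": "Equal",
                     "value": "gpu-team", "effect": "NoSchedule"}]
    # Nothing stops another tenant from copying the same toleration.
    copycat_pod = list(gpu_team_pod)
    for label, tolerations in [("ordinary", ordinary_pod),
                               ("gpu-team", gpu_team_pod),
                               ("copycat", copycat_pod)]:
        print(label, can_schedule([GPU_TAINT], tolerations))
```

The check looks only at the pod spec, never at who submitted it, so reserving nodes reliably requires an admission-time control that restricts which tenants may set the toleration.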

For more information about how to use the node taint mechanism to control scheduling, click here.

In a multi-tenant cluster, different tenant users share the same etcd storage. When end-users can access the Kubernetes control plane, protect the data in secrets by encrypting it at rest. This prevents the leak of sensitive information when access control policies are improperly configured. For more details, see the native secret encryption capability of Kubernetes here.
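For the native Kubernetes capability, encryption at rest is driven by an EncryptionConfiguration file passed to the API server through its `--encryption-provider-config` flag. The Python sketch below generates such a file with a locally generated AES-CBC key. The key name is hypothetical, and whether the API server flags can be set at all depends on the cluster; on a managed control plane this is usually not user-configurable.

```python
import base64
import json
import os


def secret_encryption_config(key_name: str = "key1") -> dict:
    """EncryptionConfiguration that encrypts Secret objects with AES-CBC."""
    # A fresh random 32-byte key, base64-encoded as the API server expects.
    key = base64.b64encode(os.urandom(32)).decode("ascii")
    return {
        "apiVersion": "apiserver.config.k8s.io/v1",
        "kind": "EncryptionConfiguration",
        "resources": [
            {
                "resources": ["secrets"],
                "providers": [
                    # The first provider is used for writes.
                    {"aescbc": {"keys": [{"name": key_name, "secret": key}]}},
                    # identity lets the server still read data stored unencrypted.
                    {"identity": {}},
                ],
            }
        ],
    }


if __name__ == "__main__":
    # The output contains key material and should be stored accordingly.
    print(json.dumps(secret_encryption_config(), indent=2))
```

Keeping the key in a file on the control plane node protects against reads of etcd data but not against a compromised node, which is the gap that a KMS-backed provider is meant to close.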

ACK also provides an open source secret encryption solution based on Alibaba Cloud KMS. For more information, click here.

While deploying a multi-tenant architecture, determine the corresponding scenarios, including determining the trustworthiness of users and applications under a tenant and determining the degree of security isolation. In addition, perform the following operations to meet basic security isolation requirements:

When service models such as SaaS and KaaS are used, or when the trustworthiness of users under a tenant cannot be guaranteed, take more effective isolation measures:
