By Wu Genze

AliSQL is an independent branch of MySQL that is deeply optimized by the RDS team. In addition to the features of Community Edition, AliSQL provides many functions similar to MySQL enterprise edition, such as enterprise-level backup and restoration, audit logs, thread pools, and Binlog in Redo. RDS MySQL uses AliSQL kernel and provides all features of MySQL and a wide range of advanced features developed by Alibaba Cloud. These advanced features include enterprise-grade security, backup and restoration, monitoring, performance optimization, read-only instances, and Serverless.

Binlog is a binary log maintained by the MySQL server layer. It records all modification operations on the database at the transaction level.

Transaction level refers to the log of a transaction, which is written to the binlog file when the transaction is committed. Specifically, during the execution of a transaction, binlog events are continuously generated and temporarily stored in the session-level binlog cache. When the transaction is committed, all contents in the binlog cache are written to the binlog file at a time.

In MySQL, binlog is one of the few logs that can be accurate (The other accurate one is the redo log). It can ensure the modifications in the log exist in the data and vice versa. Since it can be accurate, MySQL can do replication and backup based on binlog.

Sync_binlog is an important parameter related to binlog, which controls the disk brushing mode of binlog. Before understanding the specific meaning of this parameter, we must understand the page cache mechanism of the Linux system.

We know that the storage medium of a computer is generally divided into three layers, and its reading and writing speeds are CPU cache, memory, and disk from high to low. The Linux kernel adds a layer of cache called page cache before the disk to optimize the reading and writing speed of files.

Page cache is essentially a piece of memory space directly managed by the operating system. When an application writes a file, it will write it to the page cache first. Then, the operating system selects a machine to brush the disk. Such a mechanism brings a hidden danger. When the machine crashes, the data that is not brushed from the page cache to the disk will be lost. Many applications are unable to tolerate such data loss, so the operating system provides an interface for applications to actively brush the disk.

In binlog, the behavior of writing binlog events to the page cache of the binlog file is called flush, and the behavior of brushing the disk of the binlog file is called sync. It should be noted that this name is a habit in the context of binlog and is called in another way in the redo log.

The Sync_binlog parameter controls the frequency at which binlog disks are brushed. When the value is set to 0, binlog disks are not actively brushed. When the value is set to n (n > 0), binlog disks are brushed together for every n transaction.

When sync_binlog is set to 1 and each transaction is committed, the binlog will be actively brushed. Under this configuration, the binlog of the committed transaction will not be lost. The reliability of binlog-based replication and backup can be guaranteed. With the parameter innodb_flush_log_at_trx_commit = 1 in innodb (each transaction redo log is actively brushed), high reliability can be achieved with the same data and logs. This configuration is commonly known as double-one. In the process of the discussion below, we think that instances are in double-one configuration by default.

In the double-one configuration, MySQL uses a two-phase mechanism to ensure the crash consistency between binlogs and innodb (crash safe). In other words, no matter when the instance crashes (after the restart or through crash recovery), Binlog and innodb can reach a consistent state.

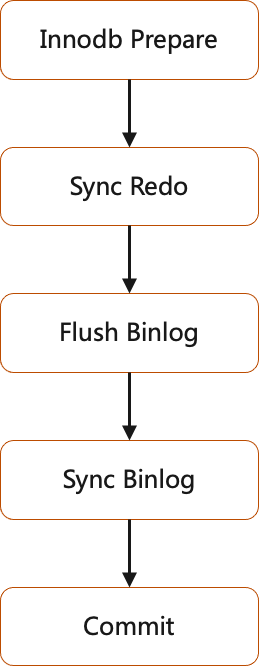

The preceding figure shows a two-phase commit protocol diagram. The two-phase transaction commit is divided into two phases: prepare and commit, and the transaction is divided into active and prepared states.

The most important action in the Prepare phase is to set the transaction from the active state to the prepared state and set the undo rollback segment to the prepared state. This action means all operations on marking transactions have ended. In the crash recovery process, transactions in the prepared state can be rolled back or committed. Active state transactions can only be rolled back but not committed. With this status, the redo log and binlog can coordinate with each other during the commit phase and crash recovery process. Note: The action of modifying the transaction status at this stage is in the memory state. The transaction status is persisted to the disk only if the redo log disk brush in the undo rollback segment is recorded. This is due to the WAL mechanism of innodb. Those interested in it can consult relevant materials to learn.

In the early MySQL, the prepare phase performs the redo log disk brushing operation. However, with the birth of binlog group commit optimization, the performance of the disk brushing operation has been improved, so the disk brushing operation of the redo log has been moved to the binlog group commit process of the commit stage to optimize IO performance. This part of the details will be explained in the chapter on binlog group commit optimization. It is worth mentioning that this change is closely related to Yinfeng, an early member of AliSQL. He first discovered this problem in his performance testing and contributed ideas and code patches to MySQL officials.

In addition to the transaction commit operation, the commit phase includes the disk brush of the redo log and the write and disk brush of the binlog. The order of operating these two log files is important here. Binlog disk brushing means the transaction can be passed to the secondary library. At this time, even if the instance does not complete the commit, it is necessary to be able to commit this transaction during the crash recovery process to not cause the primary-secondary inconsistency. When a transaction can be committed during crash recovery, the prepared state of the transaction must be persisted to the disk, which means the redo log has been brushed. Therefore, you must first brush the redo log before you can write the binlog file and brush the disk. Therefore, binlog disk brushing is used as the flag in the commit phase. Crash and transaction rollback occur before disk brushing is completed. The crash occurs after disk brushing is completed and the transaction is committed.

After the binlog disk is brushed, the transaction can be committed. When committing, the transaction will be changed from the prepared state to the commit state. This operation requires writing a redo log but the transaction does not need to wait for the redo log to be brushed. As mentioned earlier, as long as the binlog disk is brushed, it can be considered that the transaction has been committed persistently. Therefore, it is unnecessary to wait for the redo log disk to be brushed again. It directly returns that the transaction is committed successfully.

When an instance crashes (Let's assume the most serious crash here -- a machine failure causes a restart, and all page caches are lost):

In the preceding mechanism, each transaction needs to wait for two IO operations, one redo log, and one binlog when it is committed to ensure the integrity of the binlog and crash safe. This causes great performance problems.

MySQL officially introduces Binlog Group Commit optimization to incorporate IO operations and optimize performance.

BGC optimization is officially done by MySQL. It is a performance optimization for binlog submission. The purpose is to merge IO operations.

IO operations are carried out in batches in the file system. In general, ten times of 1KB IO is much slower than one time of 10KB IO, which is the meaning of merging IO operations. This idea is reflected in many designs of MySQL servers and innodb.

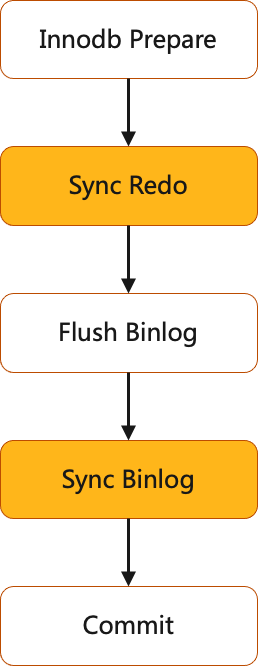

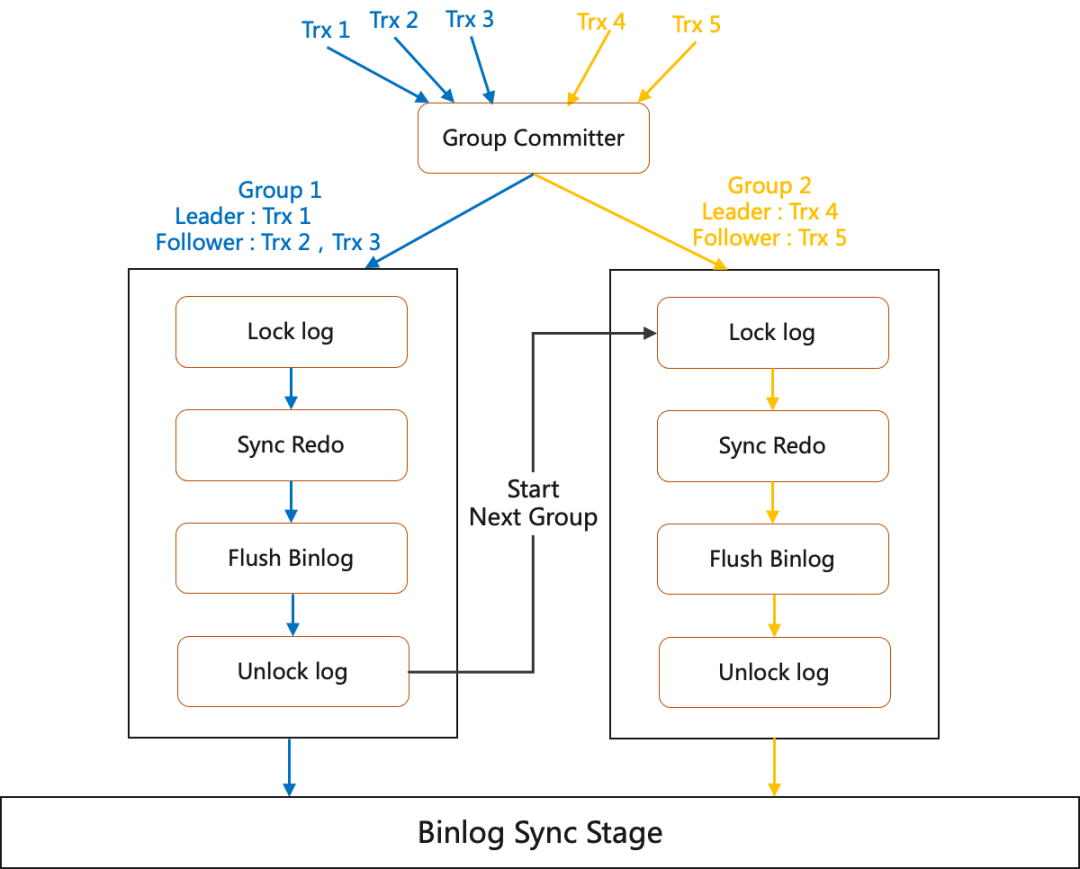

Group Commit, as the name implies, forms a group of transactions and commits them together. MySQL divides the binlog commit stage into the flush stage, sync stage, and commit stage.

The flush stage contains sync redo and flush binlog. A transaction that enters the commit phase will first wait to enter the flush stage. Why? Specifically, the group performing sync redo and flush binlog in the flush stage will hold a lock_log lock, thus blocking new transactions. They need to wait for the current group to end and release the lock_log lock before entering the flush stage. The system can accumulate many transactions waiting to enter the flush stage and form all of them into a group during the waiting process. The first transaction in the group is used as the leader, and all operations in the flush stage are completed by the leader's thread. As such, the original operation of multiple transactions performing multiple sync redo becomes a sync redo, which merges IO operations to the maximum extent.

After the lock-free design is introduced for redo logs on the MySQL-80, the action of sync redo becomes the background thread completion. The active operation of sync redo in the flush stage becomes waiting for the background thread to complete the sync redo. Under this design, group commit is meaningful because waiting background threads need to take locks, and many transactions will cause serious lock conflicts when waiting independently. As such, group commit essentially incorporates lock waits to avoid lock conflicts.

The sync stage contains operations of sync binlog. You need to form a team again before entering the sync stage to complete the flush group of the flush stage. Several flush groups form a sync group to further merge IO.

The sync stage team-up mechanism is similar to t the flush stage. The sync group in the sync binlog holds a lock_sync lock, and the flush group that is ready to enter the sync stage accumulates while waiting for this lock to form a sync group. When the previous group of sync binlog finishes lock_sync and is released, the leader of the first flush group in this group is used as the leader of the new group to complete the sync stage of this group.

The commit stage contains the commit operation of the transaction. The purpose of the Commit stage is not to merge IO but to make the order of transactions in binlog the same as the actual commit order of transactions.

When the binlog_order_commits is enabled, or the commit order needs to be dependent during the cloning process, the transaction that completes the sync stage will enter the commit stage again. If the instance does not start the commit order, the commit stage will be skipped, and each transaction will be committed by itself.

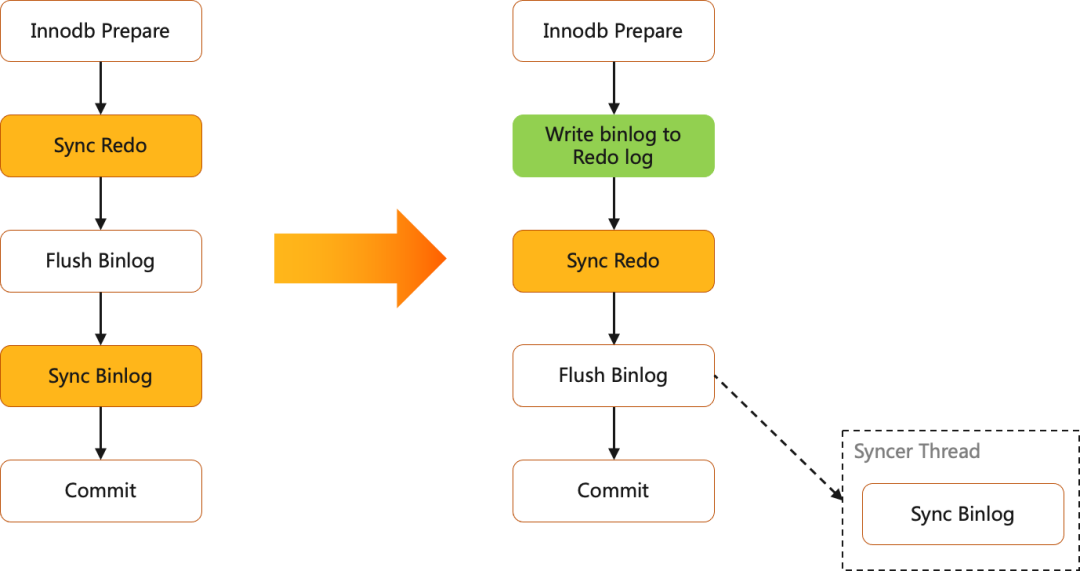

BGC optimization can merge many small IO operations by grouping, but the number of IO times that transaction commit needs to wait (sync redo and sync binlog twice IO) has not changed. AliSQL introduces Binlog in redo optimization to optimize IO further, which aims to merge two IO operations in the transaction commit phase into one.

How to implement optimization and verify the optimization effect will be described in subsequent articles.

The WAL mechanism of Innodb was mentioned in the previous chapter. Before Innodb writes any data, it needs to write the redo log of the data. Then, when you submit the data, you only need to wait for the redo log to be brushed synchronously and the data to be brushed asynchronously. Even if a crash occurs, as long as the redo log is complete, the data can be accurately restored. Binlog in redo, as the name implies, writes binlogs to redo logs. Under this architecture, binlog is data for the redo log and is protected by the redo log. Binlog disk brushing is completed by asynchronous threads. During transaction submission, there is no need to wait for binlog disk brushing. If a crash occurs, restore the binlog file based on the redo log. As such, the number of IO waiting times in the commit phase becomes one, which can improve the performance.

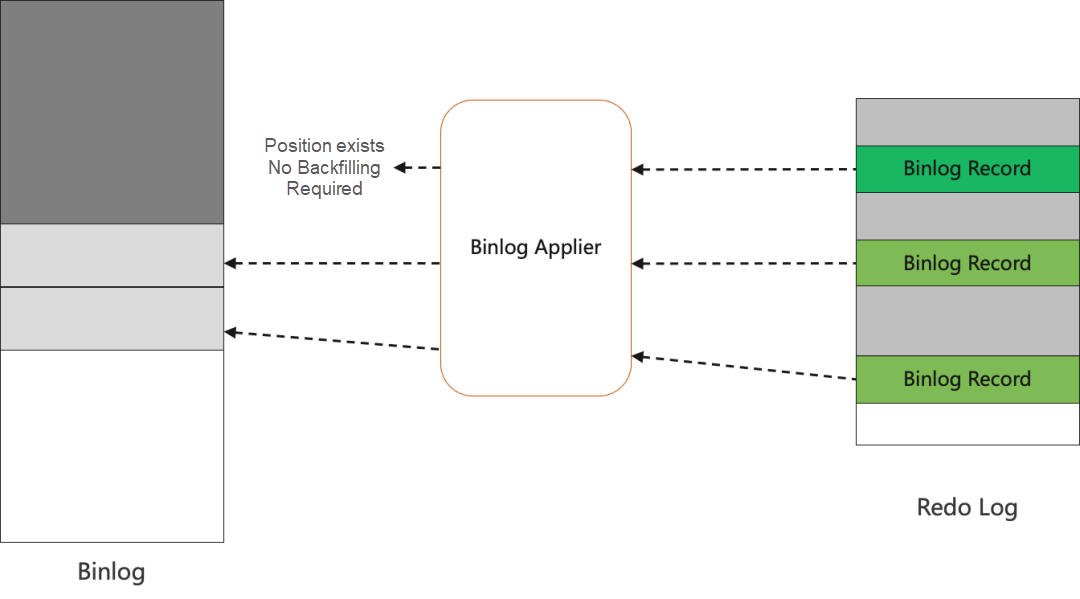

In the Binlog in redo architecture, the content in the binlog may be lost after the instance crashes. Therefore, you need to fill in the recovery process after the restart. A binlog applier is implemented in the server layer to complete binlogs. When applying redo during crash recovery, every time it reads the binlog event in the redo log, it parses the binlog position. If this position already exists in the binlog file, there is no need to backfill. If this position is not, he needs to backfill.

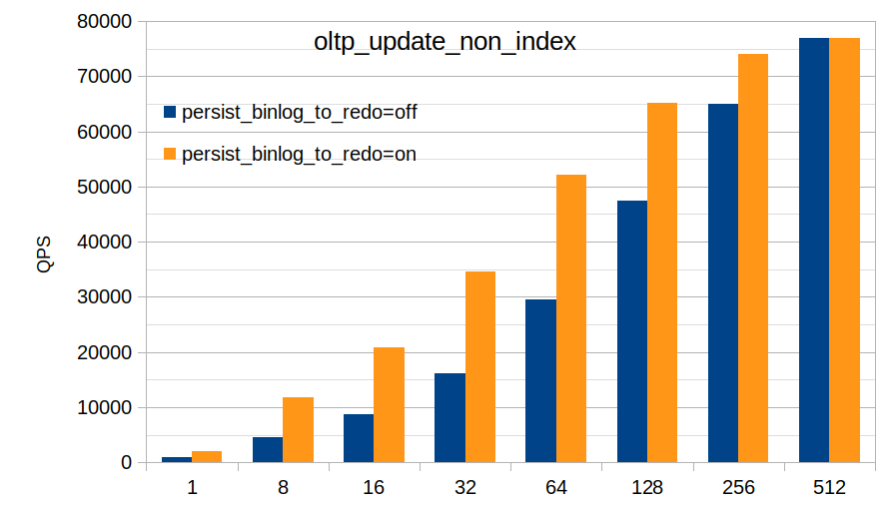

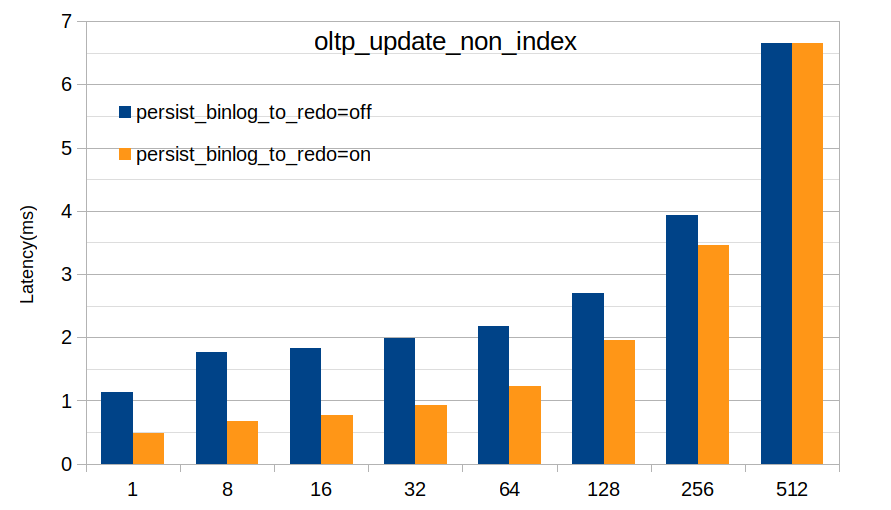

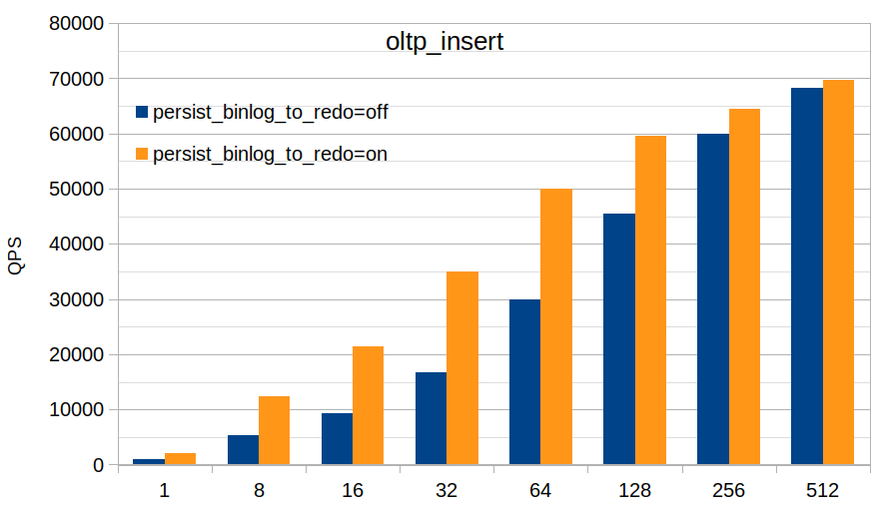

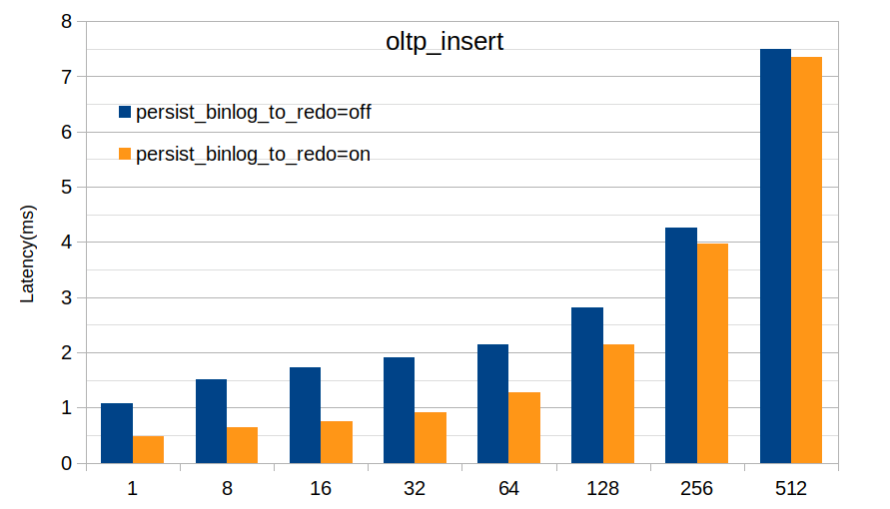

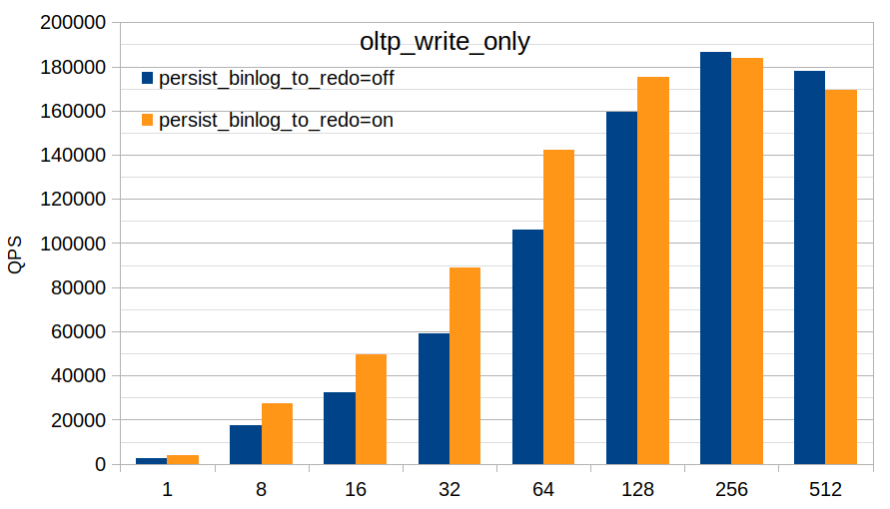

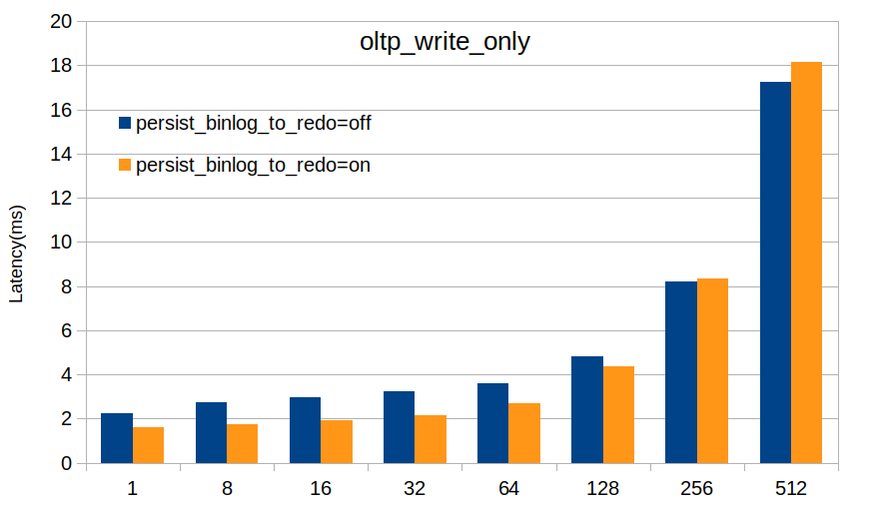

Test Environment: 32Core, 64 GB Ram, and ESSD storage

Test Tool: Sysbench

The Binlog In Redo function reduces IO once without losing reliability. In the case of no more than 256 concurrencies, the performance improvement and latency reduction of the Binlog in Redo function are significant. However, the performance improvement effect is limited for scenarios with large concurrency because the role of BGC merging IO is obvious under large concurrency. AliSQL introduces Binlog Parallel Flush optimization to optimize this bottleneck, which ultimately improves the performance of the instance in all business scenarios.

Stay tuned for the next part!

PolarDB-X Practice Series – Part 2: Use PolarDB-X to Develop Applications

Analysis on the Serverless Elasticity of Cloud-Native AnalyticDB for MySQL

Alibaba Cloud Community - February 24, 2023

ApsaraDB - March 19, 2020

Alibaba Clouder - April 8, 2018

ApsaraDB - September 10, 2025

ApsaraDB - August 1, 2022

ApsaraDB - March 27, 2024

ApsaraDB RDS for SQL Server

ApsaraDB RDS for SQL Server

An on-demand database hosting service for SQL Server with automated monitoring, backup and disaster recovery capabilities

Learn More ApsaraDB RDS for MySQL

ApsaraDB RDS for MySQL

An on-demand database hosting service for MySQL with automated monitoring, backup and disaster recovery capabilities

Learn More PolarDB for MySQL

PolarDB for MySQL

Alibaba Cloud PolarDB for MySQL is a cloud-native relational database service 100% compatible with MySQL.

Learn More AnalyticDB for MySQL

AnalyticDB for MySQL

AnalyticDB for MySQL is a real-time data warehousing service that can process petabytes of data with high concurrency and low latency.

Learn MoreMore Posts by ApsaraDB