Hadoop is an open source distributed computing framework that processes data in a reliable, efficient, and scalable way. This document provides a simple method for deploying a Hadoop cluster on Alibaba Cloud.

Through Alibaba Cloud Resource Orchestration Service (ROS), you can automate the creation of the VPC, NAT gateway, and ECS instances, as well as the Hadoop deployment itself, to deploy a Hadoop cluster quickly and conveniently. You can deploy a Hadoop cluster in three ways: manually, through the ROS console, or with an ROS template. The Hadoop cluster created in this document includes three nodes: master.hadoop, slave1.hadoop, and slave2.hadoop.

Installing a Hadoop cluster involves four steps: configure SSH login without a password; install the JDK; install and configure the Hadoop package; start and test the cluster.

All hosts in the cluster must be able to log in to one another over SSH without a password. To enable this, run the following commands on all three ECS instances so that every host temporarily uses the same private and public keys.

"ssh-keygen -t rsa -P '' -f '/root/.ssh/id_rsa' \n",

"cd /root/.ssh \n",

"mv id_rsa bak.id_rsa \n",

"mv id_rsa.pub bak.id_rsa.pub \n",

"echo '-----BEGIN RSA PRIVATE KEY-----' > id_rsa \n",

"echo 'MIIEowIBAAKCAQEAzfQ/QHwWB1njU9+Wu3RYi9g+g5rydSpAE0klefTJuZjtcaic' >> id_rsa \n",

"echo 'SCeBN5avih8UToZ148+Ef2YzOtoosqluZpoYLCPSpAqr8pmviBJIU3vfu9mnDG9L' >> id_rsa \n",

"echo 'oevT6K8w3wCBRCmqu+vc0Tpju/EeCuYK3+8w7e2I6F3+zIYzhhX3qmkocje7ACnV' >> id_rsa \n",

"echo 'yh2DB/2m3sogTMc0RT+5y3kJAnC6TOIlGsYjOOkPbEF3Ifn1o4ZjOFOmQlcRJer4' >> id_rsa \n",

"echo '1E6rTdXAxS2uDNMFBCf9Xyx7O9J+ELTCAXc4h7AE6WLdQb5Apzv4t1KswCAtRenP' >> id_rsa \n",

"echo '1xGcUYY8I/JUT2VvBWtQJennrk9jrPZUFDcmcwIDAQABAoIBAAwzDZQaRYvF7UtI' >> id_rsa \n",

"echo 'kTslVyFhe8J76SS7jfQWfxvMPi66OkZjQG6duG+8g0VhNei42j7WSfjp6trvlT2P' >> id_rsa \n",

"echo '/7QgKJJkxNNmtmy2Ycljm9kmG0ibSePYq9g5ieHcjr6G3yFUfoKHJBtYpBO74pWu' >> id_rsa \n",

"echo 'rrI5DuLpERUCjFc9E8w7fOIhPH4XZ6wk/EmPxHTgxZk+aMvqptyPSbUyiUOvCiZf' >> id_rsa \n",

"echo 'MD0ircs9vgtslMVDlz9m6CoiNz6B3Yf6eLRoGGMiGnsQzZHIfnHCMX8i65Jc+TvQ' >> id_rsa \n",

"echo 'fLopIHzwBwI55xpcOIBRgYiEAQJhLsSNSFugoxMcwe9RalTGGS21HOQu4b3ZylKM' >> id_rsa \n",

"echo '8ofEVKECgYEA/Y4MzN04wAsM1yNuHN+9sdiVLG28LWH3dgpcXqa9gyNsWs9Gf8uf' >> id_rsa \n",

"echo 'qbuvQGeKDRXCW93wO1jO+pCYVrkyY3l+KhBKIqmkJT5gFsa8dBUvEBLALiHNg3+o' >> id_rsa \n",

"echo 'jR2Vqsemk8kMZA8zfJ3FKcMb1pt4S2GqepqsdC3DgzIIsxufCh7jSzsCgYEAz/Cv' >> id_rsa \n",

"echo 'Z7gAdSFC8q6QxFxSyhfZwGA6QW6ZU7rZv9ZdGySvZg+vHbpNVmQ0BAQzJui3Qs9u' >> id_rsa \n",

"echo 'XQMpYafXWzKsPzG6ZWvYXTF7fuxlovvG8Qd2A2QnGtGMB9YtQMHVqbsUDwxMjiTn' >> id_rsa \n",

"echo 'VBZILkDf+WCwQ98P5UMoumI0goIcNZ+AXhcqrikCgYBkSwLvKfYfqH9Mvfv5Odsr' >> id_rsa \n",

"echo '9NKUv1c20FB1BYYh/mxp6eIbTW/CbwXZup6IqCvoHxpBAlna77b3T6iibSDsTgtE' >> id_rsa \n",

"echo 'kirw6Q8/mBukBrpWZGa4QeJ4nPBQuncuUmx4H/7Y6CaZkZW5DiMF8OIbEmYT0y7+' >> id_rsa \n",

"echo 'zh222r9CLtFYH23aL/uSLwKBgQCS1xyG2eE41aw5RBznDWtJW15iA5If8sJD5ocu' >> id_rsa \n",

"echo 'eWp2aImUQS8ghxdmEozI6U5WA7CmdWUyObFXTPc/Z6FLXwqJ5IZ+CRt0neuIFNSA' >> id_rsa \n",

"echo 'EQy9iFQ1FBUW06BRQpBns7yOg9jr6BOTxchjIV0I9caDp1nKRIrWU9NQ9iCFnYVA' >> id_rsa \n",

"echo '7IsvQQKBgFxgF7UOhwaiMb/ATSuhm2v9kVvRPEO9umdo7YJ9I4L09lYbAtpcnusQ' >> id_rsa \n",

"echo 'fIROYL25VeEMgYcQyInc3Fm/sgJdbXQnUy+3QbbCcBCmcLj27LPQyxuu7p9hbPPT' >> id_rsa \n",

"echo 'Mxx37OmYvOkSVTQz0T9HfDGvOJgt4t4cXD4T/7ewk62p6jdpSQrt' >> id_rsa \n",

"echo '-----END RSA PRIVATE KEY-----' >> id_rsa \n",

"echo 'ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDN9D9AfBYHWeNT35a7dFiL2D6DmvJ1KkATSSV59Mm5mO1xqJxIJ4E3lq+KHxROhnXjz4R/ZjM62iiyqW5mmhgsI9KkCqvyma+IEkhTe9+72acMb0uh69PorzDfAIFEKaq769zROmO78R4K5grf7zDt7YjoXf7MhjOGFfeqaShyN7sAKdXKHYMH/abeyiBMxzRFP7nLeQkCcLpM4iUaxiM46Q9sQXch+fWjhmM4U6ZCVxEl6vjUTqtN1cDFLa4M0wUEJ/1fLHs70n4QtMIBdziHsATpYt1BvkCnO/i3UqzAIC1F6c/XEZxRhjwj8lRPZW8Fa1Al6eeuT2Os9lQUNyZz root@iZ2zee53wf4ndvajz30cdvZ' > id_rsa.pub \n",

"cp id_rsa.pub authorized_keys \n",

"chmod 600 authorized_keys \n",

"chmod 600 id_rsa \n",

"sed -i 's/# StrictHostKeyChecking ask/StrictHostKeyChecking no/' /etc/ssh/ssh_config \n",

"sed -i 's/GSSAPIAuthentication yes/GSSAPIAuthentication no/' /etc/ssh/ssh_config \n",

"service sshd restart \n",

The temporary keys above are public. To keep the cluster secure, run the following commands on the master node to replace them on every host with the key pair that was generated on the master:

"scp -r /root/.ssh/bak.* root@$ipaddr_slave1:/root/.ssh/ \n",

"scp -r /root/.ssh/bak.* root@$ipaddr_slave2:/root/.ssh/ \n",

"ssh root@$ipaddr_slave1 \"cd /root/.ssh; rm -f id_rsa; mv bak.id_rsa id_rsa; rm -f id_rsa.pub; mv bak.id_rsa.pub id_rsa.pub; rm -f authorized_keys; cp id_rsa.pub authorized_keys; chmod 600 authorized_keys; chmod 600 id_rsa; exit\" \n",

"ssh root@$ipaddr_slave2 \"cd /root/.ssh; rm id_rsa; mv bak.id_rsa id_rsa; rm id_rsa.pub; mv bak.id_rsa.pub id_rsa.pub; rm authorized_keys; cp id_rsa.pub authorized_keys; chmod 600 authorized_keys; chmod 600 id_rsa; exit\" \n",

"cd /root/.ssh \n rm id_rsa \n mv bak.id_rsa id_rsa \n rm id_rsa.pub \n mv bak.id_rsa.pub id_rsa.pub \n rm authorized_keys \n cp id_rsa.pub authorized_keys \n chmod 600 authorized_keys \n chmod 600 id_rsa \n cd \n",

Install the JDK on the master node, then install it remotely on the slave nodes.

"yum -y install aria2 \n",

"aria2c --header='Cookie: oraclelicense=accept-securebackup-cookie' $JdkUrl \n",

"mkdir -p $JAVA_HOME \ntar zxvf jdk*-x64.tar.gz -C $JAVA_HOME \ncd $JAVA_HOME \nmv jdk*.*/* ./ \nrmdir jdk*.* \n",

"echo >> /etc/profile \n",

"echo export JAVA_HOME=$JAVA_HOME >> /etc/profile \n",

"echo export JRE_HOME=$JAVA_HOME/jre >> /etc/profile \n",

"echo export CLASSPATH=$JAVA_HOME/lib:$JAVA_HOME/jre/lib:$CLASSPATH >> /etc/profile \n",

"echo export PATH=$JAVA_HOME/bin:$JAVA_HOME/jre/bin:$PATH >> /etc/profile \n",

"source /etc/profile \n",

"ssh root@$ipaddr_slave1 \"mkdir -p $JAVA_HOME; exit\" \n",

"ssh root@$ipaddr_slave2 \"mkdir -p $JAVA_HOME; exit\" \n",

"scp -r $JAVA_HOME/* root@$ipaddr_slave1:$JAVA_HOME \n",

"scp -r $JAVA_HOME/* root@$ipaddr_slave2:$JAVA_HOME \n",

"ssh root@$ipaddr_slave1 \"echo >> /etc/profile; echo export JAVA_HOME=$JAVA_HOME >> /etc/profile; echo export JRE_HOME=$JAVA_HOME/jre >> /etc/profile; echo export CLASSPATH=$JAVA_HOME/lib:$JAVA_HOME/jre/lib:$CLASSPATH >> /etc/profile; echo export PATH=$JAVA_HOME/bin:$JAVA_HOME/jre/bin:$PATH >> /etc/profile; source /etc/profile; exit\" \n",

"ssh root@$ipaddr_slave2 \"echo >> /etc/profile; echo export JAVA_HOME=$JAVA_HOME >> /etc/profile; echo export JRE_HOME=$JAVA_HOME/jre >> /etc/profile; echo export CLASSPATH=$JAVA_HOME/lib:$JAVA_HOME/jre/lib:$CLASSPATH >> /etc/profile; echo export PATH=$JAVA_HOME/bin:$JAVA_HOME/jre/bin:$PATH >> /etc/profile; source /etc/profile; exit\" \n",

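Before moving on, it can help to confirm that the JDK landed where the profile entries expect it. The following is a minimal sketch and not part of the ROS template: `check_java_home` is a hypothetical helper that only tests whether an executable `bin/java` exists under the directory it is given.

```shell
#!/bin/sh
# Sketch: confirm that a JDK directory looks usable before continuing.
# check_java_home is a hypothetical helper, not part of the ROS template.
check_java_home() {
    dir="$1"
    if [ -z "$dir" ]; then
        echo "JAVA_HOME is empty" >&2
        return 1
    fi
    if [ ! -x "$dir/bin/java" ]; then
        echo "no executable java under $dir/bin" >&2
        return 1
    fi
    echo "ok: $dir/bin/java"
}

# Example (run on the master after the steps above):
#   check_java_home "$JAVA_HOME"
#   ssh root@"$ipaddr_slave1" "test -x $JAVA_HOME/bin/java" && echo "slave1 ok"
```

The helper checks only that the binary is present and executable; running `java -version` afterwards would confirm that the archive unpacked into a working JDK.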
" \n",

Download and install Hadoop:

"aria2c $HadoopUrl \n",

"mkdir -p $HADOOP_HOME \ntar zxvf hadoop-*.tar.gz -C $HADOOP_HOME \ncd $HADOOP_HOME \nmv hadoop-*.*/* ./ \nrmdir hadoop-*.* \n",

"echo >> /etc/profile \n",

"echo export HADOOP_HOME=$HADOOP_HOME >> /etc/profile \n",

"echo export HADOOP_MAPRED_HOME=$HADOOP_HOME >> /etc/profile \n",

"echo export HADOOP_COMMON_HOME=$HADOOP_HOME >> /etc/profile \n",

"echo export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native >> /etc/profile \n",

"echo export YARN_HOME=$HADOOP_HOME >> /etc/profile \n",

"echo export HADOOP_HDFS_HOME=$HADOOP_HOME >> /etc/profile \n",

"echo export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop >> /etc/profile \n",

"echo export HADOOP_OPTS=-Djava.library.path=$HADOOP_HOME/lib:$HADOOP_HOME/lib/native >> /etc/profile \n",

"echo export PATH=$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH >> /etc/profile \n",

"echo export CLASSPATH=.:$CLASSPATH:$HADOOP_HOME/lib >> /etc/profile \n",

"source /etc/profile \n",

" \n",

"mkdir -p $HADOOP_HOME/tmp \n",

"mkdir -p $HADOOP_HOME/dfs/name \n",

"mkdir -p $HADOOP_HOME/dfs/data \n",

###

Correctly configure the following four files. For details, see the template Hadoop_Distributed_Env_3_ecs.json.

core-site.xml, hdfs-site.xml, mapred-site.xml, yarn-site.xml

###
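As an illustration of what one of these files might contain, the sketch below writes a minimal core-site.xml. It is not taken from the template: the helper name `write_core_site`, port 9000, and the use of `$HADOOP_HOME/tmp` for `hadoop.tmp.dir` are assumptions. `fs.defaultFS` and `hadoop.tmp.dir` are standard Hadoop property names, and master.hadoop is the master node named earlier. The authoritative values are those in Hadoop_Distributed_Env_3_ecs.json.

```shell
#!/bin/sh
# Sketch: write a minimal core-site.xml into a Hadoop configuration directory.
# write_core_site is a hypothetical helper; port 9000 is an assumed value.
#   $1 = configuration directory, $2 = NameNode host, $3 = Hadoop temp directory
write_core_site() {
    conf_dir="$1"
    namenode_host="$2"
    tmp_dir="$3"
    cat > "$conf_dir/core-site.xml" <<EOF
<?xml version="1.0"?>
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://${namenode_host}:9000</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>${tmp_dir}</value>
  </property>
</configuration>
EOF
}

# Example:
#   write_core_site "$HADOOP_HOME/etc/hadoop" master.hadoop "$HADOOP_HOME/tmp"
```

Writing the file on the master before the copy step means the same configuration reaches both slaves when `$HADOOP_HOME` is copied over.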

"ssh root@$ipaddr_slave1 \"mkdir -p $HADOOP_HOME; exit\" \n",

"ssh root@$ipaddr_slave2 \"mkdir -p $HADOOP_HOME; exit\" \n",

"scp -r $HADOOP_HOME/* root@$ipaddr_slave1:$HADOOP_HOME \n",

"scp -r $HADOOP_HOME/* root@$ipaddr_slave2:$HADOOP_HOME \n",

" \n",

"cd $HADOOP_HOME/etc/hadoop \n",

"echo $ipaddr_slave1 > slaves \n",

"echo $ipaddr_slave2 >> slaves \n",

Format the Hadoop distributed file system (HDFS), disable the firewall, and start the cluster.

"hadoop namenode -format \n",

"systemctl stop firewalld \n",

"start-dfs.sh \n",

"start-yarn.sh \n",

Instead of performing the four steps in the previous section, you can use a template from the ROS console, which greatly simplifies the installation process.

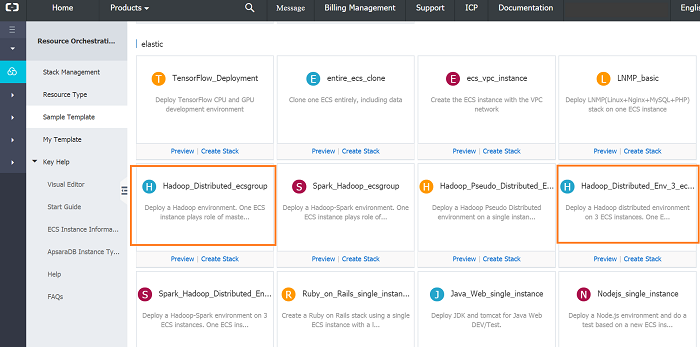

On your ROS console, go to Sample Template and select the most appropriate template for your application. You can deploy your Hadoop Cluster with a single click of a button using these templates. This is a convenient way to achieve an automated and repeatable deployment process.

Hadoop_Distributed_Env_3_ecs.json: One-click deployment can be achieved using this template. This template creates the same cluster as the one from the previous section.

Hadoop_Distributed_ecsgroup.json: This template allows the user to specify the number of slave nodes.

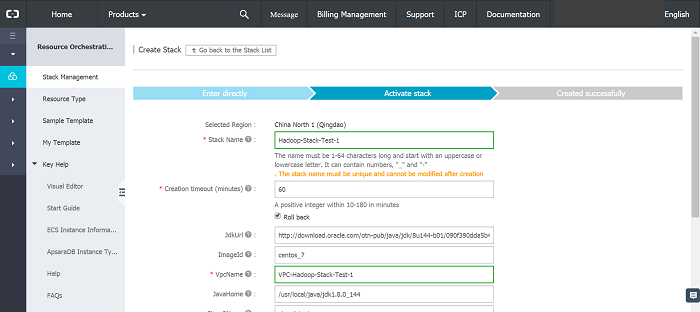

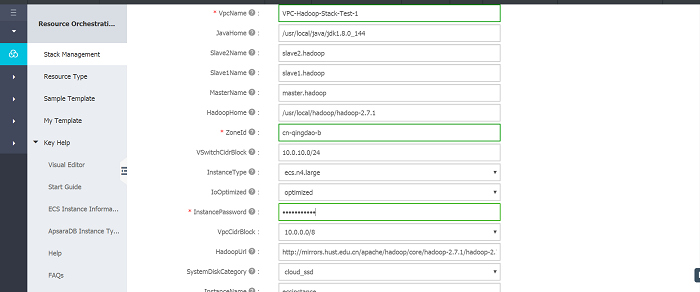

After selecting Hadoop_Distributed_Env_3_ecs, fill in the details as shown below.

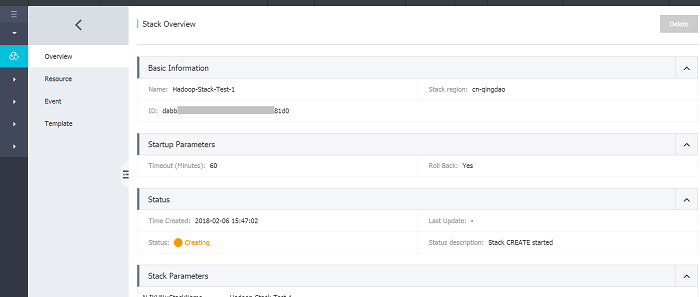

Once completed, you can activate your Hadoop stack.

Go back to your ROS console and click "Manage" to view the details of the newly created stack.

Note:

• Make sure that the JDK and Hadoop tar installation packages can be downloaded correctly. Select a URL similar to the following:

• http://download.oracle.com/otn-pub/java/jdk/8u121-b13/e9e7ea248e2c4826b92b3f075a80e441/jdk-8u121-linux-x64.tar.gz (The header 'Cookie: oraclelicense=accept-securebackup-cookie' must be set when downloading.)

• When you use a template to create a Hadoop cluster, only the CentOS operating system is available.

• The duration can be set to 120 minutes to prevent timeouts.

• We have selected Qingdao as the region for this example. You need to complete real name registration to use servers in Mainland China.
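A download URL can be checked from any machine with curl before it is entered into the template. This is a sketch under assumptions: `url_is_downloadable` and the `FETCH_CMD` override are hypothetical names, and the check sends a HEAD request, which some servers may answer differently from a full download.

```shell
#!/bin/sh
# Sketch: check that a package URL responds successfully before using it.
# url_is_downloadable and FETCH_CMD are hypothetical names. FETCH_CMD exists
# only so the check can be dry-run with a stand-in command; it defaults to curl.
url_is_downloadable() {
    url="$1"
    # -f: fail on HTTP errors, -sS: quiet but show errors,
    # -I: HEAD request only, -L: follow redirects.
    "${FETCH_CMD:-curl}" -fsSIL \
        -H 'Cookie: oraclelicense=accept-securebackup-cookie' \
        "$url" > /dev/null
}

# Example:
#   url_is_downloadable "$JdkUrl" && echo "JDK URL ok"
#   url_is_downloadable "$HadoopUrl" && echo "Hadoop URL ok"
```

The cookie header matters only for the Oracle JDK URL; sending it with the Hadoop URL as well should be harmless.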

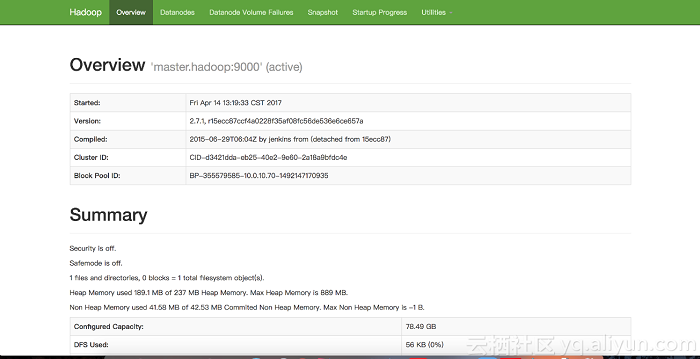

After deploying the Hadoop cluster, view the resource stack overview. You should be able to see the URL for your website.

Enter the website URL shown in the resource stack overview. If the following result is displayed, the deployment is successful.
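In addition to the web page, the running daemons can be checked from a shell on the nodes. The sketch below assumes that `jps` from the JDK is available and that the master is expected to run NameNode and ResourceManager while the slaves run DataNode and NodeManager, which is the usual layout after `start-dfs.sh` and `start-yarn.sh`. `missing_daemons` is a hypothetical helper, not part of the template.

```shell
#!/bin/sh
# Sketch: report which expected Hadoop daemons are absent from `jps` output.
# missing_daemons is a hypothetical helper. It reads jps output on stdin and
# prints each daemon name (given as arguments) that does not appear in it.
missing_daemons() {
    jps_output=$(cat)
    for name in "$@"; do
        # jps prints "<pid> <MainClass>", so compare the second field exactly.
        if ! printf '%s\n' "$jps_output" |
            awk -v n="$name" '$2 == n { found = 1 } END { exit !found }'; then
            echo "$name"
        fi
    done
}

# Example (on the master):
#   "$JAVA_HOME/bin/jps" | missing_daemons NameNode ResourceManager
# Example (for a slave, from the master):
#   ssh root@"$ipaddr_slave1" "$JAVA_HOME/bin/jps" | missing_daemons DataNode NodeManager
```

No output means every listed daemon was found. The exact comparison keeps SecondaryNameNode from being counted as NameNode.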
