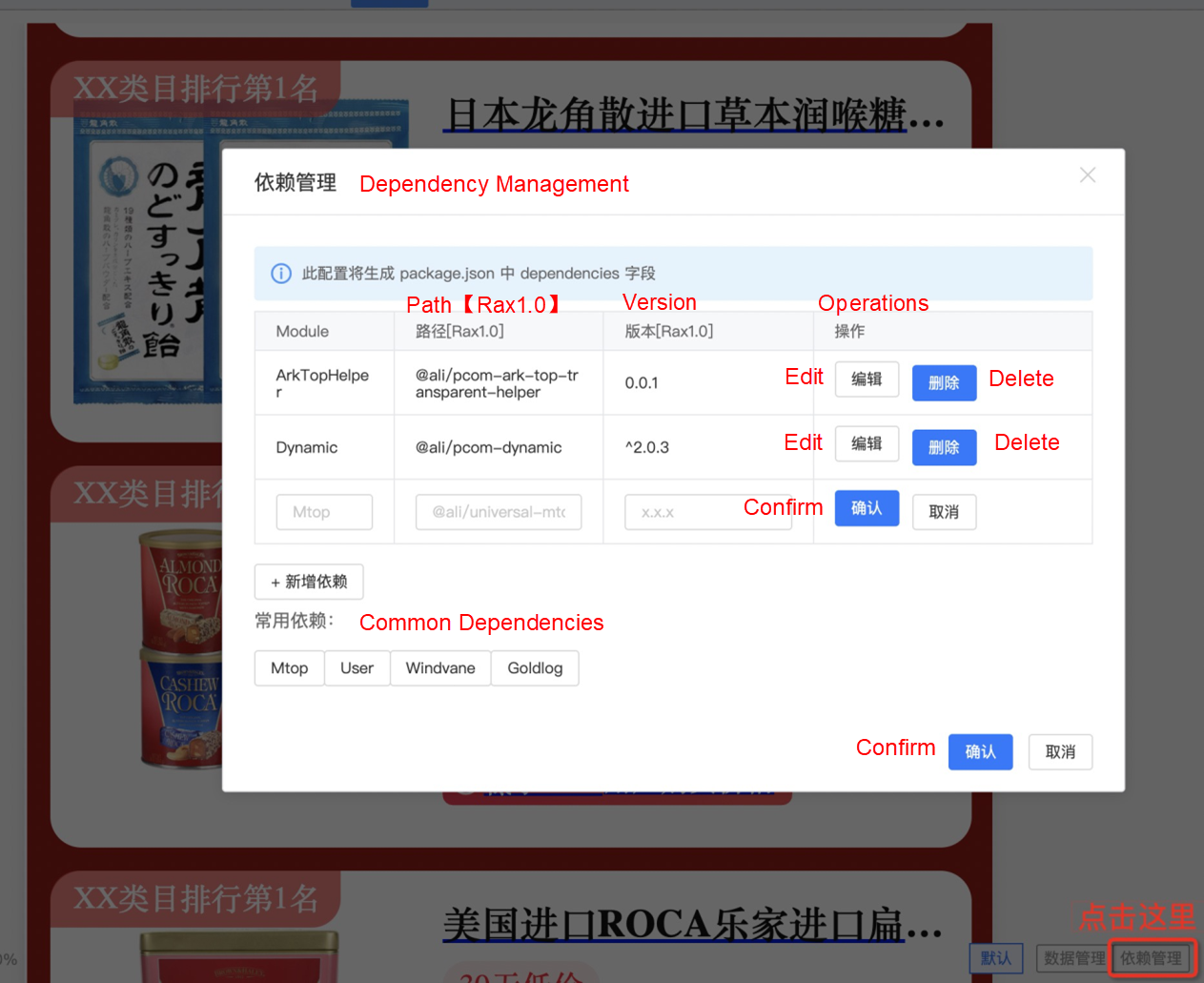

imgcook in Taobao provides a dependency management feature. It lets us pull in other packages, such as axios, underscore, and @rax/video, when writing functions in the imgcook editor.

However, it is still relatively cumbersome to use because the editor does not let us declare dependencies the way we are used to in package.json. Moreover, the editor is a GUI, so opening the code of each function to check its dependencies takes many steps. As a result, after each imgcook module is developed, if it uses dependency packages (as it does in most cases), we need to open the functions one by one, confirm the version numbers, and add the packages to the dependency management. This process is often painful for me.

imgcook provides a Schema source code development mode, which replaces the GUI steps with direct edits to the module protocol (Schema) in the editor. Searching the protocol for dependencies, I found that the dependency management function is implemented through imgcook.dependencies:

    {
      "alias": "Axios",
      "packageRax1": "axios",
      "versionRax1": "^0.24.0",
      "packageRaxEagle": "axios",
      "versionRaxEagle": "^0.24.0",
      "checkDepence": true
    }

Since the function code also lives in the protocol, can we process the original protocol document, scan the functions for their dependencies, write them to the dependencies node, and click Save so that the package list in dependency management is updated?
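To make the shape of one dependency entry concrete, here is a minimal sketch that builds such an object from a package name and a version. The helper name `makeDependency` is hypothetical and not part of imgcook; the field names are copied from the protocol example above, and the sketch assumes the Rax1 and RaxEagle fields always carry the same package and version, as they do in that example.

```javascript
// Hypothetical helper: builds one entry for imgcook.dependencies.
// Field names come from the protocol example; the helper itself is an
// illustration and not an imgcook API.
function makeDependency(packageName, version, alias = packageName) {
  return {
    alias,
    packageRax1: packageName,
    versionRax1: version,
    packageRaxEagle: packageName,
    versionRaxEagle: version,
    checkDepence: true, // spelled as it appears in the protocol example
  };
}

const dep = makeDependency('axios', '^0.24.0', 'Axios');
console.log(JSON.stringify(dep, null, 2));
```

Generating entries through one function keeps the two package and version pairs in sync, which is easy to get wrong when editing them by hand.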

For this purpose, I implemented the function of pulling module protocol content in @imgcook/cli. The corresponding Pull Requests are imgcook/imgcook-cli#12 and imgcook/imgcook-cli#15. The protocol (Schema) of a module can now be pulled through the command line tool as follows:

    $ imgcook pull <id> -o json

After execution, the module protocol content is output to stdout in the command line.

With this function, we can build command line tools that follow the Unix Pipeline model and use imgcook-cli as their data source. For example, the JSON content output by imgcook pull is not easy to read, so we can write imgcook-prettyprint to beautify it. The code implementation is listed below:

    #!/usr/bin/env node

    let originJson = '';

    process.stdin.on('data', (buf) => {
      originJson += buf.toString('utf8');
    });

    process.stdin.on('end', () => {
      const origin = JSON.parse(originJson);
      console.log(JSON.stringify(origin, null, 2));
    });

The program above receives the data from the upstream of the pipeline through process.stdin, namely the content of the imgcook module protocol. Then, in the end event, it parses the data and prints a beautified version. Run the following command:

    $ imgcook pull <id> -o json | imgcook-prettyprint

Now, we can get the beautified output. This is a simple example of the Unix Pipeline program.
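One detail of reading from a pipe is worth a short sketch: data arrives in chunks, and a multi-byte UTF-8 character can in principle be split across two of them. Decoding each chunk separately would then corrupt that character. The example below assumes the chunks are Buffers, as in the script above, and decodes only after concatenating them. The function names are illustrative, and the split input is simulated rather than read from a real pipe.

```javascript
// Concatenate the raw chunks first and decode once, so a character whose
// bytes are spread over two chunks is decoded correctly.
function decodeChunks(chunks) {
  return Buffer.concat(chunks).toString('utf8');
}

// Same formatting step as imgcook-prettyprint: two-space indentation.
function prettyPrint(jsonText) {
  return JSON.stringify(JSON.parse(jsonText), null, 2);
}

// Simulated input: the two bytes of "\u00e9" land in different chunks.
const whole = Buffer.from('{"name":"caf\u00e9"}', 'utf8');
const splitAt = whole.indexOf(0xc3) + 1; // cut between the two bytes
const chunks = [whole.subarray(0, splitAt), whole.subarray(splitAt)];

console.log(prettyPrint(decodeChunks(chunks)));
```

In a real script, the data handler would push each `buf` into an array and the end handler would call `decodeChunks`. Calling `process.stdin.setEncoding('utf8')` before attaching the handlers is another way to get correctly decoded strings.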

Next, let's learn about how we can automatically generate dependencies this way. Similar to the example above, let's create a file called ckdeps:

    #!/usr/bin/env node

    let originJson = '';

    process.stdin.on('data', (buf) => {
      originJson += buf.toString('utf8');
    });

    process.stdin.on('end', () => {
      transform();
    });

    async function transform() {
      const origin = JSON.parse(originJson);
      const funcs = origin.imgcook?.functions || [];
      if (funcs.length === 0) {
        // No functions to scan: pass the protocol through unchanged.
        process.stdout.write(originJson);
        return;
      }
      console.log(JSON.stringify(origin));
    }

Each entry in origin.imgcook.functions holds the code of one function, for example:

    {
      "content": "export default function mounted() {\n\n}",
      "name": "mounted",
      "type": "lifeCycles"
    }

The next step is to parse content, find the import statements in the code, and generate the corresponding dependency objects in origin.imgcook.dependencies. We use @swc/core to parse the JavaScript code:

    const swc = require('@swc/core');

    // Inside the async transform() function:
    await Promise.all(funcs.map(async ({ content }) => {
      const ast = await swc.parse(content);
      // ast is the module AST (Abstract Syntax Tree)
    }));

With the AST in hand, we can read the information of the import statements from it. Because the AST is complex, @swc/core provides a dedicated traversal mechanism:

    const { Visitor } = require('@swc/core/visitor');

    /**
     * Save the list of dependency objects obtained through function parsing.
     */
    const liveDependencies = [];

    /**
     * Define the visitor.
     */
    class ImportsExtractor extends Visitor {
      visitImportDeclaration(node) {
        const alias = 'Default';
        liveDependencies.push({
          alias,
          packageRax1: node.source.value,
          versionRax1: '',
          packageRaxEagle: node.source.value,
          versionRaxEagle: '',
          checkDepence: true,
        });
        return node;
      }
    }

    // Usage
    const importsExtractor = new ImportsExtractor();
    importsExtractor.visitModule(ast);

The ImportsExtractor class inherits from the Visitor in @swc/core/visitor. Import declarations have the syntax type ImportDeclaration, so the class only needs to implement visitImportDeclaration(node) to receive every import statement. Inside that method, we convert each node into a dependency object according to the node's structure. After defining the extractor, feed the AST to it so that all the dependencies of the module are collected for the later generation step.
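The conversion from import nodes to dependency objects can be sketched without the parser by using hand-written nodes. The node shape below is an assumption modeled on an ImportDeclaration node (a `source.value` string plus a `specifiers` array whose default import has type `ImportDefaultSpecifier` and a `local.value` name), so check it against the real AST before relying on it. `aliasOf` and `collectDependencies` are hypothetical helpers. The sketch also skips relative imports and duplicate packages, two cases the visitor above does not handle.

```javascript
// Assumed node shape (verify against the real AST):
// { source: { value }, specifiers: [{ type, local: { value } }] }
function aliasOf(node) {
  const def = (node.specifiers || []).find(
    (s) => s.type === 'ImportDefaultSpecifier'
  );
  // Fall back to the placeholder alias used by the visitor above.
  return def ? def.local.value : 'Default';
}

function collectDependencies(importNodes) {
  const byPackage = new Map();
  for (const node of importNodes) {
    const source = node.source.value;
    // Relative or absolute paths point at local files, not packages.
    if (source.startsWith('.') || source.startsWith('/')) continue;
    // Keep the first entry when several functions import the same package.
    if (byPackage.has(source)) continue;
    byPackage.set(source, {
      alias: aliasOf(node),
      packageRax1: source,
      versionRax1: '',
      packageRaxEagle: source,
      versionRaxEagle: '',
      checkDepence: true,
    });
  }
  return [...byPackage.values()];
}

// Hand-written sample nodes standing in for parser output.
const nodes = [
  { source: { value: 'axios' }, specifiers: [{ type: 'ImportDefaultSpecifier', local: { value: 'axios' } }] },
  { source: { value: './utils' }, specifiers: [] },
  { source: { value: 'axios' }, specifiers: [] },
  { source: { value: 'underscore' }, specifiers: [{ type: 'ImportNamespaceSpecifier', local: { value: '_' } }] },
];
const deps = collectDependencies(nodes);
console.log(deps.map((d) => `${d.alias} -> ${d.packageRax1}`));
```

One way to use this would be to have the visitor push the raw nodes into an array and call `collectDependencies` once after all functions have been visited.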

As we can see from the code above, the version number currently uses an empty string. This will cause the loss of the dependency version information if we update the protocol content. Therefore, we need to define a method to obtain the version.

Since frontend dependencies are published to the NPM Registry, we can obtain the version through its HTTP interface. For example:

    const axios = require('axios');

    async function fillVersions(dep) {
      // Fetch the package metadata; axios parses the JSON response body.
      const pkgJson = await axios.get(`https://registry.npmjs.org/${dep.packageRax1}`, { responseType: 'json' });
      if (pkgJson.data['dist-tags']) {
        const latestVersion = pkgJson.data['dist-tags'].latest;
        dep.versionRax1 = `^${latestVersion}`;
        dep.versionRaxEagle = `^${latestVersion}`;
      }
      return dep;
    }

Requesting https://registry.npmjs.org/${packageName} returns the package information stored in the Registry. data['dist-tags'].latest is the version that the latest tag points to, namely the latest version of the package. We then prefix this version number with ^. (You can also change the version rule and the NPM Registry according to your needs.)
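The registry lookup expects a package name, while an import source can also be a path inside a package. The sketch below reduces an import specifier to its package name before any lookup. `packageNameOf` is a hypothetical helper, and the deep path `@rax/video/lib/index` is an invented example used only to show the scoped case.

```javascript
// Hypothetical helper: reduce an import specifier to the package name.
function packageNameOf(specifier) {
  const parts = specifier.split('/');
  // Scoped packages keep two segments ("@scope/name"); others keep one.
  return specifier.startsWith('@') ? parts.slice(0, 2).join('/') : parts[0];
}

// '@rax/video/lib/index' is an invented path for illustration.
for (const specifier of ['axios', 'lodash/get', '@rax/video', '@rax/video/lib/index']) {
  console.log(`${specifier} -> ${packageNameOf(specifier)}`);
}
```

Applying this to the import source before building the dependency object would keep deep imports from being looked up as if they were package names.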

Now, update the dependency information we grabbed from the function code and output it:

    async function transform() {
      // ...
      origin.imgcook.dependencies = newDeps;
      console.log(JSON.stringify(origin));
    }

Then, run the following command:

    $ imgcook pull <id> -o json | ckdeps
    > { ..., "dependencies": [{ ...<updated dependencies> }] }

Next, the developer only needs to copy the output JSON into the editor and save it. However, the editor does not accept raw JSON; the content has to be an ECMAScript Module (export default { ... }). Does this mean we need to edit the content manually every time? The answer is no. The Unix Pipeline idea solves this step as well. We only need to create a new node script called imgcook-save.

    #!/usr/bin/env node

    let originJson = '';

    process.stdin.on('data', (buf) => {
      originJson += buf.toString('utf8');
    });

    process.stdin.on('end', () => {
      transform();
    });

    async function transform() {
      const origin = JSON.parse(originJson);
      console.log(`export default ${JSON.stringify(origin, null, 2)}`);
    }

Finally, the complete command is:

    $ imgcook pull <id> -o json | ckdeps | imgcook-save
    > export default { ... }

Then, we can directly copy the content to the editor.

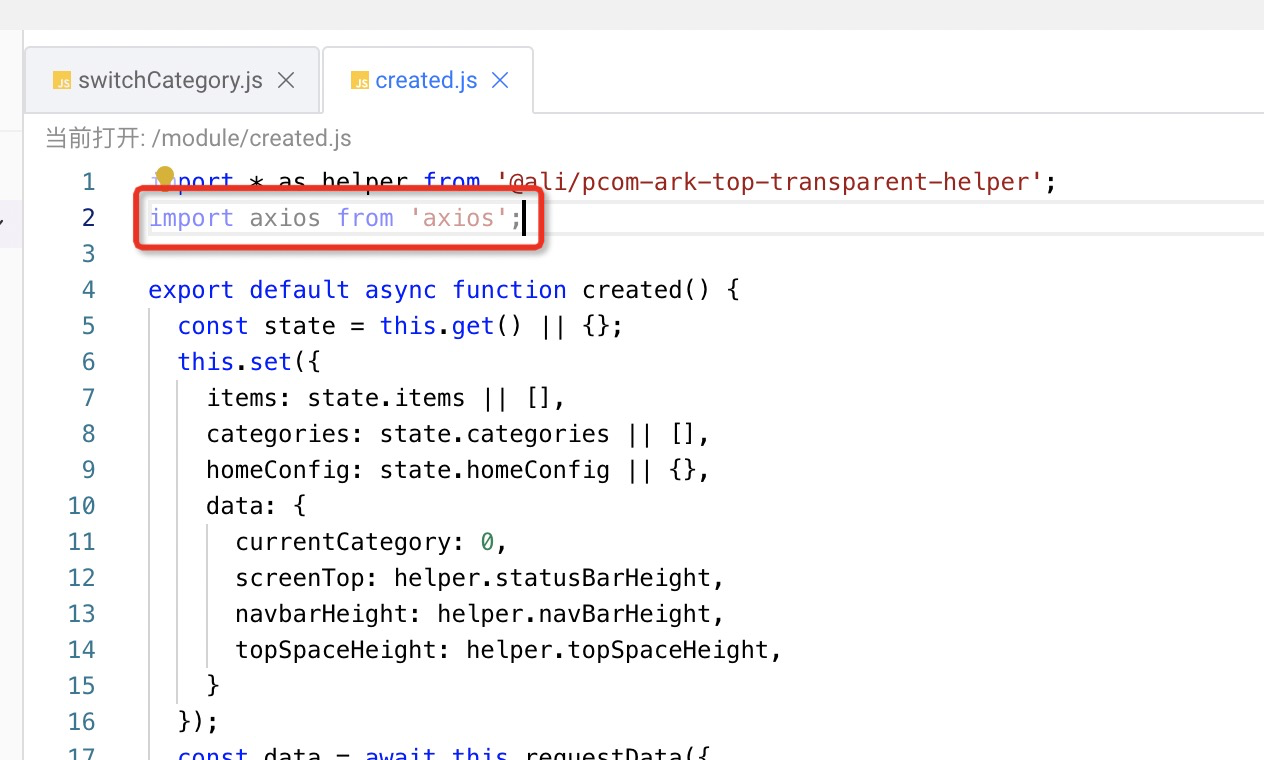
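The three scripts in this article repeat the same stdin handling, so one possible refactoring is a small shared helper. The sketch below is an assumption about how that could look and is not code from imgcook-cli: `runFilter` is a hypothetical name, and the demo uses in-memory streams in place of a real pipe.

```javascript
const { Readable, Writable } = require('node:stream');

// Hypothetical helper: turns a (string -> string | Promise<string>) transform
// into a pipeline stage that reads all of `input` and writes to `output`.
async function runFilter(transform, input = process.stdin, output = process.stdout) {
  const chunks = [];
  for await (const chunk of input) chunks.push(Buffer.from(chunk));
  const result = await transform(Buffer.concat(chunks).toString('utf8'));
  output.write(result + '\n');
}

// The imgcook-save step expressed as a plain function.
const toEsModule = (jsonText) =>
  `export default ${JSON.stringify(JSON.parse(jsonText), null, 2)}`;

// Demo: feed two chunks through the stage and collect what it writes.
const collected = [];
const sink = new Writable({
  write(chunk, _encoding, done) {
    collected.push(chunk.toString());
    done();
  },
});
const demo = runFilter(toEsModule, Readable.from(['{"a":', '1}']), sink)
  .then(() => console.log(collected.join('')));
```

With such a helper, a script like imgcook-save would shrink to a single `runFilter(toEsModule)` call, and each transform could be exercised without a terminal.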

For example, I added an Axios dependency to a function in one of my projects. Then, I closed the window, clicked Save (to make sure the Schema was saved), and ran this command:

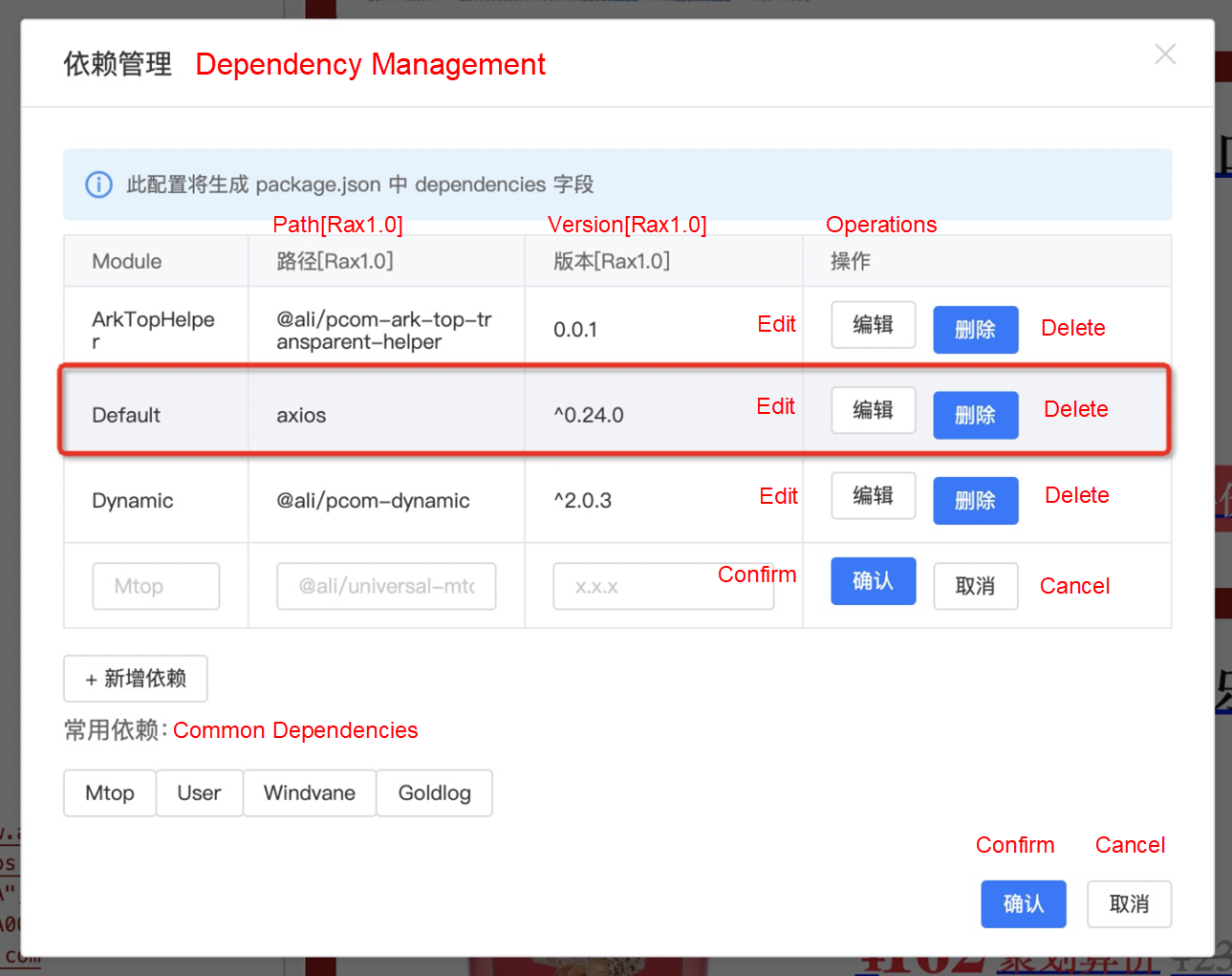

    $ imgcook pull <id> -o json | ckdeps -f | imgcook-save

Next, I opened the Schema in the editor, pasted the generated content, and saved it. In Dependency Management, we can see:

The dependencies generated by parsing the code now appear in the dependency panel. We can finally focus more on other things.

Is this the end? In macOS, the pbcopy command is provided to copy stdin to the clipboard. Combine it with the example of imgcook:

    $ imgcook pull <id> -o json | ckdeps | imgcook-save | pbcopy

This way, we do not need to copy the content. After the command is executed, we can open the editor and use the ⌘V shortcut.

Lastly, I would like to take a broader view. Now that @imgcook/cli can output JSON text, imgcook is connected to the Unix Pipeline ecosystem. This way, we can build many interesting and practical tools and use them together with many Unix tools (such as pbcopy, grep, cat, and sort).

This article takes the automatic generation of dependencies as an example to verify that using Unix Pipeline alongside the imgcook editor is feasible and pleasant to work with. With this feature, I can make up for many shortcomings in the editor experience and still use the core functions of imgcook with ease.
