Disclaimer: This is a translated work of Qinxia's 漫谈分布式系统. All rights reserved to the original author.

In the previous two articles, we introduced two optimization methods of SQL query performance based on big data: RBO and CBO.

RBO is loyal to established rules, while CBO is more concerned with actual costs. Fundamentally, they are two optimization methods with completely different ideas.

They have individual focus but also have commonalities.

Although CBO is based on cost, it also selects the least costly one among several alternative plans. The alternative plans are not randomly selected but are usually selected based on experience.

For example, join order, which is often used as an example, was made into different alternative plans to be evaluated after past experiences showed that the different order of join had a significant impact on performance.

From this point of view, broadly speaking, RBO and CBO are both based on experience.

However, CBO adds some variables to these experiences, which are various statistical metrics of actual data.

As we said in the previous article, these statistical metrics have shortcomings:

This type of problem can be summarized as the inaccuracy of statistical metrics.

The introduction of statistical metrics makes the optimization measures more accurate and flexible, but the shortcomings above make the statistical metrics inaccurate and inflexible.

In addition, another common problem is that the settings of the threshold class are often not universal. In some cases, performance may be affected. For example, the default value of spark.sql.shuffle.partitions is 200, but the appropriate number of partitions is different when the amount of data is different.

The existence of static configuration also chooses optimization methods that are not accurate and flexible enough.

The experience is rigid. Therefore, we need more accurate and flexible optimization methods.

It is necessary to have timely and full coverage of statistical data to be more accurate. It is necessary to have relatively dynamic threshold settings to be more flexible.

The Adaptive Query Execution technology introduced by Spark in version 3.0 demonstrates good ideas for these two problems:

Let's take a look at the three main optimizations currently supported by Spark AQE.

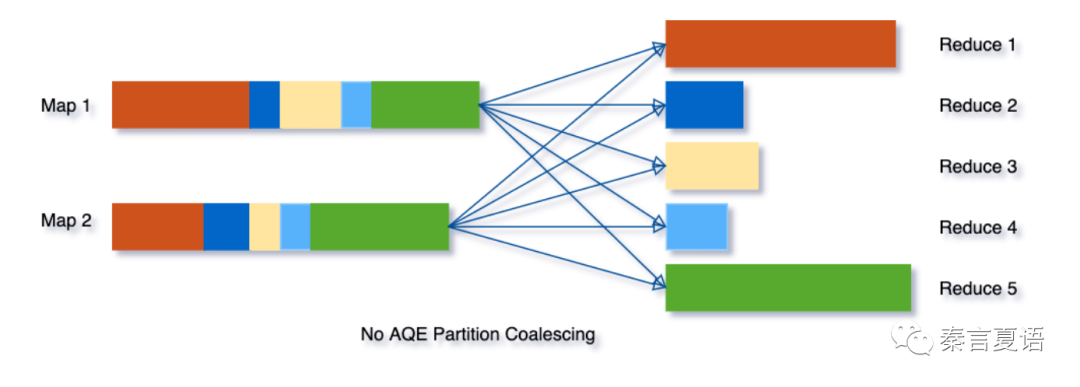

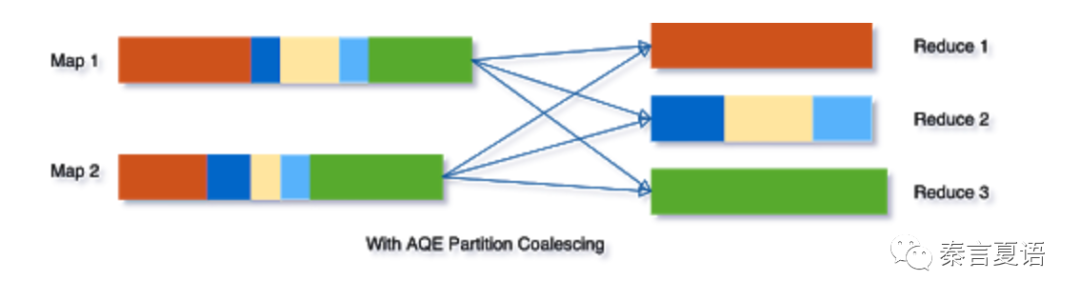

As mentioned earlier, in Spark, the number of shuffle partitions is set through static configuration. The result that the number of partitions is not universal is inevitable.

For example, in the example above, it is clear that Reduces 2, 3, and 4 are very small. It would be good to merge them.

In order to achieve this effect, you need to set more indirectly and flexibly rather than set a fixed number of partitions.

Think about it. How did we judge that Reduces 2, 3, and 4 are smaller? It is based on the amount of data in the partition.

Therefore, you can change the setting parameters that control the number of partitions from directly setting the number of partitions to setting the data size of each partition.

Of course, the data size of each partition is still an empirical value and is limited to experience. However, the effect is much more accurate and flexible than setting the number of partitions.

After the partition size is set, there are two possibilities:

There is no problem with merging. However, splitting may lead to shuffle and drag down performance. Therefore, Spark AQE adopts the strategy of setting a large number of partitions first and then merging small partitions.

This optimization solves the problem of inflexible static configuration.

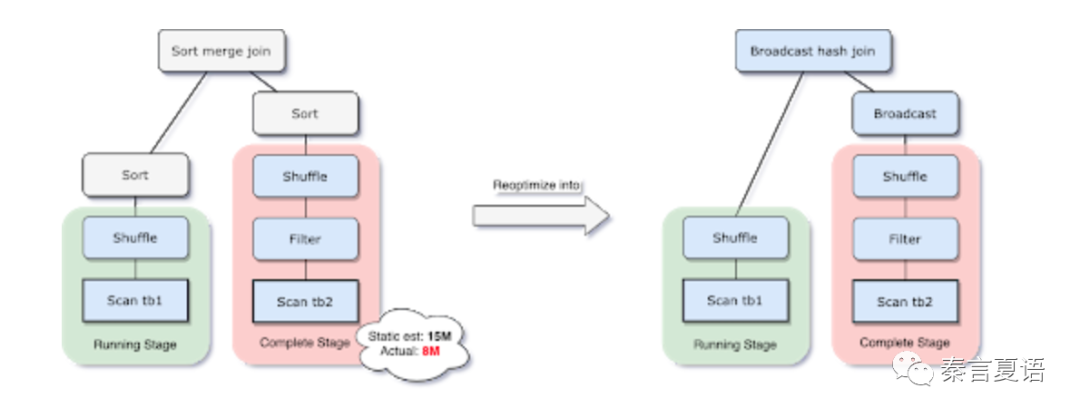

A previous article in this series focused on the impact of different join strategies on performance.

Among them, broadcast join is the most efficient join of Spark. However, because the data needs to be stored in memory, it is suitable for small tables.

What size of a table can be seen as small? Spark controls this threshold by a spark.sql.autoBroadcastJoinThreshold parameter.

However, this parameter examines the size of the source table. Once operations (such as filter) are encountered, the filtered data may be much smaller than the size of the source table, and the estimation may be stuck near the boundary value.

As shown in the chart above, AQE can evaluate the data size at run time and then optimize sort merge join to broadcast join.

This optimization solves the problem of inaccurate estimation.

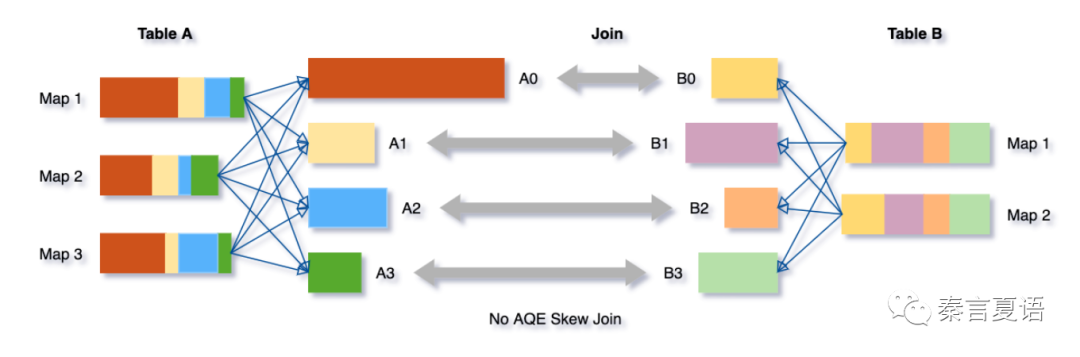

Data skew is a common optimization scenario and often takes a lot of time. However, there is a common solution that can be done automatically, as shown in the following scenario:

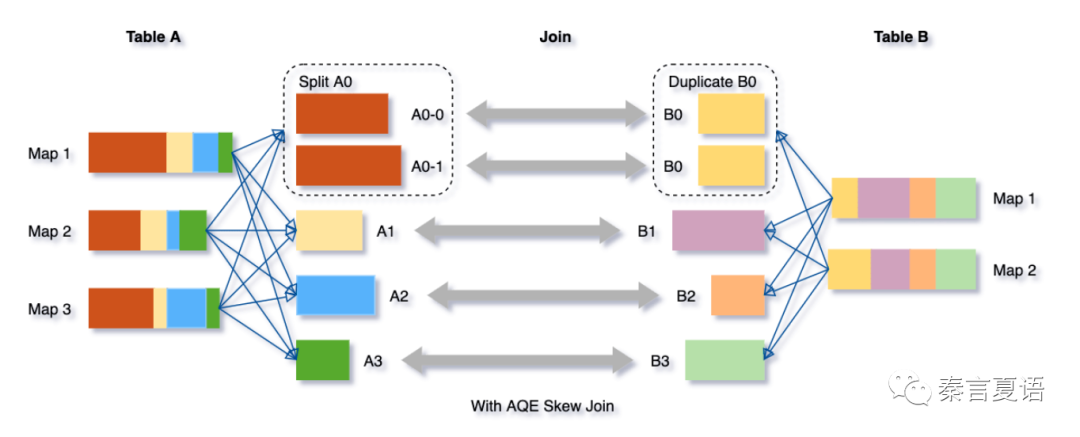

The A0 partition significantly affects the overall execution time due to data skew. The common optimization method is to split and then join the corresponding partitions and finally merge the results.

As I said earlier, the skew solution is generic and well-handled at the code level.

The question is – how can we determine if it is skewed? Is it appropriate to make a static configuration like that used in setting the number of partitions? The answer is no.

AQE adopts the idea that if absolute values are not flexible, use relative values. If the size of one partition is spark.sql.adaptive.skewJoin.skewedPartitionFactor times larger than the other partitions, and the default value is 5 times, it is considered to be skewed and needs to be split.

This optimization provides a general solution to the data skew and solves the problem that static configuration is not universal.

We can see the idea of Spark AQE from the three examples below:

The way Spark divides stage scheduling according to DAG makes it possible to get complete statistical information after the end of the previous stage. Then, it can do real-time optimization again. AQE can intervene at the correct place without affecting the overall implementation framework.

As a supplement and improvement to RBO and CBO, the idea of AQE is worthy of our consideration and reference. We hope AQE can be frequently used in Spark and hope other computing engines can refer to it.

This is a carefully conceived series of 20-30 articles. I hope to give everyone a core grasp of the distributed system in a storytelling way. Stay tuned for the next one!

Learning about Distributed Systems - Part 21: Cost-based Optimization

Learning about Distributed Systems - Part 23: Distributed Data Warehouse

64 posts | 59 followers

FollowApache Flink Community China - February 28, 2022

ApsaraDB - July 9, 2025

dehong - July 8, 2020

Alibaba Cloud Data Intelligence - September 5, 2023

Alibaba Cloud Native - January 16, 2025

Alibaba Clouder - June 3, 2019

64 posts | 59 followers

Follow Hybrid Cloud Distributed Storage

Hybrid Cloud Distributed Storage

Provides scalable, distributed, and high-performance block storage and object storage services in a software-defined manner.

Learn More Storage Capacity Unit

Storage Capacity Unit

Plan and optimize your storage budget with flexible storage services

Learn More Hybrid Cloud Storage

Hybrid Cloud Storage

A cost-effective, efficient and easy-to-manage hybrid cloud storage solution.

Learn More Data Lake Storage Solution

Data Lake Storage Solution

Build a Data Lake with Alibaba Cloud Object Storage Service (OSS) with 99.9999999999% (12 9s) availability, 99.995% SLA, and high scalability

Learn MoreMore Posts by Alibaba Cloud_Academy