By Dehong Gao, Linbo Jin, Ben Chen, Minghui Qiu, Peng Li, Yi Wei, Yi Hu, and Hao Wang

In this article, we address text and image matching in cross-modal retrieval for the fashion industry. Unlike matching in the general domain, fashion matching must pay much more attention to the fine-grained information in fashion images and texts. Pioneering approaches detect regions of interest (RoIs) from images and use the RoI embeddings as image representations. In general, RoIs tend to represent the "object-level" information in fashion images, while fashion texts tend to describe more detailed information, e.g., styles and attributes. RoIs are thus not fine-grained enough for fashion text and image matching. To this end, we propose FashionBERT, which leverages patches as image features. With the pre-trained BERT model as the backbone network, FashionBERT learns high-level representations of texts and images. Meanwhile, we propose an adaptive loss to trade off multitask learning in FashionBERT modeling. Two tasks (i.e., text and image matching and cross-modal retrieval) are used to evaluate FashionBERT. On the public dataset, experiments demonstrate that FashionBERT achieves significant performance improvements over the baseline and state-of-the-art approaches. In practice, FashionBERT is applied in a concrete cross-modal retrieval application. We provide a detailed analysis of matching performance and inference efficiency.

You can download the full version of the paper by clicking here.

Over the last decade, a great amount of multimedia data (including image, video, audio, and text) has emerged on the internet. To search these multi-modal data for important information efficiently, multimedia retrieval has become an essential technique and is widely studied by researchers worldwide. Recently, there has been a sharp increase in research interest in cross-modal retrieval, which takes one type of data as the query and retrieves relevant data of another type. The key to cross-modal retrieval is learning a meaningful cross-modal matching [40].

There is a long line of research in cross-modal matching, especially in text and image matching. Early approaches usually project visual and textual representations into a shared embedding subspace for cross-modal similarity computation, or fuse them to learn matching scores, for example, the CCA-based approaches [14, 25, 44] and the VSE-based approaches [10, 11, 18, 41]. Very recently, pre-training has been successfully applied in Computer Vision (CV) [1, 2] and Natural Language Processing (NLP) [8, 46]. This has inspired several researchers to adopt the pre-trained BERT model as the backbone network for learning cross-modal representations [19, 34]. The proposed approaches have achieved promising performance on several downstream tasks, such as cross-modal retrieval [40], image captioning [1], and visual question answering [2]. However, these studies center on text and image matching in the general domain. In this paper, we focus on text and image matching in the fashion industry [47], which mainly covers clothing, footwear, accessories, makeup, etc.

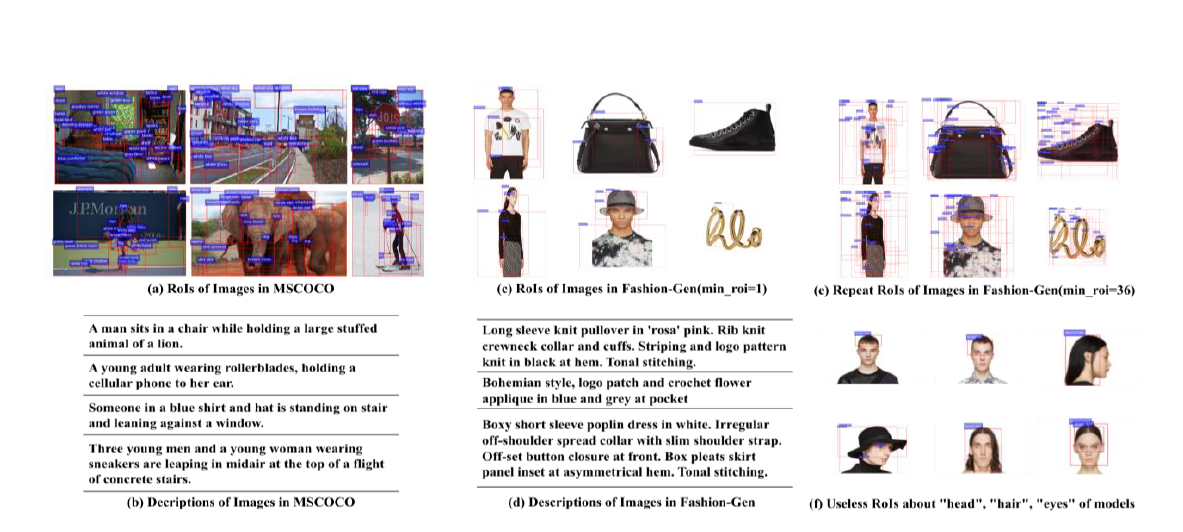

Figure 1: Comparison of text and image in the general and fashion domains. (a) and (b) are the RoIs and descriptions of MSCOCO images from the general domain. (c) and (d) are the relatively rare RoIs and fine-grained descriptions of Fashion-Gen images from the fashion domain. (e) and (f) show the large number of repeated and useless RoIs detected from fashion images.

The main challenge for these pioneering matching approaches is how to extract semantic information from images and integrate it into the BERT model. All current approaches detect RoIs [48] from images, as seen in Figure 1(a), and treat these RoIs as "image tokens". This RoI method does not work well in the fashion domain, since relatively few RoIs can be detected from fashion images. Figure 1(c) shows the detected RoIs of Fashion-Gen images of different categories, where the minimum number of RoIs to detect per image is set to one. We found that on average 19.8 RoIs can be detected from one MSCOCO [49] image, but only 6.4 from one Fashion-Gen [50] image. This is because a fashion image generally contains only one or two objects (e.g., a coat and/or a pair of pants) against a flat background. We can raise the minimum number of RoIs to detect, but under this setting many detected RoIs are repeated, since they focus on the same object(s), as seen in Figure 1(e). These repeated RoIs produce similar features and contribute little to the later modeling. Meanwhile, we find that some RoIs from fashion images are useless for text and image matching, for example, RoIs covering the body parts (head, hair, hands, etc.) of the models in fashion images, as seen in Figure 1(f). These RoIs are unrelated to the fashion products and cannot be connected with the descriptions. In contrast, most fashion texts describe fine-grained information about the products (e.g., "crew neck", "off-shoulder", "high collar"). Occasionally, descriptions contain abstract styles, e.g., "artsy" and "bohemian", as seen in Figure 1(d). The RoIs in fashion images can indicate the main fashion object(s), but fail to distinguish these fine-grained attributes or styles. Thus, fashion text and image matching is more difficult with such "object-level" RoIs and fine-grained descriptions.

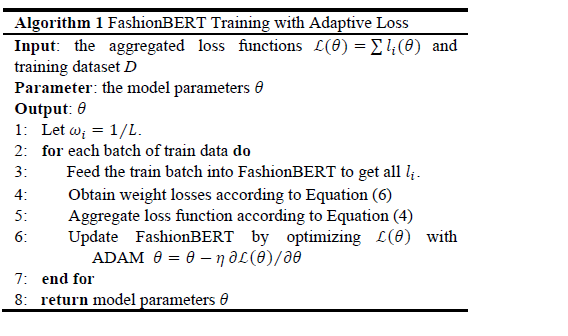

In this paper, we propose FashionBERT to solve the above problems. Inspired by the Selfie approach [38], we first introduce the patch method to extract image tokens. Each fashion image is split into small patches of equal pixel size, and we treat these patches as image tokens. The patches expose rawer pixel information and thus contain more detailed information than object-level RoIs. Besides, the split patches are non-repeated and naturally ordered, which makes them well suited as sequence inputs to the BERT model. The training procedure of FashionBERT is a standard multitask learning procedure (i.e., Masked Language Modeling, Masked Patch Modeling, and Text&Image Alignment, which are described in a later section). We propose an adaptive algorithm to balance the learning of each task. The adaptive algorithm treats the determination of the loss weight of each task as a new optimization problem and estimates the loss weights at each batch step.

We evaluate FashionBERT on two tasks: Text&Image alignment classification and cross-modal retrieval (including Image-to-Text and Text-to-Image retrieval). Experiments are conducted on the public fashion product dataset (Fashion-Gen). The results show that FashionBERT significantly outperforms the SOTA and other pioneering approaches. We also apply FashionBERT on our e-commerce website. The main contributions of this paper are summarized as follows:

1) We show the difficulties of text and image matching in the fashion domain and propose FashionBERT to address these issues.

2) We present the patch method to extract image tokens, and the adaptive algorithm to balance the multitask learning of FashionBERT. Both the patch method and the adaptive algorithm are task-agnostic and can be directly applied to other tasks.

3) We extensively evaluate FashionBERT on the public dataset. Experiments show the strong ability of FashionBERT in text and image matching in the fashion domain.

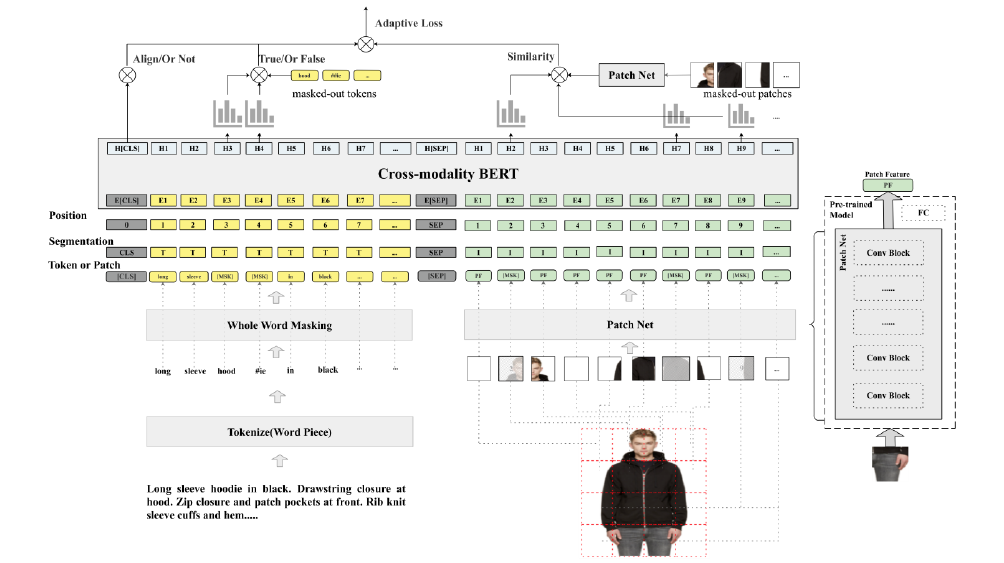

Figure 2: our FashionBERT framework for text and image matching. We cut each fashion image into patches and treat these patches as "image tokens". After the interaction of text tokens and image patches in BERT, three tasks with adaptive loss weights are proposed to train the entire FashionBERT model.

4) FashionBERT currently has been applied in practice. We present the concrete application of FashionBERT in cross-modal retrieval. Meanwhile, we analyze both matching performances and inference efficiencies in detail.

In this section, we will briefly revisit the BERT language model and then describe how we extract the image features and how FashionBERT jointly models the image and text data.

The BERT model introduced by [8] is an attention-based bidirectional language model. Taking tokens (i.e., word pieces) as inputs, BERT processes the token embeddings with a multi-layer Transformer encoder [39]. When pre-trained on a large language corpus, BERT has proven to be very effective for transfer learning in a variety of natural language processing tasks.

The original BERT model focuses on encoding of the single-modality text data. In the cross-modal scenario, the extended BERT model takes multi-modality data as input and allows them to interact within the Transformer blocks.

An overview of FashionBERT is illustrated in Figure 2. It is composed of four parts: text representation, image representation, matching backbone, and FashionBERT training with adaptive loss.

Text Representation: Similar to [8], the input text is first tokenized into a token sequence according to WordPieces [42]. The same BERT vocabulary is adopted in our FashionBERT model. We use the standard BERT pre-process method to process the input text. Finally, the sum of the word-piece embedding, position embedding and segmentation embedding is regarded as the text representation. The segmentation (i.e., "T" and "I" in Figure 2) is used to differentiate text and image inputs.

Image Representation: Unlike the RoI method, we cut each image into patches of equal pixel size, as illustrated in Figure 2. We regard each patch as an "image token". For each patch, the outputs of the patch network are regarded as the patch features. Any pre-trained image model (e.g., InceptionV3 [36] or ResNeXt-101 [43]) can be selected as the backbone of the patch network. These patches are naturally ordered, and their spatial positions are used in the position embedding. The sum of the patch features, the position embedding, and the segmentation embedding is regarded as the patch representation.
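The patch splitting described above can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: the grid size, the toy image shape, and the function name `split_into_patches` are hypothetical and not taken from the paper, and the patch network that would turn each patch into a feature vector is omitted.

```python
import numpy as np


def split_into_patches(image: np.ndarray, grid: int) -> np.ndarray:
    """Split an H x W x C image into grid * grid equal patches, row-major."""
    height, width, channels = image.shape
    if height % grid or width % grid:
        raise ValueError("image height and width must be divisible by grid")
    patch_h, patch_w = height // grid, width // grid
    # View the image as (grid, patch_h, grid, patch_w, C), then bring the two
    # grid axes together so each patch becomes one contiguous block.
    blocks = image.reshape(grid, patch_h, grid, patch_w, channels).swapaxes(1, 2)
    return blocks.reshape(grid * grid, patch_h, patch_w, channels)


# Toy 8 x 8 RGB "image" and a 4 x 4 grid (both values are assumptions).
image = np.arange(8 * 8 * 3).reshape(8, 8, 3)
patches = split_into_patches(image, grid=4)

# The row-major patch index can serve as the spatial position of each patch.
positions = np.arange(len(patches))
```

Because the patches tile the image without overlap and come out in a fixed spatial order, they are non-repeated and ordered, which is the property the text relies on when feeding them to BERT as a sequence.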

Matching Backbone: The concatenation of the text token sequence and the image patch sequence constitutes the FashionBERT input. Similar to BERT, the special token [CLS] is added in the first position, and the separator token [SEP] is added between the text token sequence and the image patch sequence.

The pre-trained standard BERT is adopted as the matching backbone network of FashionBERT. Information from text tokens and image patches thus interacts freely across multiple self-attention layers. FashionBERT outputs the final representation of each token or patch.
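As a rough illustration of how such an input sequence might be laid out, the sketch below assembles the text side and the image side with their segment labels ("T" and "I", as in Figure 2). The `FashionInput` container, the `<patch_i>` placeholders, the choice to label [SEP] as part of the text segment, and the restart of position indices for patches are all assumptions made for this example, not details confirmed by the text.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class FashionInput:
    items: List[str]         # word pieces followed by patch placeholders
    segment_ids: List[str]   # "T" for the text side, "I" for the image side
    position_ids: List[int]  # index of each item within its own modality


def build_input(text_tokens: List[str], num_patches: int) -> FashionInput:
    # [CLS] goes first; [SEP] separates the text tokens from the patches.
    text_side = ["[CLS]"] + text_tokens + ["[SEP]"]
    # Placeholders stand in for patch feature vectors in this sketch.
    image_side = [f"<patch_{i}>" for i in range(num_patches)]
    segment_ids = ["T"] * len(text_side) + ["I"] * len(image_side)
    # Assumption: patch positions restart at 0 and follow spatial order.
    position_ids = list(range(len(text_side))) + list(range(num_patches))
    return FashionInput(text_side + image_side, segment_ids, position_ids)


example = build_input(["crew", "neck"], num_patches=3)
```

In a full model, each entry would be mapped to the sum of its token (or patch) feature, position embedding, and segment embedding before entering the Transformer layers.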

FashionBERT Training with Adaptive Loss: We exploit three tasks to train FashionBERT. Please refer to the link for further details.
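To make the idea of per-batch loss weighting concrete, here is a minimal sketch of combining the three task losses with weights that are re-estimated at every batch. The weighting rule used here (each task weighted by its share of the total loss) is a placeholder heuristic chosen for illustration only; it is not the optimization-based solution from the paper, which this article does not reproduce. The task names and loss values are likewise hypothetical.

```python
from typing import Dict


def placeholder_weights(losses: Dict[str, float]) -> Dict[str, float]:
    """Stand-in rule (an assumption): weight each task by its loss share."""
    total = sum(losses.values())
    if total <= 0:
        # Fall back to uniform weights when there is nothing to rebalance.
        return {name: 1.0 / len(losses) for name in losses}
    return {name: value / total for name, value in losses.items()}


def weighted_loss(losses: Dict[str, float]) -> float:
    # Weights are recomputed from the current batch, so they change over time.
    weights = placeholder_weights(losses)
    return sum(weights[name] * losses[name] for name in losses)


# Hypothetical losses for one batch: masked language modeling, masked patch
# modeling, and text and image alignment.
batch_losses = {"mlm": 2.0, "mpm": 1.0, "alignment": 1.0}
weights = placeholder_weights(batch_losses)
total = weighted_loss(batch_losses)
```

Whatever rule is used, the key design point is that the weights sum to one and are recomputed at each step, so the emphasis can shift between tasks as training progresses.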

We illustrate the training procedure of FashionBERT in Algorithm.1 (below).

In this paper, we focus on text and image matching in cross-modal retrieval for the fashion domain. We propose FashionBERT to address the matching issues in this domain. FashionBERT splits images into patches, and the image patches and text tokens serve as the inputs of the BERT backbone. To trade off the learning of each task, we present the adaptive loss algorithm, which automatically determines the loss weights. Two tasks are used to evaluate FashionBERT, and extensive experiments are conducted on the Fashion-Gen dataset. The main conclusions are: 1) the patch method shows its advantages in matching fashion texts and images compared with the object-level RoI method; 2) through the adaptive loss, FashionBERT shifts its attention among different tasks during the training procedure.

Compared with matching in the general domain, there is still room for improvement in the fashion domain. In the future: 1) To better understand the semantics of fashion images, we will attempt to construct more fine-grained training tasks (for example, token-level and patch-level alignment) to force FashionBERT to learn more detailed information. 2) We will attempt to visualize the inner workings of FashionBERT matching. This would help us understand how FashionBERT works internally and make further improvements. 3) We are exploring model reduction and knowledge distillation approaches to further speed up online inference.

Please refer to the link for more details on our experimental settings and the main results of our experiments.

The authors would like to thank the Alibaba PAI team for providing the experimental environments and for their constructive comments. This work was partially supported by the China Postdoctoral Science Foundation (No. 2019M652038). Any opinions, findings and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect those of the sponsor.

[1] Peter Anderson, Xiaodong He, Chris Buehler, Damien Teney, Mark Johnson, Stephen Gould and Lei Zhang, 2018. Bottom-Up and Top-Down Attention for Image Captioning and Visual Question Answering. In Proceedings of IEEE Conference of Computer Vision and Pattern Recognition.

[2] Stanislaw Antol, Aishwarya Agrawal, Jiasen Lu, Margaret Mitchell, Dhruv Batra, C. Lawrence Zitnick and Devi Parikh, 2015. VQA: Visual Question Answering. In Proceedings of International Conference on Computer Vision.

[3] Stephen Boyd and Lieven Vandenberghe. 2004. Convex Optimization. Cambridge University Press, New York, NY USA.

[4] Huizhong Chen, Andrew Gallagher and Bernd Girod, 2012. Describing clothing by semantic attributes. In Proceedings of European Conference on Computer Vision, 609–623.

[5] Daoyuan Chen, Yaliang Li, Minghui Qiu, Zhen Wang, Bofang Li, Bolin Ding, Hongbo Deng, Jun Huang, Wei Lin and Jingren Zhou, 2020. AdaBERT: Task-Adaptive BERT Compression with Differentiable Neural Architecture Search. arXiv preprint arXiv:2001.04246.

[6] Yiming Cui, Wanxiang Che, Ting Liu, Bing Qin, Ziqing Yang, Shijin Wang and Guoping Hu, 2019. Pre-Training with Whole Word Masking for Chinese BERT. arXiv preprint arXiv:1906.08101.

[7] Jia Deng, Wei Dong, Richard Socher, Li-Jia Li, Kai Li and Li Fei-Fei, 2009. ImageNet: A large-scale hierarchical image database. In 2009 IEEE Conference on Computer Vision and Pattern Recognition. 248-255.

[8] Jacob Devlin, Ming-Wei Chang, Kenton Lee and Kristina Toutanova, 2018. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. arXiv preprint arXiv:1810.04805.

[9] Jeff Donahue, Yangqing Jia, Oriol Vinyals, Judy Hoffman, Ning Zhang, Eric Tzeng and Trevor Darrell, 2013. DeCAF: A Deep Convolutional Activation Feature for Generic Visual Recognition. arXiv:1310.1531.

[10] Fartash Faghri, David J. Fleet, Jamie Ryan Kiros and Sanja Fidler, 2017. VSE++: Improving Visual-Semantic Embeddings with Hard Negatives. arXiv preprint arXiv:1707.05612.

[11] Andrea Frome, Greg S Corrado, Jon Shlens, Samy Bengio, Jeff Dean, Marc'Aurelio Ranzato and Tomas Mikolov, 2013. DeViSE: A Deep Visual-Semantic Embedding Model. In Proceedings of Advances in Neural Information Processing Systems.

[12] Ross Girshick, Jeff Donahue, Trevor Darrell and Jitendra Malik, 2014. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of IEEE Conference of Computer Vision and Pattern Recognition.

[13] Ross Girshick, 2015. Fast R-CNN. In Proceedings of IEEE International Conference on Computer Vision.

[14] David R. Hardoon, Sandor Szedmak and John Shawe-Taylor, 2004. Canonical Correlation Analysis: An overview with Application to Learning Methods. Neural Computation. Vol.16(12), 2639-2664.

[15] Herve Jégou, Matthijs Douze and Cordelia Schmid, 2011. Product Quantization for Nearest Neighbor Search. In IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 33(1), 117-128.

[16] Xiaoqi Jiao, Yichun Yin, Lifeng Shang, Xin Jiang, Xiao Chen, Linlin Li, Fang Wang and Qun Liu, 2019. TinyBERT: Distilling BERT for Natural Language Understanding. arXiv preprint arXiv:1909.10351.

[17] Zhenzhong Lan, Mingda Chen, Sebastian Goodman, Kevin Gimpel, Piyush Sharma and Radu Soricut, 2019. ALBERT: A Lite BERT for Self-supervised Learning of Language Representations. arXiv preprint arXiv:1909.11942.

[18] Kuang-Huei Lee, Xi Chen, Gang Hua, Houdong Hu and Xiaodong He, 2018. Stacked Cross Attention for Image-Text Matching. In Proceedings of European Conference on Computer Vision.

[19] Gen Li, Nan Duan, Yuejian Fang, Ming Gong, Daxin Jiang and Ming Zhou, 2019. Unicoder-VL: A Universal Encoder for Vision and Language by Cross-modal Pre-training. In Proceedings of Association for the Advancement of Artificial Intelligence.

[20] Si Liu, Zheng Song, Guangcan Liu, Changsheng Xu, Hanqing Lu and Shuicheng Yan, 2012. Street-to-shop: Cross-scenario clothing retrieval via parts alignment and auxiliary set. In Proceedings of IEEE Conference of Computer Vision and Pattern Recognition. 3330–3337.

[21] Ziwei Liu, Ping Luo, Shi Qiu, Xiaogang Wang and Xiaoou Tang, 2016. Deepfashion: Powering robust clothes recognition and retrieval with rich annotations. In Proceedings of IEEE Conference of Computer Vision and Pattern Recognition. 1096–1104.

[22] Jonathan Long, Evan Shelhamer and Trevor Darrell, 2015. Fully Convolutional Networks for Semantic Segmentation. In Proceedings of IEEE Conference of Computer Vision and Pattern Recognition.

[23] Jiasen Lu, Dhruv Batra, Devi Parikh and Stefan Lee, 2019. Vilbert: Pretraining task-agnostic visiolinguistic representations for vision-and-language tasks. arXiv preprint arXiv:1908.02265.

[24] M Hadi Kiapour, Kota Yamaguchi, Alexander C Berg, and Tamara L Berg, 2014. Hipster wars: Discovering elements of fashion styles. In Proceedings of European Conference on Computer Vision. 472–488.

[25] Tae-Kyun Kim, Josef Kittler and Roberto Cipolla, 2007. Discriminative Learning and Recognition of Image Set Classes Using Canonical Correlations. IEEE Transactions on Pattern Analysis and Machine Intelligence, 29(6):1005-1018.

[26] Nikita Kitaev, Łukasz Kaiser, Anselm Levskaya, 2020. Reformer: The Efficient Transformer. In The International Conference on Learning Representations.

[27] Ranjay Krishna, Yuke Zhu, Oliver Groth, Justin Johnson, Kenji Hata, Joshua Kravitz, Stephanie Chen, Yannis Kalantidis, Li-Jia Li, David A. Shamma, Michael S. Bernstein and Fei-Fei Li, 2016. Visual Genome: Connecting Language and Vision Using Crowdsourced Dense Image Annotations. arXiv preprint arXiv:1602.07332.

[28] Alex Krizhevsky, Ilya Sutskever and Geoffrey E. Hinton, 2012. ImageNet Classification with Deep Convolutional Neural Networks. In Proceedings of Annual Conference on Neural Information Processing Systems.

[29] Ishan Misra, C Lawrence Zitnick and Martial Hebert, 2016. Shuffle and learn: unsupervised learning using temporal order verification. In Proceedings of European Conference on Computer Vision, 527–544.

[30] Jeffrey Pennington, Richard Socher and Christopher Manning, 2014. Glove: Global vectors for word representation. In Proceedings of conference on Empirical Methods in Natural Language Processing.

[31] Alec Radford, Karthik Narasimhan, Tim Salimans and Ilya Sutskever, 2018. Improving language understanding by generative pre-training.

[32] Jie Shao, Leiquan Wang, Zhicheng Zhao, Fei Su and Anni Cai, 2016. Deep canonical correlation analysis with progressive and hypergraph learning for cross-modal retrieval. Neurocomputing, 214:618-628.

[33] Karen Simonyan and Andrew Zisserman, 2014. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv:1409.1556.

[34] Weijie Su, Xizhou Zhu, Yue Cao, Bin Li, Lewei Lu, Furu Wei and Jifeng Dai, 2019. VL-BERT: Pre-training of Generic Visual-Linguistic Representations. arXiv preprint arXiv:1908.08530.

[35] Chen Sun, Austin Myers, Carl Vondrick, Kevin Murphy and Cordelia Schmid, 2019. Videobert: A joint model for video and language representation learning. arXiv preprint arXiv:1904.01766.

[36] Christian Szegedy, Vincent Vanhoucke, Sergey Ioffe, Jonathon Shlens and Zbigniew Wojna, 2016. Rethinking the Inception Architecture for Computer Vision. arXiv preprint arXiv:1512.00567.

[37] Lorenzo Torresani, Martin Szummer and Andrew Fitzgibbon, 2010. Efficient object category recognition using classemes. ECCV, 776–789.

[38] Trieu H. Trinh, Minh-Thang Luong and Quoc V. Le, 2019. Selfie: Self-supervised Pretraining for Image Embedding. arXiv preprint arXiv:1906.02940.

[39] Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N. Gomez, Lukasz Kaiser and Illia Polosukhin, 2017. Attention Is All You Need. arXiv preprint arXiv:1706.03762.

[40] Nam Vo, Lu Jiang, Chen Sun, Kevin Murphy, Li-Jia Li, Li Fei-Fei and James Hays, 2019. Composing Text and Image for Image Retrieval – An Empirical Odyssey. In Proceedings of IEEE Conference of Computer Vision and Pattern Recognition.

[41] Yaxiong Wang, Hao Yang, Xueming Qian, Lin Ma, Jing Lu, Biao Li and Xin Fan, 2019. Position Focused Attention Network for Image-Text Matching. In Proceedings of International Joint Conference on Artificial Intelligence.

[42] Yonghui Wu, Mike Schuster, Zhifeng Chen, Quoc V. Le, Mohammad Norouzi, et al., 2016. Google's neural machine translation system: Bridging the gap between human and machine translation. arXiv preprint arXiv:1609.08144.

[43] Saining Xie, Ross Girshick, Piotr Dollár, Zhuowen Tu and Kaiming He, 2016. Aggregated Residual Transformations for Deep Neural Networks. arXiv preprint arXiv:1611.05431.

[44] Fei Yan and Krystian Mikolajczyk, 2015. Deep Correlation for Matching Images and Text. In Proceedings of IEEE Conference of Computer Vision and Pattern Recognition.

[45] Artem Babenko and Victor Lempitsky, 2016. Efficient Indexing of Billion-Scale Datasets of Deep Descriptors. In IEEE Conference on Computer Vision and Pattern Recognition. 2055-2063.

[46] Zhilin Yang, Zihang Dai, Yiming Yang, Jaime Carbonell, and Quoc V. Le, 2019. Xlnet: Generalized autoregressive pretraining for language understanding. arXiv preprint arXiv:1906.08237.

[47] https://en.wikipedia.org/wiki/Fashion

[48] https://github.com/peteanderson80/bottom-up-attention

[49] http://cocodataset.org

[50] https://fashion-gen.com/
