By Jianjie and Yunyao

Elasticsearch (ES) is a search and analytics engine based on distributed storage. It is widely used in many scenarios today, such as searches on Wikipedia and GitHub. While ES involves numerous intricate details, this article will concentrate on its core features: distributed storage and analytical retrieval. Centering around these two core features, this article will introduce the related concepts, principles, and practical cases, hoping to help readers quickly understand the core features and application scenarios of ES.

A node is a single Elasticsearch instance, which can run on a physical server or a virtual machine.

A cluster is a collection of nodes.

A cluster is a collection of one or more Elasticsearch nodes running on different hosts (physical or virtual machines). Within a cluster, nodes collaborate to store and manage data.

Shards are segments of an index.

Because a single ES node has limited resources (such as memory and disk I/O throughput), storing and managing an entire index on one node would prevent fast responses to client requests. ES therefore splits an index into smaller shards, which are distributed across nodes and processed in parallel.

Replicas are copies of shards.

Each shard can have zero or more replicas, and both primary shards and replicas can serve queries. Replicas therefore increase the availability and read concurrency of the whole ES system: a replica can serve the same read requests as its primary shard, and it is promoted to primary if the node holding the primary fails. However, there is no silver bullet in computer systems. The main cost of replicas is the added data synchronization overhead: every time data is updated, the change on the primary shard must be replicated to all of its replicas.
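The sketch below illustrates how documents are spread over primary shards and how replicas multiply the number of shard copies. It is a simplification under stated assumptions. It uses `zlib.crc32` as a stand-in hash and the plain rule `hash(routing) % number_of_primary_shards`. Elasticsearch actually uses a murmur3 hash of the `_routing` value (the document `_id` by default) together with an internal `routing_num_shards` factor, so the shard numbers printed here will not match a real cluster. The settings dict uses the real `number_of_shards` and `number_of_replicas` index settings with illustrative values.

```python
import zlib

# Index settings in the shape accepted by the create-index API (values are illustrative).
index_settings = {"settings": {"number_of_shards": 3, "number_of_replicas": 1}}


def pick_shard(routing: str, num_primary_shards: int) -> int:
    """Simplified routing: hash the routing value (the _id by default) modulo the
    number of primary shards. crc32 is a stand-in for the murmur3 hash ES uses."""
    return zlib.crc32(routing.encode("utf-8")) % num_primary_shards


def total_shard_copies(num_primary_shards: int, num_replicas: int) -> int:
    """Each primary shard has num_replicas additional replica copies."""
    return num_primary_shards * (1 + num_replicas)


if __name__ == "__main__":
    s = index_settings["settings"]
    for doc_id in ["doc-1", "doc-2", "doc-3", "doc-4"]:
        print(doc_id, "-> shard", pick_shard(doc_id, s["number_of_shards"]))
    # 3 primaries with 1 replica each -> 6 shard copies spread across the nodes
    print("shard copies:", total_shard_copies(s["number_of_shards"], s["number_of_replicas"]))
```

Because the target shard is derived from the number of primary shards, that number cannot simply be changed on an existing index (a reindex or the split/shrink APIs are needed). By contrast, `number_of_replicas` can be updated at any time.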

| Elasticsearch | Relational Database |
|---|---|
| index | Database |
| type | Table |
| document | Row |
| field | Column |
| mapping | Schema |

An index consists of one or more shards. The index is the top-level container for data in Elasticsearch and corresponds to a database in a relational database.

In earlier versions of Elasticsearch, an index could contain multiple types, each storing a different kind of document. A type corresponds to a table in a relational database. However, types were deprecated in Elasticsearch 7.0 and removed in 8.0. One reason is that fields from different types within the same index are stored together, so documents of one type end up with a large number of empty values for the fields of other types, which wastes resources.

Each piece of data within an index is called a document, which is a JSON-formatted data object. The document corresponds to the data row in the relational database. This is similar to the non-relational database MongoDB. MongoDB is a document-oriented database, where each piece of data is a BSON document (Binary JSON). Compared with data rows in relational databases, documents in non-relational databases offer greater flexibility, allowing fields to be easily added or removed and not requiring all documents to have the same fields. At the same time, documents retain some structured storage characteristics, encapsulating the data in a structured manner, unlike K-V non-relational databases which completely abandon structured processing for data.

The inverted index is the key data structure Elasticsearch uses for efficient document retrieval. It maps each term in the documents to the documents that contain it, which lets Elasticsearch efficiently handle unstructured data such as text. By contrast, in a traditional relational database, a fuzzy text match such as LIKE '%keyword%' generally cannot use a B+ tree index and has to scan the entire table.

A forward index maps documents to terms (given a document, find its terms), whereas an inverted index maps terms to documents (given a term, find the documents). The inverted index consists of two main parts: the vocabulary (term dictionary), which stores all unique terms, and the inverted lists (postings lists), which record the documents in which each term occurs.
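To make the two directions concrete, here is a minimal Python sketch that builds both a forward index and an inverted index over three hypothetical documents. The tokenizer is a crude assumption (lowercase, split on non-alphanumeric characters). The sorted keys of the inverted index play the role of the vocabulary, and each value is a postings list.

```python
import re
from collections import defaultdict

# Hypothetical sample documents keyed by document ID.
docs = {
    1: "Elasticsearch is a search engine",
    2: "MySQL is a relational database",
    3: "Elasticsearch builds an inverted index for search",
}


def tokenize(text: str) -> list[str]:
    # Very rough tokenizer: lowercase and split on non-alphanumeric characters.
    return [t for t in re.split(r"[^0-9a-z]+", text.lower()) if t]


def build_forward_index(docs: dict[int, str]) -> dict[int, list[str]]:
    # Forward index: document -> the terms it contains.
    return {doc_id: tokenize(text) for doc_id, text in docs.items()}


def build_inverted_index(docs: dict[int, str]) -> dict[str, list[int]]:
    # Inverted index: term -> sorted postings list of document IDs.
    postings: dict[str, set[int]] = defaultdict(set)
    for doc_id, text in docs.items():
        for term in tokenize(text):
            postings[term].add(doc_id)
    # The sorted keys act as the vocabulary (term dictionary).
    return {term: sorted(ids) for term, ids in sorted(postings.items())}


if __name__ == "__main__":
    inverted = build_inverted_index(docs)
    print(inverted["elasticsearch"])  # [1, 3]
    print(inverted["search"])         # [1, 3]
```

Answering "which documents contain *search*" is then a single dictionary lookup instead of a scan over every document's term list.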

An analyzer is a component in Elasticsearch used for text preprocessing. Its main function is to convert text into terms that can be stored in the inverted index. Analysis usually consists of the following steps:

• Tokenization: Split text into terms. For English, text is split on whitespace and punctuation.

• Normalization: Normalize terms, usually by converting them to lowercase, removing stop words, and so on.

• Filtering: Filter out special characters, such as removing specific characters or replacing digits.
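In Elasticsearch's own terminology, an analyzer is built from character filters (applied to the raw text before tokenization), one tokenizer, and token filters such as `lowercase` and `stop`. The toy pipeline below follows that order in plain Python. The HTML-stripping regex, the word regex, and the small stop-word set are simplifying assumptions and do not reproduce any built-in ES analyzer exactly.

```python
import re

# A tiny, illustrative stop-word list; real stop lists are larger.
STOP_WORDS = {"a", "an", "and", "is", "the", "of", "to"}


def char_filter(text: str) -> str:
    # Character filter: strip HTML-like tags from the raw text before tokenizing.
    return re.sub(r"<[^>]+>", " ", text)


def tokenizer(text: str) -> list[str]:
    # Tokenizer: split English text on whitespace and punctuation.
    return re.findall(r"\w+", text)


def token_filters(tokens: list[str]) -> list[str]:
    # Token filters: lowercase every token, then drop stop words.
    lowered = [t.lower() for t in tokens]
    return [t for t in lowered if t not in STOP_WORDS]


def analyze(text: str) -> list[str]:
    return token_filters(tokenizer(char_filter(text)))


if __name__ == "__main__":
    print(analyze("<p>The Quick Brown Fox is an Animal</p>"))
    # ['quick', 'brown', 'fox', 'animal']
```

To see what a real analyzer produces, the `_analyze` API accepts an analyzer name and a text, for example `POST _analyze` with the body `{"analyzer": "standard", "text": "..."}`.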

In Alibaba Cloud DTS data synchronization tools, you can choose from a range of built-in Elasticsearch analyzers. However, the built-in analyzers support Chinese poorly: they take a brute-force approach and split Chinese text into individual characters. For proper Chinese word segmentation, a third-party tokenizer such as jieba can be used.

Building on these core concepts, Elasticsearch combines distributed storage with analytical retrieval to provide various types of queries that cater to diverse search requirements.

Below are the main query types in ES:

Term-level queries: these basic queries are the foundation of ES's robust full-text search capability.

• Term Query (Exact)

o The most basic ES query. It treats the input string as a single complete term, without analyzing it, and looks it up directly in the inverted index.

• Fuzzy Query (Approximate)

o A term query with edit distance. Given a fuzziness level (the maximum edit distance), ES collects the terms in the index that lie within that edit distance of the input term and performs a term query for each of them.
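The following sketch shows the expand-then-term-query idea behind the fuzzy query. It is a simplification. It uses plain Levenshtein distance and scans the whole vocabulary, whereas Lucene intersects a Levenshtein automaton with the term dictionary. ES also counts a transposition of two adjacent characters as one edit by default (`transpositions: true`) and caps the number of expanded terms with `max_expansions` (default 50). The small index is hypothetical.

```python
def levenshtein(a: str, b: str) -> int:
    """Edit distance counting single-character insertions, deletions and substitutions."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # delete ca
                            curr[j - 1] + 1,      # insert cb
                            prev[j - 1] + cost))  # substitute (or keep) ca
        prev = curr
    return prev[-1]


def fuzzy_expand(term: str, vocabulary: list[str], fuzziness: int) -> list[str]:
    """Indexed terms within `fuzziness` edits of the input (naive scan of the vocabulary)."""
    return [t for t in vocabulary if levenshtein(term, t) <= fuzziness]


def fuzzy_query(term: str, fuzziness: int, inverted_index: dict[str, list[int]]) -> list[int]:
    """Expand the term, run one term lookup per candidate, and union the postings."""
    hits: set[int] = set()
    for candidate in fuzzy_expand(term, sorted(inverted_index), fuzziness):
        hits.update(inverted_index[candidate])
    return sorted(hits)


if __name__ == "__main__":
    index = {"search": [1, 3], "searches": [4], "research": [5], "match": [2]}
    print(fuzzy_expand("serch", sorted(index), 1))  # ['search']
    print(fuzzy_query("serch", 1, index))           # [1, 3]
```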

Full-text queries: match and match_phrase are higher-level queries that analyze the input and are executed as a combination of term-level queries.

• match

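o Match is adaptive: it analyzes the input with the analyzer of the target field. On a keyword field, the input stays a single term and match behaves like a term query; on a text field, the input is split into multiple terms.

o Main steps of a match query: (1) analyze the input into terms; (2) run a term query for each term; (3) combine the per-term hits (OR by default, configurable through the operator parameter) and score the matching documents.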

• match_phrase

o Building on match, match_phrase additionally requires the terms to appear in the document in the same order as in the input and next to each other (the slop parameter relaxes this). Checking term positions makes it somewhat slower than match.
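The difference between match and match_phrase comes down to term positions. This sketch stores positions in a small positional inverted index (hypothetical documents, simple word-level analysis). It implements match with the default OR operator and match_phrase with zero slop, and it ignores scoring, the `operator`/`minimum_should_match` options, and `slop`.

```python
import re
from collections import defaultdict

# Hypothetical documents.
DOCS = {1: "quick brown fox", 2: "brown quick fox", 3: "the quick dog"}


def analyze(text: str) -> list[str]:
    # Minimal analyzer: lowercase and split into word tokens.
    return re.findall(r"\w+", text.lower())


def build_positional_index(docs: dict[int, str]):
    # term -> {doc_id: [positions of the term within that document]}
    index = defaultdict(lambda: defaultdict(list))
    for doc_id, text in docs.items():
        for pos, term in enumerate(analyze(text)):
            index[term][doc_id].append(pos)
    return index


def match(query: str, index) -> list[int]:
    # match with operator OR: any analyzed query term is enough for a hit.
    hits = set()
    for term in analyze(query):
        hits.update(index.get(term, {}))
    return sorted(hits)


def match_phrase(query: str, index) -> list[int]:
    # slop 0: all terms present, in the same order, at consecutive positions.
    terms = analyze(query)
    if not terms or any(t not in index for t in terms):
        return []
    candidates = set(index[terms[0]])
    for t in terms[1:]:
        candidates &= set(index[t])
    result = []
    for doc_id in sorted(candidates):
        for start in index[terms[0]][doc_id]:
            if all(start + offset in index[t][doc_id] for offset, t in enumerate(terms)):
                result.append(doc_id)
                break
    return result


if __name__ == "__main__":
    idx = build_positional_index(DOCS)
    print(match("brown fox", idx))         # [1, 2]
    print(match_phrase("brown fox", idx))  # [1]
```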

Bool query: it implements complex combined query logic and supports four types of clauses:

o should: OR

o must: AND

o must_not: NOT

o filter: Acts as a pre-filter in queries. It is similar to must but does not participate in relevance score calculation.

Logical completeness: AND, OR, and NOT operations, combined in sufficient number, can express any Boolean condition.
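To illustrate how the four clause types combine, here is a simplified evaluator for a single bool level, with leaf queries modeled as Python predicates. It follows the documented default for `minimum_should_match`: should clauses are required only when there is no must or filter clause. It also shows that filter restricts matches without adding to the score. The fixed 1.0 per matching clause and the document fields are hypothetical stand-ins, not Lucene's real scoring.

```python
from typing import Callable, Sequence

Clause = Callable[[dict], bool]  # a leaf query, modeled as a predicate on a document


def bool_query(doc: dict,
               must: Sequence[Clause] = (),
               should: Sequence[Clause] = (),
               must_not: Sequence[Clause] = (),
               filter_: Sequence[Clause] = ()) -> tuple[bool, float]:
    """Simplified bool semantics; returns (matched, score) for one document.

    must and filter_: every clause must match (AND), but only must clauses score.
    must_not: no clause may match (NOT), and it never scores.
    should: OR; at least one is required only when there is no must/filter_ clause,
    otherwise should clauses are optional and only raise the score.
    """
    if not all(c(doc) for c in must) or not all(c(doc) for c in filter_):
        return False, 0.0
    if any(c(doc) for c in must_not):
        return False, 0.0
    should_hits = sum(1 for c in should if c(doc))
    if should and not must and not filter_ and should_hits == 0:
        return False, 0.0
    return True, float(len(must) + should_hits)


# Hypothetical documents.
DOCS = [
    {"id": 1, "status": "online", "title": "search service", "score": 90},
    {"id": 2, "status": "offline", "title": "search engine", "score": 70},
    {"id": 3, "status": "online", "title": "storage service", "score": 60},
]

if __name__ == "__main__":
    for d in DOCS:
        print(d["id"], bool_query(
            d,
            filter_=[lambda x: x["status"] == "online"],
            should=[lambda x: "search" in x["title"]],
            must_not=[lambda x: x["score"] < 50],
        ))
    # 1 (True, 1.0)   online and matches should, so it is scored
    # 2 (False, 0.0)  excluded by the filter
    # 3 (True, 0.0)   passes the filter; should is optional here, so the score stays 0
```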

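Relevance scoring: using the inverted index, ES considers the following metrics for each document that matches the query terms:

o TF-IDF: term frequency (how often the term occurs in the document) weighted by inverse document frequency (how rare the term is across all documents)

o Field length: a match in a shorter field counts for more

Combining the two metrics yields the relevance score (_score) of each document. Since Elasticsearch 5.0, the default scoring algorithm is BM25, which folds term frequency, inverse document frequency, and field-length normalization into a single formula.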
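The sketch below shows how BM25 turns these metrics into a number. It uses the textbook BM25 term score with ES's default parameters `k1 = 1.2` and `b = 0.75`, and Lucene's form of the IDF. Lucene's implementation differs in constant factors and encodes field length lossily, so absolute values will not match `_score`. The trends are the same, though: rarer terms weigh more, repeated terms saturate, and shorter fields score higher.

```python
import math


def bm25_idf(num_docs: int, doc_freq: int) -> float:
    # Lucene-style BM25 IDF: the fewer documents contain the term, the larger the weight.
    return math.log(1 + (num_docs - doc_freq + 0.5) / (doc_freq + 0.5))


def bm25_term_score(tf: int, doc_len: int, avg_doc_len: float,
                    num_docs: int, doc_freq: int,
                    k1: float = 1.2, b: float = 0.75) -> float:
    # k1 limits how much repeated occurrences help (term-frequency saturation);
    # b controls how strongly fields longer than average are penalized.
    norm = k1 * (1 - b + b * doc_len / avg_doc_len)
    return bm25_idf(num_docs, doc_freq) * tf * (k1 + 1) / (tf + norm)


if __name__ == "__main__":
    # Same term (in 2 of 10 docs), one occurrence, short vs. long field.
    print(bm25_term_score(tf=1, doc_len=5, avg_doc_len=10, num_docs=10, doc_freq=2))
    print(bm25_term_score(tf=1, doc_len=20, avg_doc_len=10, num_docs=10, doc_freq=2))
```

A query with several terms sums the per-term scores of each document.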

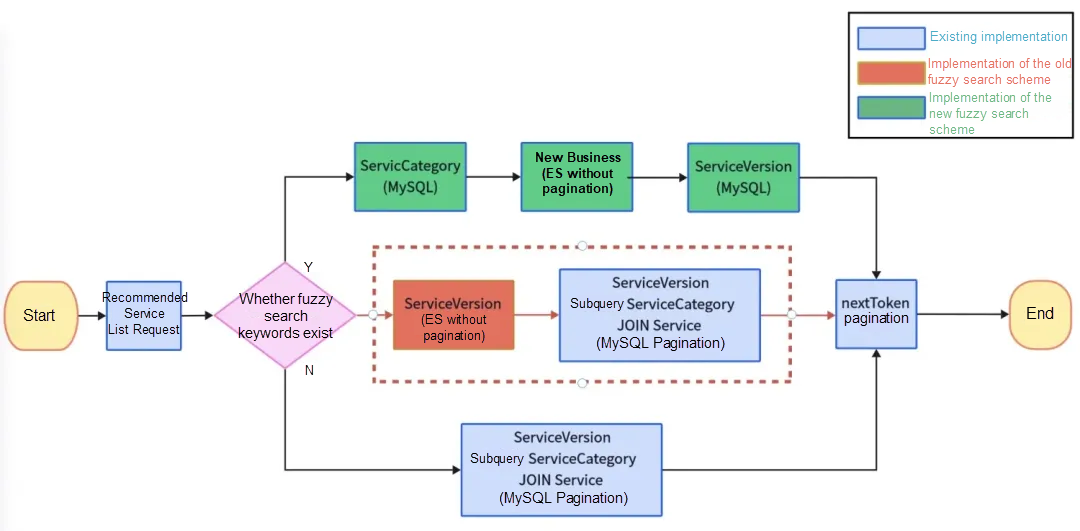

In server-side development, data volumes are often too large to return with a single request and query, so paginating data is a common engineering practice. Because ES is a convenient tool for querying unstructured fields, it is often the component that performs pagination in search API implementations.

In ES, the recommended mechanism for deep pagination is sort + search_after. Each hit in a response carries the values of its sort fields, and the sort values of the last hit mark the end position of the current page. The next request can therefore start from this cursor to fetch the following page. This is effectively an official nextToken pagination implementation: ES builds the cursor for you, and you only need to specify the sort fields in the query:

```
GET /service_version_index/service_version_type/_search
{
  "size": 100,
  "sort": [
    {"gmt_modified": "desc"},
    {"score": "desc"},
    {"id": "desc"}
  ],
  ...
}
```

With sort + search_after, each hit in the response carries the values of its sort fields, and the values of the last hit serve as the cursor for the page:

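```
{
  "sort" : [
    1614561419000,
    "6FxZJXgBE6QbUWetnarH"
  ]
}
```

For the next query, pass this cursor in search_after to locate the end of the previous page and start querying the next page. Note that search_after must contain exactly one value per sort field, in the same order as sort, so with the three sort fields above the cursor would carry three values.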

```
GET /service_version_index/service_version_type/_search
{
  "size": 100,
  "sort": [
    {"gmt_modified": "desc"},
    {"score": "desc"},
    {"id": "desc"}
  ],
  "query": {
    ...
    ...
  },
  "search_after": [
    1614561419000,
    "6FxZJXgBE6QbUWetnarH"
  ]
}
```

We can also manually construct query conditions to implement nextToken pagination. Even without knowing the specifics of a given nextToken scheme or of ES query usage, we can conclude that any nextToken pagination logic can be expressed with ES query conditions, because the ES bool query is logically complete.

Specifically, simple if-else logic can be reproduced with basic ES bool clauses:

`if A then B else C  =>  (must A, must B) should (must_not A, must C)`

Similarly, any complex query condition can be implemented.
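The if-else translation can be checked mechanically. This sketch evaluates nested bool queries over documents made of three boolean flags. The leaves `"A"`, `"B"`, and `"C"` are hypothetical placeholders for real leaf queries (for example, term or range queries), and only must, should, and must_not are modeled. It asserts that the bool form agrees with Python's `B if A else C` on all eight combinations.

```python
from itertools import product


def evaluate(query, doc: dict) -> bool:
    """Evaluate a nested bool query over a doc of boolean flags.

    Leaves are strings naming a flag; bool nodes are {"bool": {...}} dicts.
    Simplified semantics: should needs at least one hit only when must is empty.
    """
    if isinstance(query, str):
        return doc[query]
    b = query["bool"]
    must, should, must_not = b.get("must", []), b.get("should", []), b.get("must_not", [])
    if not all(evaluate(q, doc) for q in must):
        return False
    if any(evaluate(q, doc) for q in must_not):
        return False
    if should and not must:
        return any(evaluate(q, doc) for q in should)
    return True


# if A then B else C  =>  (must A, must B) should (must_not A, must C)
IF_THEN_ELSE = {"bool": {"should": [
    {"bool": {"must": ["A", "B"]}},
    {"bool": {"must_not": ["A"], "must": ["C"]}},
]}}

if __name__ == "__main__":
    for a, b, c in product([False, True], repeat=3):
        doc = {"A": a, "B": b, "C": c}
        assert evaluate(IF_THEN_ELSE, doc) == (b if a else c)
    print("bool translation matches if-else for all 8 cases")
```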

The benefit of this approach is that if your project paginates across multiple databases, the backend pagination logic can be shared: each database only needs to implement the same nextToken conditions.

In the preceding project, an API service uses two query paths: a pure MySQL query path, and an ES pagination + MySQL field-supplementation path. We manually replicated the MySQL nextToken pagination conditions in ES, so the backend logic that wraps nextToken pagination requests can be reused after either query. If MySQL used custom nextToken pagination while ES used the officially recommended sort + search_after pagination, reusability would be lower, and two separate pagination implementations would be required.
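As a sketch of replicating one nextToken condition in both stores, the code below assumes a hypothetical `service_version` table and index sorted by `gmt_modified DESC, id DESC`, with a numeric, unique `id` and an epoch-millisecond `gmt_modified`. The cursor is the `(gmt_modified, id)` pair of the last row on the page. The same predicate, `gmt_modified < ts` OR (`gmt_modified = ts` AND `id < last_id`), is rendered as a parameterized MySQL `WHERE` clause and as an ES bool query, and an in-memory pager checks that it visits every row exactly once.

```python
# Hypothetical rows: numeric, unique "id" and epoch-millis "gmt_modified".
SAMPLE_ROWS = [
    {"id": 1, "gmt_modified": 100},
    {"id": 2, "gmt_modified": 200},
    {"id": 3, "gmt_modified": 200},
    {"id": 4, "gmt_modified": 300},
    {"id": 5, "gmt_modified": 100},
]


def mysql_next_page(cursor: tuple[int, int], page_size: int):
    """Keyset condition for ORDER BY gmt_modified DESC, id DESC (DB-API %s placeholders)."""
    last_ts, last_id = cursor
    sql = ("SELECT * FROM service_version "
           "WHERE gmt_modified < %s OR (gmt_modified = %s AND id < %s) "
           "ORDER BY gmt_modified DESC, id DESC LIMIT %s")
    return sql, (last_ts, last_ts, last_id, page_size)


def es_next_page(cursor: tuple[int, int], page_size: int) -> dict:
    """The same condition as an ES request body: an OR (should) of two branches."""
    last_ts, last_id = cursor
    return {
        "size": page_size,
        "sort": [{"gmt_modified": "desc"}, {"id": "desc"}],
        "query": {"bool": {
            "should": [
                {"range": {"gmt_modified": {"lt": last_ts}}},
                {"bool": {"must": [
                    {"term": {"gmt_modified": last_ts}},
                    {"range": {"id": {"lt": last_id}}},
                ]}},
            ],
            "minimum_should_match": 1,
        }},
    }


def after_cursor(row: dict, cursor: tuple[int, int]) -> bool:
    """The shared nextToken predicate, evaluated in memory to check the paging logic."""
    last_ts, last_id = cursor
    return row["gmt_modified"] < last_ts or (
        row["gmt_modified"] == last_ts and row["id"] < last_id)


def paginate(rows: list[dict], page_size: int) -> list[list[int]]:
    ordered = sorted(rows, key=lambda r: (r["gmt_modified"], r["id"]), reverse=True)
    cursor, pages = None, []
    while True:
        page = [r for r in ordered if cursor is None or after_cursor(r, cursor)][:page_size]
        if not page:
            return pages
        pages.append([r["id"] for r in page])
        cursor = (page[-1]["gmt_modified"], page[-1]["id"])  # nextToken for the next call


if __name__ == "__main__":
    print(paginate(SAMPLE_ROWS, 2))         # [[4, 3], [2, 5], [1]]
    print(mysql_next_page((200, 3), 2)[1])  # (200, 200, 3, 2)
```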

Similar to non-relational document databases, ES stores data based on documents without a fixed table structure. Relational databases organize data in the two-dimensional table and excel in managing relationships between data tables. In contrast, ES organizes data in a flat structure using documents, excelling in text retrieval for flattened documents rather than relationship management.

In ES, the distributed storage and non-relational data model make operations such as relational JOIN queries inconvenient. ES does provide parent-child documents, a built-in join mechanism similar to MySQL's JOIN, but the feature has functional and performance limitations: parent and child documents must reside in the same shard, and maintaining the relationship adds overhead. The official ES guidance generally advises against relying on it.

In ES, to implement join queries, the best practice is generally to create wide tables or adopt a server-side JOIN as a compromise solution.

Server-side JOIN: the data is stored in two ES indexes. During a query, the server-side business code issues two queries and uses the results of the first query as the conditions for the second.

Benefits: It is easy to implement and provides good user experience with small data volumes.

Drawbacks: With large data volumes, two queries can incur extra overhead because each query requires multiple operations such as establishing a connection and sending requests.

Extension: This solution also works for join queries across different databases. For example, ES can handle the text-search fields and return a list of IDs, which are then passed to MySQL to query and fill in the remaining fields.
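A minimal sketch of the server-side JOIN across ES and MySQL, under heavy assumptions: a Python list stands in for the ES index (a real implementation would send a match query and read the hit IDs), and an in-memory SQLite database stands in for MySQL. The table, fields, and rows are hypothetical. Step 1 returns IDs in relevance order. Step 2 fetches the remaining fields with one `IN` query and restores the order, since `IN` does not guarantee it.

```python
import sqlite3

# Stand-in for an ES index holding only the ID and the searchable text (hypothetical data).
ES_DOCS = [
    {"id": 1, "title": "elasticsearch search service"},
    {"id": 2, "title": "mysql storage service"},
    {"id": 3, "title": "full text search gateway"},
]


def es_search_ids(keyword: str) -> list[int]:
    # Step 1 (stand-in for an ES match query): return matching IDs in result order.
    return [d["id"] for d in ES_DOCS if keyword in d["title"].split()]


def fetch_rows(conn: sqlite3.Connection, ids: list[int]) -> list[tuple]:
    # Step 2: fetch the remaining fields from the relational DB with one IN query.
    if not ids:
        return []
    placeholders = ",".join("?" for _ in ids)
    cur = conn.execute(
        f"SELECT id, owner, version FROM service WHERE id IN ({placeholders})", ids)
    by_id = {row[0]: row for row in cur.fetchall()}
    # IN does not preserve order, so restore the order returned by the search step.
    return [by_id[i] for i in ids if i in by_id]


def setup_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE service (id INTEGER PRIMARY KEY, owner TEXT, version TEXT)")
    conn.executemany("INSERT INTO service VALUES (?, ?, ?)",
                     [(1, "team-a", "1.2.0"), (2, "team-b", "0.9.1"), (3, "team-c", "2.0.0")])
    return conn


if __name__ == "__main__":
    conn = setup_db()
    print(fetch_rows(conn, es_search_ids("search")))
    # [(1, 'team-a', '1.2.0'), (3, 'team-c', '2.0.0')]
```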

Wide table: Informally, a database table with many fields. It is a single table that contains all the fields required by a specific query scenario. Because it includes many redundant fields, a wide table no longer conforms to the normal forms of database design (first through third normal form). In return, it improves query performance and simplifies queries by avoiding joins at query time, a typical space-for-time trade-off. However, wide tables are hard to extend: any change in business requirements, even adding a single field, requires changing the wide table.

Narrow table: A table designed strictly according to the normal forms of database design. This design reduces data redundancy, but complex queries must span multiple tables and involve multi-table JOINs, which may hurt performance. Narrow tables are easy to extend: multiple narrow tables can be combined to fit various business scenarios, and no matter how many scenarios there are, the original table structures do not need to change. However, the query logic and code logic must be encapsulated.

If high query speed performance is required, it is recommended to choose wide tables.

Since ES excels at retrieval rather than storage, it is rarely used as the primary storage solution in business scenarios. Instead, relational databases are used for storage, and when ES is needed, the required data is constructed and synchronized. Specifically, there are solutions such as manual writing and data synchronization tools.

Manual writing: within the existing business logic, add synchronous or asynchronous operations that insert, update, and delete the corresponding data in ES. This approach is simple to implement but scales poorly and tightly couples the business code to ES.
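The coupling of the manual approach is easiest to see in code. In this sketch, a repository writes to the database (SQLite standing in for MySQL) and then mirrors the change into the search index. `FakeSearchIndex` is a hypothetical in-memory stand-in, not a real Elasticsearch client API. An asynchronous variant would hand the change to a queue or background worker instead of calling the index inline.

```python
import sqlite3


class FakeSearchIndex:
    """In-memory stand-in for an ES index (hypothetical; not a real client API)."""

    def __init__(self):
        self.docs = {}

    def index(self, doc_id, doc):
        self.docs[doc_id] = doc

    def delete(self, doc_id):
        self.docs.pop(doc_id, None)


class ServiceRepository:
    """Business code that writes the database first and then mirrors the change to ES."""

    def __init__(self, conn: sqlite3.Connection, search_index: FakeSearchIndex):
        self.conn = conn
        self.search_index = search_index

    def save(self, service_id: int, name: str) -> None:
        with self.conn:  # commits the transaction on success, rolls back on error
            self.conn.execute(
                "INSERT OR REPLACE INTO service (id, name) VALUES (?, ?)", (service_id, name))
        # Synchronous dual write: if this call fails, the DB and the index diverge.
        self.search_index.index(service_id, {"id": service_id, "name": name})

    def delete(self, service_id: int) -> None:
        with self.conn:
            self.conn.execute("DELETE FROM service WHERE id = ?", (service_id,))
        self.search_index.delete(service_id)
```

Every write path in the business code has to remember the second call, and a failure between the two writes leaves the database and ES inconsistent until something retries or re-syncs.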

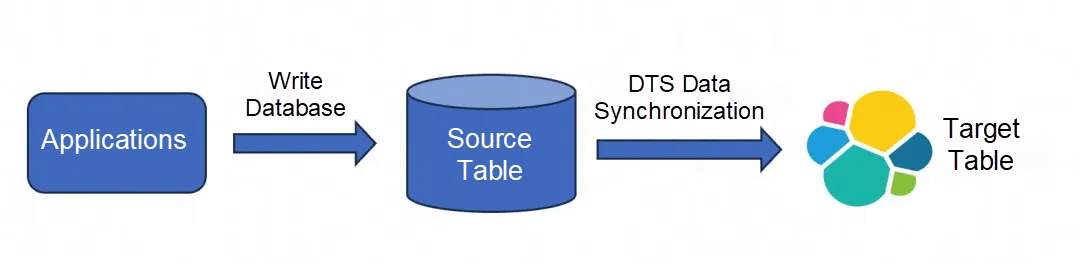

Alibaba Cloud DTS (Data Transmission Service): this cloud service synchronizes data by acting like a replica in MySQL master-replica replication and consuming the binlog. It is convenient and efficient for one-to-one data synchronization but does not support multi-table JOIN scenarios. If the table structure changes, you must manually delete the target ES index and rebuild the synchronization task.

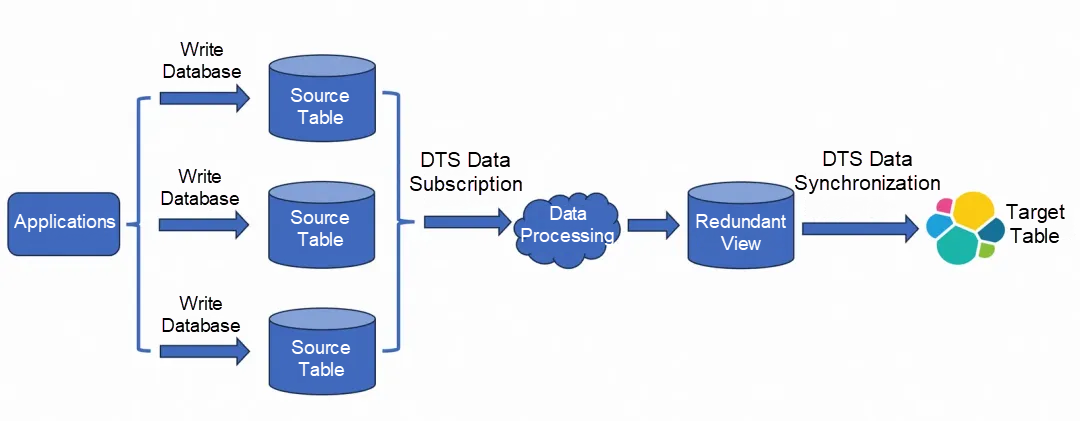

If we add an intermediate layer that first writes the results of multi-table JOINs into a redundant MySQL wide table and then synchronizes that table to ES, the data synchronization can still use the simple and efficient DTS. The cost is a longer synchronization chain with more links, which adds instability.

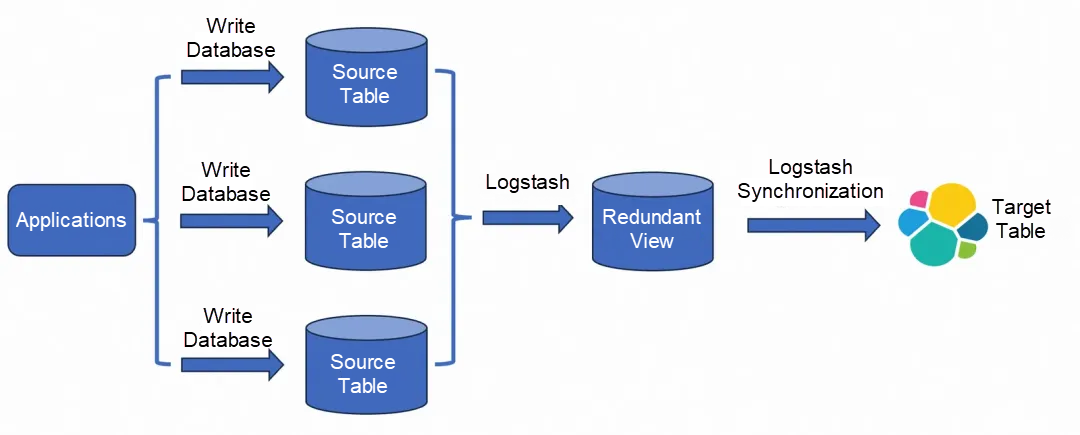

ES Suite Logstash:

This is a data synchronization tool in the official Elastic Stack. You write the required SQL logic into a config file (for example, a query over a view such as the one below), and Logstash writes the results of the multi-table query into ES. This method is highly flexible and makes it easy to construct the required data. However, its synchronization latency is somewhat higher (on the order of seconds).

```sql
CREATE VIEW my_view AS
SELECT sv.*, s.score, sc.category
FROM service_version sv
JOIN service s ON sv.service_id = s.service_id
JOIN service_category sc ON s.service_id = sc.service_id;
```
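To see what such a view hands to the synchronization tool, the sketch below creates hypothetical minimal versions of the three tables in an in-memory SQLite database (standing in for MySQL), defines the same view, and turns each flat row into a document dict. The extra `version` column and the sample rows are assumptions. The Logstash configuration itself is not shown.

```python
import sqlite3

# Hypothetical minimal tables; the view definition matches the SQL above.
SCHEMA = """
CREATE TABLE service (service_id INTEGER PRIMARY KEY, score INTEGER);
CREATE TABLE service_category (service_id INTEGER, category TEXT);
CREATE TABLE service_version (id INTEGER PRIMARY KEY, service_id INTEGER, version TEXT);

CREATE VIEW my_view AS
SELECT sv.*, s.score, sc.category
FROM service_version sv
JOIN service s ON sv.service_id = s.service_id
JOIN service_category sc ON s.service_id = sc.service_id;
"""


def build_wide_docs() -> list[dict]:
    conn = sqlite3.connect(":memory:")
    conn.row_factory = sqlite3.Row  # lets each row be converted to a dict by column name
    conn.executescript(SCHEMA)
    conn.executemany("INSERT INTO service VALUES (?, ?)", [(10, 95), (20, 80)])
    conn.executemany("INSERT INTO service_category VALUES (?, ?)",
                     [(10, "search"), (20, "storage")])
    conn.executemany("INSERT INTO service_version VALUES (?, ?, ?)",
                     [(1, 10, "1.0"), (2, 10, "1.1"), (3, 20, "2.0")])
    # Each flat view row becomes one ES document; no join is needed at query time.
    docs = [dict(row) for row in conn.execute("SELECT * FROM my_view ORDER BY id")]
    conn.close()
    return docs


if __name__ == "__main__":
    for doc in build_wide_docs():
        print(doc)
```

Because the view uses inner joins, a service with several categories yields one row per (version, category) pair, and a version without a matching category row yields none. The document ID scheme on the ES side has to account for that.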

Guide to Other Data Synchronization Tool Selection:

https://www.alibabacloud.com/help/en/es/use-cases/select-a-synchronization-method


Disclaimer: The views expressed herein are for reference only and don't necessarily represent the official views of Alibaba Cloud.
