By Zhao Mingshan (Liheng)

Kruise Rollout is an open-source progressive delivery framework from the OpenKruise community. It supports canary, blue-green, and A/B testing rollouts, each coordinated with a smooth transition of traffic and instances. The rollout process can be automated in batches and paused based on Prometheus metrics. Kruise Rollout also works as a bypass component that attaches to workloads without users noticing, and it is compatible with various existing workloads (Deployment, CloneSet, and DaemonSet). I recently delivered a speech on it at the 2022 OpenAtom Global Open Source Summit. The following are the main contents.

A progressive rollout is different from a full/one-time rollout. It mainly includes the following features:

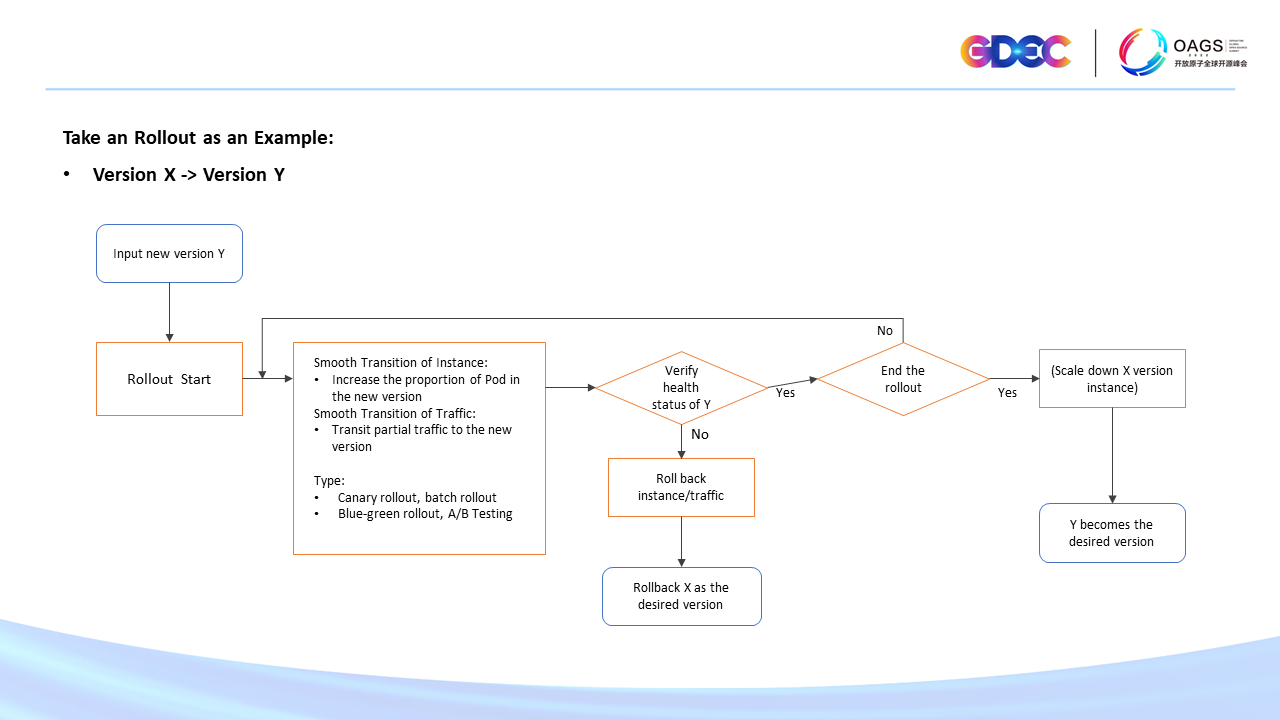

Let's look at an example:

Suppose the current version is X and it needs to be updated to version Y. First, the rollout is divided into multiple batches (for example, only ten instances are released in the first batch). Then, a portion of the traffic is routed to version Y as a canary (for example, for each major rollout, Taobao first directs employee traffic to the new version through A/B testing). Finally, the health of the new version is verified. Once verification passes, the process is repeated for the remaining batches. If any exception is found along the way, you can quickly roll back to version X. As this example shows, a progressive rollout adds many intermediate verification steps compared with a full rollout, which substantially improves the stability of delivery. This matters most in large-scale rollout scenarios.
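The batch, verify, and roll back loop in this example can be sketched in a few lines of Python. This is only an illustrative simulation, not how Kruise Rollout is implemented: `progressive_rollout`, `RolloutState`, and the `is_healthy` callback are hypothetical names, and the traffic share is assumed to simply follow the share of updated instances.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class RolloutState:
    total: int                      # total number of instances
    updated: int = 0                # instances currently running version Y
    log: List[str] = field(default_factory=list)


def progressive_rollout(total: int,
                        batch_sizes: List[int],
                        is_healthy: Callable[[int], bool]) -> RolloutState:
    """Release version Y batch by batch; roll back to X on the first failed check."""
    state = RolloutState(total=total)
    for size in batch_sizes:
        # Never update more instances than are still on version X.
        step = min(size, total - state.updated)
        state.updated += step
        # Assumption: traffic to Y is proportional to the updated instances.
        weight = 100 * state.updated // total
        state.log.append(
            f"batch +{step}: {state.updated}/{total} on Y, ~{weight}% traffic")
        # Verify the new version before moving on to the next batch.
        if not is_healthy(state.updated):
            state.log.append("verification failed: rolling back to X")
            state.updated = 0
            return state
    return state


# Every batch passes verification, so all 100 instances end up on Y.
ok = progressive_rollout(100, [10, 40, 50], is_healthy=lambda n: True)

# Verification fails in the second batch, so everything returns to X.
bad = progressive_rollout(100, [10, 40, 50], is_healthy=lambda n: n <= 10)
```

The point of the sketch is the check between batches: a full rollout has no such stopping point, so a bad version reaches every instance before anyone can react.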

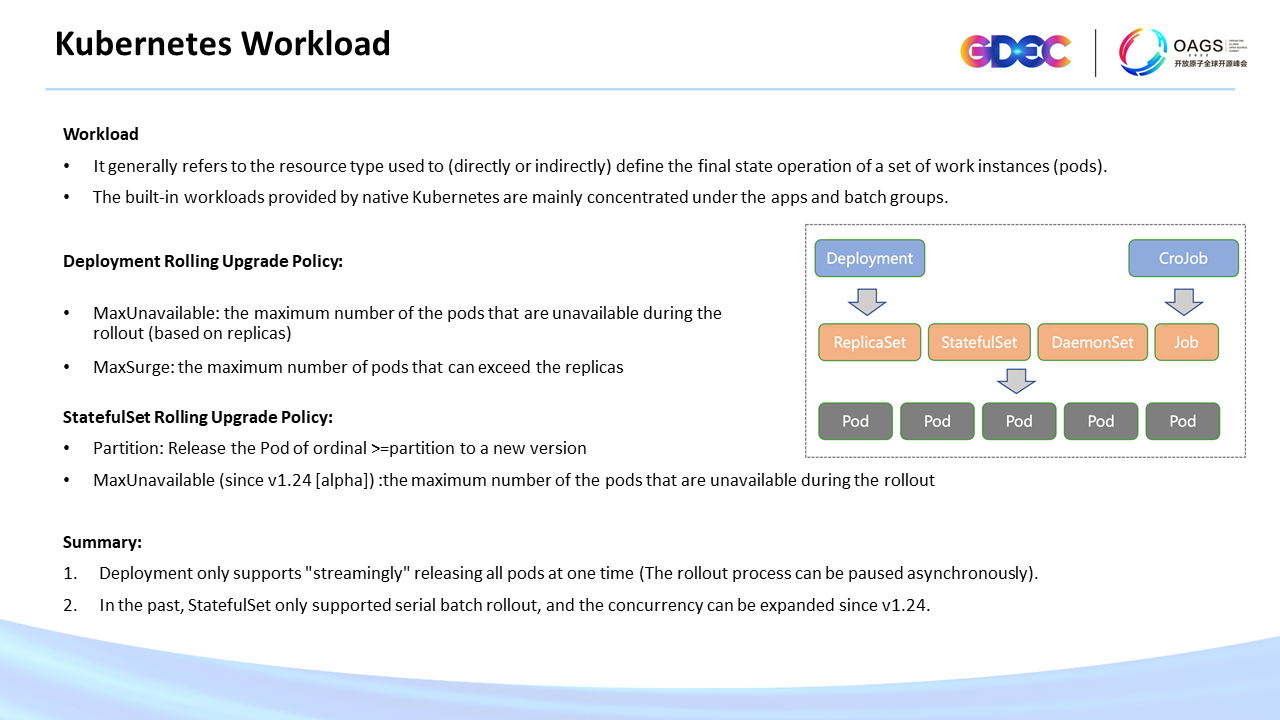

All pods in Kubernetes are managed by workloads. The two most common workloads are Deployment and StatefulSet. Deployment provides the maxUnavailable and maxSurge parameters to control an upgrade. In essence, however, Deployment only supports a single continuous rolling update, and users cannot divide the rollout into batches. StatefulSet supports batch rollout through its partition setting, but it is still far from the progressive rollout capability we want.
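To make the Deployment limitation concrete, here is a small sketch of how maxSurge and maxUnavailable translate into pod counts. Kubernetes rounds a percentage maxSurge up and a percentage maxUnavailable down, and both default to 25%. The helper names `resolve` and `rolling_update_bounds` are hypothetical and exist only for this illustration.

```python
def resolve(value, replicas: int, round_up: bool) -> int:
    """Turn an absolute count or a percentage string such as "25%" into a pod count."""
    if isinstance(value, str) and value.endswith("%"):
        percent = int(value[:-1])
        scaled = replicas * percent
        # Integer arithmetic avoids floating-point rounding surprises.
        return -(-scaled // 100) if round_up else scaled // 100
    return int(value)


def rolling_update_bounds(replicas: int, max_surge="25%", max_unavailable="25%"):
    """Return the pod-count limits a Deployment keeps during a rolling update."""
    surge = resolve(max_surge, replicas, round_up=True)               # rounded up
    unavailable = resolve(max_unavailable, replicas, round_up=False)  # rounded down
    return {
        "max_total_pods": replicas + surge,
        "min_available_pods": replicas - unavailable,
    }


bounds = rolling_update_bounds(10)
print(bounds)  # {'max_total_pods': 13, 'min_available_pods': 8}
```

These two values only bound how fast the update proceeds. Nothing in them lets the user stop after a first batch and verify it, which is the gap a progressive rollout has to fill.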

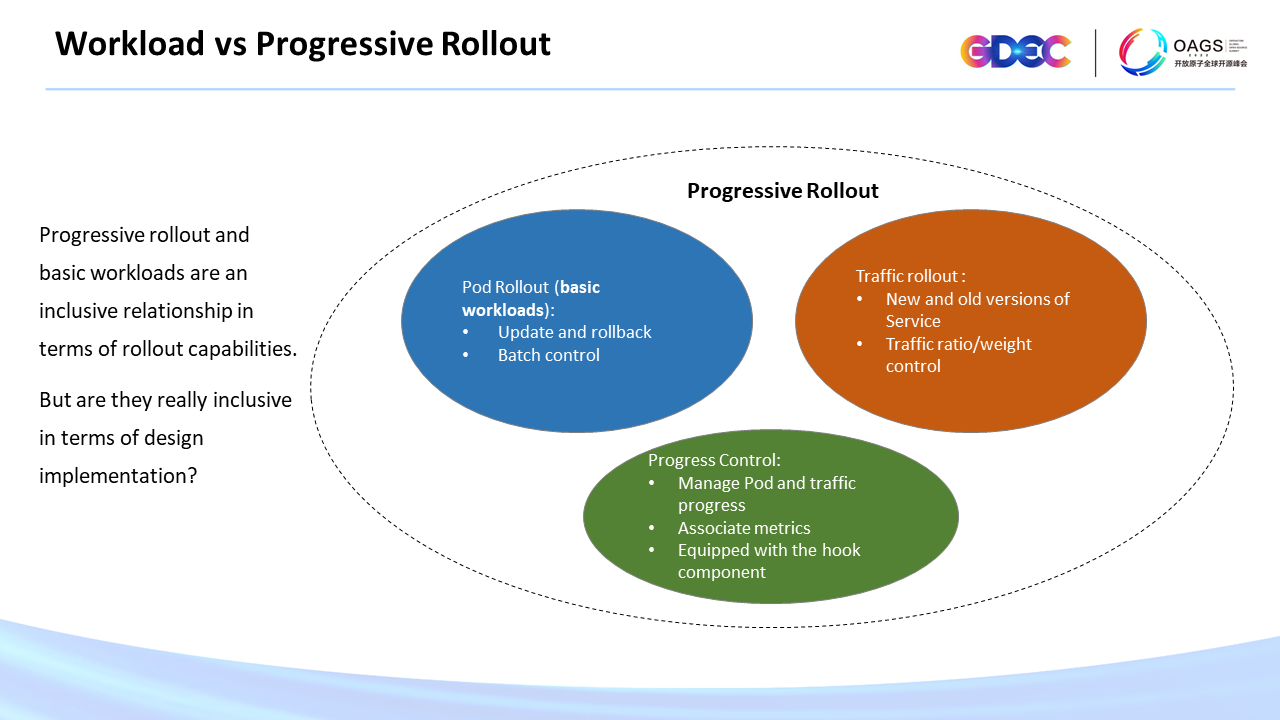

Therefore, in terms of capabilities, progressive rollout is a superset of what workloads provide. In addition to the basic pod rollout, progressive rollout should include traffic rollout and progress control. Now that the capabilities have been clarified, let's look at the implementation. How to design and implement the Rollout capabilities is just as important. Should the design follow the same containment relationship, with Rollout built into the workload?

Before starting, we surveyed the well-established solutions in the community:

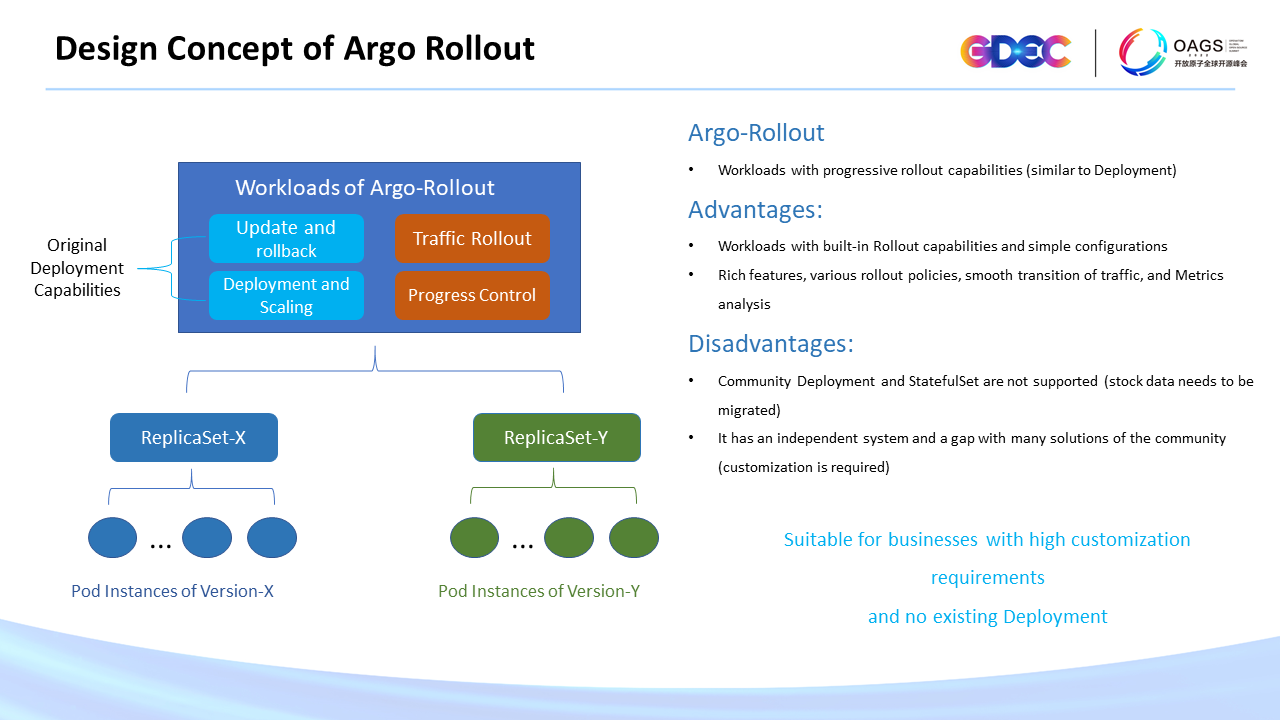

Argo Rollouts is a workload launched by the Argo project. Its approach is to define a new workload similar to Deployment and extend it with rollout capabilities on top of Deployment's original ones. Its advantage is that rollout capabilities are built into the workload, so configuration is simple and implementation is straightforward. It currently supports a wide range of features, including various rollout policies, smooth transition of traffic, and metrics analysis, which makes it a relatively mature project. It also has disadvantages. First, because it is a workload itself, it cannot be applied to the community Deployment, so companies that already run Deployments must migrate their workloads online. Second, many community solutions now rely on Deployment, and many companies have built Deployment-based container management platforms; all of these would have to be adapted for Argo Rollouts. Therefore, Argo Rollouts is better suited to businesses with high customization requirements and no existing Deployments.

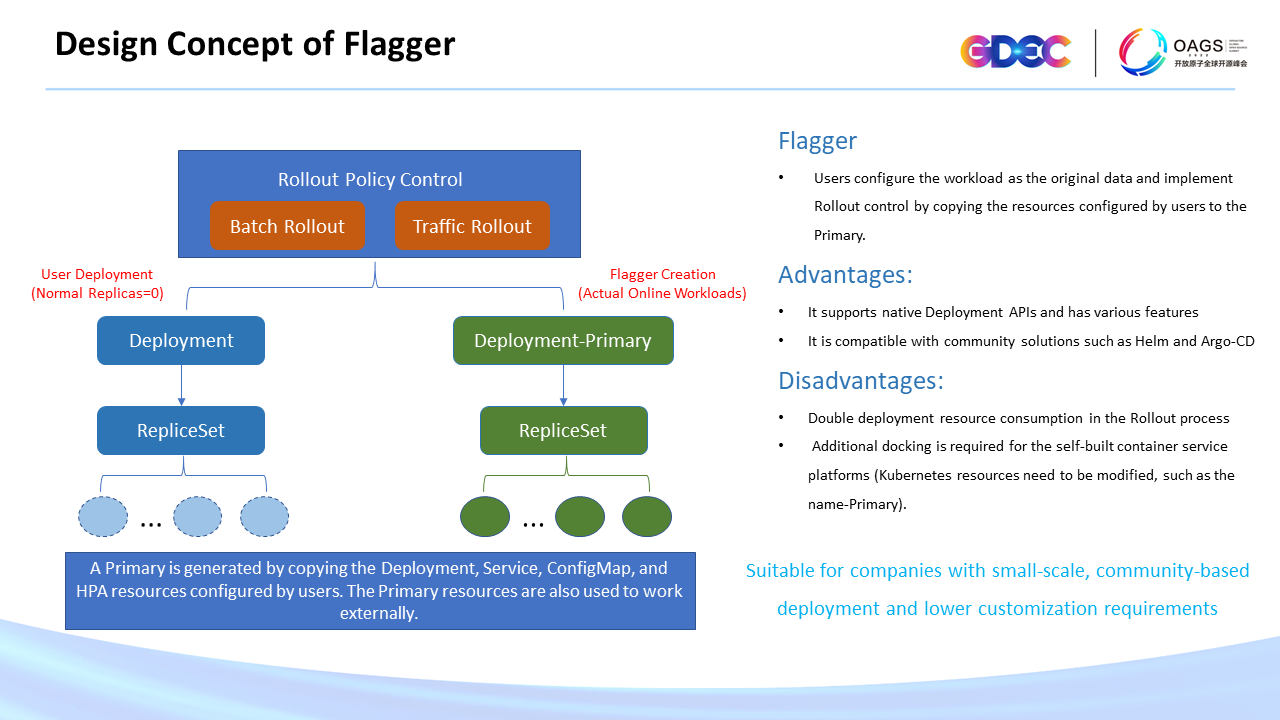

Another community project is Flagger, whose approach is completely different from that of Argo Rollouts. Instead of implementing a new workload, it adds smooth traffic transition and batch rollout on top of the existing Deployment.

Flagger's advantages are that it supports the native Deployment and is compatible with community solutions (such as Helm and Argo CD). It also has disadvantages. The first is that Deployment resources are doubled during the rollout: Flagger first upgrades the Deployment that the user deployed and then upgrades the Primary, so you need to prepare twice the pod resources for the rollout. The second is that some self-built container platforms need additional integration work, because Flagger works by copying all of the user's deployment resources and changing their names and labels. Therefore, Flagger is better suited to companies with small-scale, community-based deployments and lower customization requirements.

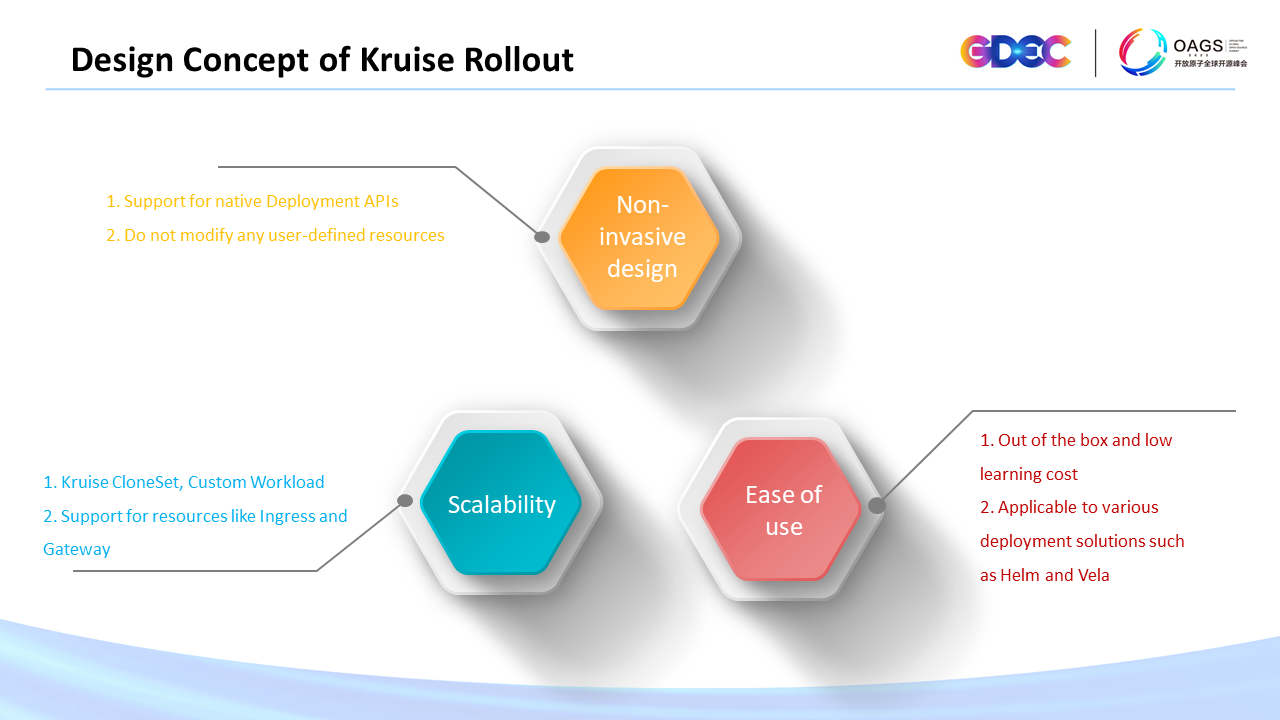

In addition, letting many solutions develop side by side is a major feature of cloud-native. The Alibaba Cloud Container Team is responsible for the evolution of the cloud-native architecture of the entire container platform and has a strong need for progressive delivery of applications. Therefore, drawing on community solutions and considering Alibaba's internal scenarios, we set the following goals for the design of Rollout:

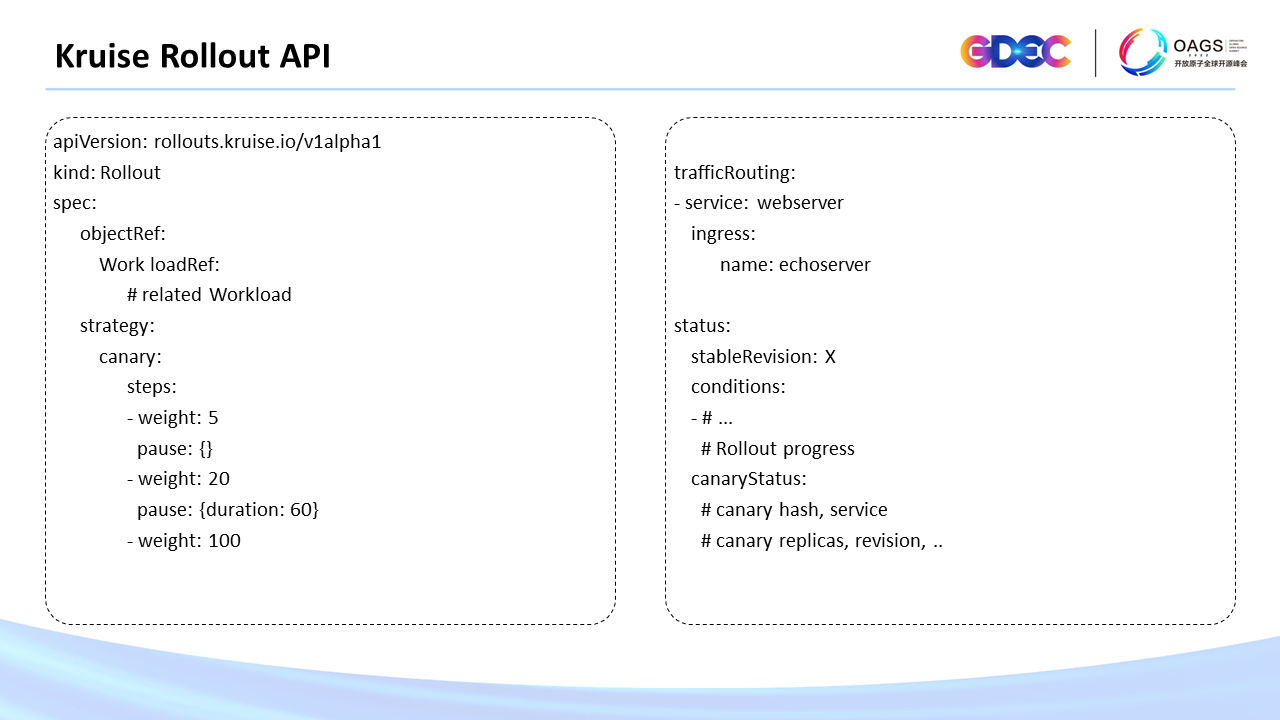

The Kruise Rollout API design is very simple and mainly includes the following four parts:

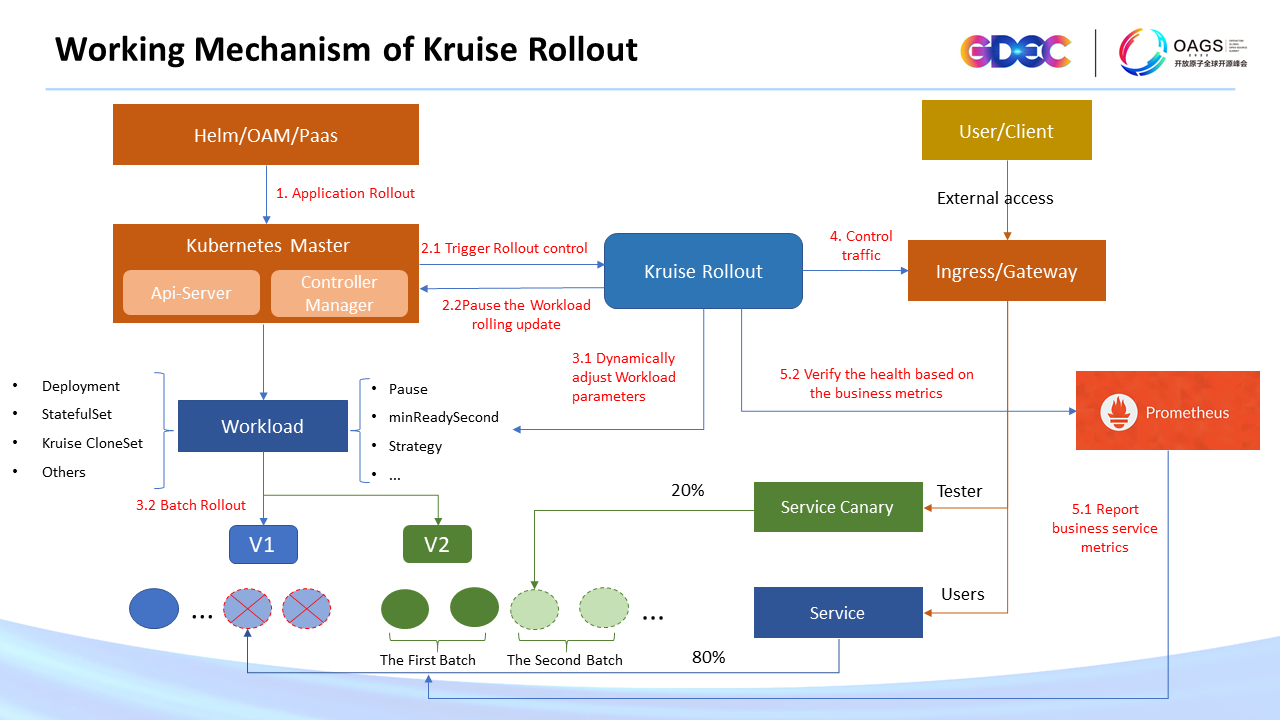

Next, the working mechanism of Kruise Rollout will be introduced:

First, the user releases a version based on the container platform (a rollout is essentially to apply Kubernetes resources to the cluster).

The process above completes the smooth transition of the first batch, and the following batches proceed in the same way. After the entire rollout is finished, Kruise restores the configurations of the resources involved (such as workloads). The Rollout process therefore works in synergy with the existing workload capabilities: it reuses them wherever possible and does not intrude on anything outside the rollout.

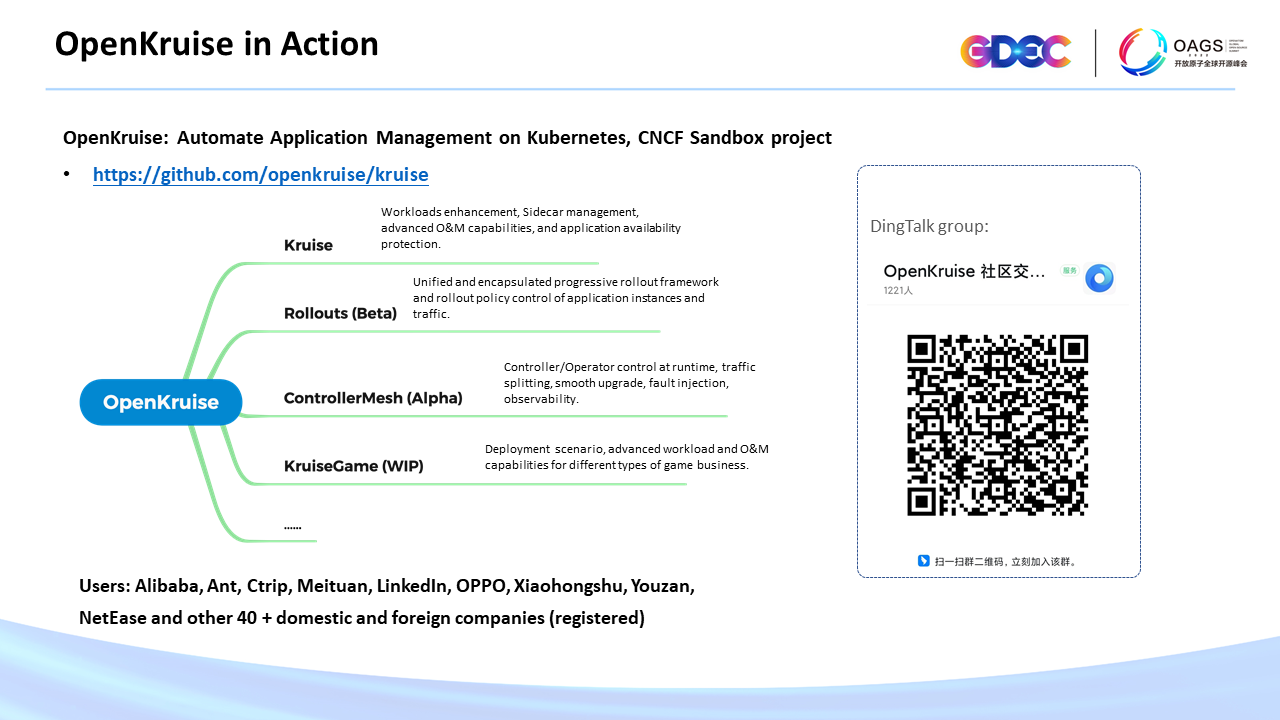

That concludes the introduction to the working mechanism of Kruise Rollout. Let me briefly introduce the OpenKruise community:

As more and more applications are deployed on Kubernetes, balancing rapid business iteration against application stability is a problem that platform builders must solve. Kruise Rollout is a new exploration by OpenKruise in the field of progressive delivery. It aims to solve the problems of traffic scheduling and batch deployment in application delivery. Kruise Rollout v0.2.0 has been officially released and is integrated with the KubeVela project of the OAM community, so Vela users can quickly deploy and use the Rollout capabilities through Addons. We also hope that community users will join us so that we can explore application delivery further together.

