By Boben

With the rapid development of artificial intelligence (AI) in recent years, AI is now being applied across many industries. In web R&D and design, public data sets such as Rico, a labeled data set of mobile application UIs, keep emerging, which makes it easier for researchers to conduct academic research on mobile application scenarios. In this field, intelligently generating frontend code is a promising direction.

Beyond data sets for academic research, some Internet products already offer services that generate code intelligently. These services continuously improve their capabilities by evaluating the quality of the code they generate for users.

These code-generating products mostly take structured design documents or images as input. How well this input is parsed determines the quality of the final code, so we need a way to evaluate the quality of the generated code. First, let's look at the structure of frontend code.

Generally, frontend code includes static GUI code and dynamic logic code.

Static GUI code generally consists of HyperText Markup Language (HTML) and Cascading Style Sheets (CSS) code. Its visual style can be derived from the fill styles of the layer nodes created in the design phase. However, its hierarchical structure is usually specific to frontend code and is not reflected in the design phase.

Frontend logic code (JavaScript) usually refers to interactive logic code, which adds dynamic, interactive behavior to static GUI code. It is also the most tedious part of the implementation. However, the purpose of these interactions usually cannot be expressed directly in static design tools during the design phase.

With the popularization of serverless, frontend developers also need to write Function as a Service (FaaS) code on the server side, which obtains server-side data according to the requirements and processes it. This is similar to encoding the data flows behind the program.

These three types of code account for most frontend programming work. To generate them intelligently, we need to make several preparations.

This article focuses on ways to obtain information. The following section introduces the sources of input information used to generate code.

For any business requirement, the following information is available before R&D begins: product requirement documents (PRDs) from product managers, interaction documents from interaction designers, visual documents from visual designers, and finalized page images. We can compare the information flow of a web page (program) with the model of communication proposed by the mathematician Claude Shannon in information theory: information source → encoder → channel → decoder.

The comparison above shows that information flows through several stages before users can understand and acquire information about an application. If only one channel is considered, other important information is missed. The same holds for acquiring information during R&D. For example, if you consider only the visual document and ignore the PRD, the delivered code will not meet the requirements.

The PRD describes the functional points required for the frontend and the server-side data. It is also the starting point for obtaining user information. However, PRD content is usually not described in a structured way, so human intervention is needed to interpret it. What if we required product managers to deliver PRDs in a structured format, such as the following table?

| Module | Frontend Data Display | Frontend Interaction Logic | Backend Data Logic |
| --- | --- | --- | --- |
| Module A | Display fields A and B | Hide field A when it is unavailable; send traced data when the module is clicked | Obtain field A from channel xxx and perform xxx processing on the field |

As the table shows, if PRDs are described in a structured manner, we can easily extract the information a specific module needs for each of the three types of frontend code. Then, we can use capabilities such as natural language processing (NLP), together with existing data models for specific business domains, to analyze the important logic and describe it in a structured way.
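To make the idea concrete, here is a minimal sketch of how one row of such a structured PRD table could be split into per-module requirements for each kind of code. It assumes the table is Markdown-style, that the first column is headed `Module`, and that interaction actions are separated by semicolons; `ModuleRequirement`, `split_row`, and `parse_prd_row` are hypothetical names, not part of any real product.

```python
from dataclasses import dataclass


@dataclass
class ModuleRequirement:
    """One PRD row, split by the kind of code each column feeds."""
    module: str
    data_display: str        # feeds static GUI code (HTML/CSS)
    interaction_logic: str   # feeds frontend logic code (JavaScript)
    backend_logic: str       # feeds server-side (FaaS) code

    def interaction_actions(self) -> list[str]:
        # Assumption: product managers separate individual actions with ";".
        return [a.strip() for a in self.interaction_logic.split(";") if a.strip()]


def split_row(line: str) -> list[str]:
    """Split a Markdown-style table row into trimmed cells."""
    return [cell.strip() for cell in line.strip().strip("|").split("|")]


def parse_prd_row(header: str, row: str) -> ModuleRequirement:
    """Map the cells of one row onto the columns named in the header."""
    values = dict(zip(split_row(header), split_row(row)))
    return ModuleRequirement(
        module=values["Module"],
        data_display=values["Frontend Data Display"],
        interaction_logic=values["Frontend Interaction Logic"],
        backend_logic=values["Backend Data Logic"],
    )


if __name__ == "__main__":
    header = "| Module | Frontend Data Display | Frontend Interaction Logic | Backend Data Logic |"
    row = ("| Module A | Display fields A and B "
           "| Hide field A when it is unavailable; send traced data when the module is clicked "
           "| Obtain field A from channel xxx and perform xxx processing on the field |")
    req = parse_prd_row(header, row)
    print(req.module, req.interaction_actions())
```

Each field of the result can then be handed to a different generator (or to an NLP model), which is the point of keeping the three columns separate.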

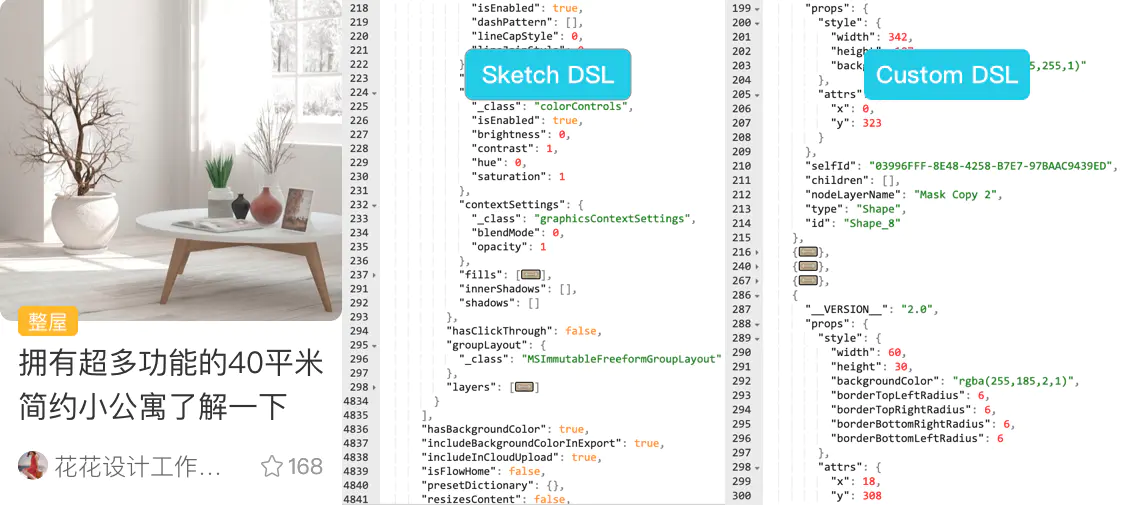

The content of a visual document is relatively simple to describe. Design tools such as Sketch, Photoshop, and XD already store designs with some structured descriptions. We only need to use the developer capabilities of these tools to extract the structured information from the visual document and convert it into the structured description we need. Then, we can use that description to fill in our static GUI code.
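As a rough sketch of the last step, the snippet below turns one extracted layer node into a CSS rule. The layer schema (`name`, `frame`, `style`) is a hypothetical normalized format, not the real Sketch, XD, or PSD export format, and the set of pixel-valued properties is an assumption.

```python
import re

# Hypothetical normalized layer schema; real design-tool exports differ and
# would first be converted into this shape.
PX_PROPERTIES = {"fontSize", "lineHeight", "borderRadius", "borderWidth"}


def camel_to_kebab(name: str) -> str:
    """fontSize -> font-size, backgroundColor -> background-color."""
    return re.sub(r"(?<!^)([A-Z])", r"-\1", name).lower()


def layer_to_css(layer: dict) -> str:
    """Turn one layer node's frame and fill styles into a CSS rule."""
    frame = layer["frame"]
    declarations = {
        # Absolute positioning mirrors the flat layer coordinates one-to-one.
        "position": "absolute",
        "left": f"{frame['x']}px",
        "top": f"{frame['y']}px",
        "width": f"{frame['width']}px",
        "height": f"{frame['height']}px",
    }
    for key, value in layer.get("style", {}).items():
        unit = "px" if key in PX_PROPERTIES else ""
        declarations[camel_to_kebab(key)] = f"{value}{unit}"
    body = "\n".join(f"  {prop}: {value};" for prop, value in declarations.items())
    return f".{layer['name']} {{\n{body}\n}}"


if __name__ == "__main__":
    title = {
        "name": "store-title",
        "frame": {"x": 24, "y": 16, "width": 300, "height": 40},
        "style": {"color": "#333333", "fontSize": 28, "fontWeight": 600},
    }
    print(layer_to_css(title))
```

Absolute positioning is the most direct translation of layer coordinates, but it carries no layout semantics; a real generator would replace it with grouped layouts.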

Here, we mainly consider interaction documents that focus on dynamic effects and responses. Unlike visual documents, which describe the static information of layer nodes, interaction documents usually describe the responses between node states. If we can extract the structured description of the interactions that designers create in tools such as After Effects (AE) and Principle, we can convert it into the corresponding interactive actions in code and then use those actions to implement frontend interactive logic.
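A minimal sketch of that conversion, assuming the interaction has already been extracted into a hypothetical dictionary (`trigger`, `source`, `target`, `to`, `duration_ms`): the Python function below emits a JavaScript event handler that applies the target state with a CSS transition. Neither AE nor Principle exports this format; the trigger-to-event table is also an assumption.

```python
import json

# Hypothetical mapping from design-tool trigger names to DOM event names.
TRIGGER_EVENTS = {"tap": "click", "click": "click", "hover": "mouseenter"}


def interaction_to_js(spec: dict) -> str:
    """Emit a JavaScript event handler for one state transition.

    spec (hypothetical format):
      trigger     -- design-tool trigger name, e.g. "tap"
      source      -- CSS selector of the node that receives the trigger
      target      -- CSS selector of the node whose state changes
      to          -- target state as camelCase style properties
      duration_ms -- transition duration in milliseconds
    """
    event = TRIGGER_EVENTS[spec["trigger"]]
    transition = f"all {spec['duration_ms']}ms ease"
    lines = [
        f"document.querySelector({json.dumps(spec['source'])})"
        f".addEventListener({json.dumps(event)}, () => {{",
        f"  const el = document.querySelector({json.dumps(spec['target'])});",
        f"  el.style.transition = {json.dumps(transition)};",
    ]
    for prop, value in spec["to"].items():
        # json.dumps yields a quoted string literal that JavaScript accepts.
        lines.append(f"  el.style.{prop} = {json.dumps(str(value))};")
    lines.append("});")
    return "\n".join(lines)


if __name__ == "__main__":
    print(interaction_to_js({
        "trigger": "tap",
        "source": "#toggle",
        "target": "#panel",
        "to": {"opacity": 0, "transform": "translateY(-20px)"},
        "duration_ms": 300,
    }))
```

Generating code from a declarative state transition keeps the designer's intent (which node, which trigger, which end state) explicit, so the same description could also target another framework's event syntax.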

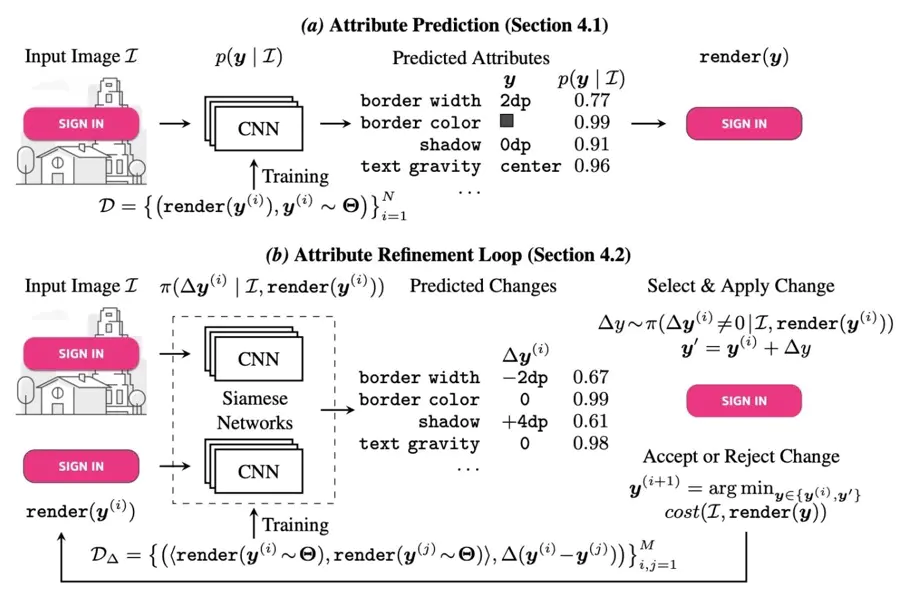

In addition to obtaining information directly from the media described above, we can also enrich the extracted information through model training based on our experience. For example, common web components cannot be described in design documents, so we can use an object detection model to recognize them. We can also train a text classification model on the text of a business domain to automatically map static text fields in a design document to dynamic fields.

Unlike the media mentioned above, images contain no structured information; all we can obtain directly is the pixel grid. However, by using deep learning, we can extract basic design elements, such as text, images, and shapes, together with their style attributes.
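The article relies on deep learning for this step. As a much simpler stand-in that only illustrates what "extracting elements from a pixel grid" means, the sketch below uses classical connected-component labeling: it flood-fills each 4-connected region of non-background pixels and reports its bounding box. The grid format and the single background value are assumptions.

```python
from collections import deque

Grid = list[list[int]]
Box = tuple[int, int, int, int]  # (left, top, right, bottom), inclusive


def find_element_boxes(grid: Grid, background: int = 0) -> list[Box]:
    """Return bounding boxes of 4-connected non-background regions."""
    height, width = len(grid), len(grid[0])
    seen = [[False] * width for _ in range(height)]
    boxes: list[Box] = []
    for y in range(height):
        for x in range(width):
            if seen[y][x] or grid[y][x] == background:
                continue
            # Breadth-first flood fill over the region starting at (x, y).
            queue = deque([(x, y)])
            seen[y][x] = True
            left, top, right, bottom = x, y, x, y
            while queue:
                cx, cy = queue.popleft()
                left, right = min(left, cx), max(right, cx)
                top, bottom = min(top, cy), max(bottom, cy)
                for nx, ny in ((cx + 1, cy), (cx - 1, cy), (cx, cy + 1), (cx, cy - 1)):
                    if (0 <= nx < width and 0 <= ny < height
                            and not seen[ny][nx] and grid[ny][nx] != background):
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            boxes.append((left, top, right, bottom))
    return boxes


if __name__ == "__main__":
    pixels = [
        [0, 1, 1, 0, 0],
        [0, 1, 1, 0, 2],
        [0, 0, 0, 0, 2],
    ]
    print(find_element_boxes(pixels))
```

This heuristic cannot tell text from images or read style attributes, which is exactly the gap that trained models fill.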

We can also train a model to detect targets (components) in an image and output descriptions of them.
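Object detection models typically return many overlapping candidate boxes, so a standard post-processing step, non-maximum suppression (NMS), keeps only the highest-scoring box per component before the detections are turned into component descriptions. The sketch below is generic greedy per-class NMS, not imgcook's actual pipeline; the class names, thresholds, and output fields are hypothetical.

```python
from dataclasses import dataclass

Box = tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


@dataclass
class Detection:
    label: str    # hypothetical component class, e.g. "button"
    score: float  # model confidence in [0, 1]
    box: Box


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(dets: list[Detection], iou_threshold: float = 0.5,
                        score_threshold: float = 0.3) -> list[Detection]:
    """Greedy per-class NMS: keep the best box among overlapping ones."""
    kept: list[Detection] = []
    for det in sorted(dets, key=lambda d: d.score, reverse=True):
        if det.score < score_threshold:
            continue  # drop low-confidence candidates entirely
        if all(k.label != det.label or iou(k.box, det.box) < iou_threshold for k in kept):
            kept.append(det)
    return kept


def to_component_descriptions(dets: list[Detection]) -> list[dict]:
    """Describe each surviving detection as a component node."""
    return [{"component": d.label, "box": d.box, "confidence": d.score} for d in dets]
```

Suppression is done per class, so a button that overlaps a search bar is not discarded just because the two boxes intersect.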

If some static text fields in a design document map to dynamic fields returned by APIs, we can analyze the text written by the designer and, for a specific business, find the data fields it is likely to correspond to. For example, if we see "xxx Flagship Store", we can infer that it is probably the Store Title field of the Store data source. We can then bind the text to the corresponding API field automatically when we generate code.
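A trained text classifier is the approach described here; as a simplified, rule-based stand-in, the sketch below maps design text to API field paths with regular expressions and marks which text layers should become dynamic bindings. The field paths (`store.title`, `item.price`, `item.salesCount`) and the patterns are hypothetical, not a real API.

```python
import re
from typing import Optional

# Hypothetical rules mapping design text to API field paths. A text
# classifier trained on business data would replace these patterns.
FIELD_RULES = [
    (re.compile(r"flagship store$", re.IGNORECASE), "store.title"),
    (re.compile(r"^[¥$]\s?\d+(\.\d+)?$"), "item.price"),
    (re.compile(r"^\d+\+? sold$", re.IGNORECASE), "item.salesCount"),
]


def map_text_to_field(text: str) -> Optional[str]:
    """Return the API field a static text layer likely binds to, if any."""
    cleaned = text.strip()
    for pattern, field in FIELD_RULES:
        if pattern.search(cleaned):
            return field
    return None


def bind_text_layers(texts: list[str]) -> list[dict]:
    """Mark each text layer as a dynamic binding or leave it static."""
    result = []
    for text in texts:
        field = map_text_to_field(text)
        result.append({"text": text, "binding": field, "dynamic": field is not None})
    return result


if __name__ == "__main__":
    for layer in bind_text_layers(["xxx Flagship Store", "¥99.00", "Free shipping"]):
        print(layer)
```

Text that matches no rule stays static, which is the safe default when the mapping is uncertain.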

The structure description is the output (a JSON description) extracted from the design documents mentioned above. When we write static GUI code to describe pages or applications, we use certain semantic structures (structural layout groups) in addition to basic style information. A visual document contains no such structural semantics, so we often need to train a model, based on our experience with layout semantics, to infer them. This makes the layout structure description of the GUI more semantic.
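To show what "structural layout groups" can mean in the simplest case, the heuristic below groups flat, absolutely positioned layers into rows by vertical overlap and orders each row from left to right, a structure that maps naturally onto flex rows. This is a hand-written heuristic standing in for the trained model the text mentions; the layer fields (`name`, `x`, `y`, `width`, `height`) are assumptions.

```python
def group_into_rows(layers: list[dict]) -> list[dict]:
    """Group absolutely positioned layers into rows by vertical overlap."""
    rows: list[dict] = []
    for layer in sorted(layers, key=lambda item: item["y"]):
        top, bottom = layer["y"], layer["y"] + layer["height"]
        if rows and top < rows[-1]["bottom"]:
            # The layer starts before the current row ends: same row.
            rows[-1]["children"].append(layer)
            rows[-1]["bottom"] = max(rows[-1]["bottom"], bottom)
        else:
            rows.append({"type": "row", "bottom": bottom, "children": [layer]})
    for row in rows:
        row["children"].sort(key=lambda item: item["x"])  # left to right
        del row["bottom"]  # only needed while grouping
    return rows


if __name__ == "__main__":
    flat = [
        {"name": "avatar", "x": 16, "y": 16, "width": 48, "height": 48},
        {"name": "title", "x": 80, "y": 20, "width": 200, "height": 24},
        {"name": "banner", "x": 0, "y": 80, "width": 375, "height": 120},
    ]
    for row in group_into_rows(flat):
        print([child["name"] for child in row["children"]])
```

A learned model can go further, for example recognizing repeated list items or card groups, which a purely geometric rule like this cannot.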

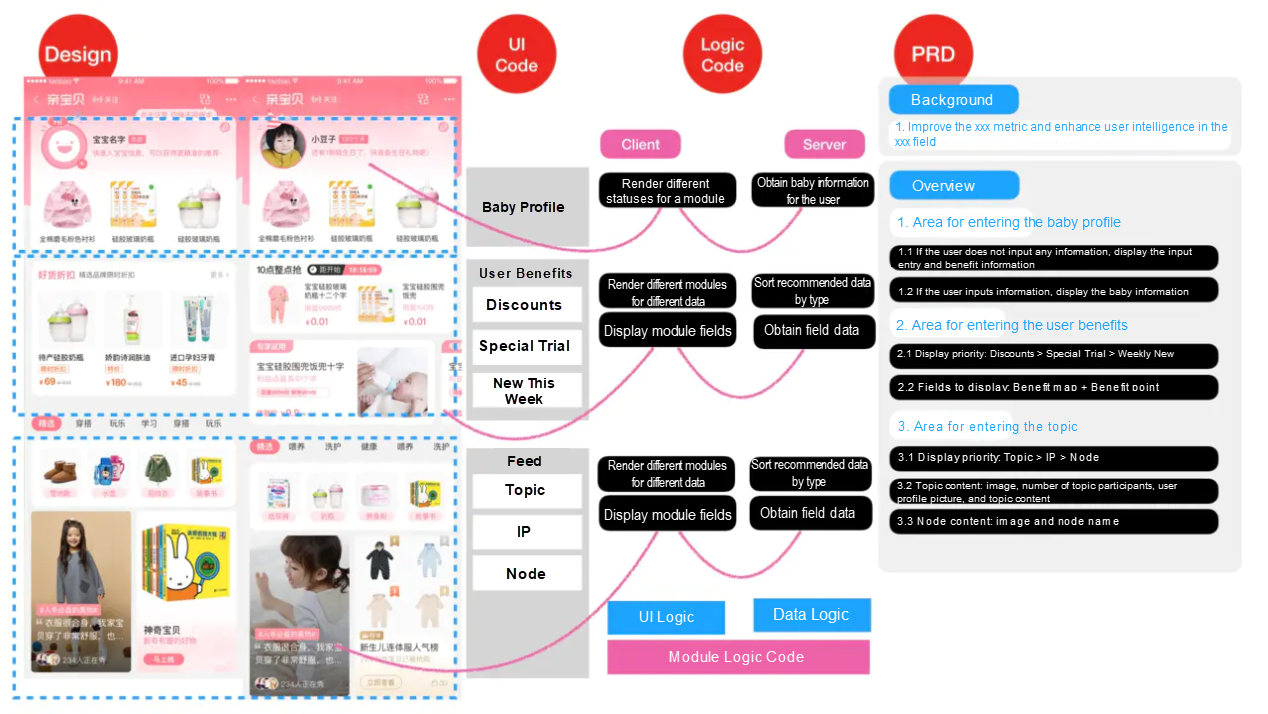

Intelligent code generation draws on many sources of information, each related to the final code to a greater or lesser extent. If some of this information cannot be understood or used, the generated code will be incomplete. Therefore, it is also important to connect information across different dimensions.

The following figure shows an attempt at correlating the information from different channels. Input from different channels can be correlated along different dimensions of a module: the module corresponds to static GUI code, frontend logic code, server-side logic code, and the components recognized by models within it. If we connect this information, the generated module code contains not only the static UI code obtained from the visual document in the initial phase but also logic and semantic information that can be used to turn the static code into dynamic code automatically. This way, we can obtain the complete code, including field binding, module material identification, and rendering logic.
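A minimal sketch of this correlation step: it regroups records from each channel (visual document, PRD, model output) by module and reports which modules are missing input from a required channel. It assumes every record already carries a shared `module` ID, which is a simplification; in practice, aligning modules across channels is the hard part. The channel names and record fields are hypothetical.

```python
from collections import defaultdict


def correlate_by_module(sources: dict[str, list[dict]]) -> dict[str, dict[str, list[dict]]]:
    """Regroup channel -> records into module -> channel -> records.

    Assumes every record already carries a shared "module" ID.
    """
    merged: dict = defaultdict(lambda: defaultdict(list))
    for channel, records in sources.items():
        for record in records:
            merged[record["module"]][channel].append(record)
    return {module: dict(channels) for module, channels in merged.items()}


def missing_channels(merged: dict[str, dict[str, list[dict]]],
                     required: list[str]) -> dict[str, list[str]]:
    """List, per module, the required channels that contributed nothing."""
    gaps = {}
    for module, channels in merged.items():
        missing = sorted(set(required) - set(channels))
        if missing:
            gaps[module] = missing
    return gaps


if __name__ == "__main__":
    sources = {
        "visual": [{"module": "A", "css": ".a {}"}],
        "prd": [{"module": "A", "actions": ["hide field A"]}],
        "model": [{"module": "B", "component": "button"}],
    }
    merged = correlate_by_module(sources)
    print(missing_channels(merged, ["visual", "prd"]))
```

Reporting gaps per module makes incomplete code visible early: a module with visual input but no PRD record can only ever produce static code.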

After two years of development and refinement, imgcook has shown that it is feasible to extract information from design documents and automatically generate part of the GUI code. In the future, we will continue to explore methods for correlating information so that we can build complete information graphs and generate more accurate code.
