Function Compute is an Alibaba Cloud serverless platform that allows engineers to develop an internet-scale service with just a few lines of code. It seamlessly handles resource management, auto scaling, and load balancing so that developers can focus on their business logic without worrying about the underlying infrastructure, making it easy to build applications that respond quickly to new information. Internally, we use container technology and proprietary distributed algorithms to schedule our users' code on elastically scaled resources. Since its inception a little over a year ago, we have developed many cutting-edge technologies aimed at giving our users high scalability, reliability, and performance.

In this guide, we walk you through a step-by-step tutorial that showcases some of its innovative features. If this is your first time using Function Compute, you can read the quick start guide first to familiarize yourself with basic serverless concepts.

The first feature we introduce allows developers to write functions that read from and write to a network-attached file system such as Alibaba Cloud NAS.

Because the platform is serverless, user code can run on a different instance each time it is invoked. This means that functions cannot rely on their local file system to store intermediate results. Instead, developers have to rely on another cloud service, such as Object Storage Service (OSS), to share processed results between functions or invocations. This is not ideal: dealing with another distributed service adds development overhead and extra code to handle various edge cases.

To solve this problem, we developed the NAS access feature. Network Attached Storage (NAS) is another Alibaba Cloud service; it offers a highly scalable, reliable, and available distributed file system that supports standard file access protocols. We mount the remote NAS file system onto the resource on which the user code runs, which effectively gives the function code a "local" file system to use.
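The practical effect is that every instance running the function sees the same files. The sketch below illustrates that idea under loud assumptions: MOUNT_DIR is a hypothetical stand-in for the NAS mount directory (a temporary directory is used so the code runs anywhere), and the two "invocations" are just two calls to a plain Python function; no Function Compute API is involved.

```python
import os
import tempfile

# Hypothetical mount directory. On Function Compute this would be the NAS
# mount directory configured for the service; a temporary directory stands
# in for it here so the sketch runs anywhere.
MOUNT_DIR = tempfile.mkdtemp()
COUNTER_FILE = os.path.join(MOUNT_DIR, "invocations.log")


def invoke(instance_id: str) -> int:
    """Simulate one invocation: record it, then report how many invocations
    (from any instance) the shared file system has seen so far."""
    with open(COUNTER_FILE, "a") as f:
        f.write(instance_id + "\n")
    with open(COUNTER_FILE) as f:
        return sum(1 for _ in f)


# Two invocations that may land on different instances still share state,
# because both read and write the same mounted directory.
print(invoke("instance-a"))  # 1
print(invoke("instance-b"))  # 2
```

Concurrent invocations appending to one file on a network file system would need some coordination (for example, one file per writer or a lock), which this sketch deliberately ignores.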

This demo shows you how to create a serverless web crawler that downloads all the images reachable from a seed web page. This is quite a challenging problem for a serverless platform, because the time limit on a single function execution makes it impossible to crawl all the websites in one invocation. With NAS access, however, it becomes straightforward: successive function runs can share data through the NAS file system. Below is a step-by-step tutorial. We assume that you understand the concept of a VPC and know how to create a NAS mount point in a VPC; otherwise, read the basic NAS tutorial before proceeding to the steps below.
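The pattern behind such a crawler is a resumable work queue: each invocation loads the frontier from shared storage, does a bounded amount of work, and saves the frontier back, so the next scheduled run picks up where the last one stopped. Below is a minimal Python sketch of that pattern. The in-memory link graph, the JSON state file, and the function names are hypothetical stand-ins for real HTTP fetching and the NAS mount; the Java handler in this guide keeps its state in two append-only text files instead.

```python
import json
import os
import tempfile

# Hypothetical stand-ins: a tiny in-memory link graph replaces real HTTP
# fetching, and a temporary directory replaces the NAS mount.
LINKS = {
    "/": ["/a", "/b"],
    "/a": ["/c"],
    "/b": ["/c", "/"],
    "/c": [],
}
STATE_FILE = os.path.join(tempfile.mkdtemp(), "crawl_state.json")


def load_state(seed):
    # Resume from the shared file if a previous invocation left state behind.
    if os.path.exists(STATE_FILE):
        with open(STATE_FILE) as f:
            state = json.load(f)
        return state["to_visit"], set(state["visited"])
    return [seed], set()


def save_state(to_visit, visited):
    with open(STATE_FILE, "w") as f:
        json.dump({"to_visit": to_visit, "visited": sorted(visited)}, f)


def run_once(seed, max_pages):
    """One time-bounded invocation: crawl at most max_pages, then persist."""
    to_visit, visited = load_state(seed)
    crawled = 0
    while to_visit and crawled < max_pages:
        url = to_visit.pop(0)
        if url in visited:
            continue  # already crawled by this or an earlier invocation
        visited.add(url)
        crawled += 1
        to_visit.extend(p for p in LINKS[url] if p not in visited)
    save_state(to_visit, visited)
    return crawled


# Each call models one scheduled run; an invocation that crawls nothing
# means the frontier is exhausted.
runs = 0
while run_once("/", max_pages=2) > 0:
    runs += 1
```

Here max_pages plays the same role as the numberOfPages setting in the Java handler: it keeps each run well inside the function timeout.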

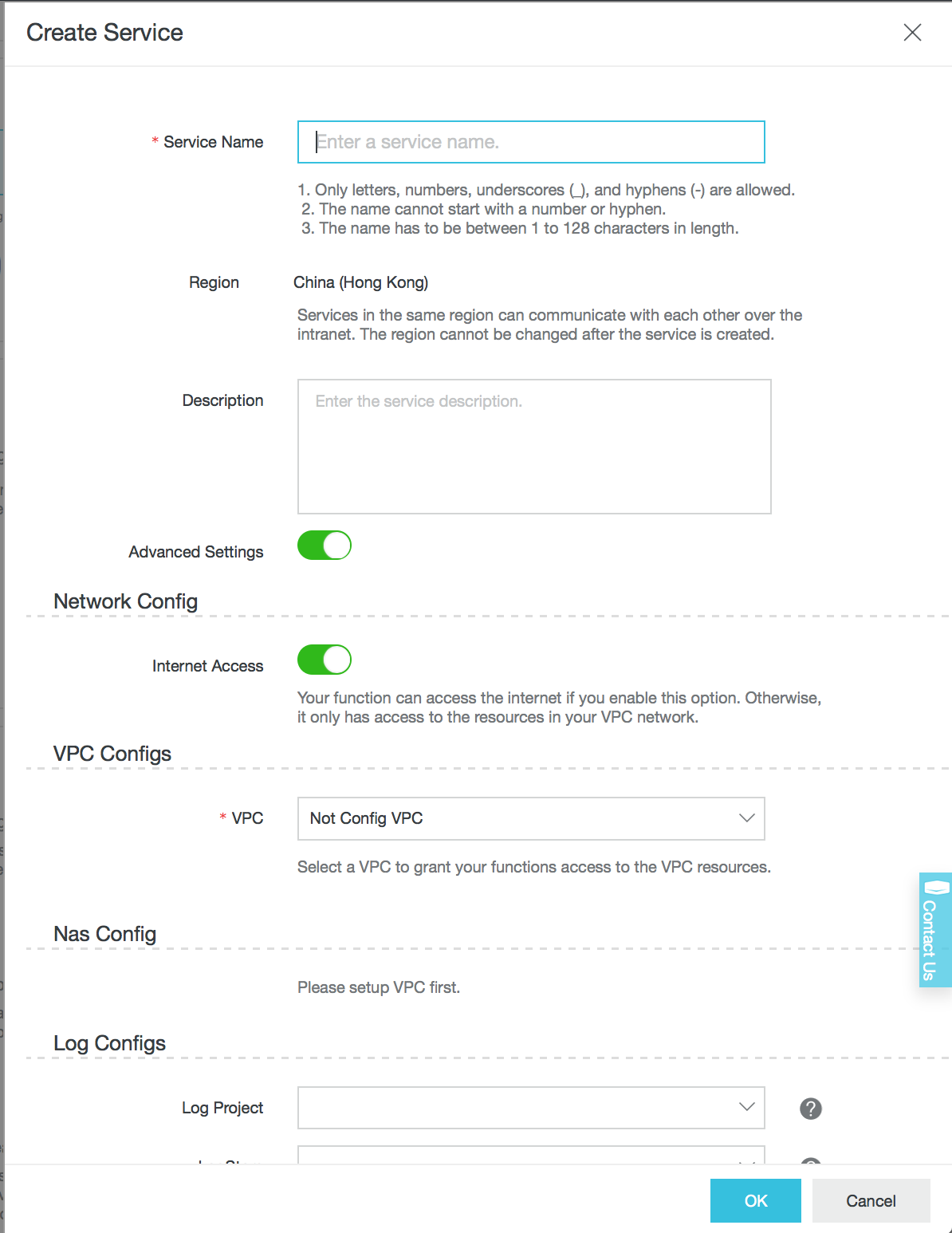

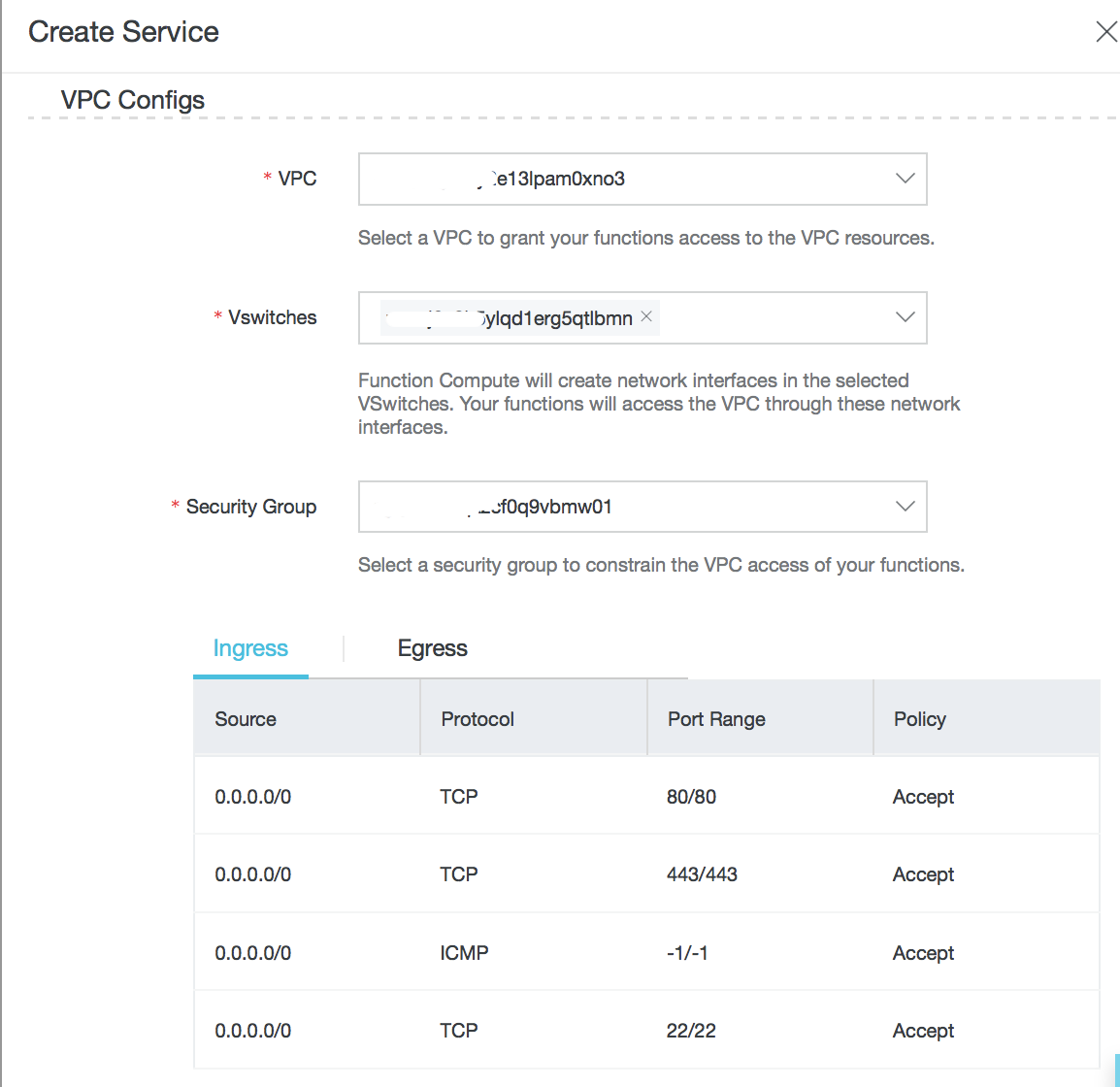

Create a service that uses a pre-created NAS file system. In this demo:

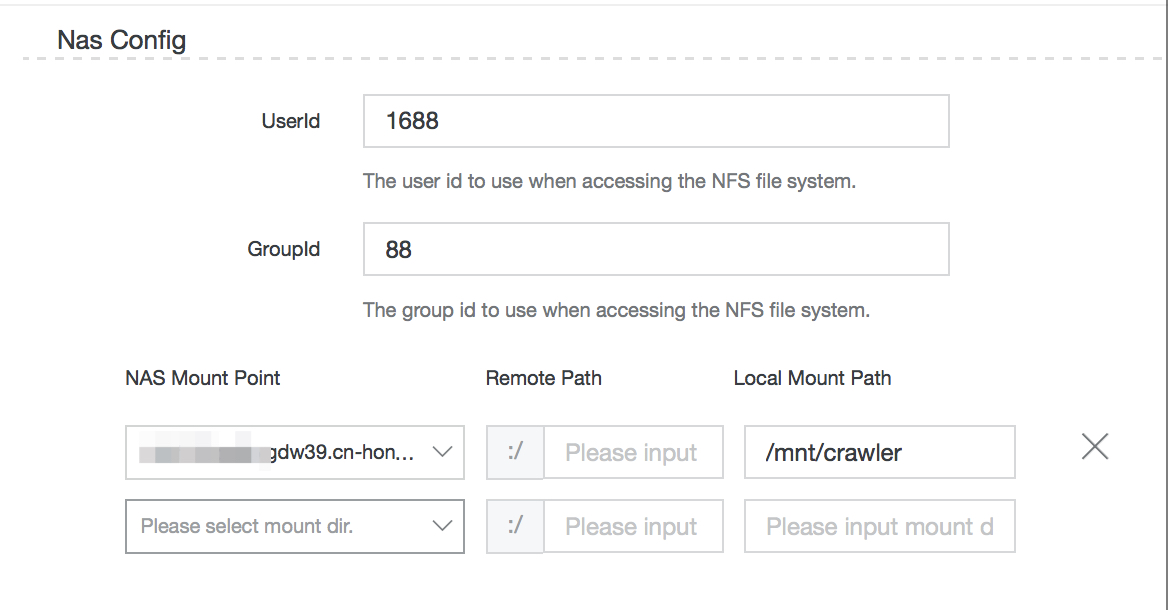

Complete the NAS Config fields as described below.

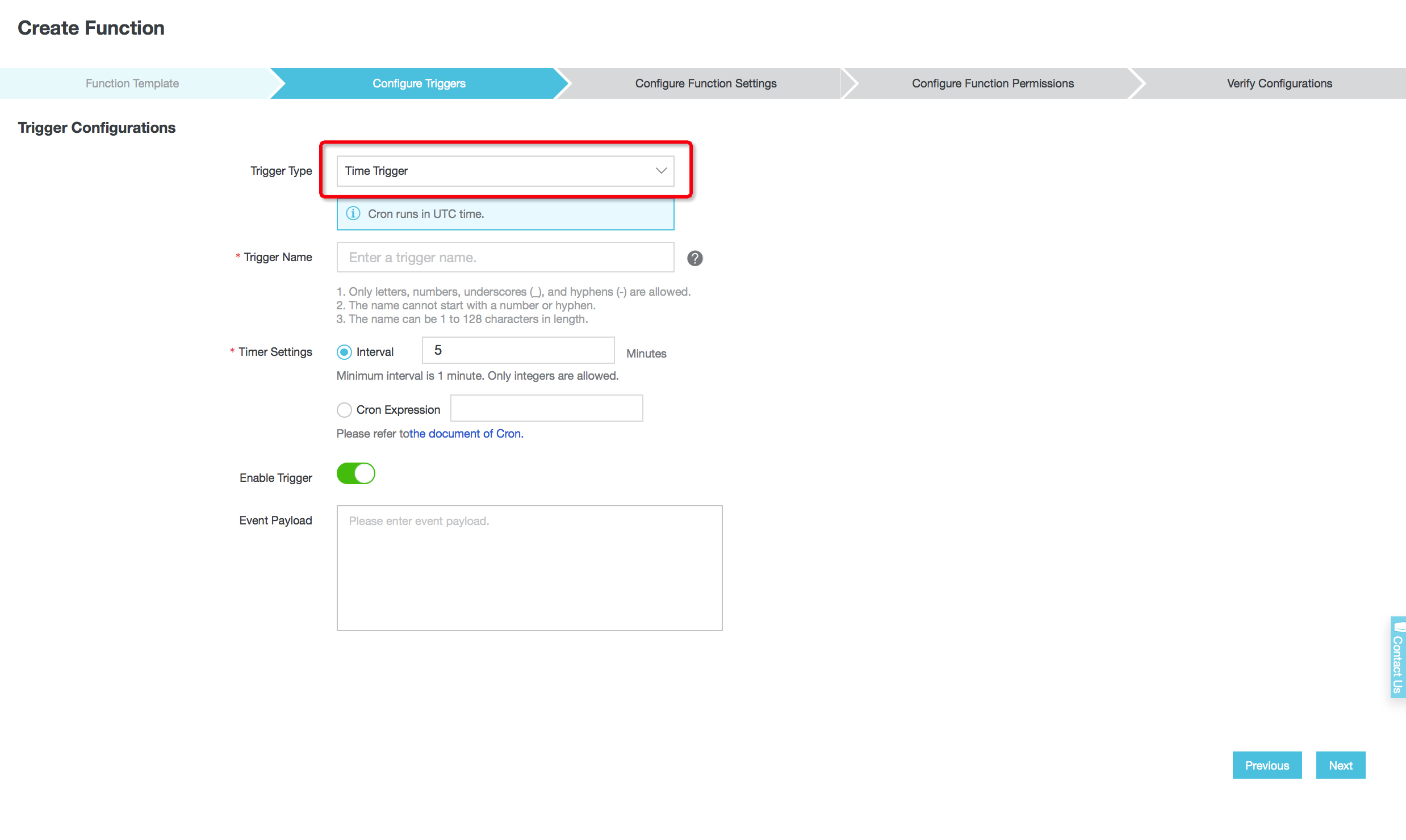

The uid/gid fields set the user and group IDs under which the function runs. They determine the owner of all the files created on the NAS file system. You can pick any user/group ID for this demo, as they are shared among all functions in this service.

Now that we have a service with NAS access, it's time to write the crawler. Since the crawler function has to run many times before it can finish, we use a time trigger to invoke it every 5 minutes.

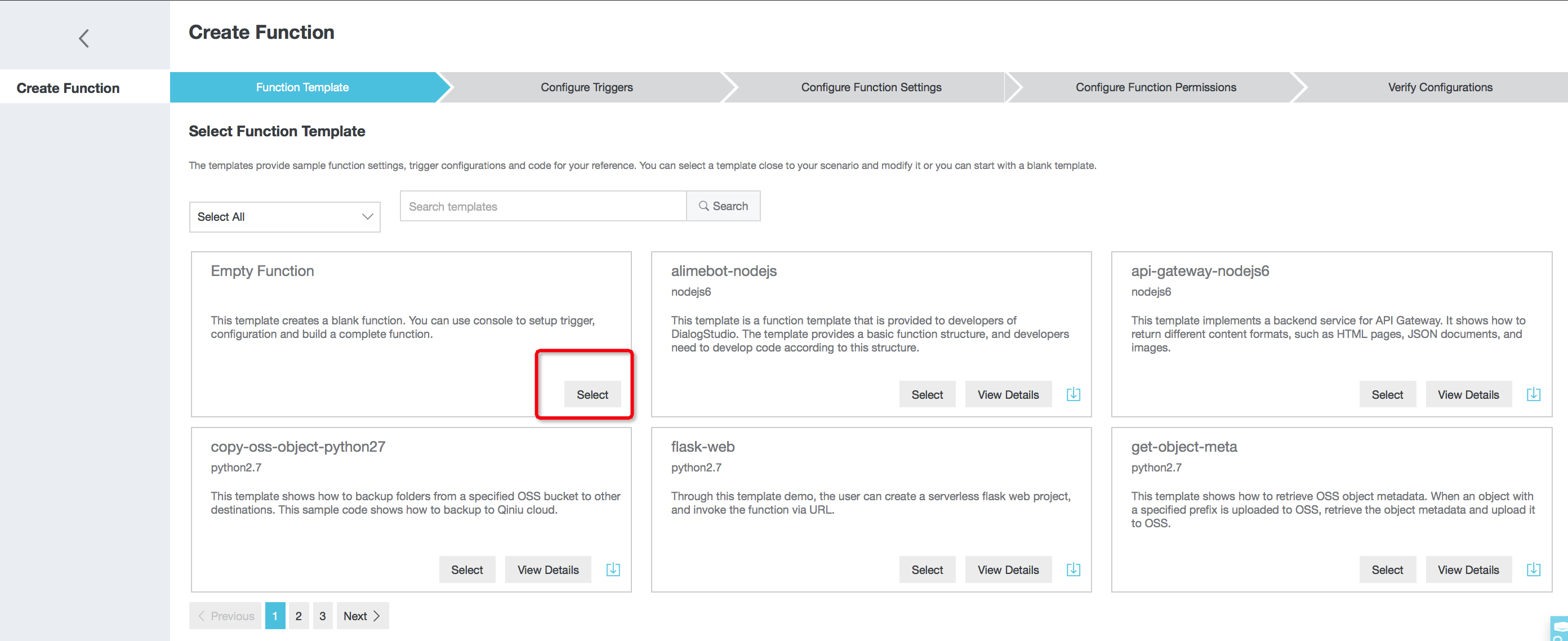

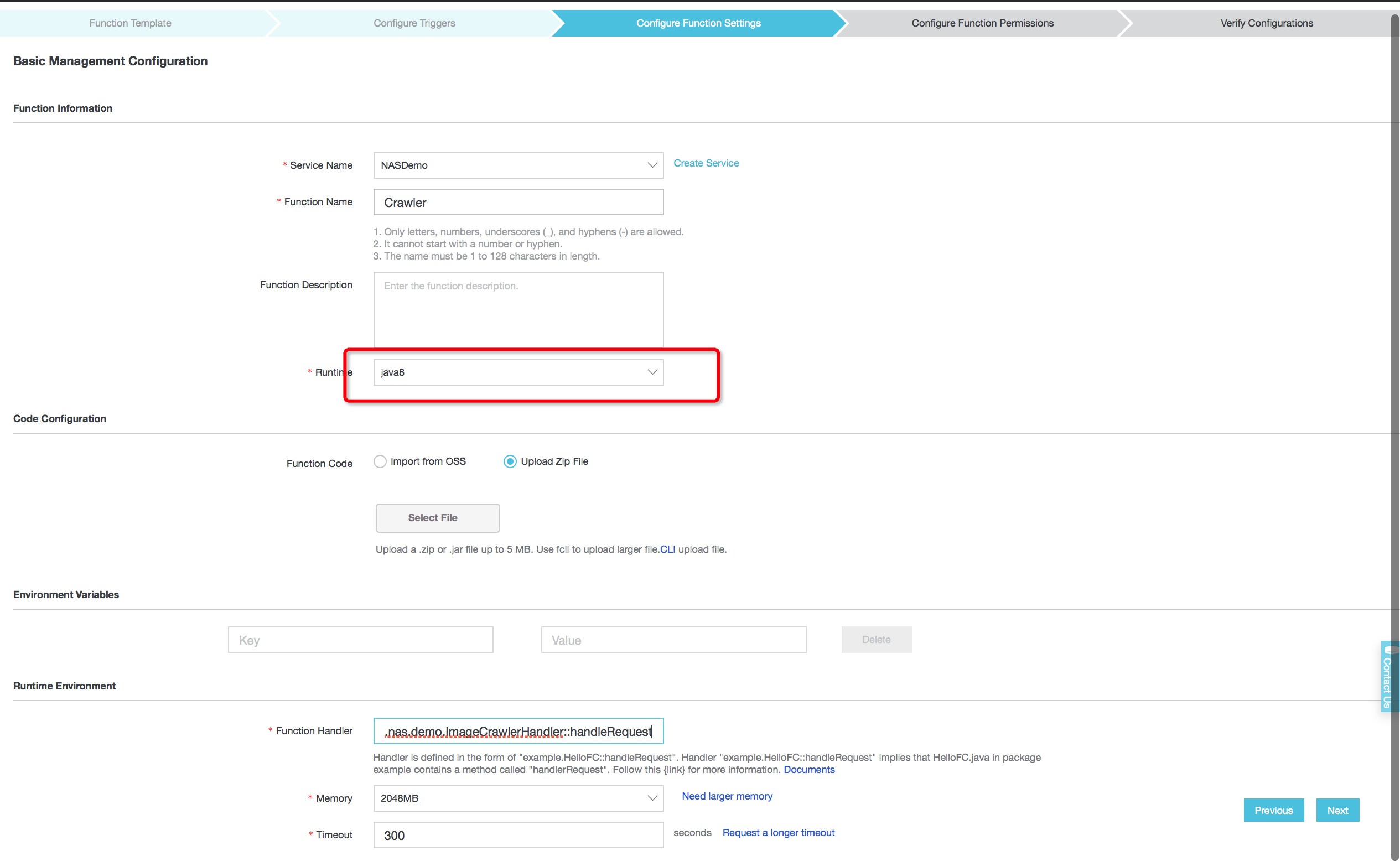

Select java8 as the runtime. Fill in the function handler, set the memory to 2048 MB and the timeout to 300 seconds, and click Next.

Now you should see the function code page. The handler logic is pretty straightforward: each invocation loads the crawl state from NAS, crawls a batch of pages, and appends its progress back to NAS.

Here is an excerpt of the Java code. Note that it reads and writes files on the NAS file system exactly as it would on a local file system.

```java
import com.aliyun.fc.runtime.Context;
import com.aliyun.fc.runtime.PojoRequestHandler;
import java.io.*;
import java.util.HashSet;

public class ImageCrawlerHandler implements PojoRequestHandler<TimedCrawlerConfig, CrawlingResult> {

    // Fields (pagesToVisit, pagesVisited, rootDir, logger, CRAWL_HISTORY,
    // CRAWL_WORKITEM, JSON_MAPPER) are omitted from this excerpt.

    private String nextUrl() {
        String nextUrl;
        // Skip pages that were already crawled; "" means the queue is exhausted.
        do {
            nextUrl = pagesToVisit.isEmpty() ? "" : pagesToVisit.remove(0);
        } while (!nextUrl.isEmpty() && pagesVisited.contains(nextUrl));
        return nextUrl;
    }

    // Reload the crawl state from NAS unless this instance already loaded it.
    private void initializePages(String rootDir) throws IOException {
        if (rootDir.equalsIgnoreCase(this.rootDir)) {
            return;
        }
        try {
            try (BufferedReader history = new BufferedReader(new FileReader(rootDir + CRAWL_HISTORY))) {
                history.lines().forEach(pagesVisited::add);
            }
            try (BufferedReader workItems = new BufferedReader(new FileReader(rootDir + CRAWL_WORKITEM))) {
                workItems.lines().forEach(pagesToVisit::add);
            }
        } catch (FileNotFoundException e) {
            // First run: no crawl state on NAS yet.
            logger.info(e.toString());
        }
        this.rootDir = rootDir;
    }

    private void saveHistory(String rootDir, String justVisitedPage, HashSet<String> newPages)
            throws IOException {
        // Append the page just crawled to the end of the crawl history file.
        try (PrintWriter pvfw = new PrintWriter(
                new BufferedWriter(new FileWriter(rootDir + CRAWL_HISTORY, true)))) {
            pvfw.println(justVisitedPage);
        }
        // Append the newly discovered work items to the end of the work-item file.
        try (PrintWriter ptfw = new PrintWriter(
                new BufferedWriter(new FileWriter(rootDir + CRAWL_WORKITEM, true)))) {
            newPages.forEach(ptfw::println);
        }
    }

    @Override
    public CrawlingResult handleRequest(TimedCrawlerConfig timedCrawlerConfig, Context context) {
        CrawlingResult crawlingResult = new CrawlingResult();
        this.logger = context.getLogger();
        CrawlerConfig crawlerConfig = null;
        try {
            // The time trigger payload is a JSON string holding the crawler config.
            crawlerConfig = JSON_MAPPER.readerFor(CrawlerConfig.class)
                    .readValue(timedCrawlerConfig.payload);
        } catch (IOException e) {
            // ...
        }
        ImageCrawler crawler = new ImageCrawler(
                crawlerConfig.rootDir, crawlerConfig.cutoffSize, crawlerConfig.debug, logger);
        int pagesCrawled = 0;
        try {
            initializePages(crawlerConfig.rootDir);
            if (pagesToVisit.isEmpty()) {
                pagesToVisit.add(crawlerConfig.url);
            }
            // Crawl at most numberOfPages pages; the next timed run resumes the rest.
            while (pagesCrawled < crawlerConfig.numberOfPages) {
                String currentUrl = nextUrl();
                if (currentUrl.isEmpty()) {
                    break;
                }
                HashSet<String> newPages = crawler.crawl(currentUrl);
                // Queue only the links that have not been visited yet.
                newPages.forEach(p -> {
                    if (!pagesVisited.contains(p)) {
                        pagesToVisit.add(p);
                    }
                });
                pagesCrawled++;
                pagesVisited.add(currentUrl);
                saveHistory(crawlerConfig.rootDir, currentUrl, newPages);
            }
            // calculate the total size of the images
            // ...
        } catch (Exception e) {
            crawlingResult.errorStack = e.toString();
        }
        crawlingResult.totalCrawlCount = pagesVisited.size();
        return crawlingResult;
    }
}
```

```java
import java.io.IOException;
import java.util.HashSet;
import org.jsoup.Connection;
import org.jsoup.Jsoup;
import org.jsoup.nodes.Document;
import org.jsoup.nodes.Element;
import org.jsoup.select.Elements;

public class ImageCrawler {

    // ...

    // Download every image on the page and return the absolute URLs of its links.
    public HashSet<String> crawl(String url) {
        links.clear();
        try {
            Connection connection = Jsoup.connect(url).userAgent(USER_AGENT);
            Document htmlDocument = connection.get();
            Elements media = htmlDocument.select("[src]");
            for (Element src : media) {
                if (src.tagName().equals("img")) {
                    // "abs:src" resolves a relative image URL against the page URL.
                    downloadImage(src.attr("abs:src"));
                }
            }
            Elements linksOnPage = htmlDocument.select("a[href]");
            for (Element link : linksOnPage) {
                logDebug("Plan to crawl `" + link.absUrl("href") + "`");
                this.links.add(link.absUrl("href"));
            }
        } catch (IOException ioe) {
            // ...
        }
        return links;
    }
}
```

For the sake of simplicity, we have omitted some details and other helper classes. You can get all the code from the awesome-fc GitHub repository if you would like to run it and download images from your favorite websites.
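For readers more at home in Python, the extraction step of ImageCrawler.crawl (collect image sources and outgoing links as absolute URLs) can be approximated with only the standard library. This is a hypothetical sketch, not the project's code: html.parser stands in for Jsoup, urljoin plays the role of Jsoup's abs:src / absUrl resolution, and the class name LinkAndImageParser is made up for illustration.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkAndImageParser(HTMLParser):
    """Collect absolute <img src> and <a href> URLs from one HTML page
    (hypothetical helper mirroring what ImageCrawler.crawl extracts)."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.images = []     # image URLs to download
        self.links = set()   # pages to crawl next

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "img" and attrs.get("src"):
            # Resolve relative paths against the page URL, like abs:src.
            self.images.append(urljoin(self.base_url, attrs["src"]))
        elif tag == "a" and attrs.get("href"):
            self.links.add(urljoin(self.base_url, attrs["href"]))


page = '<a href="/about">About</a><img src="logo.png"><a href="https://example.org/">x</a>'
parser = LinkAndImageParser("https://example.com/blog/")
parser.feed(page)
print(parser.images)         # ['https://example.com/blog/logo.png']
print(sorted(parser.links))  # ['https://example.com/about', 'https://example.org/']
```

Returning the links as a set, as the Java version does with HashSet, removes duplicate links on the same page before they reach the work queue.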

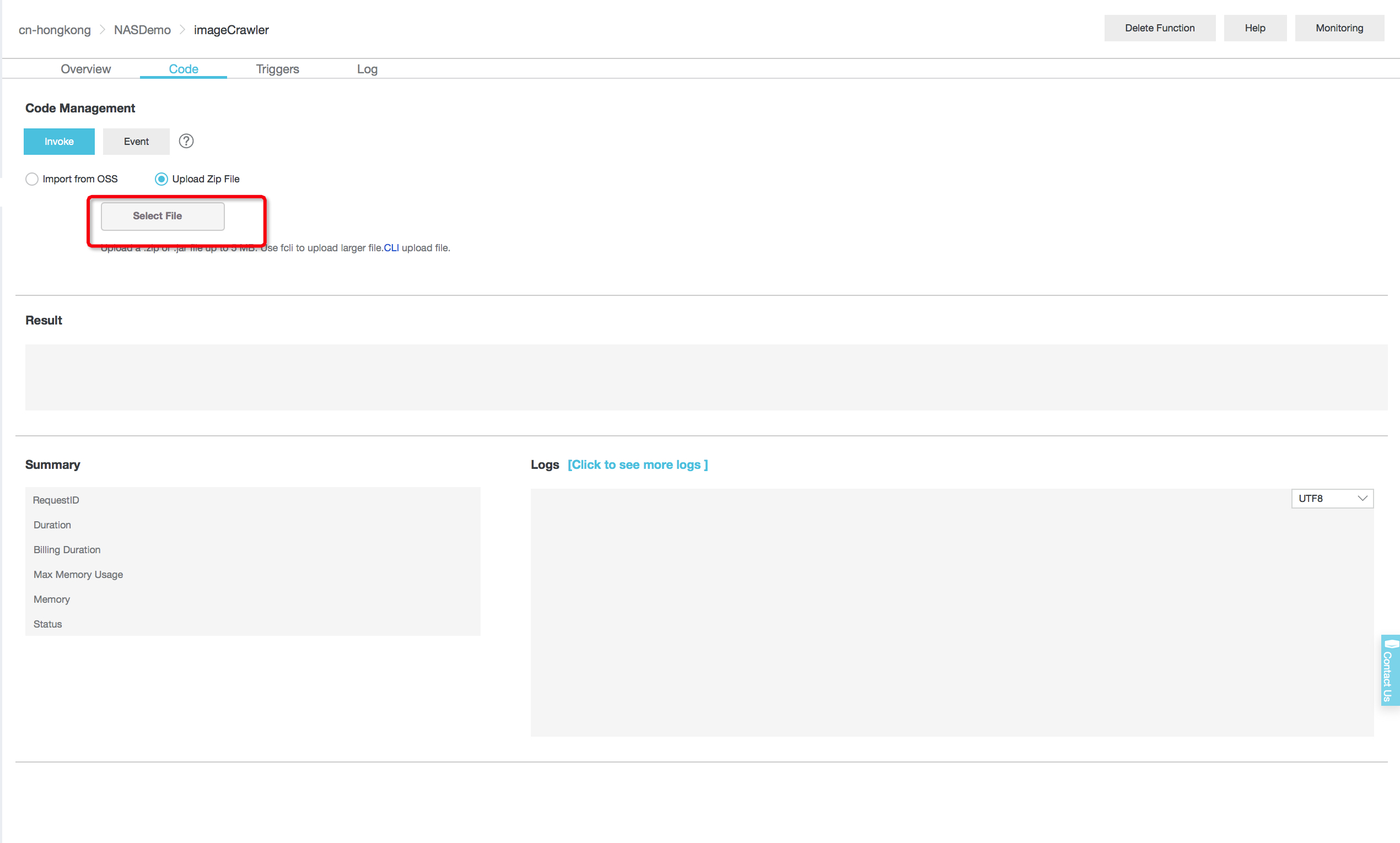

Now that we have written the code, we need to run it. Here are the steps.

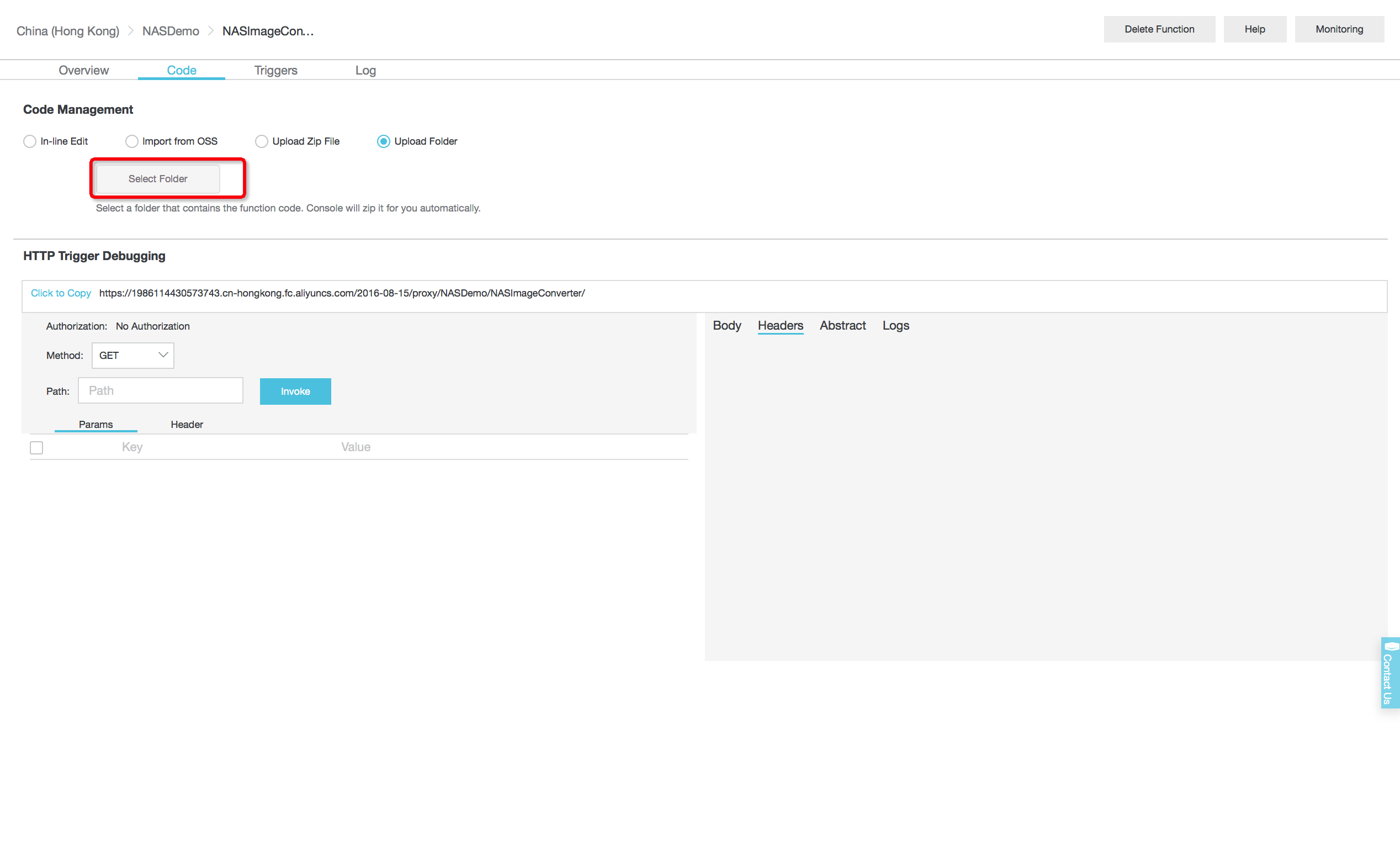

Run mvn clean package to build the project. Then go to the Code tab in the function page and upload, through the console, the JAR file created in the previous step (the one whose name ends with dependencies).

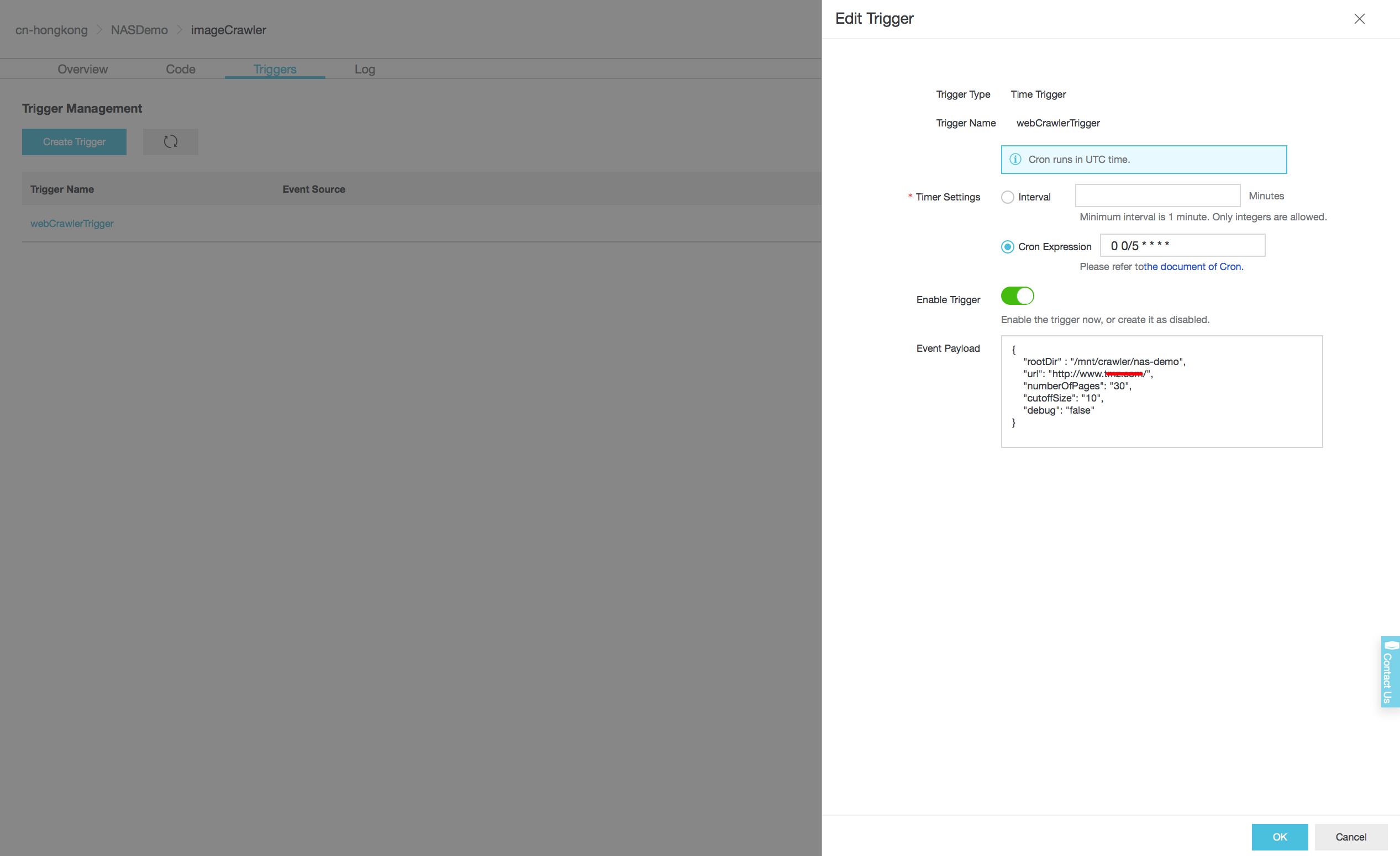

Go to the Triggers tab in the function page. Click the time trigger link and enter the event in JSON format. The JSON event is deserialized into the crawler config and passed to the function. Click OK.
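The handler reads the crawler settings from timedCrawlerConfig.payload and parses that string a second time with Jackson, so the settings travel as JSON text embedded in the trigger event. A minimal Python sketch of that round trip follows, assuming the text you enter becomes the event's payload string. The field names mirror the CrawlerConfig fields used by the Java handler; every value, including the unit of cutoffSize, is a hypothetical placeholder.

```python
import json

# Hypothetical crawler settings. Field names mirror the CrawlerConfig fields
# read by ImageCrawlerHandler; the values are placeholders only.
crawler_config = {
    "url": "https://example.com/",
    "numberOfPages": 20,
    "rootDir": "/mnt/crawler/",  # assumed to point at the NAS mount directory
    "cutoffSize": 10240,
    "debug": False,
}

# The text entered for the time trigger: the config serialized as JSON.
payload = json.dumps(crawler_config)

# Assumed event shape: the payload arrives as a *string* field, so it is
# JSON text embedded inside the JSON event.
event = json.dumps({"payload": payload})

# What the handler does, expressed in Python: parse the event, then parse
# the payload string into the config.
decoded = json.loads(json.loads(event)["payload"])
print(decoded["numberOfPages"])  # 20
```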

Go to the Log tab to search for the crawler execution logs.

The second feature that we introduce allows anyone to send an HTTP request to trigger a function execution directly.

Now that we have a file system filled with images downloaded from the web, we want a way to serve those images through a web service. The traditional way is to mount the NAS file system on a VM and run a web server on it. This wastes resources when the service is lightly used and does not scale when traffic is heavy. Instead, you can write a serverless function that reads the images stored on the NAS file system and serves them through an HTTP endpoint. This way, you enjoy the instant scalability that Function Compute provides while paying only for actual usage.

This demo shows how to write an Image Processing Service.

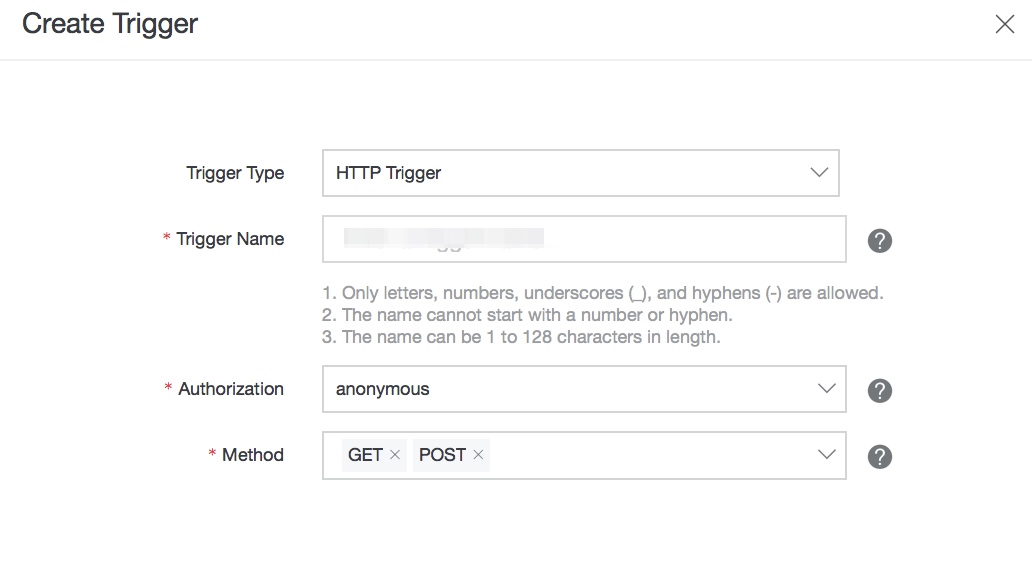

Choose GET and POST as the invoke methods and click Next.

Function Compute's Python runtime comes with many built-in modules. In this example, we use both OpenCV and Wand to transform images.

Even with an image processing function, we still need to set up a website to serve the requests. Normally, one needs another service, such as API Gateway, to handle HTTP requests. In this demo, we instead use the Function Compute HTTP trigger feature, which lets an HTTP request trigger a function execution directly. With the HTTP trigger, the headers, path, and query string of the HTTP request are all passed directly to the function handler, and the function can return HTML content dynamically.
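To make the request flow concrete, here is a minimal, self-contained sketch of a WSGI-style handler with the same handler(environ, start_response) shape that the image service uses. It maps the request path onto the NAS mount and reads the query parameters, then simply echoes what it would do. The path check, the plain-text responses, and the helper name resolve_nas_path are illustrative additions, not part of the original service.

```python
import posixpath
from urllib.parse import parse_qs

# Mount directory used by the image service excerpt in this guide.
NASROOT = "/mnt/crawler"


def resolve_nas_path(path_info):
    """Map the request path onto the NAS mount, refusing '..' escapes."""
    candidate = posixpath.normpath(posixpath.join(NASROOT, path_info.lstrip("/")))
    if posixpath.commonpath([NASROOT, candidate]) != NASROOT:
        raise ValueError("path escapes the NAS root: " + path_info)
    return candidate


def handler(environ, start_response):
    # The HTTP trigger passes the request path and query string in environ.
    path = environ.get("PATH_INFO", "/")
    query = {k: v[0] for k, v in parse_qs(environ.get("QUERY_STRING", "")).items()}
    try:
        file_name = resolve_nas_path(path)
    except ValueError:
        start_response("400 Bad Request", [("Content-Type", "text/plain")])
        return [b"bad path"]
    # A real handler would load and transform file_name here.
    body = "{} {}".format(query.get("action", "show"), file_name)
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [body.encode("utf-8")]
```

Because the path comes straight from the request, normalizing it and checking that it stays under the mount directory keeps a request such as /../etc/passwd from reading files outside the NAS share.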

With these two features, the handler code is surprisingly straightforward. At a high level, the handler reads the request path and query string from the environ variable, then applies the operation named by the action query parameter.

Here is an excerpt of the handler logic. Note that Wand loads the image stored on NAS just like a normal file on the local file system.

```python
# Written for the Python 3 runtime.
import base64
import logging
from urllib.parse import parse_qs

import cv2
from wand.image import Image

with open('/code/index.html') as f:
    TEMPLATE = f.read()

NASROOT = '/mnt/crawler'

face_cascade = cv2.CascadeClassifier('/usr/share/opencv/lbpcascades/lbpcascade_frontalface.xml')


def handler(environ, start_response):
    logger = logging.getLogger()
    context = environ['fc.context']
    path = environ.get('PATH_INFO', "/")
    # Map the request path onto the NAS mount, e.g. /site/cat.png -> /mnt/crawler/site/cat.png
    fileName = NASROOT + path

    # Parse the query string, e.g. "action=rotate&angle=90", into a dict
    query_string = environ.get('QUERY_STRING', '')
    logger.info(query_string)
    query_dist = {k: v[0] for k, v in parse_qs(query_string).items()}
    action = query_dist.get('action')

    if action == "show":
        with Image(filename=fileName) as fc_img:
            img_enc = base64.b64encode(fc_img.make_blob(format='png'))
    elif action == "facedetect":
        img = cv2.imread(fileName)
        gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
        faces = face_cascade.detectMultiScale(gray, 1.03, 5)
        # Draw a red rectangle (BGR order) around each detected face
        for (x, y, w, h) in faces:
            cv2.rectangle(img, (x, y), (x + w, y + h), (0, 0, 255), 1)
        cv2.imwrite("/tmp/dst.png", img)
        with open("/tmp/dst.png", "rb") as img_obj:
            with Image(file=img_obj) as fc_img:
                img_enc = base64.b64encode(fc_img.make_blob(format='png'))
    elif action == "rotate":
        assert 'angle' in query_dist
        angle = query_dist['angle']
        logger.info("Rotate " + angle)
        with Image(filename=fileName) as fc_img:
            fc_img.rotate(float(angle))
            img_enc = base64.b64encode(fc_img.make_blob(format='png'))
    else:
        # demo, mixed operation
        ...

    status = '200 OK'
    response_headers = [('Content-type', 'text/html')]
    start_response(status, response_headers)
    # b64encode returns bytes: decode it for the template, then encode the
    # page, because WSGI response bodies must be bytes
    return [TEMPLATE.replace('{fc-py}', img_enc.decode('ascii')).encode('utf-8')]
```
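The handler returns the transformed image as base64 text substituted into the {fc-py} placeholder of index.html. The template itself is not shown in this guide; the sketch below assumes, hypothetically, that it embeds the image as a data URI, and shows why the base64 bytes must be decoded to text before substitution.

```python
import base64

# Hypothetical template: the real /code/index.html is not shown in this
# guide; here it is assumed to embed the image as a data URI at {fc-py}.
TEMPLATE = '<html><body><img src="data:image/png;base64,{fc-py}"></body></html>'


def render(png_bytes):
    # b64encode returns bytes; decode to ASCII text before substituting,
    # then encode the finished page for the WSGI response body.
    img_enc = base64.b64encode(png_bytes).decode("ascii")
    return TEMPLATE.replace("{fc-py}", img_enc).encode("utf-8")


# The first bytes of the PNG signature stand in for a real image here.
page = render(b"\x89PNG\r\n")
```

Embedding the image inline means a single response carries both the HTML and the image, so the function does not need a second endpoint to serve the PNG.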

Now that the function and the HTTP trigger are ready, we can try image rotation or a more advanced transformation such as face detection.
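To try it, send GET requests whose path names an image on NAS and whose query string selects an action (show, facedetect, or rotate with an angle). The sketch below only builds such URLs. BASE_URL is a hypothetical placeholder modeled on the proxy-style HTTP trigger address; copy the real endpoint from the trigger page in the console, since the account ID, region, service, and function names here are all made up.

```python
from urllib.parse import quote, urlencode

# Hypothetical endpoint: replace with the URL shown on the HTTP trigger page.
BASE_URL = "https://123456789.cn-shanghai.fc.aliyuncs.com/2016-08-15/proxy/demo-service/image-service"


def image_url(image_path, action, **params):
    """Build a request URL for one image on NAS and one transformation."""
    query = urlencode({"action": action, **params})
    return "{}/{}?{}".format(BASE_URL, quote(image_path.lstrip("/")), query)


rotate_url = image_url("/site/cat.png", "rotate", angle=90)
faces_url = image_url("/site/team.jpg", "facedetect")
print(rotate_url)
print(faces_url)
```

Opening either URL in a browser should return the HTML page produced by the handler, with the transformed image embedded.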
