By Zhang Zuowei (Youyi)

In the cloud-native era, application workloads are deployed on hosts as containers and share the host's physical resources. As host hardware becomes more powerful, the container deployment density on a single node increases. The resulting problems, such as CPU contention between processes and cross-NUMA memory access, become more serious and hurt application performance. How to allocate and manage host CPU resources so that applications obtain the best possible service quality is a key measure of a container service's technical capability.

Kubernetes describes container resource management through the semantics of requests and limits. When a container specifies a request, the scheduler uses it to decide which node the Pod should be assigned to. When a container specifies a limit, Kubelet ensures that the container does not exceed it at run time.

The CPU is a typical time-division multiplexed resource. The kernel scheduler divides CPU time into slices and allocates a certain amount of run time to each process in turn. Kubelet's default CPU management policy uses the CFS bandwidth controller of the Linux kernel to cap the CPU usage of a container. On multi-core nodes, processes are often migrated between cores while they run. Because the performance of some applications is sensitive to CPU context switching, Kubelet also provides a static policy that allows Guaranteed Pods to use CPU cores exclusively.
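The Guaranteed type mentioned above is one of the three Kubernetes QoS classes. The following is a minimal sketch of how the class can be derived from requests and limits, and of when the static policy grants exclusive cores (Guaranteed Pods whose container requests a whole number of CPUs). The function names and the dictionary layout (CPU in millicores, memory in bytes) are assumptions made for illustration, not Kubelet code.

```python
def qos_class(containers):
    """Classify a Pod from its containers' requests and limits.

    Each container is a dict with optional "requests" and "limits" mappings,
    for example {"limits": {"cpu": 2000, "memory": 1 << 30}}.
    """
    any_set = False
    guaranteed = True
    for container in containers:
        limits = container.get("limits", {})
        # A missing request defaults to the limit when a limit is given.
        requests = {**limits, **container.get("requests", {})}
        if requests or limits:
            any_set = True
        for resource in ("cpu", "memory"):
            if resource not in limits or requests.get(resource) != limits[resource]:
                guaranteed = False
    if not any_set:
        return "BestEffort"
    return "Guaranteed" if guaranteed else "Burstable"


def exclusive_cores(containers, container):
    """Number of cores the static policy would reserve for one container."""
    cpu_milli = container.get("limits", {}).get("cpu", 0)
    if qos_class(containers) == "Guaranteed" and cpu_milli % 1000 == 0:
        return cpu_milli // 1000
    return 0  # the container runs in the shared pool instead
```

A container with equal CPU and memory requests and limits and a limit of 2000 millicores would get two exclusive cores in this sketch, while a limit of 1500 millicores would leave it in the shared pool.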

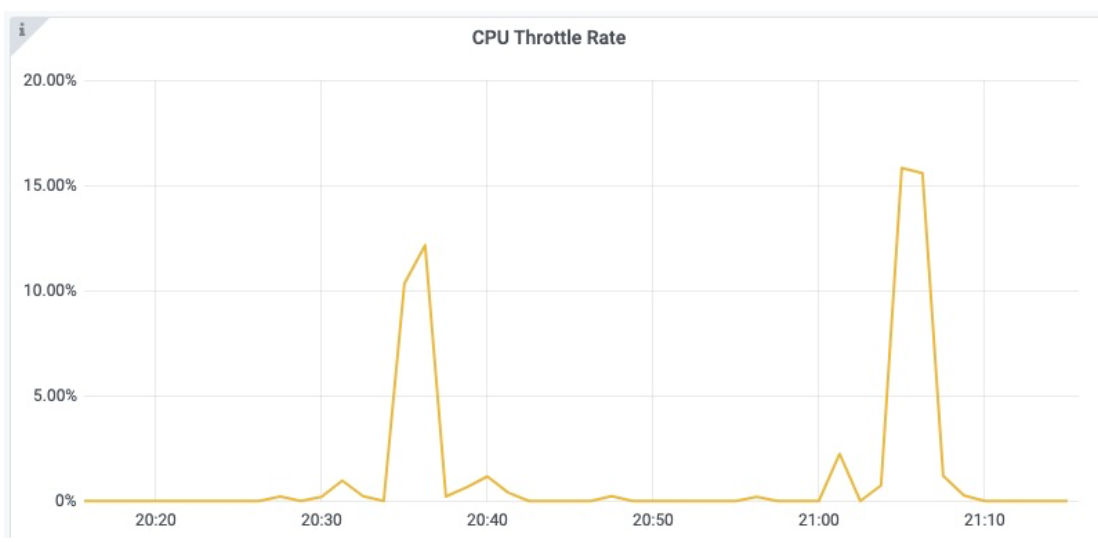

Kernel CFS scheduling uses the cfs_period and cfs_quota parameters to manage how much CPU time a container consumes. cfs_period is a fixed value of 100 ms, and cfs_quota corresponds to the CPU limit of the container. For example, for a container whose CPU limit is set to 2, cfs_quota is set to 200 ms, which means the container can use up to 200 ms of CPU time in every 100 ms period, the equivalent of two CPU cores. When its CPU usage exceeds this limit, the processes in the container are throttled by the kernel scheduler. Careful application administrators often observe this behavior in the CPU Throttle Rate metric in cluster Pod monitoring.
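The arithmetic above, and one way a throttle rate can be derived, can be sketched as follows. The function names are hypothetical. The counters `nr_periods` and `nr_throttled` are the ones the kernel reports in a cgroup's `cpu.stat` file; the sample values in the usage lines are made up.

```python
def cfs_quota_us(cpu_limit_cores, period_us=100_000):
    """Quota in microseconds for a CPU limit, given a 100 ms period."""
    return round(cpu_limit_cores * period_us)


def parse_cpu_stat(text):
    """Parse 'key value' lines, as found in a cgroup cpu.stat file."""
    stats = {}
    for line in text.splitlines():
        parts = line.split()
        if len(parts) == 2:
            stats[parts[0]] = int(parts[1])
    return stats


def throttle_ratio(before, after):
    """Share of scheduling periods in which the cgroup was throttled,
    computed between two cpu.stat snapshots."""
    periods = after["nr_periods"] - before["nr_periods"]
    throttled = after["nr_throttled"] - before["nr_throttled"]
    return throttled / periods if periods > 0 else 0.0


# Made-up snapshots taken ten seconds apart (100 periods of 100 ms).
before = parse_cpu_stat("nr_periods 1000\nnr_throttled 50")
after = parse_cpu_stat("nr_periods 1100\nnr_throttled 75")
print(cfs_quota_us(2))                # 200000
print(throttle_ratio(before, after))  # 0.25
```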

What puzzles application administrators is why application performance degrades so often even though the resource utilization of their containers is not high. From the perspective of CPU resources, the problem has two causes. The first is CPU throttling, which occurs because the kernel limits container resource consumption according to the CPU limit. The second is CPU topology: some applications are sensitive to processes being switched between CPUs, especially when cross-NUMA access occurs.

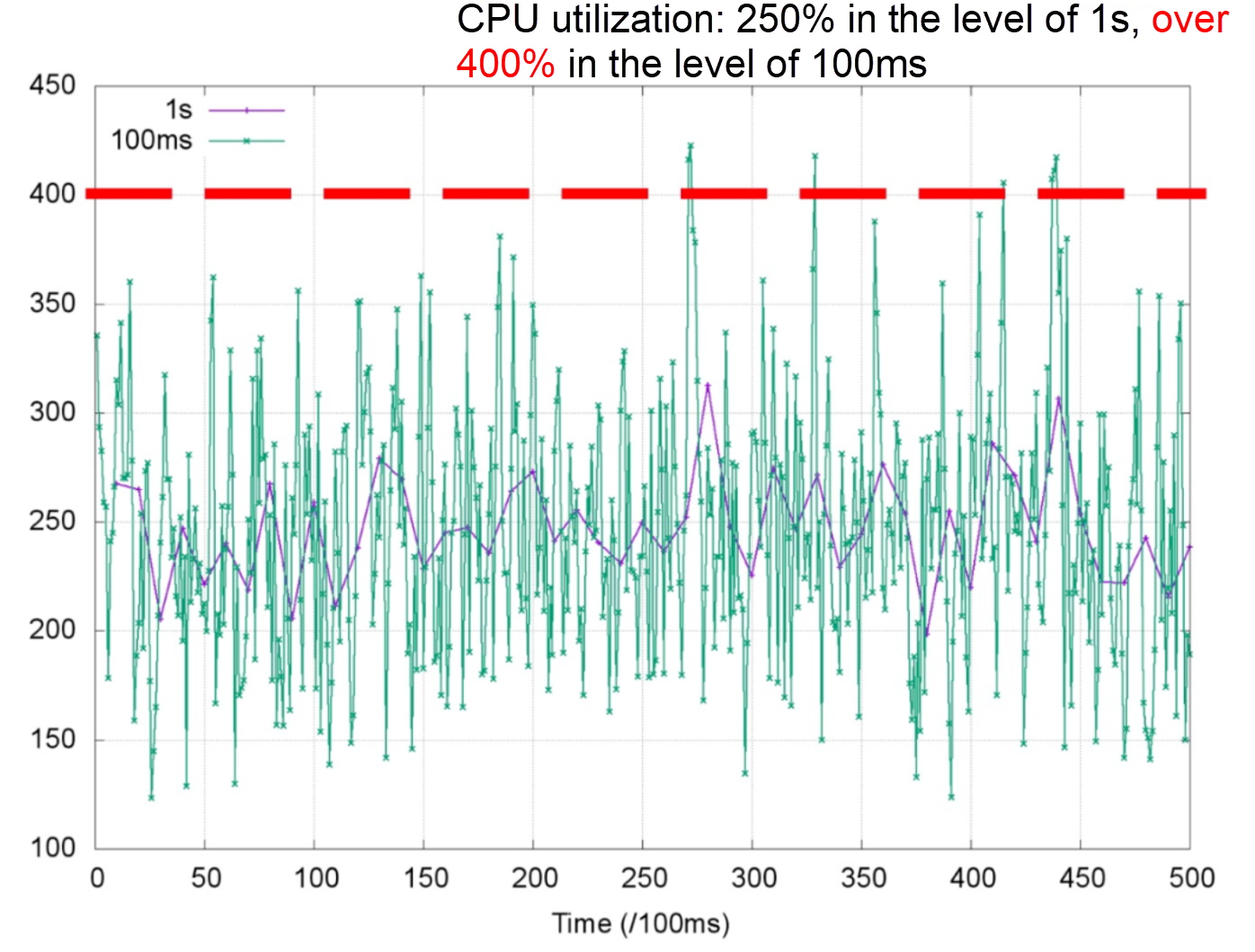

Because of the kernel scheduling control period (cfs_period), the CPU utilization of a container is often deceptive. The following figure shows the CPU usage of a container over a period of time (in units of 0.01 cores). At a granularity of 1 s (purple polyline in the figure), the CPU usage of the container is stable, with an average of about 2.5 cores. Based on experience, an administrator would set the CPU limit to 4 cores and assume that this leaves enough headroom. However, if we refine the observation granularity to 100 ms (green polyline in the figure), the CPU usage shows serious glitches, with peaks of more than 4 cores. As a result, the container is throttled frequently, which leads to application performance degradation and RT jitter. Yet these CPU throttles cannot be seen in the common CPU utilization metrics.
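A small sketch of why the coarse average hides the problem: the same series of per-100 ms CPU demand is summarized once as a 1 s average and once by counting the 100 ms periods that exceed the limit. The sample values and function names are invented for illustration and are not the data behind the figure.

```python
def one_second_averages(samples_cores, window=10):
    """Average per-100 ms samples (in cores) into 1 s buckets."""
    return [
        sum(samples_cores[i:i + window]) / len(samples_cores[i:i + window])
        for i in range(0, len(samples_cores), window)
    ]


def periods_over_limit(samples_cores, limit_cores):
    """Count the 100 ms periods whose demand exceeds the CPU limit."""
    return sum(1 for s in samples_cores if s > limit_cores)


# Eight quiet periods and two bursts within one second (made-up numbers).
demand = [2.0] * 8 + [4.5] * 2
print(one_second_averages(demand))    # [2.5]
print(periods_over_limit(demand, 4))  # 2
```

In this made-up series the 1 s view reports 2.5 cores, comfortably below a 4-core limit, while two of the ten scheduling periods would still hit the limit.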

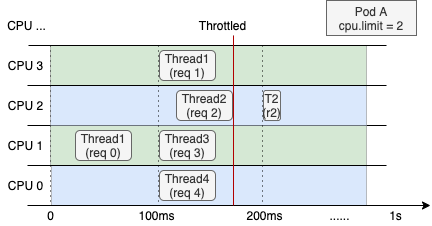

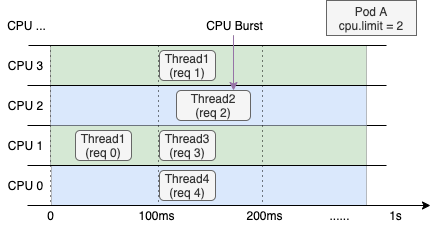

Glitches are usually caused by sudden CPU demand, such as code hotspots and traffic spikes. The following example describes how CPU throttling degrades application performance. The figure shows how requests are allocated to the threads of a web service container whose CPU limit is set to 2. Assume that each request takes 60 ms to process. Even if the overall CPU utilization of the container is low, the time slice budget (200 ms) of one kernel scheduling period is completely consumed because four requests are processed in the interval from 100 ms to 200 ms. Thread 2 must wait for the next period to continue processing req 2, so the response time (RT) of that request becomes longer. This happens more easily as the application load rises, which makes the long tail of its RT worse.
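The effect can be reproduced with a small simulation. This is a simplified model, not the exact scenario in the figure: it assumes that all four requests arrive together at the 100 ms mark, that each request runs on its own thread and core, and that unused quota is not carried over between periods. The function name and the 1 ms tick are illustrative choices.

```python
def simulate_response_times(arrivals_ms, work_ms, quota_ms, period_ms=100):
    """Return the response time of each request under a CFS-style quota."""
    remaining = [work_ms] * len(arrivals_ms)
    finish = [None] * len(arrivals_ms)
    budget = 0
    t = 0
    while any(f is None for f in finish):
        if t % period_ms == 0:
            budget = quota_ms  # refill at every period boundary
        for i, arrival in enumerate(arrivals_ms):
            # A thread runs for 1 ms only if the shared budget is not used up.
            if finish[i] is None and arrival <= t and budget > 0:
                remaining[i] -= 1
                budget -= 1
                if remaining[i] == 0:
                    finish[i] = t + 1
        t += 1
    return [f - a for f, a in zip(finish, arrivals_ms)]


# CPU limit 2 (200 ms quota): the budget runs out after 50 ms of wall time.
print(simulate_response_times([100] * 4, 60, 200))  # [110, 110, 110, 110]
# A quota large enough for all four requests: no throttling.
print(simulate_response_times([100] * 4, 60, 400))  # [60, 60, 60, 60]
```

Under these assumptions each request needs 60 ms of CPU time but takes 110 ms to complete, because the last 10 ms of work has to wait for the next period.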

Without other mechanisms, the only way to avoid CPU throttling is to raise the CPU limit of the container. However, eliminating throttling completely usually means raising the CPU limit to two or three times its original value (sometimes even five to ten times). To reduce the risk of overselling CPU limits, the deployment density of containers must also be lowered, which increases the overall resource cost.

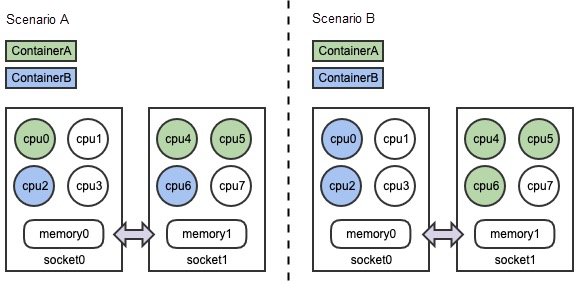

Under the NUMA architecture, the CPUs and memory of a node are divided into two or more parts (Socket0 and Socket1 in the figure). A CPU accesses different parts of the memory at different speeds: when it accesses memory attached to another socket, the memory access latency is higher. Blindly allocating physical resources to containers on a node may therefore reduce the performance of latency-sensitive applications. To improve memory access locality, we should avoid scattering the CPUs bound to a container across multiple sockets. As shown in the following figure, in both scenarios CPU and memory resources are allocated to two containers, but the allocation strategy in scenario B is more reasonable.
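The idea of keeping a container's CPUs on one socket can be sketched as a small selection function. This is an illustrative best-fit heuristic chosen for the example, not the algorithm used by Kubelet or ACK; the function name and the socket-to-CPU mapping are assumptions.

```python
def pick_cpus(free_cpus_by_socket, count):
    """Choose `count` CPU ids, preferring a single socket.

    free_cpus_by_socket maps a socket id to the list of free CPU ids on it.
    """
    # Sockets that can hold the whole container, smallest free pool first,
    # so that larger pools stay available for larger containers.
    fitting = [
        (len(cpus), socket)
        for socket, cpus in free_cpus_by_socket.items()
        if len(cpus) >= count
    ]
    if fitting:
        _, socket = min(fitting)
        return sorted(free_cpus_by_socket[socket])[:count]

    # No single socket fits: spill across sockets, largest pool first.
    chosen = []
    by_size = sorted(free_cpus_by_socket,
                     key=lambda s: len(free_cpus_by_socket[s]), reverse=True)
    for socket in by_size:
        need = count - len(chosen)
        chosen.extend(sorted(free_cpus_by_socket[socket])[:need])
    if len(chosen) < count:
        raise ValueError("not enough free CPUs on this node")
    return chosen


free = {0: [0, 1, 2, 3], 1: [4, 5]}
print(pick_cpus(free, 2))  # [4, 5]
print(pick_cpus(free, 5))  # [0, 1, 2, 3, 4]
```

A real allocator would also have to account for memory placement, hyper-threading siblings, and cache domains, which this sketch ignores.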

The static CPU management policy and the single-numa-node topology management policy provided by Kubelet bind a container to specific CPUs, which improves the affinity between the application workload, the CPU cache, and NUMA. However, the following example shows whether this solves all CPU-related performance problems.

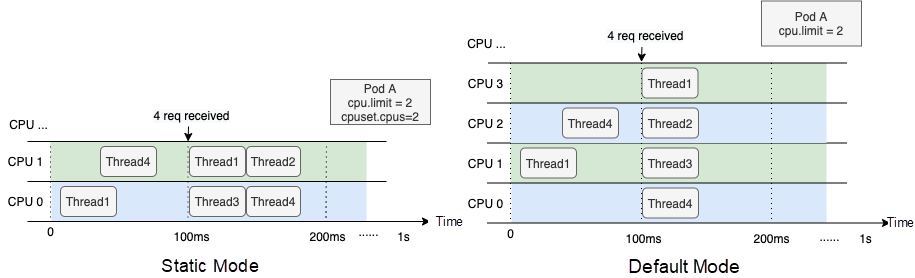

Consider a container whose CPU limit is 2 and whose application receives four requests at the 100 ms mark. In the static mode provided by Kubelet, the container is pinned to two cores, CPU0 and CPU1, and the threads must queue to run on them. In default mode, the container has more CPU flexibility, and each thread can start processing its request as soon as it arrives. The core-binding strategy is not a silver bullet, and the default mode also has its application scenarios.
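The difference between the two modes can be sketched with a tiny queueing model. The 40 ms request duration is a hypothetical value (the text does not give one), the function names are illustrative, and the default-mode function only covers the case where the total demand fits within one period's quota.

```python
import heapq


def pinned_finish_times(durations_ms, cores):
    """Static mode: requests queue on a fixed set of cores."""
    free_at = [0] * cores  # time at which each pinned core becomes free
    heapq.heapify(free_at)
    finishes = []
    for duration in durations_ms:
        start = heapq.heappop(free_at)  # earliest available pinned core
        end = start + duration
        heapq.heappush(free_at, end)
        finishes.append(end)
    return finishes


def unpinned_finish_times(durations_ms, quota_ms):
    """Default mode: every thread runs at once on any idle core.

    Throttling is not modeled here, so the demand must fit in the quota.
    """
    if sum(durations_ms) > quota_ms:
        raise ValueError("demand exceeds the quota; throttling would occur")
    return list(durations_ms)


requests = [40, 40, 40, 40]  # hypothetical 40 ms per request
print(pinned_finish_times(requests, cores=2))         # [40, 40, 80, 80]
print(unpinned_finish_times(requests, quota_ms=200))  # [40, 40, 40, 40]
```

In this sketch two of the four requests take twice as long in static mode, even though the container never exceeds its CPU limit in either mode.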

CPU core binding solves the performance problems caused by context switching between cores, especially between NUMA nodes, but it also gives up resource elasticity: threads have to queue to run on each bound CPU. Although the CPU Throttle metric may go down, the performance problem of the application is not solved.

In previous articles, we introduced CPU Burst, a kernel feature contributed by Alibaba Cloud that can solve the CPU throttling problem. When the actual CPU usage of a container is less than cfs_quota, the kernel stores the unused CPU time in cfs_burst. When the container has a sudden CPU demand and needs more than cfs_quota, the CFS bandwidth controller (BWC) of the kernel allows it to consume the time slices it previously saved in cfs_burst.
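The accumulation described above behaves like a token bucket. The following sketch models it per scheduling period: unused time carries over, capped so that the available run time never exceeds the quota plus the burst budget. It is a simplified model with invented demand numbers and a hypothetical function name, not kernel code.

```python
def count_throttled_periods(demand_ms, quota_ms, burst_ms=0):
    """Count periods in which the container wanted more CPU time than it had.

    demand_ms lists the CPU time (in ms) the container wants in each period.
    Work that cannot run in a period is carried over to the next one.
    """
    runtime = 0   # CPU time currently available to the container
    backlog = 0   # unfinished work from throttled periods
    throttled = 0
    for demand in demand_ms:
        # Refill: add the quota, but cap the saved time at quota + burst.
        runtime = min(runtime + quota_ms, quota_ms + burst_ms)
        wanted = demand + backlog
        used = min(wanted, runtime)
        runtime -= used
        backlog = wanted - used
        if backlog > 0:
            throttled += 1
    return throttled


demand = [50, 50, 300, 50]  # a spike in the third period (made-up numbers)
print(count_throttled_periods(demand, quota_ms=200))                # 1
print(count_throttled_periods(demand, quota_ms=200, burst_ms=200))  # 0
```

With no burst budget the spike is throttled. With a burst budget of 200 ms, the time saved during the two quiet periods covers the spike.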

The CPU Burst mechanism can solve the long-tail RT problem of latency-sensitive applications and improve container performance. Alibaba Cloud Container Service now fully supports the CPU Burst mechanism. For kernel versions that do not support CPU Burst, ACK detects CPU throttling events and dynamically adjusts the CPU limit to achieve the same effect.

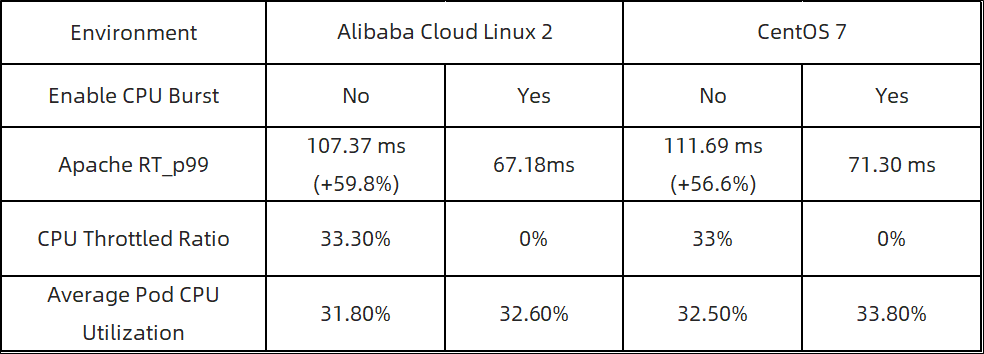

We use Apache HTTP Server as a latency-sensitive online application and simulate request traffic to evaluate how much CPU Burst improves response time (RT). The following data compares performance before and after the CPU Burst policy is enabled:

After comparing the preceding data, we know:

Although Kubelet provides a standalone resource management policy (static policy, single-numa-node), which can partially solve the problem that application performance is affected by CPU cache and NUMA affinity, this policy still has the following shortcomings:

Alibaba Cloud Container Service implements topology-aware scheduling and flexible core-binding policies based on the scheduling framework, which provides better performance for CPU-sensitive workloads. ACK topology-aware scheduling works with all QoS types and can be enabled on demand for individual Pods. It also selects the optimal combination of node and CPU topology across the whole cluster.

In an evaluation of Nginx services on Intel (104-core) and AMD (256-core) physical servers, we found that CPU topology-aware scheduling improves application performance by 22% to 43%.

| Performance Metrics | Intel | AMD |
| --- | --- | --- |
| QPS | Improved by 22.9% | Improved by 43.6% |
| AVG RT | Reduced by 26.3% | Reduced by 42.5% |

CPU Burst and topology-aware scheduling are the two major tools that Alibaba Cloud Container Service provides to improve application performance. They address CPU resource management problems in different scenarios and can be used together.

CPU Burst solves the throttling that the CPU limit causes under kernel BWC scheduling, which improves the performance of latency-sensitive tasks. However, CPU Burst does not create resources out of nothing. If the CPU utilization of a container is already high (for example, greater than 50%), the optimization effect of CPU Burst is limited. In that case, the application should be scaled using HPA or VPA.

Topology-aware scheduling reduces the overhead of CPU context switching for workloads, especially under the NUMA architecture, which improves the quality of service for CPU-intensive and memory-access-intensive applications. However, CPU core binding is not a silver bullet, and the actual effect depends on the application type. In addition, if a large number of Burstable Pods on the same node enable topology-aware scheduling at the same time, their CPU bindings may overlap, and interference between applications may get worse in some scenarios. Therefore, topology-aware scheduling is best enabled selectively for targeted workloads.

Alibaba Cloud ACK supports CPU Burst and topology-aware scheduling.
