By Taiye

When writing programs, multi-threaded programming is a crucial concept, especially given the prevalence of multi-core processors. It lets us make full use of the computer's processing power by running tasks in parallel, ultimately improving program efficiency and performance.

Multi-threading means that multiple execution threads can run simultaneously in a single program. Each thread can be viewed as a separate path of program execution. In a multi-threaded environment, the operating system is responsible for the scheduling of multiple threads so that they can run concurrently on one or more cores. Compared with a single-threaded program, a multi-threaded program can more efficiently utilize the computing resources of a multi-core processor to execute multiple tasks or handle concurrent requests.

Multi-threading improves program performance, but it also introduces complex synchronization issues. Locking is the traditional way to solve these problems, while lock-free programming is a more advanced but complex technique that can provide better performance and scalability in some cases. The key to efficient multi-threaded programming is to correctly select and implement the concurrency strategy suitable for application scenarios.

iLogtail is an observability data collector developed in-house by the Alibaba Cloud Simple Log Service (SLS) team and is now open source on GitHub. As an end-to-end application, iLogtail has pursued aggressive optimization of resource usage and performance throughout its evolution.

Many concepts, such as concurrent programming, multi-threading, and shared resources are related to threads. The thread mentioned here is called "user thread", and there is another thread called "kernel thread" corresponding to the operating system.

At the operating system and programming language level, threading models are usually divided by how user threads map onto kernel threads: one-to-one (1:1), many-to-one (N:1), and many-to-many (M:N).

Since the threading model directly affects the performance, scalability, responsiveness, and simplicity of the program, it is critical for developers to choose a proper one. For example, the concurrency philosophy of the Go language encourages the use of channels to communicate between goroutines, rather than traditional locking mechanisms and shared memory. By passing messages through channels, the designers intend to reduce the use of locks, thereby avoiding lock-related problems such as deadlock. In addition, the design philosophy of the Go language favors a simple and clear concurrency model. Complexities such as reentrant locks often run counter to that philosophy.

The goal of synchronization is to ensure the consistency of concurrent operations on shared data by different execution streams. In the single-core era, this goal was easily achieved by using atomic variables. Even plain reads or writes of certain memory-aligned data are atomic due to the memory access characteristics of the CPU. However, in a multi-core architecture, even if the operations are atomic, synchronization can still fail for other reasons.

Firstly, code optimization and instruction reordering by modern compilers can change the execution order of the code. Secondly, the CPU itself can change the actual execution order through pipelining, out-of-order execution, and branch prediction.

In C++, volatile is a type qualifier. It tells the compiler that the value of a variable may change outside the control of the program, so accesses to the variable must not be optimized away. That is, every read and write of a volatile variable must actually be performed on memory, rather than reusing a value that the compiler has kept in a register.

• Volatile does not guarantee atomicity, so operations on volatile variables may not be thread-safe.

• Volatile does not emit memory barriers, so it prevents neither CPU reordering nor the resulting visibility issues between cores. It is therefore not sufficient to handle memory order issues in multi-threading.
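The first limitation can be illustrated with a minimal sketch. The helper name `count_with_atomic` is hypothetical and not taken from iLogtail; it only shows that an atomic read-modify-write keeps a shared counter exact, which a volatile variable cannot promise.

```cpp
#include <atomic>
#include <thread>
#include <vector>

// Hypothetical helper: `threads` workers each add 1 to a shared counter
// `perThread` times, and the final value is returned.
long count_with_atomic(int threads, int perThread) {
    std::atomic<long> counter{0};
    std::vector<std::thread> workers;
    for (int t = 0; t < threads; ++t) {
        workers.emplace_back([&counter, perThread] {
            for (int i = 0; i < perThread; ++i) {
                // fetch_add is one indivisible read-modify-write operation.
                counter.fetch_add(1, std::memory_order_relaxed);
            }
        });
    }
    for (auto& w : workers) {
        w.join();
    }
    return counter.load();
}

// With `volatile long counter` and `++counter` instead, the increment would be
// a separate load, add, and store. Concurrent updates could be lost, and the
// program would contain a data race.
```

Calling `count_with_atomic(4, 10000)` should always return 40000, regardless of how the threads interleave.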

A memory barrier, also known as a memory fence, is a synchronization mechanism. It ensures that the memory operations before the barrier and those after it take effect in a well-defined order. It is implemented at the hardware level to prevent improper reordering of instructions by compilers and CPUs. Memory barriers are generally classified into the following types:

• Full Barrier: It ensures that all read and write operations after the barrier are performed only after those before the barrier are completed.

• Read Barrier: It ensures that all read operations before the barrier are completed before any read operation after the barrier is performed.

• Write Barrier: It ensures that all write operations before the barrier are completed before any write operation after the barrier is performed.
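In portable C++, barriers are usually expressed with `std::atomic_thread_fence` rather than with architecture-specific instructions. The following is a minimal sketch under that assumption; the names `payload`, `ready`, `producer`, `consumer`, and `run_fence_demo` are hypothetical.

```cpp
#include <atomic>
#include <thread>

int payload = 0;                 // plain, non-atomic data
std::atomic<bool> ready{false};  // flag that publishes the data

void producer() {
    payload = 42;
    // Release fence: the write to payload cannot be reordered after the
    // flag store that follows the fence.
    std::atomic_thread_fence(std::memory_order_release);
    ready.store(true, std::memory_order_relaxed);
}

int consumer() {
    while (!ready.load(std::memory_order_relaxed)) {
        std::this_thread::yield();
    }
    // Acquire fence: the read of payload cannot be reordered before the
    // flag load that precedes the fence.
    std::atomic_thread_fence(std::memory_order_acquire);
    return payload;
}

// Runs one producer and one consumer and returns what the consumer saw.
int run_fence_demo() {
    payload = 0;
    ready.store(false);
    int seen = 0;
    std::thread c([&seen] { seen = consumer(); });
    std::thread p(producer);
    p.join();
    c.join();
    return seen;
}
```

Because the two fences pair up, the consumer is guaranteed to read 42 once it has observed the flag.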

In C++, memory_order is an enumeration type. It indicates the memory order semantics of atomic operations. Since C++11, the standard library has provided a series of atomic types and operations, which are located in the <atomic> header. The memory_order argument specifies how compilers and processors handle memory accesses around an atomic operation. This is essential for properly writing lock-free data structures and algorithms.

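Here are the memory_order options and their meanings:

• memory_order_relaxed: It guarantees only the atomicity of the operation itself and imposes no ordering on surrounding memory accesses.

• memory_order_consume: It is a weaker form of acquire that orders only operations carrying a data dependency on the loaded value. In practice, compilers commonly implement it as memory_order_acquire.

• memory_order_acquire: It is used with loads. Reads and writes after the load cannot be moved before it.

• memory_order_release: It is used with stores. Reads and writes before the store cannot be moved after it.

• memory_order_acq_rel: It is used with read-modify-write operations and combines acquire and release semantics.

• memory_order_seq_cst: It is the default. It provides acquire and release semantics plus a single total order over all sequentially consistent operations.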
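As a minimal sketch of how an acquire load pairs with a release store, consider publishing a message from one thread to another. `Mailbox`, `publish`, `receive`, and `run_mailbox_demo` are hypothetical names used only for illustration.

```cpp
#include <atomic>
#include <string>
#include <thread>

struct Mailbox {
    std::string message;                 // written before publication
    std::atomic<bool> published{false};  // set once the message is complete
};

void publish(Mailbox& box, const std::string& text) {
    box.message = text;
    // Release: the write to message is visible to any thread that
    // observes published == true with an acquire load.
    box.published.store(true, std::memory_order_release);
}

std::string receive(Mailbox& box) {
    // Acquire: once true is observed, message is safe to read.
    while (!box.published.load(std::memory_order_acquire)) {
        std::this_thread::yield();
    }
    return box.message;
}

// Runs one writer and one reader and returns what the reader received.
std::string run_mailbox_demo() {
    Mailbox box;
    std::string got;
    std::thread reader([&] { got = receive(box); });
    std::thread writer([&] { publish(box, "hello"); });
    writer.join();
    reader.join();
    return got;
}
```

The same pairing is what a lock needs: acquiring behaves like the acquire load, and releasing behaves like the release store.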

Understanding and correctly using weaker memory orders can improve performance. The spin lock implementation in iLogtail uses memory_order_acquire and memory_order_release.

    class SpinLock {
        std::atomic_flag v_ = ATOMIC_FLAG_INIT;

    public:
        SpinLock() {}

        // Acquire: accesses in the critical section cannot move above the lock.
        bool try_lock() { return !v_.test_and_set(std::memory_order_acquire); }

        void lock() {
            // Keep retrying, backing off while another thread holds the lock.
            for (unsigned k = 0; !try_lock(); ++k) {
                boost::detail::yield(k);
            }
        }

        // Release: accesses in the critical section cannot move below the unlock.
        void unlock() { v_.clear(std::memory_order_release); }
    };

    using ScopedSpinLock = std::lock_guard<SpinLock>;

Compiler reordering means that, while generating object code, the compiler may swap the order of memory accesses that have no dependency on each other. It can be suppressed in three ways:

• If the corresponding variables are declared as volatile, the C++ standard guarantees that the compiler does not reorder accesses to volatile variables relative to one another. However, this holds only among volatile variables. Accesses to volatile variables and to other variables may still be reordered relative to each other.

• If proper Memory Barrier instructions are manually added where needed, the semantics of those instructions guarantee that the compiler does not reorder memory accesses across them.

• If the corresponding variables are declared as atomic, the C++ standard guarantees that the compiler honors the ordering required by the memory order of each atomic operation, which is sequentially consistent by default.

Reads and writes of variables are sometimes optimized away by the compiler. In the following example, if data_ready were not declared volatile, the compiler might remove the repeated check of data_ready from the main loop, so the loop would never notice that the interrupt service routine had set the flag.

    // Flag modified by the interrupt service routine.
    volatile bool data_ready = false;

    // Interrupt service routine.
    void ISR() {
        // The data is ready. Set the flag.
        data_ready = true;
    }

    int main() {
        // Main program loop.
        while (true) {
            if (data_ready) {
                // Process the data.
                // ...

                // Reset the flag.
                data_ready = false;
            }
            // Perform other tasks...
        }
    }

Declaring the corresponding variable as volatile or atomic suppresses this optimization of reads. The compiler then performs every access to the variable instead of optimizing it away.
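Between threads, as opposed to an interrupt handler, an atomic flag is the usual choice. The following is a minimal sketch of a worker that polls a `std::atomic<bool>` stop flag; `stop_requested`, `worker_loop`, and `run_stop_demo` are hypothetical names.

```cpp
#include <atomic>
#include <thread>

std::atomic<bool> stop_requested{false};

// Polls the flag until another thread sets it, then returns the number of
// loop iterations that ran. The atomic load is performed on every iteration.
long worker_loop() {
    long iterations = 0;
    while (!stop_requested.load(std::memory_order_acquire)) {
        ++iterations;
        std::this_thread::yield();
    }
    return iterations;
}

// Starts a worker, requests a stop, and reports whether the worker returned.
bool run_stop_demo() {
    stop_requested.store(false);
    long iterations = -1;
    std::thread worker([&iterations] { iterations = worker_loop(); });
    stop_requested.store(true, std::memory_order_release);
    worker.join();
    return iterations >= 0;
}
```

Unlike volatile, the atomic flag also provides the inter-thread ordering and visibility guarantees that the worker relies on.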

In modern multi-core CPU architectures, a technique called Out-of-Order Execution is often used to increase processing speed. With out-of-order execution, the CPU does not necessarily follow the original order of instructions in the program when executing machine instructions. Instead, it reorders the instruction stream to make more efficient use of its resources.

    #include <thread>
    #include <atomic>
    #include <cassert>
    #include <iostream>

    std::atomic<bool> x(false), y(false);
    int a = 0, b = 0;

    void thread1() {
        x.store(true, std::memory_order_relaxed); // A1
        a = y.load(std::memory_order_relaxed);    // A2
    }

    void thread2() {
        y.store(true, std::memory_order_relaxed); // B1
        b = x.load(std::memory_order_relaxed);    // B2
    }

    int main() {
        std::thread t1(thread1);
        std::thread t2(thread2);
        t1.join();
        t2.join();
        std::cout << "a: " << a << ", b: " << b << std::endl;
        return 0;
    }

In this example, std::memory_order_relaxed tells the compiler and the CPU that no memory ordering guarantee is required. This allows them to generate and execute machine instructions in the most efficient way, including possible out-of-order execution.

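Now, let's consider the possible results, including those caused by out-of-order execution:

• A1, A2, B1, B2 (thread1 finishes first): a = 0, b = 1.

• B1, B2, A1, A2 (thread2 finishes first): a = 1, b = 0.

• A1, B1, A2, B2 (both stores happen before both loads): a = 1, b = 1.

• If each load is effectively performed before the store that precedes it in program order: a = 0, b = 0. No interleaving of the original program order produces this result, but memory_order_relaxed permits it.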

CPU reordering can essentially be solved only by inserting Memory Barrier instructions. These instructions make the CPU guarantee a specific order of memory accesses and the visibility of memory writes across multiple cores. However, since the memory model and the specific Memory Barrier instructions differ between processor architectures, there is no universal rule as to which instruction to add at which location. Therefore, C++11 adds a layer of abstraction on top of this and introduces the concepts of atomic types and Memory Order, which helps write more portable code. Essentially, it is up to the compiler to decide whether to insert low-level Memory Barrier instructions on a particular processor architecture, based on the high-level Memory Order specified in the code.
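As a minimal sketch, the two-thread experiment can be rerun with std::memory_order_seq_cst, under which the result a = 0, b = 0 is no longer allowed. The function names `both_loads_saw_false` and `count_forbidden_outcomes` are hypothetical.

```cpp
#include <atomic>
#include <thread>

// Runs the store/load experiment once with sequentially consistent atomics
// and reports whether both threads read `false`.
bool both_loads_saw_false() {
    std::atomic<bool> x{false}, y{false};
    bool a = true, b = true;
    std::thread t1([&] {
        x.store(true, std::memory_order_seq_cst);
        a = y.load(std::memory_order_seq_cst);
    });
    std::thread t2([&] {
        y.store(true, std::memory_order_seq_cst);
        b = x.load(std::memory_order_seq_cst);
    });
    t1.join();
    t2.join();
    return !a && !b;
}

// Repeats the experiment and counts how often the forbidden result appears.
int count_forbidden_outcomes(int rounds) {
    int forbidden = 0;
    for (int i = 0; i < rounds; ++i) {
        if (both_loads_saw_false()) {
            ++forbidden;
        }
    }
    return forbidden;
}
```

With sequential consistency, all four operations fall into one total order, so at least one load must come after the other thread's store and the count stays at zero. On many architectures the compiler achieves this by emitting a full barrier or an equivalent instruction around the stores.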

Concept of Lock

In multi-threaded programs, a lock is a synchronization mechanism used to control access to shared resources. It prevents multiple threads from reading or modifying the same data at the same time, thus preventing issues such as data inconsistencies and race conditions. The most common locks are Mutex and Read-Write Lock. They guarantee that, at any given time, a resource is either exclusive to a single thread (write operations) or is shared by multiple threads (read operations).

However, the use of locks also brings problems, such as Deadlock, Starvation, and Lock Contention. These may degrade the performance of the program or even make it unresponsive.

A Mutex controls mutually exclusive access by multiple threads to a resource they share. That is, it prevents multiple threads from operating on the shared resource at the same time. Only one thread can hold the Mutex at any given time. Until it is released, every other thread that tries to acquire it waits in a blocked state in a waiting queue.

During lock and unlock operations, std::mutex implicitly guarantees the required memory order, ensuring that memory reads and writes between lock and unlock are not moved outside the critical section (providing a serialization effect). Specifically, lock() has acquire semantics: after acquiring the lock, the thread sees all memory writes that previous holders made before they released it. unlock() has release semantics: all modifications made to the shared data before the lock is released are visible to the next thread that acquires it.

In iLogtail, std::mutex is used a lot. For example, in the simplest case, complex operations on the global Map need to be implemented with a Mutex.

    class LogFileProfiler {
    private:
        typedef std::unordered_map<std::string, LogStoreStatistic*> LogstoreSenderStatisticsMap;
        // key: region, value: unordered_map<std::string, LogStoreStatistic*>
        std::map<std::string, LogstoreSenderStatisticsMap*> mAllStatisticsMap;
        std::mutex mStatisticLock;
    };

std::mutex is usually paired with an RAII (Resource Acquisition Is Initialization) wrapper such as std::lock_guard or std::unique_lock, which releases the lock automatically at the end of the scope. This prevents deadlocks caused by forgetting to release the lock.
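The RAII pairing can be sketched as follows. `RegionStats` and `run_stats_demo` are hypothetical and much simpler than the iLogtail profiler; they only show a map guarded by a std::mutex that is always taken through std::lock_guard.

```cpp
#include <map>
#include <mutex>
#include <string>
#include <thread>
#include <vector>

// Hypothetical per-region byte counter guarded by one mutex.
class RegionStats {
public:
    void AddBytes(const std::string& region, long bytes) {
        // The lock is released when `lock` goes out of scope, even if the
        // map operation throws.
        std::lock_guard<std::mutex> lock(mLock);
        mBytes[region] += bytes;
    }

    long GetBytes(const std::string& region) const {
        std::lock_guard<std::mutex> lock(mLock);
        auto it = mBytes.find(region);
        return it == mBytes.end() ? 0 : it->second;
    }

private:
    mutable std::mutex mLock;
    std::map<std::string, long> mBytes;
};

// Four threads each add 1000 bytes to the same region.
long run_stats_demo() {
    RegionStats stats;
    std::vector<std::thread> workers;
    for (int t = 0; t < 4; ++t) {
        workers.emplace_back([&stats] {
            for (int i = 0; i < 1000; ++i) {
                stats.AddBytes("cn-hangzhou", 1);
            }
        });
    }
    for (auto& w : workers) {
        w.join();
    }
    return stats.GetBytes("cn-hangzhou");
}
```

Because every access goes through the guard, no code path can leave the mutex locked, and the final count is exact.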

The std::condition_variable is a synchronization primitive in C++, which is used to implement conditional waits between threads in multi-threaded programs. The condition variable is often used in conjunction with Mutex (std::mutex) to wait for a condition to be true. Its main function is to block one or more threads until a notification from another thread is received or until a condition is met.
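A minimal sketch of the wait and notify pattern is a blocking queue. `TaskQueue` and `run_queue_demo` are hypothetical names and do not come from iLogtail.

```cpp
#include <condition_variable>
#include <mutex>
#include <queue>
#include <thread>

// Hypothetical blocking queue: Pop() sleeps until an item is available.
class TaskQueue {
public:
    void Push(int value) {
        {
            std::lock_guard<std::mutex> lock(mMutex);
            mItems.push(value);
        }
        // Notify after releasing the lock so the woken thread can take it.
        mCV.notify_one();
    }

    int Pop() {
        std::unique_lock<std::mutex> lock(mMutex);
        // The predicate form re-checks the condition after every wakeup,
        // which also handles spurious wakeups.
        mCV.wait(lock, [this] { return !mItems.empty(); });
        int value = mItems.front();
        mItems.pop();
        return value;
    }

private:
    std::mutex mMutex;
    std::condition_variable mCV;
    std::queue<int> mItems;
};

// One consumer pops three items that the calling thread pushes.
int run_queue_demo() {
    TaskQueue queue;
    int sum = 0;
    std::thread consumer([&] {
        for (int i = 0; i < 3; ++i) {
            sum += queue.Pop();
        }
    });
    for (int v = 1; v <= 3; ++v) {
        queue.Push(v);
    }
    consumer.join();
    return sum;
}
```

The consumer consumes no CPU while the queue is empty, which is the main advantage over polling in a loop.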

The following is a typical use of std::condition_variable in iLogtail. A mutex and a condition variable together control the life cycle of the thread, avoiding a busy while (true) loop that consumes CPU.

    std::condition_variable mStopCV;

    void LogtailAlarm::Stop() {
        {
            lock_guard<mutex> lock(mThreadRunningMux);
            mIsThreadRunning = false;
        }
        mStopCV.notify_one();
    }

    bool LogtailAlarm::SendAlarmLoop() {
        {
            unique_lock<mutex> lock(mThreadRunningMux);
            while (mIsThreadRunning) {
                SendAllRegionAlarm();
                // Sleep for up to 3 seconds, or wake early when Stop() is called.
                if (mStopCV.wait_for(lock, std::chrono::seconds(3), [this]() { return !mIsThreadRunning; })) {
                    break;
                }
            }
        }
        SendAllRegionAlarm();
        return true;
    }

Recursive Mutex

The std::recursive_mutex is a mutex in the C++ standard library. It allows the same thread to acquire the same mutex multiple times. Unlike std::mutex, it is reentrant, which means that a thread that already owns the lock can lock it again without deadlocking.

This kind of recursive lock is useful when the same lock needs to be acquired multiple times in the same thread, for example, when a recursive function needs to access a shared resource in each recursive call. Using std::recursive_mutex avoids the deadlock caused by a thread trying to reacquire a lock it already holds.
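The recursive case can be sketched as follows. `SharedLog` and `run_recursive_demo` are hypothetical names chosen for this illustration.

```cpp
#include <cstddef>
#include <mutex>
#include <vector>

// Hypothetical shared container whose recursive method locks on every level.
class SharedLog {
public:
    // Appends n, n-1, ..., 1. Each recursion level locks the same mutex again.
    void Countdown(int n) {
        std::lock_guard<std::recursive_mutex> lock(mLock);
        if (n <= 0) {
            return;
        }
        mValues.push_back(n);
        // With std::mutex, this nested lock attempt by the same thread
        // would be undefined behavior, typically a deadlock.
        Countdown(n - 1);
    }

    std::size_t Size() const {
        std::lock_guard<std::recursive_mutex> lock(mLock);
        return mValues.size();
    }

private:
    mutable std::recursive_mutex mLock;
    std::vector<int> mValues;
};

std::size_t run_recursive_demo() {
    SharedLog log;
    log.Countdown(5);
    return log.Size();
}
```

The mutex is fully released only when the outermost lock_guard is destroyed, that is, after as many unlocks as there were locks.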

A shared lock means that the same lock can be acquired by multiple threads at the same time, while an exclusive lock allows only one thread to acquire the lock. A read-write lock is a typical application of the shared lock and the exclusive lock. A read lock is a shared one and a write lock is an exclusive one. Read locks can be held by multiple threads at the same time, while write locks can only be held by one thread at most.

Read-write locks are scenario-specific locks that improve resource utilization and reduce waiting time. However, they can also introduce more complex synchronization problems, such as writer starvation (waiting too long for write operations).
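A read-write lock can be sketched with std::shared_mutex, assuming a C++17 compiler. iLogtail uses its own ReadWriteLock type, so `MetricTable` below is a hypothetical stand-in rather than the project's implementation.

```cpp
#include <map>
#include <mutex>
#include <shared_mutex>
#include <string>

// Hypothetical table with many readers and occasional writers.
class MetricTable {
public:
    void Set(const std::string& name, long value) {
        // Exclusive (write) lock: blocks all readers and other writers.
        std::unique_lock<std::shared_mutex> lock(mLock);
        mValues[name] = value;
    }

    // Returns -1 when the metric does not exist.
    long Get(const std::string& name) const {
        // Shared (read) lock: any number of readers may hold it together.
        std::shared_lock<std::shared_mutex> lock(mLock);
        auto it = mValues.find(name);
        return it == mValues.end() ? -1 : it->second;
    }

private:
    mutable std::shared_mutex mLock;
    std::map<std::string, long> mValues;
};
```

Readers calling `Get` never block one another. Only a call to `Set` forces them to wait.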

In iLogtail, the metric module is a typical one-write-multiple-read scenario. ReadMetrics provides external data. Therefore, multiple reads may occur during external access. If a simple Mutex is used, there will be contention between reads. Therefore, a read-write lock is used here to ensure the performance of multiple reads.

    void ReadMetrics::ReadAsLogGroup(std::map<std::string, sls_logs::LogGroup*>& logGroupMap) const {
        ReadLock lock(mReadWriteLock);
        // Read the linked list under a read lock, which allows concurrent readers.
        MetricsRecord* tmp = mHead;
        while (tmp) {
            ...
            tmp = tmp->GetNext();
        }
    }

    void ReadMetrics::Clear() {
        WriteLock lock(mReadWriteLock);
        // Modify the linked list under a write lock.
        while (mHead) {
            MetricsRecord* toDelete = mHead;
            mHead = mHead->GetNext();
            delete toDelete;
        }
    }

The characteristic of a spin lock is that when a thread cannot acquire the lock immediately, it keeps trying to acquire it in a loop rather than blocking or giving up the CPU. This process is called "spinning". A non-spin lock has no spin process. If the lock cannot be acquired, the thread gives up immediately or executes other processing logic, such as queuing and blocking.

• A spin lock is a busy-waiting locking mechanism. When access to a resource conflicts, there are two options. One is to spin and wait, and the other is to suspend the current thread and schedule another one. A thread waiting on a spin lock keeps retrying until it acquires the lock and enters the critical section.

• Only one thread is allowed in. Only one thread can acquire the lock and enter the critical section at a time, and the other threads keep trying at the threshold.

• The execution time is short. Due to the busy-waiting nature of the spin lock, it is suited to critical sections where the code is not very complex. If the critical section takes too long to execute, the threads that keep "waiting" at its threshold waste a lot of CPU resources.

• A spin lock can be used in an interrupt context. Since a thread waiting on a spin lock never sleeps, the lock can be applied where sleeping is not allowed.

iLogtail uses spin locks for some relatively fast set and get operations on data. These operations are short enough that the time spent spinning is less than the cost of a context switch. For example, the following code uses a spin lock for the lookup and insert operations on a map.

    int32_t ConfigManager::FindAllMatch(vector<FileDiscoveryConfig>& allConfig,
                                        const std::string& path,
                                        const std::string& name /*= ""*/) {
        ...
        {
            // Take the spin lock and look up the cached result.
            ScopedSpinLock cachedLock(mCacheFileAllConfigMapLock);
            auto iter = mCacheFileAllConfigMap.find(cachedFileKey);
            if (iter != mCacheFileAllConfigMap.end()) {
                if (iter->second.second == 0
                    || time(NULL) - iter->second.second < INT32_FLAG(multi_config_alarm_interval)) {
                    allConfig = iter->second.first;
                    return (int32_t)allConfig.size();
                }
            }
        }
        ...
        {
            // Take the spin lock and insert the result into the cache.
            ScopedSpinLock cachedLock(mCacheFileAllConfigMapLock);
            mCacheFileAllConfigMap[cachedFileKey] = std::make_pair(allConfig, alarmFlag ? (int32_t)time(NULL) : (int32_t)0);
        }
        return (int32_t)allConfig.size();
    }

To solve the problems caused by locks, lock-free programming provides an alternative. Lock-free programming does not rely on traditional lock synchronization. It uses atomic operations to ensure that multiple threads can safely access shared resources concurrently. Atomic operations are indivisible and cannot be interrupted by other threads during execution. Therefore, they can be used to implement lock-free data structures.

The advantages of lock-free programming include reducing the overhead of thread context switching, avoiding deadlocks, and improving the concurrency performance of programs. However, lock-free programming is generally more complex and requires precise design and a good understanding of the memory model. Not all situations are suitable for it. In many cases, it is simpler and just as efficient to use an appropriate locking mechanism.
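A typical lock-free building block is the compare-and-swap (CAS) retry loop. The following minimal sketch keeps a running maximum without any lock; `update_max` and `run_max_demo` are hypothetical names.

```cpp
#include <atomic>
#include <thread>
#include <vector>

// Stores `candidate` into `maxSeen` only if it is larger than the current value.
void update_max(std::atomic<int>& maxSeen, int candidate) {
    int current = maxSeen.load(std::memory_order_relaxed);
    // compare_exchange_weak writes `candidate` only if maxSeen still equals
    // `current`. On failure it reloads `current`, and the loop re-checks
    // whether the candidate is still larger.
    while (candidate > current &&
           !maxSeen.compare_exchange_weak(current, candidate,
                                          std::memory_order_relaxed)) {
    }
}

// Four threads report values concurrently; the largest reported value is 4999.
int run_max_demo() {
    std::atomic<int> maxSeen{0};
    std::vector<std::thread> workers;
    for (int t = 1; t <= 4; ++t) {
        workers.emplace_back([&maxSeen, t] {
            for (int i = 0; i < 1000; ++i) {
                update_max(maxSeen, t * 1000 + i);
            }
        });
    }
    for (auto& w : workers) {
        w.join();
    }
    return maxSeen.load();
}
```

No thread ever blocks: a thread that loses the race simply retries with the fresh value, which is what distinguishes this pattern from taking a lock.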

    class Application {
    private:
        Application();
        ~Application() = default;
        // Flag indicating whether a SIGTERM signal has been received.
        std::atomic_bool mSigTermSignalFlag = false;
    };

iLogtail keeps a std::atomic_bool variable, mSigTermSignalFlag. When the process receives a SIGTERM signal, it sets the flag to true. The main thread of iLogtail calls mSigTermSignalFlag.load() repeatedly and exits once it reads true.

    void Application::Start() {
        ...
        while (true) {
            ...
            if (mSigTermSignalFlag.load()) {
                LOG_INFO(sLogger, ("received SIGTERM signal", "exit process"));
                Exit();
            }
        }
    }

The split-lock strategy divides the data structure into multiple independent stripes. For example, a large hash table can be split into multiple parts, each with its own lock. Different threads can then access different parts of the table at the same time without contending, which approximates lock-free access to some extent.
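The striping idea can be sketched as a map split into a fixed number of independently locked parts. `StripedCounterMap`, its stripe count of 8, and `run_striped_demo` are all assumptions made for this illustration.

```cpp
#include <array>
#include <cstddef>
#include <functional>
#include <mutex>
#include <string>
#include <thread>
#include <unordered_map>
#include <vector>

// Hypothetical counter map split into 8 stripes, each with its own mutex.
class StripedCounterMap {
public:
    void Add(const std::string& key, long delta) {
        Stripe& stripe = StripeFor(key);
        std::lock_guard<std::mutex> lock(stripe.lock);
        stripe.values[key] += delta;
    }

    long Get(const std::string& key) {
        Stripe& stripe = StripeFor(key);
        std::lock_guard<std::mutex> lock(stripe.lock);
        auto it = stripe.values.find(key);
        return it == stripe.values.end() ? 0 : it->second;
    }

private:
    struct Stripe {
        std::mutex lock;
        std::unordered_map<std::string, long> values;
    };
    static constexpr std::size_t kStripes = 8;

    // A key always hashes to the same stripe, so only threads that touch
    // the same stripe contend with each other.
    Stripe& StripeFor(const std::string& key) {
        return mStripes[std::hash<std::string>{}(key) % kStripes];
    }

    std::array<Stripe, kStripes> mStripes;
};

// Four threads update two keys; the combined total is 4000.
long run_striped_demo() {
    StripedCounterMap counters;
    std::vector<std::thread> workers;
    for (int t = 0; t < 4; ++t) {
        workers.emplace_back([&counters, t] {
            for (int i = 0; i < 1000; ++i) {
                counters.Add("key" + std::to_string(t % 2), 1);
            }
        });
    }
    for (auto& w : workers) {
        w.join();
    }
    return counters.Get("key0") + counters.Get("key1");
}
```

More stripes reduce contention at the cost of more mutexes. Operations that span the whole table, such as a full scan, would need to lock every stripe.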

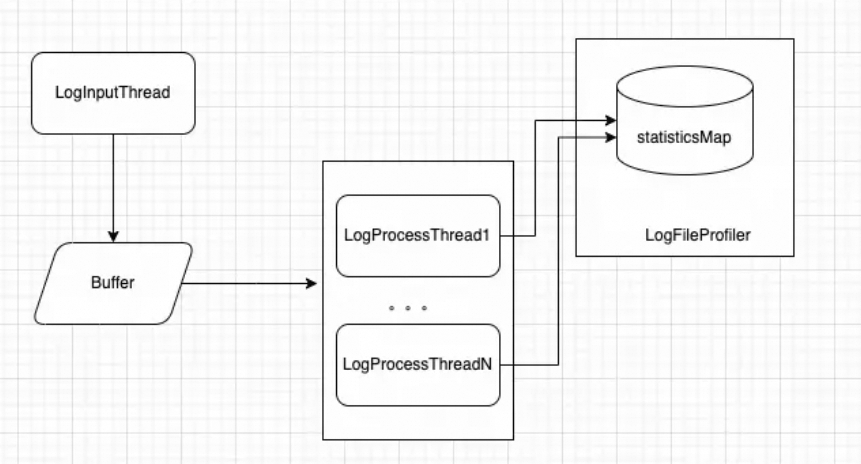

The previous thread model for iLogtail metric statistics is as follows.

The metrics of iLogtail are stored in a global Map in the LogFileProfiler. Multiple processing threads access this global Map to calculate the metrics during each processing.

    void LogFileProfiler::AddProfilingData(const std::string& configName,
                                           const std::string& region,
                                           const std::string& projectName,
                                           const std::string& category,
                                           const std::string& convertedPath,
                                           const std::string& hostLogPath,
                                           const std::vector<sls_logs::LogTag>& tags,
                                           uint64_t readBytes,
                                           uint64_t skipBytes,
                                           uint64_t splitLines,
                                           uint64_t parseFailures,
                                           uint64_t regexMatchFailures,
                                           uint64_t parseTimeFailures,
                                           uint64_t historyFailures,
                                           uint64_t sendFailures,
                                           const std::string& errorLine) {
        string key = projectName + "_" + category + "_" + configName + "_" + hostLogPath;
        // Lock.
        std::lock_guard<std::mutex> lock(mStatisticLock);
        // Find the metric Map for each region from the global Map.
        LogstoreSenderStatisticsMap& statisticsMap = *MakesureRegionStatisticsMapUnlocked(region);
        // Find the metric currently configured for collection from the metric Map of the region.
        std::unordered_map<string, LogStoreStatistic*>::iterator iter = statisticsMap.find(key);
        // Metric calculation.
        ...
    }

As can be seen, AddProfilingData holds a lock over a relatively large range, from looking up the metric object in the Map until the end of the metric calculation. With multiple LogProcess threads, lock contention becomes frequent and intense, so performance is relatively poor.

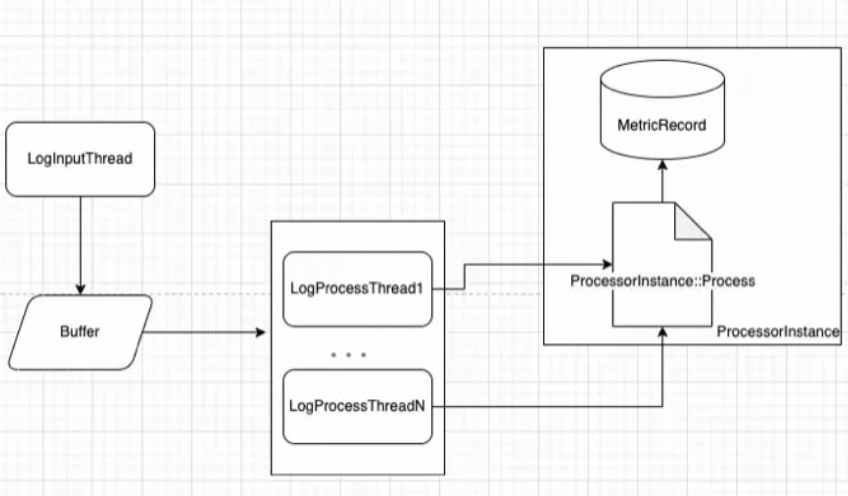

The new metric calculation module moves the metric data structure into each Plugin instance, as seen in ProcessorInstance.

    class Plugin {
    protected:
        // The data structure of the metric storage.
        mutable MetricsRecordRef mMetricsRecordRef;
    };

When each LogProcess thread processes data, it calls the ProcessorInstance::Process function, which internally calculates the metrics of its own instance.

    void ProcessorInstance::Process(std::vector<PipelineEventGroup>& logGroupList) {
        if (logGroupList.empty()) {
            return;
        }
        // Calculate metrics for the data input by the plug-in.
        for (const auto& logGroup : logGroupList) {
            mProcInRecordsTotal->Add(logGroup.GetEvents().size());
        }

        uint64_t startTime = GetCurrentTimeInMicroSeconds();
        mPlugin->Process(logGroupList);
        uint64_t durationTime = GetCurrentTimeInMicroSeconds() - startTime;

        // Calculate metrics of the processing time of the plug-in.
        mProcTimeMS->Add(durationTime);
        // Calculate metrics for the data output by the plug-in.
        for (const auto& logGroup : logGroupList) {
            mProcOutRecordsTotal->Add(logGroup.GetEvents().size());
        }
    }

Each metric uses an atomic type internally. For example, the value in Counter is a std::atomic_long. In this way, even when multiple threads update a metric, the result stays accurate. This avoids the use of expensive locks and achieves lock-free calculation.

    class Counter {
    private:
        std::string mName;
        std::atomic_long mVal;

    public:
        Counter(const std::string& name, uint64_t val);
        uint64_t GetValue() const;
        const std::string& GetName() const;
        void Add(uint64_t val);
        Counter* CopyAndReset();
    };

Read/write splitting means that write operations are performed on a copy of the array while read operations continue on the original array. Reads and writes are thus separated and do not affect each other. Write operations still need a lock to prevent data loss during concurrent writes. After a write completes, the array pointer must be switched to the new copy.

Imagine a scenario where there are two threads, thread A and thread B. Thread A is responsible for processing user requests, during which the corresponding requests acquire some data from the buffer of thread B. The buffer of thread B is a piece of memory, which is updated regularly or continuously. This piece of memory becomes the critical section of the two threads (thread A may be reading when thread B refreshes this piece of memory).

Normally, we would avoid simultaneous access by locking. However, since this piece of memory may be read hundreds of times per second but updated only once a minute, locking and unlocking on every access does a lot of useless work and reduces performance. Therefore, an optimization strategy called double-buffer switching is applied. As the name implies, there are two memory blocks with the same structure, and only one of them serves reads at any time. When an update occurs, the new data is written into the other memory block. Once that block is complete, the "pointer" (the handle to the resource) is switched to it, which completes the switch. The original memory block keeps serving reads until the new one is ready.
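The switching step can be sketched with a shared pointer that is swapped under a very short lock. `DoubleBuffer`, `Snapshot`, and `Publish` are hypothetical names. Using std::shared_ptr to keep the old block alive for in-flight readers is an assumption of this sketch, not necessarily how iLogtail does it.

```cpp
#include <map>
#include <memory>
#include <mutex>
#include <string>
#include <utility>

// Hypothetical double buffer: readers see one immutable block at a time.
class DoubleBuffer {
public:
    using Table = std::map<std::string, int>;

    DoubleBuffer() : mActive(std::make_shared<Table>()) {}

    // Reader: copy the handle under a short lock, then read without a lock.
    std::shared_ptr<const Table> Snapshot() const {
        std::lock_guard<std::mutex> lock(mSwapLock);
        return mActive;
    }

    // Writer: the new block is built completely before the lock is taken,
    // so the lock covers only the pointer switch.
    void Publish(Table next) {
        std::shared_ptr<const Table> fresh = std::make_shared<Table>(std::move(next));
        std::lock_guard<std::mutex> lock(mSwapLock);
        mActive.swap(fresh);
        // The old block is freed once the last reader drops its handle.
    }

private:
    mutable std::mutex mSwapLock;
    std::shared_ptr<const Table> mActive;
};
```

A reader that took a snapshot before `Publish` keeps reading the old block safely, while readers that arrive afterwards see the new one.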

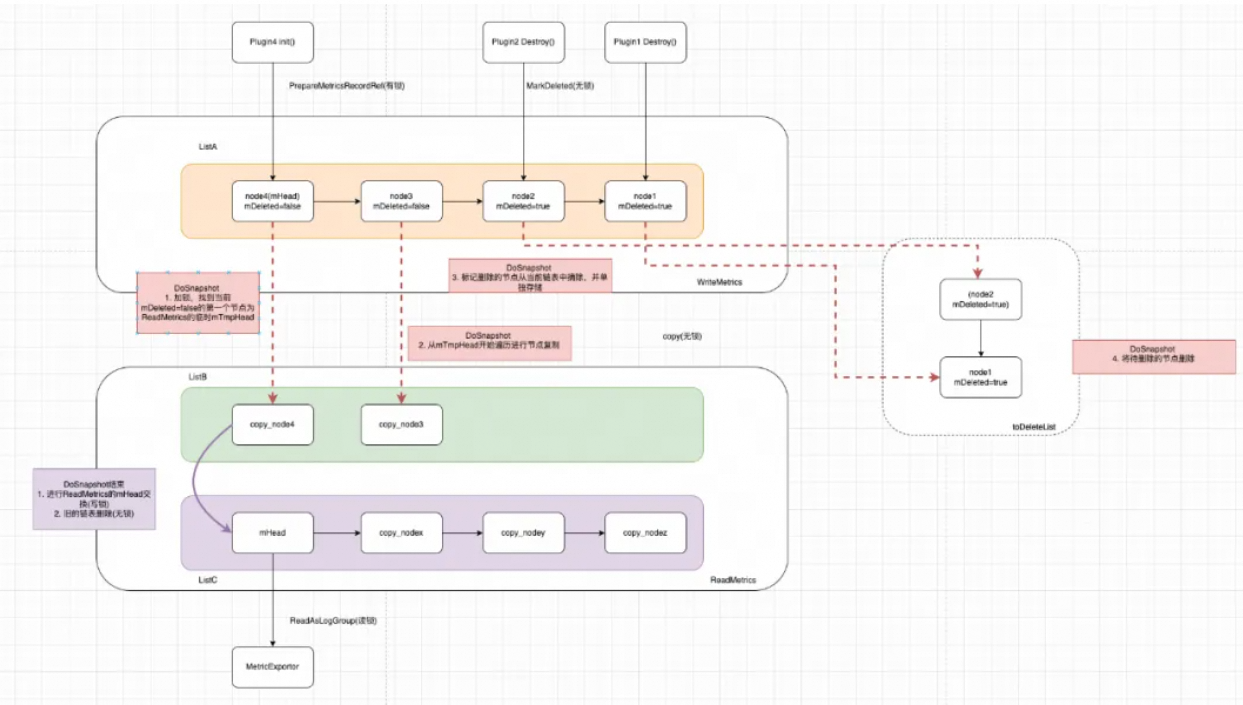

The metric module in iLogtail well reflects this idea. The specific process is as follows:

The metric module in iLogtail has two classes, WriteMetrics and ReadMetrics, each with a singly linked list.

    class WriteMetrics {
    private:
        WriteMetrics() = default;
        std::mutex mMutex;
        MetricsRecord* mHead = nullptr;

        void Clear();
        MetricsRecord* GetHead();

    public:
        ~WriteMetrics();
        static WriteMetrics* GetInstance() {
            static WriteMetrics* ptr = new WriteMetrics();
            return ptr;
        }
        void PrepareMetricsRecordRef(MetricsRecordRef& ref, MetricLabels&& labels);
        MetricsRecord* DoSnapshot();
    };

    class ReadMetrics {
    private:
        ReadMetrics() = default;
        mutable ReadWriteLock mReadWriteLock;
        MetricsRecord* mHead = nullptr;

        void Clear();
        MetricsRecord* GetHead();

    public:
        ~ReadMetrics();
        static ReadMetrics* GetInstance() {
            static ReadMetrics* ptr = new ReadMetrics();
            return ptr;
        }
        void ReadAsLogGroup(std::map<std::string, sls_logs::LogGroup*>& logGroupMap) const;
        void UpdateMetrics();
    };

When each Plugin is initialized, a metric object is constructed and stored in the linked list in WriteMetrics. In the whole linked list, only the head node is subject to contention, and Plugin initialization usually runs sequentially in a single thread. Therefore, new nodes are inserted at the head.

    void WriteMetrics::PrepareMetricsRecordRef(MetricsRecordRef& ref, MetricLabels&& labels) {
        MetricsRecord* cur = new MetricsRecord(std::make_shared<MetricLabels>(labels));
        ref.SetMetricsRecord(cur);
        // Only the head insertion needs the lock.
        std::lock_guard<std::mutex> lock(mMutex);
        cur->SetNext(mHead);
        mHead = cur;
    }

The linked list in ReadMetrics is a copy of the one in WriteMetrics and is responsible for serving data to external users. Taking a snapshot of the linked list in WriteMetrics works roughly as follows:

ReadMetrics calls WriteMetrics::DoSnapshot() to obtain the new linked list, takes the write lock only to point its head at the new list, and then deletes the original linked list without holding the lock.

    void ReadMetrics::UpdateMetrics() {
        MetricsRecord* snapshot = WriteMetrics::GetInstance()->DoSnapshot();
        MetricsRecord* toDelete;
        {
            // Only lock when changing the head.
            WriteLock lock(mReadWriteLock);
            toDelete = mHead;
            mHead = snapshot;
        }
        // Delete the old linked list.
        while (toDelete) {
            MetricsRecord* obj = toDelete;
            toDelete = toDelete->GetNext();
            delete obj;
        }
    }

To delete a node from the linked list in a thread-safe way, the pointer operations on its neighboring nodes need to be locked. If every Plugin removed its node synchronously in its destructor, the frequent locking would hurt performance. Therefore, when a Plugin is destructed, its metric object, namely the node in the above linked list, is only marked for deletion. Marked nodes are then deleted together at regular intervals, which reduces lock contention.
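The mark-then-sweep idea can be sketched on a small list. `Node` and `LazyList` are hypothetical stand-ins for MetricsRecord and WriteMetrics and deliberately omit everything unrelated to deferred deletion.

```cpp
#include <atomic>
#include <mutex>

// Hypothetical list node with a deletion mark.
struct Node {
    int value;
    std::atomic<bool> deleted{false};
    Node* next = nullptr;
    explicit Node(int v) : value(v) {}
};

class LazyList {
public:
    ~LazyList() {
        while (mHead) {
            Node* n = mHead;
            mHead = n->next;
            delete n;
        }
    }

    Node* PushFront(int value) {
        Node* n = new Node(value);
        std::lock_guard<std::mutex> lock(mLock);
        n->next = mHead;
        mHead = n;
        return n;
    }

    // Called by the owner instead of unlinking: constant time, no list lock.
    static void MarkDeleted(Node* n) {
        n->deleted.store(true, std::memory_order_release);
    }

    // Periodic sweep: unlink all marked nodes under the lock, then free them
    // after the lock is released. Returns the number of freed nodes.
    int Sweep() {
        Node* garbage = nullptr;
        {
            std::lock_guard<std::mutex> lock(mLock);
            Node** link = &mHead;
            while (*link) {
                Node* cur = *link;
                if (cur->deleted.load(std::memory_order_acquire)) {
                    *link = cur->next;    // unlink from the live list
                    cur->next = garbage;  // push onto the private garbage list
                    garbage = cur;
                } else {
                    link = &cur->next;
                }
            }
        }
        int freed = 0;
        while (garbage) {
            Node* n = garbage;
            garbage = n->next;
            delete n;
            ++freed;
        }
        return freed;
    }

    int Size() {
        std::lock_guard<std::mutex> lock(mLock);
        int count = 0;
        for (Node* n = mHead; n; n = n->next) {
            ++count;
        }
        return count;
    }

private:
    std::mutex mLock;
    Node* mHead = nullptr;
};
```

Each owner pays only for one atomic store when it goes away, and the cost of unlinking is batched into a single locked pass per sweep.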

In the destructor of the MetricsRecordRef object, the internal mMetrics is marked as the node to be deleted.

    MetricsRecordRef::~MetricsRecordRef() {
        if (mMetrics) {
            mMetrics->MarkDeleted();
        }
    }

Within the DoSnapshot function, WriteMetrics collects the nodes to be deleted while traversing the linked list and then deletes them together. Since these nodes are no longer accessed by other threads, the delete operation needs no lock.

    MetricsRecord* WriteMetrics::DoSnapshot() {
        // new read head
        MetricsRecord* snapshot = nullptr;
        MetricsRecord* toDeleteHead = nullptr;

        // Traverse the linked list and add the nodes to be deleted to toDeleteHead.
        ...

        // Delete.
        while (toDeleteHead) {
            MetricsRecord* toDelete = toDeleteHead;
            toDeleteHead = toDeleteHead->GetNext();
            delete toDelete;
            writeMetricsDeleteTotal++;
        }
        return snapshot;
    }

Each time ReadMetrics executes the UpdateMetrics function, it calls DoSnapshot of WriteMetrics to acquire the new linked list. After the head pointer is switched, the old linked list is deleted. This delete operation also needs no lock.

    void ReadMetrics::UpdateMetrics() {
        MetricsRecord* snapshot = WriteMetrics::GetInstance()->DoSnapshot();
        MetricsRecord* toDelete;
        {
            // Only lock when changing the head.
            WriteLock lock(mReadWriteLock);
            toDelete = mHead;
            mHead = snapshot;
        }
        // Delete the old linked list.
        while (toDelete) {
            MetricsRecord* obj = toDelete;
            toDelete = toDelete->GetNext();
            delete obj;
        }
    }
