By Hitesh Jethva, Alibaba Cloud Tech Share Author. Tech Share is Alibaba Cloud's incentive program to encourage the sharing of technical knowledge and best practices within the cloud community.

Hadoop is a free, open-source, scalable, and fault-tolerant framework written in Java for running jobs across the nodes of a cluster. Hadoop can be set up on a single machine or on a cluster of machines. Its architecture distributes the workload across machines, so it can scale to thousands of nodes. Hadoop uses a master-slave architecture: there is a single master node and N slave nodes. The master node manages and monitors the slave nodes and stores the metadata. The slave nodes store the data and do the actual processing.

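Hadoop is made up of three main components: the Hadoop Distributed File System (HDFS), which stores data across the cluster; YARN, which manages cluster resources and schedules jobs; and MapReduce, which processes data in parallel.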

In this tutorial, we will learn how to set up Apache Hadoop as a single-node cluster on an Alibaba Cloud Elastic Compute Service (ECS) instance running Ubuntu 16.04.

First, log in to your Alibaba Cloud ECS Console (https://ecs.console.aliyun.com). Create a new ECS instance, choosing Ubuntu 16.04 as the operating system with at least 2 GB of RAM. Connect to your ECS instance and log in as the root user.

Once you are logged in to your Ubuntu 16.04 instance, run the following command to refresh the package lists. This ensures that the packages you install next are the latest available versions:

apt-get update -y

Hadoop is written in Java, so you will need to install Java on your server. You can install it by running the following command:

apt-get install default-jdk -y

Once Java is installed, verify the Java version using the following command:

java -version

Output:

openjdk version "1.8.0_171"
OpenJDK Runtime Environment (build 1.8.0_171-8u171-b11-0ubuntu0.16.04.1-b11)
OpenJDK 64-Bit Server VM (build 25.171-b11, mixed mode)

Next, create a new user account for Hadoop by running the following command:

adduser hadoop

Next, you will also need to set up SSH key-based authentication. First, switch to the hadoop user with the following command:

su - hadoop

Next, generate an RSA key pair using the following command:

ssh-keygen -t rsa

Press Enter to accept the default file location. Leave the passphrase empty so that the Hadoop start scripts can connect to localhost over SSH without a password.

Output:

Generating public/private rsa key pair.
Enter file in which to save the key (/home/hadoop/.ssh/id_rsa):
Created directory '/home/hadoop/.ssh'.
Enter passphrase (empty for no passphrase):
Enter same passphrase again:
Your identification has been saved in /home/hadoop/.ssh/id_rsa.
Your public key has been saved in /home/hadoop/.ssh/id_rsa.pub.
The key fingerprint is:
SHA256:mEMbwFBVnsWL/bEzUIUoKmDRq5mMitE17UBa3aW2KRo hadoop@Node2
The key's randomart image is:
+---[RSA 2048]----+
| o*oo.o.oo. o. |
| o =...o+o o |
| . = oo.=+ o |
| . *.o*.o+ . |
| + =E=* S o o |
|o * o.o = |
|o. . o |
|o |
| |
+----[SHA256]-----+
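You may need to repeat this SSH setup without answering prompts, for example from a provisioning script. In that case, the key generation above and the authorization steps that follow can be written as two shell functions. This is a minimal sketch, not part of Hadoop. The function names ensure_key and authorize_key are hypothetical, and the sketch assumes OpenSSH's ssh-keygen is installed. Unlike a plain cat >> command, it only appends the public key if the key is not already in authorized_keys, so it is safe to run more than once.

```shell
# Sketch: non-interactive version of the SSH key setup for the hadoop user.
# ensure_key and authorize_key are hypothetical helpers, not Hadoop commands.

# ensure_key KEY_PATH
# Generate an RSA key pair with an empty passphrase (-N ""), unless a key
# already exists at KEY_PATH. The public key is written to KEY_PATH.pub.
ensure_key() {
    if [ ! -f "$1" ]; then
        mkdir -p "$(dirname "$1")"
        chmod 700 "$(dirname "$1")"
        ssh-keygen -t rsa -N "" -f "$1" >/dev/null
    fi
}

# authorize_key PUB_KEY_FILE AUTH_KEYS_FILE
# Append the public key to authorized_keys only if that exact line is not
# already present (-x whole line, -F fixed string), then set mode 0600
# as in the manual step.
authorize_key() {
    touch "$2"
    if ! grep -qxF -f "$1" "$2"; then
        cat "$1" >> "$2"
    fi
    chmod 0600 "$2"
}
```

As the hadoop user, you would call ensure_key ~/.ssh/id_rsa and then authorize_key ~/.ssh/id_rsa.pub ~/.ssh/authorized_keys.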

Next, append the public key to the authorized_keys file:

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

Next, set the correct permissions on the authorized_keys file:

chmod 0600 ~/.ssh/authorized_keys

Next, verify key-based authentication using the following command:

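ssh localhost

Answer yes if you are asked to confirm the host's fingerprint. If you are logged in without being asked for a password, key-based authentication works. Type exit twice. The first exit closes the SSH test session, and the second returns you to the root shell, which you need for the next few steps.

Next, download Hadoop from the official Apache website. This tutorial uses Hadoop 3.1.0, which you can download with the following command: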

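wget http://www-eu.apache.org/dist/hadoop/common/hadoop-3.1.0/hadoop-3.1.0.tar.gz

If this mirror no longer hosts version 3.1.0, the same file is available from the Apache archive at https://archive.apache.org/dist/hadoop/common/hadoop-3.1.0/.

Once the download is complete, extract the downloaded file with the following command: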

tar -xvzf hadoop-3.1.0.tar.gz

Next, move the extracted directory to /opt/hadoop with the following command:

mv hadoop-3.1.0 /opt/hadoop

Next, change the ownership of the hadoop directory using the following command:

chown -R hadoop:hadoop /opt/hadoop/

Next, you will need to set environment variables for Hadoop. You can do this by editing the .bashrc file.

First, log in as the hadoop user:

su - hadoop

Next, open the .bashrc file:

nano .bashrc

Add the following lines at the end of the file:

export HADOOP_HOME=/opt/hadoop
export HADOOP_INSTALL=$HADOOP_HOME
export HADOOP_MAPRED_HOME=$HADOOP_HOME
export HADOOP_COMMON_HOME=$HADOOP_HOME
export HADOOP_HDFS_HOME=$HADOOP_HOME
export YARN_HOME=$HADOOP_HOME
export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native
export PATH=$PATH:$HADOOP_HOME/sbin:$HADOOP_HOME/bin

Save and close the file when you are finished. Then, load the new environment variables into your current shell using the following command:

source .bashrc

Next, you will also need to set the Java environment variable for Hadoop. You can do this by editing the hadoop-env.sh file.

First, find the default Java path using the following command:

readlink -f /usr/bin/java | sed "s:bin/java::"

Output:

/usr/lib/jvm/java-8-openjdk-amd64/jre/

Now, open the hadoop-env.sh file and set JAVA_HOME to the path shown in the output above:

nano /opt/hadoop/etc/hadoop/hadoop-env.sh

Make the following changes. Leave the default line commented out and add an explicit JAVA_HOME below it:

#export JAVA_HOME=${JAVA_HOME}
export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64/jre/

Save and close the file when you are finished.
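You can also make the JAVA_HOME edit above without an editor. The following is a minimal sketch that assumes GNU sed (for in-place editing with -i). The names detect_java_home and set_java_home are hypothetical helpers. There is one design difference from the manual step. Instead of adding a new line below the commented default, the sketch rewrites the existing export JAVA_HOME= line, whether commented out or not. This keeps the file free of duplicate lines when it runs repeatedly.

```shell
# Sketch: set JAVA_HOME in hadoop-env.sh without opening an editor.
# Assumes GNU sed (-i edits the file in place).
# detect_java_home and set_java_home are hypothetical helper names.

# Print the Java home directory using the same readlink/sed pipeline
# as the manual step (e.g. /usr/lib/jvm/java-8-openjdk-amd64/jre/).
detect_java_home() {
    readlink -f "$(command -v java)" | sed "s:bin/java::"
}

# set_java_home ENV_FILE JAVA_DIR
# Rewrite any "export JAVA_HOME=" line in ENV_FILE, commented out or not,
# so that it points at JAVA_DIR; append such a line if none exists.
set_java_home() {
    if grep -q '^#* *export JAVA_HOME=' "$1"; then
        sed -i "s|^#* *export JAVA_HOME=.*|export JAVA_HOME=$2|" "$1"
    else
        printf 'export JAVA_HOME=%s\n' "$2" >> "$1"
    fi
}
```

For example, as the hadoop user: set_java_home /opt/hadoop/etc/hadoop/hadoop-env.sh "$(detect_java_home)".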

Next, you will need to edit several configuration files to set up the Hadoop infrastructure. First, as the hadoop user, create the directories for Hadoop file system storage:

mkdir -p /opt/hadoop/hadoopdata/hdfs/namenode
mkdir -p /opt/hadoop/hadoopdata/hdfs/datanode

Next, edit the core-site.xml file. This file holds core settings shared by all Hadoop components, such as the default file system URI (the NameNode host and port) and the size of the read/write buffers.

nano /opt/hadoop/etc/hadoop/core-site.xml

Make the following changes:

<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://localhost:9000</value>
  </property>
</configuration>

Save the file, then open the hdfs-site.xml file. This file contains the replication factor and the local file system paths for the NameNode and DataNode storage.

nano /opt/hadoop/etc/hadoop/hdfs-site.xml

Make the following changes. The replication factor is set to 1 because a single-node cluster has only one DataNode to hold each block:

<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>file:///opt/hadoop/hadoopdata/hdfs/namenode</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>file:///opt/hadoop/hadoopdata/hdfs/datanode</value>
  </property>
</configuration>

Save the file, then open the mapred-site.xml file:

nano /opt/hadoop/etc/hadoop/mapred-site.xml

Make the following changes:

<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
</configuration>

Save the file, then open the yarn-site.xml file:

nano /opt/hadoop/etc/hadoop/yarn-site.xml

Make the following changes:

<configuration>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
</configuration>

Save and close the file when you are finished.
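You can also generate the storage directories and the four configuration files above with a script, which is handy when you rebuild the node. The following is a minimal sketch. The names write_site_xml and configure_single_node are hypothetical helpers, and they overwrite the existing *-site.xml files rather than merging into them. The sketch uses fs.defaultFS, dfs.namenode.name.dir, and dfs.datanode.data.dir. These are the current names of the older, deprecated keys fs.default.name, dfs.name.dir, and dfs.data.dir.

```shell
# Sketch: write the single-node configuration files in one step.
# write_site_xml and configure_single_node are hypothetical helpers.
# WARNING: existing core-, hdfs-, mapred- and yarn-site.xml files are overwritten.

# write_site_xml FILE NAME VALUE [NAME VALUE ...]
# Write a <configuration> element with one <property> per NAME/VALUE pair.
# Values are not XML-escaped, so keep <, > and & out of them.
write_site_xml() {
    out=$1
    shift
    {
        printf '<?xml version="1.0" encoding="UTF-8"?>\n<configuration>\n'
        while [ "$#" -ge 2 ]; do
            printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
            shift 2
        done
        printf '</configuration>\n'
    } > "$out"
}

# configure_single_node CONF_DIR DATA_DIR
# CONF_DIR is Hadoop's etc/hadoop directory; DATA_DIR is the storage root.
configure_single_node() {
    conf=$1
    data=$2
    mkdir -p "$data/hdfs/namenode" "$data/hdfs/datanode"
    write_site_xml "$conf/core-site.xml" fs.defaultFS hdfs://localhost:9000
    write_site_xml "$conf/hdfs-site.xml" \
        dfs.replication 1 \
        dfs.namenode.name.dir "file://$data/hdfs/namenode" \
        dfs.datanode.data.dir "file://$data/hdfs/datanode"
    write_site_xml "$conf/mapred-site.xml" mapreduce.framework.name yarn
    write_site_xml "$conf/yarn-site.xml" yarn.nodemanager.aux-services mapreduce_shuffle
}
```

Run it as the hadoop user, for example: configure_single_node /opt/hadoop/etc/hadoop /opt/hadoop/hadoopdata.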

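Hadoop is now installed and configured. It's time to initialize the HDFS file system by formatting the NameNode:

hdfs namenode -format

Run this command only once. Formatting an existing NameNode erases the HDFS metadata, which makes any data already stored in HDFS inaccessible. You should see output similar to the following: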

WARNING: /opt/hadoop/logs does not exist. Creating.
2018-06-03 19:12:19,228 INFO namenode.NameNode: STARTUP_MSG:
/************************************************************
STARTUP_MSG: Starting NameNode
STARTUP_MSG: host = Node2/127.0.1.1
STARTUP_MSG: args = [-format]
STARTUP_MSG: version = 3.1.0
...
...
2018-06-03 19:12:28,692 INFO namenode.FSImageFormatProtobuf: Image file /opt/hadoop/hadoopdata/hdfs/namenode/current/fsimage.ckpt_0000000000000000000 of size 391 bytes saved in 0 seconds .
2018-06-03 19:12:28,790 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0
2018-06-03 19:12:28,896 INFO namenode.NameNode: SHUTDOWN_MSG:
/************************************************************
SHUTDOWN_MSG: Shutting down NameNode at Node2/127.0.1.1
************************************************************/

Next, change to the /opt/hadoop/sbin directory and start the HDFS daemons using the following commands:

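cd /opt/hadoop/sbin/
start-dfs.sh

Output:

Starting namenodes on [localhost]
Starting datanodes
Starting secondary namenodes [Node2]
2018-06-03 19:16:13,510 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

The NativeCodeLoader warning only means that Hadoop is using its built-in Java classes instead of the native library, and it can be ignored here.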

Next, start the YARN daemons using the following command:

start-yarn.sh

Output:

Starting resourcemanager
Starting nodemanagers

Next, check that the Hadoop daemons are running using the following command:

jps

Output:

20961 NameNode
22133 Jps
21253 SecondaryNameNode
21749 NodeManager
21624 ResourceManager
21083 DataNode

All five Hadoop daemons are running. It's time to access the different Hadoop services through a web browser.
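You can script the jps check above, for example to confirm after a reboot that all five daemons came up. This is a minimal sketch. The name check_hadoop_daemons is a hypothetical helper. It reads jps output on standard input, prints each expected daemon it cannot find, and returns a non-zero status if any are missing.

```shell
# Sketch: check `jps` output for the five daemons of this single-node setup.
# check_hadoop_daemons is a hypothetical helper; it reads jps output on stdin.
check_hadoop_daemons() {
    # Keep only the class-name column (jps prints "PID ClassName").
    running=$(awk '{print $2}')
    missing=0
    for daemon in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
        # -x matches whole lines, so "NameNode" does not match "SecondaryNameNode".
        if ! printf '%s\n' "$running" | grep -qx "$daemon"; then
            printf 'missing: %s\n' "$daemon"
            missing=1
        fi
    done
    return "$missing"
}
```

For example: jps | check_hadoop_daemons && echo "all Hadoop daemons are running".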

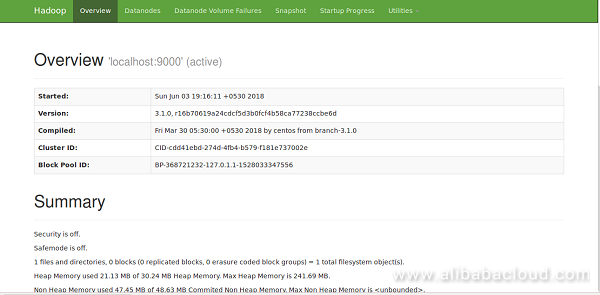

By default, Hadoop NameNode service started on port 9870. You can access it by visiting the URL http://192.168.0.104:9870 in your web browser. You should see the following image:

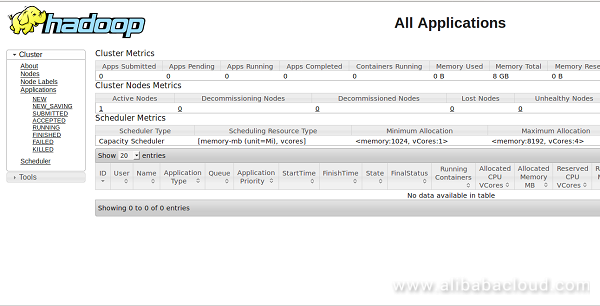

You can get information about Hadoop cluster by visiting the URL http://192.168.0.104:8088 as below:

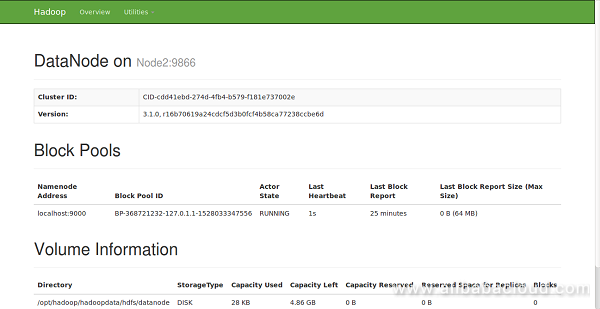

You can get details about secondary namenode by visiting the URL http://192.168.0.104:9864 as below:

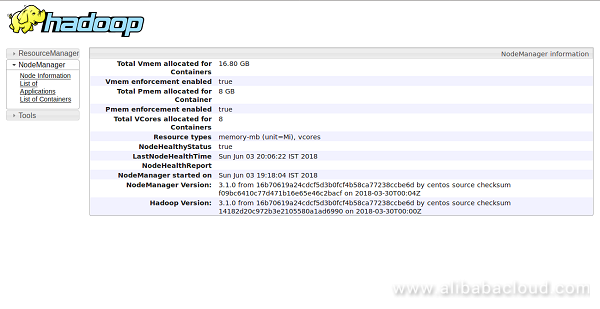

You can also get details about DataNode by visiting the URL http://192.168.0.104:8042/node in your web browser as below:

To test Hadoop file system cluster. Create a directory in the HDFS file system and copy a file from local file system to HDFS storage.

First, create a directory in HDFS file system using the following command:

hdfs dfs -mkdir /hadooptestNext, copy a file named .bashrc from the local file system to HDFS storage using the following command:

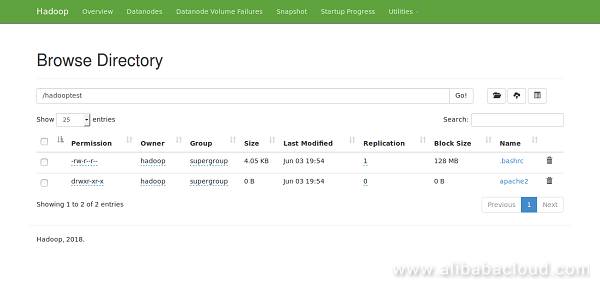

hdfs dfs -put .bashrc /hadooptestNow, verify the hadoop distributed file system by visiting the URL http://192.168.0.104:9870/explorer.html#/hadooptest in your web browser. You will be redirected to the following page:
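If you only have SSH access to the server, you can also check the web interfaces and the uploaded file from the command line with curl. The following is a minimal sketch under these assumptions:

- The ports are the Hadoop 3.x defaults: 9870 for the NameNode, 8088 for the ResourceManager, 9864 for the DataNode, 9868 for the Secondary NameNode, and 8042 for the NodeManager.
- curl is installed.
- hadoop_ui_url, webhdfs_list_url, and probe_ui are hypothetical helper names.

The file listing uses the WebHDFS REST API (op=LISTSTATUS), which the NameNode serves on the same port as its web interface.

```shell
# Sketch: check the Hadoop web endpoints with curl instead of a browser.
# Ports are Hadoop 3.x defaults; hadoop_ui_url, webhdfs_list_url and
# probe_ui are hypothetical helper names.

# hadoop_ui_url SERVICE HOST: print the default web UI URL for SERVICE.
hadoop_ui_url() {
    case $1 in
        namenode)          echo "http://$2:9870/" ;;
        resourcemanager)   echo "http://$2:8088/" ;;
        datanode)          echo "http://$2:9864/" ;;
        secondarynamenode) echo "http://$2:9868/" ;;
        nodemanager)       echo "http://$2:8042/node" ;;
        *) echo "unknown service: $1" >&2; return 1 ;;
    esac
}

# webhdfs_list_url HOST HDFS_PATH: print the WebHDFS REST URL that lists
# the contents of HDFS_PATH (op=LISTSTATUS) via the NameNode on port 9870.
webhdfs_list_url() {
    echo "http://$1:9870/webhdfs/v1$2?op=LISTSTATUS"
}

# probe_ui HOST: print the HTTP status code each web UI returns
# (000 means curl could not connect at all).
probe_ui() {
    for svc in namenode resourcemanager datanode secondarynamenode nodemanager; do
        url=$(hadoop_ui_url "$svc" "$1")
        code=$(curl -s -o /dev/null -w '%{http_code}' "$url" || true)
        printf '%-18s %s %s\n' "$svc" "$code" "$url"
    done
}
```

For example, probe_ui 192.168.0.104 prints one status code per service. The command curl -s "$(webhdfs_list_url 192.168.0.104 /hadooptest)" should return a JSON listing that includes .bashrc.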

Now, copy the hadooptest directory from the Hadoop distributed file system to the hadoop user's home directory and list its contents using the following commands:

hdfs dfs -get /hadooptest ~/hadooptest
ls -la ~/hadooptest

Output:

drwxr-xr-x 3 hadoop hadoop 4096 Jun 3 20:05 .
drwxr-xr-x 6 hadoop hadoop 4096 Jun 3 20:05 ..
-rw-r--r-- 1 hadoop hadoop 4152 Jun 3 20:05 .bashrc

By default, the Hadoop services do not start at boot time. You can make them start automatically at boot by editing the /etc/rc.local file as the root user:

nano /etc/rc.local

Add the following lines above the final exit 0 line, so that the end of the file looks like this:

su - hadoop -c "/opt/hadoop/sbin/start-dfs.sh"
su - hadoop -c "/opt/hadoop/sbin/start-yarn.sh"
exit 0

Save and close the file when you are finished.

The next time you restart your system, the Hadoop services will start automatically.
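If you manage several servers, a script can apply the rc.local edit instead of doing it by hand. This is a minimal sketch. The name add_before_exit0 is a hypothetical helper. It inserts a line just above the first exit 0 line, or appends it if there is none. It skips lines that are already present, so running it twice does not add the start commands twice. It must run as root to modify /etc/rc.local.

```shell
# Sketch: add a command to an rc.local-style file just above its "exit 0"
# line, skipping it if the exact line is already present.
# add_before_exit0 is a hypothetical helper; run it as root for /etc/rc.local.

# add_before_exit0 FILE LINE
add_before_exit0() {
    file=$1
    line=$2
    # Already present: nothing to do, so repeated runs never duplicate it.
    grep -qxF -e "$line" "$file" && return 0
    tmp=$(mktemp) || return 1
    # Print LINE before the first "exit 0"; append it if there is none.
    # Note: awk -v interprets backslash escapes in LINE.
    if awk -v new="$line" '
        !done && $0 == "exit 0" { print new; done = 1 }
        { print }
        END { if (!done) print new }
    ' "$file" > "$tmp"; then
        # Copy back with cat (not mv) so FILE keeps its owner and executable bit.
        cat "$tmp" > "$file"
    fi
    rm -f "$tmp"
}
```

For example: add_before_exit0 /etc/rc.local 'su - hadoop -c "/opt/hadoop/sbin/start-dfs.sh"', followed by the same call for start-yarn.sh.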

Resource Orchestration Service (ROS) provides developers and system managers with a simple way to create and manage their Alibaba Cloud resources. With ROS, you can use text files in JSON format to define the Alibaba Cloud resources you need, the dependencies between them, and their configuration details.

ROS offers templates for resource aggregation and blueprint architecture that can be treated as code for development, testing, and version control. Templates can be used to deliver Alibaba Cloud resources and system architectures. Based on the templates, API, and SDK, you can conveniently manage your Alibaba Cloud resources through code. ROS is free of charge for Alibaba Cloud users.
