This article shows how to use the Data Integration feature in the Alibaba Cloud DataWorks console to extract JSON fields from MongoDB into MaxCompute.

First, upload the data to your MongoDB database. This example uses Alibaba Cloud's ApsaraDB for MongoDB. Because the instance's network type is VPC, MongoDB needs a public IP address to communicate with the default resource group of DataWorks. The test data is as follows:

{

"store": {

"book": [

{

"category": "reference",

"author": "Nigel Rees",

"title": "Sayings of the Century",

"price": 8.95

},

{

"category": "fiction",

"author": "Evelyn Waugh",

"title": "Sword of Honour",

"price": 12.99

},

{

"category": "fiction",

"author": "J. R. R. Tolkien",

"title": "The Lord of the Rings",

"isbn": "0-395-19395-8",

"price": 22.99

}

],

"bicycle": {

"color": "red",

"price": 19.95

}

},

"expensive": 10

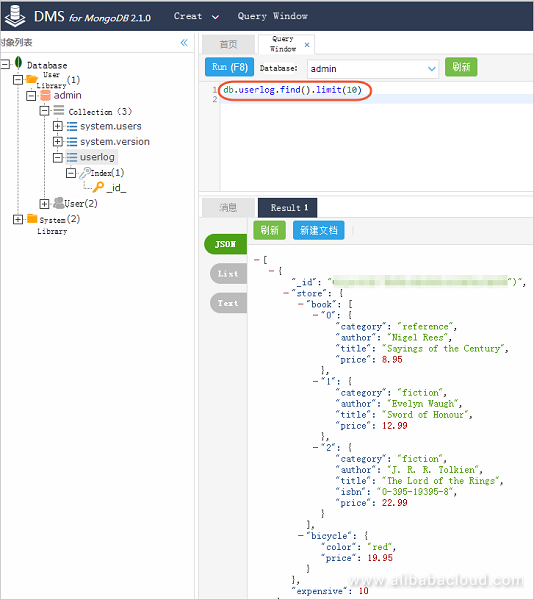

}Log on to the DMS for MongoDB console. In this example, the database is admin and the collection is userlog. You can run the db.userlog.find().limit(10) command in the Query Window to view the uploaded data, as shown in the following figure.

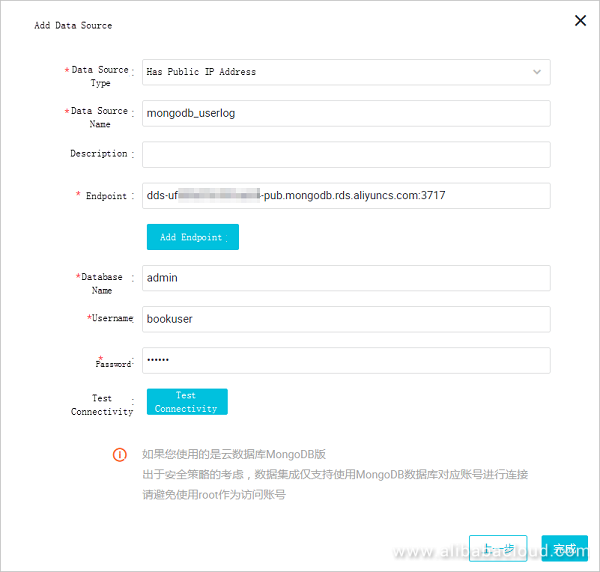
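Before configuring the synchronization task, it can help to list which dotted field paths exist in the test document, since a path such as store.bicycle.color is what the reader configuration later refers to. The following is a minimal Python sketch, not part of DataWorks; the helper name `leaf_paths` is made up for illustration, the document is a trimmed copy of the test data, and arrays are treated as a single leaf because array handling is not covered in this article.

```python
import json

# Trimmed copy of the test document from this article (one book kept for brevity).
TEST_DOCUMENT = """
{
  "store": {
    "book": [
      {"category": "reference", "author": "Nigel Rees",
       "title": "Sayings of the Century", "price": 8.95}
    ],
    "bicycle": {"color": "red", "price": 19.95}
  },
  "expensive": 10
}
"""


def leaf_paths(node, prefix=""):
    """Yield (dotted_path, value) for every value that is not a nested object.

    Arrays are reported as one leaf; descending into them is out of scope
    for this sketch.
    """
    if isinstance(node, dict):
        for key, value in node.items():
            child = f"{prefix}.{key}" if prefix else key
            yield from leaf_paths(value, child)
    else:
        yield prefix, node


doc = json.loads(TEST_DOCUMENT)
paths = dict(leaf_paths(doc))
for path, value in paths.items():
    print(path, "=>", value)
```

The path store.bicycle.color resolves to the value red, which is the field extracted in the rest of this example.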

Before adding the data source in DataWorks, create a user in the database. In this example, run the `db.createUser({user:"bookuser",pwd:"123456",roles:["root"]})` command to create a user named bookuser. The password of the user is 123456, and the role is root.

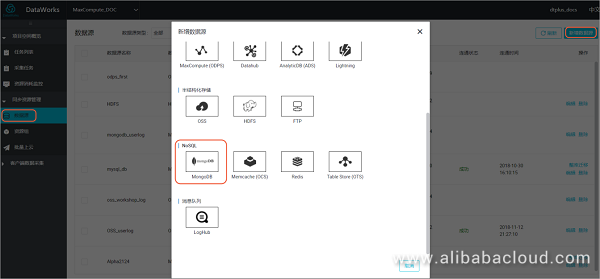

In the DataWorks console, go to the Data Integration page and add a MongoDB data source.

For specific parameters, see the following figure. Click Finish after the data source connectivity test is successful. In this example, the MongoDB network type is VPC. Therefore, set the Data Source Type to Has Public IP Address.

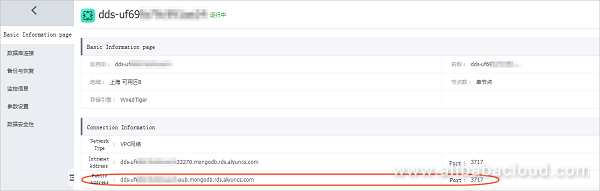

To retrieve the endpoint and the port number, log on to the ApsaraDB for MongoDB console and click an instance, as shown in the following figure.

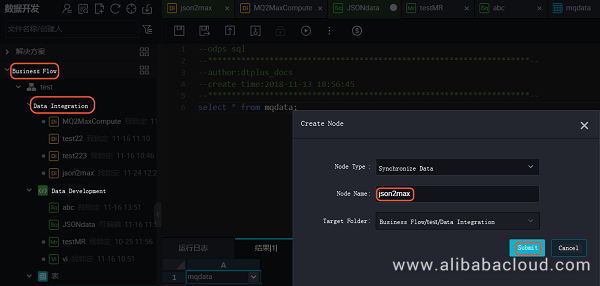
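If you want to check the endpoint, port, and user from your own machine before filling in the DataWorks form, one option is to assemble a standard MongoDB connection URI and pass it to a MongoDB client. The sketch below only builds the string. The function name `mongodb_uri`, the host `example-host`, and the port 3717 are placeholders chosen for illustration, so substitute the values shown in your console.

```python
from urllib.parse import quote_plus


def mongodb_uri(user, password, host, port, database="admin"):
    """Build a mongodb:// URI, percent-encoding the credentials.

    The database defaults to admin because the example user in this
    article is created in the admin database.
    """
    return (
        f"mongodb://{quote_plus(user)}:{quote_plus(password)}"
        f"@{host}:{port}/{database}"
    )


# Placeholder endpoint and port; replace them with the values from the console.
uri = mongodb_uri("bookuser", "123456", "example-host", 3717)
print(uri)
```

Percent-encoding matters when a password contains characters such as @ or :, which would otherwise be misread as URI separators.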

In the DataWorks console, create a data synchronization node.

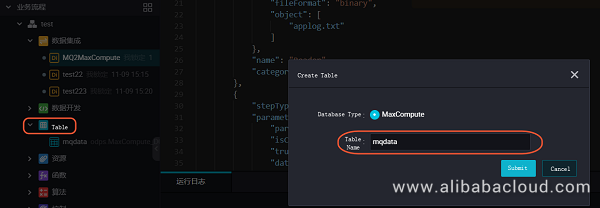

Meanwhile, create a table named mqdata in DataWorks to store JSON data.

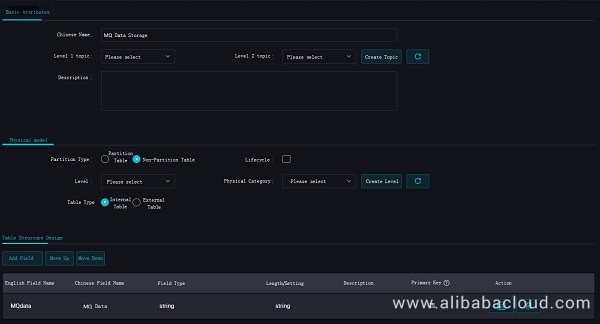

You can set the table parameters on the graphical interface. In this example, the mqdata table has only one column, named mqdata, whose data type is string.

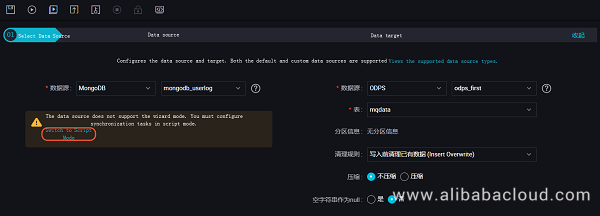

After creating the table, set the data synchronization task parameters on the graphical interface, as shown in the following figure. Set the target data source to odps_first and the target table to mqdata. Set the source data source type to MongoDB and select mongodb_userlog. After completing the preceding configuration, click Switch to Script Mode.

The following shows the example code in script mode:

{

"type": "job",

"steps": [

{

"stepType": "mongodb",

"parameter": {

"datasource": "mongodb_userlog",

//Indicates the data source name.

"column": [

{

"name": "store.bicycle.color", //Indicates the JSON field path. In this example, the value of color is extracted.

"type": "document.document.string" //Indicates the number of fields in this line must be consistent with that in the preceding line (the name line). If the JSON field is a level 1 field, such as the "expensive" field in this example, enter "string" for this field.

}

],

"collectionName // Collection name": "userlog"

},

"name": "Reader",

"category": "reader"

},

{

"stepType": "odps",

"parameter": {

"partition": "",

"isCompress": false,

"truncate": true,

"datasource": "odps_first",

"column": [

//Indicates the table column name in MaxCompute, namely "mqdata".

],

"emptyAsNull": false,

"table": "mqdata"

},

"name": "Writer",

"category": "writer"

}

],

"version": "2.0",

"order": {

"hops": [

{

"from": "Reader",

"to": "Writer"

}

]

},

"setting": {

"errorLimit": {

"record": ""

},

"speed": {

"concurrent": 2,

"throttle": false,

"dmu": 1

}

}

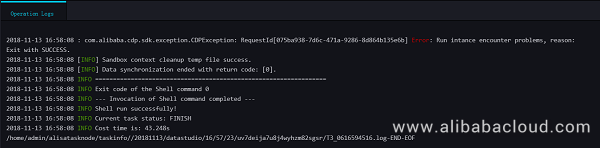

}After completing the preceding configuration, click Run. If the operation is successful, the following log is displayed.

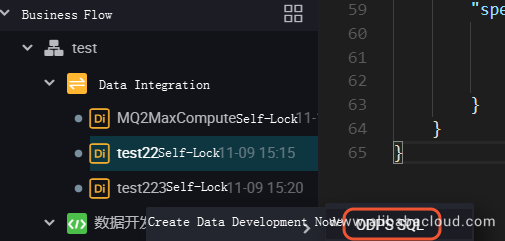
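The rule that links a reader column's name to its type (one level in type for each level in name) is easy to get wrong by hand when paths are deep. The following Python sketch derives the type string from the field path. The helper name `reader_column` is hypothetical and is not a DataWorks API, and leaf types other than string are an assumption, because this article only demonstrates string.

```python
def reader_column(path, leaf_type="string"):
    """Build one reader column entry for a dotted JSON field path.

    Every parent level is a nested document, so it contributes one
    "document" segment; only the last segment carries the leaf type.
    """
    segments = path.split(".")
    if not all(segments):
        raise ValueError(f"empty segment in path: {path!r}")
    type_parts = ["document"] * (len(segments) - 1) + [leaf_type]
    return {"name": path, "type": ".".join(type_parts)}


nested = reader_column("store.bicycle.color")
top_level = reader_column("expensive")
print(nested)
print(top_level)
```

For store.bicycle.color this produces the type document.document.string used in the script above, and for the level 1 field expensive it produces string.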

Create an ODPS SQL node in your Business Flow.

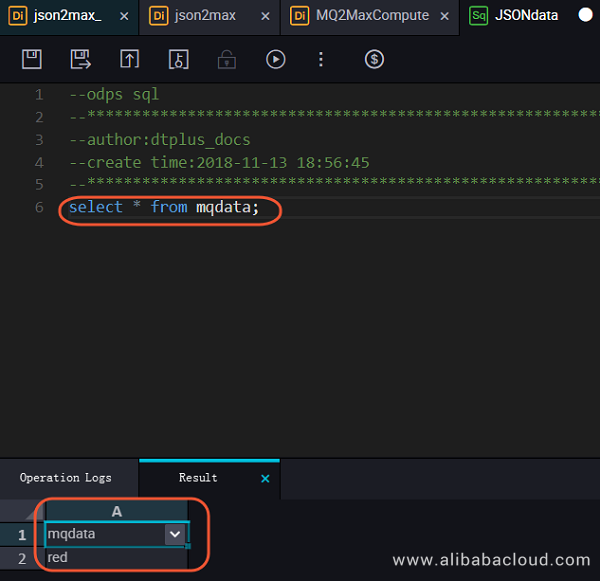

Enter the `SELECT * FROM mqdata;` statement to view the data in the mqdata table. Alternatively, you can run the same statement on the MaxCompute client.

To learn more about Data Migration on Alibaba Cloud MaxCompute, visit https://www.alibabacloud.com/help/doc-detail/98009.html
