By Wang Xining, Senior Alibaba Cloud Technical Expert

This article is excerpted from Technical Analysis and Practice of Istio Service Mesh, written by Wang Xining, a senior Alibaba Cloud technical expert. It describes how to manage multi-cluster deployments with Istio and illustrates how the hybrid deployment capabilities of a service mesh support multi-cloud and multi-cluster environments.

In a topology with multiple control planes, each Kubernetes cluster runs its own identically configured Istio control plane, and each control plane manages only the service endpoints in its own cluster. By using Istio gateways, a shared root certificate authority (CA), and service entries (ServiceEntry), you can configure multiple clusters as a single logical service mesh. This approach has no special network requirements and is therefore often considered the simplest option when no general network connectivity is available between Kubernetes clusters.

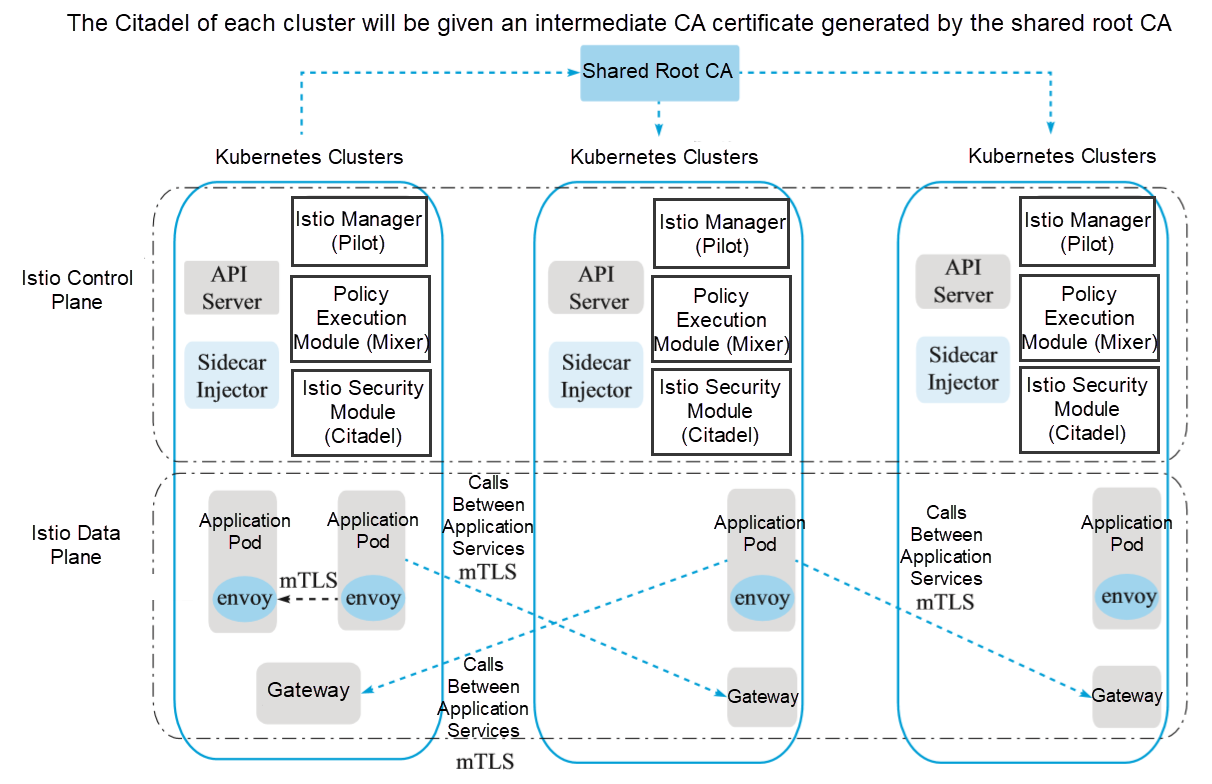

In this topology, cross-cluster communication requires a mutual TLS (two-way TLS) connection between services. To enable mutual TLS between clusters, the Citadel of each cluster holds an intermediate CA certificate generated by the shared root CA, as shown in the following figure.

(Multiple control planes)

The shared root CA generates an intermediate CA certificate for the Citadel of each cluster, which enables mutual TLS communication across clusters. For illustration, this example uses the same sample root CA certificate for both clusters. The sample certificates are located in the samples/certs directory of the Istio installation package. In a real deployment, you would typically use a different CA certificate for each cluster, with all CA certificates signed by a common root CA.
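
To make the relationship between the root CA and a per-cluster intermediate CA concrete, the following sketch builds such a chain with the openssl CLI. It is not how the files in samples/certs were produced. The subject names, key sizes, lifetimes, and the ca.cnf helper file are assumptions chosen for illustration, and only the output file names match the ones used in this article.

```shell
#!/bin/sh
# Sketch: create a root CA and one intermediate CA signed by it.
# Requires the openssl CLI; subject names and lifetimes are placeholders.
set -e
workdir="$(mktemp -d)"
cd "$workdir"

# Minimal config so the commands do not depend on a system openssl.cnf.
cat > ca.cnf <<'EOF'
[req]
distinguished_name = dn
prompt = no
[dn]
O = Example Org
[v3_ca]
basicConstraints = critical, CA:TRUE
keyUsage = critical, keyCertSign, cRLSign
EOF

# Root CA: a self-signed certificate that every cluster trusts.
openssl req -x509 -new -nodes -newkey rsa:2048 -days 365 \
  -config ca.cnf -extensions v3_ca -subj "/O=Example Org/CN=Root CA" \
  -keyout root-key.pem -out root-cert.pem 2>/dev/null

# Intermediate CA for one cluster: key and CSR, then signed by the root.
openssl req -new -nodes -newkey rsa:2048 \
  -config ca.cnf -subj "/O=Example Org/CN=Intermediate CA cluster1" \
  -keyout ca-key.pem -out ca.csr 2>/dev/null
openssl x509 -req -in ca.csr -days 365 \
  -CA root-cert.pem -CAkey root-key.pem -CAcreateserial \
  -extfile ca.cnf -extensions v3_ca -out ca-cert.pem 2>/dev/null

# Certificate chain: intermediate first, then root.
cat ca-cert.pem root-cert.pem > cert-chain.pem

# Confirm that the intermediate certificate chains to the root.
openssl verify -CAfile root-cert.pem ca-cert.pem
```

Repeating the intermediate CA steps with a different subject for each cluster would give every Citadel its own signing certificate while keeping a single root of trust. The final command should report OK for ca-cert.pem.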

Next, complete the following steps in each Kubernetes cluster to deploy the same Istio control plane configuration in all clusters.

Run the following commands to create a Kubernetes secret for the generated CA certificates:

    kubectl create namespace istio-system
    kubectl create secret generic cacerts -n istio-system \
        --from-file=samples/certs/ca-cert.pem \
        --from-file=samples/certs/ca-key.pem \
        --from-file=samples/certs/root-cert.pem \
        --from-file=samples/certs/cert-chain.pem

Install the Istio custom resource definitions (CRDs) and wait a few seconds for them to be committed to the Kubernetes API server:

    for i in install/kubernetes/helm/istio-init/files/crd*yaml; do kubectl apply -f "$i"; done

Next, deploy the Istio control plane. If the Helm dependencies are missing or out of date, you can update them by running the "helm dep update" command. Note: Because istio-cni is not used here, you can temporarily remove it from the dependencies in the requirements.yaml file before performing the update. Then, run the following commands to render and apply the control plane configuration:

    helm template install/kubernetes/helm/istio --name istio --namespace istio-system \
        -f install/kubernetes/helm/istio/values-istio-multicluster-gateways.yaml > ./istio.yaml
    kubectl apply -f ./istio.yaml

Make sure that the preceding steps complete successfully in each Kubernetes cluster. You only need to run the helm command once to generate istio.yaml. The same file can then be applied to every cluster.
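
Because the rendered manifest is identical for every cluster, the apply step can be scripted as a loop over kubeconfig contexts. The following is a minimal sketch under stated assumptions: the context names cluster1 and cluster2 are hypothetical, and the KUBECTL variable is a helper introduced here (not part of Istio or kubectl) so that the loop can be dry-run by substituting echo.

```shell
#!/bin/sh
# Apply one rendered Istio manifest to several clusters.
# Assumptions: the kubeconfig context names are hypothetical, and
# KUBECTL is a helper variable introduced here to allow a dry run.
KUBECTL="${KUBECTL:-kubectl}"

apply_to_clusters() {
  manifest="$1"
  shift
  for ctx in "$@"; do
    # --context selects which cluster this kubectl call targets.
    $KUBECTL --context "$ctx" apply -f "$manifest" || return 1
  done
}

# Dry run: print the commands instead of executing them.
KUBECTL="echo kubectl"
apply_to_clusters ./istio.yaml cluster1 cluster2
```

Setting KUBECTL back to kubectl (or leaving it unset) would run the same loop against the real clusters.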

Providing DNS resolution for services in remote clusters allows existing applications to run unmodified, because applications usually expect to resolve a service by its DNS name and then access the resolved IP address. Istio itself does not use DNS to route requests between services. Services in the same Kubernetes cluster share a common DNS suffix, for example, svc.cluster.local, and Kubernetes DNS resolves these names. To provide a similar setup for services in remote clusters, name them in the name.namespace.global format, where name and namespace are those of the remote service.
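
As a small illustration of this naming convention, the following sketch derives the .global name of a service. The function names global_host and local_to_global are hypothetical helpers introduced here and are not part of Istio or Kubernetes.

```shell
#!/bin/sh
# Build the name.namespace.global host name for a remote service.
global_host() {
  printf '%s.%s.global\n' "$1" "$2"
}

# Convert a cluster-local name such as httpbin.app2.svc.cluster.local
# into its .global counterpart by swapping the DNS suffix.
local_to_global() {
  case "$1" in
    *.svc.cluster.local) printf '%s.global\n' "${1%.svc.cluster.local}" ;;
    *) echo "not a cluster-local service name: $1" >&2; return 1 ;;
  esac
}

global_host httpbin app2                              # httpbin.app2.global
local_to_global helloworld.hello.svc.cluster.local   # helloworld.hello.global
```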

The Istio installation package includes a CoreDNS server, deployed as the istiocoredns service, that resolves these names. To use it, configure the DNS service of Kubernetes to forward queries for the .global domain to this CoreDNS service, which then acts as the DNS server for the .global DNS domain.

For clusters that use kube-dns, create the following ConfigMap or update the existing one:

    kubectl apply -f - <<EOF
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: kube-dns
      namespace: kube-system
    data:
      stubDomains: |
        {"global": ["$(kubectl get svc -n istio-system istiocoredns -o jsonpath={.spec.clusterIP})"]}
    EOF

For clusters that use CoreDNS, create the following ConfigMap or update the existing one:

    kubectl apply -f - <<EOF
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: coredns
      namespace: kube-system
    data:
      Corefile: |
        .:53 {
            errors
            health
            kubernetes cluster.local in-addr.arpa ip6.arpa {
               pods insecure
               upstream
               fallthrough in-addr.arpa ip6.arpa
            }
            prometheus :9153
            proxy . /etc/resolv.conf
            cache 30
            reload
            loadbalance
        }
        global:53 {
            errors
            cache 30
            proxy . $(kubectl get svc -n istio-system istiocoredns -o jsonpath={.spec.clusterIP})
        }
    EOF

To demonstrate cross-cluster access, you can deploy the sleep application service in one Kubernetes cluster and the httpbin application service in the other cluster. After the deployment, verify whether the sleep application can call the httpbin service in the remote cluster.

Deploy the sleep service to the first cluster (cluster1) by running the following commands:

    kubectl create namespace app1
    kubectl label namespace app1 istio-injection=enabled
    kubectl apply -n app1 -f samples/sleep/sleep.yaml
    export SLEEP_POD=$(kubectl get -n app1 pod -l app=sleep -o jsonpath={.items..metadata.name})

Deploy the httpbin service to the other cluster (cluster2) by running the following commands:

    kubectl create namespace app2
    kubectl label namespace app2 istio-injection=enabled
    kubectl apply -n app2 -f samples/httpbin/httpbin.yaml

Obtain the gateway address of cluster2 by running the following commands:

    export CLUSTER2_GW_ADDR=$(kubectl get svc --selector=app=istio-ingressgateway \
        -n istio-system -o jsonpath="{.items[0].status.loadBalancer.ingress[0].ip}")

To allow the sleep service in cluster1 to access the httpbin service in cluster2, create a service entry (ServiceEntry) for the httpbin service in cluster1. The host name of the ServiceEntry must have the form name.namespace.global, where name and namespace are the name and namespace of the remote service in cluster2.

For DNS resolution of services under the *.global domain, you need to assign an IP address to each service and ensure that each service in the .global DNS domain has a unique IP address within the cluster. These IP addresses are not routable outside of a pod. This example uses addresses from the 127.255.0.0/16 CIDR block to avoid conflicts with other IP addresses. Application traffic to these IP addresses is captured by the sidecar proxy and routed to the appropriate remote service.
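
One simple way to keep these addresses unique is to derive each one from a running index. The following sketch shows such a scheme. It is an illustrative assumption rather than anything Istio provides, and the function names global_ip and assign_global_ips are hypothetical. The article's own examples pick 127.255.0.2 and 127.255.0.8 by hand, which works equally well as long as no address is reused within a cluster.

```shell
#!/bin/sh
# Map a 1-based index to an address in 127.255.0.0/16.
# Index 1 -> 127.255.0.1, index 256 -> 127.255.1.0, and so on.
global_ip() {
  n="$1"
  if [ "$n" -lt 1 ] || [ "$n" -gt 65534 ]; then
    echo "index out of range: $n" >&2
    return 1
  fi
  printf '127.255.%d.%d\n' $((n / 256)) $((n % 256))
}

# Assign one address per remote service, in the order given.
assign_global_ips() {
  i=1
  for host in "$@"; do
    printf '%s %s\n' "$host" "$(global_ip "$i")"
    i=$((i + 1))
  done
}

assign_global_ips httpbin.app2.global helloworld.hello.global
```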

Create a ServiceEntry for the httpbin service in cluster1 by running the following commands:

    kubectl apply -n app1 -f - <<EOF
    apiVersion: networking.istio.io/v1alpha3
    kind: ServiceEntry
    metadata:
      name: httpbin-app2
    spec:
      hosts:
      # must be of form name.namespace.global
      - httpbin.app2.global
      # Treat remote cluster services as part of the service mesh
      # as all clusters in the service mesh share the same root of trust.
      location: MESH_INTERNAL
      ports:
      - name: http1
        number: 8000
        protocol: http
      resolution: DNS
      addresses:
      # the IP address to which httpbin.app2.global will resolve
      # must be unique for each remote service, within a given cluster.
      # This address need not be routable. Traffic for this IP will be captured
      # by the sidecar and routed appropriately.
      - 127.255.0.2
      endpoints:
      # This is the routable address of the ingress gateway in cluster2 that
      # sits in front of the httpbin.app2 service. Traffic from the sidecar will be
      # routed to this address.
      - address: ${CLUSTER2_GW_ADDR}
        ports:
          http1: 15443 # Do not change this port value
    EOF

With this configuration, all traffic in cluster1 destined for httpbin.app2.global, on any port, is routed to port 15443 of the cluster2 ingress gateway over a mutual TLS connection.

The gateway on port 15443 is a special SNI-aware Envoy proxy that was preconfigured and installed as part of the multi-cluster Istio installation described earlier. Traffic that enters port 15443 is load balanced among the pods of the appropriate internal service in the target cluster.
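
Since every remote service follows the same pattern (a .global host, a unique virtual IP address, and the remote gateway on port 15443), the manifest can be generated from a template. The following sketch assumes a hypothetical helper named render_service_entry and uses 192.0.2.10, an address reserved for documentation, in place of the real gateway address of cluster2.

```shell
#!/bin/sh
# Render a ServiceEntry for a remote service that is reachable through
# the remote cluster's ingress gateway on port 15443.
# Arguments: name namespace port virtual-ip gateway-address
render_service_entry() {
  cat <<EOF
apiVersion: networking.istio.io/v1alpha3
kind: ServiceEntry
metadata:
  name: $1-$2
spec:
  hosts:
  - $1.$2.global
  location: MESH_INTERNAL
  ports:
  - name: http1
    number: $3
    protocol: http
  resolution: DNS
  addresses:
  - $4
  endpoints:
  - address: $5
    ports:
      http1: 15443
EOF
}

# 192.0.2.10 is a placeholder for the gateway address of cluster2.
render_service_entry httpbin app2 8000 127.255.0.2 192.0.2.10
```

Piping the output to kubectl apply -n app1 -f - in cluster1 would be one way to apply it, with ${CLUSTER2_GW_ADDR} passed as the last argument instead of the placeholder.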

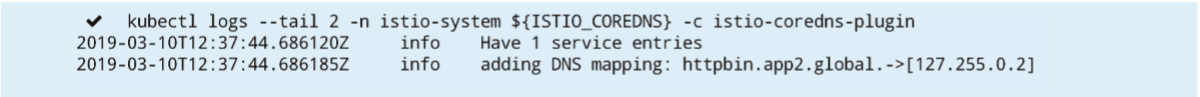

In cluster1, run the following commands to view the logs of the istiocoredns pod. The output shows that the domain mapping for the ServiceEntry has been loaded:

    export ISTIO_COREDNS=$(kubectl get -n istio-system po -l app=istiocoredns -o jsonpath={.items..metadata.name})
    kubectl logs --tail 2 -n istio-system ${ISTIO_COREDNS} -c istio-coredns-plugin

The execution output is as follows:

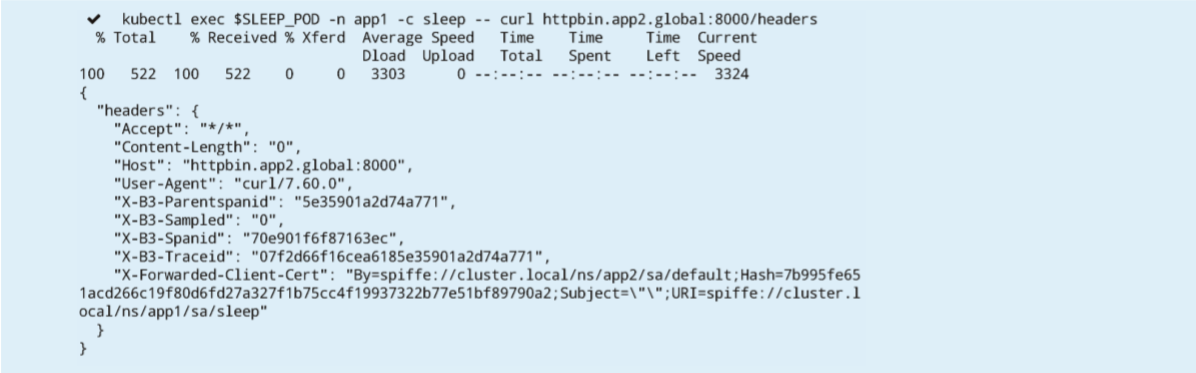

Verify whether the sleep service in cluster1 can call the httpbin service in cluster2 by running the following commands in cluster1:

    kubectl exec $SLEEP_POD -n app1 -c sleep -- curl httpbin.app2.global:8000/headers

If the call succeeds, httpbin returns the request headers that it received, which confirms that the request reached cluster2.

Now, the connection between cluster1 and cluster2 has been established under the configuration of multiple control planes.

The preceding topics covered many Istio features, such as basic routing, that are easy to implement in a single Kubernetes cluster. However, many real-world microservice-based applications are not that simple: their services are distributed across and run in multiple clusters. The question is whether these Istio features remain just as easy to use in such complex environments.

The following example shows how Istio's traffic management works in a multi-cluster mesh that uses a topology with multiple control planes.

First, deploy the helloworld service v1 to cluster1 by running the following commands:

    kubectl create namespace hello
    kubectl label namespace hello istio-injection=enabled
    kubectl apply -n hello -f samples/sleep/sleep.yaml
    kubectl apply -n hello -f samples/helloworld/service.yaml
    kubectl apply -n hello -f samples/helloworld/helloworld.yaml -l version=v1

Then, deploy the helloworld services v2 and v3 to cluster2 by running the following commands:

    kubectl create namespace hello
    kubectl label namespace hello istio-injection=enabled
    kubectl apply -n hello -f samples/helloworld/service.yaml
    kubectl apply -n hello -f samples/helloworld/helloworld.yaml -l version=v2
    kubectl apply -n hello -f samples/helloworld/helloworld.yaml -l version=v3

As mentioned in the preceding topics, in a topology with multiple control planes, you need to use DNS names suffixed with .global to access remote services.

In this example, the DNS name is helloworld.hello.global, and we need to create a service entry (ServiceEntry) and a destination rule (DestinationRule) in cluster1. The ServiceEntry uses the gateway of cluster2 as the endpoint address to access the service.

Run the following commands to create a service entry (ServiceEntry) and a destination rule (DestinationRule) for the helloworld service in cluster1:

    kubectl apply -n hello -f - <<EOF
    apiVersion: networking.istio.io/v1alpha3
    kind: ServiceEntry
    metadata:
      name: helloworld
    spec:
      hosts:
      - helloworld.hello.global
      location: MESH_INTERNAL
      ports:
      - name: http1
        number: 5000
        protocol: http
      resolution: DNS
      addresses:
      - 127.255.0.8
      endpoints:
      - address: ${CLUSTER2_GW_ADDR}
        labels:
          cluster: cluster2
        ports:
          http1: 15443 # Do not change this port value
    ---
    apiVersion: networking.istio.io/v1alpha3
    kind: DestinationRule
    metadata:
      name: helloworld-global
    spec:
      host: helloworld.hello.global
      trafficPolicy:
        tls:
          mode: ISTIO_MUTUAL
      subsets:
      - name: v2
        labels:
          cluster: cluster2
      - name: v3
        labels:
          cluster: cluster2
    EOF

Create destination rules on both clusters. First, create a destination rule for the subset v1 in cluster1 by running the following commands:

    kubectl apply -n hello -f - <<EOF
    apiVersion: networking.istio.io/v1alpha3
    kind: DestinationRule
    metadata:
      name: helloworld
    spec:
      host: helloworld.hello.svc.cluster.local
      trafficPolicy:
        tls:
          mode: ISTIO_MUTUAL
      subsets:
      - name: v1
        labels:
          version: v1
    EOF

Create a destination rule for subsets v2 and v3 in cluster2 by running the following commands:

    kubectl apply -n hello -f - <<EOF
    apiVersion: networking.istio.io/v1alpha3
    kind: DestinationRule
    metadata:
      name: helloworld
    spec:
      host: helloworld.hello.svc.cluster.local
      trafficPolicy:
        tls:
          mode: ISTIO_MUTUAL
      subsets:
      - name: v2
        labels:
          version: v2
      - name: v3
        labels:
          version: v3
    EOF

Create a virtual service to route traffic.

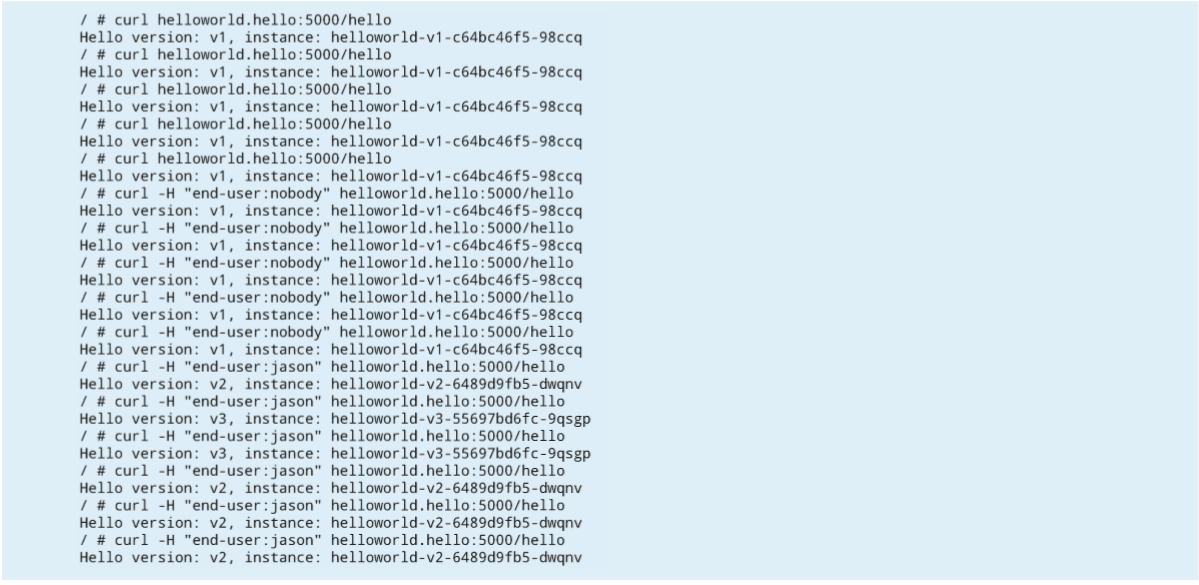

The following virtual service routes requests from the user jason to v2 and v3 in cluster2, with 70% of the requests going to v2 and 30% to v3. Requests from all other users to helloworld are routed to v1 in cluster1.

    kubectl apply -n hello -f - <<EOF
    apiVersion: networking.istio.io/v1alpha3
    kind: VirtualService
    metadata:
      name: helloworld
    spec:
      hosts:
      - helloworld.hello.svc.cluster.local
      - helloworld.hello.global
      http:
      - match:
        - headers:
            end-user:
              exact: jason
        route:
        - destination:
            host: helloworld.hello.global
            subset: v2
          weight: 70
        - destination:
            host: helloworld.hello.global
            subset: v3
          weight: 30
      - route:
        - destination:
            host: helloworld.hello.svc.cluster.local
            subset: v1
    EOF

After calling the service several times, you can see from the output that the routing rule works. This also shows that routing rules in a topology with multiple control planes are defined in the same way as in a single cluster.
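
One way to check the traffic split is to tally which version answered each request. The following sketch assumes that each response line contains the text "version: vN", as the helloworld sample is expected to return. The tally_versions helper and the canned responses are illustrative only and stand in for real curl output.

```shell
#!/bin/sh
# Count how many responses came from each helloworld version.
# Reads response lines on stdin; assumes each contains "version: vN".
tally_versions() {
  sed -n 's/.*version: \(v[0-9][0-9]*\).*/\1/p' | sort | uniq -c | awk '{print $2 "=" $1}'
}

# Canned responses standing in for real curl output.
printf '%s\n' \
  'Hello version: v2, instance: helloworld-v2-aaa' \
  'Hello version: v3, instance: helloworld-v3-bbb' \
  'Hello version: v2, instance: helloworld-v2-aaa' | tally_versions
# prints:
#   v2=2
#   v3=1

# Against a live mesh, the input could come from a loop such as the
# following, where HELLO_SLEEP_POD is a hypothetical variable holding the
# name of the sleep pod in the hello namespace, and /hello is assumed to
# be the path served by the helloworld sample:
#   for i in $(seq 1 20); do
#     kubectl exec "$HELLO_SLEEP_POD" -n hello -c sleep -- \
#       curl -s -H "end-user: jason" helloworld.hello:5000/hello
#   done | tally_versions
```

With enough requests carrying the end-user: jason header, the counts for v2 and v3 should approach the 70/30 weights, while requests without the header should all be counted under v1.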

The simplest way to set up a multi-cluster mesh is to use a topology with multiple control planes, because it has no special network requirements. The preceding example shows that the routing features available in a single Kubernetes cluster can be used just as easily across multiple clusters.

Wang Xining, a senior Alibaba Cloud technical expert and the technical owner of ASM and Istio on Kubernetes, specializes in Kubernetes, cloud native, and service mesh. He once worked in IBM China Development Center and served as the chairman of the patent review committee. He possesses more than 40 international technology patents in related fields. He wrote a book, Technical Analysis and Practice of Istio Service Mesh, which introduces the principles and development practices of Istio in detail. It contains a large number of selected cases and sample code for download. It is a useful quick start guide for Istio development. Gartner believes that in 2020, service mesh will become the standard technology of all leading container management systems. This book is suitable for readers who are interested in microservices and cloud native. We recommend that you read this book in depth.
