By Chen Hao and Zhang Yingqiang

At this year's SIGMOD conference, the paper PolarDB-MP: A Multi-Primary Cloud-Native Database via Disaggregated Shared Memory by the Alibaba Cloud ApsaraDB team won the Industry Track Best Paper Award. This was the first time that independent work by a Chinese company had won SIGMOD's highest award. PolarDB-MP is a multi-master cloud-native database based on distributed shared memory. In this article, we walk through the details of the paper.

There is a growing need for multi-master cloud-native databases that support write scale-out. A multi-master architecture not only supports write scale-out, but also provides higher availability and better scalability of compute nodes in a serverless database. Most mainstream multi-master databases today are based on either a shared-nothing or a shared-storage architecture. In a shared-nothing architecture, a transaction that accesses multiple partitions must use a distributed transaction mechanism, such as a two-phase commit protocol, which often introduces significant overhead. In addition, scaling the system in or out may require repartitioning the data, a process that often involves cumbersome and time-consuming data migration. The shared-storage architecture takes the opposite approach: every node can access all data, so there is no need for distributed transactions, and each node processes its transactions independently.

DB2 pureScale and Oracle Real Application Clusters (RAC) are two traditional databases based on shared storage. They are generally deployed on dedicated machines and cost more than today's cloud-native databases. Aurora-MM and Taurus-MM are cloud-native multi-master databases that have been proposed more recently. Aurora-MM handles write conflicts with optimistic concurrency control, which causes more transactions to abort when conflicts occur. In contrast, Taurus-MM uses pessimistic concurrency control, but it synchronizes data between nodes less efficiently and scales poorly in high-conflict scenarios.

To address these difficulties faced by current multi-master databases, we have designed and implemented PolarDB-MP, which uses a distributed shared memory pool to overcome the challenges of the existing architecture.


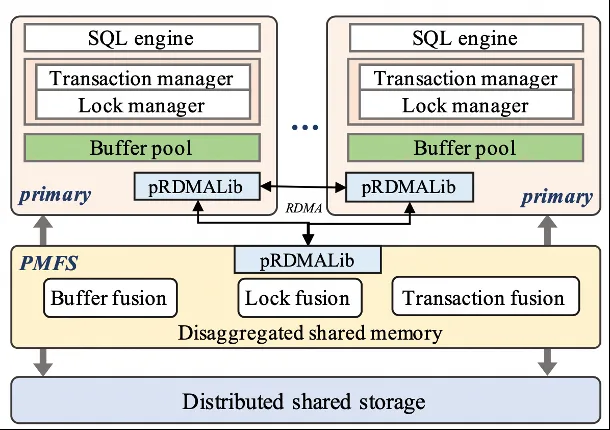

The architecture diagram shows how PolarDB-MP differs from other multi-master databases that are based on shared storage. PolarDB-MP introduces the Polar Multi-Primary Fusion Server (PMFS), which is built on distributed shared memory, to support the multi-master architecture. PMFS has three core components: Transaction Fusion, Buffer Fusion, and Lock Fusion. Transaction Fusion manages global transaction processing and ensures the atomicity, consistency, isolation, and durability (ACID) of transactions. Buffer Fusion maintains cache consistency across all nodes. Lock Fusion is responsible for two lock protocols: page lock (PLock) and row lock (RLock). The PLock protocol ensures physical consistency when pages are accessed on different nodes. The RLock protocol ensures the transactional consistency of user data. In addition, PolarDB-MP proposes a logical log sequence number (LLSN) that is used to order the redo logs (write-ahead logs) from different nodes. LLSN provides a partial order over all redo logs generated by different nodes.

The first issue that Transaction Fusion must resolve is timestamp allocation. PolarDB-MP adopts the timestamp oracle (TSO) approach. When a transaction commits, it requests a commit timestamp (CTS) from the TSO. The CTS is a logically increasing timestamp that determines the commit order of transactions. Typically, the CTS is obtained through remote direct memory access (RDMA). The request usually completes within a few microseconds, and we did not find it to be a bottleneck in our testing.
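As a rough illustration of what the TSO guarantees, the sketch below models it as an in-process counter. The class name `TimestampOracle` and the method `next_cts` are hypothetical, and a local mutex stands in for the RDMA access; this is not the PolarDB-MP implementation.

```python
import threading


class TimestampOracle:
    """Hypothetical in-process stand-in for the TSO (the real one is reached over RDMA)."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._last_cts = 0

    def next_cts(self) -> int:
        # Increment and return under a lock so that no two commits share a CTS
        # and later requests always receive larger values.
        with self._lock:
            self._last_cts += 1
            return self._last_cts


tso = TimestampOracle()
first_commit = tso.next_cts()
second_commit = tso.next_cts()
```

The only property the rest of the design relies on is that a later commit always receives a larger CTS than an earlier one.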

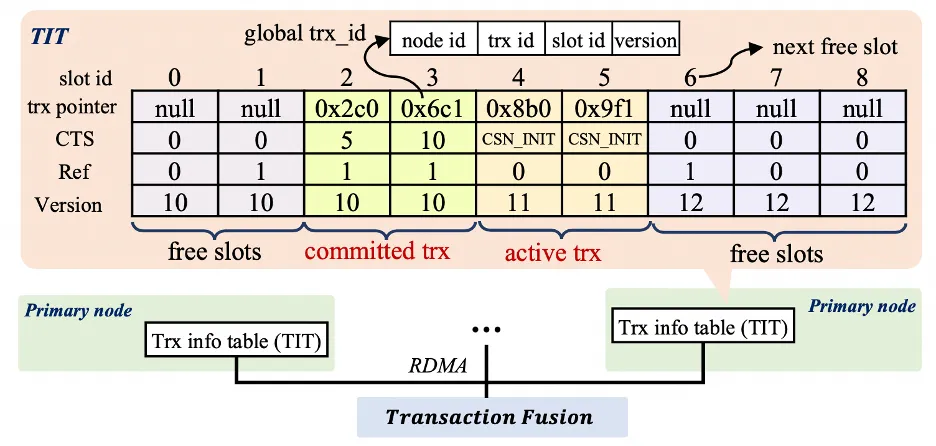

To manage all transaction information in the cluster effectively, PolarDB-MP adopts a decentralized approach: the information is distributed across all nodes. A small portion of memory is reserved for each node in PolarDB-MP to store its local transaction information, and a node can remotely access the transaction information of other nodes through RDMA. The diagram shows this design. Each node maintains the information about its local transactions in a transaction information table (TIT), which plays a key role in managing transactions. The TIT keeps four key fields for each transaction: pointer, CTS, version, and ref. The pointer field points to the transaction object in memory, CTS holds the timestamp at which the transaction committed, version distinguishes different transactions that occupy the same slot, and ref indicates whether other transactions are waiting for this transaction to release a row lock.

When a transaction starts on a node, it is assigned a unique, locally incremented ID, and an idle TIT slot is allocated to it. Because TIT slots are reused, the version field distinguishes transactions that occupy the same slot at different times: each new transaction increments the version. To identify a transaction at the cluster level, PolarDB-MP combines node_id, trx_id, slot_id, and version into a global transaction ID (g_trx_id). With this g_trx_id, any node can read the CTS of a transaction on another node directly from that node through RDMA.

In PolarDB-MP, when a record is updated, the g_trx_id of the updating transaction is stored in the metadata of that record. When the transaction commits, its CTS is written to the metadata of the records it modified, provided that those records are still in the cache. Otherwise, their CTS remains the default value (CSN_INIT).

When determining the visibility of a record, if the CTS field of the record holds a valid value rather than the initial value (CSN_INIT), the CTS is taken directly from the record metadata. If the CTS of the record is not populated, it must be obtained from the TIT. First, read the g_trx_id of the transaction that modified the record from the record metadata. Then, use this g_trx_id to locate the corresponding TIT slot. If the version in the TIT slot does not match the version in the g_trx_id being checked, the slot has been reused by a new transaction, which means that the original transaction has committed. In this case, a minimum CTS value is returned to indicate that the record is visible to all transactions. This is safe because a TIT slot is released and reused only when the CTS of the slot is less than the read view of all active transactions. If the version of the slot matches the version in the g_trx_id, the slot has not been reused by another transaction, and the CTS is read directly from the slot.
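The lookup described above can be sketched as follows. This is a minimal model under stated assumptions: the numeric values of `CSN_INIT` and `CSN_MIN`, the class names, and the explicit `slot_id` argument to `begin` are all hypothetical, the pointer field is omitted, and a plain dictionary lookup stands in for the remote RDMA read.

```python
from dataclasses import dataclass
from typing import Dict, NamedTuple

CSN_INIT = 0  # assumed sentinel: the CTS has not been filled in
CSN_MIN = 1   # assumed smallest valid CTS, visible to every read view


class GTrxId(NamedTuple):
    node_id: int
    trx_id: int
    slot_id: int
    version: int


@dataclass
class TitSlot:
    version: int = 0
    cts: int = CSN_INIT
    ref: bool = False


class Node:
    def __init__(self, node_id: int, slots: int = 4) -> None:
        self.node_id = node_id
        self.tit = [TitSlot() for _ in range(slots)]
        self.next_trx_id = 0

    def begin(self, slot_id: int) -> GTrxId:
        # Reusing a slot bumps its version so that older g_trx_ids no longer match.
        self.next_trx_id += 1
        slot = self.tit[slot_id]
        slot.version += 1
        slot.cts = CSN_INIT
        slot.ref = False
        return GTrxId(self.node_id, self.next_trx_id, slot_id, slot.version)

    def commit(self, g: GTrxId, cts: int) -> None:
        self.tit[g.slot_id].cts = cts


@dataclass
class Record:
    g_trx_id: GTrxId
    cts: int = CSN_INIT


def record_cts(record: Record, cluster: Dict[int, Node]) -> int:
    if record.cts != CSN_INIT:
        return record.cts                     # CTS was stamped on the record at commit
    g = record.g_trx_id
    slot = cluster[g.node_id].tit[g.slot_id]  # an RDMA read in the real system
    if slot.version != g.version:
        return CSN_MIN                        # slot reused: the writer committed long ago
    return slot.cts                           # still CSN_INIT if the writer is active


cluster = {1: Node(1), 2: Node(2)}
writer = cluster[1].begin(slot_id=0)
row = Record(g_trx_id=writer)                 # record left the cache, so CTS stays CSN_INIT
cluster[1].commit(writer, cts=10)
cts_from_tit = record_cts(row, cluster)
cluster[1].begin(slot_id=0)                   # a new transaction reuses slot 0
cts_after_reuse = record_cts(row, cluster)
```

In this run the first lookup returns the CTS stored in the slot, and the second returns the minimum value because the slot version has moved on.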

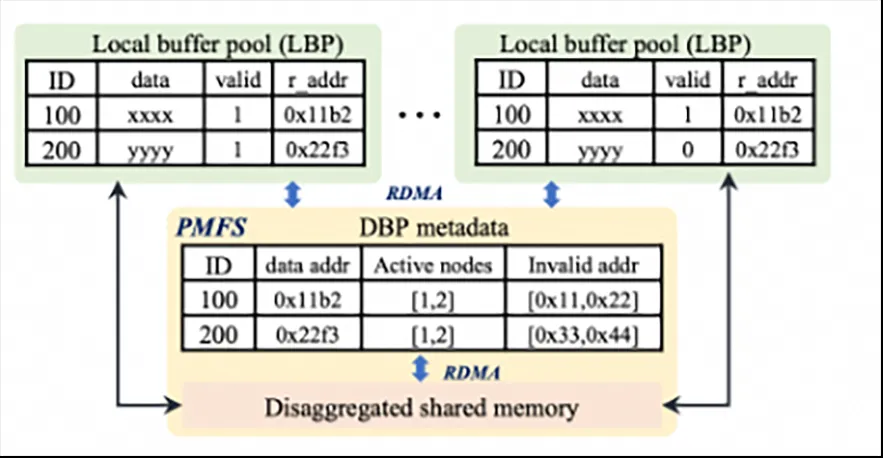

In PolarDB-MP, each node can update any data page, which leads to frequent transfers of data pages between nodes. To move pages across nodes efficiently, PolarDB-MP proposes Buffer Fusion. A node can write its data pages to the distributed buffer pool (DBP) of Buffer Fusion, and other nodes can then read the pages modified by that node from the DBP. In this way, pages move quickly between nodes. The diagram shows the design of Buffer Fusion. When a new version of a page is stored in the DBP, Buffer Fusion invalidates the copies of that page on other nodes. When those nodes next access the page, they reload the new version from the DBP, so that all operations are performed on the latest data and the overall consistency of the database is maintained. The DBP implementation relies heavily on RDMA, which keeps the latency between a node's local buffer pool (LBP) and the DBP very low.
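The invalidate-then-reload behavior can be illustrated with a small in-memory model. Everything here is a hypothetical sketch: the class and method names are invented for illustration, page contents are plain strings, and locking (PLock) and RDMA are left out.

```python
from typing import Dict, Set


class BufferFusion:
    def __init__(self) -> None:
        self.dbp: Dict[int, str] = {}               # page_id -> latest page content
        self.copies: Dict[int, Set["Node"]] = {}    # page_id -> nodes holding a local copy

    def write_page(self, writer: "Node", page_id: int, content: str) -> None:
        self.dbp[page_id] = content
        # Invalidate every other node's local copy of this page.
        for node in self.copies.get(page_id, set()):
            if node is not writer:
                node.invalid.add(page_id)
        self.copies.setdefault(page_id, set()).add(writer)

    def read_page(self, reader: "Node", page_id: int) -> str:
        self.copies.setdefault(page_id, set()).add(reader)
        return self.dbp[page_id]


class Node:
    def __init__(self, name: str, fusion: BufferFusion) -> None:
        self.name = name
        self.fusion = fusion
        self.lbp: Dict[int, str] = {}   # local buffer pool
        self.invalid: Set[int] = set()  # pages whose local copy is stale

    def read(self, page_id: int) -> str:
        # Reload from the DBP when the page is missing locally or was invalidated.
        if page_id not in self.lbp or page_id in self.invalid:
            self.lbp[page_id] = self.fusion.read_page(self, page_id)
            self.invalid.discard(page_id)
        return self.lbp[page_id]

    def update(self, page_id: int, content: str) -> None:
        self.lbp[page_id] = content
        self.invalid.discard(page_id)
        self.fusion.write_page(self, page_id, content)


fusion = BufferFusion()
n1, n2 = Node("node1", fusion), Node("node2", fusion)
n1.update(7, "v1")
seen_first = n2.read(7)
n1.update(7, "v2")                 # marks node2's copy of page 7 as invalid
was_invalidated = 7 in n2.invalid
seen_second = n2.read(7)           # node2 reloads the new version from the DBP
```

The sketch pushes every update to the DBP immediately, which is a simplification chosen to keep the example short.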

Lock Fusion implements two protocols: PLock and RLock. PLock is similar to a page lock in a single-node database: it ensures atomic access to pages and the consistency of internal structures. During concurrent access, only a node that holds the lock can read or write the page, which prevents data conflicts and inconsistency. RLock, on the other hand, maintains transactional consistency across nodes. It allows finer-grained control over data access and follows a two-phase locking protocol.

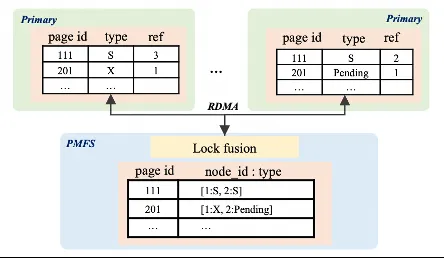

PLock: PLock is mainly used to maintain the consistency of physical data and to resolve concurrent access to data pages across nodes. PLocks are managed at the node level, as shown in the diagram. Each node keeps track of the PLocks it holds or is waiting for, and uses a reference count to record the number of threads using a particular PLock. Lock Fusion maintains the information about all PLocks and tracks the status of each lock. A node must hold the corresponding X PLock or S PLock before it can update or read a page. When a node needs a PLock, it first checks its local PLock manager to see whether it already holds the required lock or a lock of a higher level. If not, it sends a PLock request to Lock Fusion over RDMA-based RPC, and Lock Fusion handles the request. If there is a conflict, the requesting node is suspended until its PLock request can be satisfied. When a node releases a PLock, Lock Fusion is notified; it then updates the status of the lock and informs the other nodes that are waiting for it.
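The flow above, a local check first and a request to Lock Fusion only when needed, can be sketched as follows. This is an illustrative model with hypothetical names: `acquire` returns `False` instead of suspending the caller, a node gives the lock back as soon as its reference count drops to zero, and method calls replace the RDMA-based RPC.

```python
from collections import deque
from typing import Deque, Dict, List, Tuple

S, X = "S", "X"


def covers(held: str, wanted: str) -> bool:
    # X is the higher level, so it also satisfies an S request.
    return held == X or held == wanted


class LockFusion:
    def __init__(self) -> None:
        self.holders: Dict[int, Dict[str, str]] = {}             # page -> {node: mode}
        self.waiters: Dict[int, Deque[Tuple[str, str]]] = {}     # page -> queued requests

    def _compatible(self, page_id: int, node: str, mode: str) -> bool:
        others = [m for n, m in self.holders.get(page_id, {}).items() if n != node]
        return all(m == S for m in others) if mode == S else not others

    def request(self, page_id: int, node: str, mode: str) -> bool:
        if self._compatible(page_id, node, mode):
            self.holders.setdefault(page_id, {})[node] = mode
            return True
        self.waiters.setdefault(page_id, deque()).append((node, mode))
        return False

    def release(self, page_id: int, node: str) -> List[str]:
        self.holders.get(page_id, {}).pop(node, None)
        woken = []
        queue = self.waiters.get(page_id, deque())
        while queue and self._compatible(page_id, *queue[0]):
            waiter, mode = queue.popleft()
            self.holders.setdefault(page_id, {})[waiter] = mode
            woken.append(waiter)   # these nodes are informed that the PLock is theirs
        return woken


class LocalPLockManager:
    def __init__(self, node: str, fusion: LockFusion) -> None:
        self.node, self.fusion = node, fusion
        self.held: Dict[int, str] = {}   # page -> mode held by this node
        self.refs: Dict[int, int] = {}   # page -> number of local threads using it

    def acquire(self, page_id: int, mode: str) -> bool:
        held = self.held.get(page_id)
        if held is None or not covers(held, mode):
            if not self.fusion.request(page_id, self.node, mode):
                return False             # the real system suspends the requester here
            self.held[page_id] = mode
        self.refs[page_id] = self.refs.get(page_id, 0) + 1
        return True

    def release(self, page_id: int) -> List[str]:
        self.refs[page_id] -= 1
        if self.refs[page_id] > 0:
            return []
        del self.refs[page_id], self.held[page_id]
        return self.fusion.release(page_id, self.node)


fusion = LockFusion()
a, b = LocalPLockManager("node1", fusion), LocalPLockManager("node2", fusion)
got_x = a.acquire(1, X)          # granted by Lock Fusion
got_local = a.acquire(1, S)      # satisfied locally because X covers S
blocked = b.acquire(1, S)        # conflicts with node1's X lock, so it is queued
woken_early = a.release(1)       # one local user remains, nothing is released
woken = a.release(1)             # last user gone: Lock Fusion wakes node2
retry = b.acquire(1, S)
```

After being woken, node2 retries and the request succeeds because Lock Fusion already records it as a holder.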

RLock: PolarDB-MP embeds row lock information directly in each row of data and maintains only the wait relationships in Lock Fusion. Each row has an additional field that holds the ID of the transaction holding the lock. When a transaction attempts to lock a row, it simply writes its global transaction ID into this field. If the field already holds the ID of an active transaction, a conflict is detected and the current transaction has to wait. In PolarDB-MP, a row can be updated only while an X PLock is held on the page that contains it. Therefore, only one transaction at a time can access the lock information written into the row, and only one transaction can lock the row successfully. As in a single-node database, the RLock protocol follows two-phase locking: a transaction holds a lock until it commits. This design reduces the management complexity and storage overhead of lock information because there is no need to maintain all lock status in a central location. Because the lock information is embedded directly in the row, lock conflicts can be detected and resolved quickly, which improves the efficiency of transaction processing and overall database performance.

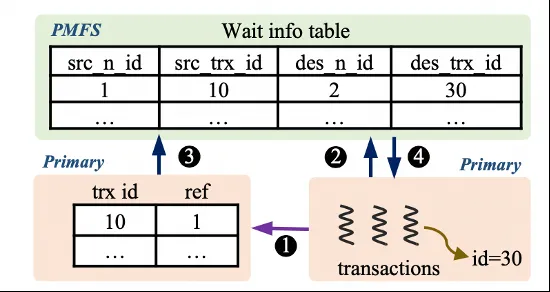

The following example illustrates the row lock design. Transaction T30 on Node 2 attempts to place an X-lock on a row. By checking the metadata of the record, T30 finds that the row is already X-locked by another transaction (T10). T30 first remotely sets the reference (ref) field in the transaction metadata of T10, which indicates that a transaction is waiting for T10 to release the lock. T30 then sends its wait information to Lock Fusion, where it is added to the wait information table. When T10 commits, it checks its ref field. Because the field shows that another transaction is waiting, T10 notifies Lock Fusion that it has committed. After receiving the notification from T10, Lock Fusion checks the wait information table and notifies T30, which is then awakened and continues processing.
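The T10 and T30 exchange can be replayed with a small model. The names below are hypothetical, object references stand in for the g_trx_id stored in the row and for the remote write to the ref field, and the caller is assumed to already hold the X PLock on the page.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Trx:
    name: str
    active: bool = True
    ref: bool = False                 # set when another transaction waits on this one


@dataclass
class Row:
    lock_trx: Optional[Trx] = None    # stands in for the g_trx_id stored in the row


class LockFusion:
    def __init__(self) -> None:
        self.wait_table: Dict[str, List[str]] = {}   # holder -> waiting transactions

    def add_wait(self, waiter: Trx, holder: Trx) -> None:
        self.wait_table.setdefault(holder.name, []).append(waiter.name)

    def notify_commit(self, holder: Trx) -> List[str]:
        # Return the transactions to wake up and drop them from the wait table.
        return self.wait_table.pop(holder.name, [])


def try_lock_row(row: Row, trx: Trx, fusion: LockFusion) -> bool:
    holder = row.lock_trx
    if holder is not None and holder.active and holder is not trx:
        holder.ref = True             # a remote write to the holder's TIT slot in the real system
        fusion.add_wait(trx, holder)
        return False                  # the caller must wait
    row.lock_trx = trx                # lock the row by writing the transaction ID into it
    return True


def commit(trx: Trx, fusion: LockFusion) -> List[str]:
    trx.active = False
    # Only contact Lock Fusion when someone is actually waiting.
    return fusion.notify_commit(trx) if trx.ref else []


fusion = LockFusion()
t10, t30 = Trx("T10"), Trx("T30")
row = Row()
t10_locked = try_lock_row(row, t10, fusion)
t30_locked = try_lock_row(row, t30, fusion)    # conflict: T30 registers as a waiter
woken = commit(t10, fusion)                     # T10 sees ref set and notifies Lock Fusion
t30_retry = try_lock_row(row, t30, fusion)      # the row's holder is no longer active
```

Note that Lock Fusion only stores who waits for whom; the lock state itself never leaves the row.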

In PolarDB-MP, each node generates its own redo logs, so multiple nodes may hold different redo logs for the same page. Each redo log records the changes made to a specific page. The key to crash recovery is to replay the redo logs for the same page in the order in which they were generated, while logs for different pages can be replayed in any order. Therefore, there is no need to maintain a total order over all redo logs. We only need to ensure that the redo logs for the same page are kept in order.

For this reason, PolarDB-MP introduces LLSN to establish a partial order over the logs of different nodes, ensuring that redo logs associated with the same page are kept in the order in which they were generated. LLSN does not order the redo logs of different pages, and it does not need to. To implement this design, each node maintains a local LLSN, which is automatically incremented each time a log is generated. When a node updates a page and generates a log, the new LLSN is recorded in the page metadata and is also assigned to the corresponding log. When a node reads a page from storage or the DBP, it advances its local LLSN to the LLSN of that page if the page's LLSN exceeds the node's current LLSN. This keeps the node's LLSN in sync with the pages it accesses. When the node later updates the page and generates a log, its LLSN is incremented, so the new LLSN is greater than the LLSN assigned by any node that previously updated the page. Because of the PLock design, only one transaction can update the page at a time. As a result, when a page is updated on different nodes sequentially, the LLSNs preserve the order in which the logs were generated.
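The LLSN rules above are close to a Lamport-style logical clock carried in the page. The sketch below is a minimal model with hypothetical names: pages and logs are in-memory objects, and the PLock that serializes updates to a page is assumed rather than modeled.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Page:
    page_id: str
    llsn: int = 0                      # LLSN of the last update, kept in page metadata


@dataclass
class Node:
    name: str
    llsn: int = 0                      # local LLSN of this node
    redo_log: List[Tuple[int, str, str]] = field(default_factory=list)

    def read_page(self, page: Page) -> None:
        # Catch up with the page if it carries a larger LLSN.
        if page.llsn > self.llsn:
            self.llsn = page.llsn

    def update_page(self, page: Page) -> int:
        self.read_page(page)           # a node loads the page before changing it
        self.llsn += 1
        page.llsn = self.llsn          # stamp the page and the log with the same LLSN
        self.redo_log.append((self.llsn, page.page_id, self.name))
        return self.llsn


node1, node2 = Node("node1"), Node("node2")
p, q = Page("P"), Page("Q")
node1.update_page(p)
node1.update_page(p)
node2.update_page(q)                   # unrelated page: its LLSN may equal one of P's
node2.update_page(p)                   # node2 first catches up to P's LLSN, then increments

# Recovery only needs per-page order: sort the logs of page P by LLSN.
logs_for_p = sorted(e for e in node1.redo_log + node2.redo_log if e[1] == "P")
writers_in_order = [name for _, _, name in logs_for_p]
```

In this run the log for Q and the first log for P carry the same LLSN, which is harmless because logs of different pages never need to be ordered against each other.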

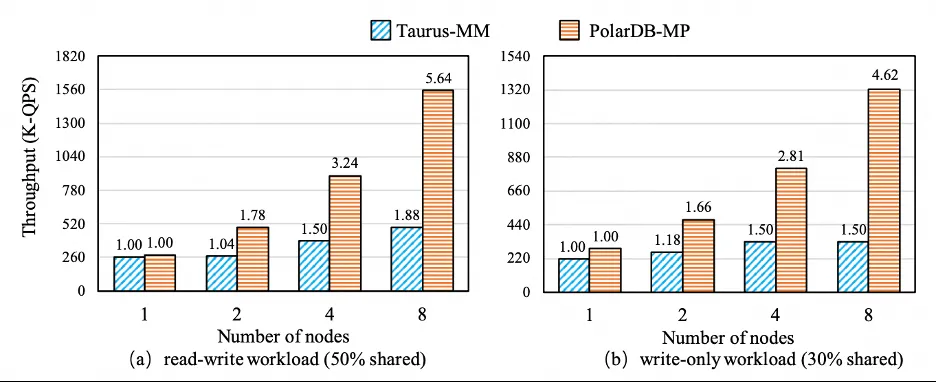

We tested the performance of PolarDB-MP with various workloads and configurations. Due to space limitations, only two sets of experimental results are listed here. For the complete experimental analysis, please refer to the original paper. We first compared the performance of PolarDB-MP with that of Huawei Taurus-MM. In the diagram, the y-axis represents throughput, and the number on each bar represents scalability (throughput normalized to single-node performance). PolarDB-MP performed on par with Taurus-MM on a single node, but showed distinct advantages on multiple nodes. For example, in an eight-node cluster, the throughput of PolarDB-MP was 3.17 times that of Taurus-MM for read-write workloads and 4.02 times for read-only workloads.

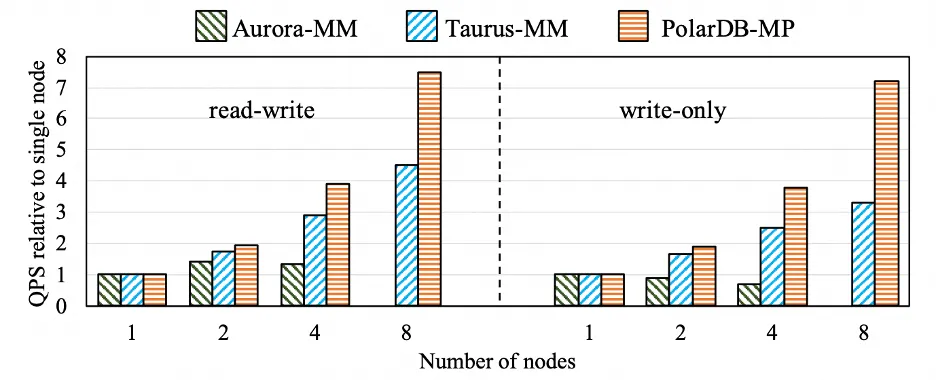

We further compared the performance of PolarDB-MP and Aurora-MM when only 10% of the data was shared. Because Aurora-MM supported at most four nodes, its results for eight nodes are omitted. Even with this low proportion of shared data, Aurora-MM showed no performance improvement when going from two nodes to four nodes in read-write workloads. For write-only workloads, its performance with two and four nodes was even worse than with a single node, which could be attributed to the optimistic concurrency control used by Aurora-MM. In these low-conflict scenarios, Taurus-MM scaled better than Aurora-MM but still fell behind PolarDB-MP, especially in an eight-node cluster. For write-only workloads, the scalability of PolarDB-MP was 5.4 times that of Aurora-MM with four nodes and 2.2 times that of Taurus-MM with eight nodes.

Download the original paper for more details.

The PolarDB multi-write architecture is available on Alibaba Cloud. You are welcome to use it.

Learn more: https://help.aliyun.com/zh/polardb/polardb-for-mysql/polardb-multi-master-clusters-support-crac (English version coming soon)
