While continuing to wage war against the worldwide outbreak, Alibaba Cloud will play its part and will do all it can to help others in their battles with the coronavirus. Learn how we can support your business continuity at https://www.alibabacloud.com/campaign/fight-coronavirus-covid-19

By Zhang Zuozhong, nicknamed Jianyi, who is an Alibaba Cloud database O&M expert, as well as Chen Rongyao, nicknamed Yixin, from the ApsaraDB team.

DingTalk has long been a staple of corporate communications in China, but with the COVID-19 epidemic, DingTalk's daily online traffic surged dramatically as many people began working from home. Since February 3 this year, the first day most people in China returned to work after the Lunar New Year holiday, DingTalk has seen traffic spike after traffic spike, with an estimated 40-fold increase in traffic. This was because many businesses, both big and small, as well as many other organizations and institutions, started to use DingTalk to support communication with people working remotely. As part of this move to DingTalk, a striking 200 departments of education across the vast majority of Chinese provinces, including Hubei, have launched online education programs.

This sudden influx of new users resulted in several historic milestones for DingTalk. For example, on February 5, DingTalk ranked first in popularity in the free apps category in Apple's Mainland China App Store.

Then, on February 6, DingTalk had a taste of the limelight, with DingTalk CTO Zhu Hong being interviewed on CCTV's channel 13.

If you haven't heard of it before, DingTalk is a feature-packed corporate communication platform and mobile workplace. Its features range from the usual instant messaging, real-time document collaboration, and voice and video conferencing to new features introduced in response to COVID-19, such as remote class live streams and health check-ins. Ensuring good performance and stability for the more than 20 service modules in DingTalk was by no means an easy task, considering the huge jumps in traffic.

In the remainder of this article, we will detail just how DingTalk's team was able to continue to provide reliable and stable service support despite some major challenges over the last few months.

DingTalk is highly dependent on databases. The closest analogy we have to the recent traffic surges in terms of sheer data volume is the annual Double 11 shopping promotion, for which planning and deploying databases is the most important and strategic part of ensuring service stability. Unlike with Double 11, however, DingTalk's technical team had very little time to prepare for the coming traffic surges, which made the challenges all the more difficult. These challenges can be summarized in the following three points:

1. Unpredictable system capacity requirements: Consider the message module of DingTalk as an example. Before the Lunar New Year, in mid-January of this year, DingTalk's daily messaging traffic peak amounted to less than 10 million users. So, when we performed the first capacity evaluation, we estimated that peak traffic on February 3 would be three times the normal daily peak. But then, on February 10, the day when schools were supposed to reopen in China, we had to revise this estimate to 10 times the normal daily peak. Last, on February 17, the day remote learning started in China, we again had to adjust the estimate, this time to 40 times. In other words, after these adjustments, the system's target capacity was 40 times our previous normal daily peak.

2. Tight schedule, complex scaling requirements, and insufficient resources: The sharp increase in traffic due to the pandemic was no less impactful on the system than the annual Double 11 Shopping Festival. The difference is that our counterparts who are responsible for that event have six months to prepare. We only had hours. In addition, due to cost considerations, DingTalk had no free resources in the pool, and the existing resource deployment density was also high. This made moving resources around to free up room to scale out nearly 20 core clusters an enormous challenge.

3. Assuring system stability and user experience in extreme cases: What do we do when the clusters cannot be scaled out anymore due to various factors and the system reaches a bottleneck? What emergency measures can we take? Can we find a balance point that minimizes the impact on users?

The sudden surge in real traffic put both the DingTalk databases and the database administrators at Alibaba Cloud to the test. However, thanks to our tested control mechanism, Data Transmission Service (DTS), middleware system, and the close work of the database team and the business teams, we were able to meet these challenges head-on.

1. The database team created a dedicated service support team for the duration of the pandemic. Team members included database administrators and members of the database kernel, CORONA, TDDL, DTS, Data Replication System, and NoSQL product teams.

Each database administrator was assigned to a DingTalk line of business and monitored and reported the system status and resource usage of that line during peak hours, so that the emergency team could keep track of how the system was performing. This structure allowed issues in the production environment to be fixed as soon as possible, and sub-optimal service cases to be optimized so that known issues occurred only once. We also participated in system stress tests to discover potential risk factors and remedy them. Systems with insufficient capacity were scaled out when there were enough resources, so that databases could perform as expected during peak hours.

2. The database team and the stability team worked closely together. We started with limited resources, and many systems needed scaling. Each line-of-business owner considered their system to be the most important and thought they should have priority in database expansions. Some owners even dragged their bosses and the bosses of their bosses in to put pressure on the database administrators to expand their databases first.

This created a lot of stress for the database administrators. We had little to work with but a lot of demands. Luckily, the stability team took on a lot of the responsibilities. They originated the policy for ordering database expansion according to business priorities and unified expansion requirements. The database administrators were able to take over from there to expand the databases based on the order and existing capacities in an orderly and efficient fashion, to use the resources we had where they had the most impact.

We all knew traffic was going to spike due to the quarantine orders, but nobody knew by how much. So we started planning early and prepared for the worst case scenario. To prevent resources from being the bottleneck of our system's scaling, we worked with the cloud resource teams to make the proper arrangements. We borrowed some servers from Alibaba Group and resized several database clusters from other business units. In total, we were able to come up with about 400 servers. At the same time, we coordinated these cloud resources to be migrated. In just a couple of days, we were able to migrate 300 servers to the DingTalk resource pool, thereby ensuring that all database expansion requirements could be met.

With all resources now in place, it was time to test database elasticity. Thanks to the three-node distributed deployment architecture of PolarDB-X, we were able to perform in-place upgrades and expansion on existing clusters, which minimized impact on user experience and ensured data consistency. In some cases, we needed to migrate user data from existing clusters to new ones that featured database and table sharding. We were able to complete this smoothly using the tested DTS platform. By the end of these steps, we could provide database elasticity as long as we had the resources available to meet our business needs.

To prepare for the peak hours, the database team developed the following emergency plans:

We also used emergency throttling or intervention through SQL Execute Plan Profile on exceptional SQL statements after assessing the impact. The entire cluster had standby servers for load balancing, and up to 100% of read traffic could be routed to standby servers in extreme cases. We prepared weak consistency scripts to further improve database throughput when necessary. Combining throttling with downgrading ensured that many database systems would operate stably during unforeseen traffic peaks.
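
To make the idea of shifting read traffic to standby servers concrete, here is a minimal sketch. It is not DingTalk's actual routing layer: the `ReadRouter` class, its method names, and the `"primary"`/`"standby"` labels are hypothetical, chosen only for illustration. Writes always go to the primary, while a configurable fraction of reads (up to 100%) goes to standbys.

```python
import random


class ReadRouter:
    """Hypothetical router: sends a configurable share of reads to standbys."""

    def __init__(self, standby_ratio=0.0, rng=None):
        self.rng = rng or random.Random()
        self.set_standby_ratio(standby_ratio)

    def set_standby_ratio(self, ratio):
        # The ratio is the fraction of read traffic served by standby servers.
        if not 0.0 <= ratio <= 1.0:
            raise ValueError("standby_ratio must be between 0.0 and 1.0")
        self.standby_ratio = ratio

    def route(self, is_write):
        # Writes must always reach the primary.
        if is_write:
            return "primary"
        # random() returns a value in [0.0, 1.0), so a ratio of 1.0 sends
        # every read to a standby and a ratio of 0.0 sends none.
        if self.rng.random() < self.standby_ratio:
            return "standby"
        return "primary"


# Emergency mode: route all reads to standbys, keep writes on the primary.
router = ReadRouter(standby_ratio=1.0)
emergency_reads = {router.route(is_write=False) for _ in range(1000)}
emergency_writes = {router.route(is_write=True) for _ in range(1000)}
```

One trade-off to keep in mind with this kind of routing is that reads served by a standby may be slightly stale if replication lags, which is why it pairs naturally with a weak consistency mode.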

However, throttling had a negative impact on user experience. Each group had a limit of 30 queries per minute, which meant that each group could send only up to 30 messages per minute. Most of the time, groups reached that limit in the first 20 seconds and could not use DingTalk for the remaining 40 seconds. To deal with this issue, we changed the limit from 30 queries per minute to 30 queries per 20 seconds, effectively reducing the need for throttling by 97% and greatly improving the user experience.
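
The effect of the window length can be illustrated with a small fixed-window rate limiter. This is only a sketch under stated assumptions: the article does not say which throttling algorithm DingTalk uses, and the `FixedWindowLimiter` class, the `count_rejected` helper, and the one-message-per-second workload are all hypothetical.

```python
from collections import defaultdict


class FixedWindowLimiter:
    """Allow at most `limit` messages per group in each fixed time window."""

    def __init__(self, limit, window_s):
        self.limit = limit
        self.window_s = window_s
        # (group_id, window_index) -> messages accepted in that window.
        # A production limiter would also expire old windows.
        self.counts = defaultdict(int)

    def allow(self, group_id, now_s):
        key = (group_id, int(now_s // self.window_s))
        if self.counts[key] >= self.limit:
            return False
        self.counts[key] += 1
        return True


def count_rejected(limiter, group_id, timestamps):
    """Replay a list of send times and count how many messages are throttled."""
    return sum(1 for t in timestamps if not limiter.allow(group_id, t))


# Hypothetical workload: one group sends one message per second for a minute.
sends = list(range(60))
per_minute_rejected = count_rejected(FixedWindowLimiter(30, 60), "group-1", sends)
per_20s_rejected = count_rejected(FixedWindowLimiter(30, 20), "group-1", sends)
print(per_minute_rejected, per_20s_rejected)  # prints "30 0"
```

With the one-minute window, the group exhausts its quota halfway through and is blocked for the rest of the minute. With the 20-second window, the same steady conversation is never throttled, while short bursts above the limit are still capped.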

The red line shown here is the throttling limit.

Generally speaking, the CPU utilization of a cluster can be translated into system resource usage. However, for databases, a capacity bottleneck can mean any number of things other than the CPU, such as I/O, network, SQL, or locks. Different business models have different bottlenecks. Even businesses with the same traits, such as large query volume, may be limited by different factors. Some may find their CPUs are to blame, some may want to look at their memory hit rate, and others may be blocked by a bad index design. To complicate things even further, some bottlenecks are not linear. Things may go along swimmingly at 2x stress with plenty of hardware and software power to spare. But as soon as 3x stress is hit, the system may fall apart. So, how do we accurately evaluate the database capacity in this case?

In the past, we worked from experience and with the business teams to perform comprehensive stress tests to estimate each database's capacity, or more specifically, how many reads and writes a cluster can support. This method has the following problems:

The solution may sound simple: just collect and replay all online service traffic. However, implementing such a solution is incredibly complicated. In fact, what we really needed was a general-purpose stress test for databases. The database layer should generate its own traffic, with the ability to increase or decrease traffic volume and restart the test after changes.

In 2019 we started working with scientists from Alibaba DAMO Academy database labs and developed CloudBench to meet these requirements. These efforts paid off when we used this tool to assess database capacity in preparation for the traffic surge due to the COVID-19 epidemic.

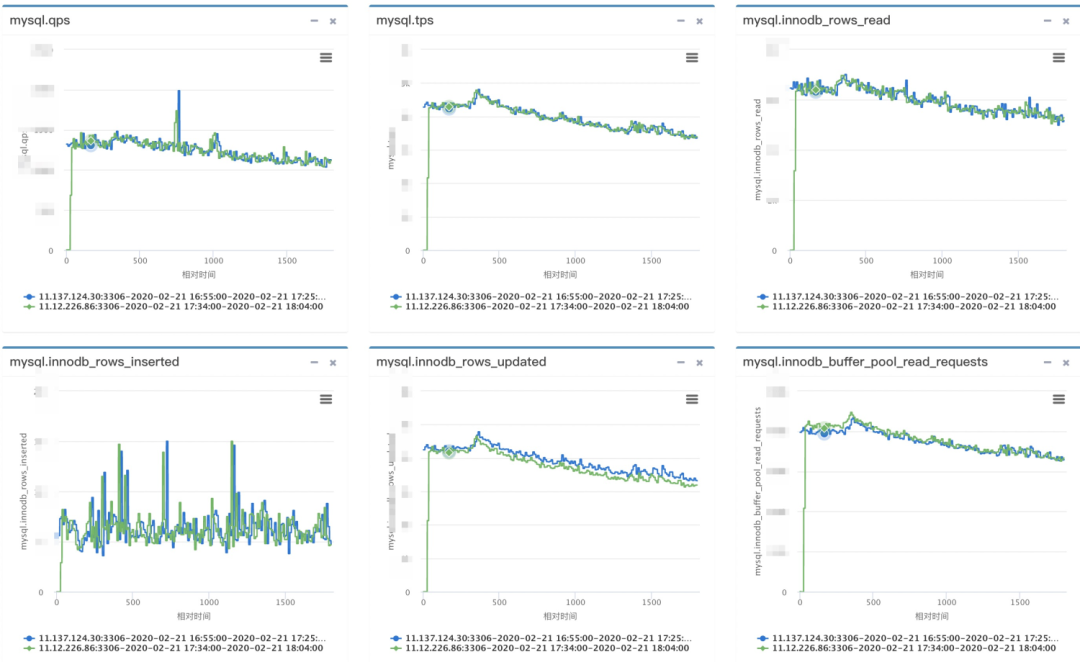

Let's take a look at the following figure:

In the above charts, the blue line indicates the performance of a real service at any given moment in time, and the green line is the performance of database traffic playback which was generated from collected database data. We can see that the two curves are highly correlated on the time axis, especially when it comes to the internal metrics of InnoDB, including traffic fluctuations.
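
One simple way to quantify how closely a playback tracks the real service is the Pearson correlation coefficient between the two metric series. The sketch below is an illustration only: the article does not describe how CloudBench compares the curves, and the sample values are invented for the example rather than taken from the charts.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long metric series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("series must have the same length (at least 2)")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / math.sqrt(var_x * var_y)


# Invented samples of one metric taken at the same points in time.
production = [100, 120, 180, 260, 240, 150]  # real service (blue line)
playback = [98, 125, 175, 255, 245, 148]     # replayed traffic (green line)
similarity = pearson(production, playback)
```

A value close to 1 means the playback rises and falls together with production. It does not by itself show that the absolute levels match, so the raw curves still need to be compared as well.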

Once we get a playback that is true to the real world service workload, we can use that to amplify stress and analyze database capacity. That allows us to identify performance bottlenecks in extreme cases, optimize databases to remove those bottlenecks and verify those optimizations.
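
The amplification step can be sketched as a loop that replays traffic at increasing multiples and stops once a latency budget is exceeded. Everything here is an assumption made for illustration: `find_max_multiplier` is a hypothetical helper, and `simulated_latency_ms` is a toy single-queue model standing in for a real replay run, not a description of how CloudBench measures a database.

```python
def simulated_latency_ms(multiplier, base_utilization=0.3, service_time_ms=2.0):
    """Toy stand-in for a replay run: latency grows non-linearly with load.

    Uses the single-server queue approximation
    latency = service_time / (1 - utilization).
    """
    utilization = base_utilization * multiplier
    if utilization >= 1.0:
        return float("inf")  # saturated: requests queue up without bound
    return service_time_ms / (1.0 - utilization)


def find_max_multiplier(measure, multipliers, latency_budget_ms):
    """Return the largest tested multiplier that stays within the budget.

    `multipliers` must be in increasing order. Returns None if even the
    first multiplier exceeds the budget.
    """
    best = None
    for m in multipliers:
        if measure(m) > latency_budget_ms:
            break
        best = m
    return best


# Amplify the replayed workload step by step against a 10 ms latency budget.
max_safe = find_max_multiplier(simulated_latency_ms, [1, 2, 3, 4], 10.0)
print(max_safe)  # prints "2"
```

In this toy model, latency is about 2.9 ms at 1x and 5 ms at 2x, but roughly 20 ms at 3x. This mirrors the non-linear behavior described earlier, where a system looks comfortable at 2x stress and falls apart at 3x.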

CloudBench is now available to some users as part of Database Autonomy Service (DAS), our autonomous service for cloud databases. We will describe the implementation of CloudBench in detail in subsequent articles.

On February 17, the day of the third traffic peak, DingTalk was stable on all fronts. On February 18, we reverted from an all-hands-on-deck situation back to our usual daily maintenance scheme. What gave us the confidence to do this? All the databases had been optimized and expanded to handle far more than the traffic that was hitting us.

In just two weeks, we had optimized and scaled out each database system to handle from several times to 40 times the normal workload. Among these are ultra-large database clusters with a storage space of more than 1 PB. This feat is a testament to the elasticity of Alibaba Cloud databases. The management platform, DTS, CORONA, and other products all worked perfectly together to withstand the traffic peaks due to the pandemic.

The traffic peaks caused by the pandemic caught us off guard, but we prevailed and learned a lot from the challenge. Should we face a similar situation again, we will be able to pass the test with flying colors. In summary, we thought the following points were key to our success.

Talent organization is an important aspect. Developers and business professionals should have a keen sense of the challenges coming, discover issues in advance, and prepare to handle them. Business teams should head the emergency response team, sort out the dependencies and corresponding backend systems, and involve product line and database owners. The emergency response team should be in charge of planning, manpower allocation, and resource coordination. It should be the thread that ties business teams, backend product teams, and resource teams together.

One important lesson is to use a database that is sufficiently elastic so that you can scale up when there are traffic peaks and scale down when things go back to normal. While automated O&M is great, and every cloud O&M component should be automated, we also need to have emergency toggles for many key operations to ensure that, in extreme cases such as what we've seen here, we can switch from auto mode to manual mode to keep things smooth and efficient.

Prepare for system bottlenecks. This means that you should do advance planning for both businesses and database products. Services should have downgrading and throttling measures in place. In the case of stress starting to overwhelm the system, non-essential features can be downgraded or throttled to ensure the smooth running of the core business. Databases should be able to achieve instant throughput increase by using optimizations alone, without scaling up or out. More importantly, these optimizations should have good compatibility with different deployment environments and database architectures with appropriate tools and plans.

On the other hand, we need to have the means to evaluate and test the effectiveness of our plan. We can use CloudBench but the current cost is still too high. In the future, we would like to increase the automation of CloudBench to reduce its cost of use, so that our business teams can use it as well. Before major promotions, it should automatically perform a large number of single-link database stress tests to assess resource usage and identify performance bottlenecks, which will enable us to optimize database parameters to test the effectiveness of the plan.

In order to win this inevitable battle and fight against COVID-19, we must work together and share our experiences around the world. Join us in the fight against the outbreak through the Global MediXchange for Combating COVID-19 (GMCC) program. Apply now at https://covid-19.alibabacloud.com/
