By Jin Fenghua (a Technical Expert in the Middleware Field of the Information and Technology Department of China Everbright Bank)

As the banking business has grown, business systems have gradually shifted from monolithic, centralized architectures to distributed, microservice-based ones, and the demand for inter-process communication has increased. Distributed messaging platforms have emerged as an enterprise-level basic service to meet this demand. Meanwhile, different business systems have introduced different message middleware products into production.

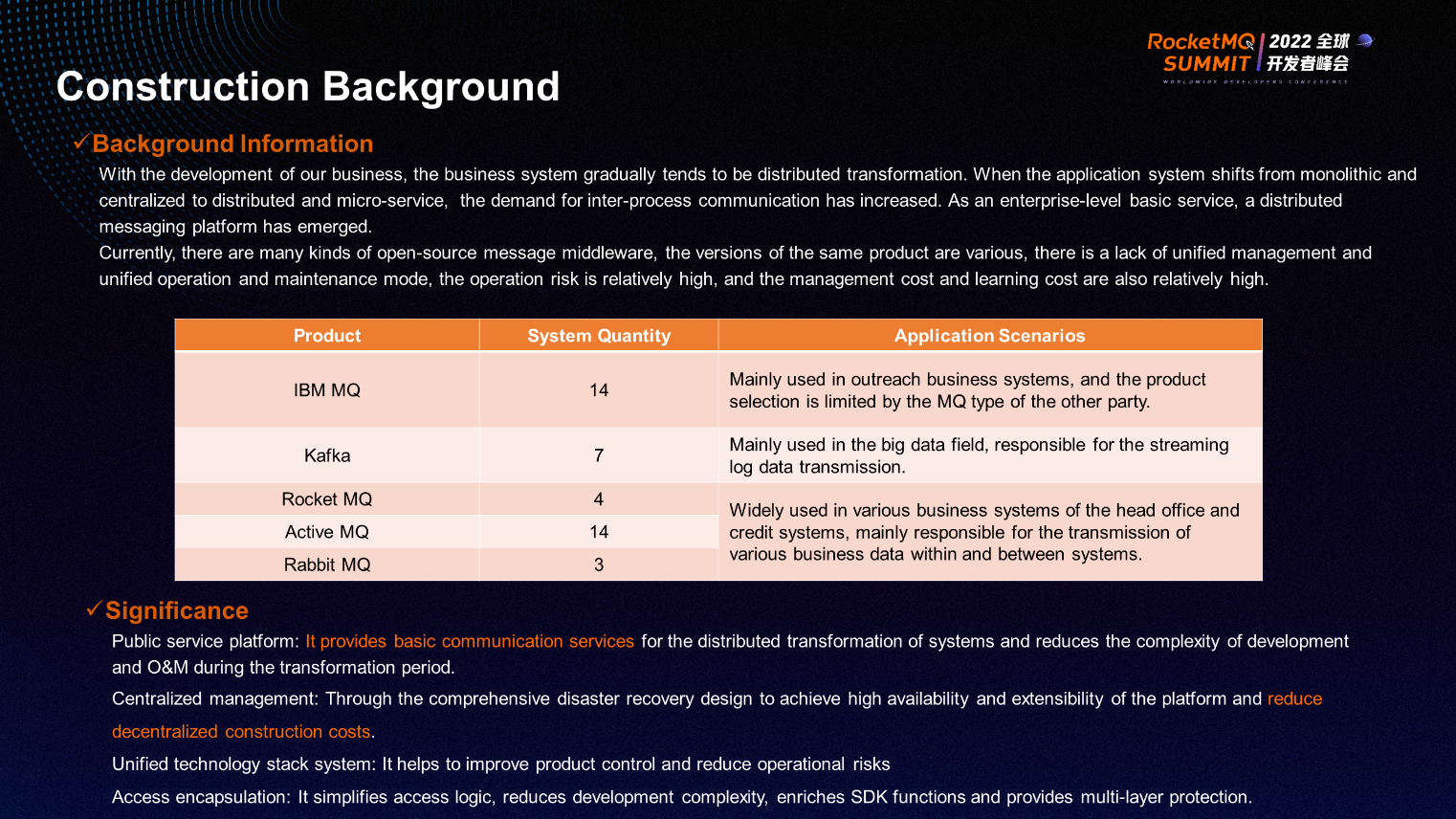

From an operation and maintenance perspective, five message middleware products are in use: IBM MQ, Kafka, RocketMQ, ActiveMQ, and RabbitMQ. IBM MQ is mainly used in outreach business systems, Kafka is mainly used in the big data field, and the latter three were introduced into various systems during their distributed and microservice transformation. The main ideas behind building a distributed messaging platform as an enterprise-level basic service are:

(1) Building a Public Service Platform: The platform provides basic communication services for the subsequent distributed transformation of the entire system.

(2) Centralized Management: Through the comprehensive disaster recovery design of the platform, the Message Service achieves high availability and extensibility for upper-layer applications.

(3) Unified Technology Stack System: After the distributed transformation, at least three types of products have been introduced, each in several versions, so the operation and maintenance risks are high. Building the platform is expected to unify the technology stack.

(4) Access Encapsulation: From the development perspective, access is encapsulated in a unified SDK component, which simplifies the access logic and reduces access complexity for development teams. In addition, the SDK's rich features can meet more of the application layer's requirements for the Message Service.

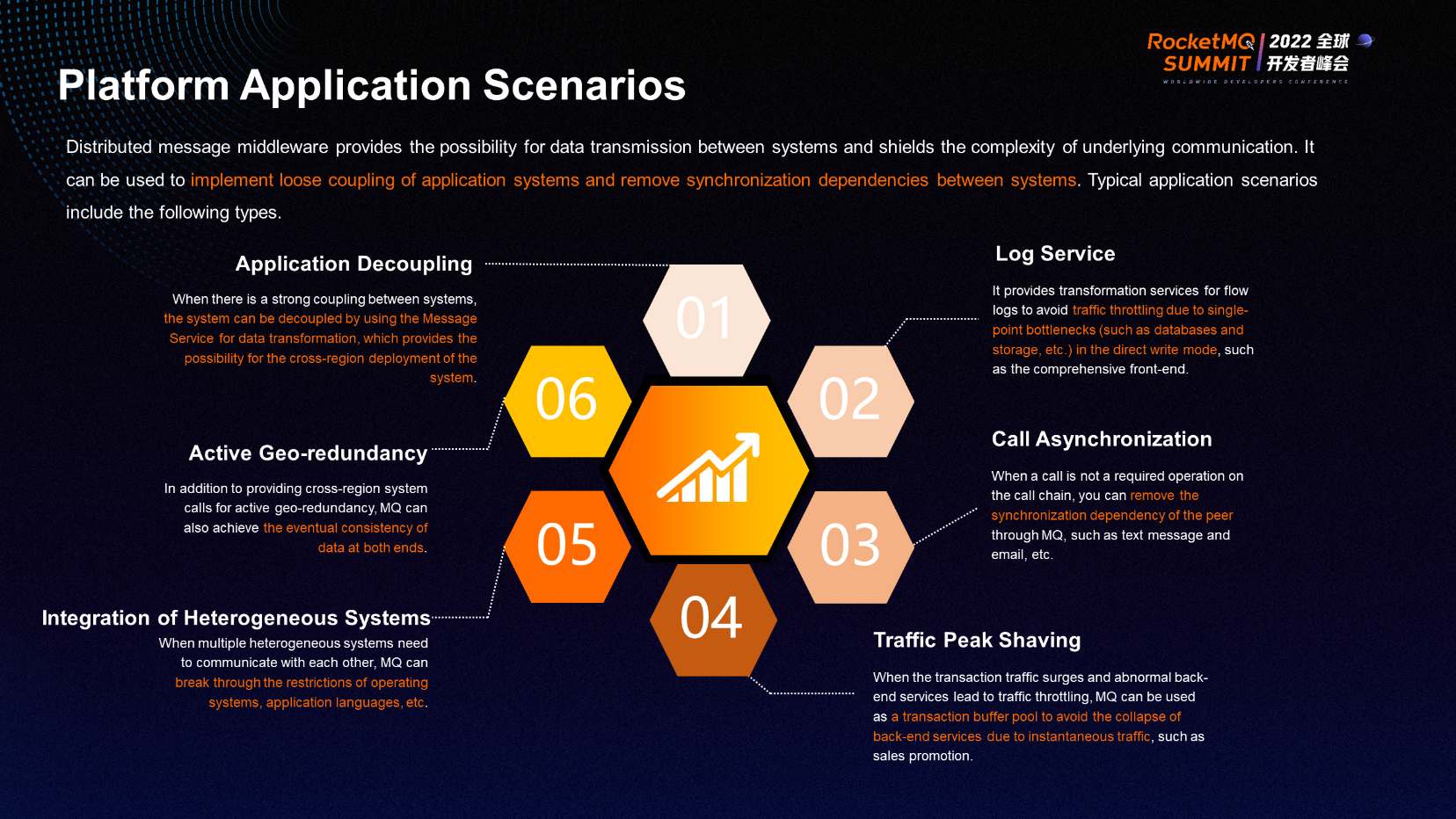

The following application scenarios apply to the messaging platform and to message middleware in general.

(1) Application Decoupling: When systems are strongly coupled, they can be decoupled by passing data through the Message Service, which also makes cross-region deployment of the systems possible.

(2) Log Service: It relays transaction flow logs so that writing them directly (for example, from the comprehensive frontend) does not cause traffic throttling at single-point bottlenecks such as databases and storage. Previously, application systems stored the transaction flow directly in a database, so a database, storage, or network failure could throttle the whole system.

(3) Call Asynchronization: This is the most common application scenario. When a call is not a required step on the call chain (such as sending a text message or an email), you can use MQ to remove the synchronous dependency on the peer.

(4) Traffic Peak Shaving: It mainly applies to traffic surge scenarios (such as Double 11 and Double 12). Without buffering in front of the backend, the backend application system is likely to be overwhelmed by the instantaneous traffic. When transaction traffic surges and backend services become abnormal and start throttling, MQ can be used as a transaction buffer pool. All requests first enter the messaging platform, and the backend application system then pulls messages from the platform and processes transactions at a rate that matches its processing capacity, which prevents backend services from collapsing under instantaneous traffic.

(5) Integration of Heterogeneous Systems: When multiple heterogeneous systems need to communicate with each other, MQ can overcome differences in operating systems, programming languages, and so on. By providing SDKs in different languages, the platform lets application systems written in different languages connect to it and integrate with one another.

(6) Active Geo-Redundancy: When a bank builds multi-active or active geo-redundant systems, data synchronization is required. In addition to supporting cross-region system calls, MQ can help achieve eventual consistency of data at both ends: local transaction operations are sent to the remote data center as messages, and the remote data center replays the transactions.
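The traffic peak shaving scenario in (4) can be illustrated with a minimal sketch. It uses Python's standard `queue.Queue` as a stand-in for the messaging platform. The function names `absorb_burst` and `drain` and the per-tick capacity are hypothetical and are not part of any RocketMQ API.

```python
import queue


def absorb_burst(buffer, requests):
    """Frontend side: enqueue every incoming request without waiting for the backend."""
    for request in requests:
        buffer.put(request)


def drain(buffer, capacity_per_tick):
    """Backend side: pull at most `capacity_per_tick` messages in one processing tick."""
    batch = []
    while len(batch) < capacity_per_tick:
        try:
            batch.append(buffer.get_nowait())
        except queue.Empty:
            break
    return batch


# A burst of 10 requests arrives at once, but the backend handles only 4 per tick.
buffer = queue.Queue()
absorb_burst(buffer, range(10))

ticks = []
while not buffer.empty():
    ticks.append(drain(buffer, capacity_per_tick=4))
```

The burst is accepted immediately, while the backend works through it in batches no larger than its own capacity.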

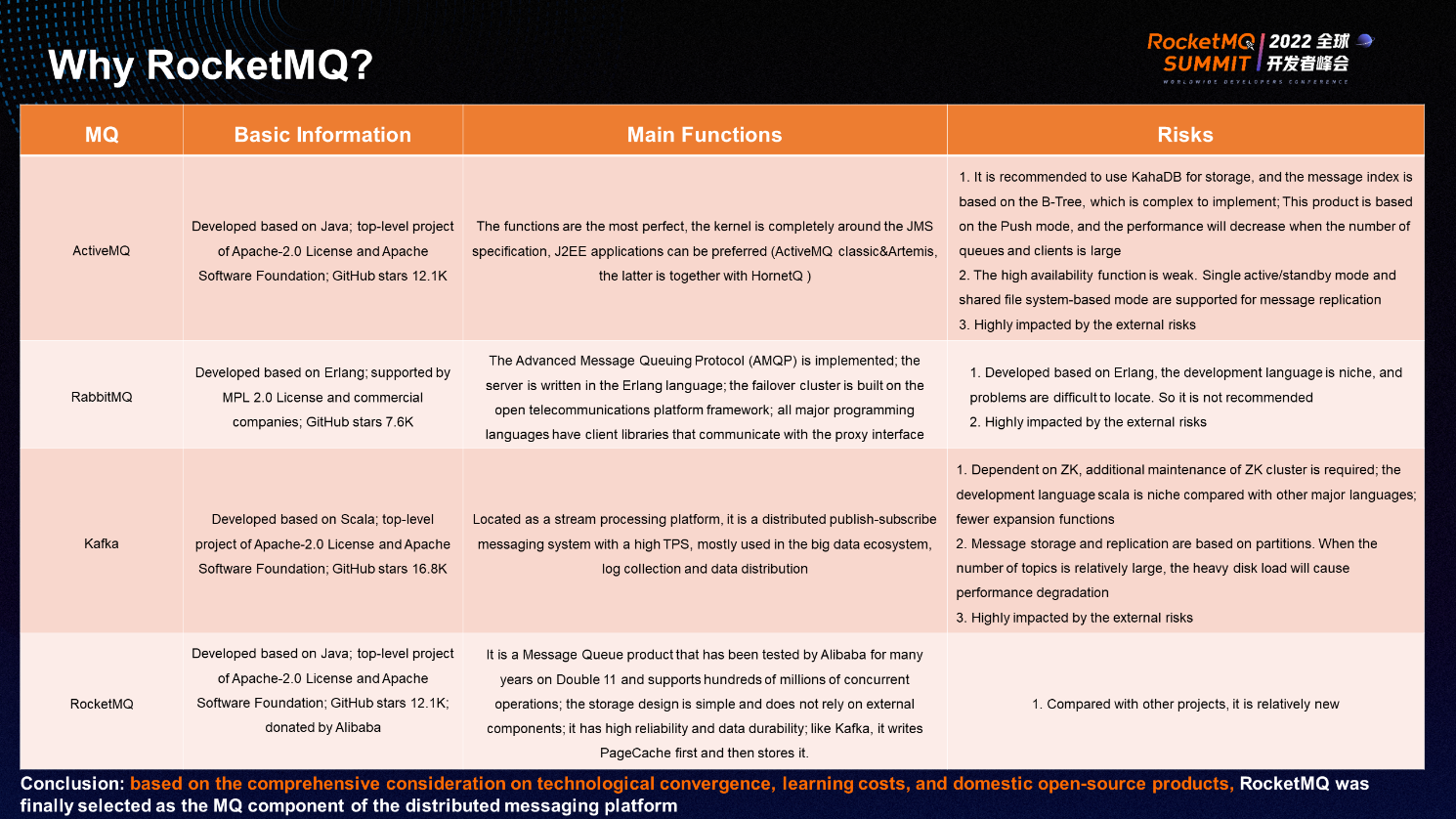

The preceding figure compares several MQ products. The first criterion was convergence of the technology stack: our stack is mainly Java, so middleware products developed in Java are preferred. The second was learning cost: the message middleware should be simple in structure and clear in architecture. We also prefer domestic open-source products. Therefore, RocketMQ was finally selected as the cornerstone of the messaging platform.

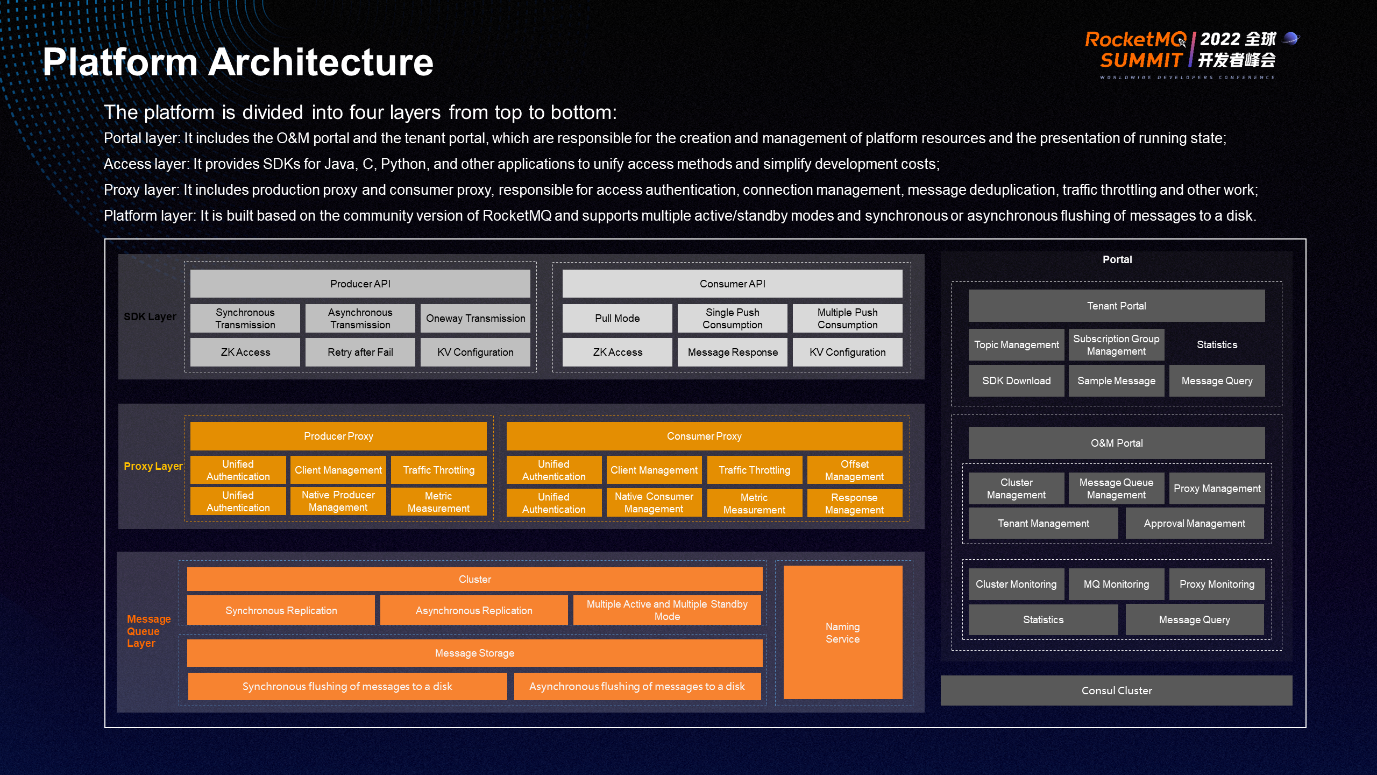

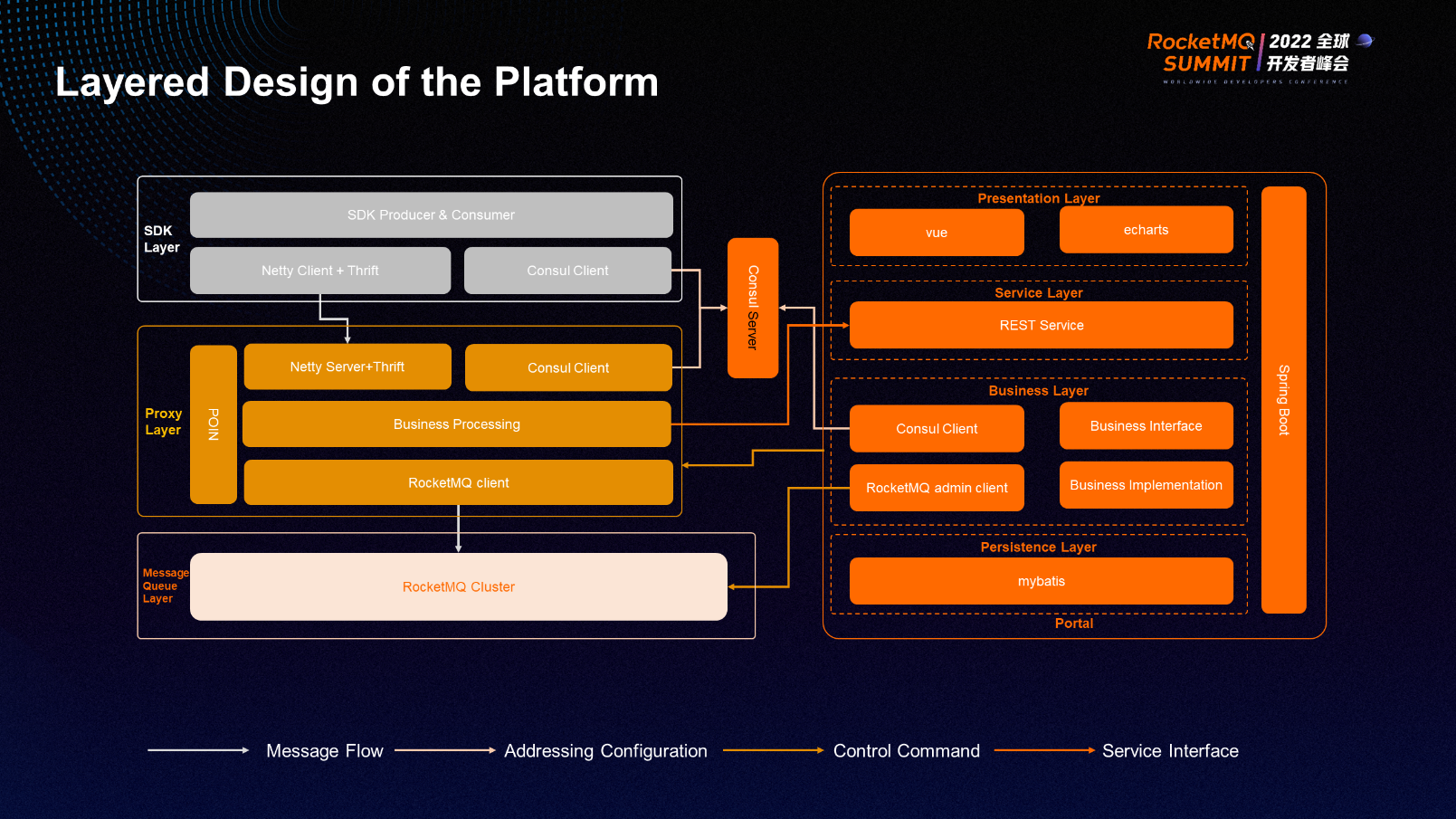

The platform architecture has four layers: a portal layer on the far right and three layers stacked from top to bottom on the left. The portal layer is responsible for creating, managing, and monitoring all resources. It is divided into two parts: the tenant portal and the O&M portal. Each application system that connects to the messaging platform is assigned to the tenant portal as a tenant. Each tenant manages, monitors, and measures its own resources and can only see the resources related to it. The O&M portal is equivalent to an administrator. It manages all resources and tenants in a unified manner, including managing the backend message clusters and monitoring message queues.

The other three layers are on the left. The first is the SDK layer: applications connect to the messaging platform through SDKs, which are currently available for Java, C, and Python. The second is the proxy layer, which sits between the application systems and the underlying message cluster. With the proxy layer in place, the application layer never communicates directly with the underlying message cluster, and all communication goes through the proxy layer. The bottom layer is the message cluster, built on RocketMQ.

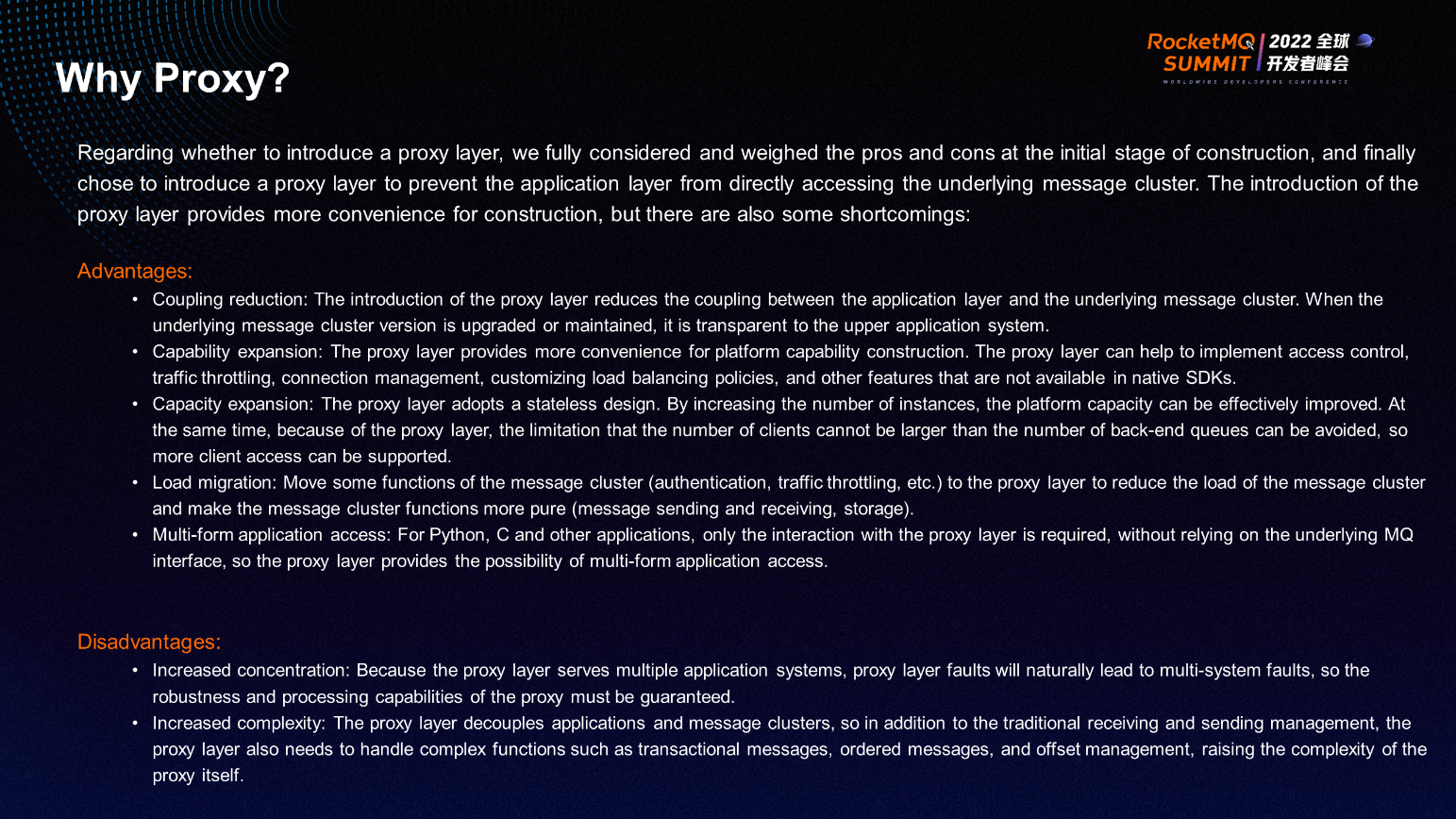

We introduced the proxy layer to prevent the application layer from directly accessing the underlying message cluster. This leaves more room for building platform features, but it also has some shortcomings. The advantages of introducing the proxy layer are as follows:

(1) Coupling Reduction: The proxy layer reduces the coupling between the application layer and the underlying message cluster. Upgrading or maintaining the underlying message cluster is transparent to the upper application systems. Furthermore, if the underlying product is replaced later, only the proxy layer is affected, not the application systems above it.

(2) Capability Expansion: Without a proxy layer, everything an application system can ask of the Message Service is limited to the functions of the native SDK. With the proxy layer, more functions can be built on top of it (including access control, traffic throttling, connection management, and custom load balancing policies), which enriches the platform's capabilities.

(3) Capacity Expansion: Since the proxy layer is stateless, the capacity of the messaging platform can be increased by horizontally scaling the number of proxy instances, provided that the backend message cluster has sufficient capacity.

(4) Load Migration: Additional functions related to the Message Service (authentication, traffic throttling, and so on) are moved to the proxy layer, so the message cluster can focus purely on sending, receiving, and storing messages.

(5) Multi-Form Application Access: Python, C, and other applications only need to interact with the proxy layer and do not rely on the underlying MQ interface, so the proxy layer makes multi-form application access possible.
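As an illustration of the capabilities a proxy layer can add, the following minimal sketch combines access control with per-tenant traffic throttling using a token bucket. The token bucket is a common technique chosen here for illustration. The article does not say which algorithm the platform uses, and the `Proxy` and `TokenBucket` classes, tenant names, and return values are all hypothetical. Time is passed in explicitly to keep the example deterministic.

```python
class TokenBucket:
    """Allows bursts of up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, rate, capacity):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity
        self.last = 0.0

    def allow(self, now):
        # Refill according to elapsed time, capped at the bucket capacity.
        elapsed = now - self.last
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class Proxy:
    """Hypothetical proxy front door: check the tenant first, then its rate limit."""

    def __init__(self, allowed_tenants, rate, capacity):
        self.buckets = {t: TokenBucket(rate, capacity) for t in allowed_tenants}

    def handle(self, tenant, message, now):
        bucket = self.buckets.get(tenant)
        if bucket is None:
            return "DENIED"      # access control: unknown tenant
        if not bucket.allow(now):
            return "THROTTLED"   # traffic throttling: bucket is empty
        return "FORWARDED"       # a real proxy would send to the message cluster here


proxy = Proxy(["tenant-a"], rate=1, capacity=2)
results = [proxy.handle("tenant-a", "m", now=0.0) for _ in range(3)]
```

Because the limiter keeps only transient counters and no message data, adding more proxy instances remains straightforward in this sketch, although a per-instance limit then applies to each instance separately.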

There are two disadvantages of introducing the proxy layer:

(1) It increases platform concentration. Banking systems should avoid single points of failure, but centralizing processing on the platform creates one. As a result, the platform must meet higher requirements for robustness and processing capacity.

(2) It increases platform complexity. Because the proxy layer decouples applications from message clusters, it has to handle complex functions (such as transactional messages, ordered messages, and offset management) in addition to ordinary sending and receiving, which makes the proxy itself more complex.

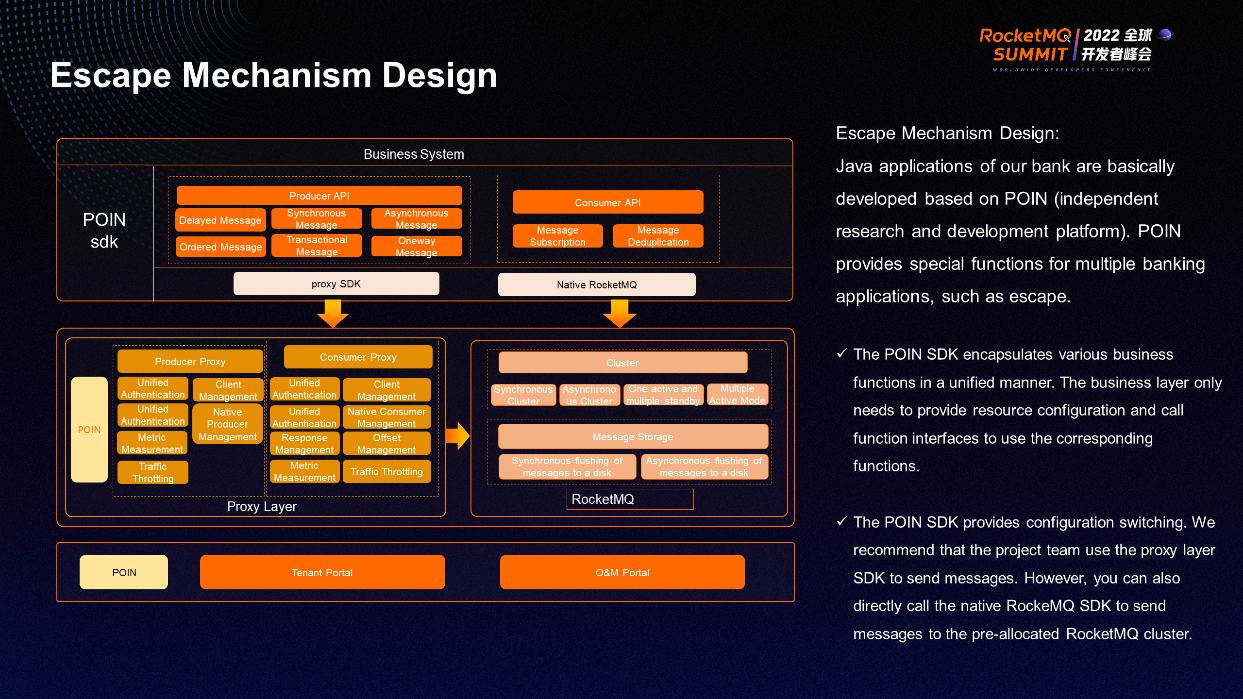

The platform adopts a layered design. The management console is on the far right, and the encapsulated SDK interface is at the top left. Underneath, the SDK layer uses an optimized combination of Netty and Thrift to interact with the proxy layer. The proxy layer is based on POIN. It receives requests from the SDK layer through Netty and runs the relevant business processing logic. It then either sends the message to the RocketMQ cluster through the SDK provided by RocketMQ or pulls the message from the RocketMQ cluster.

In addition, the platform has a simple escape mechanism. When the proxy layer is unavailable, additional configuration on the SDK lets the application layer bypass the proxy layer and send messages directly to, or pull them directly from, the RocketMQ cluster. This avoids a hard dependency of the application systems on the proxy layer.
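The escape mechanism can be pictured as a configuration switch inside the SDK. The sketch below is a minimal illustration under that assumption. The `MessagingClient` class, the `bypass_proxy` flag, and the two transport functions are hypothetical names and do not reflect the platform's real SDK.

```python
class MessagingClient:
    """Hypothetical SDK client that can be configured to skip the proxy layer."""

    def __init__(self, proxy_send, direct_send, bypass_proxy=False):
        self.proxy_send = proxy_send      # normal path: SDK -> proxy -> cluster
        self.direct_send = direct_send    # escape path: SDK -> cluster
        self.bypass_proxy = bypass_proxy  # set through configuration by operators

    def send(self, message):
        if self.bypass_proxy:
            return self.direct_send(message)
        return self.proxy_send(message)


def via_proxy(message):
    # Stand-in for the real proxy transport.
    return ("proxy", message)


def via_broker(message):
    # Stand-in for a direct connection to the message cluster.
    return ("broker", message)


normal = MessagingClient(via_proxy, via_broker)
escaped = MessagingClient(via_proxy, via_broker, bypass_proxy=True)
```

Application code calls `send` the same way in both modes, so switching paths needs only a configuration change.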

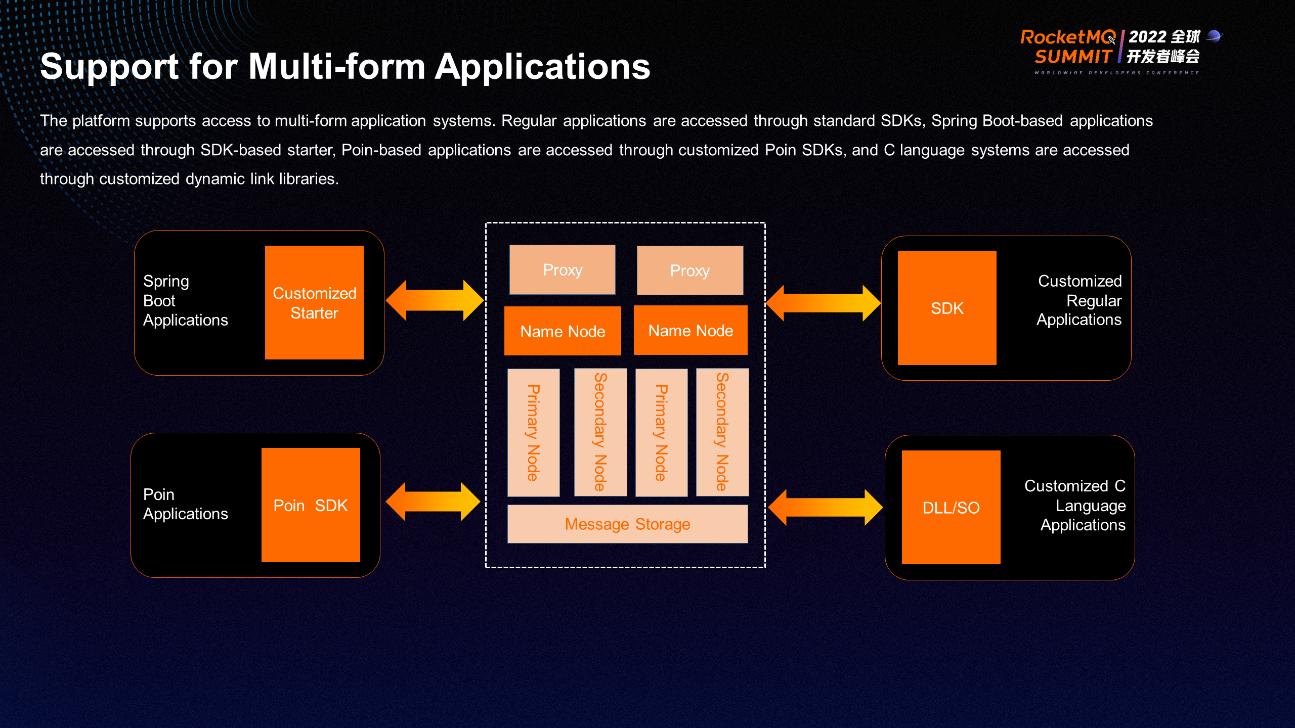

The platform supports multiple forms of applications. For Spring Boot applications, we have customized an MQ Spring Boot Starter. Ordinary Java applications connect through the SDK provided by the platform, POIN applications connect through their integrated SDK, and C and Python applications connect through the corresponding SDKs.

Currently, the cluster is deployed across two data centers. The RocketMQ cluster has four primary nodes and four secondary nodes, cross-deployed between the two centers. Above it, each center runs two production proxies and two consumption proxies, along with platform-related Consul applications and supporting services such as the management console and database services. After some key applications went live, we added a 2 + 2 RocketMQ cluster. The upper layers are still deployed as a single unified set.

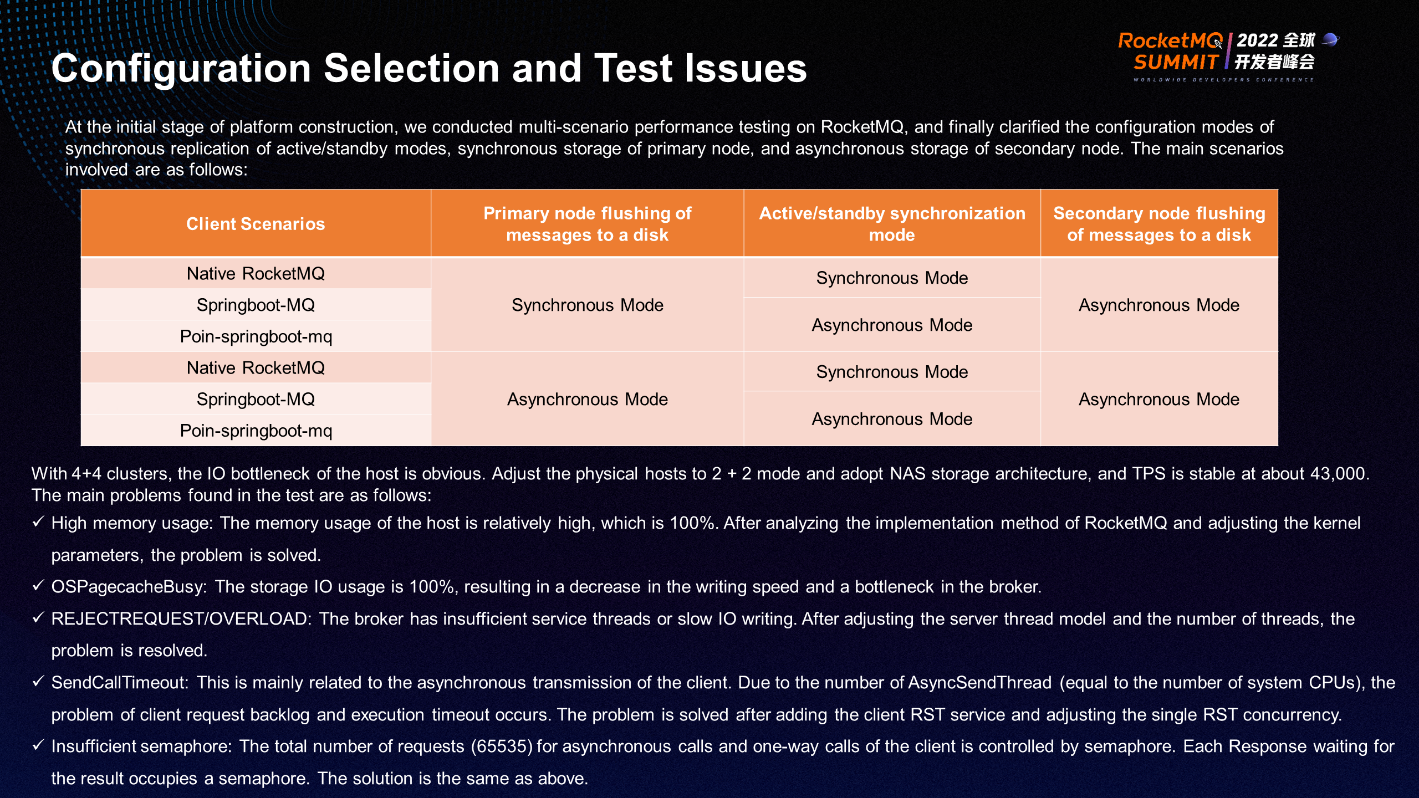

Many tests were conducted at the beginning of platform construction. They covered different client scenarios (connecting to the message cluster through RocketMQ's native SDK, through Springboot-MQ, through Poin-springboot-MQ, and so on) and different storage-node settings (whether the primary and secondary nodes record data synchronously or asynchronously, and whether data is replicated synchronously or asynchronously). The results differed little across these scenarios. The initial TPS was about 43,000, but some problems occurred during testing:

(1) Host memory usage was high, reaching 100%. The problem was solved by analyzing how RocketMQ is implemented and adjusting kernel parameters accordingly.

(2) OSPagecacheBusy: Storage I/O usage reached 100%, which slowed writes and made the broker a bottleneck. The corresponding settings were adjusted.

(3) REJECTREQUEST/OVERLOAD: These were mainly caused by traffic throttling triggered by some broker-side configurations.

(4) SendCallTimeout and Insufficient Semaphore: These are mainly related to asynchronous sending on the client. If the client sends asynchronously under too much pressure, SendCallTimeout and insufficient-semaphore errors may occur. Both are tied to the nature of asynchronous calls.

After these issues were addressed, performance improved substantially.
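To show why an insufficient-semaphore error can appear under heavy asynchronous sending, here is a generic sketch of a sender that bounds its in-flight requests with a semaphore. It is not the RocketMQ client's actual code. The `AsyncSender` class, the `TooManyInFlight` exception, and the limits are hypothetical.

```python
import threading


class TooManyInFlight(Exception):
    """Raised when no permit can be acquired within the timeout."""


class AsyncSender:
    def __init__(self, max_in_flight):
        self._permits = threading.BoundedSemaphore(max_in_flight)

    def send_async(self, message, timeout_s):
        # Each in-flight request holds one permit until its response arrives.
        if not self._permits.acquire(timeout=timeout_s):
            raise TooManyInFlight(f"no permit within {timeout_s}s for {message!r}")
        return message  # a real client would hand the request to the network here

    def on_response(self):
        # Called when the broker responds or the request times out.
        self._permits.release()


sender = AsyncSender(max_in_flight=2)
sender.send_async("a", timeout_s=0.01)
sender.send_async("b", timeout_s=0.01)

try:
    sender.send_async("c", timeout_s=0.01)  # both permits are still held
    rejected = False
except TooManyInFlight:
    rejected = True
```

When responses return more slowly than new requests are issued, permits run out and further sends fail until `on_response` releases one. In this sketch, the remedies are to slow the sender down or to raise the limit.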

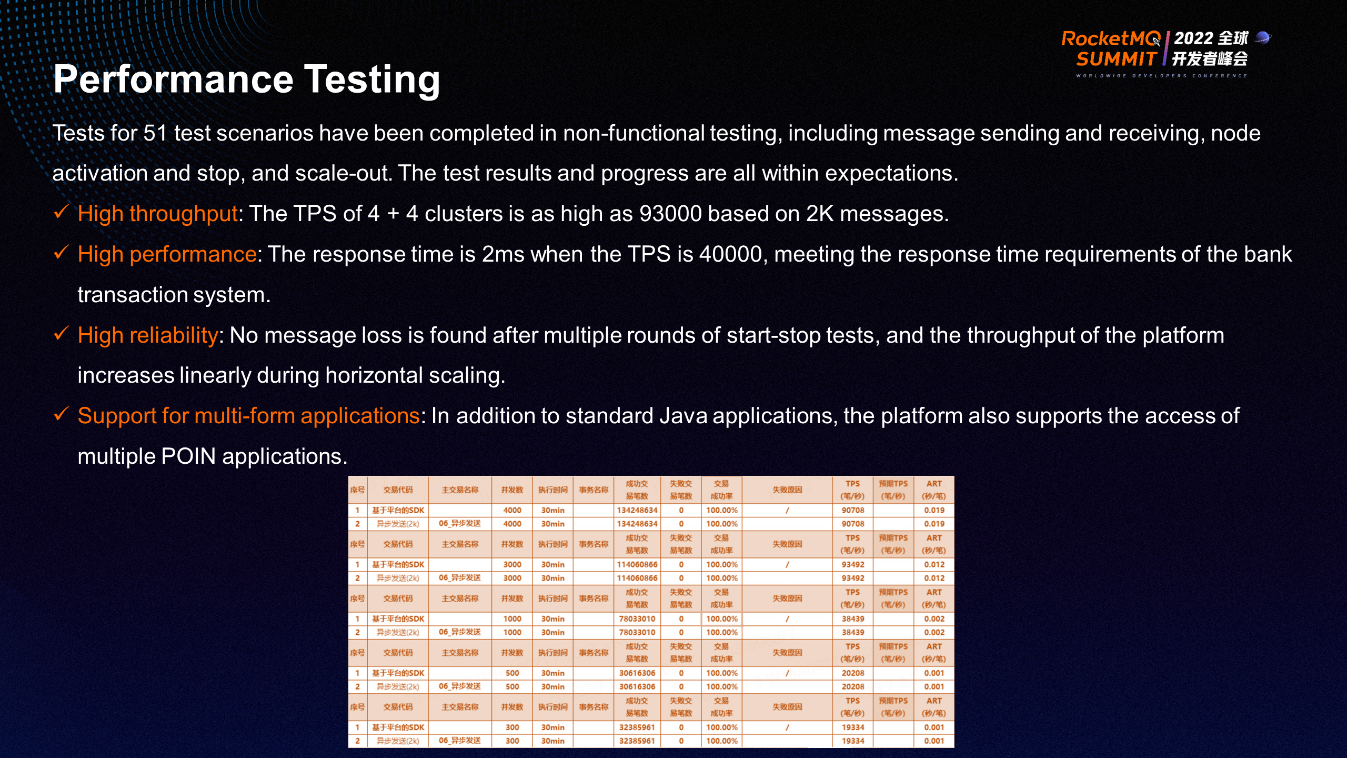

The earliest performance tests showed a TPS of 40,000 for the 4 + 4 cluster. After optimization, the overall TPS reached 93,000. The figures vary with the planning scenario. The 4 + 4 cluster now has a processing capacity of almost 160,000.

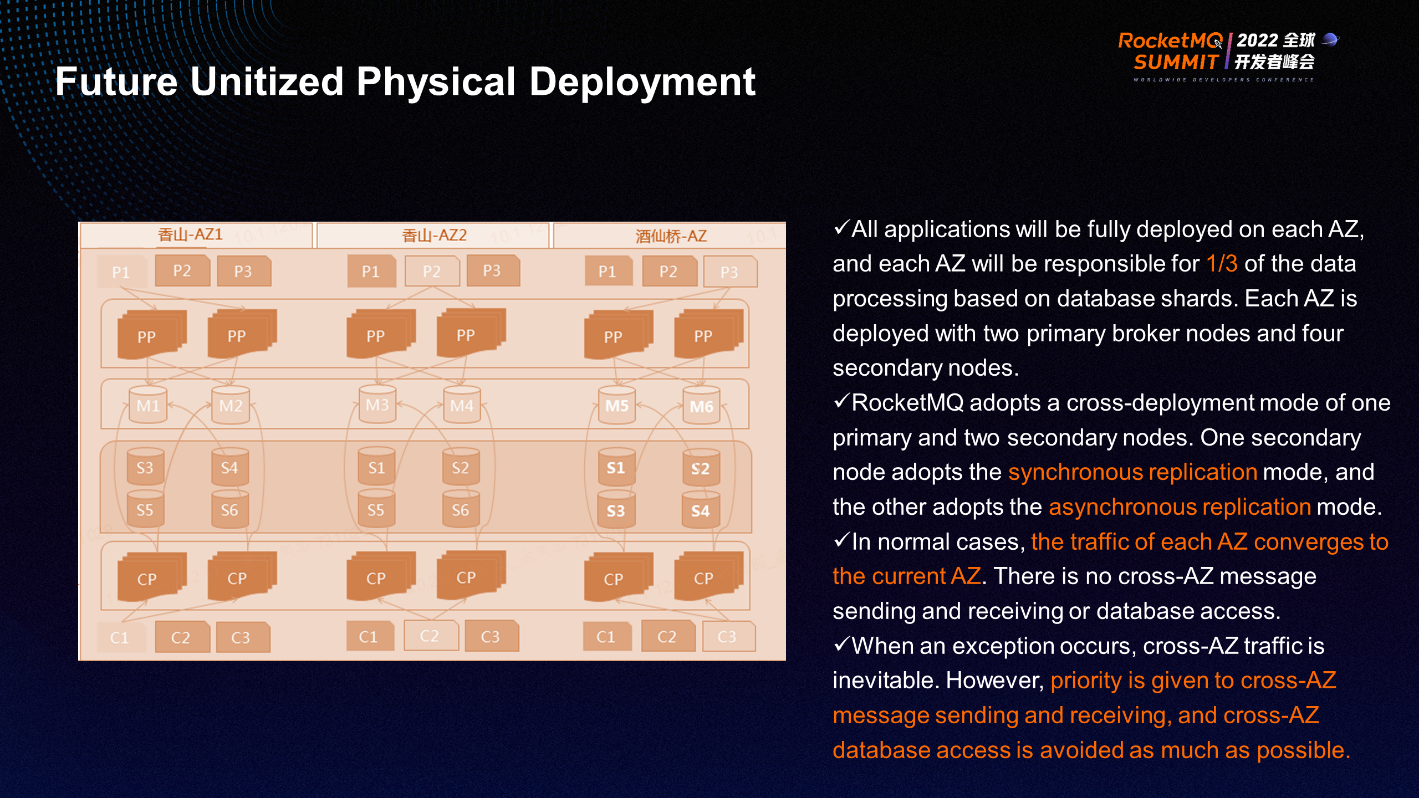

In the future, the construction of the distributed messaging platform will focus more on unitized construction. All applications will be fully deployed in each AZ, and each AZ will be responsible for one-third of the data processing based on database shards. RocketMQ will generally adopt a cross-deployment mode of one primary and two secondary nodes, where one secondary node uses synchronous replication and the other uses asynchronous replication. In normal cases, all data must be handled within its own AZ, and cross-AZ message sending and receiving is only considered in abnormal cases. Even then, cross-AZ access is limited to simple message sending and receiving. If database operations are involved in the underlying layer, the database will not be required to do cross-AZ access.

The focus of follow-up work is unitized deployment. Currently, the typical deployment architecture for distributed transformation is three AZs in the same city plus one AZ in a different city, so multiple data centers are required in the same city. Each AZ processes the data in its own shards, and the AZs back each other up. The remote AZ serves as the backup of the Beijing central AZ. Service calls, message sending and receiving, and data access between AZs are prohibited in normal cases.
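The shard-based division of work among three AZs can be sketched as a routing function. This is a minimal illustration that assumes a stable hash of a shard key and a ring-order backup rule. The AZ names, the hash choice, and the backup order are hypothetical and are not taken from the actual deployment.

```python
import zlib

AZS = ["az-1", "az-2", "az-3"]  # hypothetical AZ names


def home_az(shard_key):
    """Map a shard key to the AZ that owns it, using a stable hash."""
    return AZS[zlib.crc32(shard_key.encode("utf-8")) % len(AZS)]


def route(shard_key, healthy_azs):
    """Stay in the home AZ normally; cross AZs only when the home AZ is unhealthy."""
    home = home_az(shard_key)
    if home in healthy_azs:
        return home
    # Abnormal case: fall back to the next healthy AZ in ring order (an assumption).
    start = AZS.index(home)
    for step in range(1, len(AZS)):
        candidate = AZS[(start + step) % len(AZS)]
        if candidate in healthy_azs:
            return candidate
    raise RuntimeError("no healthy AZ available")


key = "customer-42"
home = home_az(key)
normal_target = route(key, set(AZS))
failover_target = route(key, set(AZS) - {home})
```

In the normal case every request for a shard stays in its home AZ, and only a failure of that AZ causes traffic to cross AZ boundaries.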

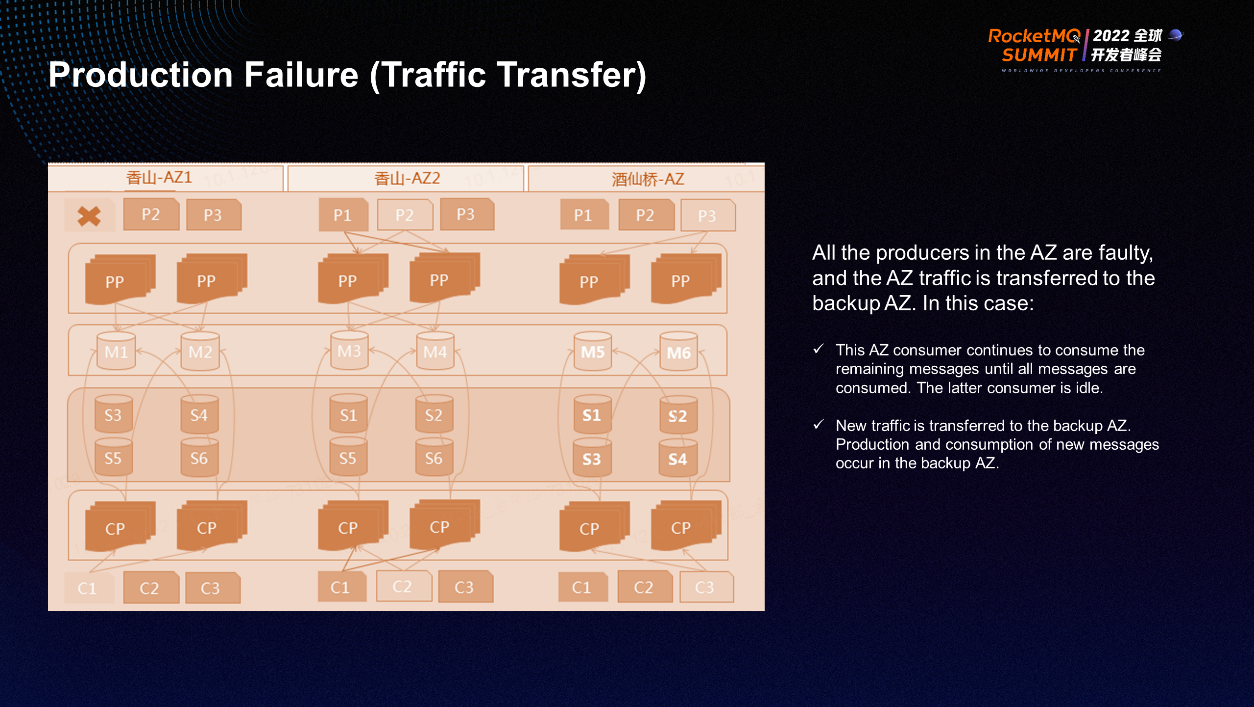

To match these system construction requirements, the messaging platform has to support new scenarios. The first is nearby access, including nearby access for production, nearby access for consumption, and cluster data synchronization between Beijing and other regions. The following failures may occur in this deployment scenario:

When the production application layer fails, the trading platform does no additional work, and the messaging platform continues to process messages normally. The upper-layer application switches its traffic to the backup AZ, and the affected AZ only consumes the remaining backlog messages. No other data is processed there.

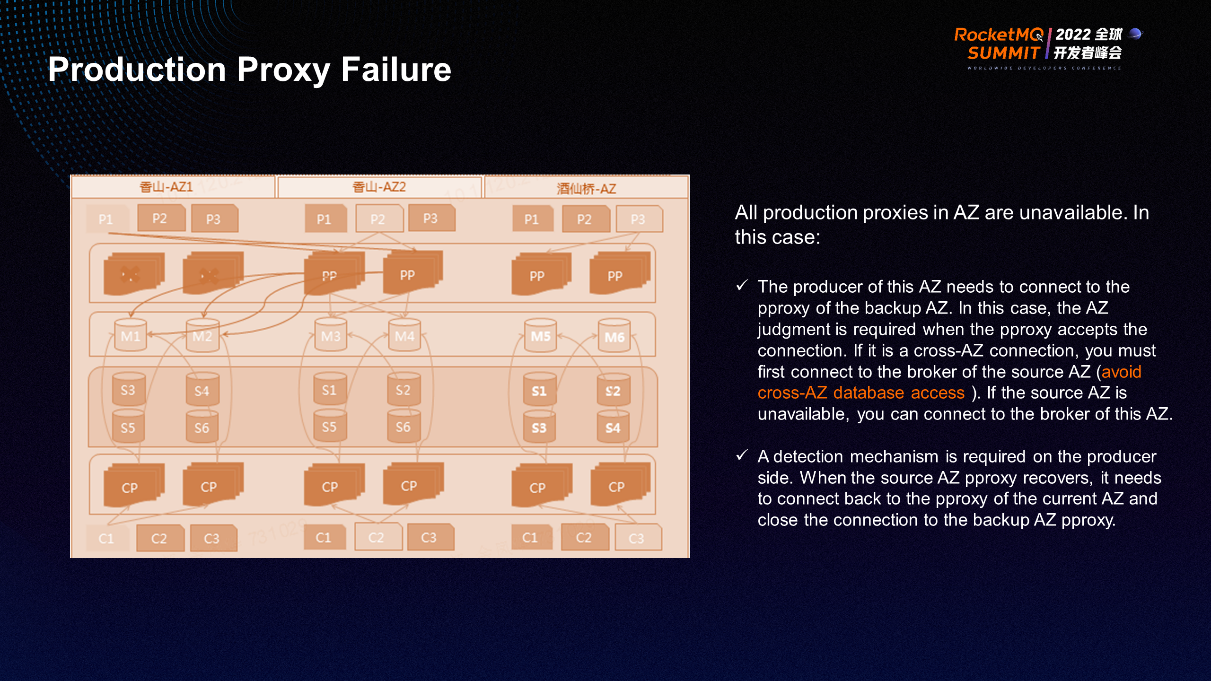

When the production proxy layer fails, the upper-layer applications must be able to detect the fault and connect to the production proxy in the backup AZ. That production proxy then makes the corresponding logical judgment and automatically routes the application's messages to the corresponding Broker on the faulty side, so consumption is still handled there as usual. Only message production crosses AZs. Consumption and other data processing do not cause cross-AZ access.
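The production proxy failover can be sketched as two small decisions. This illustration follows one reading of the paragraph above: the SDK switches to the backup AZ's proxy, and that proxy still delivers to the Broker in the application's original AZ. All function names, AZ names, and the health map are hypothetical.

```python
def pick_proxy(local_az, backup_az, proxy_healthy):
    """SDK side: use the local production proxy unless it is detected as failed."""
    return local_az if proxy_healthy.get(local_az, False) else backup_az


def pick_broker(home_az):
    """Proxy side: keep delivering to the Broker in the application's home AZ."""
    return home_az


def produce(local_az, backup_az, proxy_healthy):
    proxy_az = pick_proxy(local_az, backup_az, proxy_healthy)
    broker_az = pick_broker(local_az)
    # Production crosses AZs only when the proxy and the Broker are in different AZs.
    return {"proxy": proxy_az, "broker": broker_az, "cross_az": proxy_az != broker_az}


healthy = produce("az-1", "az-2", {"az-1": True, "az-2": True})
failed = produce("az-1", "az-2", {"az-1": False, "az-2": True})
```

In the failed case only the production hop crosses AZs, while the Broker and its consumers stay in the original AZ.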

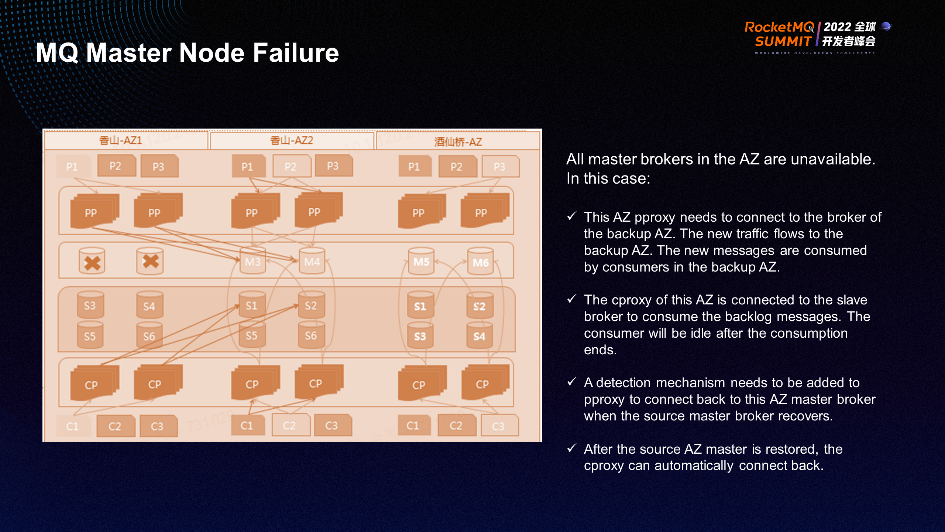

When the Broker fails, the production proxy must detect the failure and connect to the Broker in the backup AZ. From then on, all newly produced messages are processed there. The purpose of this connection is mainly to consume the remaining backlog messages.

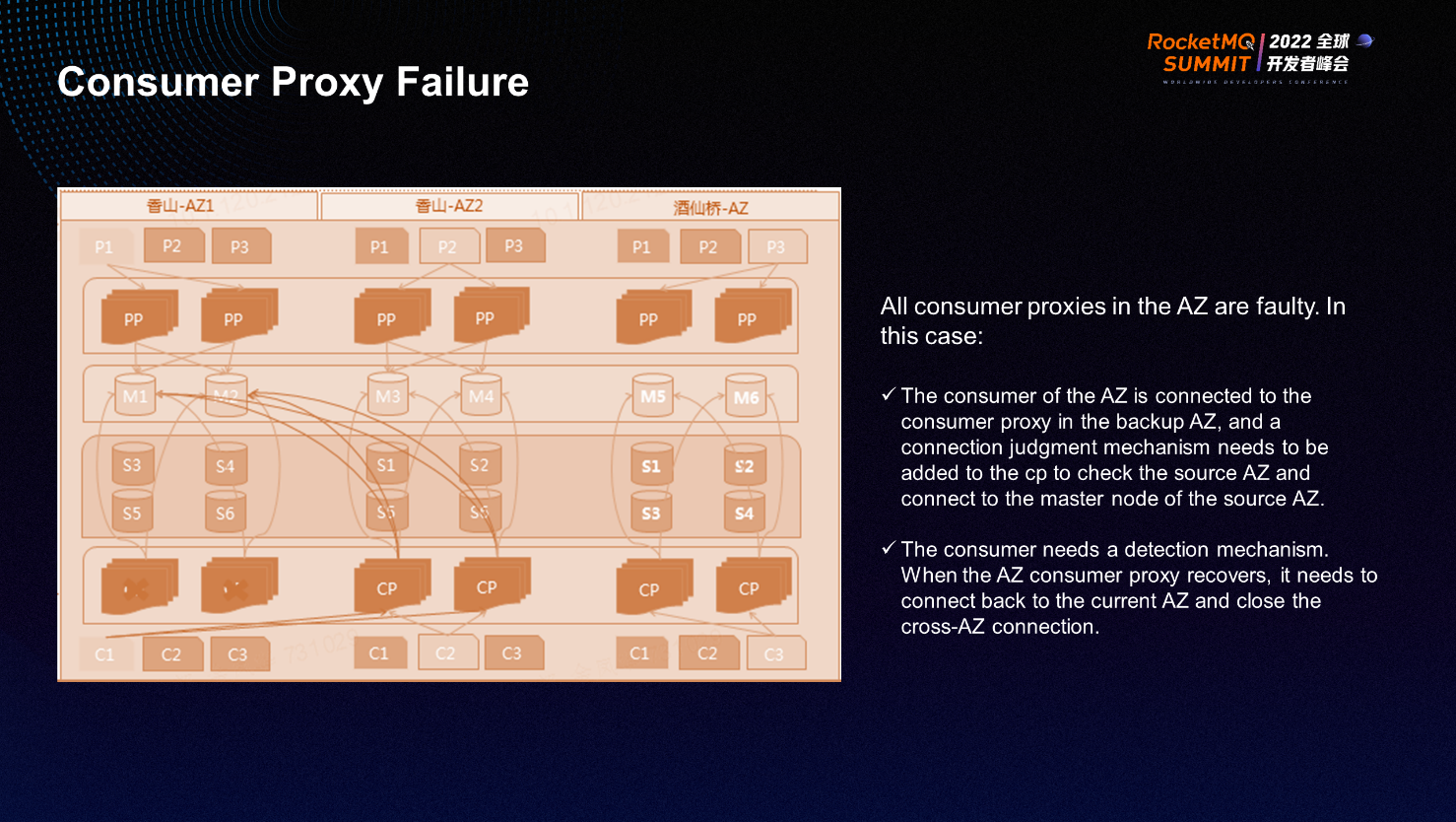

A consumption proxy failure is similar to a production proxy failure. When it occurs, the application layer must detect it and switch its proxy connection to the backup proxy. The backup proxy will connect to the proxy of the AZ, pull the messages produced by the AZ producer, and deliver them to the AZ for normal consumption. The entire message-pulling process is queued, but the data processing is done within the AZ.

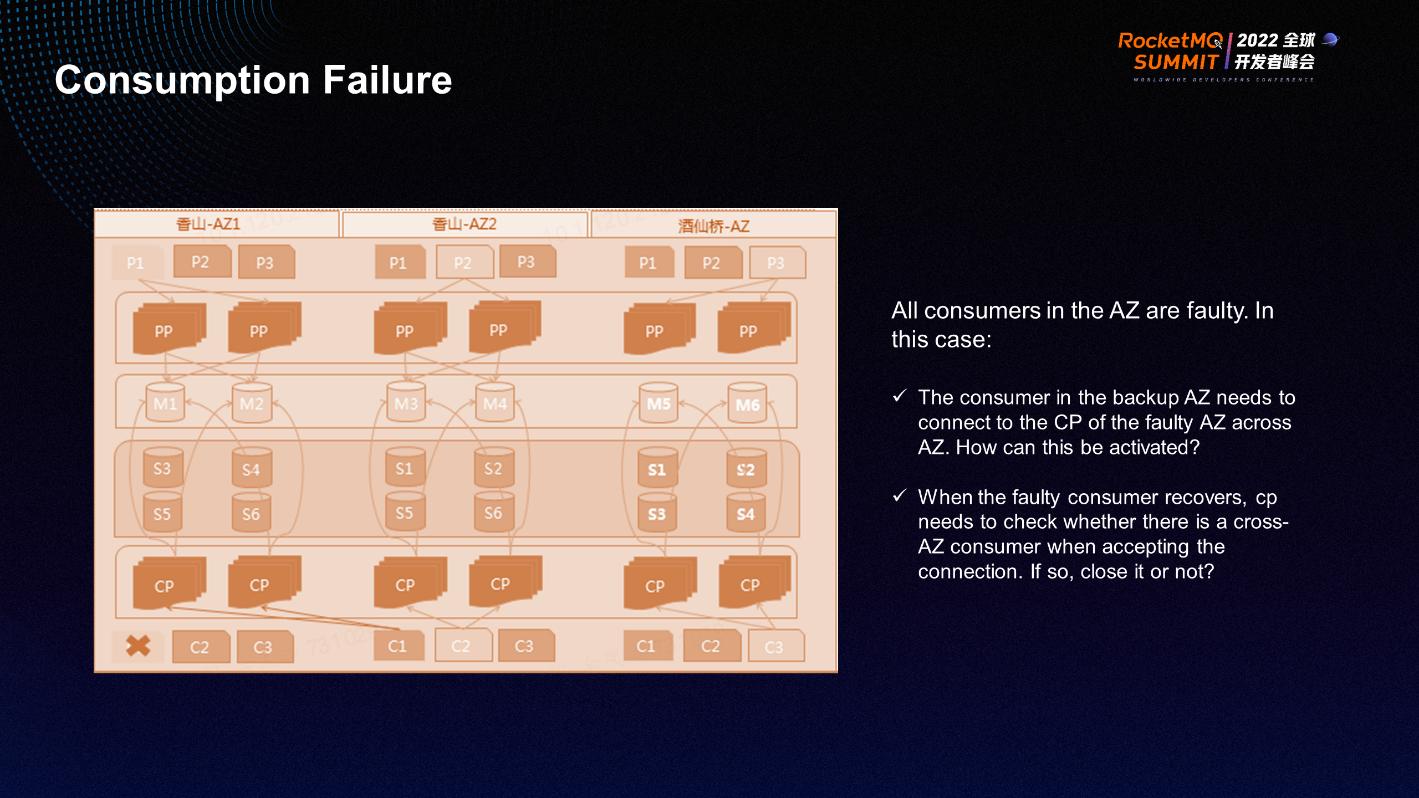

The last case is a consumer failure. The consumption SDK must have some detection capability. When all consumers in the main AZ fail, the consumers in the backup AZ must be activated. They then have to pull data across AZs, resulting in cross-AZ consumption.

AlibabaMQ for Apache RocketMQ Official Site: https://www.alibabacloud.com/product/mq

Join the Apache RocketMQ Community: https://github.com/apache/rocketmq
