By Tang Huamin (Huamin), Container Platform Technical Expert at Alibaba Cloud

The Linux container is a lightweight virtualization technology. It uses namespace and cgroup technologies to isolate and restrict the resources of processes that share the host kernel. This article takes Docker as an example to provide a basic description of container images and container engines.

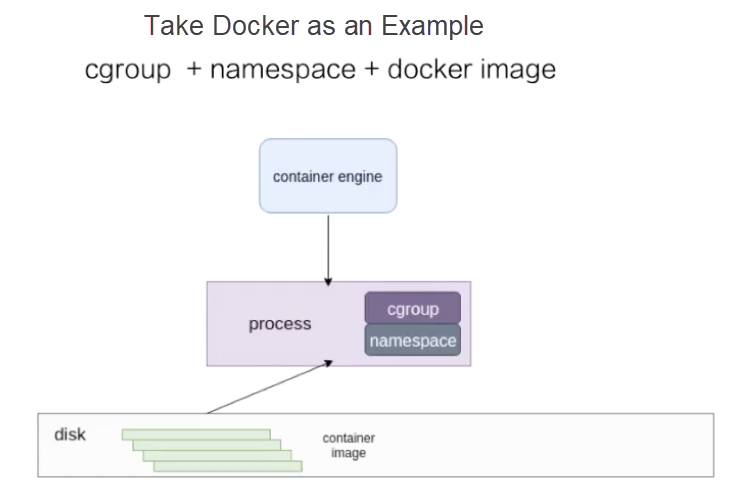

A container is a lightweight virtualization technology. Unlike a virtual machine (VM), it has no hypervisor layer. The following figure shows the startup process of a container.

At the bottom layer, the disk stores container images. The container engine at the upper layer can be Docker or another container engine. The container engine sends a request, such as a container creation request, to run the container image on the disk as a process on the host.

For containers, the resources used by the process must be isolated and restricted. This is implemented by the cgroup and namespace technologies in the Linux kernel. This article uses Docker as an example to describe resource isolation and container images.

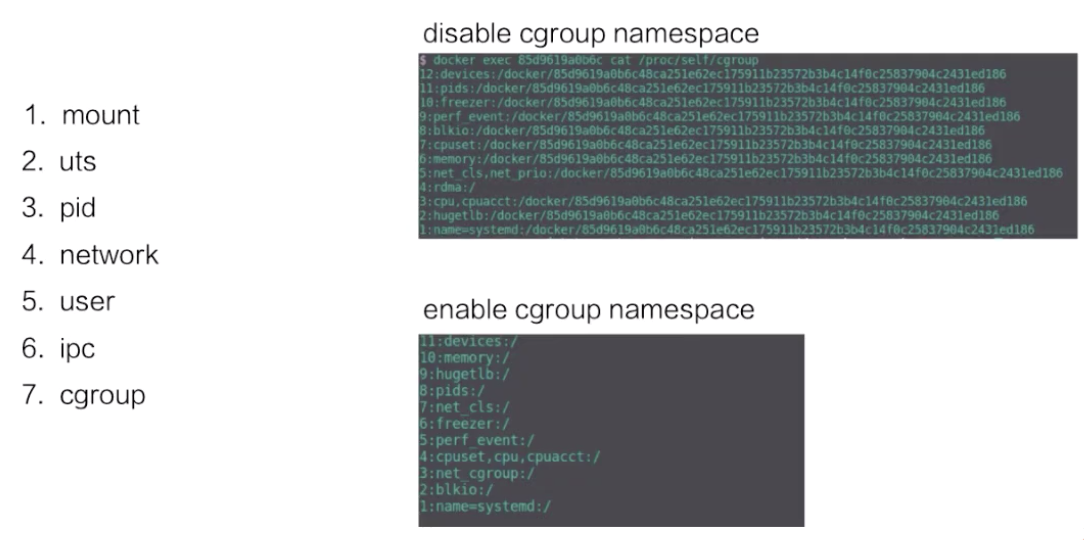

Namespace technology is used for resource isolation. At the time of writing, the Linux kernel provides seven namespaces, and Docker uses six of them. The seventh, the cgroup namespace, is not used by Docker but is implemented in runC.
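One way to see which namespaces a process belongs to is to read the symbolic links under `/proc/<pid>/ns` on a Linux host. Each link target has the form `type:[inode]`; two processes in the same namespace show the same inode, while a process inside a container shows different inodes from a process on the host. The following is a minimal sketch, assuming a Linux `/proc` file system; the helper names `parse_ns_link` and `list_namespaces` are illustrative, not part of any Docker tool.

```python
import os
import re

# Matches the target of a /proc/<pid>/ns/<name> symlink, e.g. "pid:[4026531836]".
NS_LINK = re.compile(r"^(?P<kind>[a-z_]+):\[(?P<inode>\d+)\]$")


def parse_ns_link(target: str) -> tuple[str, int]:
    """Split a namespace symlink target into (namespace type, inode number)."""
    match = NS_LINK.match(target)
    if match is None:
        raise ValueError(f"not a namespace link: {target!r}")
    return match.group("kind"), int(match.group("inode"))


def list_namespaces(pid: str = "self") -> dict[str, int]:
    """Return {entry name: namespace inode} for one process (Linux only)."""
    ns_dir = f"/proc/{pid}/ns"
    result = {}
    for name in sorted(os.listdir(ns_dir)):
        _, inode = parse_ns_link(os.readlink(os.path.join(ns_dir, name)))
        result[name] = inode
    return result


if __name__ == "__main__" and os.path.isdir("/proc/self/ns"):
    # Compare this output on the host and inside a container (docker exec).
    for name, inode in list_namespaces().items():
        print(f"{name:20} {inode}")
```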

The following describes the namespaces in sequence:

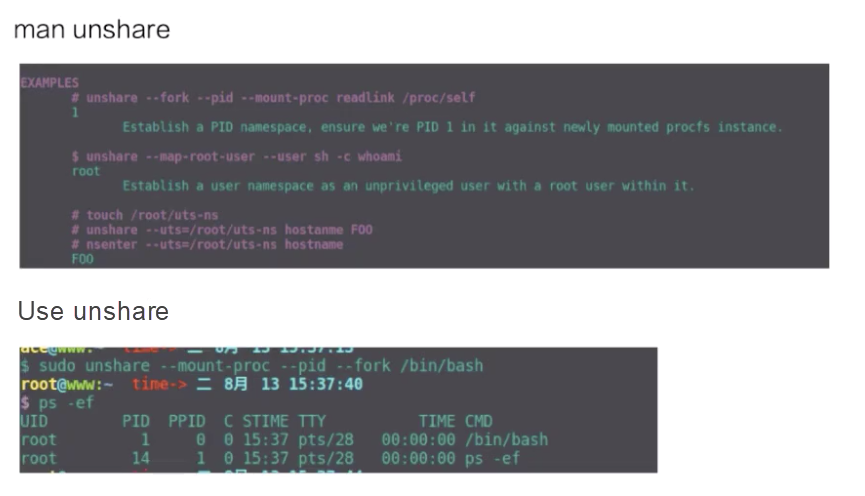

The -v parameter can bind-mount directories and files from the host so that they are visible in the container. The following example shows how to create a namespace by using the unshare command.

The upper part of the figure shows how unshare is used, and the lower part shows a pid namespace created by the unshare command. The bash process runs in a new pid namespace: ps reports its PID as 1, which confirms that bash is the first process of a new pid namespace.
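The kind of command shown in the figure can be assembled from the util-linux `unshare(1)` options. The sketch below is an illustration only and is not necessarily the exact command in the figure; the helper name `build_unshare_cmd` is hypothetical. With a new pid namespace, `--fork` makes the child (not unshare itself) become PID 1, and `--mount-proc` remounts `/proc` so that ps only lists processes of the new namespace.

```python
import shlex

# Namespace names mapped to the corresponding util-linux unshare(1) options.
UNSHARE_FLAGS = {
    "mount": "--mount",
    "uts": "--uts",
    "ipc": "--ipc",
    "net": "--net",
    "pid": "--pid",
    "user": "--user",
    "cgroup": "--cgroup",
}


def build_unshare_cmd(namespaces, program="bash"):
    """Return an argv list that runs `program` in new namespaces of the given types."""
    flags = []
    for ns in namespaces:
        if ns not in UNSHARE_FLAGS:
            raise ValueError(f"unknown namespace type: {ns}")
        flags.append(UNSHARE_FLAGS[ns])
    if "pid" in namespaces:
        # --fork: the forked child becomes PID 1 in the new pid namespace.
        # --mount-proc: mount a fresh /proc (this also implies a new mount namespace).
        flags += ["--fork", "--mount-proc"]
    return ["unshare", *flags, program]


if __name__ == "__main__":
    # Run the printed command as root, then type `ps` in the new shell.
    print(shlex.join(build_unshare_cmd(["pid"])))
```

Running the printed command (`unshare --pid --fork --mount-proc bash`) as root and then typing `ps` should list bash with PID 1, matching the behavior described above.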

Cgroup technology is used for resource restriction. Docker supports two cgroup drivers: systemd and cgroupfs.
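The choice of driver mainly changes where a container's cgroup is created. The sketch below assumes the default layout under cgroup v1 (one hierarchy per controller mounted at `/sys/fs/cgroup/<controller>`) and no custom parent cgroup; the function name `container_cgroup_dir` is hypothetical. The driver a daemon is using is reported as "Cgroup Driver" in the output of `docker info`.

```python
import posixpath

CGROUP_ROOT = "/sys/fs/cgroup"  # assumed cgroup v1 mount point


def container_cgroup_dir(driver: str, controller: str, container_id: str) -> str:
    """Return the default cgroup v1 directory of a Docker container."""
    if driver == "cgroupfs":
        # cgroupfs driver: Docker manages a "docker" group directly in each hierarchy.
        leaf = posixpath.join("docker", container_id)
    elif driver == "systemd":
        # systemd driver: each container is a transient scope unit under system.slice.
        leaf = posixpath.join("system.slice", f"docker-{container_id}.scope")
    else:
        raise ValueError(f"unknown cgroup driver: {driver}")
    return posixpath.join(CGROUP_ROOT, controller, leaf)


if __name__ == "__main__":
    for drv in ("cgroupfs", "systemd"):
        print(drv, container_cgroup_dir(drv, "memory", "<container-id>"))
```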

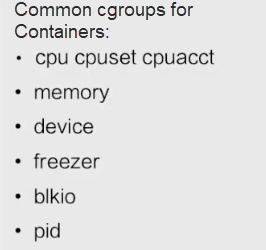

The following describes the cgroups commonly used for containers. The Linux kernel provides many cgroup subsystems, but only the following six types are used for Docker containers:

The other cgroups are not used for Docker containers. The split between common and uncommon cgroups applies only to Docker: runC supports every cgroup except rdma, but Docker does not enable the remaining ones. Therefore, Docker does not support the cgroups shown in the following figure.
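As a worked example of how Docker resource options become cgroup settings, Docker documents `--cpus=1.5` as equivalent to a CFS period of 100000 µs with a quota of 150000 µs, and `--memory` sets the memory limit in bytes. The sketch below assumes cgroup v1 file names (`cpu.cfs_period_us`, `cpu.cfs_quota_us`, `memory.limit_in_bytes`) and a simplified size parser; the helper names are hypothetical and it does not write anything to the host.

```python
CFS_PERIOD_US = 100_000  # CFS period Docker uses when --cpus is given

# Simplified binary size suffixes, as accepted by --memory (b, k, m, g).
_UNITS = {"b": 1, "k": 1024, "m": 1024**2, "g": 1024**3}


def cpus_to_cfs_quota(cpus: float, period_us: int = CFS_PERIOD_US) -> int:
    """quota = cpus * period: the container may use `cpus` CPUs' worth of time per period."""
    if cpus <= 0:
        raise ValueError("cpus must be positive")
    return round(cpus * period_us)


def parse_memory(value: str) -> int:
    """Convert a size such as '512m' into bytes."""
    text = value.strip().lower()
    if text and text[-1] in _UNITS:
        return int(text[:-1]) * _UNITS[text[-1]]
    return int(text)


def cgroup_v1_settings(cpus: float, memory: str) -> dict[str, int]:
    """Map docker run --cpus/--memory values to cgroup v1 file contents."""
    return {
        "cpu.cfs_period_us": CFS_PERIOD_US,
        "cpu.cfs_quota_us": cpus_to_cfs_quota(cpus),
        "memory.limit_in_bytes": parse_memory(memory),
    }


if __name__ == "__main__":
    # Roughly what `docker run --cpus=1.5 --memory=512m ...` asks the kernel for.
    print(cgroup_v1_settings(1.5, "512m"))
```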

This section uses a Docker image as an example to describe the container image structure.

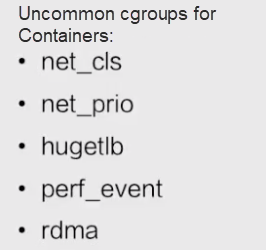

Docker images are based on the union file system. A union file system stores files in different layers while presenting all of them in a single unified view.

The right part of the preceding figure shows the container storage structure, taken from the official Docker website.

This figure shows that the Docker storage is a hierarchical structure based on the union file system. Each layer consists of different files and can be reused by other images. When an image is run as a container, the top layer is the writable layer of the container. The writable layer of the container can be committed as a new layer of the image.
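The layering rules in this paragraph can be modeled in a few lines. The sketch below is a toy model, not Docker's implementation: each layer is a dictionary from path to content, the image layers are never modified, reads search from the top layer down, writes go only to the container's writable layer, and committing freezes that layer into a new image layer. Deletion is deliberately left out; the class name `UnionView` is hypothetical.

```python
from typing import Dict, List, Optional

Layer = Dict[str, str]  # path -> file content


class UnionView:
    """Toy union file system: shared read-only image layers plus one writable layer."""

    def __init__(self, image_layers: List[Layer]):
        # image_layers[0] is the base layer; later entries are stacked on top of it.
        self.image_layers = image_layers
        self.writable: Layer = {}

    def read(self, path: str) -> Optional[str]:
        # Search from the writable layer downwards; the first layer that has the path wins.
        for layer in [self.writable, *reversed(self.image_layers)]:
            if path in layer:
                return layer[path]
        return None

    def write(self, path: str, content: str) -> None:
        # Image layers are shared and read-only; changes land in the writable layer.
        self.writable[path] = content

    def listing(self) -> List[str]:
        # The unified view: every path visible from any layer.
        merged: Layer = {}
        for layer in [*self.image_layers, self.writable]:
            merged.update(layer)
        return sorted(merged)

    def commit(self) -> List[Layer]:
        # The new image reuses the existing layers and adds a copy of the writable layer.
        return [*self.image_layers, dict(self.writable)]


if __name__ == "__main__":
    base = {"/bin/sh": "busybox", "/etc/os-release": "base"}
    app = {"/app/run": "v1", "/etc/os-release": "app"}
    container = UnionView([base, app])
    container.write("/app/run", "v2")
    print(container.read("/app/run"), app["/app/run"])  # v2 v1
```

Because `commit` reuses the same layer objects, two images built from the same base share that layer, which is the reuse the paragraph describes.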

Underneath, Docker image storage can sit on different file systems, so Docker provides a storage driver for each of them, such as AUFS, Btrfs, devicemapper, and overlay. Docker drives these file systems through graph drivers, which store the images on disk.

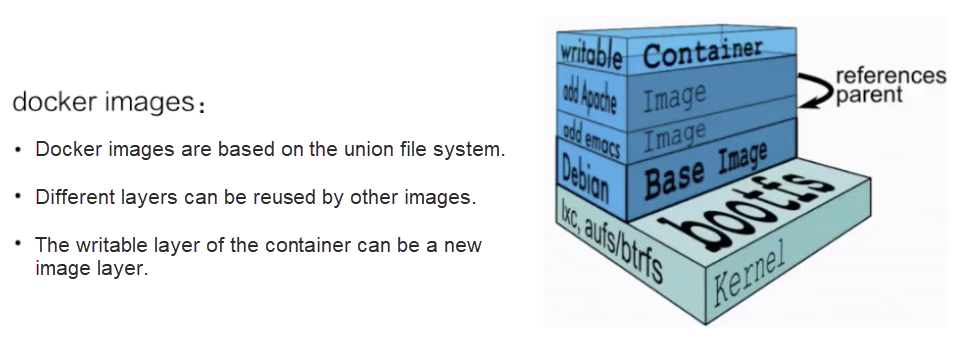

This section uses the overlay file system as an example to describe how Docker images are stored on disks.

The following figure shows how the overlay file system works.

This section describes how to perform file operations in a container based on overlay storage.

File Operations

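A directory in the upper layer that carries the extended attribute trusted.overlay.opaque = y hides the contents of the directory with the same path in the lower layer. When a container is created, the upper layer is empty, so any data read at this point comes from the lower layer.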

The overlay upper layer uses a copy-on-write mechanism. When a file from the lower layer needs to be modified, the overlay file system first copies the file up to the upper layer and then modifies the copy, leaving the lower layer unchanged.

The overlay file system has no real delete operation. Deleting a file from the lower layer actually creates a marker in the upper layer so that the file no longer appears in the unified view. Files can be deleted in two ways:
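The read, copy-on-write, and delete rules above can be traced with a small in-memory model. The Linux overlayfs documentation describes two markers for hiding lower-layer content: a whiteout (a character device with device number 0/0 in the upper layer) that hides a single file, and an opaque directory (marked with trusted.overlay.opaque = y) that hides everything below that path in the lower layer. The sketch assumes one lower and one upper layer with flat string paths; the class `OverlayModel` and its method names are hypothetical.

```python
WHITEOUT = object()  # stands in for the 0/0 character device overlayfs uses as a whiteout


class OverlayModel:
    """Toy overlay: one read-only lower layer and one writable upper layer."""

    def __init__(self, lower: dict):
        self.lower = lower        # image content, never modified
        self.upper = {}           # container layer, empty when the container is created
        self.opaque_dirs = set()  # upper directories marked trusted.overlay.opaque=y

    def _hidden_by_opaque(self, path):
        return any(path.startswith(d.rstrip("/") + "/") for d in self.opaque_dirs)

    def read(self, path):
        if path in self.upper:
            value = self.upper[path]
            return None if value is WHITEOUT else value
        if self._hidden_by_opaque(path):
            return None
        return self.lower.get(path)

    def write(self, path, data):
        # New or fully rewritten files go straight to the upper layer.
        self.upper[path] = data

    def append(self, path, data):
        current = self.read(path)
        if current is None:
            raise FileNotFoundError(path)
        if path not in self.upper:
            self.upper[path] = current  # copy-up: copy the whole file from the lower layer
        self.upper[path] += data        # then modify only the copy

    def delete(self, path):
        if self.read(path) is None:
            raise FileNotFoundError(path)
        if path in self.lower:
            self.upper[path] = WHITEOUT  # mark the lower file as deleted
        else:
            del self.upper[path]         # file existed only in the upper layer

    def replace_dir(self, dirpath):
        """Model `rm -rf dirpath && mkdir dirpath`: the new upper dir is marked opaque."""
        prefix = dirpath.rstrip("/") + "/"
        for p in [p for p in self.upper if p.startswith(prefix)]:
            del self.upper[p]
        self.opaque_dirs.add(dirpath)


if __name__ == "__main__":
    fs = OverlayModel({"/etc/hosts": "127.0.0.1 localhost\n", "/data/a": "A"})
    fs.append("/etc/hosts", "10.0.0.2 db\n")
    fs.delete("/data/a")
    print(fs.read("/data/a"), repr(fs.lower["/data/a"]))  # None 'A'
```

The lower dictionary is never changed by any operation, which is the property that lets many containers share one image.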

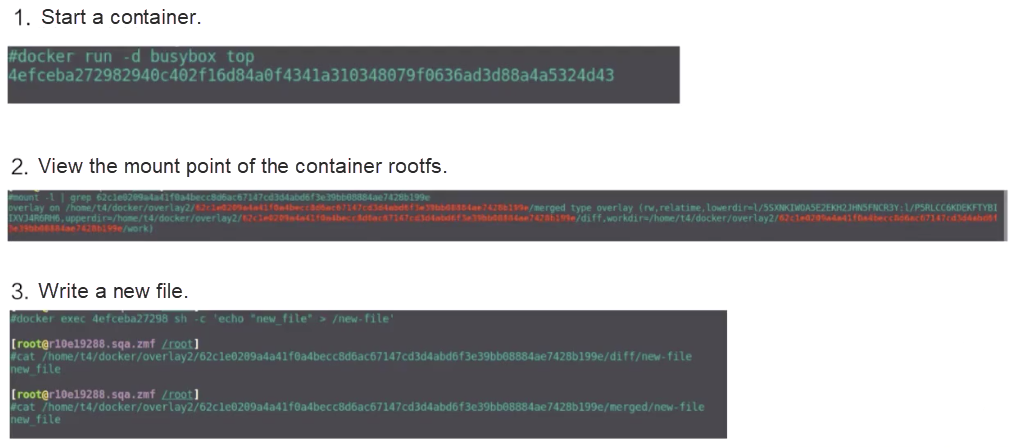

This section describes how to use the docker run command to start a busybox container and how to find its overlay mount point.

The second figure shows the mount command used to view the mount point. The container rootfs mount point is of type overlay, and its mount options specify the lowerdir, upperdir, and workdir directories.
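The same kind of mount can be written out by hand with `mount -t overlay`. Per the Linux overlayfs documentation, `lowerdir=` takes a colon-separated list in which the left-most directory is the top-most lower layer, and `workdir` must be an empty directory on the same file system as `upperdir`. The sketch below only builds the command line; the function name and directory paths are placeholders for illustration.

```python
import shlex


def overlay_mount_cmd(lowerdirs, upperdir, workdir, merged):
    """Build a mount(8) argv for an overlay file system.

    `lowerdirs` is ordered top-most first, the same order as the lowerdir= option.
    """
    if not lowerdirs:
        raise ValueError("at least one lowerdir is required")
    options = ",".join([
        "lowerdir=" + ":".join(lowerdirs),  # read-only image layers
        f"upperdir={upperdir}",             # writable container layer
        f"workdir={workdir}",               # scratch space used internally by overlayfs
    ])
    return ["mount", "-t", "overlay", "overlay", "-o", options, merged]


if __name__ == "__main__":
    cmd = overlay_mount_cmd(["/tmp/l2", "/tmp/l1"], "/tmp/upper", "/tmp/work", "/tmp/merged")
    print(shlex.join(cmd))  # run as root after creating the directories
```

For a running container, `docker inspect --format '{{json .GraphDriver.Data}}' <container>` reports the LowerDir, UpperDir, WorkDir, and MergedDir paths that the overlay2 driver passed to the same kind of mount.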

Next, let's look at how new files are written into a container. Run the docker exec command to create a file. As shown in the preceding figure, the new file appears in the diff directory, which is the upperdir. The content of that file in upperdir is the data written by the docker exec command.

The merged directory (mergedir) shows the combined content of upperdir and lowerdir, including the newly written data.

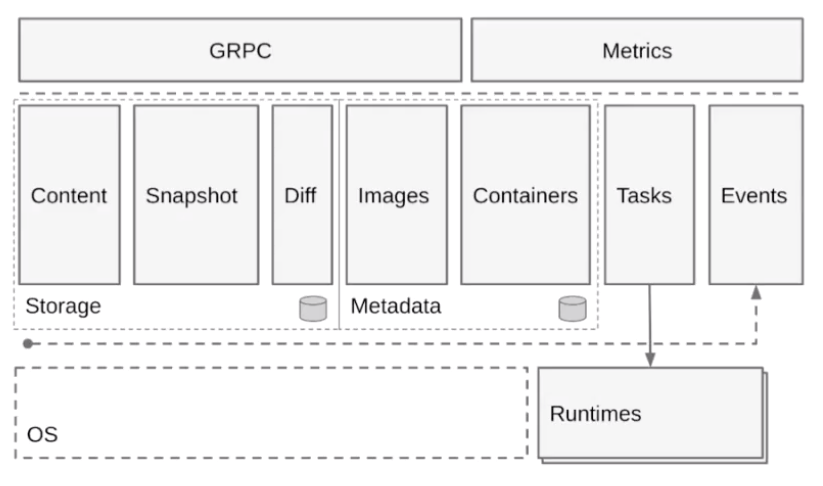

This section describes the general architecture of containerd, a container engine hosted by the Cloud Native Computing Foundation (CNCF). The following figure shows the containerd architecture.

As shown in the preceding figure, containerd provides two main functions.

One is runtime, which manages the container lifecycle. The other is storage, which manages images: containerd pulls and stores them.

Horizontally, the containerd structure is divided into the following layers:

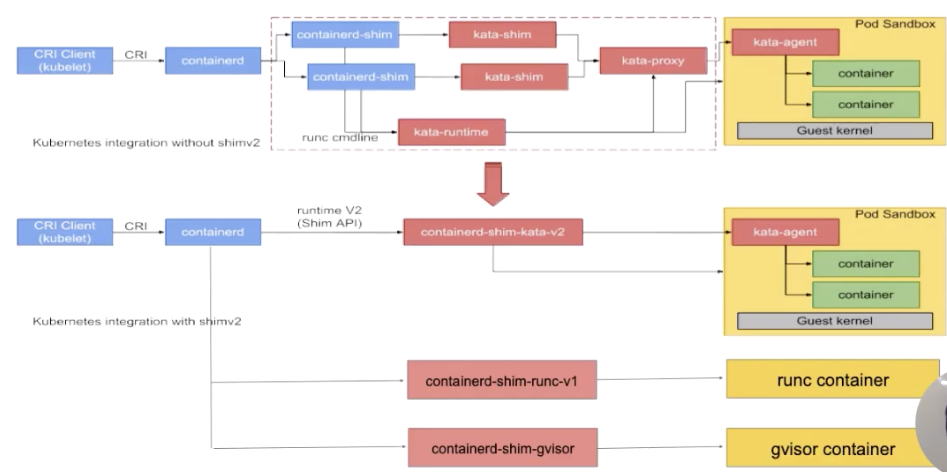

This section describes the general structure of containerd at the Runtimes layer. The following figure is taken from the official kata website. The upper part is the original figure, and the lower part adds some extended examples. Let's look at the architecture of containerd at the Runtimes layer.

Read from left to right, the preceding figure shows the path from the upper layers down to the Runtime layer.

The leftmost component is a CRI client. Typically, the kubelet sends a CRI request to containerd. After receiving the request, containerd passes it to a containerd-shim, which manages the container lifecycle and performs the following operations:

The upper part of the figure shows a secure container, which runs as a kata process. The lower part of the figure shows various shims. The following describes the architecture of a containerd-shim.

Originally, containerd had only one shim, shown in the blue box: kata, runC, and gVisor containers all used containerd-shim.

Containerd is extended for different types of runtimes through the shim-v2 interface. In other words, different shims can be customized for different runtimes through the shim-v2 interface. For example, the runC container can create a shim named shim-runc, the gVisor container can create a shim named shim-gvisor, and the kata container can create a shim named shim-kata. These shims can replace the containerd-shims in the blue boxes.
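The names shim-runc, shim-gvisor, and shim-kata above are illustrative. In practice, containerd's runtime v2 documentation describes a naming convention in which a runtime type such as `io.containerd.runc.v2` is resolved to a shim binary called `containerd-shim-runc-v2` on the `PATH`. The Python function below is a re-creation of that convention for illustration, not containerd's own code, and its name is hypothetical.

```python
def shim_binary_name(runtime: str) -> str:
    """Map a runtime type like 'io.containerd.runc.v2' to its shim binary name.

    The last two dot-separated fields are taken as the runtime name and version.
    """
    parts = runtime.split(".")
    if len(parts) < 2 or parts[0] == "":
        raise ValueError(f"invalid runtime type: {runtime!r}")
    name, version = parts[-2], parts[-1]
    return f"containerd-shim-{name}-{version}"


if __name__ == "__main__":
    for rt in ("io.containerd.runc.v2", "io.containerd.kata.v2", "io.containerd.runsc.v1"):
        print(rt, "->", shim_binary_name(rt))
```

This is what lets a new runtime plug in without changing containerd itself: installing a binary that follows the naming convention and implements the shim-v2 API is enough for containerd to find it.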

This has many advantages. For example, with shim-v1, the limitations of kata required three separate components. With shim-v2, these three components can be combined into a single shim-kata component.

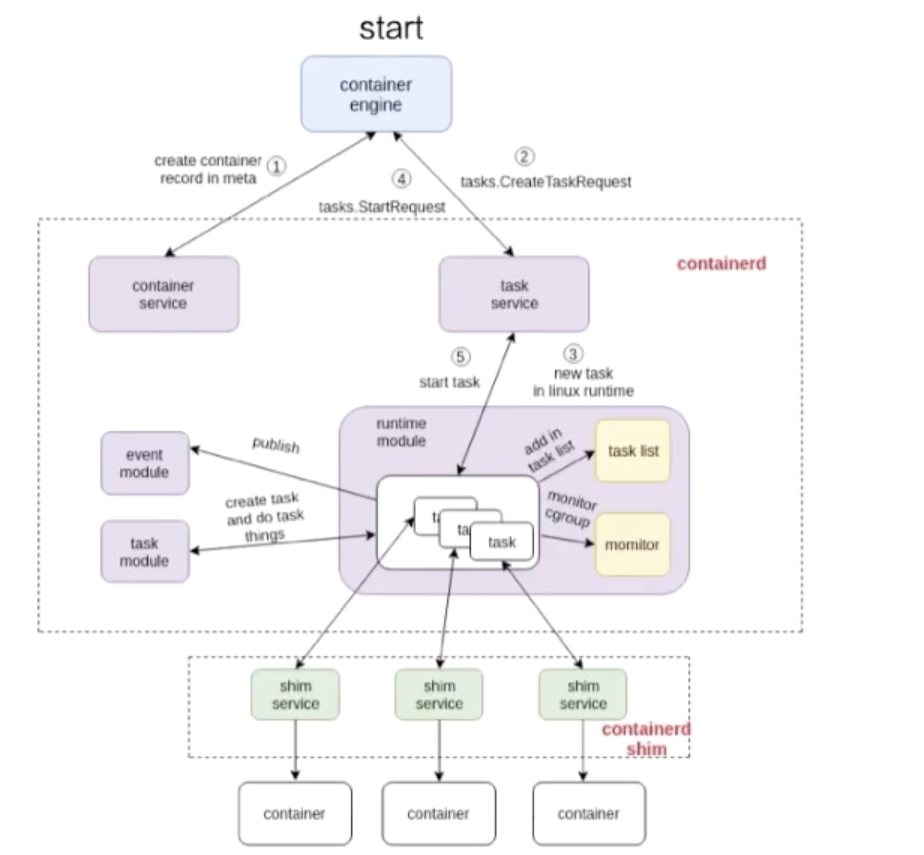

This section uses two examples to describe how a container process works. The following two figures show the workflow of a container based on the containerd architecture.

Start Process

The following figure shows the start process.

The process consists of three parts:

The numbers marked in the figure show the process by which containerd creates a container.

It first creates metadata and then sends a request to the task service to create a container. The request passes through a series of components before it reaches a shim. containerd communicates with containerd-shim through gRPC. After containerd sends the creation request to containerd-shim, containerd-shim calls the runtime to create the container.
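The call chain in the start process can be summarized as a small simulation. The classes below are hypothetical stand-ins that only record the order of the steps described above (metadata, task service, shim, runtime); they do not talk to a real containerd, and the RPC between containerd and the shim is reduced to a plain method call.

```python
from dataclasses import dataclass, field


@dataclass
class Runtime:
    """Stands in for an OCI runtime such as runC."""
    log: list

    def create(self, container_id: str) -> None:
        self.log.append(f"runtime: create {container_id}")


@dataclass
class Shim:
    """Stands in for containerd-shim; in containerd this call arrives over RPC."""
    runtime: Runtime
    log: list

    def create_task(self, container_id: str) -> None:
        self.log.append(f"shim: create task {container_id}")
        self.runtime.create(container_id)  # the shim invokes the runtime


@dataclass
class Containerd:
    shim: Shim
    log: list
    metadata: dict = field(default_factory=dict)

    def create_container(self, container_id: str, image: str) -> None:
        self.metadata[container_id] = {"image": image}  # step 1: create metadata
        self.log.append(f"containerd: metadata {container_id}")
        self.log.append(f"containerd: task service create {container_id}")  # step 2
        self.shim.create_task(container_id)  # step 3: request forwarded to the shim


if __name__ == "__main__":
    log: list = []
    engine = Containerd(Shim(Runtime(log), log), log)
    engine.create_container("demo", "busybox")
    print("\n".join(log))
```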

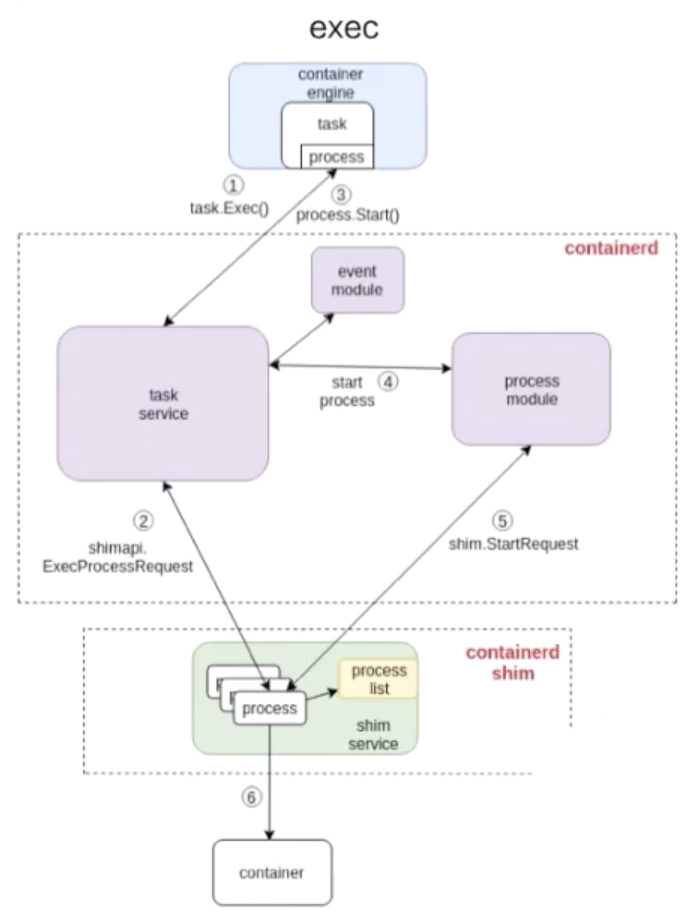

The following figure shows how a process is executed in a running container (exec).

The exec process is similar to the start process. The numbers marked in the figure show the steps by which containerd performs exec.

As shown in the preceding figure, the exec request is also sent to containerd-shim. There is no essential difference between starting a container and executing a process in a container.

The only difference is whether namespaces are created for the process: start creates new namespaces for the container's init process, while exec runs the new process inside the container's existing namespaces.
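The difference between start and exec can be shown with a toy model. In it, start allocates fresh namespace identifiers for the container, and exec gives the new process exactly the namespaces the container already has (runC does this with the setns(2) system call). The class name `Container`, the chosen namespace types, and the fake inode numbers are illustrative assumptions.

```python
import itertools

NAMESPACE_TYPES = ("mnt", "uts", "ipc", "net", "pid")
_fake_inodes = itertools.count(4026532000)  # fake namespace inode numbers, illustration only


class Container:
    def __init__(self):
        self.namespaces = None
        self.processes = []

    def start(self, cmd: str) -> dict:
        # start: a new set of namespaces is created for the container's init process.
        self.namespaces = {t: next(_fake_inodes) for t in NAMESPACE_TYPES}
        return self._spawn(cmd)

    def exec(self, cmd: str) -> dict:
        # exec: nothing new is created; the process joins the existing namespaces.
        if self.namespaces is None:
            raise RuntimeError("container is not running")
        return self._spawn(cmd)

    def _spawn(self, cmd: str) -> dict:
        proc = {"cmd": cmd, "ns": dict(self.namespaces)}
        self.processes.append(proc)
        return proc


if __name__ == "__main__":
    c = Container()
    init, shell = c.start("sh"), c.exec("ls")
    print(init["ns"] == shell["ns"])  # True: exec shares the init process's namespaces
```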

I hope this article helped you better understand Linux containers. Let's summarize what we have learned in this article:
