By Lei Yin

Today, we will take a deep dive into the talk "A New Track for Fashion E-commerce - FashionAI Technology," given by Lei Yin, an Alibaba researcher and head of FashionAI in Taobao's TECH department, at the Taobao TECH Festival in Silicon Valley.

Let's start with recommendation technology. First, consider recommendations based on user behavior, such as clicking, browsing, and purchasing. Recommendation technology helps users find goods more efficiently, which in turn increases the company's revenue. Once recommendation efficiency reaches a certain level, however, bottlenecks appear. For example, after you have already bought a top, the system keeps recommending tops to you. This is common and has been heavily criticized in recent years: when a recommendation system relies purely on user behavior, such problems are hard to avoid.
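
To make the bottleneck concrete, here is a minimal sketch of a purely behavior-driven recommender. The `naive_category_recommendation` function and its action weights are hypothetical. Because it only counts past interactions per category, a user who has just bought a top keeps getting tops.

```python
from collections import Counter

# Hypothetical weights: a purchase counts more than a click or a browse.
ACTION_WEIGHTS = {"browse": 1, "click": 1, "purchase": 3}

def naive_category_recommendation(events, k=1):
    """Recommend the k categories the user interacted with most.

    `events` is a list of (action, category) pairs. Nothing here knows that a
    user who just bought a top probably does not need another one.
    """
    scores = Counter()
    for action, category in events:
        scores[category] += ACTION_WEIGHTS.get(action, 0)
    return [category for category, _ in scores.most_common(k)]

history = [("browse", "top"), ("click", "top"), ("purchase", "top"), ("browse", "skirt")]
print(naive_category_recommendation(history))  # ['top']
```

A real system works at the item level with far richer signals; the point is only that behavior counts alone carry no notion of what goes with what.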

Second, consider the user portrait (user profile). It's commonly accepted that precise portraits can be built from user insights, but I have always doubted this. Take shopping for clothes as an example. You may have the user's behavior data, such as browsing, clicking, and purchasing records. But even if you could also determine the user's skin color, height, weight, and body measurements, the portrait would not be much more accurate than before. Therefore, so-called user insights and user portraits are actually still very rough today.

Third, a knowledge graph can help with related recommendations. For example, if you buy a fishing rod, the system recommends other fishing gear; or, after you buy headlights, it recommends other auto parts that you end up buying. However, the results so far have not been good enough, and many difficulties remain.
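
At its simplest, a related-item recommendation over a knowledge graph is a neighbor lookup. The graph contents and the `related_recommendations` helper below are hypothetical illustrations, not Taobao's graph.

```python
# Hypothetical "related product" graph: each edge links items used together.
RELATED = {
    "fishing rod": ["fishing reel", "fishing line", "tackle box"],
    "headlights": ["fog lights", "bulbs", "wiring harness"],
}

def related_recommendations(purchased, graph, exclude=()):
    """Return graph neighbors of purchased items, skipping excluded and duplicate items."""
    seen = set(purchased) | set(exclude)
    recs = []
    for item in purchased:
        for neighbor in graph.get(item, []):
            if neighbor not in seen:
                recs.append(neighbor)
                seen.add(neighbor)
    return recs

print(related_recommendations(["fishing rod"], RELATED))
# ['fishing reel', 'fishing line', 'tackle box']
```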

These are the common approaches to recommendation technology. Now let's look at other possibilities specific to apparel recommendation. What is the core performance criterion for a shopping guide in an offline clothing shop? It is the associated purchase. If a customer buys a piece of clothing, that does not count toward your contribution. But if you lead the customer to buy another piece, that counts toward your performance. Therefore, the associated purchase is very important, and its key logic is collocation, that is, matching items into an outfit. This shows that when we implement recommendation in a specific field, there is recommendation logic specific to that field, drawn from daily life.

To make accurate and reliable recommendations, we need to know how to match products both with users and with other products. This is not simple, because it requires a lot of prior knowledge and experience. For example, to match clothes, we need to know their attributes and design elements in as much detail as possible, and accurately enough for the matching to be reliable.
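
Matching garments through their attributes can be sketched as a scoring function. Everything here is an assumption for illustration: the `Garment` fields, the complementary-category table, and the color pairs stand in for the detailed attribute knowledge the talk says is required.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Garment:
    name: str
    category: str  # e.g. "top" or "bottom"
    color: str
    style: str

# Hypothetical matching knowledge; real rules would come from apparel experts.
COMPLEMENTARY = {"top": {"bottom"}, "bottom": {"top"}}
COLOR_PAIRS = {frozenset({"white", "navy"}), frozenset({"white", "lemon yellow"})}

def match_score(a: Garment, b: Garment) -> int:
    """Return 0 if the garments cannot be worn together; higher is a better match."""
    if b.category not in COMPLEMENTARY.get(a.category, set()):
        return 0
    score = 1
    if frozenset({a.color, b.color}) in COLOR_PAIRS:
        score += 1
    if a.style == b.style:
        score += 1
    return score

def suggest_matches(item: Garment, catalog: list) -> list:
    """Names of matching garments, best match first (ties broken by name)."""
    scored = [(match_score(item, other), other.name) for other in catalog if other != item]
    return [name for score, name in sorted(scored, key=lambda t: (-t[0], t[1])) if score > 0]

shirt = Garment("linen shirt", "top", "white", "casual")
catalog = [
    Garment("navy chinos", "bottom", "navy", "casual"),
    Garment("black skirt", "bottom", "black", "workplace"),
    Garment("striped tee", "top", "navy", "casual"),
]
print(suggest_matches(shirt, catalog))  # ['navy chinos', 'black skirt']
```

The result depends entirely on the attribute values and rules: a wrong color tag or a missing rule silently produces a bad match, which is why accurate, detailed attributes matter.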

Viewed more technically, this problem can be described as a knowledge graph. Such a graph typically contains many knowledge points, mapped from human experience or user data. Knowledge points in a knowledge graph mostly come from common sense. For example: I am a person. Who are my friends? Who is my boss? Here, "I" is a knowledge point generated by common sense.
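
Common-sense knowledge points like these are often stored as subject-predicate-object triples. This minimal sketch uses hypothetical names and a hypothetical `query` helper to answer "who are my friends?" and "who is my boss?"

```python
# Knowledge stored as (subject, predicate, object) triples; names are hypothetical.
TRIPLES = {
    ("I", "is_a", "person"),
    ("I", "friend_of", "Alice"),
    ("I", "friend_of", "Bob"),
    ("I", "reports_to", "Carol"),
}

def query(subject=None, predicate=None, obj=None):
    """Return, sorted, every triple that matches all non-None fields."""
    return sorted(
        t for t in TRIPLES
        if (subject is None or t[0] == subject)
        and (predicate is None or t[1] == predicate)
        and (obj is None or t[2] == obj)
    )

friends = [o for _, _, o in query("I", "friend_of")]
print(friends)                    # ['Alice', 'Bob']
print(query("I", "reports_to"))   # [('I', 'reports_to', 'Carol')]
```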

Another method is the expert system. For example, many celebrities, whom we regard as experts, accumulate professional experience. Experts exist in every field, such as doctors in medicine. Expert systems were probably the common approach in AI before the rise of knowledge graphs.
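
An expert system typically encodes expert experience as if-then rules and derives conclusions by forward chaining. The rules below are invented for illustration and are not FashionAI rules.

```python
# Hypothetical expert rules: if every condition holds, add the conclusion.
RULES = [
    ({"collar:suit", "fabric:wool"}, "style:workplace"),
    ({"style:workplace", "season:winter"}, "pair_with:wool coat"),
]

def forward_chain(facts, rules):
    """Apply rules repeatedly until no new facts can be derived."""
    derived = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conditions <= derived and conclusion not in derived:
                derived.add(conclusion)
                changed = True
    return derived

print(sorted(forward_chain({"collar:suit", "fabric:wool", "season:winter"}, RULES)))
```

The second rule only fires after the first has added `style:workplace`, which is why the loop repeats until nothing changes.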

Beneath both methods lie the knowledge points, which are more fundamental. If the knowledge points themselves are flawed, the knowledge relations built on them will be flawed too, and AI algorithms running on that foundation will not produce good enough results. This may be one reason why AI is hard to put into practice. We should have the courage to rebuild this knowledge system.

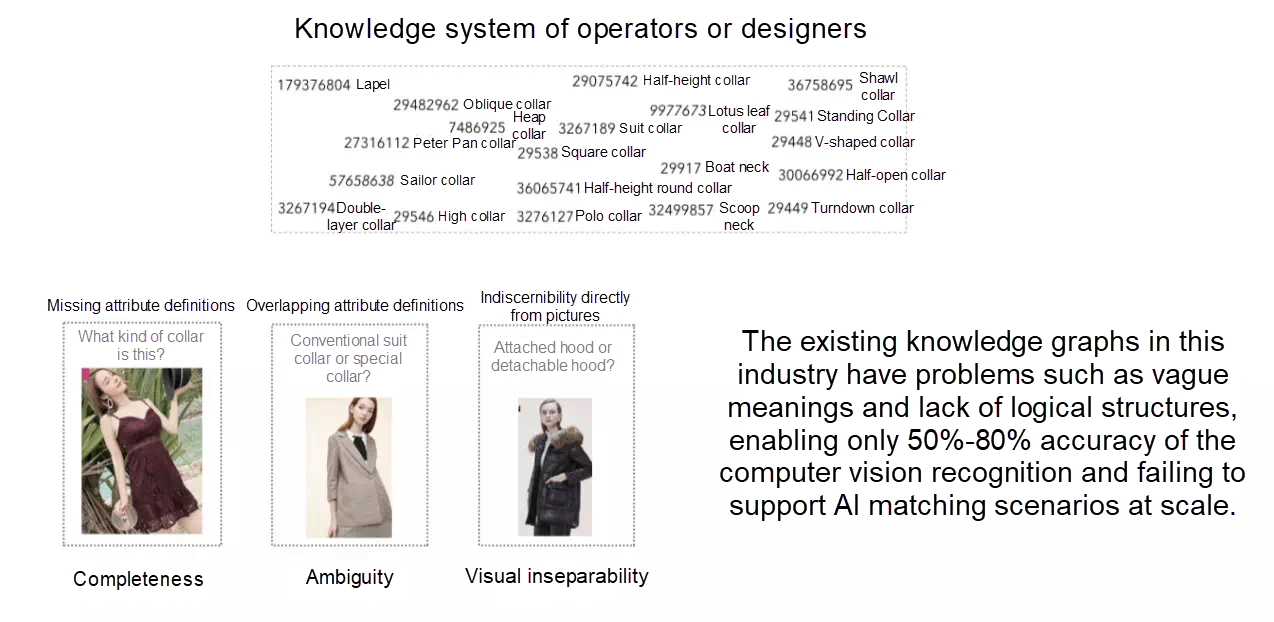

Let's look at an example from Taobao. The upper part of this figure shows the knowledge system used by operators or designers, here for "collar type," with various round collars, inclined collars, and navy collars. The structure is flat and scattered. Previously, knowledge was passed between people, and in small circles such as designer groups, it was fine for knowledge to be confusing as long as people could communicate. Similarly, a doctor's cursive handwriting may be readable by other doctors but not by patients. In fact, much knowledge exists for communication between people and contains a lot of ambiguity and incompleteness. For example, among clothing styles, one tag is "workplace style" and another is "neutral style," yet the two cannot be visually distinguished. If people find it hard to tell two tags apart while a machine's recognition accuracy exceeds 80%, something must have gone wrong.

In other cases, the person who added the tag may not understand these styles. To give an extreme example, Taobao merchants tag their own clothes. For a period, merchants tagged half of all women's clothes as "Korean style," but these clothes were not Korean style at all; because Korean-style clothes sold well, merchants simply labeled their items that way. Therefore, the style needs to be determined directly from the images, because tags are not always reliable.

Over the past few years, we studied the apparel operations of Taobao and Tmall and made adjustments based on several editions of operational knowledge, but the result was not good enough. Last year, we held the FashionAI competition in cooperation with the Department of Textiles and Clothing of the Hong Kong Polytechnic University, and later cooperated with the Beijing Institute of Fashion Technology and Zhejiang Sci-Tech University. In fact, the knowledge system provided by apparel experts cannot be used as is, because we need a knowledge system oriented to machine learning. Machines work on binary data, so the principles we have summarized, namely completeness, unambiguity, and visual distinguishability, should be met as far as possible.

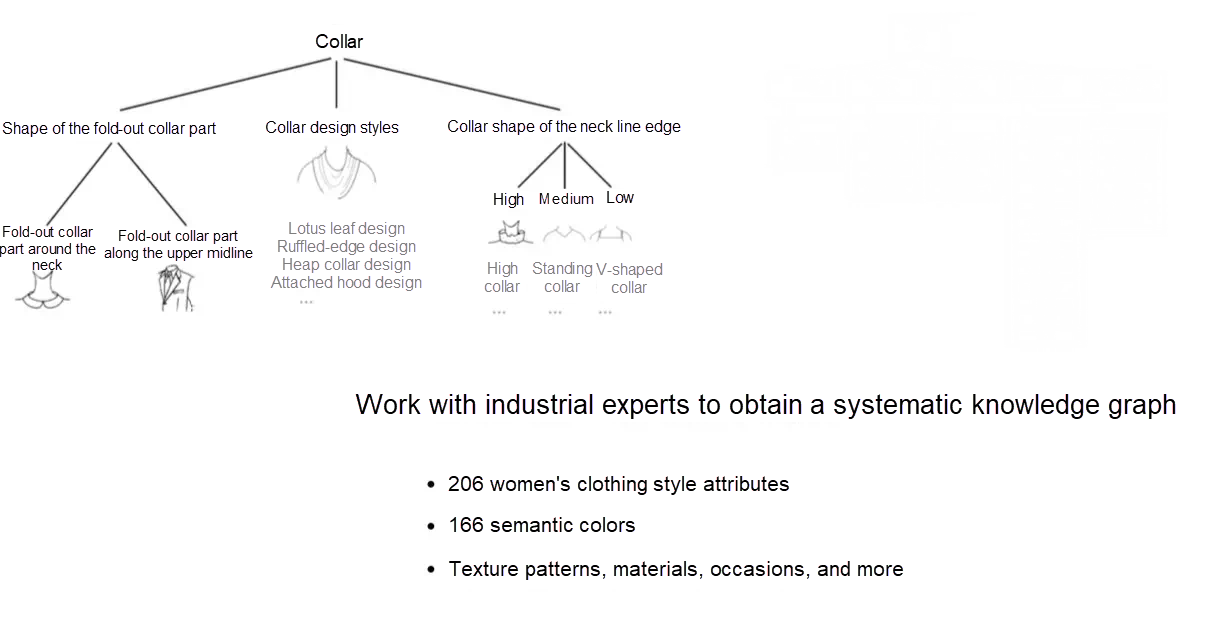

We organized the scattered knowledge according to a division logic. For collars, for example, we divided them by fabric, design method, and neck-edge line, summarizing the scattered knowledge points along several dimensions. What was a mess before eventually took the shape of a tree. After sorting out the common attributes of women's wear, we found 206 attributes in total, not including open, constantly expanding attributes such as "popular design techniques." This sorting is much more complicated than you might think; it took 3 to 4 years. Besides the knowledge itself, we also had to investigate how difficult and how necessary it is to collect data for each knowledge point. For example, suit collars in women's wear could be subdivided further into 9 categories, but these are almost visually indistinguishable. So for suit collars in women's wear, the current granularity is sufficient and no further subdivision is needed.
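
A tree-shaped taxonomy built along separate division dimensions can be represented as nested mappings. The dimension values below are hypothetical placeholders, not the actual 206-attribute system. The `leaves` helper enumerates every root-to-leaf attribute path.

```python
# Hypothetical fragment of a tree-shaped attribute taxonomy: the category is split
# along independent dimensions, each listing mutually exclusive values.
COLLAR_TAXONOMY = {
    "collar": {
        "fabric": ["knitted", "woven", "lace"],
        "design method": ["stand", "turn-down", "collarless"],
        "neck-edge line": ["round", "V-shaped", "square"],
    }
}

def leaves(tree, path=()):
    """Yield every root-to-leaf path as a tuple of node names."""
    if isinstance(tree, dict):
        for key, child in tree.items():
            yield from leaves(child, path + (key,))
    else:
        for value in tree:
            yield path + (value,)

paths = list(leaves(COLLAR_TAXONOMY))
print(len(paths), paths[0])  # 9 ('collar', 'fabric', 'knitted')
```

Keeping each dimension separate means a new value, or a decision not to subdivide further, touches only one branch of the tree.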

Sometimes it is hard to tell in advance whether a good model can be learned for an attribute, so defining the attribute takes multiple rounds of iteration. If we find a problem with an attribute definition, we go back, redefine it, re-collect the data, and retrain the model until it meets the requirements. After knowledge rebuilding, recognition accuracy for more than a dozen attributes generally increased by 20%, which is a great improvement.
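
The define, collect, train, and evaluate cycle can be sketched as a loop. The callbacks `collect`, `train_and_eval`, and `redefine`, and the 0.9 target, are hypothetical stand-ins for a real data pipeline and acceptance criterion.

```python
from typing import Callable, List, Tuple

def refine_attribute(
    definition: str,
    collect: Callable[[str], List[str]],
    train_and_eval: Callable[[List[str]], float],
    redefine: Callable[[str, float], str],
    target: float = 0.9,
    max_rounds: int = 5,
) -> Tuple[str, float, int]:
    """Collect data, train, evaluate; redefine until `target` accuracy is met.

    Returns (definition, accuracy, rounds_used). All callbacks are hypothetical.
    """
    if max_rounds < 1:
        raise ValueError("max_rounds must be at least 1")
    for round_no in range(1, max_rounds + 1):
        accuracy = train_and_eval(collect(definition))
        if accuracy >= target or round_no == max_rounds:
            return definition, accuracy, round_no
        definition = redefine(definition, accuracy)

# Toy stubs: accuracy improves each time the definition is revised.
scores = iter([0.70, 0.82, 0.93])
result = refine_attribute(
    "windbell sleeve",
    collect=lambda d: [d],                     # pretend data collection
    train_and_eval=lambda data: next(scores),  # pretend training and evaluation
    redefine=lambda d, acc: d + " (revised)",  # pretend redefinition
)
print(result)  # ('windbell sleeve (revised) (revised)', 0.93, 3)
```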

Now we have 206 styles for women's wear, 166 semantic colors, and other knowledge systems such as materials, scenarios, and temperature. How should colors be defined? In the fashion industry, "yellow" is almost meaningless, but "lemon yellow" makes sense; women's wear in lemon yellow was popular last year. The 8-bit RGB color space has 256 × 256 × 256 (16,777,216) possible colors, and the Pantone color table has 2,310 colors related to clothing, but those are identified by color numbers that consumers cannot understand. Therefore, together with the Beijing Institute of Fashion Technology, we created a layer of 560 colors with corresponding semantic names. However, this is still too fine-grained for clustering clothes by color, so we created another layer of 166 semantic colors, such as "lemon yellow" and "mustard green," which consumers can understand.
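
Mapping a measured color onto a small semantic palette can be sketched as a nearest-neighbor lookup. The palette entries and their RGB values are rough, hypothetical approximations, not the 166-color table. Plain RGB distance also ignores the lighting and chromatic aberration issues the talk mentions; a perceptual space such as CIELAB would usually be a better choice.

```python
import math

TOTAL_RGB = 256 ** 3  # 16,777,216 colors at 8 bits per channel

# Hypothetical slice of a semantic palette with rough RGB approximations.
SEMANTIC_COLORS = {
    "lemon yellow": (255, 244, 79),
    "mustard green": (110, 110, 30),
    "navy": (0, 0, 128),
    "white": (255, 255, 255),
}

def nearest_semantic_color(rgb, palette=SEMANTIC_COLORS):
    """Return the palette name whose RGB value is closest in Euclidean distance."""
    return min(palette, key=lambda name: math.dist(rgb, palette[name]))

print(nearest_semantic_color((250, 240, 90)))  # lemon yellow
```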

There are still a lot of technical details, such as how to deal with lighting and chromatic aberration. Here, I mainly focus on the knowledge rebuilding for machine learning.

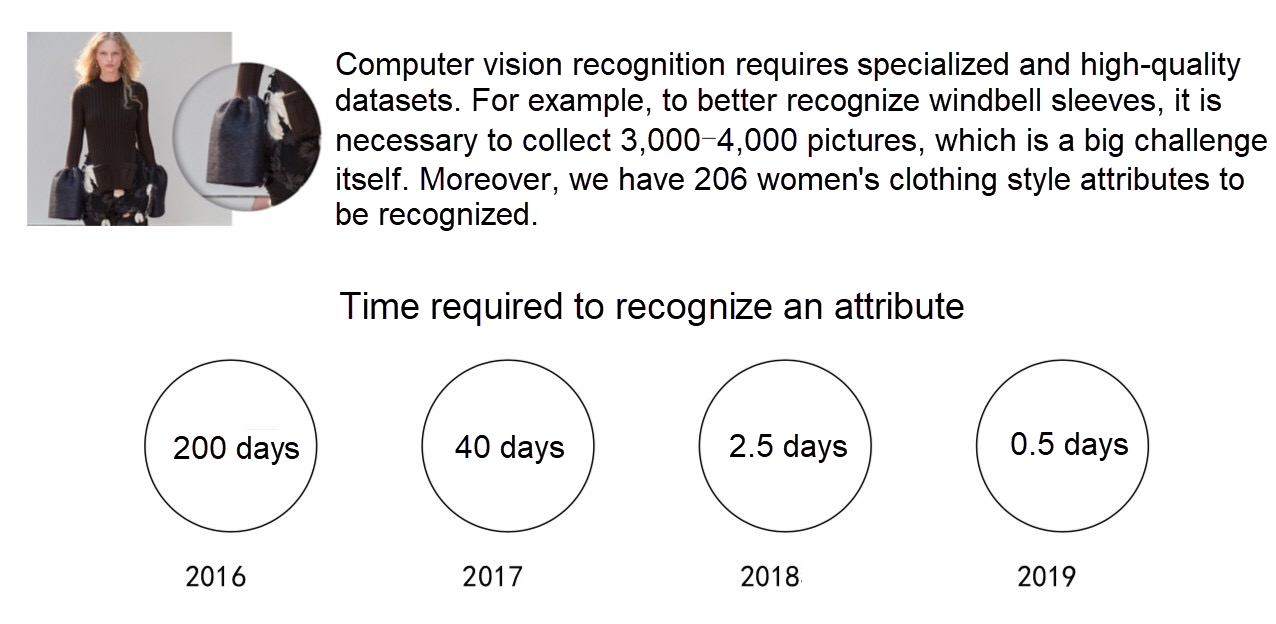

Here a problem arises. I have 206 styles of women's wear. If I collect data and train a model for each one, how can I ever finish? What's more, each definition may need multiple rounds of correction.

For example, the figure shows what is called a windbell sleeve. A qualified dataset requires about 3,000 to 4,000 images, and collecting enough high-quality images is a big challenge. In 2016, building a high-quality dataset of 3,000 to 4,000 images required tagging more than 100,000 images, because the tag retention rate was only 1.5%. Our method then was similar to the academic community's: search for a large number of images with a keyword and then have people tag them. More often, you cannot even find enough images tagged as windbell sleeves, because images that are not tagged cannot be found. Therefore, rebuilding knowledge is indeed a huge challenge. No one had the courage to do it before, because it simply could not be done.
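
The 1.5% retention rate explains the tagging volume: the number of images to tag is the target dataset size divided by the retention rate. This sketch uses exact fractions to avoid floating-point rounding; `images_to_tag` is a hypothetical helper name.

```python
import math
from fractions import Fraction

RETENTION_2016 = Fraction(15, 1000)  # the 1.5% tag retention rate quoted in the talk

def images_to_tag(target_kept: int, retention_rate: Fraction) -> int:
    """Raw images that must be tagged so that `target_kept` of them are retained."""
    return math.ceil(target_kept / retention_rate)

print(images_to_tag(3000, RETENTION_2016), images_to_tag(4000, RETENTION_2016))
# 200000 266667
```

At 1.5%, keeping 3,000 images means tagging 200,000, and keeping 4,000 means tagging about 266,667, consistent with the "more than 100,000" quoted in the talk.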

In 2016, it took us 200 days to complete recognition for one attribute, including the time spent iterating on its definition. It took 40 days in 2017 and 2.5 days in 2018, and it now takes 15 hours. By the end of 2019, we plan to reduce it to 0.5 days. This is a huge change. About three years ago, we raised the problem of "Few-Shot Learning." At that time, few people in the academic community were asking this question, but we had already run into it: it was exactly the challenge we faced, and we had to start solving it.
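
Converting these turnaround times to hours makes the speedup explicit. The sketch restates the figures from the talk (the 2019 value is a target, not a result) and divides the 2016 baseline by each period.

```python
# Turnaround per attribute in hours (1 day = 24 hours); "2019 target" is a plan.
TURNAROUND_HOURS = {
    "2016": 200 * 24,
    "2017": 40 * 24,
    "2018": 2.5 * 24,
    "now": 15,
    "2019 target": 0.5 * 24,
}

def speedups(turnaround, baseline="2016"):
    """Speedup of each period relative to the baseline period."""
    base = turnaround[baseline]
    return {period: base / hours for period, hours in turnaround.items()}

print(speedups(TURNAROUND_HOURS))
# {'2016': 1.0, '2017': 5.0, '2018': 80.0, 'now': 320.0, '2019 target': 400.0}
```

Going from 4,800 hours to 15 hours is a 320-fold reduction, and the 12-hour target would make it 400-fold.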

The "Few-Shot Learning" studied in the academic community focuses more on how to get a good model directly from a small number of samples. We chose a different road and took a detour. I cannot go into details now, but we have already gone down this path.
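
Since SECT's method is not disclosed, this sketch shows only a common academic baseline for the problem framed above: nearest-centroid classification in the spirit of prototypical networks, where each class is represented by the mean embedding of its few labeled examples. It assumes embeddings come from some pretrained network. The vectors and helper names are hypothetical, and this is not SECT.

```python
import math
from collections import defaultdict

def fit_prototypes(support):
    """Average the embeddings of each label's few examples into one prototype."""
    grouped = defaultdict(list)
    for vector, label in support:
        grouped[label].append(vector)
    return {
        label: [sum(dim) / len(vectors) for dim in zip(*vectors)]
        for label, vectors in grouped.items()
    }

def classify(vector, prototypes):
    """Assign the label whose prototype is nearest in Euclidean distance."""
    return min(prototypes, key=lambda label: math.dist(vector, prototypes[label]))

# Two toy classes with two hypothetical 2-D embeddings each.
support = [
    ([0.9, 0.1], "bow collar"), ([0.8, 0.2], "bow collar"),
    ([0.1, 0.9], "pilot collar"), ([0.2, 0.7], "pilot collar"),
]
prototypes = fit_prototypes(support)
print(classify([0.7, 0.3], prototypes))  # bow collar
```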

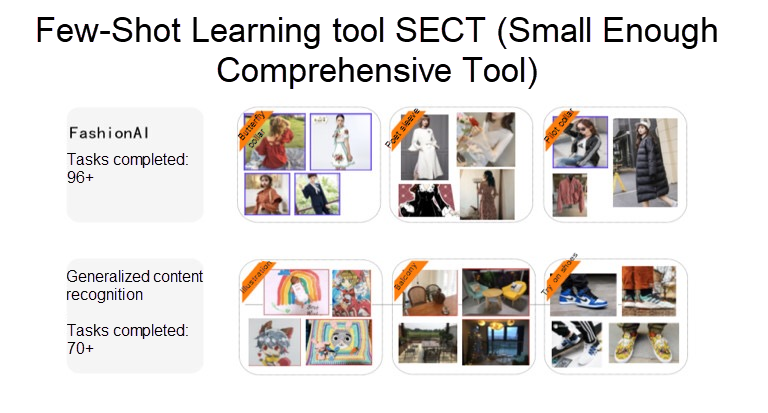

Let's look at the first image set in this figure. One shows the bow collar and the other the pilot collar, both from the women's wear attribute set. Today, we have completed 96 popular women's wear attributes using our Few-Shot Learning tool SECT (Small Enough Comprehensive Tool). Most importantly, SECT not only serves FashionAI but can also be used for generic content recognition. Strictly speaking, it performs well on tasks such as simple content classification.

In generic content recognition, we have used SECT to identify more than 70 labels, such as "illustration," "balcony," and "try on shoes." We have begun to change how business staff and algorithm engineers work. Before the advent of deep learning, business staff did not dare ask algorithm engineers for a recognition model, because the development cycle was too long. Back then, to recognize something, business staff had to consult the algorithm engineers, who would then design the features manually. In that working mode, building a model that could go online and be made available to the industry took at least six months to a year. After deep learning became popular in 2013, the situation changed: business staff only needed to collect enough images, and algorithm engineers would design a good model. If the model was not good, the blame fell on the quality of the collected data. Even so, if operators want to collect 5,000 images, the cost is still very high.

Today, it is still difficult for us to use SECT to solve the "detection" problem in machine vision. In our understanding, detection is not a "Few-Shot Learning" problem but rather a "Weakly Supervised Learning" problem within the detection task, and "Weakly Supervised Learning" is different from "Few-Shot Learning."

In my opinion, big data comes in two types. The first is real big data: business insights or model analysis that can only be done with large-scale data. The second is fake big data: a large amount of data is needed to train a single model only because current machine learning capability is insufficient. As AI capabilities grow, the number of samples required will decrease.

Some companies boast about having a great deal of data, for example, facial data, and treat it as an asset. This trend will gradually fade, because AI is getting more powerful and the amount of data required is decreasing. How far will SECT evolve? Perhaps algorithm developers in the middle and upper layers will no longer be needed: business operators will submit a few dozen images (no more than 50) directly to the system, and a model will be returned very quickly for performance testing. If the model does not perform well, learning iterates until it does. The three phases of tagging, training, and testing will no longer be separated by long intervals; the whole iteration process is already getting faster and faster. If each iteration can be cut to hours or minutes, it will effectively become a human-machine interactive learning system, which will bring significant changes in the future.

Operations staff on the Taobao platform said that more models had been produced over the past two months than over the previous three years. Our own algorithm developers also solve problems beyond attribute recognition. For example, before I came to Silicon Valley, some team members wanted to recognize whether a person in a picture is shown from the back or the front, and whether the person is standing or seated. To do that, we needed to quickly generate six judgment models. Today, we can generate and publish these models in one or two weeks, with accuracy, recall, and generalization capability that meet the requirements. In the past, this took about a year and a half.
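
Checking a judgment model such as front view versus back view against requirements typically starts with accuracy and recall on a held-out set. This minimal sketch computes both; the labels are made up, and the thresholds that count as "meeting the requirements" are not given in the talk.

```python
def accuracy_and_recall(y_true, y_pred, positive):
    """Accuracy over all samples and recall for the `positive` class."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("need two non-empty sequences of equal length")
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    positives = sum(t == positive for t in y_true)
    hits = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    recall = hits / positives if positives else float("nan")
    return correct / len(y_true), recall

y_true = ["back", "front", "back", "front", "back"]
y_pred = ["back", "front", "front", "front", "back"]
acc, rec = accuracy_and_recall(y_true, y_pred, positive="back")
print(acc, rec)  # 0.8 0.6666666666666666
```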

Many people in this industry have pointed out limitations of deep learning, including its need for big data and its lack of interpretability. I think we will soon have a new understanding of what a sample is and what interpretability means. Last year, we published an article titled "How to make a practical image dataset" in Visual Exploration, edited by Songchun Zhu. This year, we plan to write a follow-up titled "How to make a practical image dataset (2)," which will focus on our experience with and outlook for Few-Shot Learning. Stay tuned!
