By Yingjian Yu (Xiaoyu)

Ever since caches came into use, data inconsistency between the cache and the database has plagued developers.

Reads are uncontroversial: query the cache first, and on a cache miss, query the database and then rebuild the cache.
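The write schemes below all build on this read path, which can be sketched as follows. The dict-based `cache` and `db` and the `read` function are illustrative stand-ins, not a real cache client.

```python
from typing import Dict, Optional

# Plain dicts stand in for the cache (e.g., Redis) and the database.
cache: Dict[str, int] = {}
db: Dict[str, int] = {"x": 1}

def read(key: str) -> Optional[int]:
    """Cache-aside read path: cache first, then the database, then rebuild the cache."""
    if key in cache:            # cache hit
        return cache[key]
    value = db.get(key)         # cache miss: query the database
    if value is not None:
        cache[key] = value      # rebuild the cache so later reads hit
    return value

print(read("x"))  # 1 (loaded from the database)
print(cache)      # {'x': 1}
```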

Writes are where opinions differ. Should you update the database or the cache first? Should you update the cache or delete it? Under normal circumstances, any of these choices works. However, once you face a high-concurrency scenario, you have to think twice.

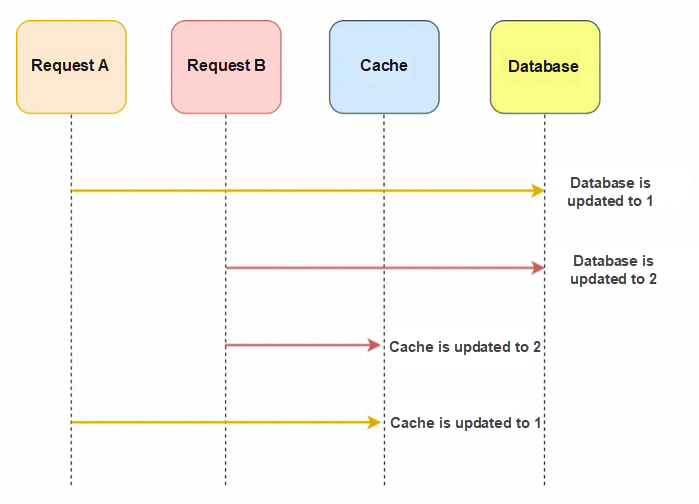

Thread A: Update database (1st second) --> Update cache (10th second)

Thread B: Update database (3rd second) --> Update cache (5th second)

In concurrent scenarios, the operations of different threads can easily interleave like this, so thread B's cache update is overwritten by thread A's. The database ends up with thread B's new value, while the cache holds thread A's old value. The cache stays dirty until the entry expires (if you set an expiration time).
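To make the interleaving concrete, this sketch replays the timeline above in time order. Plain dicts stand in for the database and cache (an assumption for illustration), and each thread writes its own name as the value.

```python
from typing import Dict

# (second, thread, action, value) taken from the timeline above.
events = [
    (1, "A", "update_db", "A"),
    (10, "A", "update_cache", "A"),
    (3, "B", "update_db", "B"),
    (5, "B", "update_cache", "B"),
]

db: Dict[str, str] = {}
cache: Dict[str, str] = {}
for second, thread, action, value in sorted(events):  # execute in time order
    if action == "update_db":
        db["x"] = value
    else:
        cache["x"] = value
    print(f"t={second:>2}s thread {thread}: {action} -> {value}")

print("db:", db["x"], "cache:", cache["x"])  # db: B cache: A (inconsistent)
```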

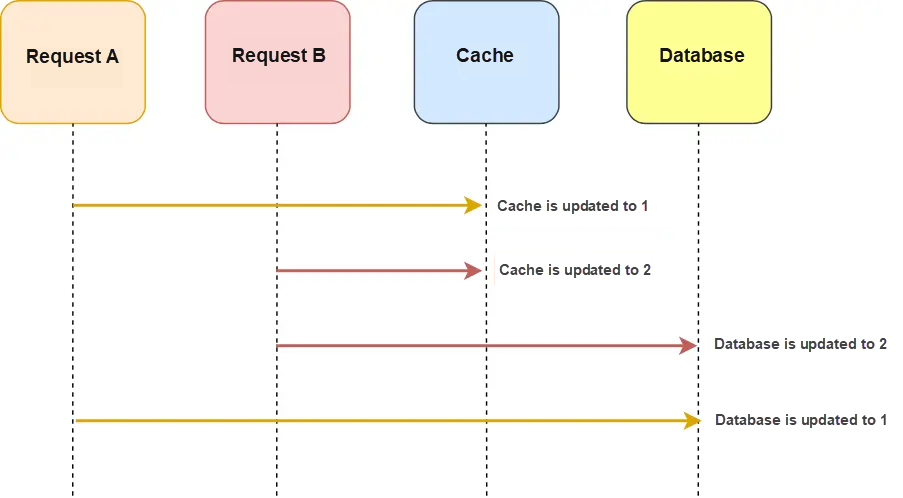

Thread A: Update cache (1st second) --> Update database (10th second)

Thread B: Update cache (3rd second) --> Update database (5th second)

This is the reverse of the previous case: the cache ends up with thread B's new value, while the database holds thread A's old value.
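A small replay helper reproduces this reverse outcome for the cache-first order. The `replay` function is a hypothetical illustration that applies timestamped writes in time order and reports what each store ends up holding.

```python
from typing import Dict, List, Tuple

def replay(events: List[Tuple[int, str, str, str]]) -> Tuple[str, str]:
    """Apply (second, thread, action, value) events in time order; return (db, cache)."""
    store: Dict[str, str] = {}
    for _second, _thread, action, value in sorted(events):
        target = "db" if action == "update_db" else "cache"
        store[target] = value   # the last writer wins in each store
    return store["db"], store["cache"]

# Second timeline: each thread updates the cache first, then the database.
db_value, cache_value = replay([
    (1, "A", "update_cache", "A"),
    (10, "A", "update_db", "A"),
    (3, "B", "update_cache", "B"),
    (5, "B", "update_db", "B"),
])
print("db:", db_value, "cache:", cache_value)  # db: A cache: B (inconsistent)
```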

The first two methods fail under concurrency because updating the cache and updating the database are two separate operations. In concurrent scenarios, we cannot control how the operations of different threads interleave, so the thread that starts first does not necessarily finish first.

A simpler approach is to update the database and delete the cache instead of updating it, letting the next query miss the cache and rebuild it. Does this solve the problem? The next two schemes are built on this idea.

Surprisingly, this solves the concurrency problem that plagued us. Both threads only modify the database, and no matter which thread goes first, the database ends up with the value of the thread that writes last. Because the cache is deleted rather than updated, it can no longer hold a value that differs from the database.
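Replaying the first timeline with deletion instead of update illustrates why, assuming only the two writers are involved (no concurrent reader). Dicts stand in for the database and cache: the cache ends up empty, and the next read rebuilds it from whatever the database holds.

```python
from typing import Dict, List, Optional, Tuple

# Same timestamps as the first timeline, but each thread deletes the cache.
events: List[Tuple[int, str, str, Optional[str]]] = [
    (1, "A", "update_db", "A"),
    (10, "A", "delete_cache", None),
    (3, "B", "update_db", "B"),
    (5, "B", "delete_cache", None),
]

db: Dict[str, str] = {}
cache: Dict[str, str] = {"x": "old"}
for _second, _thread, action, value in sorted(events):
    if action == "update_db":
        db["x"] = value
    else:
        cache.pop("x", None)   # deleting is safe in any order

# Next read: cache miss, so it loads the database value and rebuilds the cache.
if "x" not in cache:
    cache["x"] = db["x"]

print(db["x"], cache["x"])  # B B (consistent)
```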

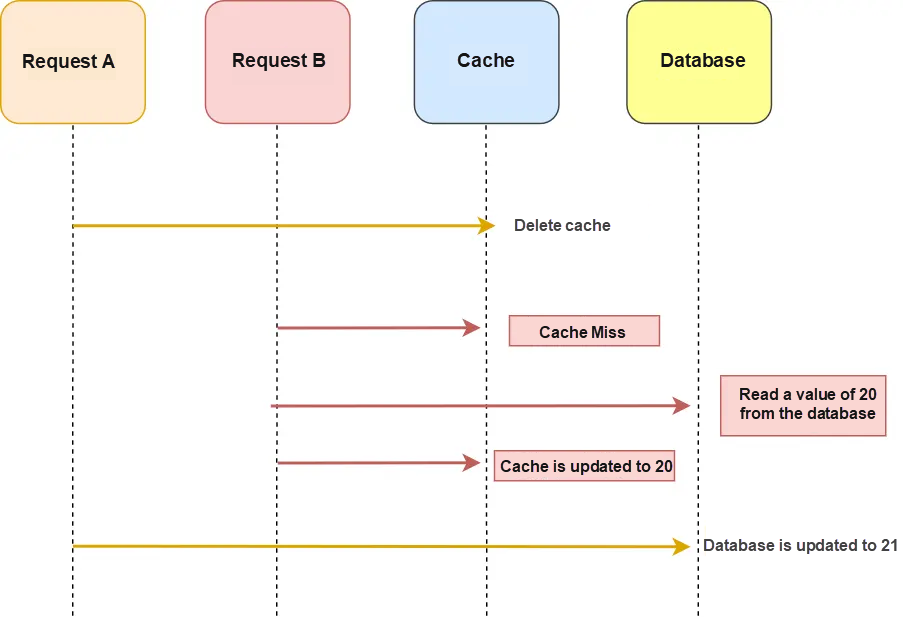

Now consider another scenario for the scheme that deletes the cache before updating the database: two concurrent operations, one update and one query. After the update operation deletes the cache, the query operation misses the cache, reads the old data from the database, and puts it into the cache before the update operation writes the new value to the database. As a result, the cache still holds the old data, which is dirty data. This is not what we want.
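The interleaving described above can be written out step by step. This sketch executes the steps deterministically in one thread rather than with real threads, with dicts standing in for the database and cache (assumptions for illustration).

```python
from typing import Dict

db: Dict[str, int] = {"x": 1}
cache: Dict[str, int] = {"x": 1}

# Step 1 (update): delete the cache entry before writing the database.
cache.pop("x", None)
# Step 2 (query): cache miss, so read the old value from the database...
old_value = db["x"]
# Step 3 (query): ...and put it back into the cache.
cache["x"] = old_value
# Step 4 (update): only now write the new value to the database.
db["x"] = 2

print(db["x"], cache["x"])  # 2 1 (the cache keeps the stale value)
```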

To address this, the delayed double delete solution was developed: delete the cache, update the database, sleep for a while, and then delete the cache again.

The sleep ensures that, while request A (the update) sleeps, request B (the query) can finish reading data from the database and writing it into the cache; request A then deletes the cache again when it wakes up, removing the stale value that B wrote.

Therefore, request A's sleep time must be longer than the time request B takes to read data from the database and write it into the cache.
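A minimal sketch of delayed double delete follows, with dicts standing in for the real database and cache. The `wait` parameter is a hypothetical hook (defaulting to `time.sleep`) that lets the demo simulate a slow concurrent reader finishing its cache refill during the sleep.

```python
import time
from typing import Callable, Dict

db: Dict[str, int] = {"x": 1}
cache: Dict[str, int] = {"x": 1}

def delayed_double_delete(key: str, value: int, delay_s: float,
                          wait: Callable[[float], None] = time.sleep) -> None:
    cache.pop(key, None)   # 1st delete: invalidate before writing
    db[key] = value        # update the database
    wait(delay_s)          # must exceed a concurrent reader's DB read + cache write time
    cache.pop(key, None)   # 2nd delete: drop any stale value refilled during the wait

# Simulated slow reader: it read the old value 1 before the database update
# and refills the cache while the writer is waiting.
stale_value = db["x"]

def reader_refills_during_wait(_seconds: float) -> None:
    cache["x"] = stale_value
    print("cache during wait:", cache["x"])  # 1 (stale)

delayed_double_delete("x", 2, delay_s=0.5, wait=reader_refills_during_wait)
print("cache after 2nd delete:", cache.get("x"))  # None: the next read loads 2
```

The hook only exists to make the race reproducible; in a real deployment the writer simply sleeps, and whether the delay is long enough remains a guess.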

However, the right sleep duration is hard to estimate, so this scheme only makes consistency as likely as possible. In extreme cases, the cache can still become inconsistent.

This scheme is not recommended.

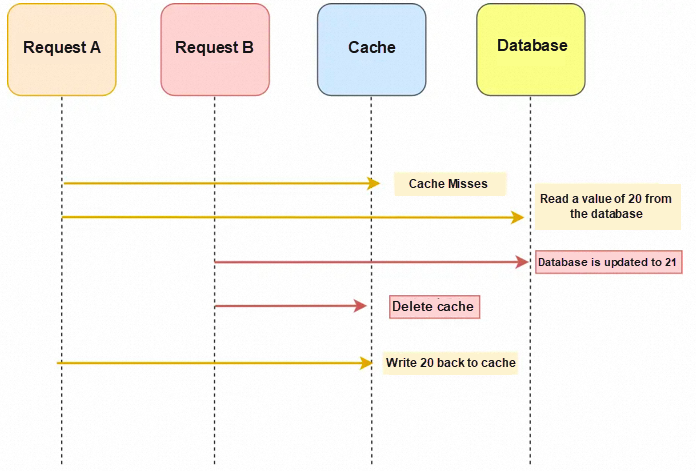

This scheme reverses the order of scheme 3: update the database first, then delete the cache. Let's check the previous scenario under this scheme, with one query operation and one concurrent update operation. The update writes the database first. At this point the cache is still valid, so the concurrent query reads the not-yet-updated value from the cache. However, the update then invalidates the cache, so subsequent queries load the new data from the database. This differs from scheme 3, where subsequent queries keep fetching the old data.

This is the standard caching design pattern known as Cache Aside.
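A minimal Cache Aside sketch, with dicts standing in for the database and cache (an assumption for illustration). The demo splits one write into its two steps to reproduce the scenario above: a query that runs between them still sees the old cached value, but queries after the cache deletion load the new one.

```python
from typing import Dict, Optional

class CacheAside:
    """Minimal Cache Aside sketch; dicts stand in for the real cache and database."""

    def __init__(self, db: Dict[str, int]) -> None:
        self.db = db
        self.cache: Dict[str, int] = {}

    def read(self, key: str) -> Optional[int]:
        if key in self.cache:             # hit
            return self.cache[key]
        value = self.db.get(key)          # miss: load from the database
        if value is not None:
            self.cache[key] = value       # rebuild the cache
        return value

    def write(self, key: str, value: int) -> None:
        self.db[key] = value              # 1. update the database first
        self.cache.pop(key, None)         # 2. then delete the cache entry

store = CacheAside({"x": 1})
store.read("x")                # warm the cache with 1
store.db["x"] = 2              # writer has updated the DB, cache not yet deleted
print(store.read("x"))         # 1: a concurrent query still sees the old value
store.cache.pop("x", None)     # writer finishes by deleting the cache
print(store.read("x"))         # 2: subsequent queries load the new value
store.write("x", 3)            # a normal write does both steps
print(store.read("x"))         # 3
```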

Is this scheme perfect? No. Consider this scenario: a read operation misses the cache and reads the data from the database. Meanwhile, a write operation updates the database and then invalidates the cache. The read operation then puts the old data it read into the cache, leaving dirty data.
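Written out step by step (single-threaded and deterministic, with dicts as stand-ins for the database and cache), the rare interleaving looks like this:

```python
from typing import Dict

db: Dict[str, int] = {"x": 1}
cache: Dict[str, int] = {}   # entry missing, e.g. it has expired

# Reader: cache miss, reads the old value from the database...
value_read = db["x"]
# Writer: updates the database, then deletes the cache (nothing there yet).
db["x"] = 2
cache.pop("x", None)
# Reader: ...finally writes the value it read earlier into the cache.
cache["x"] = value_read

print(db["x"], cache["x"])  # 2 1 (dirty data until the entry expires)
```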

This case is largely theoretical, and its probability in practice is low. It requires the cache entry to be missing when the read arrives, plus a concurrent write. Database writes are usually much slower than reads and may need to lock the table, yet the read must query the database before the write does and update the cache after the write has deleted it. All these conditions rarely hold at the same time.

Therefore, you either guarantee consistency through protocols such as 2PC or Paxos, or you try hard to reduce the probability of dirty data under concurrency. Facebook chose the latter because 2PC is too slow and Paxos is too complex. It is also advisable to set an expiration time on cached data: even if the data becomes inconsistent, the entry expires after a while and the next read reloads consistent data from the database.
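A minimal sketch of a cache with expiration times shows how a TTL bounds how long dirty data can survive. The `TTLCache` class and its injectable `clock` are illustrative assumptions; with Redis you would typically set an expiration on the key instead, for example `SET key value EX 60`.

```python
import time
from typing import Callable, Dict, Optional, Tuple

class TTLCache:
    """Minimal cache whose entries expire ttl_s seconds after being set."""

    def __init__(self, ttl_s: float, clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl_s = ttl_s
        self.clock = clock
        self._data: Dict[str, Tuple[int, float]] = {}  # key -> (value, expires_at)

    def set(self, key: str, value: int) -> None:
        self._data[key] = (value, self.clock() + self.ttl_s)

    def get(self, key: str) -> Optional[int]:
        item = self._data.get(key)
        if item is None:
            return None
        value, expires_at = item
        if self.clock() >= expires_at:   # expired: drop it and report a miss
            del self._data[key]
            return None
        return value

# Fake clock so the example runs instantly.
now = [0.0]
c = TTLCache(ttl_s=60, clock=lambda: now[0])
c.set("x", 1)        # suppose 1 is a stale value left behind by a race
print(c.get("x"))    # 1: dirty data is still served...
now[0] = 61.0
print(c.get("x"))    # None: ...until it expires and the next read reloads it
```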

The section above covers many complex concurrency scenarios, but all of them assume ideal conditions in which every operation on the database and cache succeeds. In actual production, operations may fail due to network jitter, services going offline, and other causes.

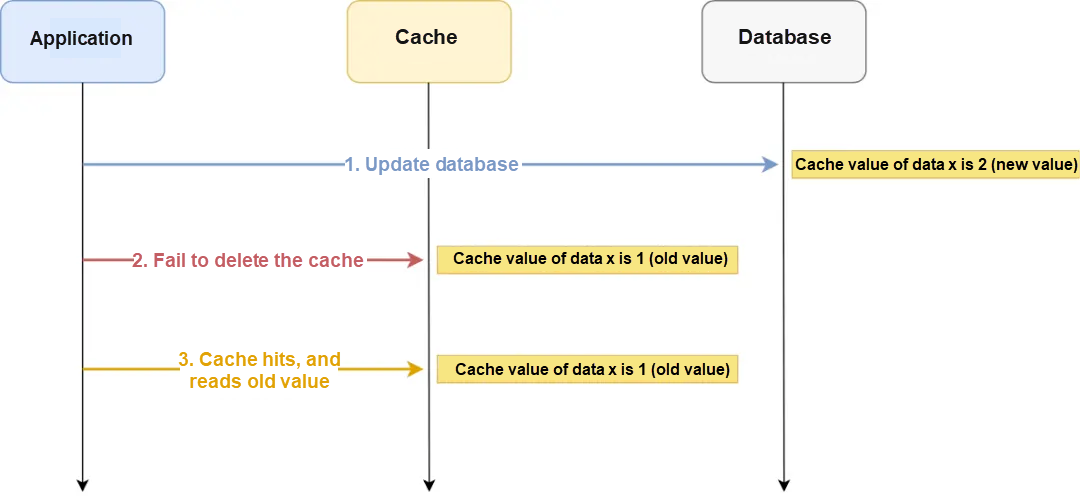

For example, the application needs to update the value of data X from 1 to 2. It updates the database first and then tries to delete X's cache entry from Redis, but the deletion fails. Now the database holds the new value 2 while Redis still holds the old value 1, so the database and the cache are inconsistent.

A subsequent request for X queries Redis first. Because the cache entry was never deleted, the request hits the cache and reads the old value 1.

Whichever is operated on first, the database or the cache, the data becomes inconsistent whenever the second operation fails.

Now that we know the cause of the problem, how can we solve it? There are two methods:

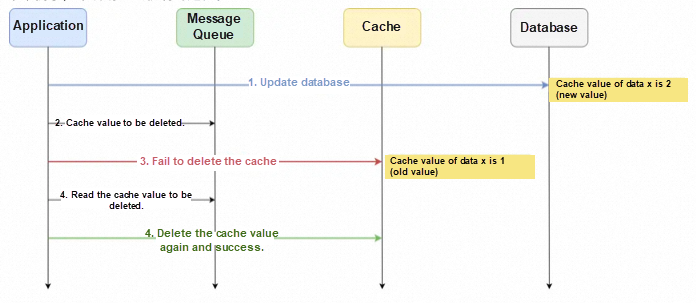

We can introduce a message queue: put the data targeted by the second operation (deleting the cache) into the queue, and let a consumer perform the deletion, retrying it if it fails.

The example below illustrates the process of the retry mechanism:

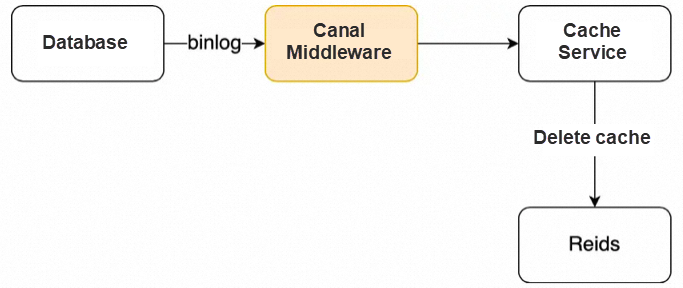
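The retry idea can be sketched in a single process, assuming Python's `queue.Queue` as a stand-in for a real message queue and a hypothetical `FlakyCache` client whose deletions fail a few times to simulate network jitter. The writer hands the key to the queue only when the inline deletion fails; the consumer retries, re-enqueueing the key up to a hypothetical `max_attempts` limit.

```python
import queue
from typing import Dict

class FlakyCache:
    """Hypothetical cache client whose delete fails the first `failures` times."""

    def __init__(self, data: Dict[str, int], failures: int) -> None:
        self.data = data
        self.failures = failures

    def delete(self, key: str) -> None:
        if self.failures > 0:
            self.failures -= 1
            raise ConnectionError("simulated network jitter")
        self.data.pop(key, None)

db: Dict[str, int] = {"x": 1}
cache = FlakyCache({"x": 1}, failures=2)
mq = queue.Queue()  # stand-in for a real message queue; items are (key, attempt)

def update(key: str, value: int) -> None:
    db[key] = value              # 1st operation: update the database
    try:
        cache.delete(key)        # 2nd operation: delete the cache
    except ConnectionError:
        mq.put((key, 1))         # deletion failed: hand the key to the queue

def consume(max_attempts: int = 5) -> None:
    while not mq.empty():
        key, attempt = mq.get()
        try:
            cache.delete(key)    # retry the deletion
        except ConnectionError:
            if attempt < max_attempts:
                mq.put((key, attempt + 1))  # re-enqueue for another try
            else:
                print(f"giving up on {key!r}; needs manual repair")

update("x", 2)
consume()
print(db, cache.data)  # {'x': 2} {}
```

In production the queue would need to be durable so that pending deletions survive a restart; the attempt cap keeps a permanently failing key from looping forever.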

The first step of the "update the database, then delete the cache" strategy is to update the database. When the database is updated, the change is recorded in the binlog.

Therefore, we can subscribe to the binlog, extract the specific data that changed, and then delete the corresponding cache entries. Alibaba's open-source middleware Canal can be used to implement this strategy.

Canal simulates the MySQL master-slave replication protocol: it disguises itself as a MySQL slave node and sends a dump request to the MySQL master node. After receiving the request, MySQL starts pushing the binlog to Canal, which parses the binlog byte stream into easy-to-read structured data that downstream programs can subscribe to.
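The binlog-driven invalidation can be sketched without depending on Canal's actual client API. The `RowChange` event type and the `table:pk` cache key convention below are hypothetical simplifications; a real Canal consumer receives richer structured messages, but the invalidation step has the same shape.

```python
from dataclasses import dataclass
from typing import Any, Dict, Iterable

@dataclass
class RowChange:
    """Hypothetical, simplified binlog row event (real Canal messages carry much more)."""
    table: str
    event_type: str   # e.g. "INSERT", "UPDATE", "DELETE"
    primary_key: int

def cache_key(table: str, pk: int) -> str:
    return f"{table}:{pk}"   # hypothetical key convention, e.g. "user:1"

def handle_binlog(events: Iterable[RowChange], cache: Dict[str, Any]) -> None:
    """Delete the cache entry for every changed row. Deletion is idempotent,
    so processing the same event twice is harmless."""
    for ev in events:
        cache.pop(cache_key(ev.table, ev.primary_key), None)

cache: Dict[str, Any] = {"user:1": {"name": "old"}, "user:2": {"name": "bob"}}
handle_binlog([RowChange("user", "UPDATE", 1)], cache)
print(sorted(cache))  # ['user:2']
```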

The following figure shows how Canal works:

Therefore, to ensure that the second operation of the "update the database, then delete the cache" strategy is executed, we can either retry the cache deletion through a message queue or subscribe to the MySQL binlog and then delete the cache. Both methods have one thing in common: they operate on the cache asynchronously.

Although Cache Aside can be called the best practice for cache usage, it lowers the cache hit rate, because deleting the cache on every write means more reads miss. It suits scenarios that do not require a high cache hit rate. If a high hit rate is required, the cache must be updated right after the database is updated.

As mentioned earlier, updating the cache right after updating the database will cause data inconsistency in concurrent scenarios. How can we avoid it? There are two schemes.

Acquire a lock before updating the cache. If the lock is already held, block the thread until the other thread releases it, and then try the update again. This hurts the performance of concurrent operations.
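A minimal sketch of the locking scheme, assuming the lock covers both the database update and the cache update so that two writers cannot interleave. `threading.Lock` only works within one process; with multiple application instances, a distributed lock would be needed (an assumption, since the text does not name a lock type).

```python
import threading
from typing import Dict

db: Dict[str, int] = {"x": 0}
cache: Dict[str, int] = {"x": 0}
lock = threading.Lock()   # process-local; multiple app instances need a distributed lock

def update_with_lock(key: str, value: int) -> None:
    with lock:               # blocks while another writer holds the lock
        db[key] = value      # update the database...
        cache[key] = value   # ...then the cache, inside the same critical section

threads = [threading.Thread(target=update_with_lock, args=("x", v)) for v in range(1, 9)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(db["x"] == cache["x"])  # True: whichever writer ran last set both
```

Serializing writers this way removes the interleaving shown in the first timeline, at the cost of throughput on hot keys.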

Set a short cache expiration time, so that any inconsistency does not persist for long and has less impact on the business. However, this also lowers the cache hit rate, which is the problem mentioned earlier.

In summary, there is no universally best solution, only the best choice for each business scenario.

Disclaimer: The views expressed herein are for reference only and don't necessarily represent the official views of Alibaba Cloud.
