By Alexandru Andrei, Alibaba Cloud Tech Share Author. Tech Share is Alibaba Cloud's incentive program to encourage the sharing of technical knowledge and best practices within the cloud community.

When we create an Alibaba Cloud Elastic Compute Service (ECS) instance, we can easily estimate how much storage space is required at the moment, but predicting future growth is difficult. Alibaba Cloud Disks can be easily resized, but to complete the operation, the instance has to be restarted, the partition extended, and the filesystem expanded. For mission-critical systems that can't afford to be offline, this can be very inconvenient. LVM (Logical Volume Manager) can help users avoid downtime and simplify the administration of storage devices.

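The rest of this tutorial walks through a few examples of what it can do: growing volumes without downtime, replacing several smaller disks with a larger one, taking snapshots and striping data across disks.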

Any data that is important should be backed up. A cloud datacenter is much better than a home computer at keeping information safely stored, because it keeps redundant copies, has more reliable power, and so on. But hardware failures can happen, instances can get hacked, and users themselves can make mistakes and accidentally wipe, overwrite or corrupt data.

While Alibaba Cloud offers a convenient feature to snapshot your Cloud Disks, it is incompatible with LVM. Do not use Alibaba Cloud's Snapshot feature in combination with LVM, since you will likely end up with corrupt volumes when rolling back to those snapshots. A logical volume can span several Cloud Disks, and snapshots taken or rolled back disk by disk do not necessarily capture it as one consistent unit. The recommended method of backing up data when using LVM is to snapshot volumes periodically and transfer files from the snapshot to another location (preferably in another datacenter). Whatever tools you use for the operation, make sure to preserve file and directory permissions, ownership information, timestamps, extended attributes, SELinux contexts (if used) and every other kind of optional metadata you may rely on (e.g. ACLs).

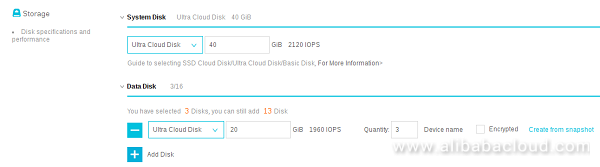

Log in to the ECS Cloud Console, create a new instance and choose Debian 9 as your Linux distribution. 1GB of RAM will suffice. Besides the system disk, add 3 data disks (sized at 20GB), like in the following picture:

After you configure and launch your instance, connect to it with an SSH client and log in as root.

If you want to add LVM to an existing system, be careful to follow only the steps you require and adapt commands to your needs. Follow the instructions for adding and attaching a Cloud Disk, but skip all follow-up operations such as partitioning and formatting, since LVM does not need them.

This tutorial is meant as a guide for the user to experiment on the instance, in order to get a better understanding of LVM.

PV - Physical Volume: the first thing LVM needs is a physical storage device. This can be an entire disk, a partition or even a file (loopback).

VG - Volume Group: Physical volumes are organized in volume groups, forming something like virtual disks and allowing multiple, separate physical storage devices to be viewed by the system as one large, contiguous device. There's usually no need to have more than one VG, except if we want different allocation policies or extent sizes.

LV - Logical Volume: A virtual logical partition that resides in a volume group. These will host our filesystems.

PE - Physical Extent: physical volumes are divided into equal-sized blocks called extents. This is how LVM keeps track of where data is stored, mapping each part of a logical volume to extents on the real devices. For example, 8MB of data in a logical volume might have 4MB stored on physical volume /dev/vdb and 4MB on /dev/vdc; with 4MB extents, these are two extents.
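Extent bookkeeping is plain integer arithmetic. A logical volume always occupies a whole number of extents, so lvcreate rounds a requested size up to the next multiple of the extent size. The sketch below uses a hypothetical helper, extents_needed. It assumes the 4 MiB extent size that vgcreate uses by default; vgdisplay reports the real value as "PE Size".

```shell
# extents_needed SIZE_MIB [EXTENT_MIB]
# Hypothetical helper: prints how many physical extents a logical volume of
# SIZE_MIB needs when each extent is EXTENT_MIB large. Partial extents are
# rounded up, because LVM only allocates whole extents.
extents_needed() {
  local size_mib=$1
  local extent_mib=${2:-4}   # assumption: vgcreate's default 4 MiB extent size
  echo $(( (size_mib + extent_mib - 1) / extent_mib ))
}
```

extents_needed 8 prints 2, matching the two-extent example above. extents_needed 10 prints 3, since a 10 MiB request is rounded up to 12 MiB.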

Update package manager information on the system:

apt update

Upgrade packages to pull in potential bug fixes and security patches:

apt upgrade

If text-user-interface dialog boxes appear, press ENTER to select default answers.

Reboot the system:

systemctl reboot

Wait 15-30 seconds, then reconnect your SSH client and log back in as root.

Install LVM:

apt install lvm2

We first need to configure our physical devices. Let's list the available ones on the system:

lvmdiskscan

/dev/vda1 should be ignored, as that is the partition containing the operating system (root partition).

Initialize two devices as LVM physical volumes:

pvcreate /dev/vdb /dev/vdc

The syntax of the command is pvcreate DEVICE [DEVICE...]: we can add just one device, or many at once. /dev/vda is the path to the first Cloud Disk, /dev/vda1 is the first partition on the first disk, /dev/vdb is the second disk and so on.

Now we can create a volume group. We will name it "group1".

vgcreate group1 /dev/vdb /dev/vdc

Finally, we can create a logical volume in our volume group (we will name it volume1):

lvcreate --extents 100%VG --name volume1 group1

There are multiple ways to choose a size for the volume. In the command above, we used --extents 100%VG, which means 100% of the space in our volume group. We can also pass arguments such as --extents 33%FREE to use 33% of the remaining free space in the VG. For absolute sizes, we can replace --extents with --size: lvcreate --size 10G --name volume1 group1 would create a 10GB volume. We can replace the "G" with "M" for megabytes or "T" for terabytes.
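When the same layout has to be built on several servers, it can help to generate the commands first and review them before anything touches the disks. The sketch below uses a hypothetical helper, plan_pool, that only prints the pvcreate, vgcreate and lvcreate commands from this section. It assumes the logical volume should take 100% of the group.

```shell
# plan_pool VG LV DEVICE...
# Hypothetical helper: prints (does not run) the commands that initialize
# each DEVICE as a physical volume, gather the devices into volume group VG
# and create logical volume LV using all of the group's space.
plan_pool() {
  local vg=$1 lv=$2
  shift 2
  printf '%s\n' "pvcreate $*"
  printf '%s\n' "vgcreate $vg $*"
  printf '%s\n' "lvcreate --extents 100%VG --name $lv $vg"
}
```

For example, plan_pool group1 volume1 /dev/vdb /dev/vdc > plan.sh writes the three commands used in this tutorial to plan.sh. After reading the file, run it as root with sh -e plan.sh, which stops at the first failing command. Running a saved file, rather than piping the output straight into sh, keeps the terminal attached. That way, any confirmation prompt from LVM is answered by you and not by the next line of the plan.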

You can consult the command manual with man lvcreate to learn more. Quit the manual by pressing "q".

To make a logical volume useful, we must create a filesystem on it. You can use any filesystem you like. In this example we'll use the ext4 filesystem.

We will find our logical volumes either in /dev/mapper (as /dev/mapper/group1-volume1) or in /dev/name_of_volume_group (as /dev/group1/volume1). Running ls -l /dev/group1/ /dev/mapper/ shows that these are just symbolic links to the real block device files, such as /dev/dm-0.
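The /dev/mapper names follow a simple rule. The VG and LV names are joined with a single hyphen, and any hyphen inside either name is doubled so the separator stays unambiguous. The hypothetical helper below reproduces that rule. On a live system, readlink -f /dev/group1/volume1 shows which /dev/dm-N node the volume actually resolves to.

```shell
# mapper_name VG LV
# Hypothetical helper: prints the device-mapper path LVM uses for logical
# volume LV in volume group VG. Hyphens inside either name are doubled,
# then the two names are joined with a single hyphen.
mapper_name() {
  local vg lv
  vg=$(printf '%s' "$1" | sed 's/-/--/g')
  lv=$(printf '%s' "$2" | sed 's/-/--/g')
  printf '/dev/mapper/%s-%s\n' "$vg" "$lv"
}
```

mapper_name group1 volume1 prints /dev/mapper/group1-volume1, which is the name df -h reports for this volume. mapper_name my-vg data prints /dev/mapper/my--vg-data.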

Let's create a filesystem on volume1:

mkfs.ext4 /dev/group1/volume1

Then create a directory to use as a mount point for our volume:

mkdir /mnt/volume1

To make the filesystem accessible, we need to mount it:

mount /dev/group1/volume1 /mnt/volume1

Now the files hosted on volume1 can be accessed under /mnt/volume1.

Usually, we will want to use volumes that can be flexibly expanded to host the data of storage-hungry applications, such as database or file servers. Let's take MySQL/MariaDB as an example. One solution is to mount our volume in a location such as /mnt/volume1 and then change the database server configuration to keep its largest files there. We may also have to set up the correct ownership and permissions with chown and chmod. When dealing with multiple programs that need to store a lot of data, it may be more convenient to go another route. For example, we can migrate the whole /var directory to our volume and then mount the LV on /var; many applications keep variable, possibly fast-growing files in /var. This way, files written to /var are stored on the volume instead of the root disk (the disk containing the operating system). Let's go through an example.

Don't execute the commands in this section! They are offered just as a guide for future operations readers may want to run on their servers once they are comfortable with LVM. Executing them here would make it impossible to follow some of the other steps in the tutorial.

We would first copy all files and directories from /var to volume1:

cp -axv /var/. /mnt/volume1/

Copying /var/. instead of /var/* also includes any hidden files at the top level of /var. Then we would configure the system to automatically mount our volume on /var at boot time:

echo '/dev/group1/volume1 /var ext4 defaults 0 2' >> /etc/fstab

A reboot would be mandatory at this point. Further actions, such as installing additional packages, would update files in /var on the root filesystem, leaving the copy on our volume outdated.
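Appending with >> is not idempotent: running that echo line twice leaves two identical entries in /etc/fstab. A guarded variant is sketched below, with add_fstab_entry as a hypothetical helper name. It only detects exact duplicate lines, so an entry that differs in spacing or options would still be appended.

```shell
# add_fstab_entry FSTAB LINE
# Hypothetical helper: appends LINE to the file FSTAB unless an identical
# line is already present, so repeating the step is harmless.
add_fstab_entry() {
  local fstab=$1 line=$2
  # -x: match whole lines only, -F: treat LINE literally, -q: no output
  if ! grep -qxF -- "$line" "$fstab" 2>/dev/null; then
    printf '%s\n' "$line" >> "$fstab"
  fi
}
```

As root, this would be used as add_fstab_entry /etc/fstab '/dev/group1/volume1 /var ext4 defaults 0 2'. On systems with a recent util-linux, findmnt --verify can then check the file for mistakes before the reboot.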

systemctl reboot

You can execute the commands from this point on.

While maintaining an LVM pool, we will need to periodically monitor the available free space. We may also want to see how physical extents are distributed, check the progress of mirroring or data moves, and so on.

To inspect physical volumes we have pvdisplay, pvs and pvscan. Details about volume groups can be displayed with vgdisplay or vgs. When dealing with logical volumes we have lvdisplay and lvs.
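For routine checks, vgs can print bare numbers that are easy to post-process. The sketch below assumes the output of vgs --noheadings --nosuffix --units g -o vg_name,vg_size,vg_free, which is one line per group giving the name, size and free space in GiB. It flags any group whose free space drops below a threshold. The helper name vg_free_report and the 10% default are hypothetical choices.

```shell
# vg_free_report [THRESHOLD_PERCENT]
# Hypothetical helper: reads "name size free" lines on stdin, prints the
# percentage of free space per volume group and appends "LOW" when it is
# below THRESHOLD_PERCENT (default 10).
vg_free_report() {
  awk -v t="${1:-10}" '
    BEGIN { threshold = t + 0 }
    NF == 3 && $2 > 0 {
      pct = 100 * $3 / $2
      printf "%s %.1f%% free%s\n", $1, pct, (pct < threshold ? " LOW" : "")
    }'
}
```

A line such as vgs --noheadings --nosuffix --units g -o vg_name,vg_size,vg_free | vg_free_report 15 could run from cron, so a shrinking pool is noticed before it fills up.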

In this tutorial, we already have 3 disks available and have only used 2, so we can skip adding another Cloud Disk.

In a real scenario, at some point in the future, the available space on the volume group will run out. Before that happens, we should add another Cloud Disk. After we attach the disk, we should be able to see it in the output of lvmdiskscan, without needing to reboot.

The first step in expanding our pool is to initialize the physical device:

pvcreate /dev/vdd

Then add it to the existing volume group:

vgextend group1 /dev/vdd

Running vgs should now show that we have additional space available. The last step is to expand our logical volume. After extending the logical volume, the filesystem also has to be resized. Fortunately, the lvextend command has an optional parameter, --resizefs, that does this for us, which makes the operation easier and safer. Extending the filesystem manually means running a separate, filesystem-specific tool afterwards (such as resize2fs for ext4), which is easy to get wrong.

lvextend --verbose --extents +100%FREE --resizefs /dev/group1/volume1

If we run df -h, we should now see that the filesystem on /dev/mapper/group1-volume1 is larger.
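The three commands for adding a disk to the pool can be generated as a reviewable plan. The sketch below uses a hypothetical helper, plan_grow, that only prints them. It assumes the new disk's space should go entirely to one logical volume.

```shell
# plan_grow VG LV DEVICE
# Hypothetical helper: prints (does not run) the commands that add DEVICE to
# volume group VG and grow logical volume LV, plus its filesystem, into all
# of the group's free space.
plan_grow() {
  local vg=$1 lv=$2 dev=$3
  printf '%s\n' "pvcreate $dev"
  printf '%s\n' "vgextend $vg $dev"
  printf '%s\n' "lvextend --verbose --extents +100%FREE --resizefs /dev/$vg/$lv"
}
```

plan_grow group1 volume1 /dev/vdd > grow.sh reproduces the steps from this section. Review grow.sh, then run it as root with sh -e grow.sh so the sequence stops at the first error.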

After a while, we might end up with too many disks in our pool. LVM has a solution for that, allowing us to remove multiple older, smaller disks and replace them with a single, larger one.

Add another Cloud Disk and make it 65GB in size. Make sure it's in the same zone as your Elastic Compute instance. Attach it to your instance and then initialize it:

pvcreate /dev/vde

Extend the volume group:

vgextend group1 /dev/vde

In a real scenario, this would be a good time to snapshot the volume and copy its data to a remote location. pvmove --atomic should be safe, but it's better to have a backup plan in case anything goes wrong.

Move the data from one physical volume to another:

pvmove --atomic /dev/vdb /dev/vde

After the transfer is complete, we can remove the physical volume from the group:

vgreduce group1 /dev/vdb

Make sure the PV does not belong to group1 anymore:

pvs

Remove the LVM label from the PV:

pvremove /dev/vdb

Now it's safe to permanently remove the corresponding Cloud Disk.

In a real situation, we would repeat these steps for the remaining disks, /dev/vdc and /dev/vdd, but since that can take a long time, we will skip it. In the end, only /dev/vde would remain in our volume group, replacing three 20GB disks with one 65GB disk.

For future reference: when running jobs that can take a long time to complete, we can chain them. In our situation, we would use a line such as pvmove --atomic /dev/vdb /dev/vde && pvmove --atomic /dev/vdc /dev/vde && pvmove --atomic /dev/vdd /dev/vde. The && operator runs the next command only if the previous one succeeded; if one fails, the rest of the chain is aborted. We would also run this in tmux or screen, which lets us return to our session exactly as we left it if the SSH connection drops. This way, you can let the system run multiple jobs for hours and come back when they're done, instead of constantly checking in to issue the next command.
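The chain can also be built for any number of disks, including the vgreduce and pvremove steps for each one. The sketch below uses a hypothetical helper, plan_migrate. It assumes the target PV has already been added to the volume group with enough free space to absorb every old disk.

```shell
# plan_migrate VG TARGET OLD_DEVICE...
# Hypothetical helper: prints one command line that, for each OLD_DEVICE,
# moves its extents to TARGET, drops it from volume group VG and removes its
# LVM label. Every step is joined with "&&", so the chain stops at the
# first failure.
plan_migrate() {
  local vg=$1 target=$2
  shift 2
  local chain="" dev
  for dev in "$@"; do
    chain="${chain:+$chain && }pvmove --atomic $dev $target && vgreduce $vg $dev && pvremove $dev"
  done
  printf '%s\n' "$chain"
}
```

Inside a tmux session, run plan_migrate group1 /dev/vde /dev/vdb /dev/vdc /dev/vdd > migrate.sh. This produces a single line that empties and releases the three 20GB disks one after another. After reviewing it, sh migrate.sh runs the chain.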

From time to time, we have to back up data. Snapshots allow us to freeze the state of files and directories, so we can copy them to another location. They also give us a point we can quickly revert to in case something goes wrong, for example before upgrading the operating system to the next major version.

Create a snapshot volume:

lvcreate --snapshot group1/volume1 --size 5G --name snap1

The size of the snapshot volume determines how many changes LVM can keep track of. If you add a new 500MB file to your origin volume, that will consume 500MB of snapshot storage, plus whatever is needed for metadata. You can keep track of used space with lvs by watching the Data% column. When it reaches 100%, the snapshot becomes invalid, since LVM cannot track changes anymore.
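Because a full snapshot becomes invalid, its Data% value is worth checking regularly. The sketch below assumes the output of lvs --noheadings -o lv_name,data_percent group1. In that output, volumes without a Data% value (such as the origin volume) have an empty second field and are skipped. The helper name snapshot_usage_check and its 80% default threshold are hypothetical. A snapshot that is filling up can be enlarged with lvextend, for example lvextend --size +1G group1/snap1.

```shell
# snapshot_usage_check [THRESHOLD_PERCENT]
# Hypothetical helper: reads "name data_percent" lines on stdin and prints a
# warning for every volume whose Data% is at or above THRESHOLD_PERCENT
# (default 80). Lines with no Data% value are ignored.
snapshot_usage_check() {
  awk -v t="${1:-80}" '
    BEGIN { threshold = t + 0 }
    NF == 2 && $2 + 0 >= threshold {
      printf "WARNING: snapshot %s is %s%% full\n", $1, $2
    }'
}
```

A line such as lvs --noheadings -o lv_name,data_percent group1 | snapshot_usage_check 80 prints nothing while every snapshot stays below the threshold.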

Create a directory that will serve as a mount point for the snapshot:

mkdir /mnt/snapshot

Mount the volume:

mount /dev/group1/snap1 /mnt/snapshot/

Now we can copy contents from /mnt/snapshot to a remote location without worrying that files will change during the operation.
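A complete backup cycle combines the steps above with a copy tool that preserves metadata. The sketch below prints the commands only. The helper plan_snapshot_backup, the volume1_backup snapshot name, the 5G snapshot size and the destination are assumptions.

The copy step uses rsync with these options:

- -a: permissions, ownership, timestamps and symlinks
- -H: hard links
- -A: ACLs
- -X: extended attributes, where SELinux contexts are stored
- --numeric-ids: keeps user and group IDs as numbers rather than remapping them by name

Ownership is only preserved if rsync runs as root on the receiving side.

```shell
# plan_snapshot_backup VG LV DEST
# Hypothetical helper: prints (does not run) a snapshot-based backup cycle:
# freeze LV in a temporary snapshot, mount it read-only, copy it to DEST
# with metadata preserved, then unmount and delete the snapshot.
plan_snapshot_backup() {
  local vg=$1 lv=$2 dest=$3
  local snap="${lv}_backup" mnt="/mnt/${lv}_backup"
  printf '%s\n' "lvcreate --snapshot $vg/$lv --size 5G --name $snap"
  printf '%s\n' "mkdir -p $mnt"
  printf '%s\n' "mount -o ro /dev/$vg/$snap $mnt"
  printf '%s\n' "rsync -aHAX --numeric-ids $mnt/ $dest"
  printf '%s\n' "umount $mnt"
  printf '%s\n' "lvremove -y $vg/$snap"
}
```

For example, plan_snapshot_backup group1 volume1 backup.example.com:/srv/backups/volume1/ > backup.sh, where backup.example.com is a placeholder host. If the rsync step fails when the plan runs with sh -e backup.sh, the snapshot stays mounted and has to be unmounted and removed by hand.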

If we ever need to revert the original volume back to the frozen snapshot state, we will first have to unmount it:

umount /mnt/volume1

And then revert:

lvconvert --merge group1/snap1

If the volume is mounted in vital areas such as /var, we can't unmount it on a running system and we will see this message:

Can't merge until origin volume is closed.
Merging of snapshot group1/snap1 will occur on next activation of group1/volume1.

In such a case, we just have to reboot and the merge will happen automatically. Once the merge completes, the snapshot volume is removed.

Note: merging can take a long time on large volumes, so it may be a while before you can log back in to your instance.

To experiment with a striped volume, we first have to unmount our volume (skip this if you already did it in the previous section):

umount /mnt/volume1

Now delete it:

lvremove /dev/group1/volume1

Since we have three Cloud Disks left in the volume group (we removed /dev/vdb earlier), we can create a three-stripe volume:

lvcreate --stripes 3 --size 10G --name stripedvolume group1

At the time of writing, some quick benchmarks showed the following on a single-core ECS instance: a volume with 4 stripes worked best for improving writes, and 8 stripes for improving reads. Each stripe needs a separate physical device in the volume group. Adding more virtual CPU cores to your ECS instance can help with I/O performance when a single core is overwhelmed by intense input/output interrupt requests.
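In a striped volume, data is laid out round-robin in fixed-size chunks, whose size is the stripe size set with lvcreate's --stripesize option. Consecutive chunks therefore land on consecutive physical volumes, and a large sequential transfer keeps all of them busy at once. The sketch below, a simplification with the hypothetical name stripe_for_offset, computes which stripe holds a given offset. The 64 KiB default chunk is an assumption about lvcreate's default stripe size.

```shell
# stripe_for_offset OFFSET_KIB STRIPES [STRIPE_KIB]
# Hypothetical helper: prints the zero-based index of the stripe (and thus
# of the physical volume) holding offset OFFSET_KIB of a striped logical
# volume with STRIPES devices and STRIPE_KIB-sized chunks.
stripe_for_offset() {
  local offset=$1 stripes=$2
  local chunk=${3:-64}   # assumption: 64 KiB default stripe size
  echo $(( (offset / chunk) % stripes ))
}
```

With three stripes, stripe_for_offset 0 3, stripe_for_offset 64 3 and stripe_for_offset 128 3 print 0, 1 and 2, and stripe_for_offset 192 3 wraps back to 0.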

It's worth reminding readers to back up more often when using striped volumes, since losing one physical device means losing the whole volume.

If you want to learn more about LVM, consult Arch Linux's LVM Wiki page. You can find an even more comprehensive LVM guide on The Linux Documentation Project's website.
