In this article, we will show you how to build a big data environment on Alibaba Cloud with Object Storage Service and E-MapReduce.

By Priyankaa Arunachalam, Alibaba Cloud Tech Share Author. Tech Share is Alibaba Cloud's incentive program to encourage the sharing of technical knowledge and best practices within the cloud community.

In the first article of this series, we walked through the basics of big data. In this article, we will get the environment ready. In the early days, setting up a big data environment was a major undertaking in itself. Nowadays, with emerging cloud technologies, the number of steps has been reduced, which makes things simpler. This article covers various big data solutions from Alibaba Cloud and shows you the steps to get started with these services.

Data Storage

The most fundamental requirement of big data is storage. Alibaba Cloud's Object Storage Service (OSS) is a cloud-based storage service that stores extremely large quantities of data of different types and from different sources. It is well suited for large volumes of multimedia files. Regardless of the data type or the access frequency, OSS can help. It even includes migration tools to move data from on-premises systems or third-party providers to OSS.

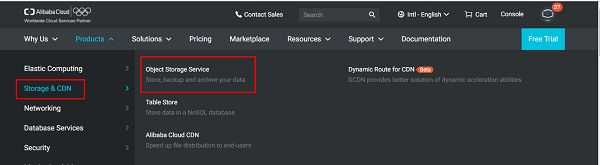

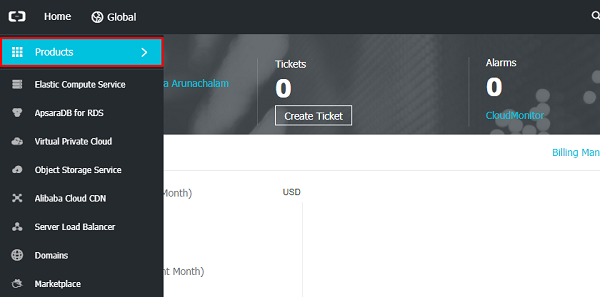

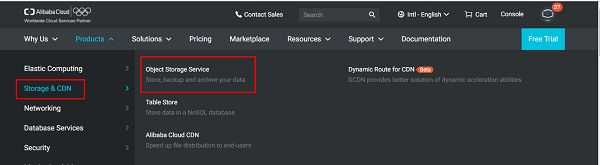

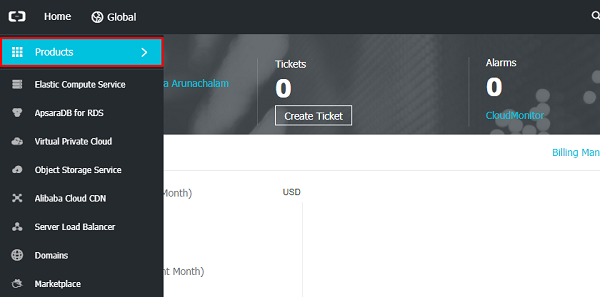

- On the Alibaba Cloud home page, go to the "Products" tab and select Object Storage Service under Storage.

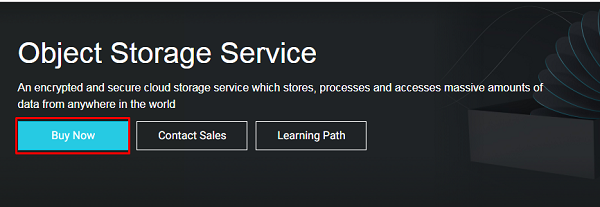

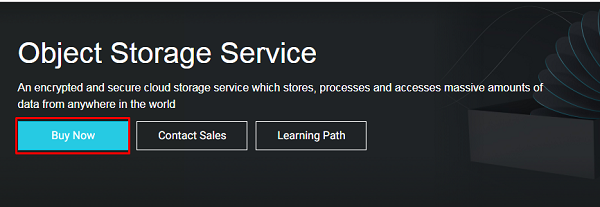

- Click Buy Now. Pricing is based on the amount of data you store: the more you store, the lower the cost per unit. Alibaba Cloud offers free storage up to 5 GB.

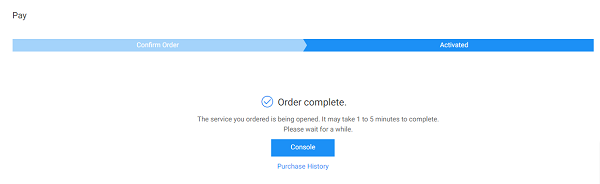

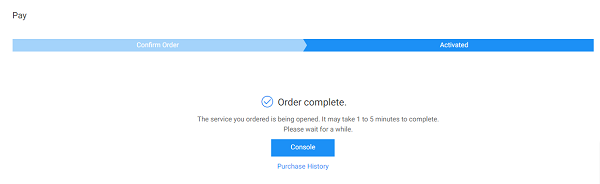

- Agree to the terms and conditions to enable OSS. You will then see the Order Complete page.

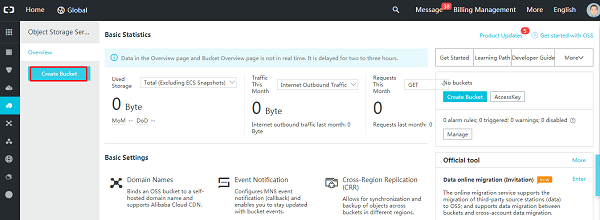

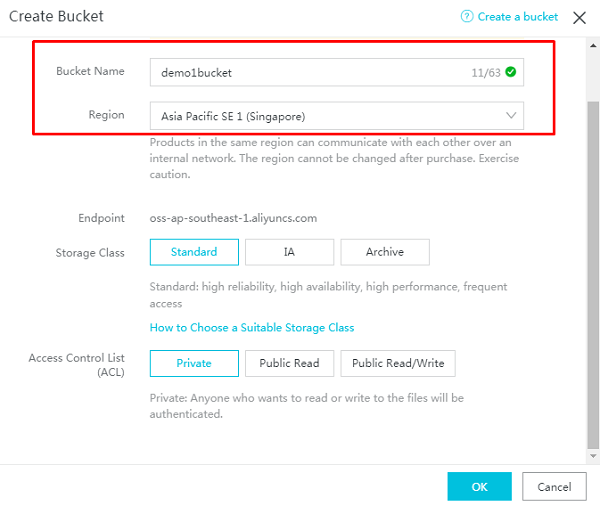

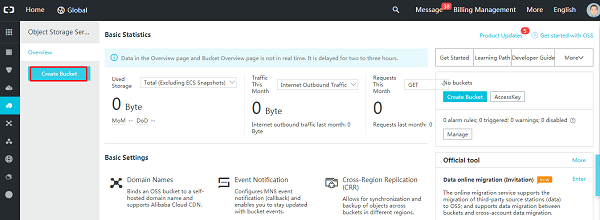

- Now you can create a bucket to use with E-MapReduce. Go to the OSS console and click Create Bucket.

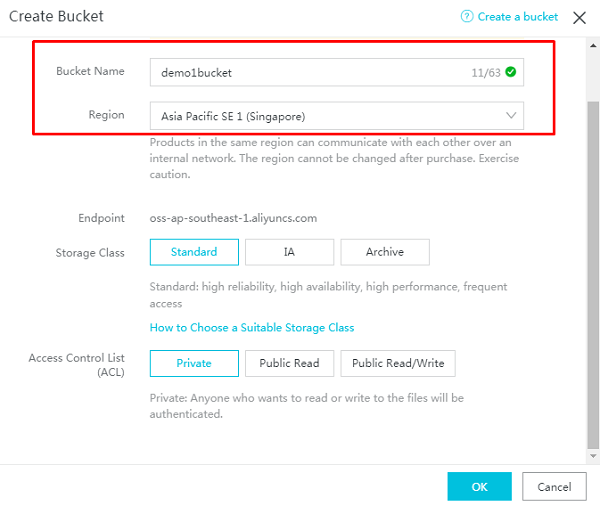

- In the Create Bucket wizard, fill in the necessary details. Throughout this article, we will use "demo1" as the naming convention and "Singapore" as the region.

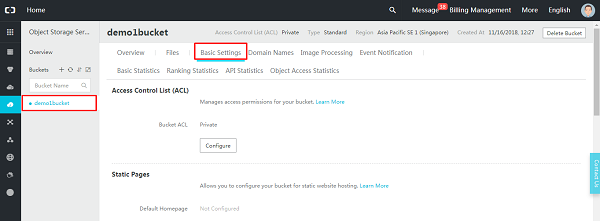

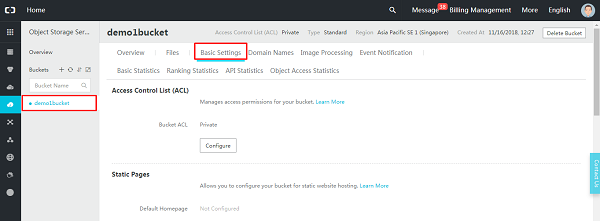

- If needed, change the configuration of the new bucket. The bucket appears on the left panel. Click it and go to the Basic Settings tab.

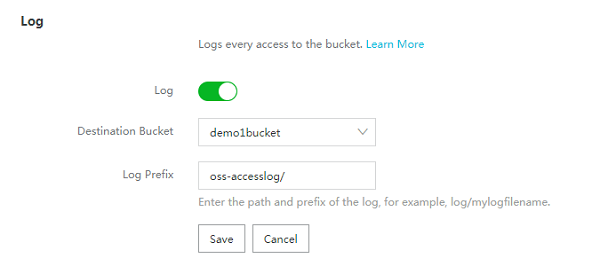

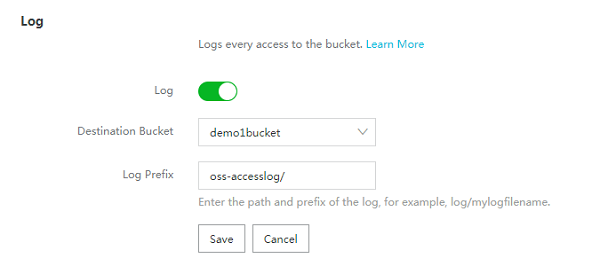

- Change the settings wherever necessary. Click Configure under Logs and enable logging.

OSS is now ready to use with the logs enabled.
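The console steps above can also be scripted. The following is a minimal sketch, assuming the third-party `oss2` Python SDK is installed and that an AccessKey pair is available in the environment variables `ALIBABA_CLOUD_ACCESS_KEY_ID` and `ALIBABA_CLOUD_ACCESS_KEY_SECRET`. The variable names, the helper function names, and the `logs/` prefix are assumptions made for illustration and are not part of the walkthrough. Bucket names are globally unique, so a name as short as `demo1` may already be taken.

```python
import os
import re

REGION_ID = "ap-southeast-1"   # region ID for Singapore
BUCKET_NAME = "demo1"          # naming convention used in this article


def oss_endpoint(region_id: str) -> str:
    """Public OSS endpoint for a region."""
    return f"https://oss-{region_id}.aliyuncs.com"


def is_valid_bucket_name(name: str) -> bool:
    """3-63 characters; lowercase letters, digits and hyphens only;
    must start and end with a lowercase letter or digit."""
    return re.fullmatch(r"[a-z0-9][a-z0-9-]{1,61}[a-z0-9]", name) is not None


def create_bucket_with_logging(bucket_name: str, region_id: str,
                               log_prefix: str = "logs/"):
    """Create a private bucket and store its access logs in the same bucket.

    Hypothetical helper: needs network access and valid credentials.
    """
    import oss2  # third-party SDK, imported lazily: pip install oss2

    auth = oss2.Auth(os.environ["ALIBABA_CLOUD_ACCESS_KEY_ID"],
                     os.environ["ALIBABA_CLOUD_ACCESS_KEY_SECRET"])
    bucket = oss2.Bucket(auth, oss_endpoint(region_id), bucket_name)
    bucket.create_bucket(oss2.BUCKET_ACL_PRIVATE)
    bucket.put_bucket_logging(oss2.models.BucketLogging(bucket_name, log_prefix))
    return bucket


if __name__ == "__main__":
    assert is_valid_bucket_name(BUCKET_NAME)
    print(oss_endpoint(REGION_ID))
    # Uncomment once credentials are configured:
    # create_bucket_with_logging(BUCKET_NAME, REGION_ID)
```

The two small helpers run without any credentials, so you can check a bucket name and endpoint before touching the account.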

Data Processing

Storage is all set now. When it comes to data processing, there are two main Alibaba Cloud products to look at:

- MaxCompute - Alibaba's platform for processing Big Data

- E-MapReduce - A rich framework for managing and processing Big Data

In this article, we will focus on big data with Alibaba Cloud's E-MapReduce.

What Is E-MapReduce?

Alibaba Cloud Elastic MapReduce, also known as EMR or E-MapReduce, is a fully managed service that allows you to create Hadoop clusters for big data applications within minutes. It is built on ECS and uses open source tools such as Apache Hadoop and Spark (covered in the first article), which form the core of E-MapReduce, to quickly process and analyze huge amounts of data through a user-friendly web interface.

Why Choose E-MapReduce?

E-MapReduce takes care of most of the basic tasks required for cluster creation and provisioning, and it provides an integrated framework for managing and using clusters. It uses the full capabilities of Hadoop and Spark, so you do not need to provision Hadoop from scratch. Because it includes Spark, you can even process large volumes of streaming data. It integrates easily with other Alibaba Cloud products such as Elastic Compute Service (ECS) and OSS.

What Is a Hadoop Cluster?

We came across the term "Hadoop" in our first article. So, what is a cluster?

A cluster is a collection of nodes, where a node is a physical or virtual machine running Hadoop processes. A Hadoop cluster has two main advantages. First, big data comes in huge volumes and in many different forms. A Hadoop cluster helps here because it divides the data into blocks, and the nodes process those blocks in parallel. Second, big data grows every day, so no cluster configuration stays adequate for long: you may need to scale the cluster, that is, add or remove nodes, whenever needed. A Hadoop cluster solves this too, as it is linearly scalable.
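The idea of dividing data into blocks and processing them in parallel can be sketched in a few lines of plain Python. This is only an illustration under simplifying assumptions: threads stand in for cluster nodes, a list of lines stands in for a file in HDFS, and the function names are made up for this example.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def split_into_blocks(lines, block_size):
    """Split the input into fixed-size blocks, similar to how HDFS splits files."""
    return [lines[i:i + block_size] for i in range(0, len(lines), block_size)]


def map_block(block):
    """Map step: count the words in one block (the work done by one node)."""
    counts = Counter()
    for line in block:
        counts.update(line.lower().split())
    return counts


def word_count(lines, block_size=2, workers=3):
    """Process the blocks in parallel, then merge the partial counts (reduce step)."""
    blocks = split_into_blocks(lines, block_size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partial_counts = list(pool.map(map_block, blocks))
    total = Counter()
    for partial in partial_counts:
        total.update(partial)
    return total


if __name__ == "__main__":
    data = [
        "big data on alibaba cloud",
        "big data needs storage",
        "storage and processing",
        "processing big data in parallel",
    ]
    print(word_count(data).most_common(3))
```

Adding more workers here plays the role of adding nodes: the blocks stay the same, and only the number of blocks processed at once changes.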

Hadoop follows a master-slave model with two main components:

- Master node - A cluster has a single master, which runs the NameNode, the Secondary NameNode, and the JobTracker. The NameNode stores the HDFS metadata, the Secondary NameNode periodically checkpoints that metadata (it is not a hot standby), and the JobTracker coordinates the parallel processing of data using MapReduce.

- Worker node - A cluster can have any number of worker nodes. Each one runs a DataNode, which stores the actual data, and a TaskTracker service, which executes the tasks assigned by the JobTracker. (In Hadoop 2 and later, YARN's ResourceManager and NodeManager take over the roles of the JobTracker and TaskTracker.)

Types of Clusters

- Single-node cluster - Also known as a pseudo-distributed cluster, where the NameNode and DataNode run on the same machine.

- Multi-node cluster - Also known as a distributed cluster, where one node acts as the master and the other nodes act as slaves. The default replication factor for this type of cluster is 3.

- High-availability cluster - In the standard configuration, the NameNode is a single point of failure: the whole cluster becomes unavailable if the NameNode goes down, whether because of a planned or an unplanned event. A high-availability cluster runs two NameNodes at the same time, an Active NameNode and a Standby (Passive) NameNode. If one NameNode goes down, the other takes over automatically, which reduces cluster downtime.
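To see what a replication factor of 3 buys you, here is a small sketch that places each block on three different nodes and then checks which blocks survive a node failure. It is a deliberately simplified model: the round-robin placement and the function and node names are assumptions for illustration, while real HDFS placement also takes racks and free space into account.

```python
def place_replicas(num_blocks, nodes, replication=3):
    """Assign each block to `replication` distinct nodes, round-robin."""
    if replication > len(nodes):
        raise ValueError("replication factor exceeds the number of nodes")
    placement = {}
    for block_id in range(num_blocks):
        placement[block_id] = [nodes[(block_id + r) % len(nodes)]
                               for r in range(replication)]
    return placement


def available_blocks(placement, failed_nodes):
    """Return the blocks that still have at least one replica on a healthy node."""
    failed = set(failed_nodes)
    return {block_id for block_id, holders in placement.items()
            if any(node not in failed for node in holders)}


if __name__ == "__main__":
    workers = ["worker-1", "worker-2", "worker-3", "worker-4"]
    placement = place_replicas(6, workers)
    # With three replicas per block, losing any two nodes loses no data.
    print(sorted(available_blocks(placement, ["worker-1", "worker-2"])))
    # Losing three nodes can make some blocks unavailable.
    print(sorted(available_blocks(placement, ["worker-1", "worker-2", "worker-3"])))
```

The trade-off is storage: every block is kept three times, so the raw disk space needed is three times the size of the data.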

On Alibaba Cloud, each node is an ECS instance: one is the master instance and the others are worker (core) instances. Most business scenarios use a multi-node cluster because there is a huge amount of data to process and analyze.

Let's create a simple cluster in EMR.

- Log in to your Alibaba Cloud account and click "Console" in the top right corner. This opens a dashboard that shows information such as resources used and billing.

- On the left, there are various navigation icons. Select "Products" and choose E-MapReduce under Analysis.

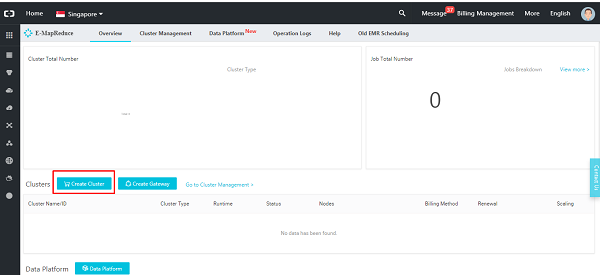

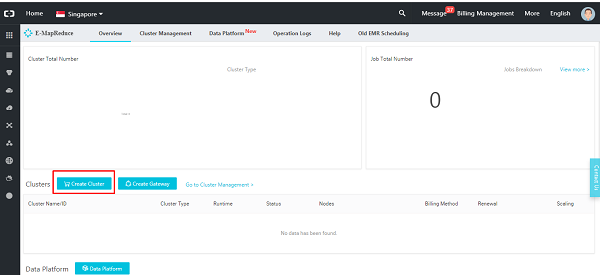

- This leads to the EMR console.

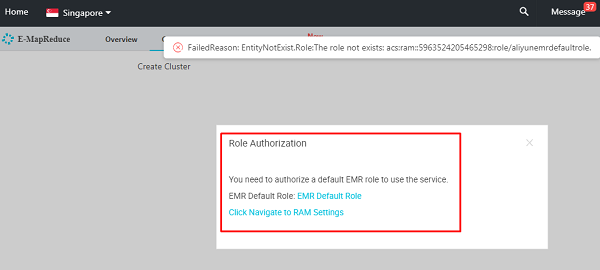

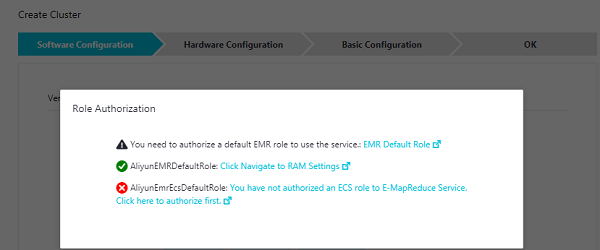

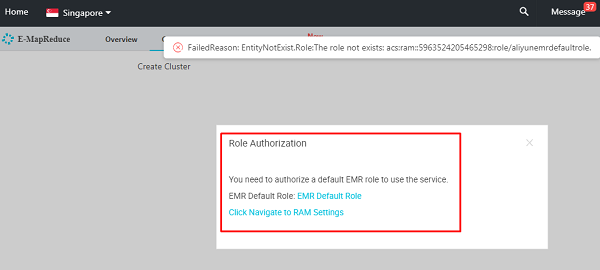

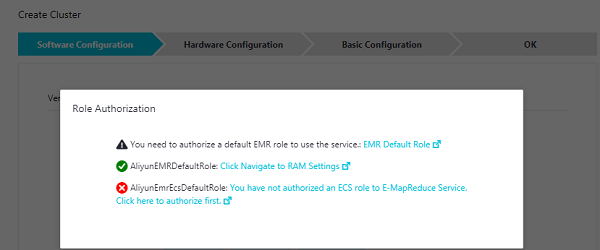

- You need a default EMR role to start with the service. If you haven't set this up already, you will see a warning as shown below.

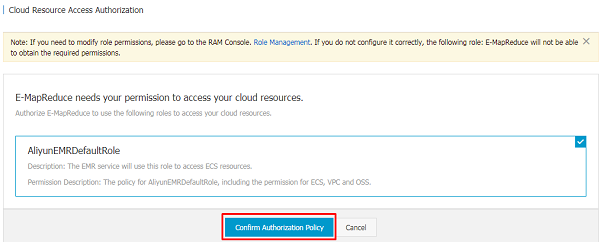

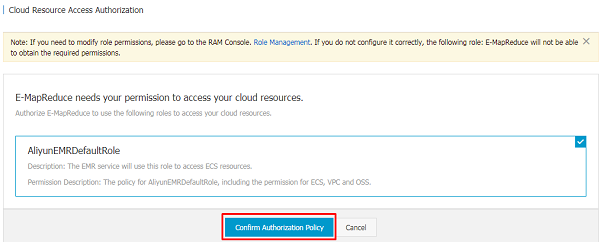

- In that case, click "Go to RAM" and set up a default EMR role by clicking on Confirm Authorization Policy.

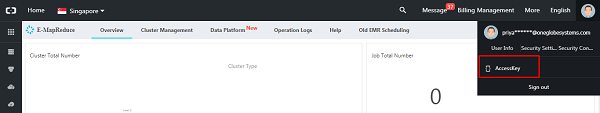

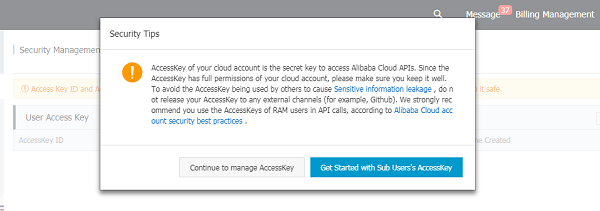

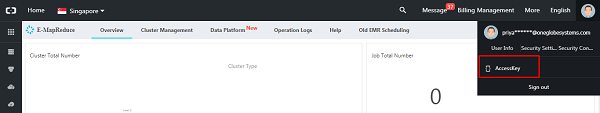

- Next, make sure you have an AccessKey. On the top right, hover over the user name and select AccessKey from the drop-down menu.

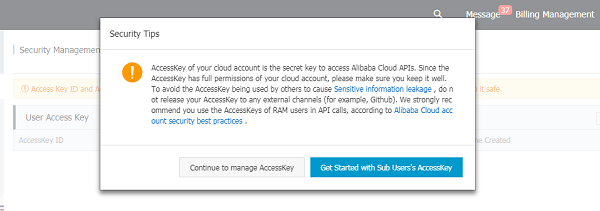

- For this walkthrough, you can dismiss the Security Tips. Clicking "Get started with Sub User's AccessKey" takes you to the Document Center, where you can find the steps to get started.

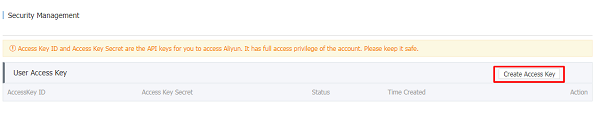

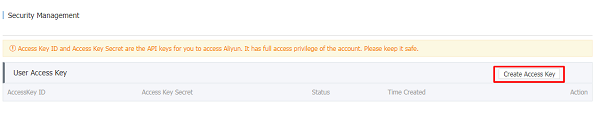

- Continue to manage AccessKeys and proceed with "Create Access Key".

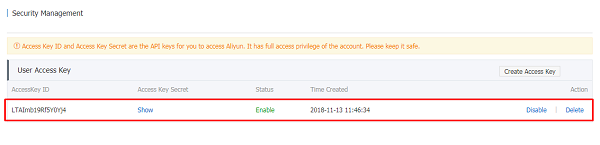

- In a few seconds, you will see the access key created.
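Once the AccessKey exists, it is worth keeping it out of scripts and notebooks. The sketch below reads the key pair from environment variables and masks the secret before printing it. The variable names `ALIBABA_CLOUD_ACCESS_KEY_ID` and `ALIBABA_CLOUD_ACCESS_KEY_SECRET` and both helper functions are assumptions for this example, so adapt them to however you store credentials.

```python
import os


def load_access_key(environ=os.environ):
    """Read the AccessKey pair from environment variables.

    The variable names are an assumption made for this sketch.
    """
    key_id = environ.get("ALIBABA_CLOUD_ACCESS_KEY_ID")
    key_secret = environ.get("ALIBABA_CLOUD_ACCESS_KEY_SECRET")
    if not key_id or not key_secret:
        raise RuntimeError("AccessKey ID and secret must both be set")
    return key_id, key_secret


def mask(secret, visible=4):
    """Hide all but the last few characters of a secret before logging it."""
    if len(secret) <= visible:
        return "*" * len(secret)
    return "*" * (len(secret) - visible) + secret[-visible:]


if __name__ == "__main__":
    try:
        key_id, key_secret = load_access_key()
        print("AccessKey ID:", key_id, "secret:", mask(key_secret))
    except RuntimeError as error:
        print("Credentials not configured:", error)
```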

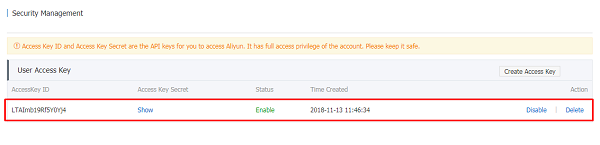

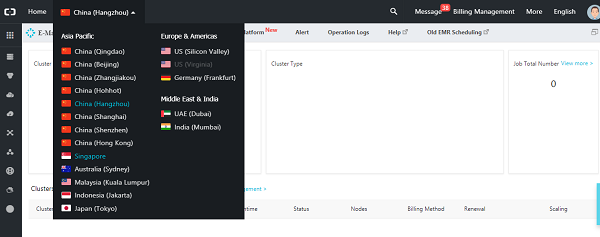

- Now that all prerequisites are set up, decide on the region where the cluster will be located. For better network connectivity, keep all your Alibaba Cloud products in the same region. As mentioned earlier, we use "Singapore" throughout this article, so OSS and EMR are now in the same location.

- Now click "Create Cluster". If Alibaba Cloud requests any other role authorization, complete it to continue with the cluster creation steps.

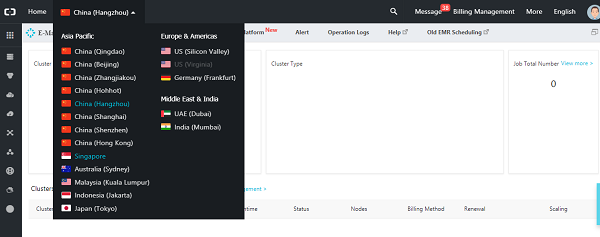

Alibaba E-MapReduce provides four different cluster types:

- Hadoop clusters: Provide various big data tools, such as:

  - Hadoop, Hive, and Spark for distributed storage and processing

  - Spark Streaming, Flink, and Storm for stream processing

  - Oozie and Pig for processing and scheduling jobs

- Druid clusters: Support real-time interactive analysis and low-latency queries over large amounts of data. Combined with EMR Hadoop, EMR Spark, and OSS, they offer real-time solutions.

- Data Science clusters: Clusters provisioned for data scientists, aimed at big data and AI scenarios, which additionally provide TensorFlow models.

- Kafka clusters: A distributed message system with high throughput and scalability, providing a complete service monitoring system.

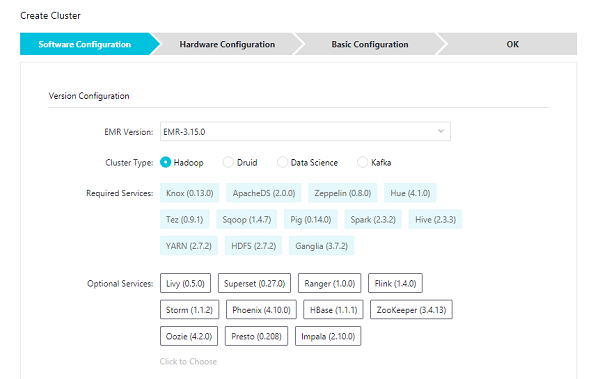

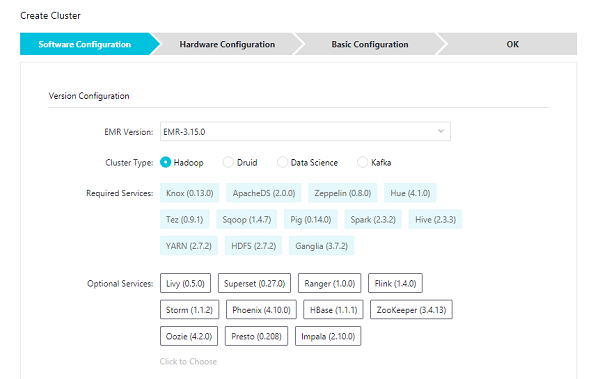

Software Configuration

For now, we will create a Hadoop cluster. Select "Hadoop". You will see a set of required services with their versions. You can also select additional tools from the optional services.

High security mode: In this mode, which is turned off by default, you can set up authentication for the cluster. Once the software configuration is done, click Next and move on to Hardware Configuration.

Hardware Configuration

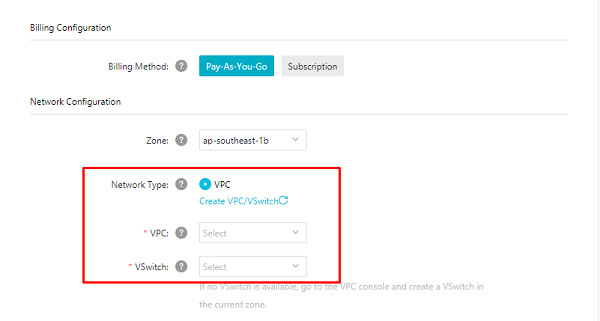

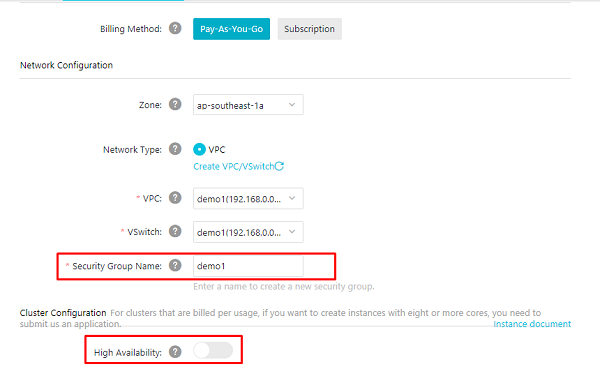

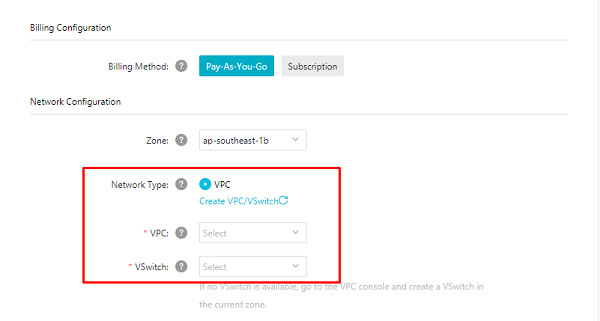

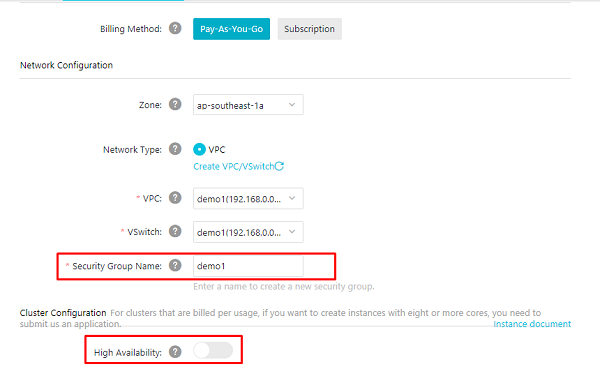

- In the Hardware Configuration tab, you set up a few resources that the cluster requires, such as the Virtual Private Cloud (VPC), the virtual switch (VSwitch), and the security group.

- Network type: When you select the zone, any VPC and VSwitch already created there are selected. Otherwise, create new ones.

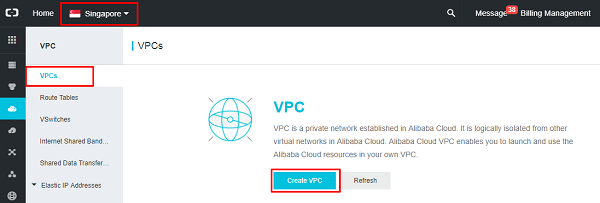

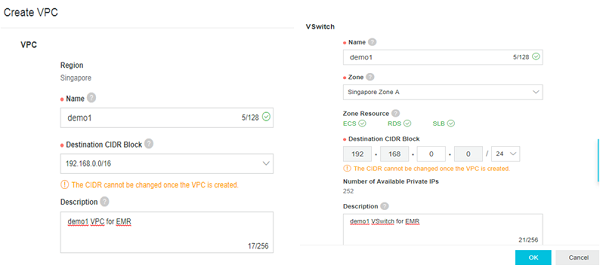

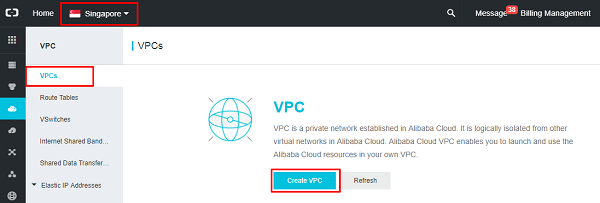

- Let's create a new VPC. Go to the VPC console and click "Create VPC".

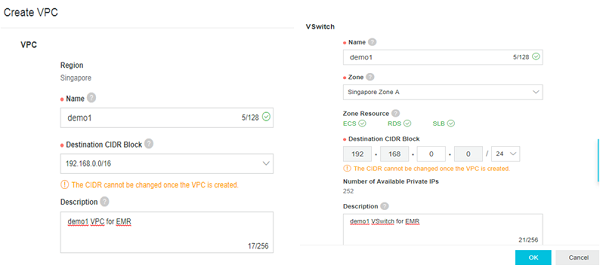

- In the VPC and VSwitch wizard, enter the zone and the name of the VPC, then click OK.

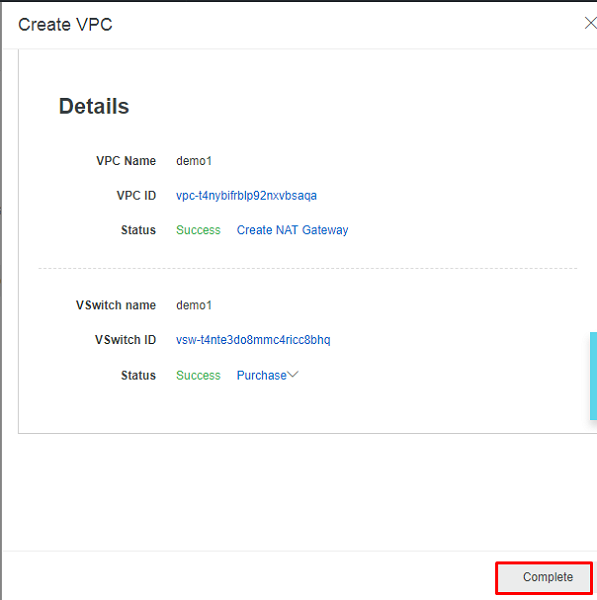

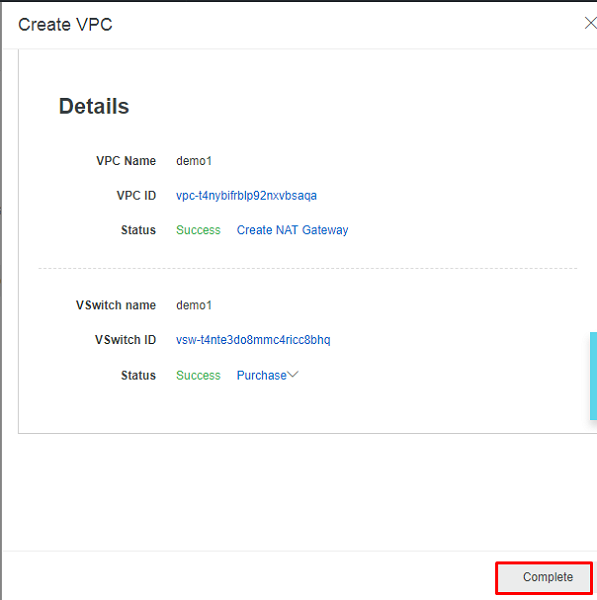

- Clicking OK starts the creation of the VPC and VSwitch. "Creating" is shown in place of OK. Once creation finishes, you will see the window below.

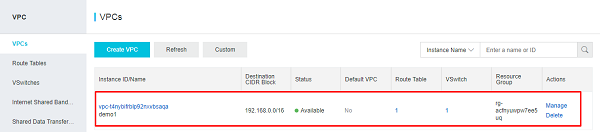

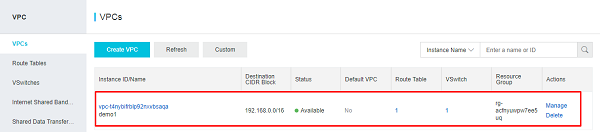

- Click Complete. The VPC now exists. If you don't see it, click Refresh.

- Once the VPC is created, go back to the Hardware Configuration page and select it.

- If you are creating a cluster for the first time, there will be no security group to select. Enter a name to create a new security group.
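When creating the VPC and VSwitch, the wizard typically also asks for an IPv4 address range (CIDR block) for each, and the VSwitch range has to sit inside the VPC range. The following sketch uses Python's standard `ipaddress` module to check that before you click OK. The example ranges, the function names, and the number of reserved addresses per VSwitch are assumptions for illustration, so check the values shown in your own console.

```python
import ipaddress


def vswitch_fits_vpc(vpc_cidr, vswitch_cidr):
    """True if the VSwitch address range lies entirely inside the VPC range."""
    vpc = ipaddress.ip_network(vpc_cidr)
    vswitch = ipaddress.ip_network(vswitch_cidr)
    return vswitch.subnet_of(vpc)


def usable_addresses(vswitch_cidr, reserved=4):
    """Addresses left for instances after the reserved ones are subtracted.

    The default of 4 reserved addresses is an assumption for this sketch.
    """
    return ipaddress.ip_network(vswitch_cidr).num_addresses - reserved


if __name__ == "__main__":
    vpc_range = "192.168.0.0/16"      # hypothetical VPC range
    vswitch_range = "192.168.1.0/24"  # hypothetical VSwitch range
    print(vswitch_fits_vpc(vpc_range, vswitch_range))
    print(usable_addresses(vswitch_range))
```

A quick check like this also tells you whether the VSwitch has room for every master and core instance you plan to launch.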

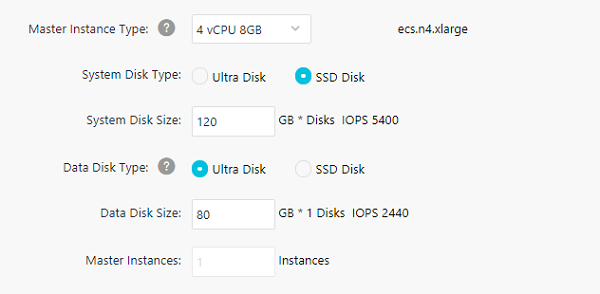

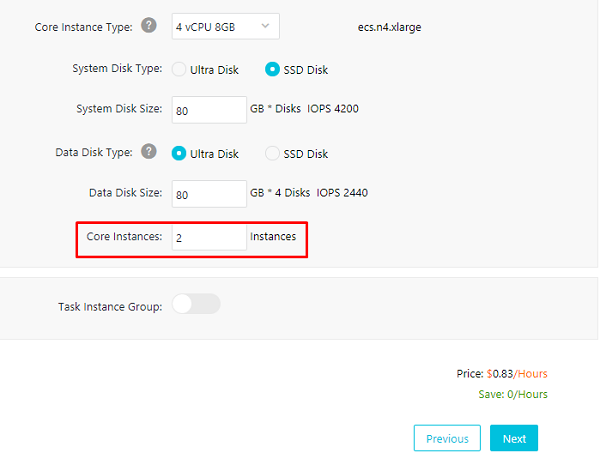

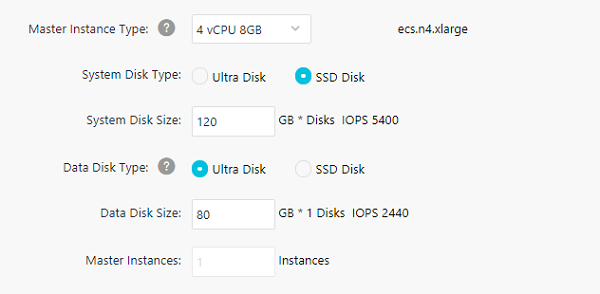

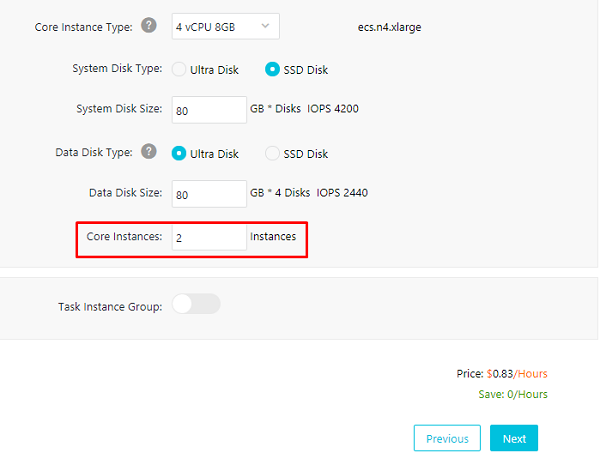

- Since Hadoop is a master-slave model, select the configurations of the master and core instances.

- Also select the number of core instances, which determines the number of data nodes. Here we set the number of core instances to 2, which creates a multi-node cluster.

- Once this is done, you will see the estimated price below. Based on this, you can change the instance type and disk size. Finally, click Next.

Basic Configuration

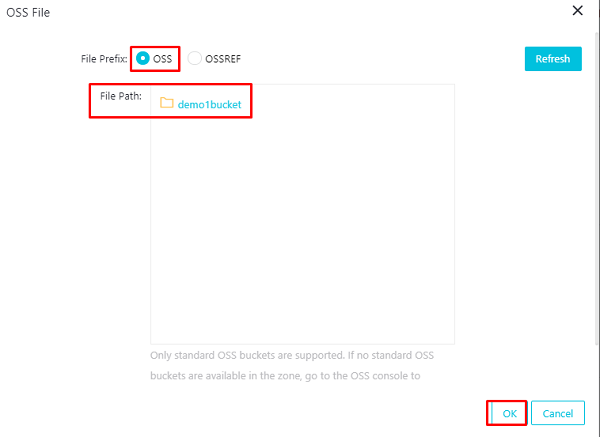

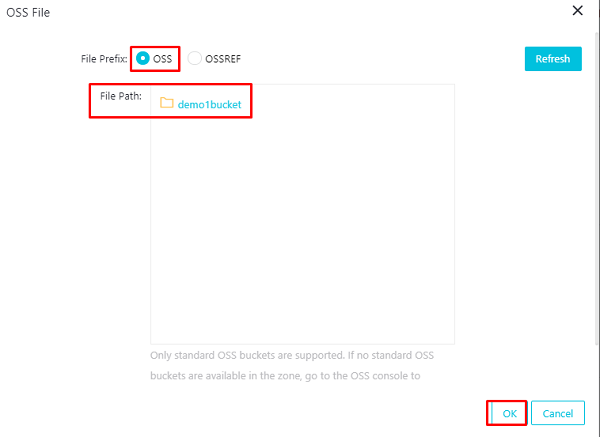

- On this tab, give the cluster a name and set the log path (the OSS location we set up earlier).

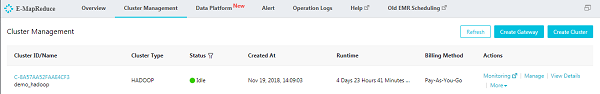

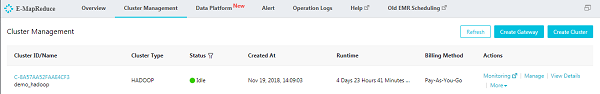

- Also authorize the roles and set a password for the cluster, which we will use later to access it. Once everything is done, click OK. Wait a few seconds while the cluster is created. Then go back to the EMR console, and there is your cluster.

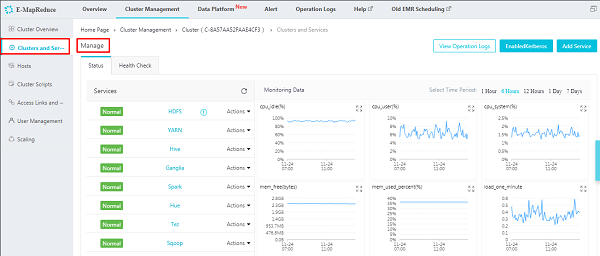

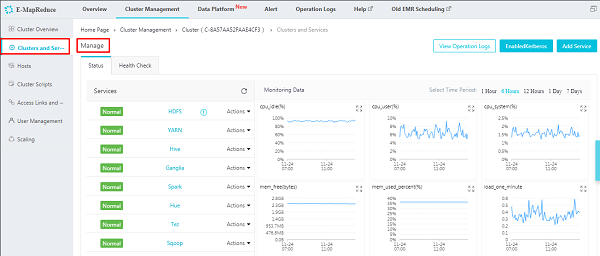

- Click "Manage". You will see all the tools that are started by default. You can start, stop, restart, and monitor the services at any time, and add security settings or extra services if needed.

Best Practices for Building a Cluster

- The data volume to be processed is the key factor in deciding the number of nodes and the memory capacity of each machine.

- Run the jobs with the default configuration and observe the resources used and the time taken. Keep tuning the cluster based on these observations.
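As a starting point for the first practice, a back-of-the-envelope calculation can turn a data volume into a number of core nodes. This is a rough sketch, not a sizing rule from Alibaba Cloud: the function name, the 70% usable-disk fraction, and the example figures are assumptions, and the result should be refined by observing real jobs as described above.

```python
import math


def core_nodes_needed(data_tb, disk_per_node_tb, replication=3,
                      usable_fraction=0.7):
    """Estimate how many core (data) nodes are needed to hold the data.

    replication: HDFS replication factor.
    usable_fraction: share of each disk left for HDFS after temporary
    files and headroom (an assumed value).
    """
    raw_tb = data_tb * replication
    return math.ceil(raw_tb / (disk_per_node_tb * usable_fraction))


if __name__ == "__main__":
    # Hypothetical example: 10 TB of data on nodes with 4 TB of disk each.
    print(core_nodes_needed(data_tb=10, disk_per_node_tb=4))
```

Storage is only one dimension: memory-heavy or CPU-heavy jobs may need more or larger nodes than this estimate suggests.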

The cluster is now ready for the big deal - get ready to play with Big Data!

In the next article, we will talk about data sources and various data formats to ingest the data into our big data environment.

"We are so obsessed with Big data, we forget how to interpret it," Danah Boyd
