By Linxi

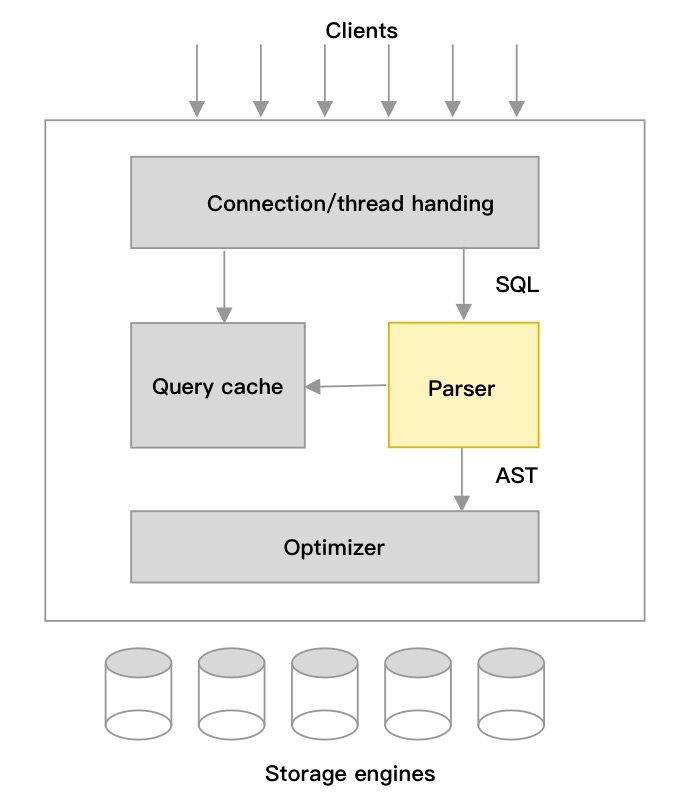

Structured Query Language (SQL) is a domain-specific language (programming language) used first in relational databases, which is convenient for managing structured data. SQL contains many different types of languages, including data definition language, data control language, and data manipulation language. Different database products have different declarations and implementations. It is convenient for users to process data with SQL. The lexical and syntactic parser in the database system is responsible for analyzing the meaning of SQL text, including lexical analysis, syntactic analysis, and semantic analysis. The Abstract Syntax Tree (AST) is generated by the parser and processed by the optimizer to create an execution plan, which is executed by the execution engine. The following figure uses the MySQL framework as an example to show the position of the parser.

This article introduces the parser technology and its current situation in the industry, analyzes the problems encountered when using the auto-generated parser, shares the design and practice of self-developed SQL Parser, and analyzes its performance improvements.

Depending on the different code development modes of resolvers, the following two methods are used:

Many tools are available in the industry to facilitate lexical development and syntactic analysis. For example, Flex, Lex, and Bison are often used to generate lexical and syntactic code in C and C++. If Java is the target language, you can use popular tools, such as ANTLR and JavaCC. Both ANTLR and JavaCC take user-written lexical and syntactic rules files as the input, and the syntactic files must meet EBNF (extended Backus-Naur form) [1] syntactic rules. These two tools use the LL(k) (Left-to-right, Leftmost derivation) algorithm to parse the SQL text from top to down and build the SQL AST. Databases and big data systems, such as Presto, Spark, and Hive, are generated in this way. The generated code includes those for lexical and syntactic parsing. Semantic analysis needs to be combined with metadata and processed by each database kernel. You can find more information about the functions and algorithms [4] of automatic generation tools in the references section.

Unlike automatic generation tools, SQL Parser components for popular databases, such as InfluxDB, H2, and ClickHouse, are all written by hand.

Advantages:

Disadvantages:

In the production environment of real-time analytic databases, it is often necessary to handle thousands of rows of complex query requests or deeply-nested query requests. Due to complex state machine management and deep thread stack, the automatically generated parser may experience severe performance degradation when processing individual query requests during lexical and syntactic parsing.

Analytic databases have high requirements on the performance and stability of SQL Parser components to handle large volume and high concurrent writing requests stably. Many tools, such as ANTLR and JavaCC have been used to generate SQL Parser. However, the values clause will produce too many AST temporary objects when a huge amount of data needs to be written, which prolongs garbage collection.

The automatically generated parser is not very flexible and cannot perform a fast traversal of the AST tree to find leaf nodes that conform to certain rules and modify them.

Auto-generated code is difficult to read and complicates troubleshooting. In complex queries, its poor performance affects system stability and version iteration speed. At the beginning of the design, we abandoned the automatic generation scheme and wrote the parser completely manually.

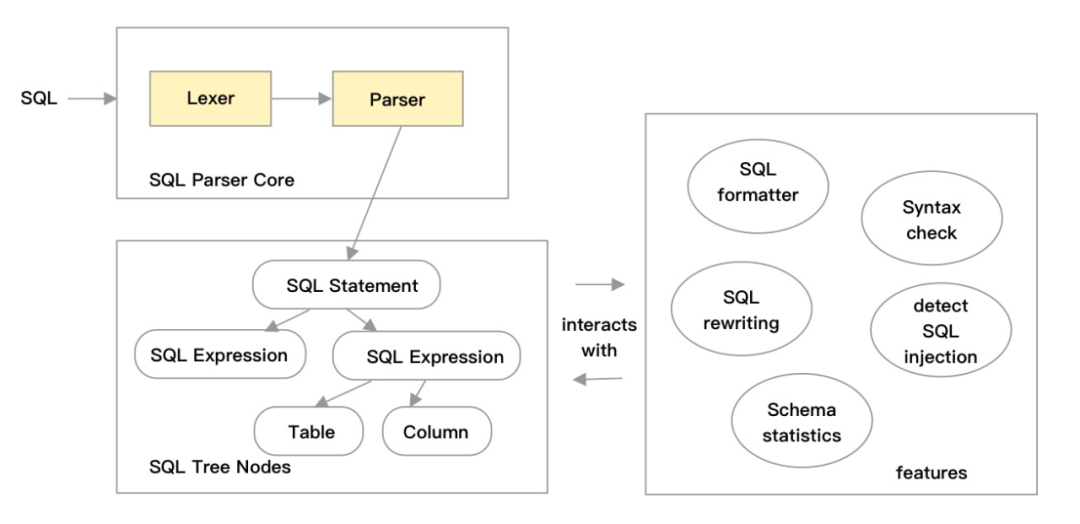

The automatic generation tool is mainly used to generate the SQL Parser Core and SQL Tree Nodes on the left in the following figure. The features on the right need to be processed by the developers. If the features on the right (for example, SQL rewriting) have more demands on the changing Tree Nodes on the left, there is no way to modify the automatically generated code.

The automatic generation tool is designed to construct a general syntactic parser. It has particular optimization technologies to improve the stability and performance of SQL. We use LL(k) as the algorithm for syntactic analysis from the beginning of the design. Its top-down feature is clear in logic, easy to read in code, and convenient to develop and maintain when writing a parser manually. The left recursion problem of LL(k) can be avoided through manual judge loop programming.

In lexical analysis, Lexer reads sequential SQL text continuously, identifies a piece of continuous text with a certain feature as a Token, and marks the Token category. Let's use the assignment statement x = 30 as an example. After lexical analysis, x, =, and 30 are identified as ID, equal sign operator, and numerical constant, respectively. In particular, strings must be compared several times to detect identifiers (variables, table names, column names, etc.) and reserved words (TABLE, FROM, SELECT, etc.) In this stage, the automatic generation tool uses Deterministic Finite Automaton (DFA) and predefined lexical files to determine the value and type of each Token. Manually writing a parser does not require additional maintenance of a state machine. Branch prediction is used to reduce the amount of computing and the depth of the stack. The syntactic parser uses a Token in the lexical analysis as input and SQL syntax description as a rule. From top to down, non-leaf nodes are expanded in sequence to build a syntax tree. The whole process is like walking through a maze, and there is only one correct entrance and exit. After you finish the maze, a correct AST will be generated.

selECT c1 From T1;Since most database systems are not case sensitive, selECT and From in the preceding query are identified as reserved words, and c1 and T1 are identified as identifiers. It is necessary to distinguish between the two types by matching strings. Generally speaking, it is a feasible solution to convert characters into uppercase or lowercase characters and then compare the literal values. First, the database reserved word is initialized in the memory according to Map<String, Token>. Key is an uppercase string of reserved words, and value is the Token type. When converting key to uppercase characters, the ASCII value +32 method can be used instead of the toUpperCase() method to achieve performance improvements without affecting the correctness.

When parsing a constant value, the common practice is to read the string in SQL, taking the string as a parameter. You can read and calculate the value on the original text by calling Java's Integer.parseInt() / Float.parseFloat() / Long.parseLong(). In this process, only the basic type is used to avoid constructing strings, which can save memory and improve the parsing speed. This optimization is particularly good for large numbers of writes.

In SQL syntactic parsing, you usually only need to read a Token in advance to decide how to build the relationship between syntactic nodes or which type of syntactic nodes to build. Some syntax has more branches, and you must read two or more Tokens in advance to make a judgment. Pre-reading multiple Tokens in advance reduces the performance consumption caused by backtracking. Rarely, if a pre-reading of more than two Tokens fails to match the correct syntactic branch, the pre-reading needs to be withdrawn and another branch must be used. You can save the Token point before the pre-reading to speed up the process. When you revoke, you can return to the save point quickly. The constant literal values appear more frequently than other Tokens in the insert into values statement. Branch prediction can reduce judgment logic steps to avoid backtracking and improve performance.

Query rewriting [6] technology modifies the AST based on relational algebra. The new AST has better performance with correctness guaranteed. For example, the size of Table A and Table B differs substantially, and the wrong Join order is unfriendly to the database system. You can achieve better performance by changing the Join order of tables A and B. The parser generated by the tool usually does not allow direct modification of the AST node. Each time an AST node is changed, the entire AST needs to be rebuilt, and the performance is not good. In a self-developed Parser, each AST node class has the replace interface, which can only be rewritten by modifying the subtree in the AST.

public interface Replaceable {

boolean replace(Node expr, Node target);

}

public class BetweenNode implements Replaceable {

public Node beginExpr;

public Node endExpr;

@Override

public int hashCode(){...}

@Override

public boolean equals(Object obj) {...}

@Override

public boolean replace(SQLExpr expr, SQLExpr target) {

if (expr == beginExpr) {

setBeginExpr(target);

return true;

}

if (expr == endExpr) {

setEndExpr(target);

return true;

}

return false;

}

}public abstract class Node {

public abstract List<Node> getChildren()

}

public class BetweenNode extends Node {

public Node beginExpr;

public Node endExpr;

@Override

public List<Node> getChildren() {

return Arrays.<Node>asList(beginExpr, this.endExpr);

}

@Override

public BetweenNode clone() {

BetweenNode x = new BetweenNode();

if (beginExpr != null) {

x.setBeginExpr(beginExpr.clone());

}

if (endExpr != null) {

x.setEndExpr(endExpr.clone());

}

return x;

}

public void setBeginExpr(Node beginExpr) {

if (beginExpr != null) {

beginExpr.setParent(this);

}

this.beginExpr = beginExpr;

}

public void setEndExpr(Node endExpr) {

if (endExpr != null) {

endExpr.setParent(this);

}

this.endExpr = endExpr;

}

}As mentioned earlier, when importing data in large quantities, many AST small objects will be generated in the lexical and syntactic parsing process, which brings pressure to garbage collection. The core of solving this problem is to use foundation data types as much as possible and try not to generate AST node objects. You need to start at the lexical analysis stage and avoid entering the syntactic analysis stage. In the lexical analysis stage, the write interface class is implemented by allowing external registration. When the lexical parser parses a specific value in values or parses a complete row in values, it also calls back the write interface to implement the database write logic.

public interface InsertValueHandler {

Object newRow() throws SQLException;

void processInteger(Object row, int index, Number value);

void processString(Object row, int index, String value);

void processDate(Object row, int index, String value);

void processDate(Object row, int index, java.util.Date value);

void processTimestamp(Object row, int index, String value);

void processTimestamp(Object row, int index, java.util.Date value);

void processTime(Object row, int index, String value);

void processDecimal(Object row, int index, BigDecimal value);

void processBoolean(Object row, int index, boolean value);

void processNull(Object row, int index);

void processFunction(Object row, int index, String funcName, Object... values);

void processRow(Object row);

void processComplete();

}

public class BatchInsertHandler implements InsertValueHandler {

...

}

public class Application {

BatchInsertHandler handler = new BatchInsertHandler();

parser.parseInsertHeader(); // header: parse insert into xxx values parts

parser.parseValues(handler); // batch value: values (xxx), (xxx), (xxx) parts

}A manually written SQL Parser can work more flexibly with the optimizer. Some of the optimization capabilities in Query Rewriting are added into the SQL Parser, allowing the optimizer to focus more on price and cost-based optimization. Parsers can combine meta-information with equivalence relational algebra to implement the Query Rewriting function on AST at low costs. This can improve the query performance, such as constant folding, functional transformation, conditional push-down or up, type inference, implicit conversion, semantic deduplication, etc. First, you need to design a structure to store catalog and table structural information, including library name, table name, column name, column type, etc. Then, use the Visitor Pattern to write the Visitor program and analyze the fields, functions, expressions, and operators by traversing the AST in depth. Then, infer the type of expressions according to the table structure and type information. Note: The same expressions that appear in different positions may belong to different scopes in the nested inquire statements. Last, AST is processed by Rule-Based Optimization (RBO) rules following equivalence relation algebra to achieve the purpose of the optimizer.

-- Constant Folding Example

SELECT * FROM T1

WHERE c_week

BETWEEN CAST(date_format(date_add('day', -day_of_week('20180605'),

date('20180605')), '%Y%m&d') as bigint)

AND CAST(date_format(date_add('day', -day_of_week('20180606'),

date('20180606')), '%Y%m&d') as bigint)

------------After Folding-----------

SELECT * from T1

WHERE c_week BETWEEN 20180602 and 20180603-- Example of Function Conversion

SELECT * FROM T1

WHERE DATE_FORMAT(t1."pay_time", '%Y%m%d') >= '20180529'

AND DATE_FORMAT(t1."pay_time", '%Y%m%d') <= '20180529'

-----------Make Better Use of Indexes after Conversion------------

SELECT * FROM T1

WHERE t1."pay_time" >= '2018-05-29 00:00:00'

AND t1."pay_time" < '2018-05-30 00:00:00'The performance and stability of the optimized SQL Parser are improved significantly. Compared with 99 Queries in TPC-DS [7], the manually written SQL Parser is 20 times faster than ANTLR Parser (generated by ANTLR), 30 times faster than JSQLParser (generated by JavaCC), and 30 to 50 times faster in batch Insert statements.

This article introduces the advantages and disadvantages of the SQL Parser and shows the self-developed analyzer generated with the automatic generation tool. Based on OLAP's high throughput and characteristics of handling business, the decision is to write the parser manually. The performance optimization approach is close to SQL parsing features. At the semantic analysis level, many semantic analysis tools are developed in combination with schema information, making it more lightweight and convenient in offline and online SQL statistics and feature analysis.

[1] Pattis, Richard E."EBNF: A Notation to Describe Syntax"(PDF).ICS.UCI.edu.University of California, Irvine. p. 1. Retrieved 2021-02-26.

[2] Parr, Terence and Fisher, Kathleen (2011). "LL (*) the foundation of the ANTLR parser generator".ACM SIGPLAN Notices.46(6): 425–436.doi:10.1145/1993316.1993548.

[3] Rosenkrantz, D. J.; Stearns, R. E. (1970)."Properties of Deterministic Top Down Grammars".Information and Control.17(3): 226–256.doi:10.1016/s0019-9958(70)90446-8.

[4] Gurari, Eitan (1999)."CIS 680: DATA STRUCTURES: Chapter 19: Backtracking Algorithms". Archived fromthe originalon 17 March 2007.

[5] Pirahesh, Hamid; Hellerstein, Joseph M."Extensible/Rule Based Query Rewrite Optimization in Starburst".citeseerx.ist.psu.edu. Retrieved 2020-04-06.

Compilation Optimization: LLVM Code Generation Technology Details and Its Application in Databases

ApsaraDB - June 15, 2023

digoal - September 12, 2019

Alibaba Cloud New Products - June 2, 2020

Apache Flink Community China - September 27, 2020

Apache Flink Community China - March 17, 2023

ApsaraDB - July 7, 2021

PolarDB for MySQL

PolarDB for MySQL

Alibaba Cloud PolarDB for MySQL is a cloud-native relational database service 100% compatible with MySQL.

Learn More AnalyticDB for MySQL

AnalyticDB for MySQL

AnalyticDB for MySQL is a real-time data warehousing service that can process petabytes of data with high concurrency and low latency.

Learn More ApsaraDB RDS for SQL Server

ApsaraDB RDS for SQL Server

An on-demand database hosting service for SQL Server with automated monitoring, backup and disaster recovery capabilities

Learn More ApsaraDB RDS for MySQL

ApsaraDB RDS for MySQL

An on-demand database hosting service for MySQL with automated monitoring, backup and disaster recovery capabilities

Learn MoreMore Posts by ApsaraDB